1. Introduction

A wireless sensor network (WSN) is a wireless network composed of a large number of sensor nodes. Each sensor node needs to collect a large amount of data and transmit it to the sink node. Traditional data acquisition and transmission methods consume a great deal of energy and shorten the service life of the nodes [1]. If the collected data is compressed before transmission, the energy consumption can be greatly reduced and the node life prolonged. However, the traditional Nyquist sampling frequency is too high, resulting in excess energy consumption. To address this problem, Jose et al. proposed applying compressed sensing (CS) theory [2] to wireless sensor networks. CS theory shows that, as long as a signal is sparse or can be sparsely represented, the original signal can be reconstructed without distortion from a number of linear measurements far below that required by the Nyquist sampling frequency. The original signals of wireless sensor networks are sparse; as such, CS theory is widely used to reconstruct them.

At present, the main reconstruction algorithms studied include greedy iterative algorithms [3], non-convex optimization algorithms [4], and convex optimization algorithms [5]. The most classical greedy iterative algorithm is the matching pursuit (MP) algorithm [6], which uses residuals to reconstruct sparse signals. The algorithm is relatively simple and reconstructs quickly, but it requires the signal to be sparse in some transform domain, and its reconstruction accuracy is poor. It is therefore suitable for cases with a large amount of data and low accuracy requirements, but not for fields that demand high reconstruction accuracy. Gorodnitsky proposed a sparse signal reconstruction algorithm based on the wavelet transform [7]. Daubechies proposed a sparse reconstruction algorithm based on logic and iterative weighted least squares minimization [8]. Both are non-convex optimization algorithms, which improve the reconstruction accuracy only to a limited extent. Daubechies et al. proposed convex optimization algorithms to reconstruct sparse signals in wireless sensor networks [9]. This approach transforms the sparse signal recovery problem into a convex optimization problem and minimizes the objective function. The solution obtained is globally optimal, so the reconstruction accuracy is good and the approach suits applications with high accuracy requirements. It requires fewer measurements, but its computational complexity is very high. A typical convex optimization algorithm is the basis pursuit (BP) algorithm [10], which has high reconstruction accuracy but a low reconstruction rate. William et al. proposed a gradient-based sparse recovery method [11], which has high reconstruction accuracy and a fast first-order convergence rate.

Ma Duxiang proposed applying Newton's method to sparse signal reconstruction [12]. Newton's search direction replaces the steepest descent direction and yields a second-order convergence rate, which improves the reconstruction rate of the sparse signal to a certain extent. However, Newton's method requires the inverse of the Hessian matrix, which is expensive to compute, so the improvement in the reconstruction rate is limited. Here, the computational cost refers to the complexity and running time of the algorithm: the longer the algorithm runs, the greater the cost. Chen Fenghua used the BFGS algorithm [13] to reduce the computational cost in wireless sensor networks. The BFGS algorithm replaces the inverse of the Hessian matrix with an approximate matrix $H_k$ built up by iteration, which reduces the amount of computation. However, the BFGS algorithm needs to calculate and store the $n \times n$ matrix $H_k$, so when the dimension $n$ is large, the reduction in computation is limited. Therefore, this paper proposes the L-BFGS quasi-Newton algorithm [14] to solve the problem of sparse signal reconstruction in wireless sensor networks based on compressed sensing. The L-BFGS algorithm does not need to calculate and store $H_k$ directly; it only needs to store $m$ ($m \ll n$) vector pairs $(s_k, u_k)$. This removes the main shortcoming of the BFGS quasi-Newton algorithm, which must calculate and store the $n \times n$ matrix $H_k$ directly. In Section 5, we prove the feasibility of using the L-BFGS quasi-Newton algorithm to solve the problem of sparse signal reconstruction in wireless sensor networks based on compressed sensing.

2. Signal Reconstruction Algorithm Based on Newton Method

In WSNs, let $x \in \mathbb{R}^{n}$ be a sparse signal, $A \in \mathbb{R}^{m \times n}$ ($m \ll n$) the measurement matrix [13], and $y \in \mathbb{R}^{m}$ the observation vector. They satisfy the following relation:

$$y = Ax. \tag{1}$$

How to reconstruct the original signal $x$ from this linear system is the core problem of sparse signal reconstruction using compressed sensing in wireless sensor networks. Equation (1) is underdetermined and has infinitely many solutions.

In the compressed sensing problem, if the original signal $x$ is known to be sparse under a certain transformation, Equation (1) can be transformed into an $L_0$ norm minimization problem, and the signal is reconstructed by solving it:

$$\min_{x} \|x\|_0 \quad \text{s.t.} \quad Ax = y, \tag{2}$$

where the $L_0$ norm is the number of nonzero elements of the vector.
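As a small illustration of why Equation (1) alone does not determine the signal, the sketch below builds a hypothetical 2 × 3 system and compares the L0 and L1 norms of two vectors that both satisfy Ax = y. The matrix `A`, the vector `y`, and both candidate solutions are made-up values chosen for illustration, not data from this paper.

```python
def l0_norm(x, tol=1e-12):
    """Number of entries whose magnitude exceeds tol."""
    return sum(1 for xi in x if abs(xi) > tol)


def l1_norm(x):
    """Sum of the absolute values of the entries."""
    return sum(abs(xi) for xi in x)


def matvec(A, x):
    """Product A x for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Hypothetical underdetermined system: 2 equations, 3 unknowns.
A = [[1.0, 1.0, 0.0],
     [0.0, 1.0, 1.0]]
y = [1.0, 1.0]

x_sparse = [0.0, 1.0, 0.0]   # one nonzero entry
x_dense = [1.0, 0.0, 1.0]    # two nonzero entries

for x in (x_sparse, x_dense):
    print(x, matvec(A, x) == y, l0_norm(x), l1_norm(x))
```

Both vectors reproduce `y`, but the first has fewer nonzero entries and the smaller L1 norm, so it is the one a sparsity-seeking formulation would prefer.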

Research shows that sparse signals can be accurately reconstructed by solving the $L_0$ norm minimization problem. However, the $L_0$ norm problem is NP-hard: solving it requires enumerating all possible combinations of the positions of the nonzero values in the original signal. Under certain conditions, the $L_1$ norm minimization problem is the optimal convex approximation of the $L_0$ norm minimization problem [15], and it is much simpler to solve. Therefore, the $L_0$ norm minimization problem is replaced by the $L_1$ norm minimization problem:

$$\min_{x} \|x\|_1 \quad \text{s.t.} \quad Ax = y. \tag{3}$$

The $L_1$ norm minimization problem is a convex optimization problem. There are many methods for solving convex optimization problems, and Newton's method is one of them. Newton's method uses the gradient and the second-order Hessian matrix to build a quadratic approximation of the objective function, and it converges faster than first-order convex optimization algorithms.

In practice, it is difficult to solve the linear programming problem (3) directly, since the matrix $A$ is very large and expensive to work with. To simplify the calculation, problem (3) can be transformed into an $L_1$ regularized least squares problem [16]:

$$\min_{x} \; \lambda \|x\|_1 + \frac{1}{2}\|Ax - y\|_2^2, \tag{4}$$

where $\lambda$ is a regularization parameter.

Since $\|x\|_1$ is a nonsmooth convex function of $x$, basic subgradient approaches are not effective for solving problem (4). Instead, the $L_1$ minimization problem (3) can be solved by solving a suitable smooth form of problem (4).

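According to the literature [17], using the Nesterov smoothing technique, $\|x\|_1$ is smoothed into the following smooth function:

$$f(x) = \sum_{i=1}^{n} H(x_i),$$

where:

$$H(u) = \begin{cases} \dfrac{u^2}{2\tau}, & |u| \le \tau, \\ |u| - \dfrac{\tau}{2}, & |u| > \tau. \end{cases}$$

$H(u)$ is the Huber penalty function and $\tau$ is a smoothing parameter; see the literature [18] for relevant research.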

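The smooth function $f(x)$ is convex, the gradient $\nabla f(x)$ is Lipschitz continuous, and its components are:

$$[\nabla f(x)]_i = \begin{cases} \dfrac{x_i}{\tau}, & |x_i| \le \tau, \\ \operatorname{sgn}(x_i), & |x_i| > \tau, \end{cases}$$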

which is:

For ease of calculation, let

. Convert the

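$L_1$ regularized least squares problem (4) into an unconstrained smooth convex programming problem:

$$\min_{x} F(x) = \lambda f(x) + \frac{1}{2}\|Ax - y\|_2^2, \tag{5}$$

where $F(x)$ is differentiable. When Newton's method is adopted, the search direction of $F(x)$ is:

$$d = -\left[\nabla^2 F(x)\right]^{-1} \nabla F(x).$$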

The gradient of $F(x)$ is:

$$\nabla F(x) = \lambda \nabla f(x) + A^{T}(Ax - y).$$
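The smoothed objective and its gradient can be sketched in a few lines. The code below is a minimal illustration under stated assumptions: it takes the Huber penalty in its usual scaling (quadratic `u*u/(2*tau)` for `|u| <= tau`, linear `|u| - tau/2` otherwise) and assumes the objective has the form `lam * sum(H(x_i)) + 0.5 * ||A x - y||^2`. The function names and the toy data are hypothetical. A central finite-difference check is included to confirm that the gradient matches the objective.

```python
def huber(u, tau):
    """Huber penalty: quadratic near zero, linear in the tails (assumed scaling)."""
    if abs(u) <= tau:
        return u * u / (2.0 * tau)
    return abs(u) - tau / 2.0


def huber_grad(u, tau):
    """Derivative of the Huber penalty; it is continuous at |u| = tau."""
    if abs(u) <= tau:
        return u / tau
    return 1.0 if u > 0 else -1.0


def residual(A, x, y):
    """Compute A x - y for a matrix stored as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) - yi for row, yi in zip(A, y)]


def F(x, A, y, lam, tau):
    """Assumed smoothed objective: lam * sum_i H(x_i) + 0.5 * ||A x - y||^2."""
    r = residual(A, x, y)
    return lam * sum(huber(xi, tau) for xi in x) + 0.5 * sum(ri * ri for ri in r)


def grad_F(x, A, y, lam, tau):
    """Gradient of F: lam * H'(x_j) plus the j-th entry of A^T (A x - y)."""
    r = residual(A, x, y)
    At_r = [sum(A[i][j] * r[i] for i in range(len(A))) for j in range(len(x))]
    return [lam * huber_grad(xj, tau) + gj for xj, gj in zip(x, At_r)]


# Hypothetical toy data (not from the paper).
A = [[1.0, 2.0, 0.5],
     [0.0, 1.0, -1.0]]
y = [1.0, 0.5]
x = [0.3, -0.02, 1.5]      # the middle entry lies inside the quadratic zone
lam, tau = 0.1, 0.05

g = grad_F(x, A, y, lam, tau)

# Central finite differences as an independent check of the gradient.
h = 1e-6
fd = []
for j in range(len(x)):
    xp = list(x)
    xm = list(x)
    xp[j] += h
    xm[j] -= h
    fd.append((F(xp, A, y, lam, tau) - F(xm, A, y, lam, tau)) / (2.0 * h))

max_err = max(abs(a - b) for a, b in zip(g, fd))
print(g, max_err)
```

Storing `A` as a list of rows keeps the sketch dependency-free; for realistic problem sizes a numerical array library would be the natural choice.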

$\nabla F(x)$ is Lipschitz continuous. For convenience of notation, let:

$$s_k = x_{k+1} - x_k, \qquad g_k = \nabla F(x_k), \qquad u_k = g_{k+1} - g_k,$$

where $x_k$ is the iteration point of step $k$, $x_{k+1}$ is the iteration point of step $k + 1$, $s_k$ is the difference between adjacent iteration points, $g_k$ is the gradient of $F(x)$ at $x_k$, and $u_k$ is the difference between adjacent gradients.

Then, the search direction of Newton's method can be written as follows:

$$d_k = -\left[\nabla^2 F(x_k)\right]^{-1} g_k.$$

When solving problem (5), the iteration formula of Newton's method is:

$$x_{k+1} = x_k + d_k.$$

When Newton's method is used to solve the sparse signal reconstruction problem in wireless sensor networks, its advantage is that it has a second-order convergence rate and converges in fewer iterations than other convex optimization algorithms. Its disadvantage is that it needs the inverse of the Hessian matrix, which requires a large amount of computation and reduces the reconstruction rate to a certain extent. In addition, when the Hessian matrix is not positive definite, Newton's method cannot guarantee that the search direction is a descent direction of the objective function $F(x)$ at $x$.

To address these problems of Newton's method, this paper proposes the L-BFGS quasi-Newton method for sparse signal reconstruction in wireless sensor networks. Section 5 proves that the L-BFGS quasi-Newton method can solve the sparse reconstruction problem of wireless sensor networks.

3. Principles

The L-BFGS quasi-Newton method is an improvement of the BFGS quasi-Newton method. It uses the objective function values and first derivatives to construct a suitable $H_k$ that approximates the inverse of the Hessian matrix, without directly calculating and storing the matrix $H_k$. The complexity of the L-BFGS algorithm is lower than that of the BFGS algorithm, while the fast convergence of Newton-type methods is largely retained. The constructed $H_k$ is positive definite, which ensures that the search direction is a descent direction of the objective function $F(x)$ at $x$.

Before describing the L-BFGS algorithm, we first recall the well-known BFGS method. A key problem in the BFGS quasi-Newton method is constructing a suitable matrix $B_k$ that approximates the Hessian matrix. $B_k$ needs to meet the following conditions:

(1) For all $k$, $B_k$ is symmetric and positive definite, ensuring that the direction generated by the algorithm is a descent direction of the objective function $F(x)$ at $x_k$;

(2) The updating rule of the matrix $B_k$ is relatively simple.

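In this paper, the search direction $d_k$ is obtained by solving the following linear equation:

$$B_k d_k = -g_k.$$

The updating rule of the matrix $B_k$ is:

$$B_{k+1} = B_k - \frac{B_k s_k s_k^{T} B_k}{s_k^{T} B_k s_k} + \frac{u_k u_k^{T}}{u_k^{T} s_k}. \tag{7}$$

When a suitable $B_k$ has been constructed, $H_k$ satisfies the condition $H_k = B_k^{-1}$. Applying the Sherman-Morrison-Woodbury formula [19] twice gives the update formula of the matrix $H_k$:

$$H_{k+1} = \left(I - \frac{s_k u_k^{T}}{u_k^{T} s_k}\right) H_k \left(I - \frac{u_k s_k^{T}}{u_k^{T} s_k}\right) + \frac{s_k s_k^{T}}{u_k^{T} s_k}, \tag{8}$$

where $H_k$ is the approximation of the inverse of the Hessian matrix and $I$ is the identity matrix of order $n$. $H_k$ should satisfy the following conditions: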

- (1) $H_k$ satisfies the quasi-Newton equation: $H_{k+1} u_k = s_k$;
- (2) $H_k$ is a positive definite symmetric matrix;
- (3) The update from $H_k$ to $H_{k+1}$ is a low-rank update.
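The conditions above can be checked numerically on a small example. The following sketch applies one BFGS update of the inverse-Hessian approximation in its standard product form and then verifies the quasi-Newton equation and the symmetry of the result. The helper names and the vectors `s` and `u` are hypothetical values chosen so that the curvature condition holds.

```python
def dot(a, b):
    """Inner product of two vectors stored as lists."""
    return sum(x * y for x, y in zip(a, b))


def matmul(P, Q):
    """Product of two matrices stored as lists of rows."""
    cols = list(zip(*Q))
    return [[dot(row, col) for col in cols] for row in P]


def bfgs_inverse_update(H, s, u):
    """One BFGS update of the inverse-Hessian approximation H (standard form)."""
    us = dot(u, s)
    if us <= 0.0:
        raise ValueError("curvature condition u^T s > 0 is violated")
    n = len(s)
    # left = I - s u^T / (u^T s); right = I - u s^T / (u^T s), the transpose of left
    left = [[(1.0 if i == j else 0.0) - s[i] * u[j] / us for j in range(n)]
            for i in range(n)]
    right = [[left[j][i] for j in range(n)] for i in range(n)]
    core = matmul(matmul(left, H), right)
    # Add the rank-one term s s^T / (u^T s).
    return [[core[i][j] + s[i] * s[j] / us for j in range(n)] for i in range(n)]


# Hypothetical vectors with u^T s = 2.44 > 0.
n = 3
H0 = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
s = [1.0, 0.5, -0.2]
u = [2.0, 1.0, 0.3]

H1 = bfgs_inverse_update(H0, s, u)
H1_u = [dot(row, u) for row in H1]   # should reproduce s (quasi-Newton equation)
print(H1_u)
```

The explicit matrices make the cost visible: every update touches all n × n entries, which is the storage and computation burden that the limited-memory variant avoids.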

The specific process of the BFGS algorithm is given in the literature [13]. It is known that the curvature condition $s_k^{T} u_k > 0$ guarantees the positive definiteness of $H_{k+1}$ when $H_k$ is positive definite. From Formula (8), an efficient recursive procedure can be derived to compute the matrix-vector product $H_k g_k$. For completeness, we describe the L-BFGS recursive procedure for $H_k g_k$ in Algorithm 1.

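| Algorithm 1: L-BFGS two-loop recursion |
|---|
| 1. Input: $g_k$, the pairs $(s_i, u_i)$ for $i = k-m, \ldots, k-1$, and the initial matrix $H_k^0$; |
| 2. $q \leftarrow g_k$ |
| 3. for $i = k-1, k-2, \ldots, k-m$ do |
| 4. $\quad a_i \leftarrow \dfrac{s_i^{T} q}{u_i^{T} s_i}$ |
| 5. $\quad q \leftarrow q - a_i u_i$ |
| 6. end |
| 7. $r \leftarrow H_k^0 q$ |
| 8. for $i = k-m, k-m+1, \ldots, k-1$ do |
| 9. $\quad b \leftarrow \dfrac{u_i^{T} r}{u_i^{T} s_i}$ |
| 10. $\quad r \leftarrow r + s_i (a_i - b)$ |
| 11. end |
| 12. Return the result $r = H_k g_k$ |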

The two-loop recursion computes the descent direction using only inner products and vector updates. Hence, it is cheap to generate the search direction with Algorithm 1.
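A minimal sketch of the two-loop recursion follows. It uses the textbook form of the procedure, which may differ in detail from Algorithm 1. The function name `two_loop`, the oldest-first ordering of `pairs`, and the scaling of the initial matrix as `gamma * I` with `gamma = s^T u / u^T u` are assumptions made here for illustration.

```python
def dot(a, b):
    """Inner product of two vectors stored as lists."""
    return sum(x * y for x, y in zip(a, b))


def two_loop(g, pairs):
    """Return H g, where H is defined implicitly by the stored (s, u) pairs.

    pairs is a list of (s_i, u_i) tuples ordered from oldest to newest.
    """
    q = list(g)
    coeffs = []
    # First loop: newest pair to oldest pair.
    for s, u in reversed(pairs):
        a = dot(s, q) / dot(u, s)
        q = [qi - a * ui for qi, ui in zip(q, u)]
        coeffs.append(a)
    # Initial matrix H^0 = gamma * I (a common scaling, assumed here).
    if pairs:
        s, u = pairs[-1]
        gamma = dot(s, u) / dot(u, u)
    else:
        gamma = 1.0
    r = [gamma * qi for qi in q]
    # Second loop: oldest pair to newest pair, reusing the stored coefficients.
    for (s, u), a in zip(pairs, reversed(coeffs)):
        b = dot(u, r) / dot(u, s)
        r = [ri + (a - b) * si for ri, si in zip(r, s)]
    return r


# Hypothetical curvature pairs, each with u^T s > 0.
pairs = [([1.0, 0.0], [2.0, 0.5]),
         ([0.5, 1.0], [1.0, 3.0])]
g = [1.0, -1.0]

direction = [-ri for ri in two_loop(g, pairs)]   # d = -H g
print(direction)
```

A convenient sanity check is the quasi-Newton equation: applying `two_loop` to the newest `u` returns the newest `s`, whatever initial scaling is used.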

4. Algorithm

In the L-BFGS method, $H_k$ no longer needs to be calculated and stored directly. Instead, the $m$ latest vector pairs $(s_k, u_k)$ saved in memory are used to update $H_k$. Only matrices of size $m \times n$ need to be stored, which saves a lot of computation and improves the reconstruction rate. The specific steps of the L-BFGS algorithm are given in Algorithm 2.

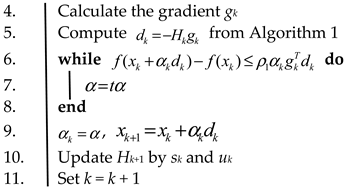

| Algorithm 2: Limited-memory BFGS algorithm (L-BFGS) |

1.Given: ;

2. .

3. while do

![Electronics 12 01267 i003 Electronics 12 01267 i003]()

12. end |

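In the L-BFGS algorithm, the Wolfe line search is used to obtain the step size $\alpha_k$. The Wolfe line search is a kind of inexact line search. When it is used to find the step size, $\alpha_k$ is required to satisfy the following:

$$F(x_k + \alpha_k d_k) \le F(x_k) + \rho_1 \alpha_k g_k^{T} d_k, \tag{9}$$

$$\nabla F(x_k + \alpha_k d_k)^{T} d_k \ge \rho_2\, g_k^{T} d_k.$$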

To make (9), flexible, we employ the non-monotone reduction condition on the:

The parameters $\rho_1$ and $\rho_2$ satisfy $0 < \rho_1 < \rho_2 < 1$.
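To make the role of the two parameters concrete, the sketch below checks whether a trial step satisfies the standard (monotone) Wolfe conditions: a sufficient-decrease test governed by ρ1 and a curvature test governed by ρ2. It does not implement the non-monotone variant mentioned above. The function name `satisfies_wolfe`, the default values `rho1=1e-4` and `rho2=0.9`, and the quadratic test function are assumptions for illustration.

```python
def dot(a, b):
    """Inner product of two vectors stored as lists."""
    return sum(x * y for x, y in zip(a, b))


def satisfies_wolfe(F, grad_F, x, d, alpha, rho1=1e-4, rho2=0.9):
    """Check the standard Wolfe conditions for step alpha along direction d."""
    slope0 = dot(grad_F(x), d)                     # g_k^T d_k, negative for descent
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    sufficient_decrease = F(x_new) <= F(x) + rho1 * alpha * slope0
    curvature = dot(grad_F(x_new), d) >= rho2 * slope0
    return sufficient_decrease and curvature


# Hypothetical test problem: F(x) = 0.5 * ||x||^2, whose gradient is x itself.
def sphere(x):
    return 0.5 * dot(x, x)


def sphere_grad(x):
    return list(x)


x0 = [1.0, 1.0]
d0 = [-1.0, -1.0]                                   # steepest descent direction

for alpha in (1e-3, 1.0, 2.5):
    print(alpha, satisfies_wolfe(sphere, sphere_grad, x0, d0, alpha))
```

In this example a very short step fails the curvature test, an overly long step fails the sufficient-decrease test, and the unit step passes both.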

Finally, compared with the BFGS algorithm, the L-BFGS algorithm does not need to calculate and store $H_k$ directly; it only needs to store matrices of size $m \times n$ and to compute products with $H_k$ from the stored vectors. The reconstruction rate is improved by discarding some vectors and sacrificing a little reconstruction accuracy. Therefore, compared with the BFGS quasi-Newton method, the L-BFGS quasi-Newton method greatly reduces the computational complexity and reconstructs sparse signals faster.

5. Verify the Feasibility of the L-BFGS Algorithm

According to Section 2, the sparse signal reconstruction problem of wireless sensor networks can be transformed into the $L_1$ norm minimization problem. When the solution obtained is the optimal solution to the $L_1$ norm minimization problem, the sparse signal can be accurately reconstructed. In other words, Algorithm 2 is feasible if it is globally convergent.

First, we give some useful inequalities in the following lemma.

Lemma 1. Let $B_k$ be symmetric and positive definite, and let $B_{k+1}$ be determined by the L-BFGS correction Equation (7). Then the necessary and sufficient condition for $B_{k+1}$ to be symmetric and positive definite is $u_k^{T} s_k > 0$.

Lemma 1 shows that, if the initial matrix $B_0$ is symmetric and positive definite and $u_k^{T} s_k > 0$ ($k = 0, 1, 2, \ldots$) is maintained during the iteration, then the matrix sequence $\{B_{k+1}\}$ generated by the L-BFGS correction formula is symmetric and positive definite, so that the equation $B_k d = -g_k$ has a unique solution $d_k$. It is easy to deduce that

$$g_k^{T} d_k = -d_k^{T} B_k d_k.$$

Since $B_k$ is positive definite and $d_k \neq 0$, we have $g_k^{T} d_k < 0$, so $d_k$ is a descent direction of the objective function $F(x)$ at $x_k$.

Lemma 2. If the Wolfe search criterion is adopted in the L-BFGS corrected quasi-Newton algorithm, then $u_k^{T} s_k > 0$.

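Proof. Since $s_k = \alpha_k d_k$, according to step 5 of Algorithm 2, we have

$$g_{k+1}^{T} d_k \ge \rho_2\, g_k^{T} d_k;$$

it is easy to deduce that

$$u_k^{T} s_k = \alpha_k (g_{k+1} - g_k)^{T} d_k \ge \alpha_k (\rho_2 - 1)\, g_k^{T} d_k.$$

Since $\rho_2 < 1$ and $g_k^{T} d_k < 0$, with $\alpha_k > 0$, we obtain $u_k^{T} s_k > 0$. By Lemma 1, $B_{k+1}$ is positive definite. The search direction generated by the algorithm is therefore guaranteed to be a descent direction of the objective function $F(x)$ at $x_k$, so the algorithm works normally. □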

Lemma 3. If the smooth function of the L1 norm ∥x∥1 obtained by the smoothing technique is:where:then it can be proven that Proof. when when we have that: □
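For orientation, the following worked bound shows how closely a Huber-smoothed function can track the L1 norm. It is a standard property stated under assumptions and is not necessarily the exact statement of Lemma 3. The assumed scaling is $H(u) = u^2/(2\tau)$ for $|u| \le \tau$ and $H(u) = |u| - \tau/2$ otherwise, with $f(x) = \sum_i H(x_i)$.

```latex
% Case 1: |u| <= tau. The gap |u| - H(u) is increasing in |u| on [0, tau].
|u| - H(u) = |u| - \frac{u^{2}}{2\tau}
           = |u|\left(1 - \frac{|u|}{2\tau}\right) \in \left[0, \tfrac{\tau}{2}\right]

% Case 2: |u| > tau. The gap is constant.
|u| - H(u) = |u| - \left(|u| - \frac{\tau}{2}\right) = \frac{\tau}{2}

% Summing over the n components of x:
f(x) \;\le\; \|x\|_{1} \;\le\; f(x) + \frac{n\tau}{2}
```

Under these assumptions, the gap between the smoothed function and the L1 norm is at most $n\tau/2$, so it vanishes as $\tau \to 0$.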

From Lemma 3 and the mechanism of Algorithm 2, we immediately obtain the following theorem.

Theorem 1. Let {xk} denote the sequence generated by L-BFGS quasi-Newton method iteration. When the smoothing parameter of ∥x∥1 satisfies τk→0, then {xk} is a bounded sequence. Let x* denote any limit point of {xk}, then x* is the optimal solution to the L1 norm minimization problem.

Prove the smooth parameter τk for the step k, make = arg denotes the minimum point of the objective function Fk(x) at step k, and z denote any vector satisfying .

Because

so

also because

, so:

And from Lemma 3,

then:

Since

so:

In combination with Equations (11) and (12), the smoothness of

is:

In addition, when the smoothing parameter $\tau_k \to 0$, $\{x_k\}$ is a bounded sequence. It can also be proved that the limit point $x^*$ of the sequence $\{x_k\}$ is feasible and is a KKT point of the $L_1$ norm minimization problem. Since $\|x\|_1$ is convex and problem (3) is a convex programming problem, the KKT condition is sufficient for optimality, so $x^*$ is the optimal solution to the $L_1$ norm minimization problem (3). It follows that the sparse signal of the wireless sensor network can be accurately reconstructed by the L-BFGS quasi-Newton method.

Corollary 1. Let the L1 norm minimization problem have a unique optimal solution, and let {xk} represent the sequence generated by an iteration of the L-BFGS method. When k→∞ and τk→0, the xk converges to x*, where x* = arg min{∥x ∥1:Ax = y}.

6. L-BFGS Quasi-Newton Method Steps

The L-BFGS quasi-Newton method is developed from the BFGS quasi-Newton method. The basic idea of the BFGS quasi-Newton method is to start from the identity matrix $I$ and update it step by step so that it approximates the inverse of the Hessian matrix, which avoids calculating that inverse directly. However, $H_k$ must be stored at every iteration. Because $H_k$ is very large, calculating and storing it directly slows the reconstruction. According to Equation (8), each $H_k$ is obtained from the previous one using the curvature information $(s_k, u_k)$. Since $H_k$ cannot be stored easily, the curvature information $(s_k, u_k)$ is stored instead. This saves memory and improves the speed of the algorithm.

When the number of iterations is very large, not all pairs $(s_k, u_k)$ can be saved. The reconstruction rate can be improved by discarding the oldest pairs. Let $m$ be the number of stored vector pairs. At iteration $m + 1$, $(s_1, u_1)$ is discarded; at iteration $m + 2$, $(s_2, u_2)$ is discarded; and so on, so that only the latest $m$ pairs $(s_k, u_k)$ are retained. In this way, although some reconstruction accuracy is lost, memory is saved, the algorithm complexity is reduced, and the reconstruction rate is improved. The L-BFGS algorithm can therefore be understood as a further optimization of the BFGS algorithm.

When the L-BFGS quasi-Newton method is used to solve the sparse signal reconstruction problem in wireless sensor networks, the specific steps are as follows:

Step 1: Initialize the variables: select the initial point $x_0$ and define the error tolerance $\varepsilon$. The value of $\varepsilon$ is generally between 0 and 1, and the initial value of the matrix $H$ is the identity matrix $I$ of order $n$;

Step 2: Let $k = 1$ and calculate the gradient $g_k$ at this point;

Step 3: Calculate $g_{k+1}$. If the norm of $g_{k+1}$ satisfies the defined error tolerance, namely $\|g_{k+1}\| \le \varepsilon$, the stopping condition is met and the algorithm terminates; the iteration point $x_{k+1}$ is the optimal solution. Otherwise, go to step 4 and continue the search for the optimal solution;

Step 4: Calculate the search direction $d_k$ and the step size $\alpha_k$, and update $x_{k+1}$. There are many methods for calculating the step size $\alpha_k$; here, the inexact Wolfe line search is used, which guarantees that the step size is larger than 0. With a correct search direction, $x_{k+1}$ moves closer and closer to the optimal solution;

Step 5: If $k \ge m$, keep the latest curvature information $(s_k, u_k)$ and delete $(s_{k-m}, u_{k-m})$. The L-BFGS quasi-Newton method retains $m$ pairs of curvature information; once more than $m$ pairs have been generated, it deletes the pairs older than the latest $m$ to improve the calculation speed. This is also what distinguishes it from the BFGS quasi-Newton method;

Step 6: Let $k = k + 1$ and return to step 3. The iteration continues until the optimal solution is obtained and the algorithm stops.

Special remarks: In step 1, the initial value $x_0$ of the iteration should be selected near the solution to ensure the convergence of the iterative process. The most common way is to choose the initial value by using Newton's convergence theorem. In step 4, the correctness of the search direction $d_k$ should be ensured. If the search direction is not a descent direction at $x$, the iteration points will deviate more and more from the optimal point, and the sparse signal cannot be accurately reconstructed. There are many ways to calculate the descent direction $d_k$. In this paper, the two-loop recursive algorithm is adopted because it is simple and ensures the accuracy of the search direction. In step 5, if $k \ge m$ is satisfied, calculate the new curvature information and discard the oldest pair $(s_{k-m}, u_{k-m})$, giving up a little reconstruction accuracy in exchange for a faster reconstruction rate. If $k \ge m$ is not satisfied, directly calculate $(s_k, u_k)$. In step 6, let $k = k + 1$ and start a new iteration; no initialization is required. The result of the previous iteration is used as the initial value, and the iteration continues until the optimal solution is obtained.
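The six steps can be sketched as a short, self-contained loop. This is an illustrative implementation under assumptions, not the authors' code: the line search is a simple bisection scheme that stops once the standard Wolfe conditions hold, the initial matrix in the two-loop recursion is a scaled identity, and the objective is a hypothetical convex quadratic standing in for the smoothed reconstruction objective F(x). All function names and parameter defaults are made up for the example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def two_loop(g, pairs):
    """Return H g from the stored (s, u) pairs, ordered oldest to newest."""
    q, coeffs = list(g), []
    for s, u in reversed(pairs):
        a = dot(s, q) / dot(u, s)
        q = [qi - a * ui for qi, ui in zip(q, u)]
        coeffs.append(a)
    gamma = dot(pairs[-1][0], pairs[-1][1]) / dot(pairs[-1][1], pairs[-1][1]) if pairs else 1.0
    r = [gamma * qi for qi in q]
    for (s, u), a in zip(pairs, reversed(coeffs)):
        b = dot(u, r) / dot(u, s)
        r = [ri + (a - b) * si for ri, si in zip(r, s)]
    return r


def wolfe_step(F, grad, x, d, rho1=1e-4, rho2=0.9, max_iter=50):
    """Bisection-style search for a step that satisfies the Wolfe conditions."""
    lo, hi, alpha = 0.0, float("inf"), 1.0
    f0, slope0 = F(x), dot(grad(x), d)
    for _ in range(max_iter):
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        if F(x_new) > f0 + rho1 * alpha * slope0:      # step too long
            hi = alpha
            alpha = 0.5 * (lo + hi)
        elif dot(grad(x_new), d) < rho2 * slope0:      # step too short
            lo = alpha
            alpha = 2.0 * lo if hi == float("inf") else 0.5 * (lo + hi)
        else:
            break
    return alpha


def lbfgs(F, grad, x0, m=5, eps=1e-6, max_iter=200):
    x, g = list(x0), grad(x0)                          # Steps 1 and 2
    pairs = []
    for _ in range(max_iter):
        if dot(g, g) ** 0.5 <= eps:                    # Step 3: stopping test
            break
        d = [-ri for ri in two_loop(g, pairs)]         # Step 4: direction and step
        alpha = wolfe_step(F, grad, x, d)
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x_new)
        s = [a - b for a, b in zip(x_new, x)]
        u = [a - b for a, b in zip(g_new, g)]
        if dot(u, s) > 0.0:                            # keep only valid curvature pairs
            pairs.append((s, u))
            if len(pairs) > m:                         # Step 5: drop the oldest pair
                pairs.pop(0)
        x, g = x_new, g_new                            # Step 6: next iteration
    return x


# Hypothetical convex quadratic with minimizer (1, -2, 0).
def quad(x):
    return 0.5 * (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 2.0) ** 2 + 0.5 * x[2] ** 2


def quad_grad(x):
    return [x[0] - 1.0, 4.0 * (x[1] + 2.0), x[2]]


x_min = lbfgs(quad, quad_grad, [0.0, 0.0, 3.0])
print(x_min)
```

Only the list `pairs` grows with the iteration count, and it is capped at `m` entries, so memory use is proportional to m × n rather than n × n.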

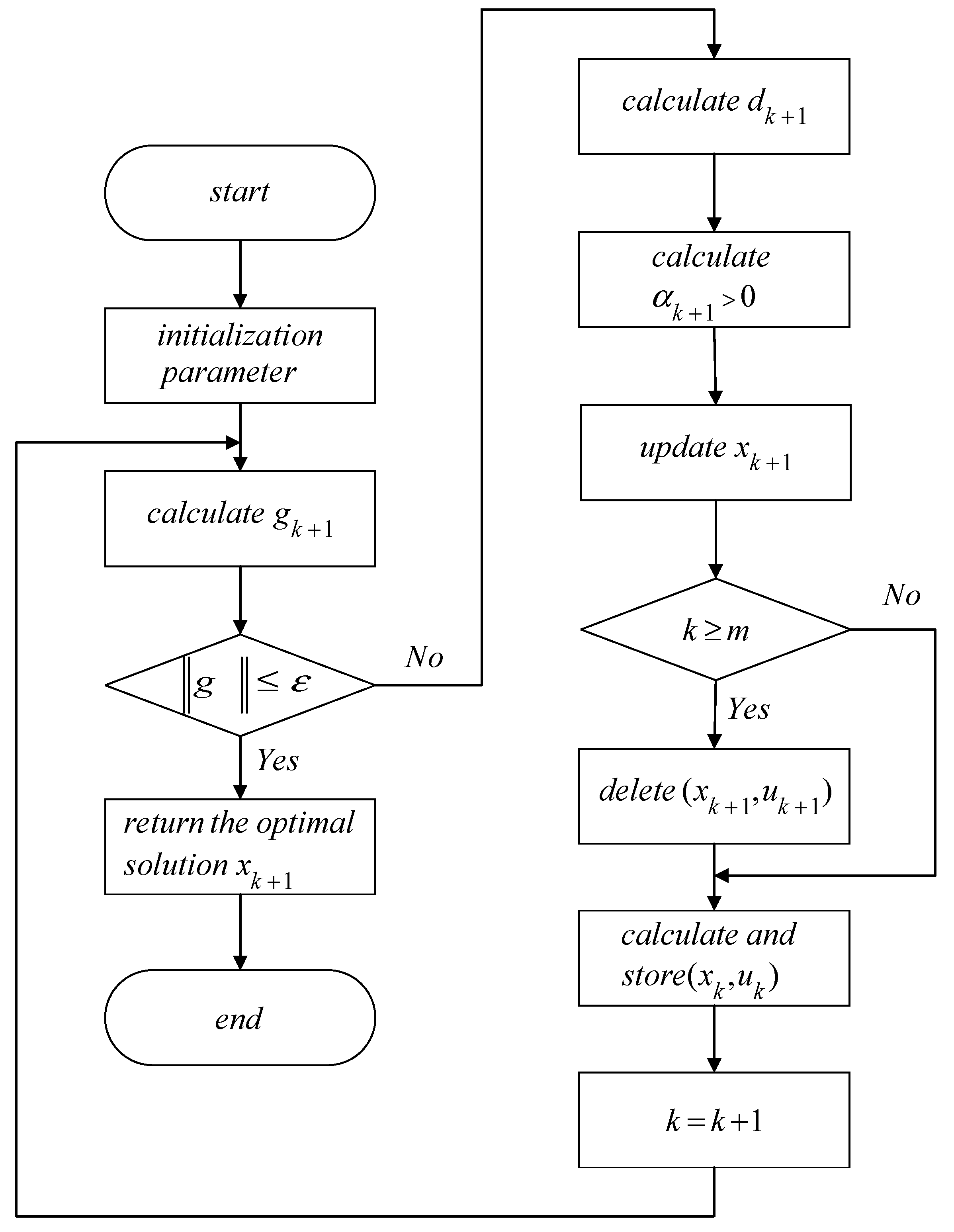

The flow chart of the L-BFGS quasi-Newton method is shown in Figure 1.

7. Experimental Simulation

To verify the effectiveness of the L-BFGS quasi-Newton method for signal reconstruction in wireless sensor networks, simulation experiments are carried out. A Gaussian matrix satisfies the restricted isometry property with high probability. In the experiment, a measurement matrix $\Phi \in \mathbb{R}^{M \times N}$ with Gaussian-distributed entries is used as the observation matrix, and a Gaussian sparse signal $x \in \mathbb{R}^{N}$ with variable sparsity is used as the original signal. The sparsity $K$ ranges over [1, 70].
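A test instance of this kind can be generated as in the sketch below. The details are assumptions: the entries are drawn from a standard normal distribution without any column normalization, the support of the sparse signal is chosen uniformly at random, and the seed, sizes, and function names are arbitrary choices for illustration rather than the settings used in the experiments.

```python
import random


def gaussian_matrix(M, N, rng):
    """M x N matrix with independent standard normal entries (list of rows)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(N)] for _ in range(M)]


def sparse_gaussian_signal(N, K, rng):
    """Length-N signal with K Gaussian entries on a random support, zeros elsewhere."""
    x = [0.0] * N
    for i in rng.sample(range(N), K):
        x[i] = rng.gauss(0.0, 1.0)
    return x


rng = random.Random(0)          # fixed seed so the instance is reproducible
N, M, K = 256, 128, 25          # signal length, number of measurements, sparsity

Phi = gaussian_matrix(M, N, rng)
x_true = sparse_gaussian_signal(N, K, rng)

# Observation vector y = Phi x.
y_obs = [sum(p * xi for p, xi in zip(row, x_true)) for row in Phi]
print(len(y_obs), sum(1 for v in x_true if v != 0.0))
```

Averaging over many such random instances, as done in the experiments, reduces the influence of any single draw of the matrix and signal.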

The simulation experiment in this paper takes the reconstruction accuracy and reconstruction time of each algorithm for the original signal as the main evaluation criteria, and the unit of time is a second (s). In the simulation experiment, each experiment is carried out 1000 times, and the average reconstruction accuracy and average reconstruction time of the 1000 experiments are taken as the final reconstruction accuracy and reconstruction time.

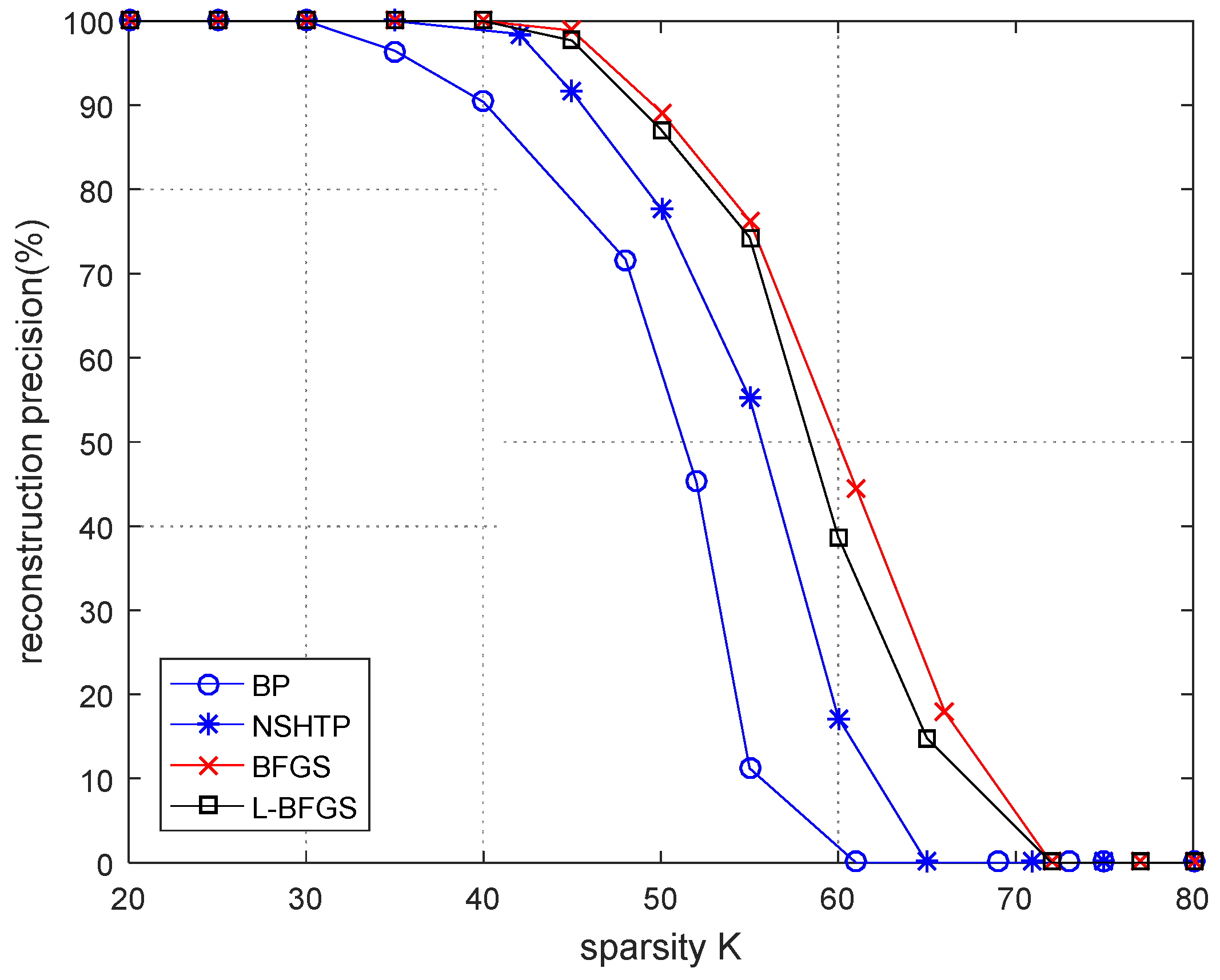

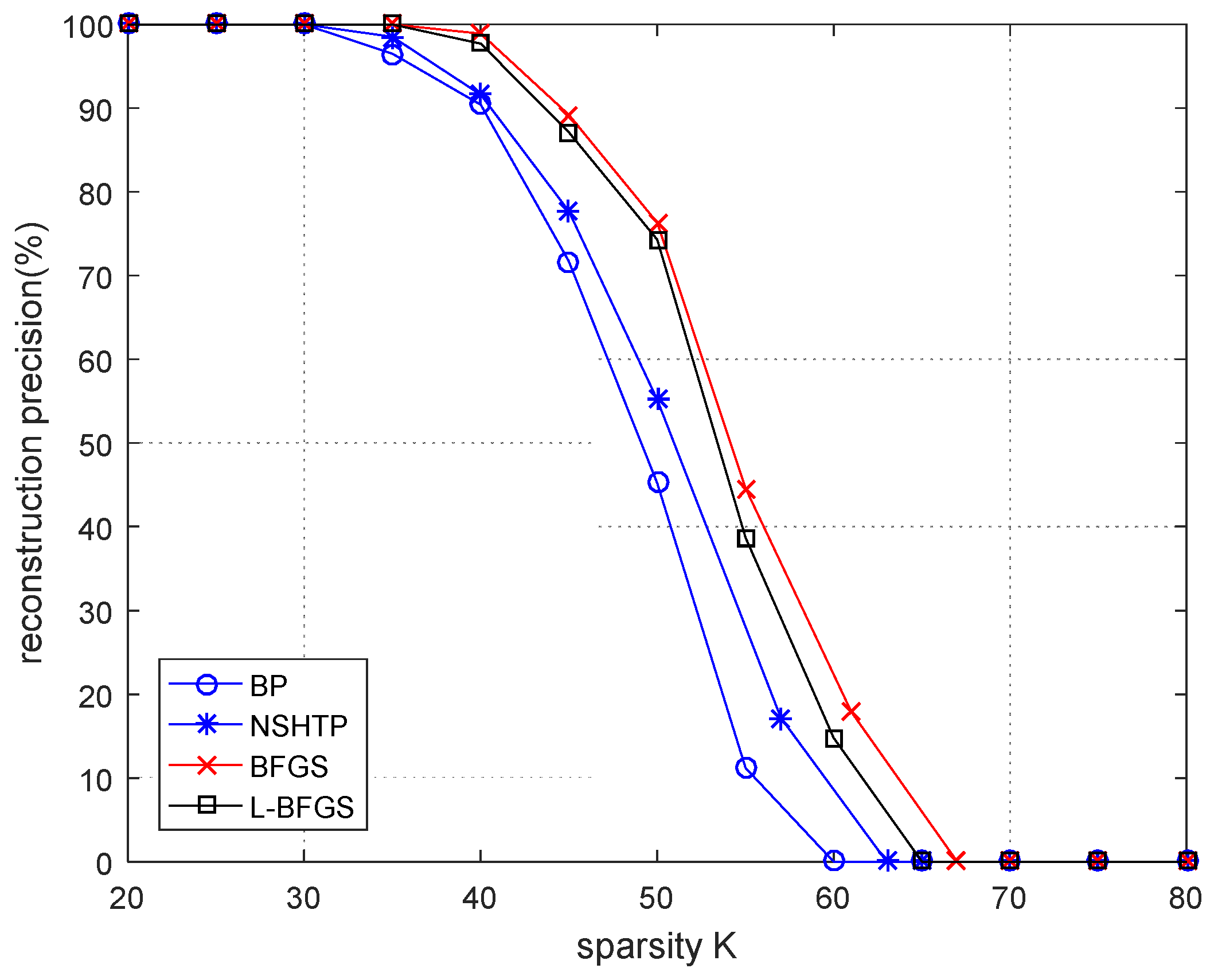

This paper mainly compares the reconstruction accuracy and reconstruction time of the BP algorithm, the NSHTP algorithm [20], the BFGS algorithm, and the L-BFGS algorithm at different sparsity levels. When the data size changes, the difference in reconstruction accuracy is small, so the reconstruction accuracy is analyzed for two sets of data: n = 512, m = 256, and n = 256, m = 128. The reconstruction time is greatly affected by the data size. To better compare the reconstruction time of each algorithm, four groups of data are tested: n = 128, m = 64; n = 256, m = 128; n = 512, m = 256; n = 1024, m = 512.

First, there are two simulation diagrams about the reconstruction accuracy. In Figure 2, n = 256 and m = 128. As can be seen from the figure, there is little difference in reconstruction accuracy between the four algorithms. The reconstruction rate of the four algorithms is high. The BP algorithm has the worst reconstruction accuracy: its accuracy starts to decrease at K = 35, and it cannot reconstruct the signal at K = 62. The BFGS algorithm has the best reconstruction accuracy, and the difference between the proposed algorithm and the BFGS algorithm is very small. For both algorithms, the reconstruction accuracy begins to decrease at K = 40, and the signal cannot be reconstructed at K = 72. In general, the reconstruction accuracy of the BFGS algorithm is slightly higher than that of the proposed algorithm, but the reconstruction accuracy of the proposed algorithm is higher than that of the NSHTP algorithm and the BP algorithm.

In Figure 3, n = 512 and m = 256. In this case, the reconstruction accuracy differs only slightly from that for n = 256 and m = 128; however, the reconstruction accuracy of all algorithms has deteriorated. This shows that the larger n and m are, the worse the reconstruction accuracy. The difference between the proposed algorithm and the BFGS algorithm is still very small and within an acceptable range.

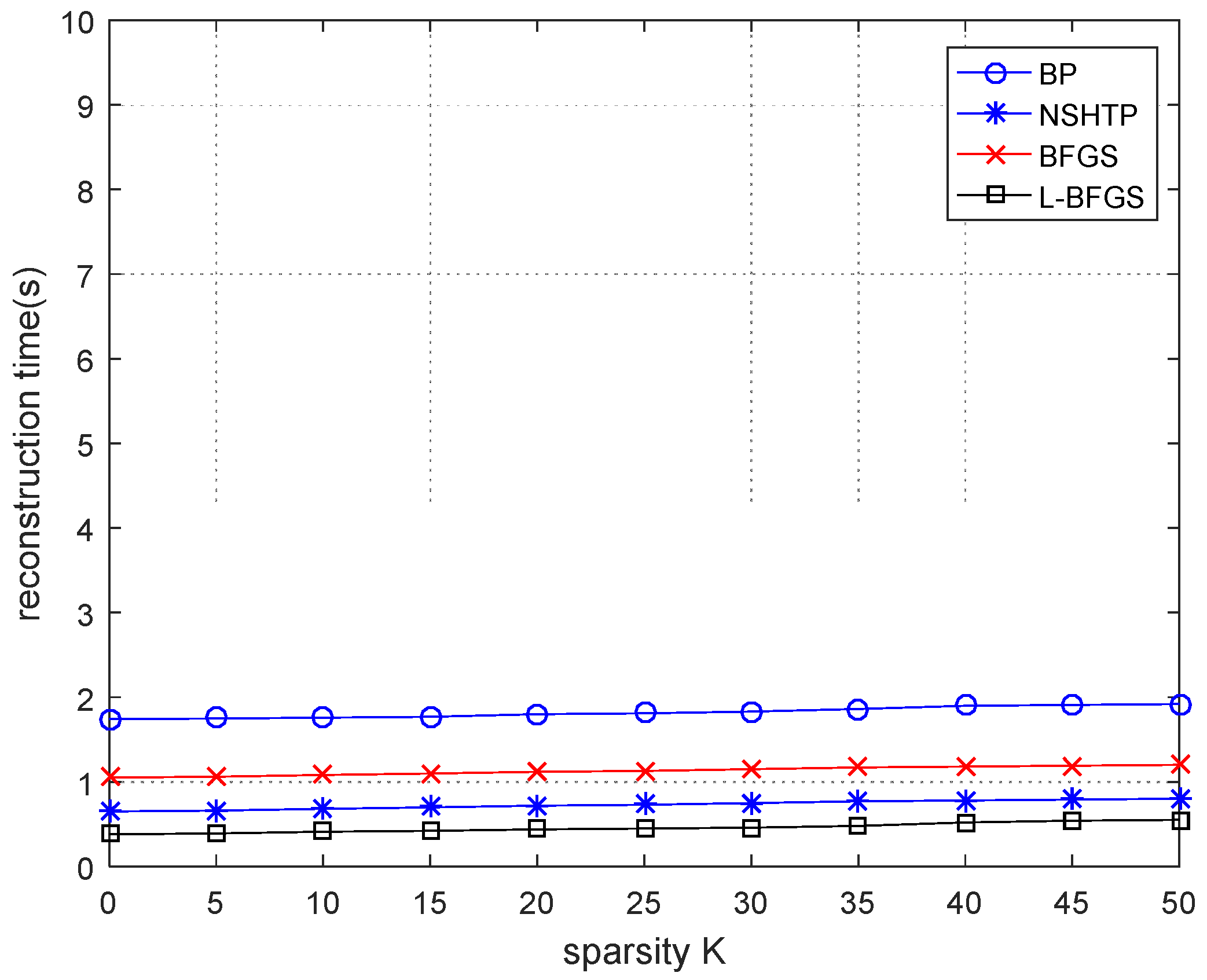

Secondly, there are four simulation diagrams about the reconstruction rate. In Figure 4, n = 128 and m = 64. It can be seen from Figure 4 that the BP algorithm requires the longest reconstruction time of the four algorithms and therefore has the lowest reconstruction rate. The reconstruction times of the NSHTP algorithm and the BFGS algorithm lie between those of the BP algorithm and the proposed algorithm, and the proposed algorithm requires the shortest reconstruction time and has the highest reconstruction rate. The reconstruction time of the BFGS algorithm is twice that of the proposed algorithm. As can be seen from the figure, when the data length is short, the reconstruction rate of all algorithms is high, and the sparsity has little influence on the reconstruction time: there is no obvious mutation point in the reconstruction time as the sparsity increases.

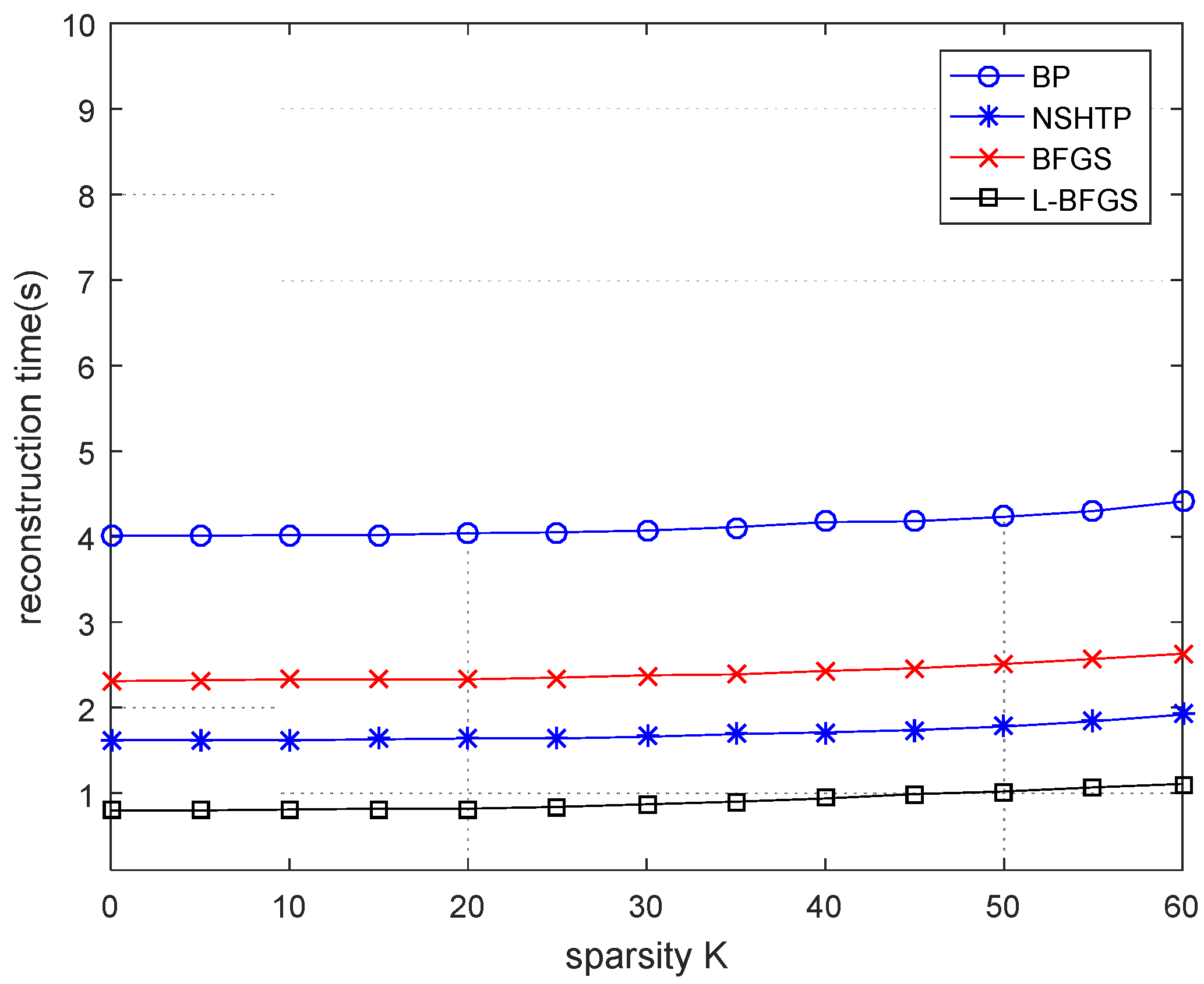

In Figure 5, n = 256 and m = 128. The reconstruction times of the four algorithms are significantly longer than those for n = 128 and m = 64, which indicates that as the data length increases, signal reconstruction takes longer and the reconstruction rate is lower. As can be seen from Figure 5, the proposed algorithm has the shortest reconstruction time and the fastest reconstruction rate, while the BP algorithm has the longest reconstruction time and the worst reconstruction rate. The reconstruction times of the NSHTP algorithm and the BFGS algorithm lie between those of the BP algorithm and the proposed algorithm. In general, when the signal length increases, the reconstruction rate of all algorithms is affected, but the proposed algorithm remains the fastest and is significantly faster than the BFGS algorithm.

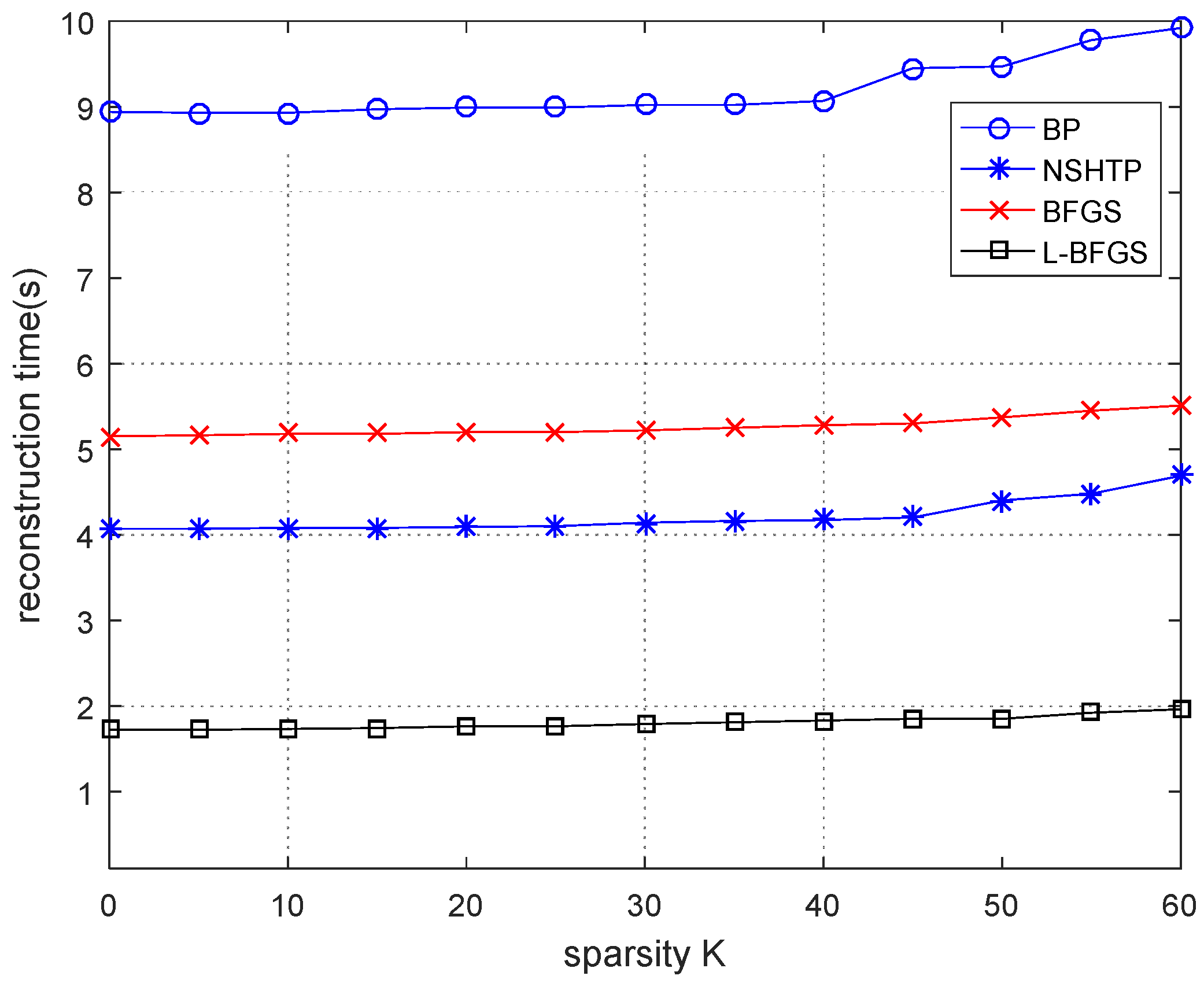

In Figure 6, n = 512 and m = 256. All algorithms require longer reconstruction times as the length of the data increases. The proposed algorithm still has the shortest reconstruction time and the fastest reconstruction rate, and the BP algorithm still has the worst reconstruction rate. When K = 40, the BP algorithm has a small mutation point, and when K = 45, the NSHTP algorithm also has a small mutation point. Neither the proposed algorithm nor the BFGS algorithm has an obvious mutation point. This indicates that when the data length increases, the sparsity has a small influence on the BP algorithm and the NSHTP algorithm but no obvious influence on the proposed algorithm and the BFGS algorithm. In general, when n = 512 and m = 256, the proposed algorithm has the shortest reconstruction time and the fastest reconstruction rate among the four algorithms. It is less affected by sparsity, and its reconstruction rate is significantly higher than that of the BFGS algorithm.

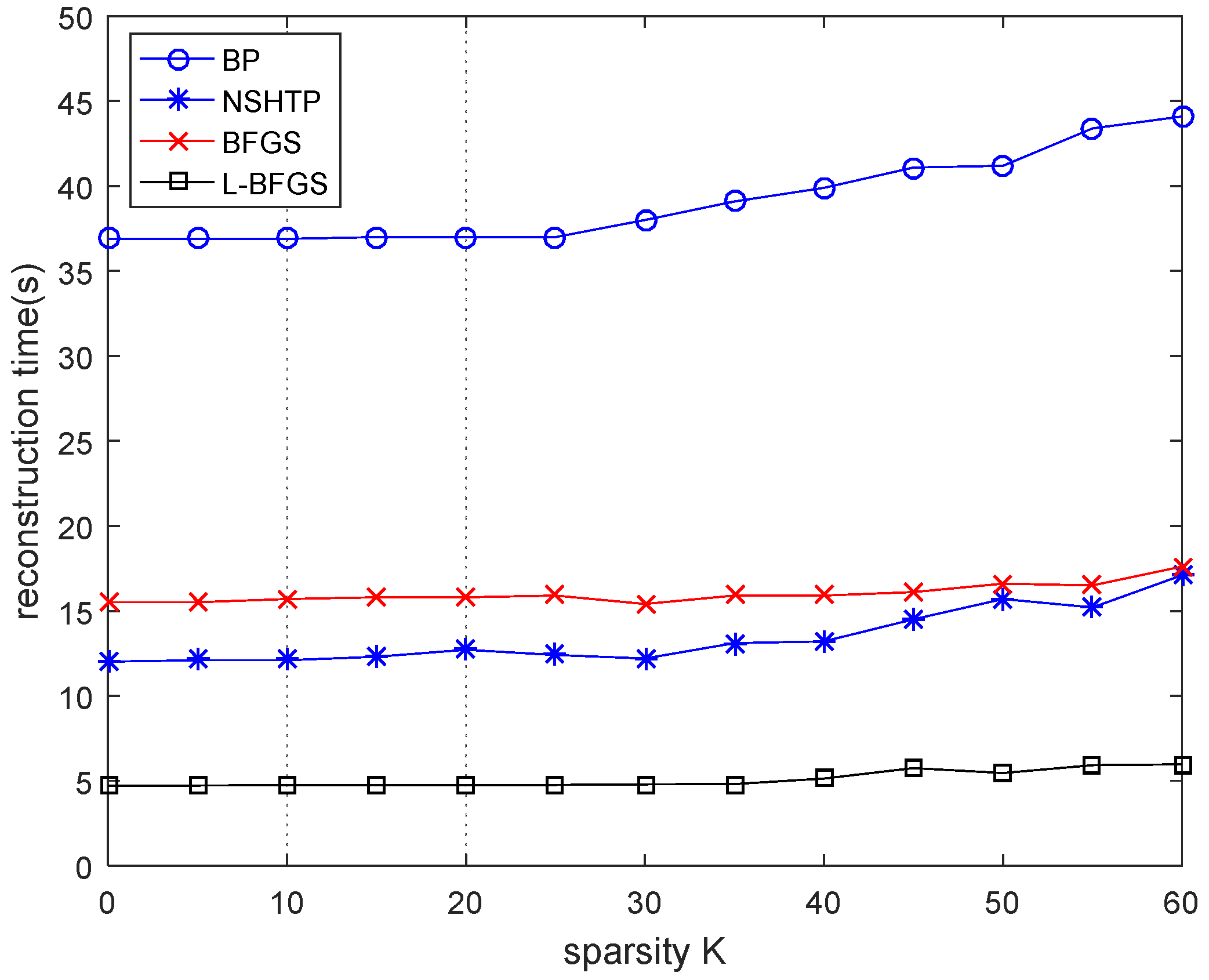

In Figure 7, n = 1024 and m = 512. The reconstruction time of all algorithms becomes longer, but the proposed algorithm still has the shortest reconstruction time and the fastest reconstruction rate. The BP algorithm has the longest reconstruction time and the worst reconstruction rate. As shown in Figure 7, as the sparsity increases, the BP algorithm has a mutation point at K = 30. At K = 35, the NSHTP algorithm has a mutation point, and the BFGS algorithm has a small mutation point. At K = 45, a small mutation point appears for the proposed algorithm. This indicates that when n = 1024 and m = 512, sparsity has an impact on all algorithms: the BP algorithm and the NSHTP algorithm are affected more, and the proposed algorithm and the BFGS algorithm are affected less. In general, the reconstruction rate of the proposed algorithm is the fastest and most stable, and it is significantly better than that of the BFGS algorithm.

Finally, the reconstruction times of the four groups of data (n = 128, m = 64; n = 256, m = 128; n = 512, m = 256; n = 1024, m = 512) are compared. The longer the data, the longer the signal reconstruction takes and the lower the reconstruction rate. When n = 512, m = 256, and K = 25, the signal reconstruction time is 9.11 s for the BP algorithm, 4.23 s for the NSHTP algorithm, 5.17 s for the BFGS algorithm, and 1.93 s for the proposed algorithm. When n = 1024, m = 512, and K = 25, the times become 37.25 s, 12.57 s, 15.93 s, and 4.84 s, respectively. Thus, when the data length and matrix size are doubled, the reconstruction time of the BP algorithm grows by a factor of more than 4, the reconstruction times of the NSHTP algorithm and the BFGS algorithm roughly triple, and the reconstruction time of the proposed algorithm grows by a factor of about 2.5. This shows that when n and m grow, the reconstruction rate of the proposed algorithm is the most stable and the fastest. The simulations of reconstruction accuracy and reconstruction rate show that the L-BFGS algorithm is slightly worse than the BFGS algorithm in reconstruction accuracy, but its reconstruction rate is significantly higher. In general, the L-BFGS algorithm is superior to the BFGS algorithm and is more suitable for sparse signal reconstruction in wireless sensor networks.
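The growth factors quoted above follow directly from the reported times. The short calculation below reproduces them from the K = 25 figures given in the text; only the dictionary layout and variable names are introduced here.

```python
# Reconstruction times in seconds at K = 25, as reported in the text.
times_512 = {"BP": 9.11, "NSHTP": 4.23, "BFGS": 5.17, "L-BFGS": 1.93}
times_1024 = {"BP": 37.25, "NSHTP": 12.57, "BFGS": 15.93, "L-BFGS": 4.84}

# Factor by which each time grows when n and m are doubled.
growth = {name: times_1024[name] / times_512[name] for name in times_512}

# Ratio of BFGS time to L-BFGS time at each problem size.
bfgs_over_lbfgs = {
    512: times_512["BFGS"] / times_512["L-BFGS"],
    1024: times_1024["BFGS"] / times_1024["L-BFGS"],
}

for name, factor in growth.items():
    print(f"{name}: x{factor:.2f}")
print({size: round(ratio, 2) for size, ratio in bfgs_over_lbfgs.items()})
```

The proposed algorithm has both the smallest absolute times and the smallest growth factor, which is what the stability claim rests on.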

8. Conclusions

To address the low reconstruction rate of Newton's method when reconstructing sparse signals in wireless sensor networks, this paper proposes using the L-BFGS quasi-Newton algorithm to improve the reconstruction rate. The L-BFGS algorithm does not need to store and calculate $H_k$ directly; only two matrices of size $m \times n$ need to be stored. By discarding some vectors and sacrificing some reconstruction accuracy, the algorithm greatly reduces the reconstruction time and improves the reconstruction rate compared with the BFGS algorithm. Simulation experiments compare the reconstruction accuracy and reconstruction time of the proposed algorithm, the BFGS algorithm, the NSHTP algorithm, and the BP algorithm. The experiments show that the reconstruction accuracy of the proposed algorithm is higher than that of the BP algorithm and the NSHTP algorithm but slightly lower than that of the BFGS algorithm. However, the reconstruction rate of the proposed algorithm is the fastest and most stable among the four algorithms and is about twice that of the BFGS algorithm. Therefore, the proposed algorithm has a high reconstruction rate and certain advantages in terms of reconstruction accuracy, and it is a better reconstruction algorithm than the BFGS algorithm.