RESTful API Analysis, Recommendation, and Client Code Retrieval

Abstract

1. Introduction

2. Background and Related Work

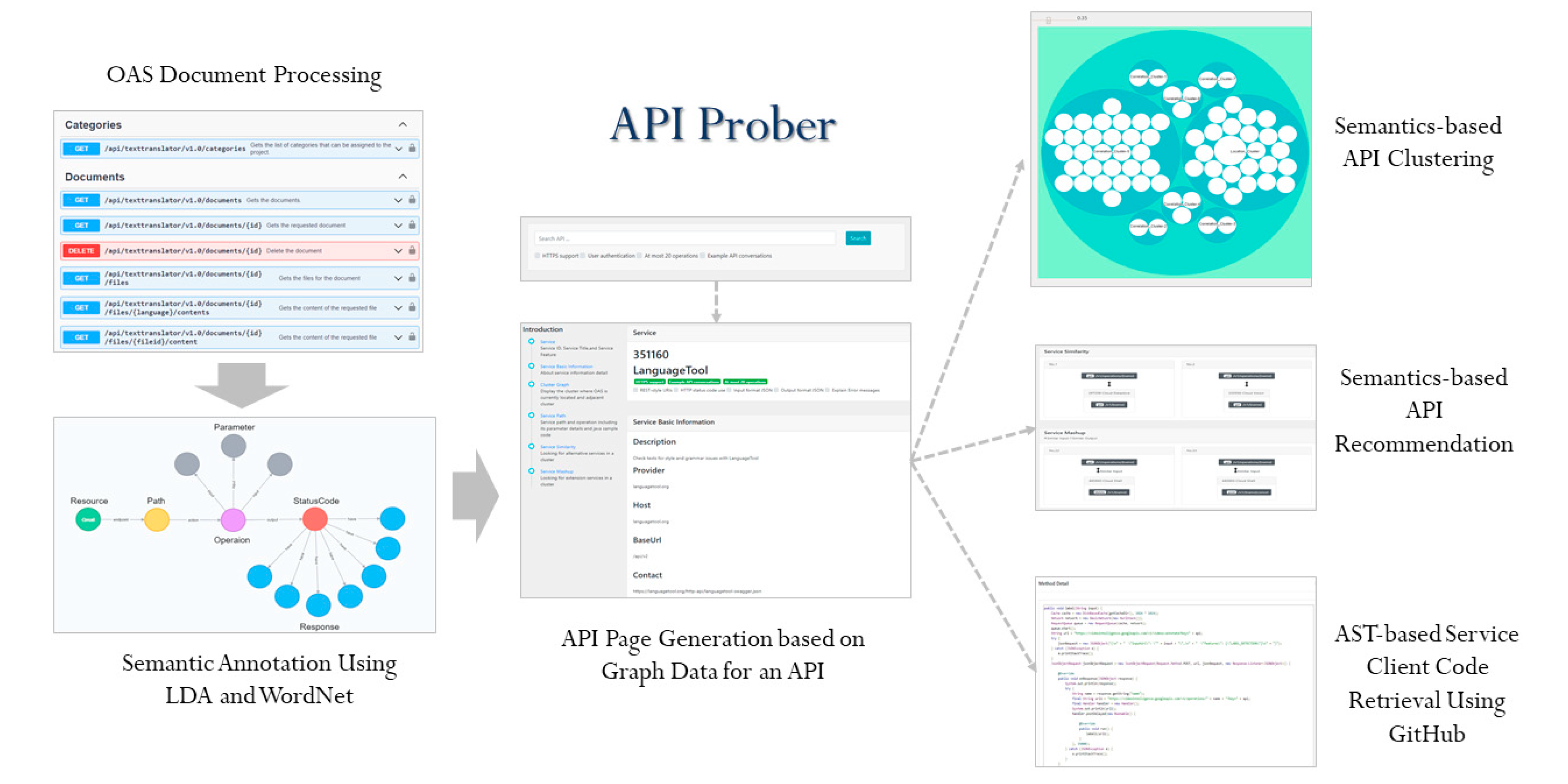

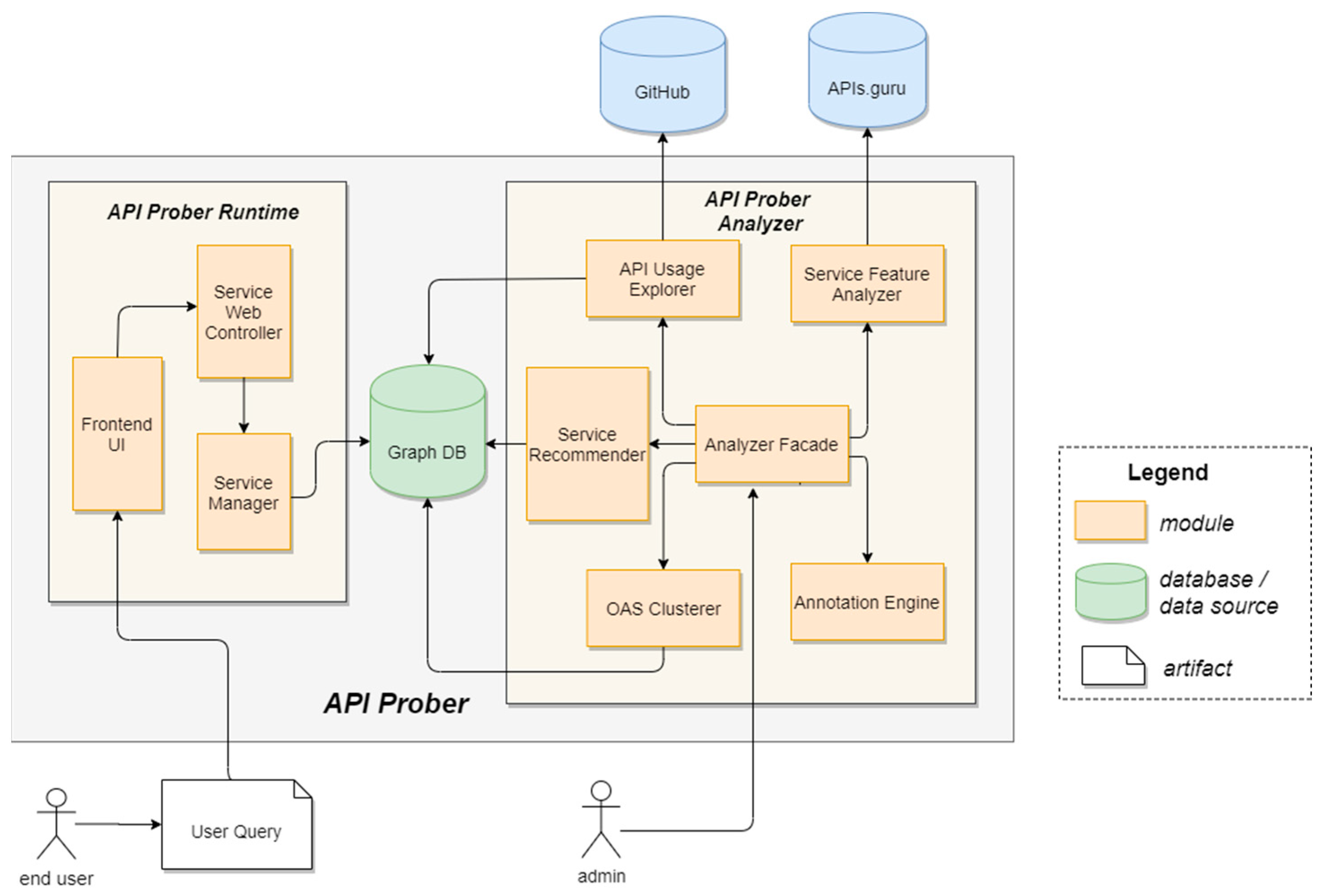

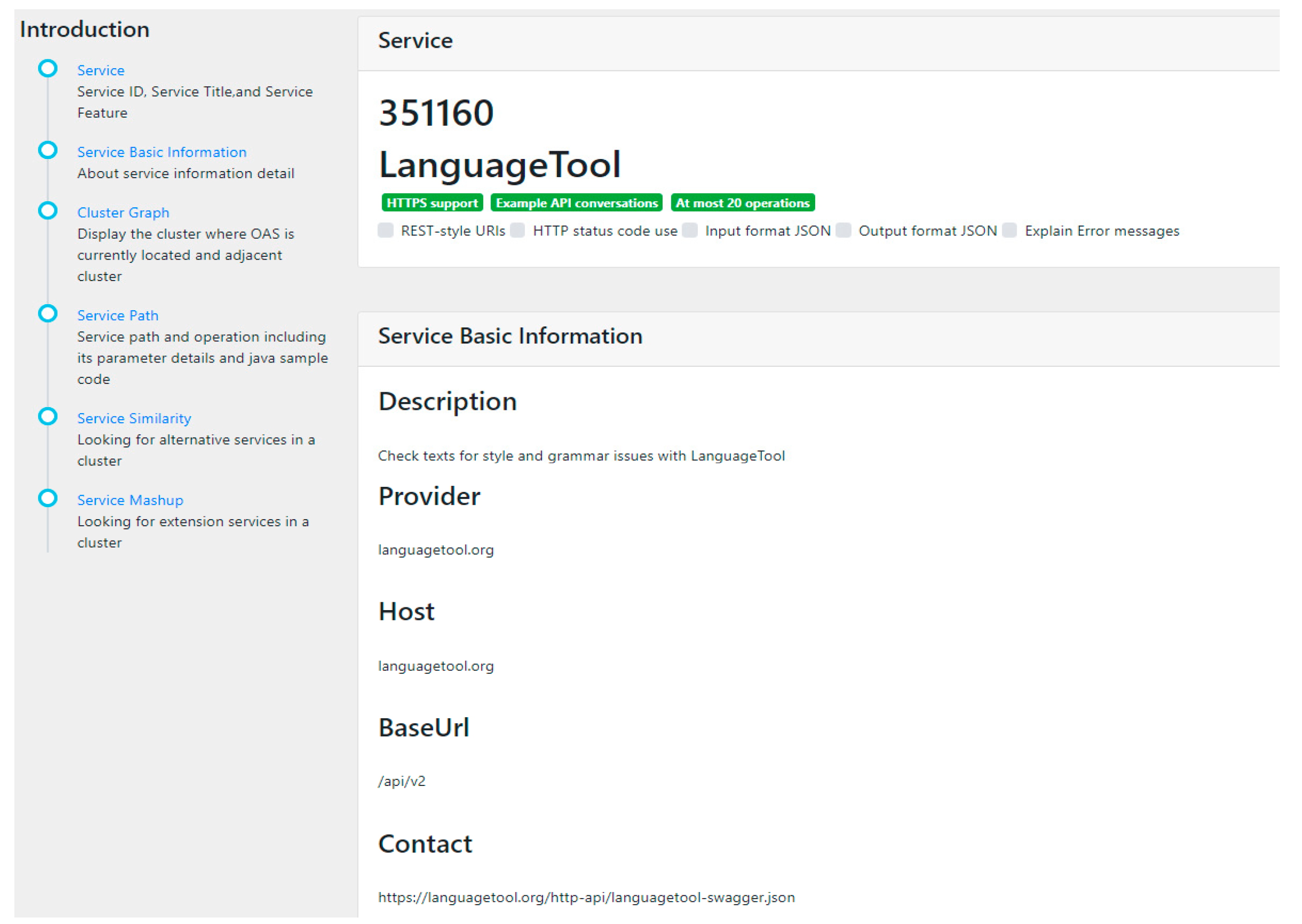

3. Approach Descriptions

3.1. Transforming OAS into Nodes

3.2. Web API Clustering

3.2.1. Document Concept Score (DCS)

3.2.2. Parameter Settings for Clustering

- The number of LDA topics: API Prober uses the words of the retrieved LDA topics for the annotation of OAS documents;

- Weights of resources and operations for DCS: API Prober uses the resource and operations portions of OAS files to calculate the DCS score (Equation (2)). In general, the resource part describes the overall purpose of the service, and the operations part specifies the functionality of a service endpoint;

- Weight of WordNetScore: Using WordNet, API Prober generates additional terms similar to the topic words produced by LDA. Word expansion can significantly increase the probability of matching services and enhance matching precision. In API Prober, the weight of WordNetScore in Equation (1) ranges from 0 to 1;

- Clustering method applied: Finally, a clustering method must be selected from among complete linkage, average linkage, centroid linkage, and weighted linkage. Single linkage [24] is excluded because it tends to produce a few unreasonably large clusters rather than size-balanced clusters.
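To illustrate why the choice of linkage matters, the following minimal sketch runs bottom-up clustering on a toy set of one-dimensional values. The data, the function name `agglomerative`, and the use of absolute difference as the distance are assumptions made for illustration only; API Prober clusters OAS documents by DCS-based similarity, which is not reproduced here.

```python
from itertools import combinations


def agglomerative(points, k, linkage="complete"):
    """Merge 1-D points bottom-up until k clusters remain (toy illustration)."""
    clusters = [[p] for p in points]

    def dist(a, b):
        # All pairwise distances between members of clusters a and b.
        pairs = [abs(x - y) for x in a for y in b]
        if linkage == "single":
            return min(pairs)               # closest pair decides
        if linkage == "complete":
            return max(pairs)               # farthest pair decides
        if linkage == "average":
            return sum(pairs) / len(pairs)  # mean over all pairs
        raise ValueError(f"unknown linkage: {linkage}")

    while len(clusters) > k:
        # Pick the two clusters with the smallest linkage distance and merge them.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: dist(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return sorted(sorted(c) for c in clusters)


# Hypothetical data: gaps between neighbours grow slowly (10, 11, ..., 16).
toy = [0, 10, 21, 33, 46, 60, 75, 91]
single = agglomerative(toy, 2, "single")
complete = agglomerative(toy, 2, "complete")
print([len(c) for c in single])    # cluster sizes under single linkage
print([len(c) for c in complete])  # cluster sizes under complete linkage
```

On this toy input, single linkage chains neighbouring points into one cluster of seven values plus a singleton, whereas complete linkage yields two clusters of four values each, which matches the size-balance argument above.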

3.2.3. Evaluation Methods and Parameter Settings for Service Clustering

3.3. API Recommendations Based on Identified Clusters

- Substitutable services have input and output parameters similar to those of the target service. Substitutable services can be used as an alternative in situations where the target service fails to satisfy user requirements pertaining to quality or functionality. For example, a user could build a flight reservation system integrating multiple ticket ordering services from various airlines;

- Mergeable services provide “horizontal” service compositions integrating multiple services with similar inputs or outputs. Input-oriented mergeable services can be used to integrate output data from different services, based on a given input. For example, a user could build a housing recommendation system integrating information related to the proximity of restaurants, convenience stores, and schools. Output-oriented mergeable services can help users to collect multiple data items for multiple queries. For example, a user could build a music discovery system that searches for songs based on singer names, song names, composers, lyricists, and movie names.

1. Calculation of Parameter Concept Scores (PCS)

2. Calculation of Hungarian Mapping Scores (HMS):

   - For input parameters, API Prober calculates the degree to which the input parameters of the target service (TS) match the input parameters of a candidate service (CS);

   - For output parameters, API Prober calculates the degree to which the output parameters of a CS match the expected output parameters of the TS.

3. Service Recommendation
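The mapping step can be sketched as an optimal one-to-one assignment between the parameters of the TS and those of a CS. The sketch below finds that assignment by brute force over permutations, which is only practical for small parameter lists; the Hungarian algorithm computes the same optimum efficiently. The similarity matrix, the function name `best_assignment_score`, and the normalization (mean similarity of the matched pairs) are assumptions for illustration and are not the HMS formula of API Prober.

```python
from itertools import permutations


def best_assignment_score(sim):
    """Return (mean similarity, mapping) of the best one-to-one assignment.

    sim[i][j] is a hypothetical similarity between target parameter i and
    candidate parameter j; the matrix must have at least as many columns as rows.
    """
    n_rows, n_cols = len(sim), len(sim[0])
    best_total, best_map = float("-inf"), None
    # Try every way of giving each target parameter a distinct candidate parameter.
    for cols in permutations(range(n_cols), n_rows):
        total = sum(sim[i][c] for i, c in enumerate(cols))
        if total > best_total:
            best_total, best_map = total, list(cols)
    return best_total / n_rows, best_map


# Hypothetical similarities: a greedy match (row 0 -> column 0) would force
# row 1 onto column 1 (0.1); the optimal assignment swaps the two columns.
sim = [
    [0.9, 0.8],
    [0.8, 0.1],
]
score, mapping = best_assignment_score(sim)
print(mapping, round(score, 2))
```

Matching parameters jointly rather than greedily avoids the case in which one strong pair forces all remaining parameters into poor matches.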

3.4. Discovering Service Client Code Examples

- (1) Import: libraries used in the Java file, indicating the relevant functions that may be used in the Java file;

- (2) Class: class of the Java file;

- (3) Class/instance variable: variables declared in the Java file at the class level;

- (4) Inner class: one or more internal classes are contained in Java files, and these are retrieved via a recursive search;

- (5) Method name: name of a method declared in the class;

- (6) Method body: internal representation of the method body;

- (7) Statement: a statement line in the method, which is the smallest unit in Java Parser.

- The service path may have a valid superset. When designing an API, the handling of resources by the service provider tends to vary according to service operations, which commonly results in service paths with a superset/subset design. For example, we may have the following similar but different operations: http://mysearch.com/search, http://mysearch.com/search/group. To reduce the probability of false searches when searching for code fragments, JMP determines whether the retrieved path is part of a longer path (i.e., a subset of the retrieved path) and keeps only the longer path;

- APIs based on the REST style may contain resources that can be manipulated. A service based on the REST style may contain resource-based operations such as http://mysearch.com/search/group/{groupid};

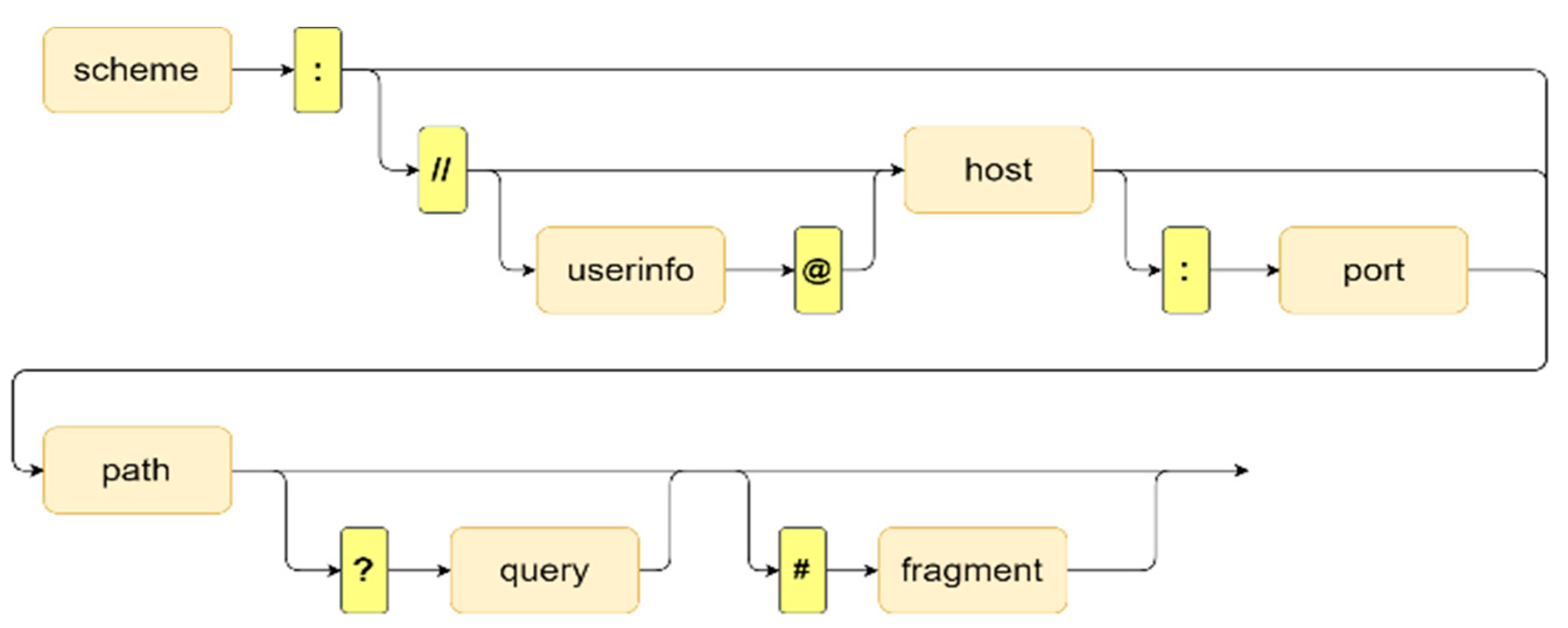

- The fact that there is no way to identify the arguments used in a resource-style operation makes it difficult to match codes. In this case, we use a substitution symbol (such as <<token>>) instead. JMP analyzes the URI according to its syntax (shown in Figure 5) and uses an optional query component (question mark) as a separator during tokenization. It keeps only the first token due to the fact that the second token may contain the query string or the content to be transmitted. For example, for the service path http://mysearch.com/search?q=wiki, JMP keeps only http://mysearch.com/search for subsequent matching;

- 4.

- Java language features: JMP uses semicolons and commas as terminal symbols when matching service paths. Note that the semicolon indicates that the statement ends, whereas the comma indicates the insertion of an argument in Java.
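The path-handling rules above can be sketched as two small helpers. This is a minimal illustration under stated assumptions, not the JMP implementation: the function names `normalize_service_path` and `keep_longest_paths` are hypothetical, path templates are assumed to use the `{name}` form, and "part of a longer path" is interpreted as being a proper prefix on a `/` boundary.

```python
import re

TOKEN = "<<token>>"  # substitution symbol mentioned in the text


def normalize_service_path(url):
    """Drop everything after '?' and replace {param} segments with TOKEN."""
    base = url.split("?", 1)[0]                 # keep only the first token
    return re.sub(r"\{[^/{}]+\}", TOKEN, base)  # mask resource identifiers


def keep_longest_paths(paths):
    """Discard any path that is a proper prefix of another retrieved path."""
    unique = set(paths)
    return sorted(
        p for p in unique
        if not any(q != p and q.startswith(p.rstrip("/") + "/") for q in unique)
    )


print(normalize_service_path("http://mysearch.com/search?q=wiki"))
print(normalize_service_path("http://mysearch.com/search/group/{groupid}"))
print(keep_longest_paths([
    "http://mysearch.com/search",
    "http://mysearch.com/search/group",
]))
```

Matching on a `/` boundary keeps a path such as http://mysearch.com/searching from being treated as an extension of http://mysearch.com/search.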

1. Performing service path matching for different cases: there are two common situations in which Web APIs are used in Java, and JMP additionally checks return statements.

   - Used after a class variable or an instance variable is declared: JMP searches for class/instance variables using the AST and compares them using the service path matching method to determine whether the service path is included in the variable. If the use of the service path is confirmed, then the variable’s name is recorded, and the methods that use the variable are also retrieved and saved;

   - Declared directly in the method and used in the method: JMP searches for all methods using the AST and compares them using service path matching to determine whether the service path is used in the method. If the use of the service path is confirmed, then the method is saved;

   - Used in the return statement: JMP also uses the service path matching method to determine whether the service path is contained in the return statement. If the use of the service path is confirmed, then the method with the return statement is also saved.

2. Scores are assigned to the extracted code using the following rules: (1) if an example does not pass the previous step, it is assigned a score of s1; (2) the scores s2 to s5 in Table 3 are based on the following guidelines:

   - s2: service paths are used only in the return statement and are not used elsewhere in the method. This kind of example is not very helpful for users;

   - s3: service paths are used in instance/class variables or methods;

   - s4 and s5: From the Maven website, we collected HTTP libraries that are widely used to invoke Web APIs. If one or more of these libraries are used in the source code, then the code is likely to be a service client invoking Web APIs. Using the Import information in the AST, JMP checks whether a code example imports an HTTP library to determine whether it is a possible service client code example. The HTTP libraries supported by API Prober include Apache Commons Httpclient, Apache Httpclient, Apache Httpcore, Google Volley, Loopj Android Http, Mashape Unirest, okhttp3, and RestTemplate.
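The scoring rules can be summarized in a short sketch that maps the matching outcome and the import list to the levels s1 to s5 of Table 3. The function names and the boolean inputs are hypothetical, and the package prefixes are our assumption of how the listed HTTP libraries typically appear in Java import statements; the source does not state how API Prober detects them.

```python
# Assumed Java package prefixes for the HTTP libraries named in the text.
HTTP_LIBRARY_PREFIXES = (
    "org.apache.commons.httpclient",                # Apache Commons Httpclient
    "org.apache.http",                              # Apache Httpclient / Httpcore
    "com.android.volley",                           # Google Volley
    "com.loopj.android.http",                       # Loopj Android Http
    "com.mashape.unirest",                          # Mashape Unirest
    "okhttp3",                                      # okhttp3
    "org.springframework.web.client.RestTemplate",  # RestTemplate
)


def imports_http_library(imports):
    """True if any import name starts with a known HTTP library prefix."""
    return any(name.startswith(HTTP_LIBRARY_PREFIXES) for name in imports)


def score_example(in_variable_or_method, in_return, imports):
    """Return 1..5, corresponding to s1..s5 in Table 3."""
    http = imports_http_library(imports)
    if in_variable_or_method:
        return 5 if http else 3   # s5 / s3
    if in_return:
        return 4 if http else 2   # s4 / s2
    return 1                      # s1: unmatched GitHub search result


print(score_example(True, False, ["okhttp3.OkHttpClient"]))
print(score_example(False, True, ["java.util.List"]))
```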

4. Experimental Evaluations

4.1. Analysis for Service Clustering

4.1.1. Experimental Setup

4.1.2. Experimental Results

- API Prober cannot directly produce an appropriate name for each cluster. The cluster names could be given manually to improve the readability of the cluster graph;

- When new Web APIs are added, the proposed clustering process must be rerun, which requires considerable processing time.

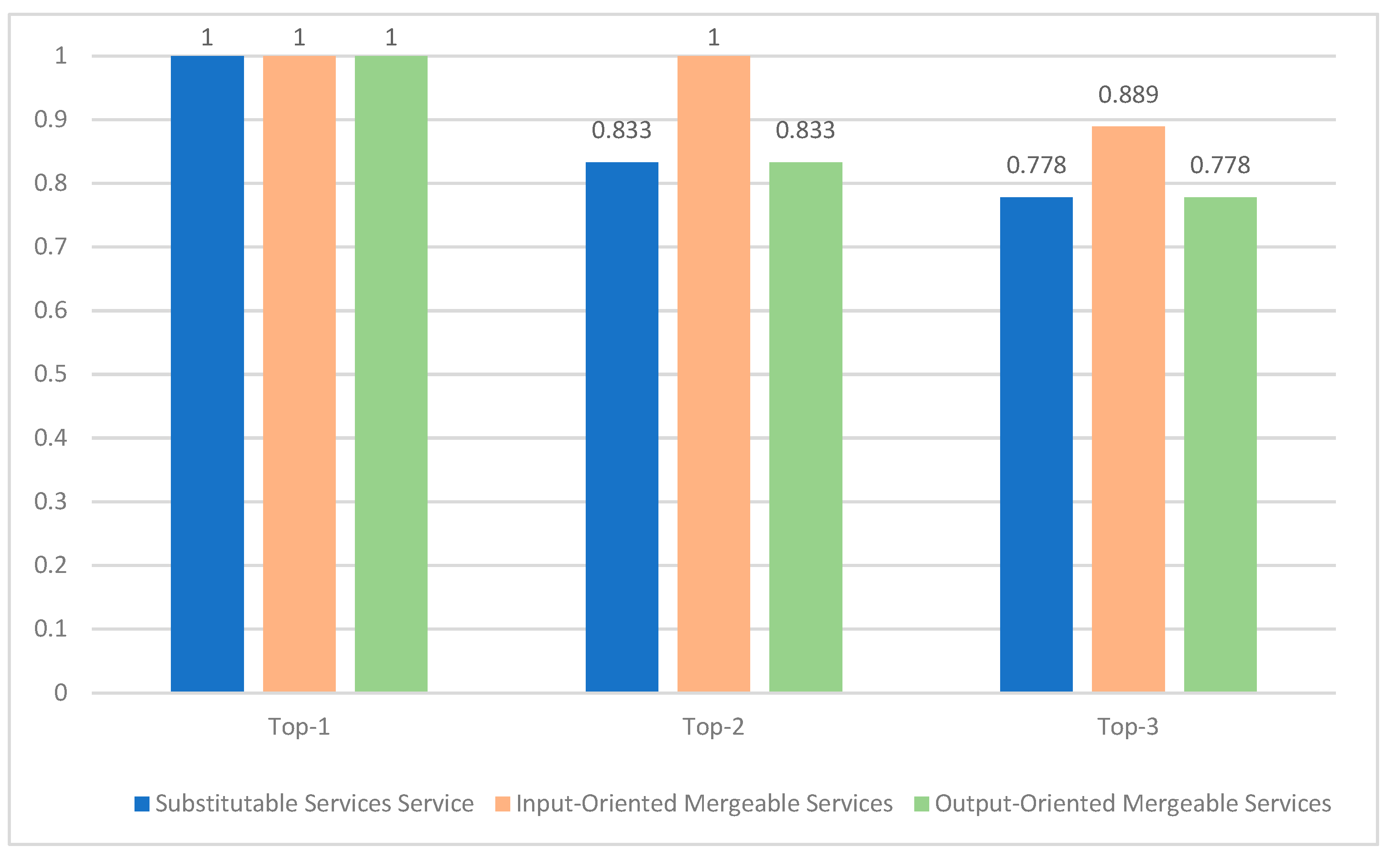

4.2. Evaluation of Recommended Mergeable and Substitutable Services

4.2.1. Experimental Setup

- Substitutable services: both input and output parameters of the recommended service were sufficiently similar to those of the target service;

- Input-oriented mergeable services: input parameters of the recommended service were sufficiently similar to those of the target service;

- Output-oriented mergeable services: output parameters of the recommended service were sufficiently similar to those of the target service.
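These three criteria can be expressed as a simple decision rule over an input-similarity score and an output-similarity score. The sketch below is only an illustration: the function name `classify_recommendation`, the threshold value of 0.5 standing in for "sufficiently similar", and the choice to treat the three categories as mutually exclusive are all assumptions, not values taken from the evaluation.

```python
def classify_recommendation(input_score, output_score, threshold=0.5):
    """Label a recommended service from its similarity to the target service.

    The threshold is a hypothetical cut-off for "sufficiently similar".
    """
    input_ok = input_score >= threshold
    output_ok = output_score >= threshold
    if input_ok and output_ok:
        return "substitutable"
    if input_ok:
        return "input-oriented mergeable"
    if output_ok:
        return "output-oriented mergeable"
    return None  # not recommended under these criteria


print(classify_recommendation(0.8, 0.7))
print(classify_recommendation(0.8, 0.2))
print(classify_recommendation(0.1, 0.9))
```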

4.2.2. Experimental Results

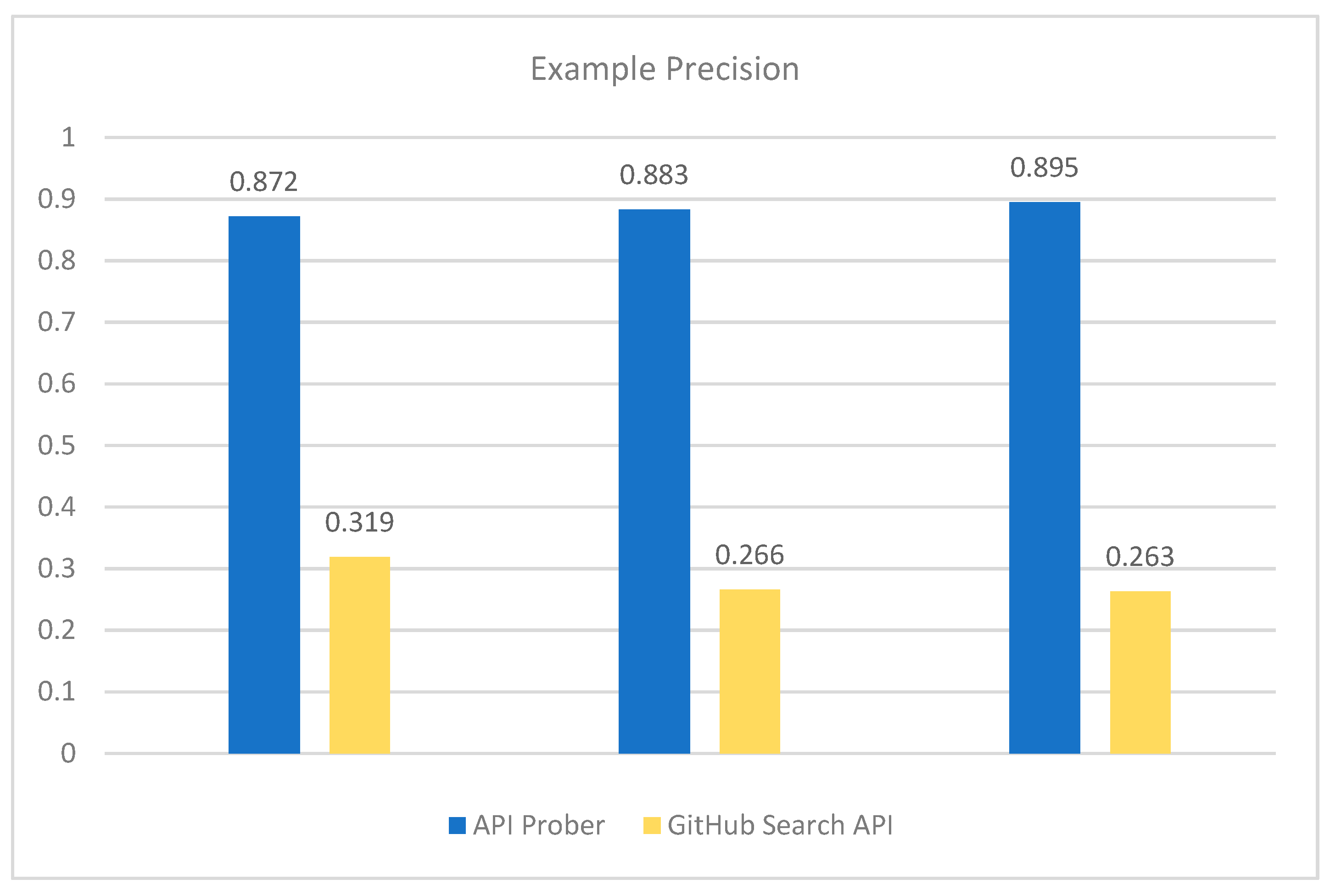

4.3. Evaluations of Discovery of API Client Code Examples

4.3.1. Experimental Setup

4.3.2. Experimental Results

- Service path was not used: In some Java files, the service path is used as a string for a specific output or description, rather than for service invocation. A real-world example is presented in Table 5;

- Overly simple variable names: Some class variables do not have specific, meaningful names, which causes the Java Method Parser to misidentify uses of the class variable. In the real-world example in Table 6, the service path was declared in a class variable named url, and API Prober therefore misidentified an unrelated statement as a code example;

- Special use behavior: In some Java code, the service path is split into pieces so that different service paths under the same OAS service can be invoked. In the real-world example in Table 7, API Prober found the service path in the class variable and its method; however, the user appended other resource operations to the class variable, resulting in a code discovery error.

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Fielding, R.T.; Taylor, R.N. Principled design of the modern web architecture. ACM Trans. Internet Technol. 2002, 2, 115–150.

2. Gat, I.; Remencius, T.; Sillitti, A.; Succi, G.; Vlasenko, J. The API economy: Playing the devil’s advocate. Cutter IT Journal 2013, 26, 6–11.

3. ProgrammableWeb. Available online: http://www.programmableweb.com (accessed on 10 January 2023).

4. RapidAPI Hub. Available online: https://rapidapi.com/hub (accessed on 10 January 2023).

5. APIs.guru. Available online: https://apis.guru/ (accessed on 10 January 2023).

6. Wittern, E.; Muthusamy, V.; Laredo, J.A.; Vukovic, M.; Slominski, A.; Rajagopalan, S.; Jamjoom, H.; Natarajan, A. API Harmony: Graph-based search and selection of APIs in the cloud. IBM J. Res. Dev. 2016, 60, 12:1–12:11.

7. OpenAPI Specification (OAS). Available online: https://swagger.io/docs/specification/ (accessed on 10 January 2023).

8. Wilkinson, M.D.; Dumontier, M.; Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.-W.; da Silva Santos, L.B.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 1–9.

9. Neumann, A.; Laranjeiro, N.; Bernardino, J. An Analysis of Public REST Web Service APIs. IEEE Trans. Serv. Comput. 2018, 14, 957–970.

10. Webber, J. A programmatic introduction to neo4j. In Proceedings of the 3rd Annual Conference on Systems, Programming, and Applications: Software for Humanity, Tucson, AZ, USA, 19–26 October 2012; pp. 217–218.

11. JavaParser. Available online: http://javaparser.org/ (accessed on 10 January 2023).

12. Agrawal, R.; Phatak, M. A novel algorithm for automatic document clustering. In Proceedings of the 3rd IEEE International Advance Computing Conference (IACC), Ghaziabad, India, 22–23 February 2013; pp. 877–882.

13. Reddy, V.S.; Kinnicutt, P.; Lee, R. Text Document Clustering: The Application of Cluster Analysis to Textual Document. In Proceedings of the International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 15–17 December 2016; pp. 1174–1179.

14. Rahman, M.M.; Liu, X.; Cao, B. Web API Recommendation for Mashup Development Using Matrix Factorization on Integrated Content and Network-Based Service Clustering. In Proceedings of the IEEE International Conference on Services Computing (SCC), Honolulu, HI, USA, 25–30 June 2017; pp. 225–232.

15. Fletcher, K. Regularizing Matrix Factorization with Implicit User Preference Embeddings for Web API Recommendation. In Proceedings of the IEEE International Conference on Services Computing (SCC), Milan, Italy, 8–13 July 2019; pp. 1–8.

16. Zou, G.; Qin, Z.; He, Q.; Wang, P.; Zhang, B.; Gan, Y. DeepWSC: Clustering Web Services via Integrating Service Composability into Deep Semantic Features. IEEE Trans. Serv. Comput. 2022, 15, 1940–1953.

17. APIs.io. Available online: http://apis.io/ (accessed on 10 January 2023).

18. Mashape. Available online: https://www.mashape.com/ (accessed on 10 January 2023).

19. Ma, S.-P.; Lin, H.-J.; Hsu, M.-J. Semantic Restful Service Composition Using Task Specification. Int. J. Softw. Eng. Knowl. Eng. 2020, 30, 835–857.

20. Ma, S.-P.; Hsu, M.-J.; Chen, H.-J.; Su, Y.-S. API Prober—A Tool for Analyzing Web API Features and Clustering Web APIs; Springer International Publishing: Cham, Switzerland, 2020; pp. 81–96.

21. Li, Y.; Bandar, Z.A.; McLean, D. An approach for measuring semantic similarity between words using multiple information sources. IEEE Trans. Knowl. Data Eng. 2003, 15, 871–882.

22. Haupt, F.; Leymann, F.; Scherer, A.; Vukojevic-Haupt, K. A Framework for the Structural Analysis of REST APIs. In Proceedings of the IEEE International Conference on Software Architecture (ICSA), Gothenburg, Sweden, 3–7 April 2017; pp. 55–58.

23. Agarwal, N.; Sikka, G.; Awasthi, L.K. Web service clustering approaches to enhance service discovery: A review. In Recent Innovations in Computing; Springer: Singapore, 2021.

24. Aggarwal, C.; Zhai, C. A Survey of Text Clustering Algorithms. In Mining Text Data; Springer: Berlin/Heidelberg, Germany, 2012.

25. Vinh, N.X.; Epps, J.; Bailey, J. Information theoretic measures for clusterings comparison: Variants, properties, normalization and correction for chance. J. Mach. Learn. Res. 2010, 11, 2837–2854.

26. Ma, S.-P.; Chen, Y.-J.; Syu, Y.; Lin, H.-J.; Fanjiang, Y.-Y. Test-Oriented RESTful Service Discovery with Semantic Interface Compatibility. IEEE Trans. Serv. Comput. 2021, 14, 1571–1584.

27. Sohan, S.M.; Maurer, F.; Anslow, C.; Robillard, M.P. A study of the effectiveness of usage examples in REST API documentation. In Proceedings of the IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), Raleigh, NC, USA, 11–14 October 2017; pp. 53–61.

28. Cosentino, V.; Izquierdo, J.L.C.; Cabot, J. Findings from GitHub: Methods, datasets and limitations. In Proceedings of the IEEE/ACM 13th Working Conference on Mining Software Repositories (MSR), Austin, TX, USA, 14–15 May 2016; pp. 137–141.

Table 1.

| | REST Features | Service Clustering | Client Code Discovery | Service Recommendation |
|---|---|---|---|---|
| APIs.io | ▲ | ✕ | ✕ | ✕ |
| APIs.guru | ✕ | ✕ | ✕ | ✕ |
| Mashape/Rapid API | ✕ | ▲ | ▲ | ◯ (QoS-based) |
| ProgrammableWeb | ✕ | ✕ | ▲ | ✕ |
| API Harmony | ▲ | ✕ | ▲ | ✕ |
| API Prober | ◯ | ◯ | ◯ | ◯ (interface-based) |

Table 2.

| Node | Information |
|---|---|
| Resource | `$.info.scheme`: https; `$.info.host`: api.github.com; `$.info.basePath`: / |
| Path | `$.paths.{path_name}`: /emojis |

Table 3.

| Score | Description |
|---|---|
| s1 | Original results of GitHub search API |
| s2 | Service path used in a return statement |
| s3 | Service paths used in class/instance variables or methods |
| s4 | Service path used in return statement and HTTP library applied |
| s5 | Service paths used in class variables or class methods and HTTP library applied |

Table 4.

| Topic (Named Manually) | Number of Services | Representative Services | Evaluation Results |
|---|---|---|---|
| Application performance management | 90 | | Valid |
| Database management | 87 | | Valid |
| Security | 51 | | Valid |
| Network management | 37 | | Valid |
| Authorization management | 28 | | Valid |

Table 5.

| Score | 3 |
|---|---|
| Service path | https://www.googleapis.com/gmail/v1/users/{userId}/messages/send (accessed on 10 January 2023) |
| Error fragment | `endpoint.setDescription ("Give the API method endpoint to send email" + " (E.g: -for gmail: https://www.googleapis.com/gmail/v1/users/[userId]/messages/send)");` |

Table 6.

| Score | 5 |
|---|---|
| Service path | https://www.googleapis.com/blogger/v3/blogs/byurl (accessed on 10 January 2023) |
| Class variable | `static String url = "https://www.googleapis.com/blogger/v3/blogs/byurl?url=http://strandedhhj.blogspot.com/";` |
| Error fragment | `String blogURL = mResponseObject.getString("url");` |

Table 7.

| Score | 5 |
|---|---|
| Service path | https://api.ebay.com/sell/fulfillment/v1/order (accessed on 10 January 2023) |
| Class variable | `public static final String getOrderUrl = "https://api.ebay.com/sell/fulfillment/v1/order";` |
| Error fragment | `public static void getOrder(String accessToken, String orderId) { String value = httpClient.doGet(getOrderUrl+"/orderId", headers, null); System.out.println(value); }` |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ma, S.-P.; Hsu, M.-J.; Chen, H.-J.; Lin, C.-J. RESTful API Analysis, Recommendation, and Client Code Retrieval. Electronics 2023, 12, 1252. https://doi.org/10.3390/electronics12051252
