Swin-UperNet: A Semantic Segmentation Model for Mangroves and Spartina alterniflora Loisel Based on UperNet

Abstract

1. Introduction

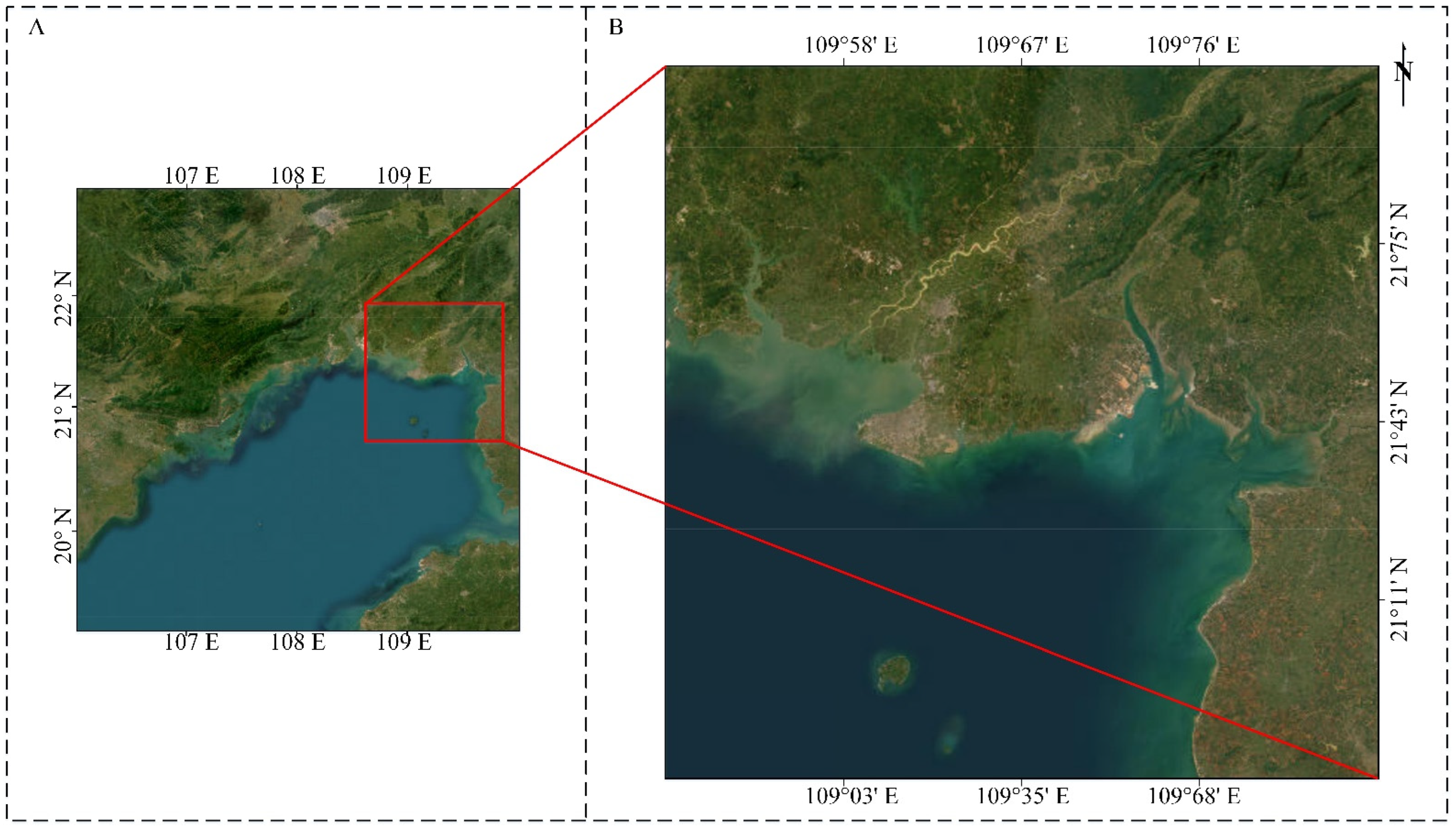

2. Data and Preprocessing

2.1. Data

2.1.1. GF-1

2.1.2. GF-6

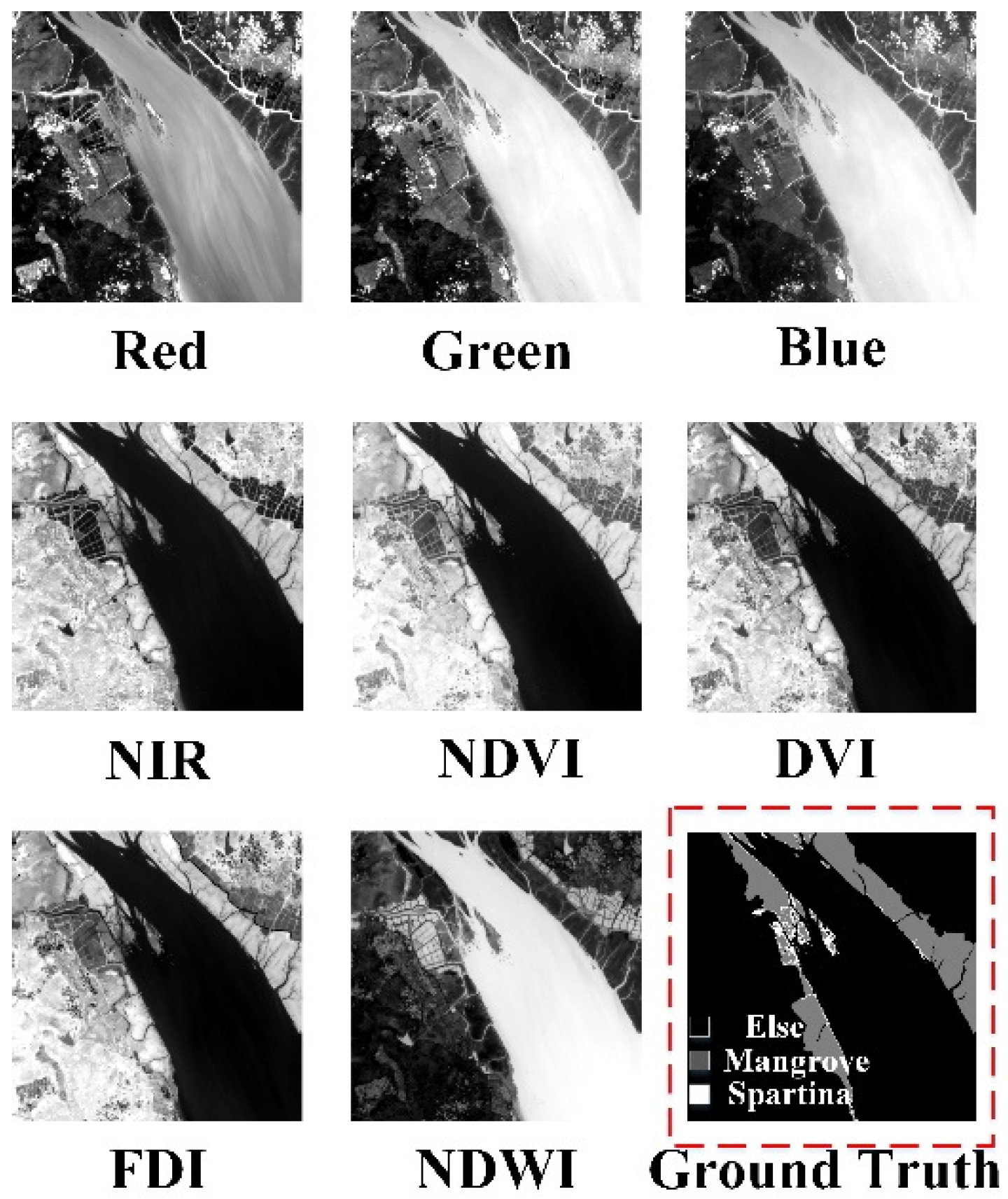

2.2. Data Preprocessing

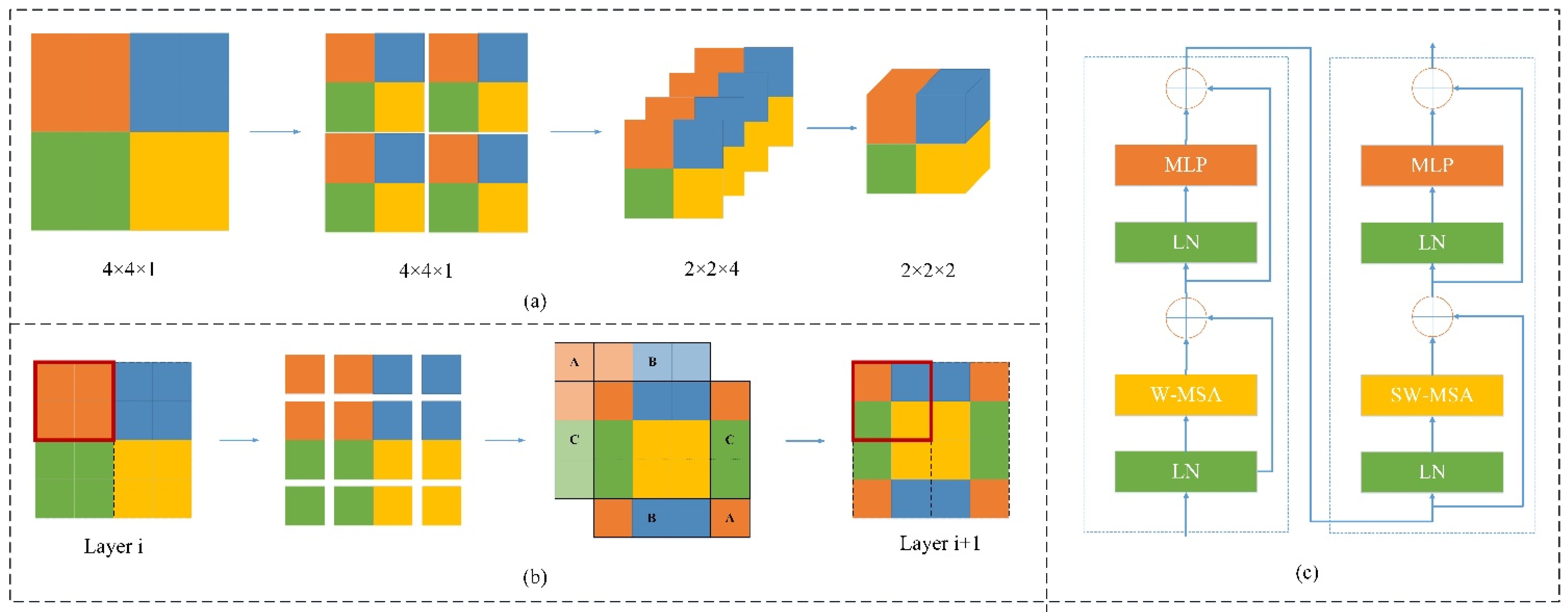

3. Methods

3.1. Data Concatenation Module

3.2. Boundary Optimization Module

3.3. Loss Function

4. Results and Discussion

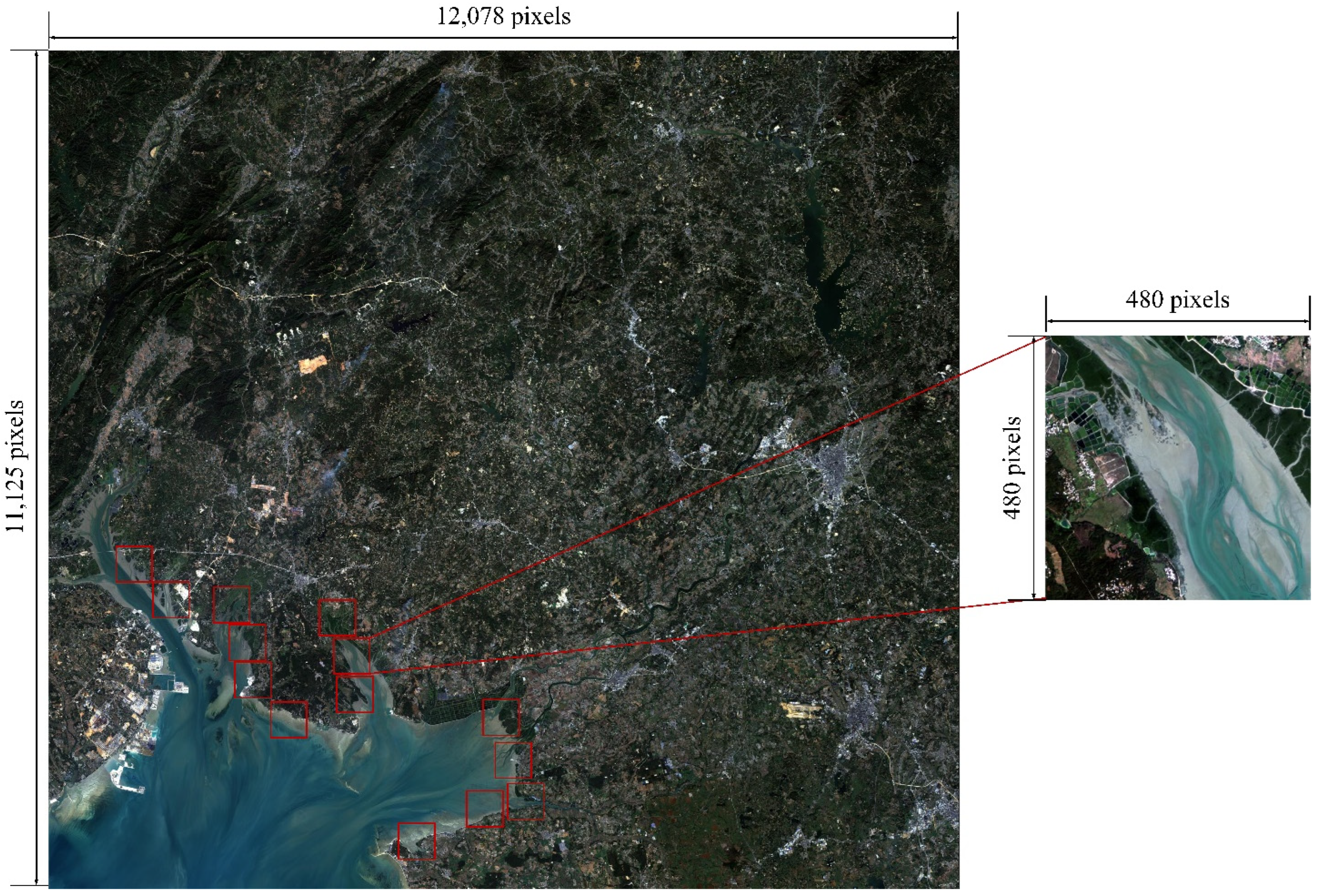

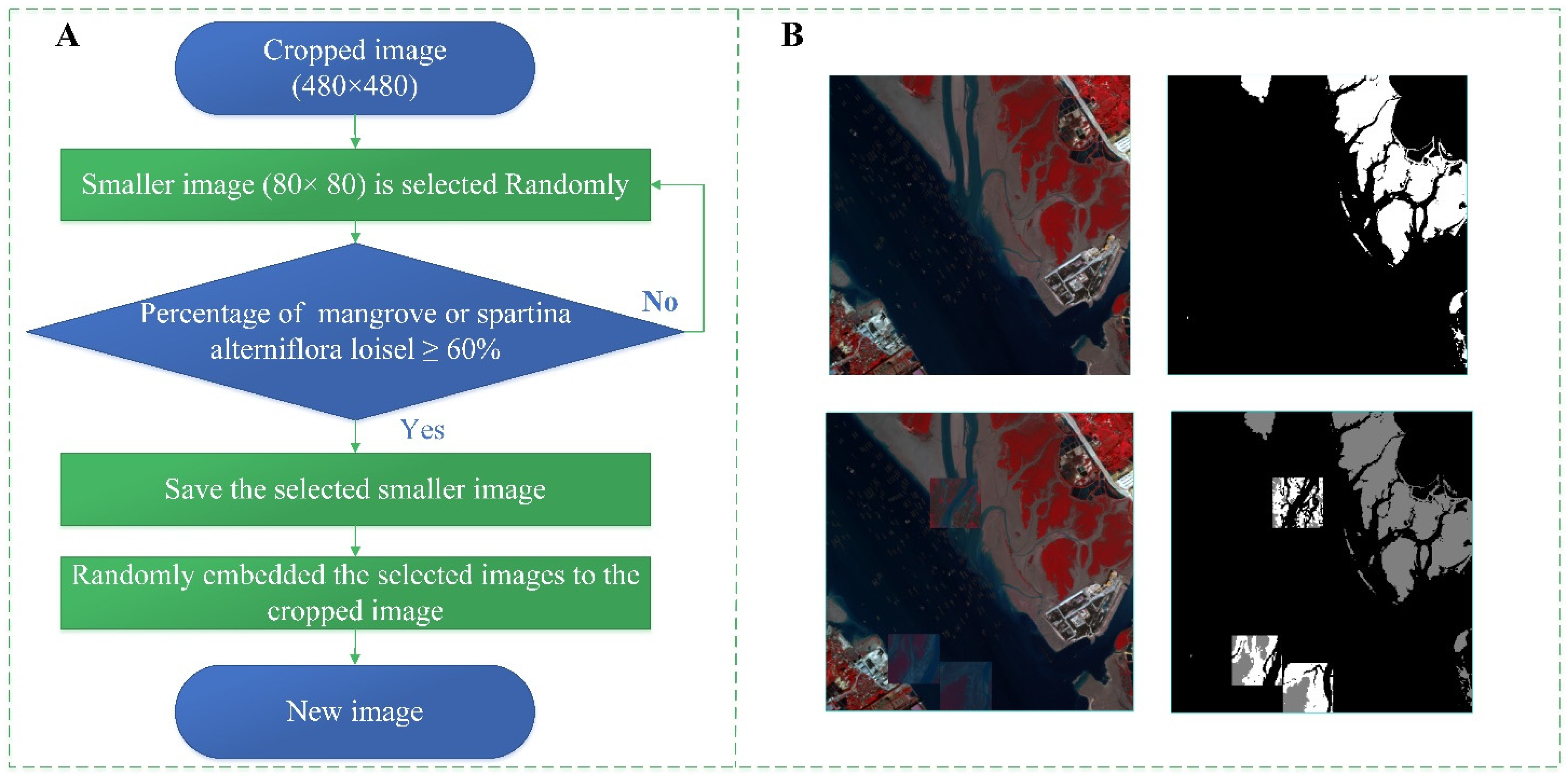

4.1. Experimental Data

4.2. Experimental Setups

4.3. Evaluation Metrics

4.4. Ablation Experiment

4.5. Comparison Experiment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Study Area | Satellite | Number of Images | Date |
|---|---|---|---|
| Northeastern coast of Beibu Gulf, Guangxi, China | GF-1 | 20 | 23 August 2016–18 December 2021 |
| Northeastern coast of Beibu Gulf, Guangxi, China | GF-6 | 7 | 23 November 2019–4 December 2021 |

| Band | Band Name | Wavelength (μm) | Spatial Resolution (m) | Temporal Resolution (Days) | Swath Width (km) |
|---|---|---|---|---|---|
| B1 | Blue | 0.45–0.52 | 8 | 4 | 60 |
| B2 | Green | 0.52–0.59 | 8 | 4 | 60 |
| B3 | Red | 0.63–0.69 | 8 | 4 | 60 |
| B4 | Near infrared | 0.77–0.89 | 8 | 4 | 60 |

| Band | Band Name | Wavelength (μm) | Spatial Resolution (m) | Temporal Resolution (Days) | Swath Width (km) |
|---|---|---|---|---|---|
| B1 | Blue | 0.45–0.52 | 8 | 4 | 90 |
| B2 | Green | 0.52–0.60 | 8 | 4 | 90 |
| B3 | Red | 0.63–0.69 | 8 | 4 | 90 |
| B4 | Near infrared | 0.77–0.90 | 8 | 4 | 90 |

| Index | Calculation Method | Calculation in GF-1/GF-6 |
|---|---|---|
| NDVI | NDVI = (NIR − Red)/(NIR + Red) | (B4 − B3)/(B4 + B3) |
| FDI | FDI = NIR − (Red + Green) | B4 − (B3 + B2) |
| DVI | DVI = NIR − Red | B4 − B3 |
| NDWI | NDWI = (Green − NIR)/(Green + NIR) | (B2 − B4)/(B2 + B4) |
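The index table maps each formula onto GF-1/GF-6 band numbers, and the input-channel comparison lists the final input as NIR + R + G + B + NDVI + FDI + DVI + NDWI. The following NumPy sketch shows one way such an eight-channel stack could be assembled. It assumes non-negative reflectance stored as an array of shape (4, H, W) in band order B1–B4 (Blue, Green, Red, NIR); the function names `vegetation_indices` and `stack_channels`, the epsilon guard against division by zero, and the absence of any per-channel normalization are illustrative assumptions, not the authors' implementation. FDI follows the table as written, NIR − (Red + Green).

```python
import numpy as np

# Assumed band order, following the GF-1/GF-6 band tables: B1 Blue, B2 Green, B3 Red, B4 NIR.
BLUE, GREEN, RED, NIR = 0, 1, 2, 3


def _safe_ratio(num, den, eps=1e-6):
    # Guard against zero denominators (e.g. no-data pixels); assumes den >= 0,
    # which holds for sums of non-negative reflectances.
    return num / np.maximum(den, eps)


def vegetation_indices(img):
    """Compute NDVI, FDI, DVI, NDWI from a (4, H, W) array in band order B1..B4."""
    b, g, r, nir = (img[i].astype(np.float64) for i in (BLUE, GREEN, RED, NIR))
    ndvi = _safe_ratio(nir - r, nir + r)    # (B4 - B3) / (B4 + B3)
    fdi = nir - (r + g)                     # B4 - (B3 + B2), as tabulated
    dvi = nir - r                           # B4 - B3
    ndwi = _safe_ratio(g - nir, g + nir)    # (B2 - B4) / (B2 + B4)
    return ndvi, fdi, dvi, ndwi


def stack_channels(img):
    """Hypothetical helper: build the 8-channel input NIR, R, G, B, NDVI, FDI, DVI, NDWI."""
    b, g, r, nir = (img[i].astype(np.float64) for i in (BLUE, GREEN, RED, NIR))
    ndvi, fdi, dvi, ndwi = vegetation_indices(img)
    return np.stack([nir, r, g, b, ndvi, fdi, dvi, ndwi], axis=0)


# Usage on a synthetic tile (random values stand in for real GF-1/GF-6 reflectance).
rng = np.random.default_rng(0)
tile = rng.uniform(0.0, 0.6, size=(4, 256, 256))
x = stack_channels(tile)
print(x.shape)  # (8, 256, 256)
```

Whether and how the indices are rescaled to the range of the raw bands before entering the network is not described in this section; any such step would have to be added to `stack_channels`.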

| Loss Function | DP | DCM | Backbone | BOM | mIoU (%) | PA (%) | |||

|---|---|---|---|---|---|---|---|---|---|

| Cross-Entropy | Lovasz-Softmax | Ours | ResNet-50 | ST-Tiny | |||||

| √ | √ | 47.39 | 93.46 | ||||||

| √ | √ | 55.05 | 89.71 | ||||||

| √ | √ | 56.26 | 94.29 | ||||||

| √ | √ | √ | 81.06 | 97.05 | |||||

| √ | √ | √ | √ | 89.91 | 98.86 | ||||

| √ | √ | √ | √ | 82.87 | 94.44 | ||||

| √ | √ | √ | √ | √ | 90.0 | 98.87 | |||
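The ablation table compares cross-entropy, Lovasz-Softmax, and the authors' own loss; the proposed loss itself is not specified in this section. For reference, the standard Lovasz-Softmax loss (Berman et al., 2018) is a convex surrogate of per-class IoU: for each class, pixel errors are sorted in decreasing order and weighted by the discrete gradient of the Jaccard loss. The sketch below is a minimal, forward-only NumPy version of that standard loss, not the proposed one; training would need an autograd framework such as PyTorch. The names `lovasz_grad` and `lovasz_softmax` are hypothetical.

```python
import numpy as np


def lovasz_grad(gt_sorted):
    """Discrete gradient of the Jaccard loss for a binary mask sorted by decreasing error."""
    gts = gt_sorted.sum()
    intersection = gts - np.cumsum(gt_sorted)
    union = gts + np.cumsum(1.0 - gt_sorted)  # always >= 1, so no division by zero
    jaccard = 1.0 - intersection / union
    jaccard[1:] = jaccard[1:] - jaccard[:-1]  # successive differences
    return jaccard


def lovasz_softmax(probs, labels, present_only=True):
    """Forward Lovasz-Softmax loss.

    probs: (P, C) softmax probabilities for P pixels and C classes.
    labels: (P,) integer ground-truth class ids.
    """
    losses = []
    for c in range(probs.shape[1]):
        fg = (labels == c).astype(np.float64)
        if present_only and fg.sum() == 0:
            continue  # skip classes absent from the ground truth
        errors = np.abs(fg - probs[:, c])
        order = np.argsort(-errors)  # largest errors first
        losses.append(float(np.dot(errors[order], lovasz_grad(fg[order]))))
    return float(np.mean(losses)) if losses else 0.0


# Usage: hard (one-hot) predictions reduce the loss to 1 - mIoU over present classes.
labels = np.array([0, 0, 1, 1])
probs = np.eye(2)[[0, 1, 1, 1]]  # predicts classes 0, 1, 1, 1
print(lovasz_softmax(probs, labels))  # ≈ 0.4167 (= 5/12)
```

The one-hot case is a quick sanity check: the per-class IoUs are 1/2 and 2/3, so 1 − mIoU = 5/12, which is what the sketch returns.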

| Input Channel | Mangrove IoU (%) | Mangrove PA (%) | Spartina alterniflora Loisel IoU (%) | Spartina alterniflora Loisel PA (%) | mIoU (%) | PA (%) |
|---|---|---|---|---|---|---|
| NIR + R + G | 83.0 | 87.37 | 63.18 | 69.65 | 81.06 | 97.05 |
| NIR + R + G + NDVI | 89.59 | 92.57 | 73.89 | 81.38 | 87.19 | 98.10 |
| NIR + R + G + NDVI + FDI | 90.0 | 93.06 | 74.68 | 81.49 | 87.62 | 98.18 |
| NIR + R + G + NDVI + FDI + DVI | 90.15 | 93.11 | 75.28 | 82.38 | 87.88 | 98.21 |
| NIR + R + G + NDVI + FDI + DVI + NDWI | 90.21 | 93.24 | 75.45 | 82.78 | 87.95 | 98.22 |
| NIR + R + G + B + NDVI + FDI + DVI + NDWI (Ours) | 91.03 | 98.35 | 79.65 | 89.15 | 89.91 | 98.86 |
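The tables report per-class IoU and PA, mIoU, and overall PA, but this section does not give their formulas. The sketch below therefore uses common confusion-matrix definitions: IoU = TP/(TP + FP + FN); per-class PA = TP/(TP + FN), which is an assumption (the label could also denote precision); mIoU as the unweighted mean of per-class IoU; and overall PA as correctly classified pixels over all pixels. `confusion_matrix` and `segmentation_metrics` are hypothetical helpers.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index ground-truth classes, columns index predicted classes."""
    idx = num_classes * np.asarray(y_true).ravel() + np.asarray(y_pred).ravel()
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    cm = cm.astype(np.float64)
    tp = np.diag(cm)
    fn = cm.sum(axis=1) - tp  # pixels of the class that were missed
    fp = cm.sum(axis=0) - tp  # pixels wrongly assigned to the class
    iou = tp / np.maximum(tp + fp + fn, 1.0)  # a class absent from both maps gets IoU 0
    class_pa = tp / np.maximum(tp + fn, 1.0)  # assumed meaning of per-class PA
    overall_pa = tp.sum() / max(cm.sum(), 1.0)
    return {"IoU": iou, "PA": class_pa, "mIoU": float(iou.mean()), "overall PA": float(overall_pa)}


# Usage with three hypothetical classes (0 = background, 1 = mangrove, 2 = S. alterniflora).
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])
m = segmentation_metrics(confusion_matrix(y_true, y_pred, 3))
print(round(m["mIoU"], 4))  # 0.5
```

If mIoU is averaged over three classes (background, mangrove, and S. alterniflora), which this section does not state, the NIR + R + G row implies a background IoU of 3 × 81.06 − 83.0 − 63.18 = 97.0%. That would explain why its mIoU (81.06%) is well above the mean of the two vegetation IoUs (73.09%).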

| Model | Backbone | Mangrove IoU (%) | Mangrove PA (%) | Spartina alterniflora Loisel IoU (%) | Spartina alterniflora Loisel PA (%) | mIoU (%) | PA (%) | FPS |
|---|---|---|---|---|---|---|---|---|
| PSPNet | ResNet50 | 82.28 ± 1.85 | 87.83 ± 2.15 | 58.59 ± 2.03 | 70.35 ± 3.38 | 77.23 ± 2.98 | 96.36 ± 0.34 | 5 |
| PSANet | ResNet50 | 82.11 ± 0.97 | 87.78 ± 1.76 | 56.75 ± 2.51 | 65.85 ± 4.01 | 76.49 ± 3.27 | 96.16 ± 0.75 | 4 |
| DeepLabv3 | ResNet50 | 82.0 ± 0.55 | 87.82 ± 1.08 | 56.31 ± 2.58 | 62.0 ± 4.37 | 75.93 ± 3.08 | 96.59 ± 0.32 | 3 |
| DANet | ResNet50 | 79.53 ± 3.74 | 86.78 ± 2.20 | 53.69 ± 3.37 | 61.74 ± 4.53 | 75.53 ± 3.61 | 95.86 ± 2.14 | 5 |
| FCN | HRNet18 | 84.74 ± 1.54 | 89.43 ± 1.87 | 62.98 ± 2.33 | 74.24 ± 1.89 | 81.68 ± 1.43 | 96.31 ± 1.33 | 12 |
| OCRNet | HRNet18 | 83.04 ± 3.05 | 86.98 ± 3.6 | 60.45 ± 5.14 | 67.44 ± 7.55 | 80.77 ± 2.31 | 96.94 ± 0.54 | 8 |
| DeepLabv3+ | ResNet50 | 69.80 ± 3.33 | 73.33 ± 13.41 | 52.47 ± 8.53 | 60.44 ± 11.72 | 72.51 ± 1.94 | 95.30 ± 1.68 | 5 |
| Ours | Swin Transformer Tiny | 91.10 ± 0.15 | 96.64 ± 0.51 | 79.89 ± 1.26 | 89.50 ± 1.42 | 90.0 ± 0.43 | 98.87 ± 0.07 | 10 |
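The comparison table reports each accuracy metric as mean ± standard deviation, together with inference speed in FPS. This section does not state the number of runs, whether the sample or population standard deviation was used, or the timing protocol (hardware, input size, batch size). The sketch below assumes the sample standard deviation and wall-clock timing of a generic inference callable; `mean_std` and `measure_fps` are hypothetical helpers. On a GPU, kernels run asynchronously, so the device would need to be synchronized before each timer read (for example with `torch.cuda.synchronize()` in PyTorch).

```python
import statistics
import time


def mean_std(values, digits=2):
    """Format repeated-run results as 'mean ± sample standard deviation'."""
    m = statistics.mean(values)
    s = statistics.stdev(values) if len(values) > 1 else 0.0
    return f"{m:.{digits}f} ± {s:.{digits}f}"


def measure_fps(infer, inputs, warmup=2):
    """Frames per second of a callable `infer` applied to each item in `inputs`."""
    for x in inputs[:warmup]:
        infer(x)  # warm-up calls are excluded from timing
    start = time.perf_counter()
    for x in inputs:
        infer(x)
    elapsed = time.perf_counter() - start
    return len(inputs) / elapsed if elapsed > 0 else float("inf")


# Usage with made-up numbers: three runs of some metric.
print(mean_std([1.0, 2.0, 3.0]))  # 2.00 ± 1.00
```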

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Li, J.; Tan, Z.; Liu, X.; Li, M. Swin-UperNet: A Semantic Segmentation Model for Mangroves and Spartina alterniflora Loisel Based on UperNet. Electronics 2023, 12, 1111. https://doi.org/10.3390/electronics12051111
