Study on Driver Cross-Subject Emotion Recognition Based on Raw Multi-Channels EEG Data

Abstract

1. Introduction

- A novel end-to-end cross-subject EEG emotion recognition model that automatically extracts temporal features from raw EEG data using large convolution kernels and attention pooling; with these features it outperforms the other EEG-based models compared.

- The paper examines how restricting the 62-channel EEG data to 10 channels selected from the frontal and temporal lobes affects the training results.

- The paper conducts extensive ablation experiments, focusing on the positions of the BN and dropout layers in the CNN and on the effect of stacking multiple convolution layers. These experiments support the reliability of the proposed model.

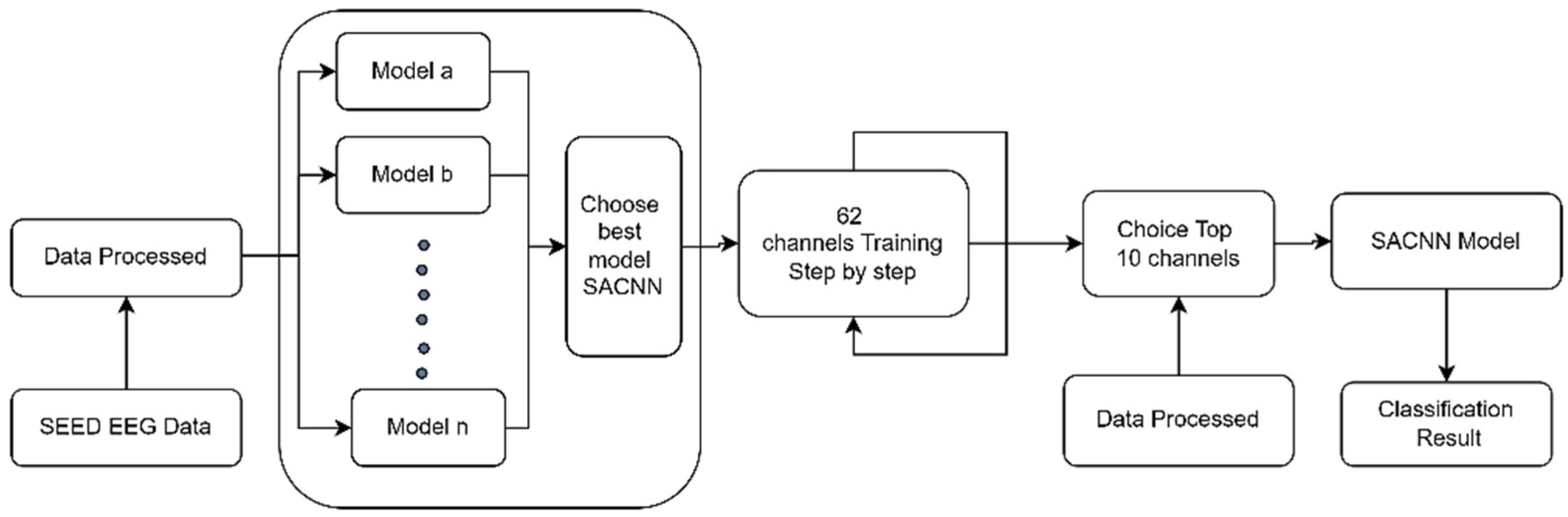

2. Methodology

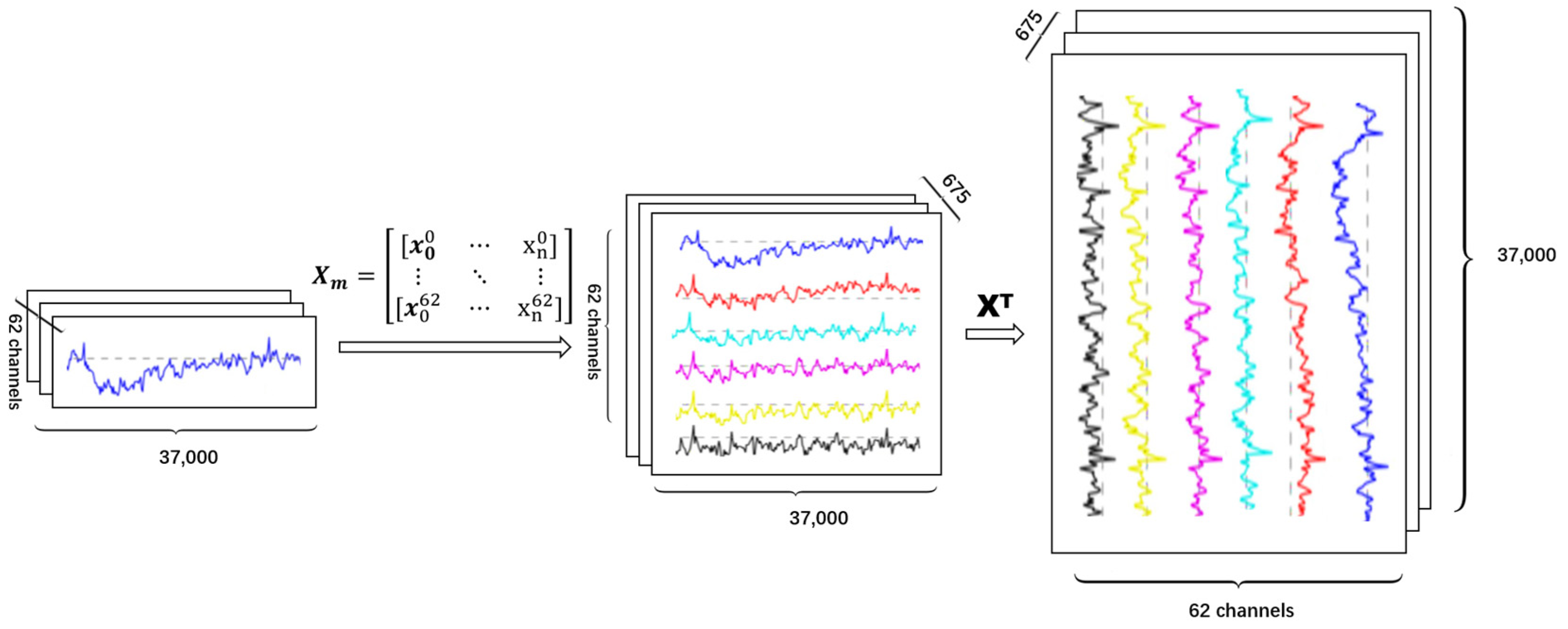

2.1. Preprocessing

Algorithm 1. Preprocessing downsampling algorithm

Input: the SEED dataset; Data, the data array constructed by reading in layer by layer; n, the data size

Output: the downsampled cross-subject matrix data

    function DownSample(Data)
        result ← []
        minLen ← the minimum data length for a single time series under a single feature
        resIndex ← 0
        for all Data do
            diff ← data length of the current time series / minLen
            if diff == 1 then
                eye ← data of the current time series
                result[resIndex++] ← eye.T
                continue
            end if
            eye ← []
            eyeIndex ← 0
            for each time series under the current features do
                k ← 0
                flag ← 0
                tempTimeList ← []
                tempTimeListIndex ← 0
                while k < length of the time series under the current features do
                    if diff + flag − int(diff) >= 1 then
                        x ← diff + int(diff + flag − int(diff))
                        flag ← flag − 1
                    else
                        x ← diff
                    end if
                    tempTimeList[tempTimeListIndex++] ← sum(the time series from k to k + int(x) under the current feature)
                    k ← k + int(x)
                    if k + 1 + int(diff) >= length of the time series under the current features then
                        flag ← 1
                    end if
                    flag ← flag + (diff − int(diff))
                end while
                eye[eyeIndex++] ← tempTimeList
            end for
            result[resIndex++] ← eye.T
        end for
        return result
    end function
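The idea behind Algorithm 1, shortening every trial to the length of the shortest one by summing neighbouring samples, can be sketched in NumPy as follows. This is a simplified illustration rather than the authors' implementation: it assumes each trial is an array of shape (channels, samples), and it replaces the `flag` bookkeeping with integer bin edges at `floor(i * n / min_len)`, so individual bin boundaries may differ from those produced by Algorithm 1. The function name `downsample_trials` is hypothetical.

```python
import numpy as np

def downsample_trials(trials):
    """Sum-bin every trial down to the shortest trial length.

    trials: list of arrays shaped (channels, samples) -- an assumed layout.
    Returns a list of arrays shaped (min_len, channels), mirroring the
    transpose (eye.T) used in Algorithm 1.
    """
    min_len = min(t.shape[1] for t in trials)
    result = []
    for t in trials:
        n = t.shape[1]
        if n == min_len:
            # Already at the target length: only transpose.
            result.append(t.T.copy())
            continue
        # Start index of each of the min_len bins; strictly increasing
        # because n > min_len, so every bin holds at least one sample.
        starts = (np.arange(min_len) * n) // min_len
        # reduceat sums t[:, starts[i]:starts[i+1]]; the last bin runs to the end.
        binned = np.add.reduceat(t, starts, axis=1)
        result.append(binned.T)
    return result
```

Because the bins are summed rather than averaged, as in Algorithm 1, longer trials yield larger amplitudes after downsampling; dividing each bin by its width would give a mean instead.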

2.2. Channel Choice

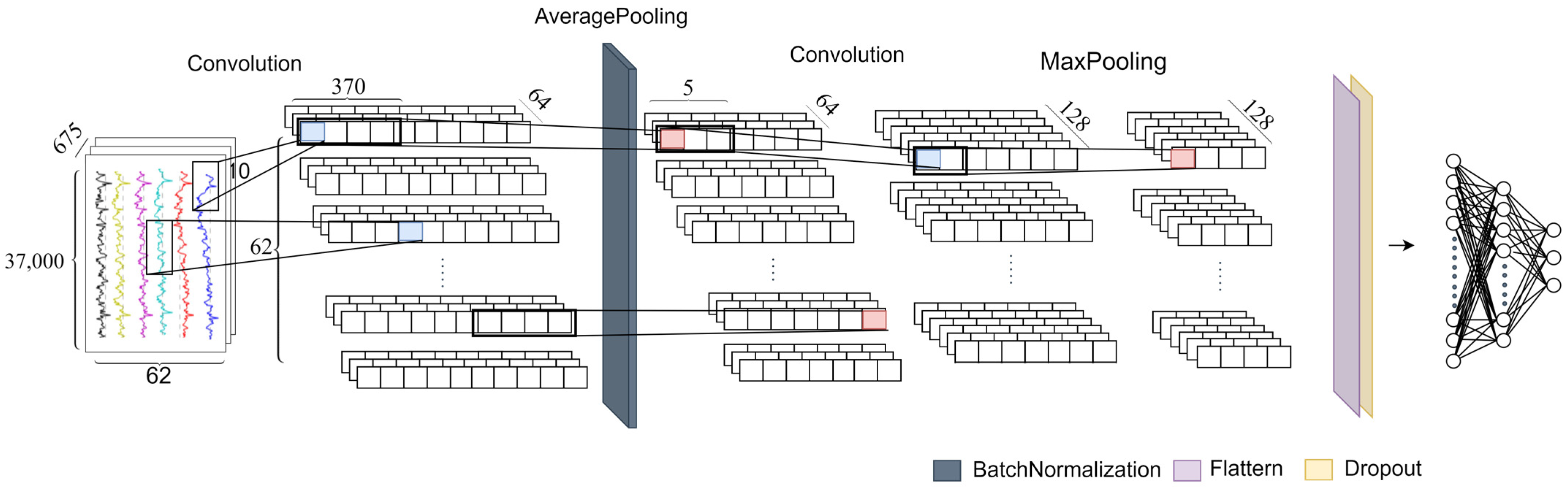

2.3. SACNN Model

3. Experiments and Result

3.1. Dataset

3.2. Experimental Setup

3.3. Ablation Studies

3.4. Results and Analysis

- (A) Multi-Conv Blocks Ablation Studies

- (B) BN Layers Ablation Studies

- (C) Dropout Layers Ablation Studies

- (D) Multi-Channels Results

- (E) Compared Models Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model a | Model b | Model c | Model d | Model e | Model f | Model g | Model h | Model i |

|---|---|---|---|---|---|---|---|---|

| CNN-1 (MP + BN + DP) | CNN-2 (AP + BN + DP) | CNN-2 (AP_MP + BN + DP) | CNN-2 (MP_AP + BN + DP) | CNN-5 (MP + 1_BN + DP) | CNN-5 (MP + Multi_BN + DP) | CNN-10 (MP + 1_BN + DP) | CNN-10 (MP + Multi_BN + DP) | CNN-10 (MP_AP + BN + DP + Res) |

| Input (62 Channels Raw EEG Data) | ||||||||

| Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 BN | Conv1D 10–64 Conv1D 10–64 BN | Conv1D 10–64 Conv1D 10–64 BN |

| Maxpooling1D 370 | Averagepooling1D 370 | Maxpooling1D 370 | ||||||

| DP 0.2 | Conv1D 5–128 | Conv1D 5–128 | Conv1D 5–128 | Conv1D 5–128 | Conv1D 5–128 BN | Conv1D 5–128 | Conv1D 5–128 Conv1D 5–128 BN | Conv1D 5–128 Conv1D 5–128 BN |

| Averagepooling1D 2 | Maxpooling1D 2 | Averagepooling1D 2 | Maxpooling1D 2 | |||||

| DP 0.2 | Conv1D 5–256 | Conv1D 5–128 BN | Conv1D 5–256 Conv1D 5–256 | Conv1D 5–256 Conv1D 5–256 BN | Conv1D 5–256 Conv1D 5–256 | |||

| Maxpooling1D 2 | Averagepooling1D 2-Residual | |||||||

| Conv1D 5–128 | Conv1D 5–128 BN | Conv1D 3–128 Conv1D 3–128 | Conv1D 3–128 Conv1D 3–128 BN | Conv1D 3–128 Conv1D 3–128 | ||||

| Maxpooling1D 2 | Averagepooling1D 2 | |||||||

| Conv1D 5–64 | Conv1D 5–64 BN | Conv1D 3–64 Conv1D 3–64 | Conv1D 3–64 Conv1D 3–64 BN | Conv1D 3–64 Conv1D 3–64 | ||||

| Maxpooling1D 2 | Averagepooling1D 2-Residual | |||||||

| DP 0.2 | BN | |||||||

| FC—1024 | ||||||||

| FC—256 | ||||||||

| FC—3 (Softmax) | ||||||||
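To make the layer sizes in the table easier to follow, the sketch below traces the tensor shape through the first convolution and pooling stage listed for Model a. Several points are assumptions not stated in the table: "Conv1D 10–64" is read as kernel size 10 with 64 filters, convolutions are unpadded with stride 1, pooling windows do not overlap, a flatten step precedes the fully connected layers, and the input length of 37,009 samples is purely hypothetical. All function names are illustrative.

```python
def conv1d_out_len(length, kernel, stride=1):
    """Output length of an unpadded ('valid') 1D convolution."""
    return (length - kernel) // stride + 1

def pool1d_out_len(length, pool):
    """Output length of non-overlapping pooling (stride equals pool size)."""
    return length // pool

def trace_model_a(input_len):
    """Return (layer, shape) pairs for the first stage of Model a.

    Shapes are (time_steps, filters); the final entry is the flattened
    feature count that would feed the fully connected layers.
    """
    steps = []
    n = conv1d_out_len(input_len, kernel=10)   # Conv1D 10-64 (assumed meaning)
    steps.append(("Conv1D 10-64", (n, 64)))
    n = pool1d_out_len(n, pool=370)            # Maxpooling1D 370
    steps.append(("Maxpooling1D 370", (n, 64)))
    steps.append(("Flatten", (n * 64,)))       # assumed step before FC-1024
    return steps

for layer, shape in trace_model_a(37009):      # hypothetical input length
    print(layer, shape)
```

The large pooling window of 370 is what collapses a long raw recording into a short sequence before the dense layers, which keeps the first fully connected layer at a manageable size.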

| | Model a | Model b | Model c | Model d | Model e | Model f | Model g | Model h | Model i |
|---|---|---|---|---|---|---|---|---|---|
| Loss | 0.472 ± 0.056 | 0.486 ± 0.016 | 0.363 ± 0.029 | 0.431 ± 0.028 | 1.477 ± 0.056 | 0.814 ± 0.085 | 1.1 ± 0.005 | 1.428 ± 0.045 | 0.793 ± 0.091 |
| Accuracy | 0.874 ± 0.043 | 0.837 ± 0.008 | 0.881 ± 0.022 | 0.867 ± 0.013 | 0.578 ± 0.013 | 0.667 ± 0.067 | 0.333 ± 0.001 | 0.667 ± 0.052 | 0.667 ± 0.027 |

| | Model c1 | Model c2 | Model c3 | Model c4 | Model c5 | Model c6 | Model c7 | Model c8 |

|---|---|---|---|---|---|---|---|---|

| Block1-BN | √ | √ | √ | √ | ||||

| Block2-BN | √ | √ | √ | √ | ||||

| Block3-BN | √ | √ | √ | √ | ||||

| Loss | 0.461 ± 0.007 | 0.506 ± 0.01 | 1.082 ± 0.018 | 0.437 ± 0.068 | 0.543 ± 0.032 | 0.729 ± 0.126 | 0.822 ± 0.074 | 1.107 ± 0.006 |

| Accuracy | 0.852 ± 0.029 | 0.867 ± 0.056 | 0.563 ± 0.107 | 0.852 ± 0.127 | 0.849 ± 0.096 | 0.731 ± 0.176 | 0.652 ± 0.211 | 0.333 ± 0.007 |
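Eight variants over three blocks, with each BN row checked four times, is consistent with a full on/off factorial design. A minimal sketch of enumerating such a design is shown below; the order in which the configurations are generated is arbitrary and is not claimed to match the c1 to c8 labelling in the table, and the function name is hypothetical.

```python
from itertools import product

BLOCKS = ("Block1-BN", "Block2-BN", "Block3-BN")

def bn_configurations():
    """Enumerate every on/off combination of BN across the three blocks."""
    return [dict(zip(BLOCKS, flags))
            for flags in product((True, False), repeat=len(BLOCKS))]

for i, cfg in enumerate(bn_configurations(), start=1):
    enabled = [name for name, on in cfg.items() if on]
    print(i, enabled if enabled else "no BN")
```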

| | Model c1 | Model c2 | Model c3 | Model c4 | Model c5 | Model c6 | Model c7 | Model c8 | Model c9 |

|---|---|---|---|---|---|---|---|---|---|

| Block1-DP 0.2 | √ | √ | √ | √ | |||||

| Block2-DP 0.2 | √ | √ | √ | √ | |||||

| Block3-DP 0.2 | √ | √ | √ | √ | |||||

| Block3-DP 0.5 | √ | ||||||||

| Loss | 1.12 ± 0.0083 | 1.164 ± 0.02 | 1.081 ± 0.023 | 1.11 ± 0.017 | 1.1 ± 0.01 | 1.107 ± 0.006 | 1.114 ± 0.02 | 1.10 ± 0.02 | 1.107 ± 0.006 |

| Accuracy | 0.326 ± 0.005 | 0.33 ± 0.016 | 0.437 ± 0.015 | 0.34 ± 0.016 | 0.33 ± 0.005 | 0.333 ± 0.001 | 0.348 ± 0.019 | 0.36 ± 0.015 | 0.33 ± 0.007 |

| No. | Channel | Mean-acc | No. | Channel | Mean-acc | No. | Channel | Mean-acc |
|---|---|---|---|---|---|---|---|---|
| 1 | F8 | 0.724 ± 0.015 | 5 | FPZ | 0.644 ± 0.022 | 8 | F6 | 0.620 ± 0.022 |
| 2 | T7 | 0.691 ± 0.012 | 6 | FT8 | 0.641 ± 0.016 | 9 | C5 | 0.610 ± 0.017 |
| 3 | FT7 | 0.689 ± 0.021 | 7 | FP2 | 0.624 ± 0.025 | 10 | FP1 | 0.599 ± 0.023 |
| 4 | FC6 | 0.652 ± 0.012 | | | | | | |
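Restricting a recording to the ten channels listed in the table can be sketched as a lookup by channel name. The sketch assumes the recording is a NumPy array of shape (channels, samples) and that the caller supplies the channel-name list of the recording; the ordering of the 62 SEED channels is not given here, so none is hard-coded. The names `SELECTED` and `select_channels` are hypothetical.

```python
import numpy as np

# The ten channels from the table above, in the order listed.
SELECTED = ["F8", "T7", "FT7", "FC6", "FPZ", "FT8", "FP2", "F6", "C5", "FP1"]

def select_channels(data, channel_names, wanted=SELECTED):
    """Return the rows of data whose channel names appear in wanted.

    data:          array shaped (channels, samples) -- an assumed layout.
    channel_names: names of the rows of data, supplied by the caller.
    Rows are returned in the order given by wanted.
    """
    index = {name.upper(): i for i, name in enumerate(channel_names)}
    missing = [w for w in wanted if w.upper() not in index]
    if missing:
        raise KeyError(f"channels not found: {missing}")
    rows = [index[w.upper()] for w in wanted]
    return data[rows, :]
```

Matching by name rather than by fixed row index keeps the selection valid even if a dataset stores its channels in a different order.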

| Model | Single | Multi | All |

|---|---|---|---|

| SVM | 0.748 ± 0.001 | 0.763 ± 0.001 | 0.333 ± 0.001 |

| DBN | 0.726 ± 0.013 | 0.826 ± 0.017 | 0.392 ± 0.027 |

| LSTM | 0.541 ± 0.021 | 0.444 ± 0.031 | 0.348 ± 0.058 |

| CNN-LSTM | 0.6593 ± 0.018 | 0.844 ± 0.014 | 0.444 ± 0.043 |

| Ours | 0.724 ± 0.015 | 0.918 ± 0.012 | 0.881 ± 0.022 |

| Model | Transfer Learning | WGANDA | DSAAN | MMDA-VAE | DDA | Ours |

|---|---|---|---|---|---|---|

| Res | 76.31% | 87.07% | 88.25% | 89.64% | 91.08% | 91.82% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Chen, M.; Feng, G. Study on Driver Cross-Subject Emotion Recognition Based on Raw Multi-Channels EEG Data. Electronics 2023, 12, 2359. https://doi.org/10.3390/electronics12112359
