Abstract

With the development of science and computer technology, social networks are changing our daily lives. However, this brings new, often hidden dangers in areas such as cybersecurity. Of these, the most complex and harmful is the Advanced Persistent Threat (APT) attack. The development of personality analysis and prediction technology gives APT attacks an opportunity to infiltrate personality privacy: malicious actors can apply existing personality classifiers to social texts and steal users’ personal information. Therefore, it is important to hide personal privacy information in social texts. Building on privacy protection technology based on adversarial examples, we propose a Supervised Character Resemble Substitution personality adversarial method (SCRS), which hides personality information in social texts through adversarial examples to realize personality privacy protection. The adversarial examples should successfully disturb the personality classifier while maintaining the original semantics and without reducing human readability. To this end, this paper proposes a “label contribution” measure to select the words that are most important to the label. To maintain readability, it uses character-level resemble substitution to generate adversarial examples. Experimental validation shows that our method generates adversarial examples with good attack effect and high readability.

1. Introduction

With the development of science and technology, network technology now sees widespread use, providing great convenience in daily life, but the resulting network security problems are also becoming increasingly prominent. At present, viruses, spyware, malicious plug-ins, spam, and other hidden dangers in networks can steal users’ personal information through various means. Some attackers go further and exploit the stolen information to obtain even more personal data, which poses a serious threat to personal privacy. Personality has the characteristics of uniqueness, stability, unity, and functionality, which provides an opportunity for personality analysis and prediction. Researchers have proposed the Big Five model of personality, and personality computing and personality classification methods aimed at social texts have developed gradually. Using a deep-learning model combined with the Big Five personality theory and applied to social networks [1], it is possible to infer an author’s personality type from the content of their social texts.

In recent years, APT attacks have begun to appear and develop rapidly, attracting extensive attention in the field of network security. An APT attack is an organized, targeted, and planned attack on a high-value target that remains concealed over a long period. In terms of personality privacy, the development of personality analysis [2] and classification prediction technology [3,4,5] provides opportunities for APT attacks. People with malicious intentions can apply existing personality classifiers to social texts to infer users’ personality traits, which leads to the disclosure of personal privacy information. Therefore, it is very important to hide personal privacy information in social texts in order to protect users’ privacy. However, to our knowledge, no defensive measures against the disclosure of personality privacy have yet been proposed, either domestically or internationally.

With the development of high-performance computing devices, Machine Learning (ML) has been proposed to guide computers to learn information from data, use algorithms to improve the results of learning, and make the best decisions. Deep Learning (DL), a branch of ML, uses more complex models and offers more powerful feature extraction. Neural Networks (NNs) are the foundation of DL, enabling complex information processing by loosely simulating the logic of the human brain. Deep Neural Networks (DNNs), that is, neural networks with multiple hidden layers, are common in DL and usually require a large amount of data for training. In recent years, they have been widely used in many artificial intelligence applications with excellent performance. However, DNNs are vulnerable to adversarial attacks. These attacks rely on perturbations that are not easily detectable by humans but can fool DNNs into making false predictions. Adversarial examples were first studied in the field of Computer Vision (CV) for image classification tasks, usually by adding small perturbations to images. Methods for generating adversarial examples for images are relatively mature; commonly used approaches include FGSM (Fast Gradient Sign Method), JSMA (Jacobian-based Saliency Map Attack), and GAN (Generative Adversarial Network)-based methods. Inspired by adversarial examples in CV, research on attacks in natural language processing (NLP) applications has emerged in recent years. While CV focuses on images, NLP focuses on text processing. If we apply the idea of adversarial examples to the task of personality privacy protection, we can protect the personality information in social text by generating adversarial examples that cause the personality classifier to make false judgments.
Therefore, we need to design an adversarial examples generation method for personality privacy to protect the personality information in social texts. However, the many differences between images and texts become apparent during processing. Images are continuous, while texts are discrete. After slightly modifying the image embedding, it can be mapped to a new image, but after slightly modifying the text embedding, it may not be mapped to a new text example. At the same time, because the texts are relatively complex, there will be polysemy of words and sentences. The same word will express different semantics in different contexts, and the same sentence, with different punctuation, will also express different semantics. The semantics of texts need to be fully combined with the context for more accurate expression, so the semantic expression of texts has always been a difficult point in NLP, which also creates difficulties in the study of adversarial examples. Therefore, the processing of texts is more difficult than the processing of images, and the direct application of attack methods in CV to NLP cannot achieve good results [6,7]. The emergence of the BERT (Bidirectional Encoder Representations from Transformers) model [8] is a recognized milestone in the field of NLP. Its use of bidirectional encoding allows text training to include more contextual information, and it plays an important role in various NLP tasks, so we apply this excellent performance to our research as well.

For adversarial examples of personal privacy, four conditions need to be met:

- Effectiveness: The adversarial examples must be able to successfully attack the personality classifier so that the classifier can make incorrect judgments, but it will not affect human understanding;

- Readability: The adversarial examples are complete and fluent sentences without obvious grammatical errors;

- Semantic similarity: The adversarial examples should achieve semantic retention and be similar to the semantics of the original texts;

- Robustness: The adversarial examples need to be able to deal with defenses and maintain characteristics that can successfully attack the personality classifier.

Therefore, the adversarial examples generation method should take these four conditions into consideration, not only to ensure a successful attack on personality tags but also to ensure semantic retention. At the same time, it must be smooth and complete, and not be easily defended. In this paper, we propose using the SCRS method to generate adversarial examples. The main contributions of this paper are as follows:

- We propose a calculation method of “label contribution”. Since texts are discrete, it is difficult to generate adversarial examples directly by perturbing the original examples. We use the FGSM from CV as the basic method and determine the words most affected by the gradient by calculating the similarity between the perturbed and the original word embeddings. These words are considered to have the highest label contribution. Perturbing the words with the highest label contribution is expected to cause the most errors in the classifier’s judgments;

- We design a character-level resemble substitution to modify N words with high label contribution to ensure that human comprehension errors are minimized;

- We use the BERT multi-label classification model for verification. The results show that our adversarial examples are effective for personal privacy protection, and the readability of adversarial examples is relatively high.

The rest of this paper is organized as follows. We present related work from a range of authors in Section 2. We describe the SCRS method in detail in Section 3. We show the content of the experiments, including the dataset, text preprocessing model, target model, and experimental results, in Section 4. We present the conclusions and future work in Section 5.

2. Related Work

To date, research on textual adversarial examples, whether domestic or international, has not addressed personality classification. Most studies have targeted other text classification tasks, such as sentiment classification and spam classification, as well as textual entailment and machine translation. To our knowledge, no researchers have yet generated adversarial samples for personality privacy protection.

Generation methods for most textual adversarial samples use the strategy of selecting important words and changing them, but their methods differ in the way they select important words and in the way they replace words. For example, Wang et al. [9] proposed a new method, AD-ER, to generate natural language adversarial examples in Chinese. They selected words that are important to the text by calculating the TF-IDF value of the words, and they designed four strategies for word variants: homophone substitution; similar word substitution; Chinese Pinyin substitution; and word-split substitution. As a result, adversarial examples have better diversity and readability. Similarly, Samanta et al. [10] also generated adversarial examples by locating important or prominent words in the text, using deletion or substitution operations on these words, or introducing new words to generate adversarial samples. They found significant words by calculating the class attribution probability contribution of words—that is, the change in sample classification probability values before and after deleting a word—and then modified the text using substitution, insertion, and deletion strategies. In their subsequent work [11], they selected the adverbs with the greatest contribution to the text in the original sample for deletion by measuring the loss gradient and setting up a candidate word set. If the semantics of the sentence after deleting the word was incorrect, they selected a word from the candidate word set and inserted it into the current position. They used this method to generate adversarial examples. Ebrahimi, J. et al. [12] proposed a white-box adversarial examples strategy for gradient-based character-level substitution generation, relying on atomic flip operations, and representing character-level operations as vectors in the input space. 
Based on the gradients of one-hot input vectors, they estimated the loss change in the directional derivatives of these vectors, exchanging one token for another. The team then improved on their previous work [13] by proposing controlled attacks; that is, removing specific words from the output. This maximizes the loss function $J(x, t)$ and minimizes $J(x, t')$, where $t$ is the target word of the controlled attack and $t'$ is the word that replaces $t$. In addition, Gao et al. [14] proposed a DeepWordBug method in which they introduced two new scoring strategies, Temporal Scoring (TS) and Time Tailed Scoring (TTS), to find significant words and then performed simple character transformations on the words, including random deletion, random substitution, random insertion, and character swapping. Subsequently, Jin et al. [15] proposed the TEXTFOOLER method, which introduced the importance score, $I_{w_i}$, to calculate the change in the prediction for the sentence before and after the deletion of word $w_i$. They then selected words for the substitution operation based on the importance score, extracted synonyms to build replacement candidate sets, and selected the closest synonym for substitution by calculating the cosine similarity. Additionally, Liang et al. [16] utilized the idea of FGSM on images to identify text items that are important for classification by computing the gradient of the input. They also designed three perturbation strategies of insertion, modification, and removal to generate adversarial examples. They used back-propagation to calculate the gradient of the cost for each training example as follows:

$$\nabla_x C(F, x, c) = \frac{\partial C(F, x, c)}{\partial x}$$

where $F$ is the model function, $x$ is the original example, and $c$ is the target output class. The characters with the largest gradients in the calculation result were named hot characters, and the phrases that contained enough hot characters and occurred most frequently were named Hot Training Phrases (HTPs). Next, they performed three perturbation strategies based on this information.

Additionally, some scholars used existing methods or machine learning models to generate new adversarial examples directly. Cheng et al. [17] proposed a gradient-based method, AdvGen, to attack Neural Machine Translation (NMT) models. They used a language model to find the most likely substitution for a word, which can achieve semantic preservation, guided by a translation loss, by considering the similarity between the gradients of the loss function and the distance between a word and its substitution to generate adversarial examples. Kathrin, G. et al. [18] extracted features from the original examples, obtained the static features of the software application, and used the binary indicator feature vector to represent an application. Using the JSMA method of the image, the gradient of the model Jacobian matrix was calculated to estimate the disturbance direction. Adversarial examples are designed on the input feature vector, where the perturbation is a binary value flip operation (i.e., 0 → 1 or 1 → 0).

A small group of researchers has also performed modification operations at the sentence level to generate adversarial examples. Matthias, B. et al. [19] proposed two white-box attacks to compare the robustness of CNN and RNN. One uses the model’s self-attention distribution to find key sentences and then swaps the most attended words with randomly selected words. The other deletes the most attended sentences.

Since a white-box attack has access to the parameters of the victim model, the loss function can be used to find the part of the input where an attack is most effective. Most studies design their adversarial example generation strategy by following the direction in which the loss increases fastest, that is, the direction of the gradient.

However, for the white-box attack task, the final generated adversarial samples are directly related to the parameters of the classifier, so it is particularly important to select a classifier with high classification accuracy and agility to generate the adversarial samples. The BERT model uses a Transformer encoder as the feature extractor together with a masked training method; it shows satisfactory results in many NLP tasks and has broken many records in natural language processing in the past five years. Marco, S. et al. evaluated the detection of fake news spreaders (users who share fake news), an NLP text classification task [20]. Their experimental results showed that a transformer-based model was not superior to other deep and non-deep state-of-the-art (SotA) models. Therefore, they concluded that a large pre-trained deep model such as a transformer is not necessarily the best choice. However, in the research carried out by Marco, S. et al. on multi-label classification tasks and binary classification tasks [21], the experimental results showed that a transformer-based model achieved a better classification effect. PAN@CLEF2022 presented the Irony and Stereotype Spreaders (ISSs) task on Twitter [22], which investigates whether Twitter authors are likely to spread irony and stereotypes. The model used by Marco, S. et al. consisted of a logistic regression (LR) that takes the predictions provided by the first-stage classifiers (called the voters) as input [23]. The voters are a convolutional neural network (CNN), a support vector machine (SVM), a naïve Bayesian classifier (NB), and a decision tree (DT), and their model achieved an accuracy of 0.9444. Yu, W. et al. designed a feature-based model built on the fine-tuned BERT model [24], which achieved an accuracy of 0.9944. Therefore, the performance of the BERT model in social text classification tasks is more satisfactory.
Compared with multi-class classification tasks, the BERT model is more suitable for multi-label classification tasks and binary classification tasks. For our work, we therefore chose classifiers based on the BERT model.

3. SCRS of Personality Adversarial Method

3.1. SCRS

There are many similarities between NLP and CV, so there are many ways for these methods to learn from one another. The image adversarial examples technology is very mature, but because the text is not continuous like an image, the image adversarial examples technology needs to be modified before it can be used in the texts. The generated adversarial examples need to meet two conditions: one is to be able to attack the label, and the other is to retain the semantics so that the generated adversarial examples can maintain the original semantics and will not create misunderstandings. This paper proposes a strategy that can transfer the methods in CV to NLP; finds words that influence the personality classifier through the similarity calculation of word embedding and perturbation word embedding; and proposes the “label contribution” to locate important words. The words are changed slightly so that the condition of attacking the label can be satisfied. For the condition of semantic preservation, we propose a character-level similarity substitution, which can make it more readable and cause as few misunderstandings as possible.

In this paper, the FGSM from CV is used as the basic method to perturb the text word embedding. The idea behind FGSM is as follows: during training, a classification model updates its parameters by subtracting the gradient, which progressively decreases the loss and thereby increases the probability that the model predicts correctly. The goal of adversarial examples is the opposite, so if the gradient is added to the input image, the loss of the modified image becomes larger and the probability of a correct prediction becomes smaller. In the white-box environment, FGSM calculates the derivative of the loss with respect to the input, applies the sign function to obtain the gradient direction, multiplies it by a step size, and adds the resulting perturbation to the original input. The expression for the perturbation is

$$x' = x + \epsilon \cdot \mathrm{sign}\left(\nabla_x J(x, y)\right)$$

where $x$ is the original sample, $x'$ is the sample after perturbation, $\epsilon$ is the hyperparameter that controls the step size, $\mathrm{sign}(\cdot)$ is the sign function, and $J$ is the loss function, evaluated for input $x$ and label $y$. The method is called “fast” because it does not require an iterative process to compute the adversarial examples, making it much faster than other methods. This article applies the image method to text, using FGSM to obtain a perturbed word vector. Because this perturbed word vector does not directly correspond to a sentence, we use it to find the word with the largest perturbation distance, which is defined as a word with high label contribution.
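To make the single-step nature of FGSM concrete, the following is a minimal sketch on a toy logistic-regression classifier rather than on BERT. The weights, input, and step size are invented for illustration, and the helper names (`loss_and_input_grad`, `fgsm`) are hypothetical; only the update rule itself, adding the step size times the sign of the input gradient, comes from the description above.

```python
import math

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss_and_input_grad(weights, bias, x, y):
    """Binary cross-entropy loss of a logistic model and its gradient w.r.t. the input x."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    p = sigmoid(z)
    loss = -(y * math.log(p) + (1 - y) * math.log(1 - p))
    grad = [(p - y) * w for w in weights]  # dJ/dx for logistic regression
    return loss, grad

def fgsm(weights, bias, x, y, epsilon):
    """One FGSM step: move every input dimension by epsilon in the loss-increasing direction."""
    _, grad = loss_and_input_grad(weights, bias, x, y)
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]

# Toy model and input (all values are illustrative assumptions).
weights, bias = [2.0, -1.0, 0.5], 0.1
x, y = [0.4, -0.3, 0.8], 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.1)
loss_before, _ = loss_and_input_grad(weights, bias, x, y)
loss_after, _ = loss_and_input_grad(weights, bias, x_adv, y)
print(loss_before, loss_after)  # the loss grows after the perturbation
```

Every coordinate moves by exactly the step size, which is why no iteration is needed; the same step is applied to word embeddings instead of pixels in the text setting.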

3.2. Design Strategy

3.2.1. Label Contribution Calculation Strategy

For adversarial examples, adding disturbances that cause errors in classifier judgments is the most important task. However, for the whole text, if disturbances are added at random locations throughout the text, the effect on the classifier will also be random and unpredictable, and it will be impossible to ensure that every disturbance is effective. Therefore, to increase the effectiveness of the disturbances, we introduced label contribution to help us find the most important words for a text sample. We believed that disturbing these words will impact the classifier’s ability to make a judgment.

In a white-box attack, the model parameters are known, so gradients can be used to guide the selection of important words. In this paper, the FGSM for image adversarial examples is transferred to NLP and reused after modification. In the white-box environment, FGSM calculates the derivative of the loss with respect to the input and produces a perturbation that is added to the word embedding of the original input, which increases the loss and the probability of an incorrect prediction. However, since texts are discrete, the perturbed word embedding obtained by FGSM cannot be directly mapped to a new text, so this paper uses the distance between the word vector before perturbation and the word vector after perturbation to measure the label contribution of the word. The larger the distance, the greater the label contribution of the word. The method for calculating the label contribution of words designed for the white-box environment is as follows:

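For the example $x = \{w_1, w_2, \dots, w_n\}$, $w_i$ is the $i$-th word in $x$; put $x$ into the classifier $F$ to obtain the prediction result $y$; that is, $y = F(x)$. The word embedding of $w_i$ is $e_i$, and FGSM is used to add perturbation to the word embedding to obtain $e_i'$. The expression is

$$e_i' = e_i + \epsilon \cdot \mathrm{sign}\left(\nabla_{e_i} J(x, y)\right)$$

where $\epsilon$ is a hyperparameter, $\mathrm{sign}(\cdot)$ is the sign function, and $J$ is the loss function. For the word embedding of a word, this paper defines $d_i$ as the distance between the word vector before perturbation, $e_i$, and the word vector after perturbation, $e_i'$.

Therefore, for a certain word $w_i$, the label contribution is measured by $d_i$: the larger the distance $d_i$, the higher the label contribution of $w_i$.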
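The ranking step can be sketched as follows. This is only an illustration under stated assumptions: the exact distance function is not given here, so cosine distance is assumed (with a pure sign step, the Euclidean distance would be the same for every word whose gradient has no zero components, so a measure that depends on the original vector is needed). The embeddings and gradients are made-up two-dimensional values, and `label_contributions` and `rank_words` are hypothetical helper names.

```python
import math

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def label_contributions(embeddings, gradients, epsilon):
    """Perturb each word embedding with one FGSM step and score the word by
    the distance between its original and perturbed embedding."""
    scores = []
    for e, g in zip(embeddings, gradients):
        e_adv = [ei + epsilon * sign(gi) for ei, gi in zip(e, g)]
        scores.append(cosine_distance(e, e_adv))  # assumed distance measure
    return scores

def rank_words(words, scores):
    """Sort words from highest to lowest label contribution."""
    order = sorted(range(len(words)), key=lambda i: scores[i], reverse=True)
    return [words[i] for i in order]

# Toy inputs: in practice the embeddings and loss gradients would come from
# the fine-tuned classifier; these numbers are invented for illustration.
words = ["i", "love", "parties"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.2, 0.9]]
gradients = [[0.0, 0.0], [0.3, -0.2], [-0.4, 0.1]]

scores = label_contributions(embeddings, gradients, epsilon=0.2)
ranked = rank_words(words, scores)
print(ranked)  # ['love', 'parties', 'i']
```

A word whose embedding receives no gradient, such as "i" in this toy input, is not moved at all and therefore ends up at the bottom of the ranking.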

3.2.2. Character-Level Resemble Substitution Strategy

Since the adversarial examples also need to meet the conditions of semantic retention and the readability should be as high as possible, the adversarial examples generation strategy adopted in this paper was to directly perform character-level substitution of words with high label contribution. To reduce the disturbance perceived by humans, this paper adopted the resemble substitution of words, which can visually weaken the changes. At the same time, by changing the characters to cause spelling errors, the deep learning model does not recognize the word or recognizes it as other words, resulting in the model’s prediction error and reducing the accuracy of the personality classifier, which can successfully protect users’ personal information.

The strategy of character-level substitution is as follows: calculate the label contribution of each word and sort them according to the label contribution from high to low, and then select m words to replace them in sequence. For example, o can be replaced by 0; l can be replaced by 1. In order to ensure stronger readability of the replaced sentences and a higher success rate of the attack, the number of word substitutions in this paper’s strategy was measured by the percentage of the total number of words in the sentence. The substitution of high and low characters should be avoided and the replaced characters should be as close to the middle part as possible, reducing the risk of confusion. Table 1 shows the correspondence table of character resemble substitution.

Table 1. Character substitution correspondence.
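A character-level resemble substitution of a single word might look like the sketch below. Only the two pairs mentioned in the text (o to 0 and l to 1) are included, because the full correspondence table is not reproduced here; the function name `resemble_substitute` and the tie-breaking rule (prefer the leftmost of two equally central characters) are assumptions for illustration.

```python
# Only the two pairs named in the text; the full table would be loaded here.
RESEMBLE = {"o": "0", "l": "1"}

def resemble_substitute(word, table=RESEMBLE):
    """Replace one substitutable character, choosing the one closest to the
    middle of the word so that the change is less conspicuous."""
    candidates = [i for i, ch in enumerate(word) if ch.lower() in table]
    if not candidates:
        return word  # nothing to substitute, leave the word unchanged
    middle = (len(word) - 1) / 2
    pos = min(candidates, key=lambda i: abs(i - middle))
    return word[:pos] + table[word[pos].lower()] + word[pos + 1:]

print(resemble_substitute("hello"))  # he1lo
print(resemble_substitute("world"))  # w0rld
print(resemble_substitute("sky"))    # sky
```

Changing a single, centrally placed character keeps the word shape familiar to a human reader while still producing a token the classifier is unlikely to recognize.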

3.2.3. Whole Process

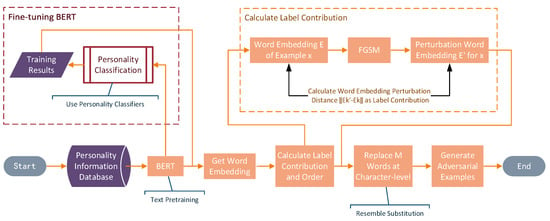

The overall block diagram of the SCRS is shown in Figure 1. We used BERT for text pre-training and the personality dataset to fine-tune the BERT model so that the word embedding obtained by BERT was more in line with our personality privacy protection task. Then, we used the FGSM to find the label contribution of the word, selecting the words that were important for the label to be modified according to the label contribution to generate adversarial examples.

Figure 1. Block diagram of SCRS.

The algorithm implementation steps of the entire SCRS method are shown in Algorithm 1.

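| Algorithm 1. Algorithm for the SCRS method. |

| Input: examples $X$, personality classifier $F$, attack strength parameter $\epsilon$ of FGSM, word substitution dictionary T, replace word proportion θ |

| 1: initialize the set of adversarial examples $X'$ as empty |

| 2: for each example $x$ in $X$ do |

| 3: obtain the word embeddings $e_i$ of the words $w_i$ in $x$ and the prediction $y = F(x)$ |

| 4: perturb each $e_i$ with FGSM at strength $\epsilon$ to obtain $e_i'$ |

| 5: compute the label contribution of each $w_i$ from the distance between $e_i$ and $e_i'$, and sort the words from high to low |

| 6: set $m$ to the proportion θ of the number of words in $x$ |

| 7: for $j$ = 1…$m$ do |

| 8: replace characters of the $j$-th ranked word according to T |

| 9: end for |

| 10: add the modified example to $X'$ |

| 11: end for |

| 12: return $X'$ |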
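The word-selection and replacement loop described in Sections 3.2.2 and 3.2.3 can be sketched as below, assuming the label contributions have already been computed. The function `scrs_replace`, the rounding-down of the word count, and the simplified `toy_substitute` helper are all assumptions for illustration, not the exact procedure of Algorithm 1.

```python
import math

def scrs_replace(words, contributions, theta, substitute):
    """Replace the top theta fraction of words, ranked by label contribution."""
    m = math.floor(theta * len(words))  # rounding down is an assumption
    order = sorted(range(len(words)), key=lambda i: contributions[i], reverse=True)
    result = list(words)
    for i in order[:m]:
        result[i] = substitute(words[i])
    return result

def toy_substitute(word):
    """Simplified stand-in for the substitution dictionary: first 'o' becomes '0'."""
    return word.replace("o", "0", 1)

# Made-up sentence and label contributions for illustration.
words = ["i", "love", "going", "to", "loud", "parties"]
contributions = [0.0, 0.07, 0.03, 0.01, 0.05, 0.02]

adversarial = scrs_replace(words, contributions, theta=0.5, substitute=toy_substitute)
print(" ".join(adversarial))  # i l0ve g0ing to l0ud parties
```

Because the number of replaced words is a fraction of the sentence length, short and long texts are perturbed to a comparable degree.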

4. Experiments

4.1. Datasets and Text Pre-Trained Models

This paper used two datasets for experimental validation, both of which are personality datasets labeled according to the Big Five personality classification. The first is the mypersonality dataset, created by David Stillwell in 2007 and available at http://mypersonality.org (accessed on 9 December 2022). The second is James Pennebaker and Laura King’s stream-of-consciousness essay dataset, which contains 2468 anonymous essays tagged with the authors’ personality traits. Both datasets correspond to the Big Five personality classification, which has five dimensions: EXT, NEU, AGR, CON, and OPN, so the personality classification problem can be treated as a multi-label binary classification problem.

For text examples, the first task was to make the model understand the words and sentences in the dataset; that is, to convert the words that cannot be directly calculated in the text into computable vectors or matrices. These vectors must be able to better reflect the meaning of the corresponding word in the sentence. Therefore, it was important to achieve good word embedding so that more semantic information can be expressed, which requires pre-training tasks. Its purpose was to train the underlying models of the downstream tasks in advance and then use the respective sample data of the downstream tasks to train the respective models, which can greatly increase the convergence speed. Therefore, in order to improve the effectiveness of the results of this paper, we used one of the most prominent breakthrough technologies in NLP in recent years: the BERT model for text pre-training.

The BERT model, first proposed by the Google team [8], is a self-encoding language model that pre-trains the deep bidirectional representation of texts by jointly adjusting the context in all layers of the Transformer. The constituent element of BERT is the Transformer model [25], which adopts the encoder structure of the Transformer model. The BERT-base model contains 12 encoders. BERT has designed two tasks for pre-training. The first task is to use the MaskLM method to train the language model, as follows: when inputting a sentence, randomly select some words to be predicted and use a special symbol [MASK] to replace them, and then let the model learn the words that should be filled in these places according to the given labels. The second task is to add a sentence-level continuity prediction task based on the bidirectional language model; that is, to predict whether the two sentences input to BERT are continuous sentences, which can better allow the model to learn the relationships between text context fragments. Therefore, compared with RNN and LSTM, BERT can be executed concurrently and can extract the relational features in words and sentences, thus reflecting the semantics of sentences more comprehensively. Compared with Word2Vec, it can obtain word meaning according to the context of the sentence, avoiding ambiguity as much as possible. However, because BERT requires many parameters and the model is large, the hardware requirements for training are very high, and when the training data are small, it is easy to cause overfitting.
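The MaskLM idea can be illustrated with a simplified sketch. The 15% masking rate is the value used in the original BERT work and is not stated above; the real procedure also sometimes keeps the selected token or replaces it with a random one, which is omitted here. The function name `mask_tokens` and the fixed seed are assumptions made for reproducibility.

```python
import random

def mask_tokens(tokens, mask_rate=0.15, seed=0):
    """Replace a random subset of tokens with [MASK] and remember the originals,
    which serve as the prediction targets during pre-training."""
    rng = random.Random(seed)
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]  # target the model must recover
        masked[pos] = "[MASK]"
    return masked, labels

tokens = ["i", "really", "enjoy", "meeting", "new", "people", "at", "parties"]
masked, labels = mask_tokens(tokens)
print(masked)
print(labels)
```

The model sees only `masked` and is trained to predict the entries of `labels` from the surrounding context on both sides, which is what makes the learned representation bidirectional.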

4.2. Experimental Settings

To evaluate the effectiveness of SCRS, we trained a BERT multi-label classification model using the mypersonality dataset and stream-of-consciousness essay dataset, respectively, and used these two individual models as personality privacy classifier models. Using FGSM, the original examples were changed to generate adversarial examples. Since white-box adversarial examples require specific changes to the gradient, this experiment adopted a closed experiment. To more clearly illustrate the impact of word modification on the classification results, we took two variables to demonstrate the effectiveness of the attack. The first variable was the number of modified words. We dynamically determined the number of modified words according to the length of the sentence. Therefore, we used the replacement word ratio θ to determine the number of modified words. In our experiment, θ increased from 0% in 5% increments. The classification effect of the adversarial examples attack was recorded separately. The second variable was the attack strength parameter of FGSM. The attack effects of adversarial examples under different attack strengths were also different, which also demonstrated the effectiveness of our method.

4.3. Adversarial Examples Results

In this paper, we calculated the label contribution from the word embeddings of the original example and the perturbed word embeddings, and sorted the words by label contribution from high to low. For different values of the substitution word ratio θ, we list several adversarial examples generated from the mypersonality dataset in Table 2. As the table shows, the generated adversarial examples remain readable and retain the semantics of the original texts.

Table 2. Adversarial examples of SCRS.

4.4. Attack Effect Display

- (1)

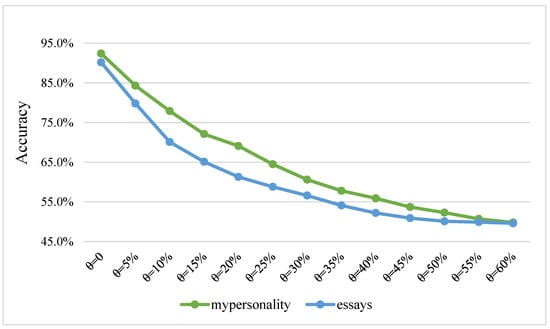

- Experimental results

According to the above two variables, we conducted attack experiments on the examples. The experimental results are shown in Figure 2. After attacking the two datasets, the accuracy of the classifier was around 50% when the word substitution ratio θ surpassed 50%. Since our task was a multi-label binary classification task, a classification accuracy of 50% indicates that the classifier performed no better than random guessing, showing that our attack was effective. With the increase in the word substitution ratio θ, the classification effect of the classifier showed a downward trend; that is, the attack effect became more and more obvious, and the adversarial samples generated from essays performed better than those generated from mypersonality. Moreover, as more words were substituted, the rate of decline slowed: a small number of modifications was already enough to cause incorrect judgments for many samples, so causing the classifier to fail on the remaining samples became increasingly difficult. The results also show the classification accuracy under different attack strengths. The greater the attack strength, the greater the decline in the classifier’s accuracy. A decrease in classification accuracy indicates that the classifier’s ability to judge personality from the data had decreased, which effectively protects personal privacy.
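The accuracy figure discussed above can be computed per trait and then averaged, as in the following sketch. The labels and predictions are invented for illustration, the function name `per_label_accuracy` is hypothetical, and reading 50% as chance level assumes that the two classes of each trait are roughly balanced.

```python
TRAITS = ["EXT", "NEU", "AGR", "CON", "OPN"]

def per_label_accuracy(y_true, y_pred):
    """Accuracy of each binary label, computed independently over all samples."""
    n_samples = len(y_true)
    n_labels = len(y_true[0])
    return [
        sum(t[k] == p[k] for t, p in zip(y_true, y_pred)) / n_samples
        for k in range(n_labels)
    ]

# Made-up ground truth and classifier output on four adversarial examples.
y_true = [[1, 0, 1, 1, 0], [0, 1, 1, 0, 1], [1, 1, 0, 0, 1], [0, 0, 1, 1, 0]]
y_pred = [[1, 1, 1, 0, 0], [0, 1, 0, 0, 0], [0, 1, 0, 1, 1], [0, 0, 0, 1, 1]]

accuracy = per_label_accuracy(y_true, y_pred)
mean_accuracy = sum(accuracy) / len(accuracy)
for trait, acc in zip(TRAITS, accuracy):
    print(trait, acc)
print("mean", mean_accuracy)
```

An attack is judged by how far it pushes these per-trait accuracies toward the chance level, rather than toward zero: a binary classifier that is wrong almost every time would still leak the trait, since its output could simply be inverted.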

Figure 2. Experimental results of attack.
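The 50% reference point can be checked with a small simulation. The sketch below assumes balanced binary labels for each of the five traits and uses synthetic data only; the trait abbreviations in `TRAITS` and the helper `per_trait_accuracy` are illustrative names, not part of the experiments reported here. It shows that a classifier that guesses every label with a coin flip reaches a per-trait accuracy close to 0.5.

```python
import random

TRAITS = ["OPN", "CON", "EXT", "AGR", "NEU"]  # Big Five labels; abbreviations are assumed


def per_trait_accuracy(y_true, y_pred):
    """Accuracy of each binary trait label, computed independently of the others."""
    n = len(y_true)
    return {
        trait: sum(t[k] == p[k] for t, p in zip(y_true, y_pred)) / n
        for k, trait in enumerate(TRAITS)
    }


rng = random.Random(0)
n_samples = 20000
# Synthetic ground truth and a "classifier" that guesses each label with a coin flip.
y_true = [[rng.randint(0, 1) for _ in TRAITS] for _ in range(n_samples)]
y_guess = [[rng.randint(0, 1) for _ in TRAITS] for _ in range(n_samples)]

accuracy = per_trait_accuracy(y_true, y_guess)
mean_accuracy = sum(accuracy.values()) / len(accuracy)
```

If the labels of a trait are imbalanced, a classifier that always predicts the majority class would score above 50%, so the reference point should then be read against the class distribution of that trait.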

- (2)

- Comparison

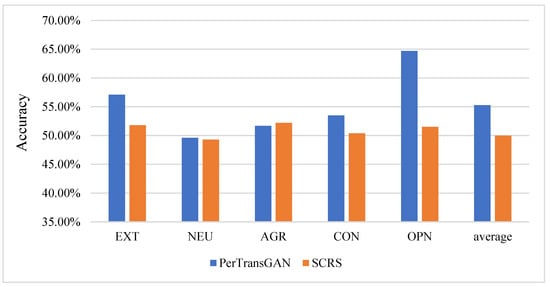

To verify the performance of the SCRS method, we compared it with the PerTransGAN method proposed in our team’s previous work [26]. The PerTransGAN method uses generative adversarial networks (GANs) to protect the privacy of the personalities hidden in text data through text transformation. With PerTransGAN, the classification accuracy of all five personality traits decreased to different degrees, and the average classification accuracy remained at around 55%. In contrast, our SCRS method caused the classification accuracy to decrease to around 50%, therefore showing better performance. Figure 3 compares the decrease in classification accuracy for the five personality traits under the two methods. A lower classification accuracy indicates a more effective attack, and the comparison experiments showed that our method performed better in terms of attack effectiveness.

Figure 3. Comparison of the experimental results.

5. Conclusions

In this paper, by transferring FGSM from CV to NLP, we proposed SCRS, a white-box adversarial example generation method for personality privacy protection. The experimental results showed that the adversarial examples generated by SCRS can cause the classifier to make incorrect judgments while maintaining the original semantics and strong readability. Therefore, SCRS can realize the protection of personal privacy information.

In our experiments, semantic retention was achieved through character-level resemble substitution, which keeps the adversarial examples as close as possible to the original examples for human readers. However, character-level substitution introduces spelling mistakes, and NLP already offers methods for checking spelling errors. Therefore, our adversarial example generation method risks being defended against; that is, it cannot fully guarantee the protection of personal privacy information. In future work, we will improve semantic retention in adversarial example generation methods.
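To make this limitation concrete, the following minimal sketch shows how a simple spelling-correction step might undo character-level substitutions before classification. It is an illustration under stated assumptions, not a defense evaluated in this paper: the reverse table `REVERSE`, the toy vocabulary `VOCAB`, and the helper `restore` are hypothetical, and the dictionary lookup relies on `difflib.get_close_matches` from the Python standard library.

```python
import difflib

# Hypothetical inverse of a look-alike table, and a toy vocabulary.
REVERSE = {"@": "a", "3": "e", "1": "i", "0": "o", "5": "s"}
VOCAB = ["alone", "evenings", "love", "quiet", "silent"]


def restore(token):
    """Map look-alike characters back to letters, then snap to the closest vocabulary word."""
    normalized = "".join(REVERSE.get(ch, ch) for ch in token.lower())
    if normalized in VOCAB:
        return normalized
    # Fall back to fuzzy matching for substitutions the reverse table does not cover.
    matches = difflib.get_close_matches(normalized, VOCAB, n=1, cutoff=0.8)
    return matches[0] if matches else token


restored = [restore(t) for t in ["qu1et", "@lone", "evenlngs", "xyz"]]
```

The reverse table is ambiguous in practice (for example, "1" may stand for "i" or "l"), so a real spelling checker would need context to choose between candidates; the sketch only indicates why perturbations that look like spelling errors are exposed to this kind of preprocessing.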

Author Contributions

Conceptualization, X.W., S.C., and K.Z.; methodology, X.W. and S.C.; software, S.C. and X.G.; validation, S.C. and Y.S.; formal analysis, S.C. and Y.S.; investigation, X.W., S.C., and Y.S.; resources, X.W.; data curation, X.W.; writing—original draft preparation, S.C.; writing—review and editing, X.W.; visualization, S.C.; supervision, X.G. and Y.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Beijing Natural Science Foundation (4202002).

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wegener, D.T.; Fabrigar, L.R.; Pek, J.; Hosington-Shaw, K.J. Evaluating Research in Personality and Social Psychology: Considerations of Statistical Power and Concerns about False Findings. Personal. Soc. Psychol. Bull. 2021, 48, 1105–1117.

- Rastogi, R.; Chaturvedi, D.K.; Satya, S.; Arora, N.; Singh, A. Intelligent Personality Analysis on Indicators in IoT-MMBD-Enabled Environment. In Multimedia Big Data Computing for IoT Applications; Springer: Singapore, 2020; Volume 163, pp. 185–215.

- Alireza, S.; Shafigheh, H.; Masoud, R.A. Personality classification based on profiles of social networks’ users and the five-factor model of personality. Hum. Cent. Comput. Inf. Sci. 2018, 8, 24.

- Keh, S.S.; Cheng, I.T. Myers-Briggs Personality Classification and Personality-Specific Language Generation Using Pre-trained Language Models. arXiv 2019, arXiv:1907.06333.

- Sood, A.; Bhatia, R. Baron-Cohen Model Based Personality Classification Using Ensemble Learning. Data Sci. Big Data Anal. 2019, 16, 57–65.

- Zhang, W.E.; Sheng, Q.Z.; Alhazmi, A.; Li, C. Adversarial Attacks on Deep Learning Models in Natural Language Processing: A Survey. ACM Trans. Intell. Syst. Technol. 2020, 11, 1–41.

- Zhang, H.; Zhou, H.; Miao, N.; Li, L. Generating Fluent Adversarial Examples for Natural Languages. In Proceedings of the ACL, Florence, Italy, 28 July–2 August 2019.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

- Wang, C.; Zeng, J.; Wu, C. Generating Fluent Chinese Adversarial Examples for Sentiment Classification. In Proceedings of the 14th International Conference on Anti-Counterfeiting, Security, and Identification (ASID), Xiamen, China, 30 October–1 November 2020.

- Samanta, S.; Mehta, S. Towards Crafting Text Adversarial Samples. arXiv 2017, arXiv:1707.02812.

- Samanta, S.; Mehta, S. Generating Adversarial Text Samples. In Proceedings of the European Conference on Information Retrieval (ECIR), Grenoble, France, 26–29 March 2018.

- Ebrahimi, J.; Rao, A.; Lowd, D.; Dou, D. HotFlip: White-Box Adversarial Examples for Text Classification. In Proceedings of the Association for Computational Linguistics (ACL), Melbourne, Australia, 15–20 July 2018.

- Ebrahimi, J.; Lowd, D.; Dou, D. On Adversarial Examples for Character-Level Neural Machine Translation. In Proceedings of the 27th International Conference on Computational Linguistics (COLING), Santa Fe, NM, USA, 20–26 August 2018.

- Gao, J.; Lanchantin, J.; Soffa, M.L.; Qi, Y. Black-box Generation of Adversarial Text Sequences to Evade Deep Learning Classifiers. In Proceedings of the 2018 IEEE Security and Privacy Workshops (SPW), San Francisco, CA, USA, 24 May 2018.

- Jin, D.; Jin, Z.; Zhou, J.T.; Szolovits, P. Is BERT Really Robust? A Strong Baseline for Natural Language Attack on Text Classification and Entailment. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020.

- Liang, B.; Li, H.; Su, M.; Bian, P.; Li, X.; Shi, W. Deep Text Classification Can be Fooled. In Proceedings of the International Joint Conferences on Artificial Intelligence (IJCAI), Stockholm, Sweden, 13–19 July 2018.

- Cheng, Y.; Jiang, L.; Macherey, W. Robust Neural Machine Translation with Doubly Adversarial Inputs. In Proceedings of the Association for Computational Linguistics (ACL), Florence, Italy, 28 July–2 August 2019.

- Grosse, K.; Papernot, N.; Manoharan, P.; Backes, M.; McDaniel, P.D. Adversarial Examples for Malware Detection. In Proceedings of the European Symposium on Research in Computer Security (ESORICS), Oslo, Norway, 11–15 September 2017.

- Blohm, M.; Jagfeld, G.; Sood, E.; Yu, X.; Vu, N.T. Comparing Attention-Based Convolutional and Recurrent Neural Networks: Success and Limitations in Machine Reading Comprehension. In Proceedings of the Conference on Computational Natural Language Learning (CoNLL), Brussels, Belgium, 31 October–1 November 2018.

- Siino, M.; Di Nuovo, E.; Tinnirello, I.; La Cascia, M. Fake News Spreaders Detection: Sometimes Attention Is Not All You Need. Information 2022, 13, 426.

- Siino, M.; Cascia, M.; Tinnirello, I. McRock at SemEval-2022 Task 4: Patronizing and Condescending Language Detection using Multi-Channel CNN, Hybrid LSTM, DistilBERT and XLNet. In Proceedings of the 16th International Workshop on Semantic Evaluation, Seattle, WA, USA, 14–15 July 2022.

- Bevendorff, J.; Chulvi, B.; Fersini, E.; Heini, A.; Kestemont, M.; Kredens, K.; Mayerl, M.; Ortega-Bueno, R.; Pezik, P.; Potthast, M.; et al. Overview of PAN 2022: Authorship Verification, Profiling Irony and Stereotype Spreaders, and Style Change Detection. In Experimental IR Meets Multilinguality, Multimodality, and Interaction, Proceedings of the Thirteenth International Conference of the CLEF Association (CLEF 2022), Bologna, Italy, 5–8 September 2022; Barron-Cedeno, A., Martino, G.D.S., Esposti, M.D., Sebastiani, F., Macdonald, C., Pasi, G., Hanbury, A., Potthast, M., Faggioli, G., Ferro, N., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2022; Volume 13390, p. 13390.

- Marco, S.; Ilenia, T.; Marco, L.C. T100: A modern classic ensemble to profile irony and stereotype spreaders. In Proceedings of the CLEF 2022 Labs and Workshops, Bologna, Italy, 5–8 September 2022.

- Wentao, Y.; Benedikt, B.; Dorothea, K. BERT-based ironic authors profiling. In Proceedings of the CLEF 2022 Labs and Workshops, Bologna, Italy, 5–8 September 2022.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N. Attention is all you need. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Red Hook, NY, USA, 4–9 December 2017.

- Sui, Y.; Wang, X.; Zheng, K.; Shi, Y.; Cao, S. Personality Privacy Protection Method of Social Users Based on Generative Adversarial Networks. Comput. Intell. Neurosci. 2022, 2022, 13.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).