RETRACTED: Detection and Grade Classification of Diabetic Retinopathy and Adult Vitelliform Macular Dystrophy Based on Ophthalmoscopy Images

Abstract

1. Introduction

2. Related Works

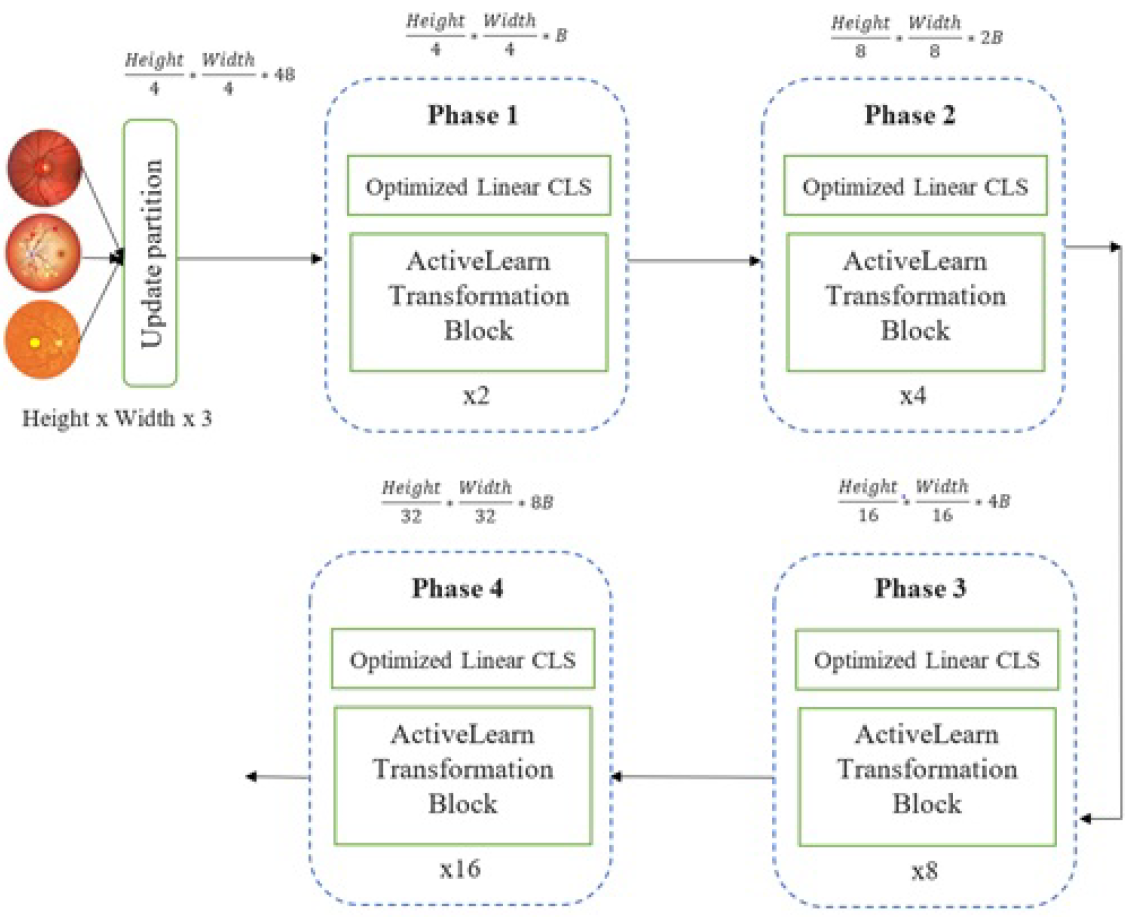

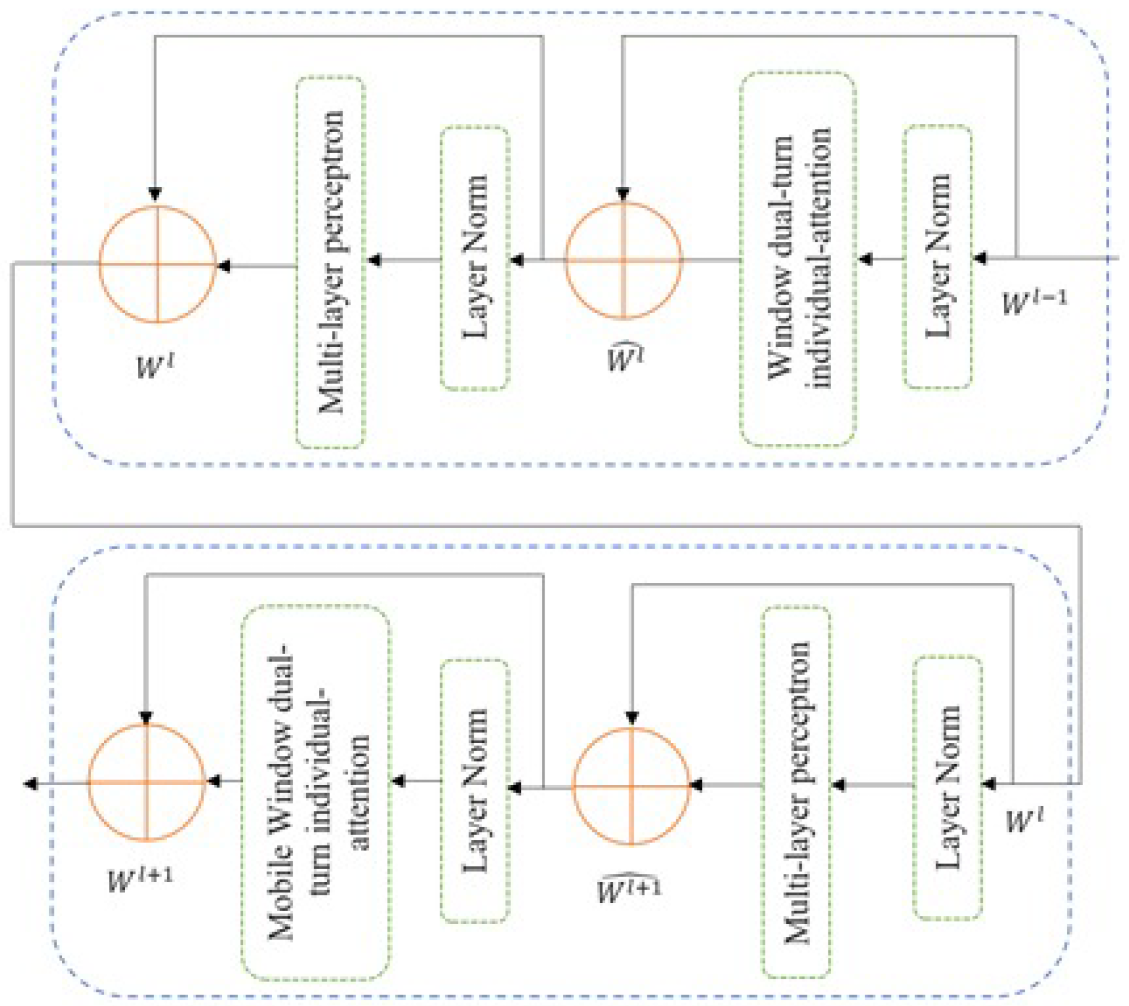

3. Proposed System Framework

3.1. Dataset Availability

3.2. Pre-Processing Mode

3.3. Training and Testing Phase

3.4. Parameters-Performance Metric

Parameter Configuration

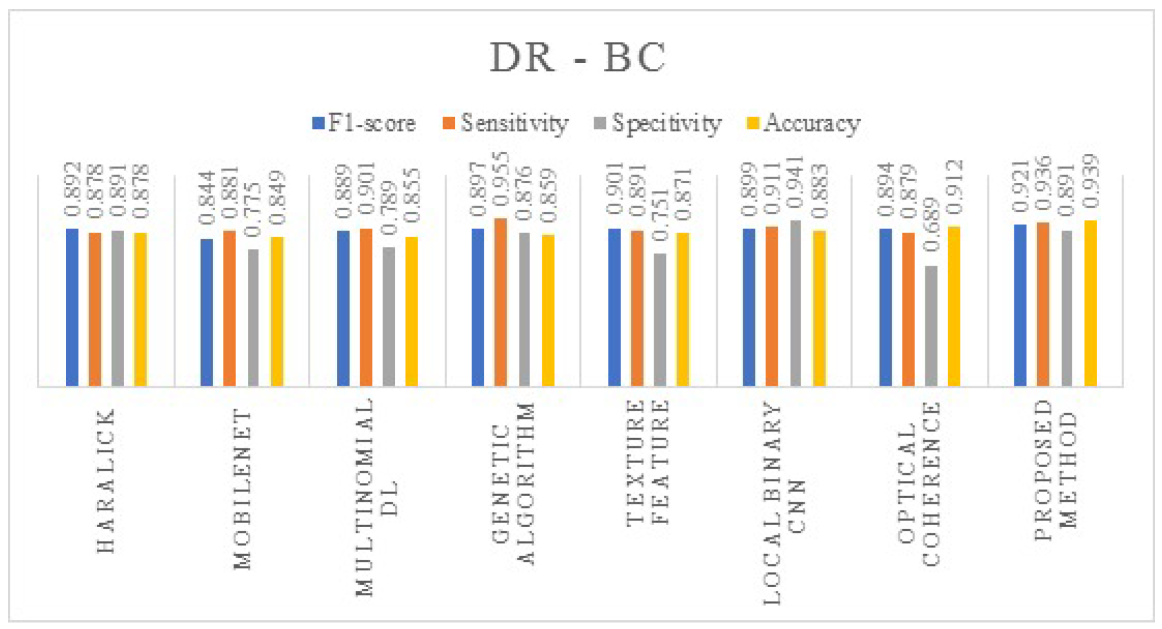

4. Results and Discussions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual Event, Austria, 3–7 May 2021; pp. 1–21.

2. Das, S.; Kharbanda, K.; Suchetha, M.; Raman, R.; Dhas, E. Deep learning architecture based on segmented fundus image features for classification of diabetic retinopathy. Biomed. Signal Process. Control 2021, 68, 102600.

3. Dong, L.; Yang, Q.; Zhang, R.H.; Wei, W.B. Artificial intelligence for the detection of age-related macular degeneration in colour fundus photographs: A systematic review and meta-analysis. EClinicalMedicine 2021, 35, 100875.

4. Alqudah, A.M.; Alquran, H.; Abu-Qasmieh, I.; Al-Badarneh, A. Employing Image Processing Techniques and Artificial Intelligence for Automated Eye Diagnosis Using Digital Eye Fundus Images. J. Biomimetics Biomater. Biomed. Eng. 2018, 39, 40–56.

5. Dua, J.; Zou, B.; Ouyang, P.; Zhao, R. Retinal microaneurysm detection based on transformation splicing and multi-context ensemble learning. Biomed. Signal Process. Control 2022, 74, 103536.

6. Gayathri, S.; Krishna, A.K.; Gopi, V.P.; Palanisamy, P. Automated Binary and Multiclass Classification of Diabetic Retinopathy Using Haralick and Multiresolution Features. IEEE Access 2020, 8, 57497–57504.

7. Bien, N.; Rajpurkar, P.; Ball, R.L.; Irvin, J. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: Development and retrospective validation of MRNet. PLoS Med. 2018, 15, e1002699.

8. Adriman, R.; Muchtar, K.; Maulina, N. Performance Evaluation of Binary Classification of Diabetic Retinopathy through Deep Learning Techniques using Texture Feature. Procedia Comput. Sci. 2021, 179, 88–94.

9. Ullah, N.; Mohmand, M.I.; Ullah, K. Diabetic Retinopathy Detection Using Genetic Algorithm-Based CNN Features and Error Correction Output Code SVM Framework Classification Model. Wirel. Commun. Mob. Comput. 2022, 2, 7095528.

10. Pavate, A.; Mistry, J.; Palve, R.; Gami, N. Diabetic Retinopathy Detection-MobileNet Binary Classifier. Acta Sci. Med. Sci. 2020, 4, 86–91.

11. Trivedi, A.; Desbiens, J.; Gross, R.; Gupta, S.; Ferres, J.M.L.; Dodhia, R. Binary Mode Multinomial Deep Learning Model for more efficient Automated Diabetic Retinopathy Detection. In Proceedings of the 33rd Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 8–14 December 2019; Volume 11, pp. 1–7.

12. Macsik, P.; Pavlovicova, J.; Goga, J.; Kajan, S. Local Binary CNN for Diabetic Retinopathy Classification on Fundus Images. Acta Polytech. Hung. 2022, 19, 27–45.

13. Miere, A.; Excoffier, J.-B.; Pallonne, C.; Ansary, M.F.; Kerr, S.; Ortala, M.; Souied, E. Deep learning-based classification of diabetic retinopathy with or without macular ischemia using optical coherence tomography angiography images. Investig. Ophthalmol. Vis. Sci. 2022, 63, 1–13.

14. Miao, Y.; Tang, S. Classification of Diabetic Retinopathy Based on Multiscale Hybrid Attention Mechanism and Residual Algorithm. Wirel. Commun. Mob. Comput. 2022, 2022, 5441366.

15. Nakayama, L.F.; Ribeiro, L.Z.; Gonçalves, M.B.; Ferraz, D.A.; Nazareth, H. Diabetic retinopathy classification for supervised machine learning algorithms. Int. J. Retin. Vitr. 2022, 1, 1–5.

16. Saravanan, S.; Kumar, V.V.; Sarveshwaran, V.; Indirajithu, A.; Elangovan, D.; Allayear, S.M. Glioma Brain Tumor Detection and Classification Using Convolutional Neural Network. Comput. Math. Methods Med. 2022, 2022, 4380901.

17. Zhang, G.; Sun, B.; Chen, Z.; Gao, Y.; Zhang, Z.; Li, K.; Yang, W. Diabetic Retinopathy Grading by Deep Graph Correlation Network on Retinal Images Without Manual Annotations. Front. Med. 2022, 9, 872214.

18. Li, X.; Xia, H.; Lu, L. ECA-CBAM: Classification of Diabetic Retinopathy: Classification of diabetic retinopathy by cross-combined attention mechanism. In Proceedings of the ICIAI 2022: 2022 the 6th International Conference on Innovation in Artificial Intelligence, Guangzhou, China, 4–6 March 2022; pp. 78–92.

19. Selvachandran, G.; Quek, S.G.; Paramesran, R.; Ding, W. Developments in the detection of diabetic retinopathy: A state-of-the-art review of computer-aided diagnosis and machine learning methods. Artif. Intell. Rev. 2022, 11, 1–13.

20. Das, D.; Biswas, S.K.; Bandyopadhyay, S. A critical review on diagnosis of diabetic retinopathy using machine learning and deep learning. Multimed. Tools Appl. 2022, 81, 25613–25655.

21. Alahmadi, M. Texture Attention Network for Diabetic Retinopathy Classification. IEEE Access 2022, 10, 55522–55532.

22. Sivaparthipan, C.B.; Muthu, B.A.; Manogaran, G.; Maram, B.; Sundarasekar, R.; Krishnamoorthy, S.; Hsu, C.H.; Chandran, K. Innovative and efficient method of robotics for helping the Parkinson’s disease patient using IoT in big data analytics. Trans. Emerg. Telecommun. Technol. 2020, 31, e3838.

23. Lakshmanaprabu, S.K.; Mohanty, S.N.; Krishnamoorthy, S.; Uthayakumar, J.; Shankar, K. Online clinical decision support system using optimal deep neural networks. Appl. Soft Comput. 2019, 81, 105487.

24. Pham, H.N.; Tan, R.J.; Cai, Y.T.; Mustafa, S.; Yeo, N.C.; Lim, H.J.; Do, T.T.T.; Nguyen, B.P.; Chua, M.C.H. Automated grading in diabetic retinopathy using image processing and modified efficientnet. In Proceedings of the International Conference on Computational Collective Intelligence, Da Nang, Vietnam, 30 November–3 December 2020; Springer: Berlin/Heidelberg, Germany; pp. 505–515.

25. Nguyen, Q.H.; Muthuraman, R.; Singh, L.; Sen, G.; Tran, A.C.; Nguyen, B.P.; Chua, M. Diabetic retinopathy detection using deep learning. In Proceedings of the 4th International Conference on Machine Learning and Soft Computing, Haiphong City, Vietnam, 17–19 January 2020; pp. 103–107.

26. Vipparthi, V.; Rao, D.R.; Mullu, S.; Patlolla, V. Diabetic Retinopathy Classification using Deep Learning Techniques. In Proceedings of the 2022 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), IEEE, Coimbatore, India, 17–19 August 2022; pp. 840–846.

27. Tsiknakis, N.; Theodoropoulos, D.; Manikis, G.; Ktistakis, E.; Boutsora, O.; Berto, A.; Scarpa, F.; Scarpa, A.; Fotiadis, D.I.; Marias, K. Deep learning for diabetic retinopathy detection and classification based on fundus images: A review. Comput. Biol. Med. 2021, 135, 104599.

28. Nguyen, B.P.; Tay, W.-L.; Chui, C.-K. Robust Biometric Recognition from Palm Depth Images for Gloved Hands. IEEE Trans. Hum.-Mach. Syst. 2015, 45, 799–804.

29. Wang, A.X.; Chukova, S.S.; Nguyen, B.P. Implementation and Analysis of Centroid Displacement-Based k-Nearest Neighbors. In Proceedings of the 18th International Conference, Advanced Data Mining and Applications: ADMA 2022, Brisbane, QLD, Australia, 28–30 November 2022; pp. 431–443.

30. Chang, C.-C.; Lin, C.-J. LIBSVM: A Library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

31. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

32. Jin, K.; Huang, X.; Zhou, J.; Li, Y.; Yan, Y.; Sun, Y.; Zhang, Q.; Wang, Y.; Ye, J. Fives: A Fundus Image Dataset for Artificial Intelligence based Vessel Segmentation. Data Descriptor. Sci. Data 2022, 475, 1–8.

33. Zhang, Z.; Wang, L.; Zheng, W.; Yin, L.; Hu, R.; Yang, B. Endoscope image mosaic based on pyramid ORB. Biomed. Signal Process. Control 2021, 71, 103261.

34. Cao, Z.; Wang, Y.; Zheng, W.; Yin, L.; Tang, Y.; Miao, W.; Liu, S.; Yang, B. The algorithm of stereo vision and shape from shading based on endoscope imaging. Biomed. Signal Process. Control 2022, 76, 103658.

35. Qin, X.; Ban, Y.; Wu, P.; Yang, B.; Liu, S.; Yin, L.; Liu, M.; Zheng, W. Improved Image Fusion Method Based on Sparse Decomposition. Electronics 2022, 11, 2321.

36. Liu, R.; Wang, X.; Wu, Q.; Dai, L.; Fang, X.; Yan, T.; Son, J.; Tang, S.; Li, J.; Gao, Z.; et al. DeepDRiD: Diabetic Retinopathy—Grading and Image Quality Estimation Challenge. Patterns 2022, 3, 100512.

37. Mohanarathinam, A.; Manikandababu, C.S.; Prakash, N.B.; Hemalakshmi, G.R.; Subramaniam, K. Diabetic Retinopathy Detection and Classification using Hybrid Multiclass SVM classifier and Deeplearning techniques. Math. Stat. Eng. Appl. 2022, 71, 891–903.

| Diseases | Classes | Numbers |
|---|---|---|
| Retinopathy grade | 0 | 546 |
| Retinopathy grade | 1 | 153 |
| Retinopathy grade | 2 | 247 |
| Retinopathy grade | 3 | 254 |
| Macular Edema | 0 | 947 |
| Macular Edema | 1 | 75 |
| Macular Edema | 2 | 151 |
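The class counts above are uneven: grade 0 accounts for 546 of the 1200 retinopathy labels, while macular edema class 1 has only 75 of 1173. As a rough illustration, the Python sketch below computes each class's share and an inverse-frequency weight. The function names `class_shares` and `balanced_weights` and the weighting formula `total / (n_classes * count)` are assumptions made for this example; the source does not state that class weighting was applied.

```python
# Counts copied from the table above.
retinopathy_counts = {0: 546, 1: 153, 2: 247, 3: 254}
macular_edema_counts = {0: 947, 1: 75, 2: 151}


def class_shares(counts):
    """Return the fraction of all samples that falls in each class."""
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def balanced_weights(counts):
    """Inverse-frequency weights: total / (n_classes * count).

    This is an illustrative heuristic (assumption), not a step
    described in the source. Rare classes receive weights above 1.
    """
    total = sum(counts.values())
    n_classes = len(counts)
    return {label: total / (n_classes * n) for label, n in counts.items()}


if __name__ == "__main__":
    for name, counts in [("Retinopathy grade", retinopathy_counts),
                         ("Macular Edema", macular_edema_counts)]:
        shares = class_shares(counts)
        weights = balanced_weights(counts)
        for label in counts:
            print(f"{name} class {label}: "
                  f"share={shares[label]:.3f}, weight={weights[label]:.3f}")
```

A weight well above 1 flags a class that is underrepresented relative to a uniform split, which is one way to quantify the imbalance that augmentation or reweighting would need to address.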

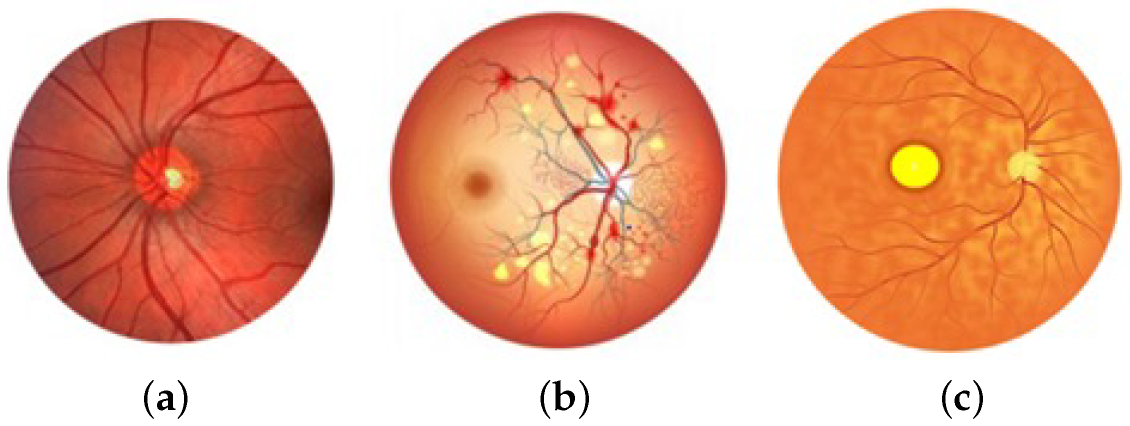

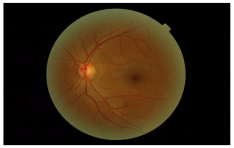

| Class | Grade | Sample Image |
|---|---|---|
| Healthy | 0 | |
| | 1 | |
| Retinopathy grade | 2 | |
| | 3 | |

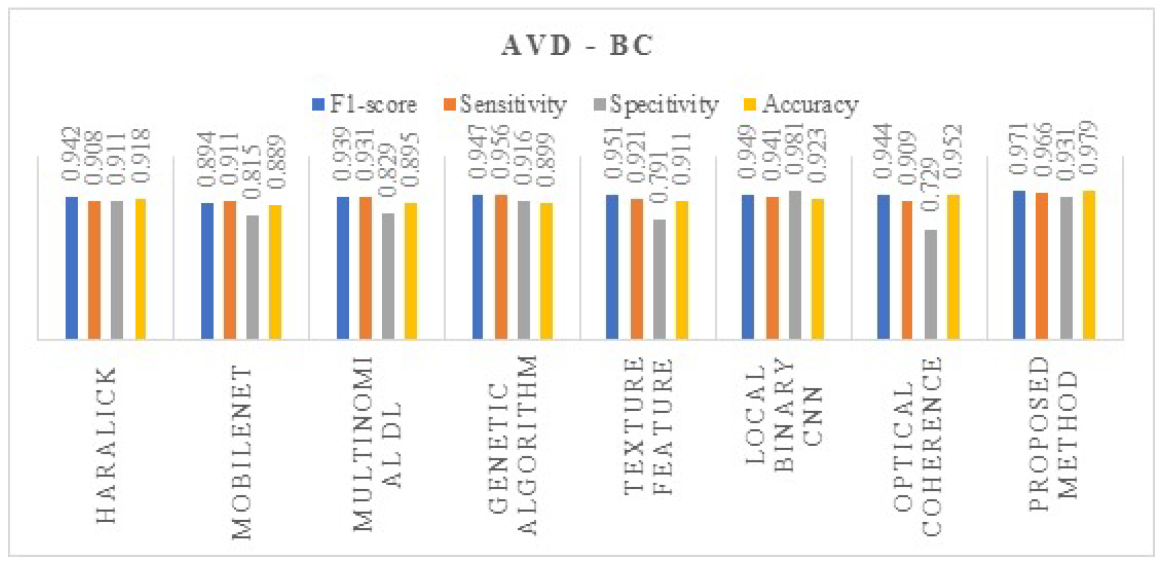

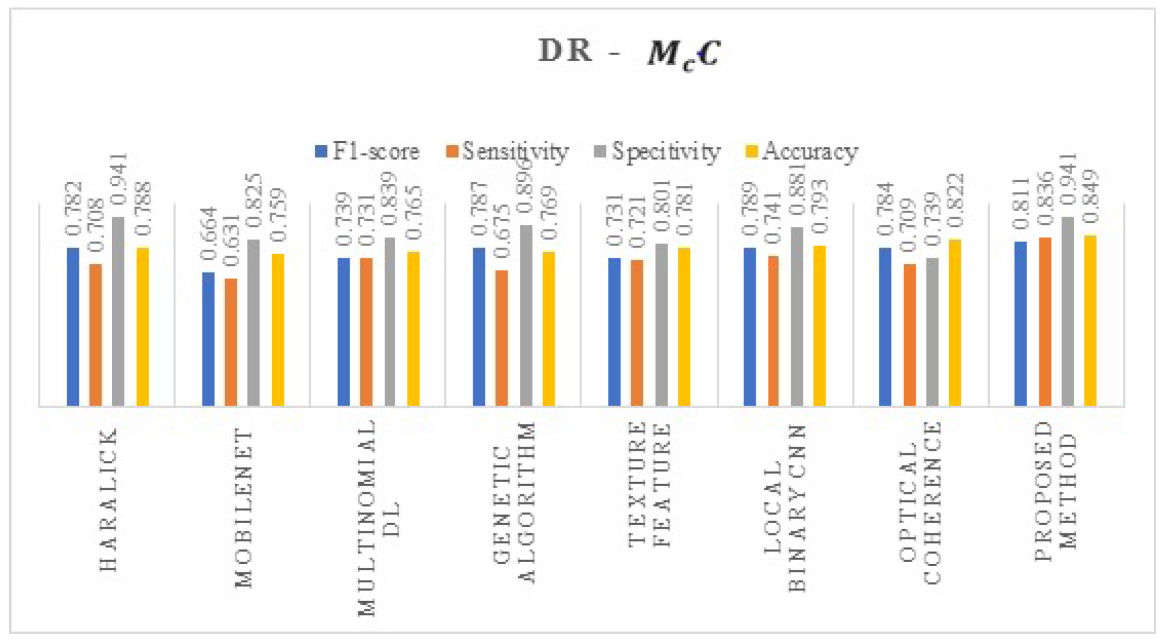

| Techniques | Dataset |
|---|---|
| Haralick [6] | DIARETDB0 |
| MobileNet [10] | Aptos |
| Multinomial DL [11] | ImageNet |
| Genetic Algorithm [9] | Kaggle |
| Texture Feature [8] | Aptos 2019 |
| Local Binary CNN [12] | Aptos 2021 |
| Optical coherence [13] | OCTA 500 |
| Proposed method | DeepDRiD |

| Images | Techniques | F1-Score | Sensitivity | Specificity | Accuracy |

|---|---|---|---|---|---|

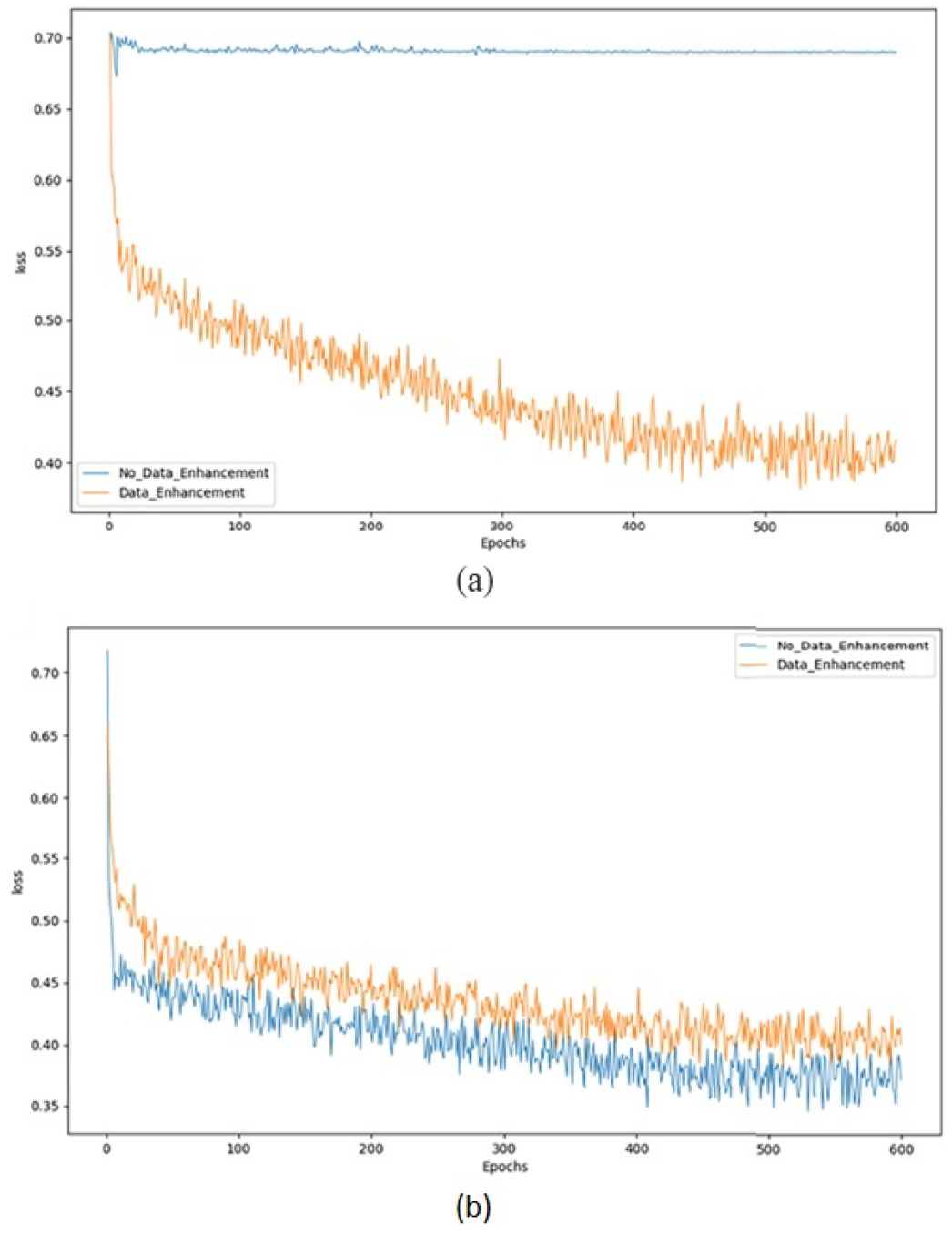

| DR | Without Augmentation | 0.694 | 0.58 | 0.49 | 0.61 |

| With Augmentation | 0.921 | 0.936 | 0.891 | 0.939 | |

| AVD | Without Augmentation | 0.841 | 0.712 | 0.918 | 0.946 |

| With Augmentation | 0.971 | 0.966 | 0.931 | 0.979 |
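The four metric columns in the table above are standard confusion-matrix quantities. The sketch below shows how they are conventionally computed for a binary case. The function name `binary_metrics` and the example counts are hypothetical, and the source does not state how the authors aggregated these metrics across grades, so this is a definition aid rather than a reproduction of their evaluation.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute common metrics from binary confusion-matrix counts.

    tp/fp/tn/fn are true positives, false positives, true negatives,
    and false negatives. Ratios with an empty denominator return 0.0.
    """
    def ratio(num, den):
        return num / den if den else 0.0

    sensitivity = ratio(tp, tp + fn)        # recall / true positive rate
    specificity = ratio(tn, tn + fp)        # true negative rate
    precision = ratio(tp, tp + fp)
    accuracy = ratio(tp + tn, tp + fp + tn + fn)
    # F1 is the harmonic mean of precision and sensitivity.
    f1 = ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "f1": f1,
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
    }


if __name__ == "__main__":
    # Hypothetical counts, chosen only to exercise the formulas.
    for name, value in binary_metrics(tp=90, fp=10, tn=80, fn=20).items():
        print(f"{name}: {value:.3f}")
```

Because F1 depends on precision rather than specificity, it can move independently of the other three columns when the class balance changes.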

| Images | Techniques | F1-Score | Sensitivity | Specificity | Accuracy |
|---|---|---|---|---|---|
| DR | Transfer learning | 0.829 | 0.78 | 0.69 | 0.751 |
| AVD | Transfer learning | 0.794 | 0.76 | 0.64 | 0.675 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Srinivasan, S.; Nagarnaidu Rajaperumal, R.; Mathivanan, S.K.; Jayagopal, P.; Krishnamoorthy, S.; Kardy, S. RETRACTED: Detection and Grade Classification of Diabetic Retinopathy and Adult Vitelliform Macular Dystrophy Based on Ophthalmoscopy Images. Electronics 2023, 12, 862. https://doi.org/10.3390/electronics12040862
