5.1. Datasets

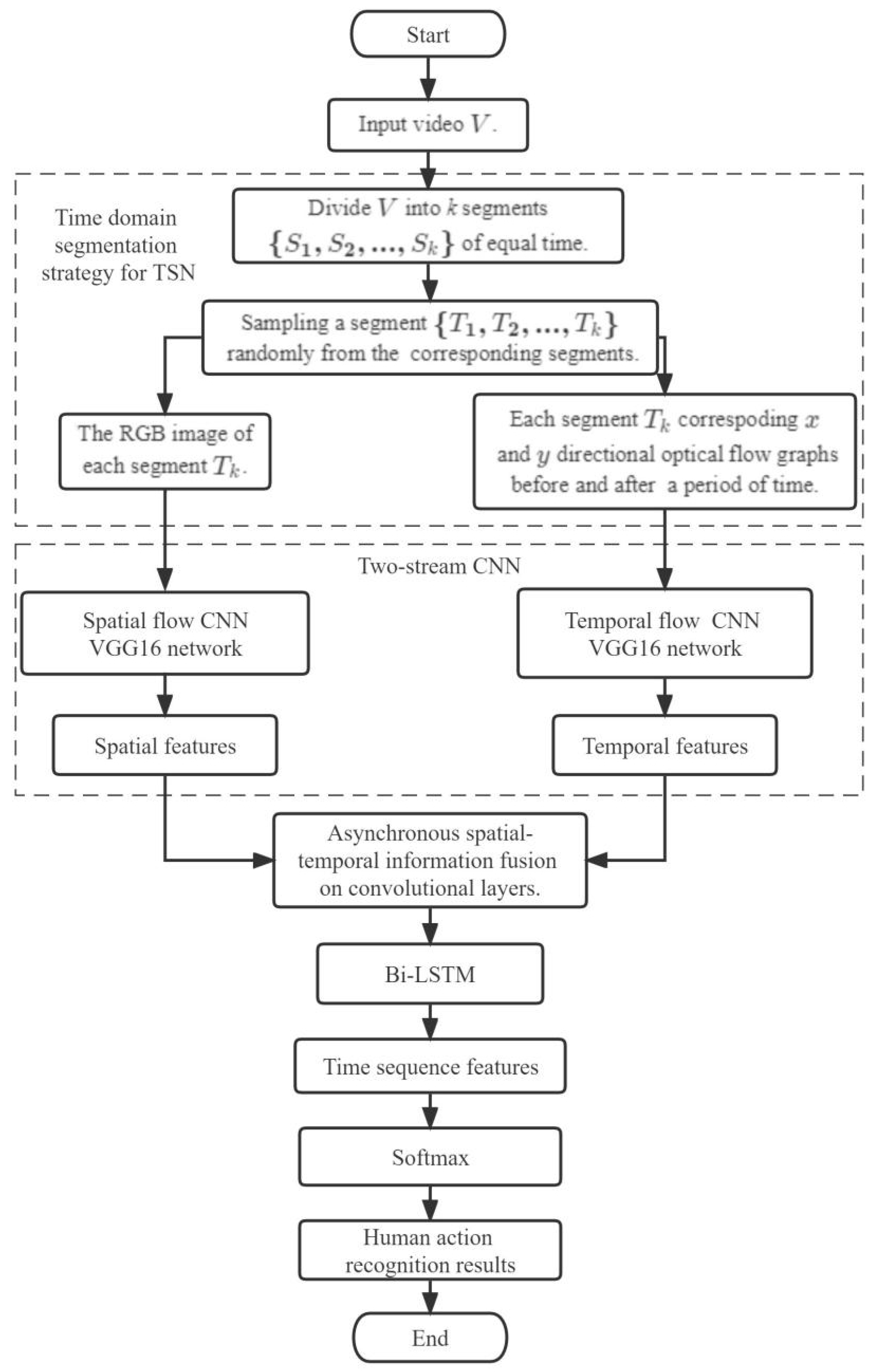

This paper studies action recognition over whole emergency rescue video sequences, that is, each video as a whole is classified into an emergency rescue action. The annotation file therefore takes the form (video, action), where action is the action specified for each video. The experiments use an improved self-built emergency rescue dataset and the publicly available UCF101 dataset.

5.1.1. An Improved Emergency Rescue Dataset

A survey of the available literature shows that few action datasets cover emergency rescue scenes. The existing datasets for spatial-temporal action recognition usually provide sparse annotations for composite actions in brief video clips. The emergency rescue dataset used in the experiments is an improved version of the authors' earlier dataset [4].

The video dataset of spatiotemporally localized Atomic Visual Actions (AVA) densely annotates 80 atomic visual actions in 430 15-min video clips, where actions are localized in space and time, resulting in 1.58M action labels with multiple labels per person occurring frequently. The AVA dataset defines atomic visual actions and uses movies to gather a varied set of action representations. This departs from existing datasets for spatial-temporal action recognition, which typically provide sparse annotations for composite actions in short video clips. AVA, with its realistic scene and action complexity, exposes the intrinsic difficulty of action recognition. Since the scenes of dynamic emergency rescue contain many people and multiple actions, the self-built dataset of reference [4] is built with reference to AVA.

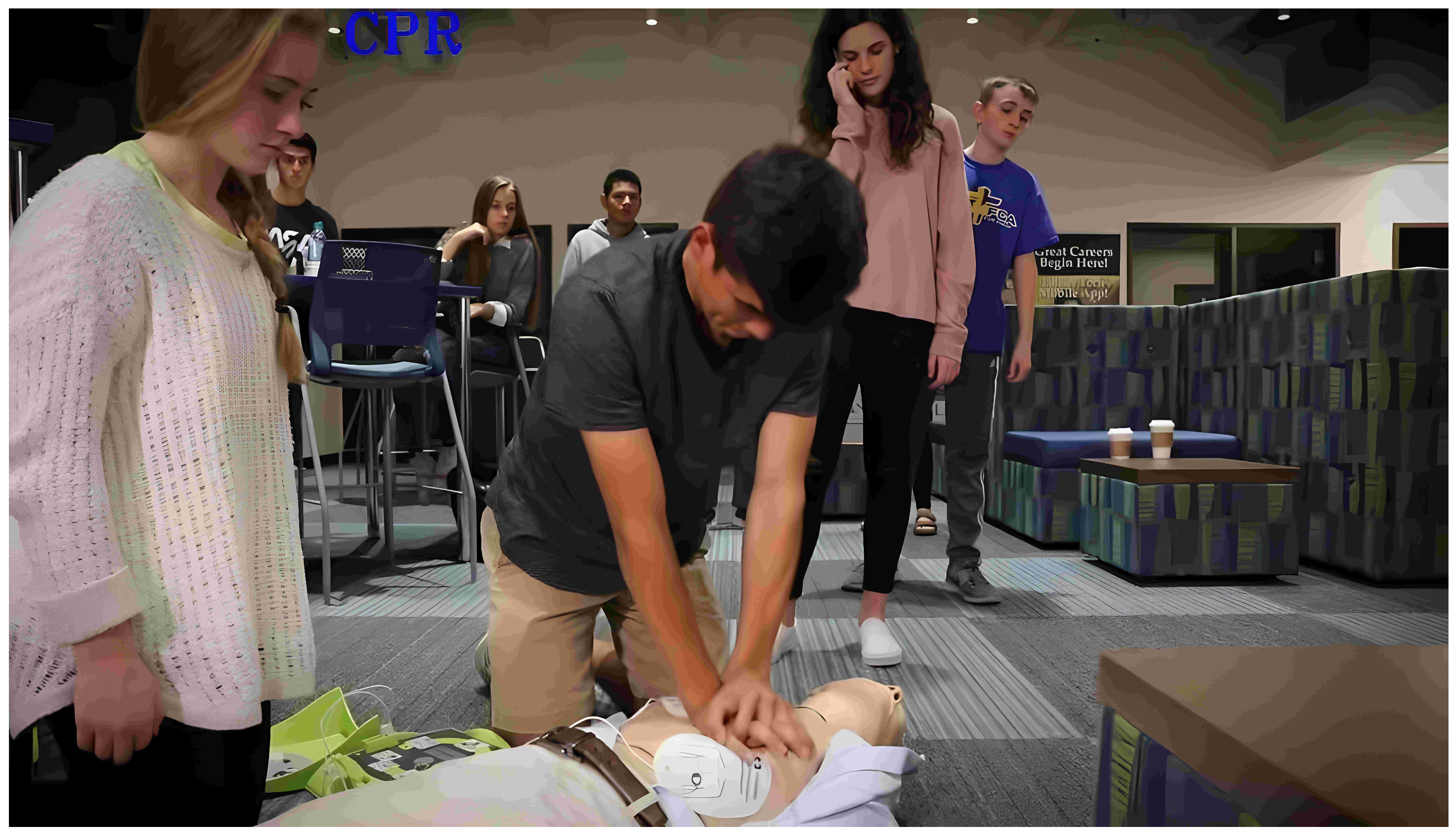

The dataset divides the actions in dynamic emergency rescue scenes into daily actions and medical rescue actions. The 12 categories are carrying, cardio-pulmonary resuscitation (CPR), bandage, infusion, injection, oxygen supply, standing, walking, running, lying, sitting, and crouching/kneeling.

We collected videos of emergency rescue scenes from video websites such as YouTube, Tencent Video, and Bilibili, and trimmed them with FFmpeg to obtain the segments related to emergency rescue operations, giving a total of 700 video segments. In addition to the videos collected in reference [4], the daily actions also use some segments of the public KTH dataset [29]. To improve the recognition accuracy for small targets in wide-area scenes, some small-target data are also added to the dataset. Some examples from the dataset are shown in Figure 9.

A bounding box is used to locate each person and his or her actions. For each video, keyframes are extracted and human-centered annotation is performed. In each keyframe, each person is labeled using the paper's preset action vocabulary, and a person may have multiple action labels.

To improve annotation efficiency, Faster R-CNN is used to detect the positions of people, and the VIA software is used to annotate actions and people. During action annotation, all incorrect bounding boxes are deleted and missing bounding boxes are added to ensure high accuracy. During labeling, each video clip is annotated by three independent annotators to make the dataset as accurate as possible.
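As an illustration of the human-centered annotation described above, the following is a minimal sketch of one annotation record. The class name, field names, and the normalized `(x1, y1, x2, y2)` box convention are assumptions made for this example and are not taken from the paper's annotation files; only the label vocabulary comes from the text.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Label vocabulary copied from the 12 categories listed in the text.
ACTIONS = (
    "carrying", "CPR", "bandage", "infusion", "injection", "oxygen supply",
    "standing", "walking", "running", "lying", "sitting", "crouching/kneeling",
)


@dataclass
class PersonAnnotation:
    """One person box in one keyframe, carrying one or more action labels.

    Hypothetical record layout: the box is assumed to be normalized to [0, 1].
    """
    video_id: str
    keyframe_sec: float
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2)
    actions: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        x1, y1, x2, y2 = self.box
        if not (0.0 <= x1 < x2 <= 1.0 and 0.0 <= y1 < y2 <= 1.0):
            raise ValueError(f"invalid box: {self.box}")
        unknown = [a for a in self.actions if a not in ACTIONS]
        if unknown:
            raise ValueError(f"unknown action labels: {unknown}")


# A rescuer kneeling while performing CPR: one box, two labels.
ann = PersonAnnotation(
    "clip_0001", 12.0, (0.10, 0.20, 0.45, 0.90), ["crouching/kneeling", "CPR"]
)
```

Validating labels against a fixed vocabulary at construction time is one simple way to catch typing errors when several annotators label the same clip.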

Because all actions of all people are marked in all keyframes, most person bounding boxes carry multiple labels, which naturally leads to class imbalance between action categories. Medical actions are less frequent than daily actions. Following the AVA dataset, this paper runs the recognition model on the naturally imbalanced data instead of constructing a manually balanced dataset. The frequency distribution of the action categories annotated in the self-built dataset is shown in Figure 10.
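A frequency distribution of this kind can be obtained by counting labels over all annotated boxes. The sketch below assumes each box is represented simply as a list of label strings; the sample data are invented for illustration and are not the dataset's statistics.

```python
from collections import Counter
from typing import Dict, Iterable, List


def action_frequencies(labels_per_box: Iterable[List[str]]) -> Dict[str, int]:
    """Count how often each action label occurs across all person boxes.

    A box with several labels contributes one count to each of them.
    The result is ordered from most to least frequent.
    """
    counts: Counter = Counter()
    for labels in labels_per_box:
        counts.update(labels)
    return dict(counts.most_common())


# Toy annotations (illustrative only).
boxes = [
    ["standing"],
    ["crouching/kneeling", "CPR"],
    ["standing", "infusion"],
    ["walking"],
]
freq = action_frequencies(boxes)
```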

The number of manually annotated samples is small. To prevent overfitting in the network and increase sample diversity, this paper collects additional action sequence videos as emergency rescue human action data for annotation and then expands the data through data augmentation.

Data augmentation helps mitigate sample class imbalance and prevents overfitting in neural networks. Preprocessing includes short-edge resizing and other standard operations. During model training, the images are augmented by crop sampling, translation, and random flipping.
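The operations named above can be sketched with NumPy as follows. This is an illustrative sketch, not the paper's pipeline: the target sizes (256 and 224), the flip probability, and the nearest-neighbor resampling are assumptions, and all function names are hypothetical.

```python
import numpy as np


def short_edge_size(height, width, short_edge):
    """Return (new_h, new_w) so the shorter side equals short_edge."""
    scale = short_edge / min(height, width)
    return round(height * scale), round(width * scale)


def resize_short_edge(frame, short_edge):
    """Nearest-neighbor resize of an (H, W, C) frame, keeping aspect ratio."""
    h, w = frame.shape[:2]
    new_h, new_w = short_edge_size(h, w, short_edge)
    rows = np.arange(new_h) * h // new_h
    cols = np.arange(new_w) * w // new_w
    return frame[rows][:, cols]


def random_crop(frame, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a uniformly random position."""
    h, w = frame.shape[:2]
    top = rng.integers(0, h - crop_h + 1)
    left = rng.integers(0, w - crop_w + 1)
    return frame[top:top + crop_h, left:left + crop_w]


def translate(frame, dy, dx):
    """Shift the frame by (dy, dx) pixels, filling uncovered pixels with 0."""
    out = np.zeros_like(frame)
    h, w = frame.shape[:2]
    if abs(dy) >= h or abs(dx) >= w:
        return out  # shifted completely out of view
    src = frame[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0],
        max(0, dx):max(0, dx) + src.shape[1]] = src
    return out


def random_flip(frame, rng, p=0.5):
    """Flip the frame horizontally with probability p."""
    return frame[:, ::-1] if rng.random() < p else frame


rng = np.random.default_rng(0)
frame = np.zeros((240, 320, 3), dtype=np.uint8)
augmented = random_flip(
    random_crop(resize_short_edge(frame, 256), 224, 224, rng), rng
)
```

For optical flow inputs, a horizontal flip would normally also require negating the horizontal flow component; that detail is omitted here because the text only describes image augmentation.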

The dataset has a great influence on the experimental results. To ensure the accuracy of the dataset, the model is trained on both the self-built dataset and UCF101, and the model with the best result is chosen to further test and adjust the dataset.

5.1.2. UCF101 Dataset

The mainstream action recognition dataset UCF101 has 13,320 videos from 101 action categories. The action categories include human–object interaction, human–human interaction, playing musical instruments, body-motion only, and sports. Since most of the available action recognition datasets are unrealistic and staged by actors, UCF101 aims to encourage further research on action recognition by learning and exploring new realistic action categories. The database consists of realistic user-uploaded videos containing camera motion and cluttered backgrounds. With its large number of classes and unconstrained clips, UCF101 is a challenging benchmark for action recognition.

5.3. Analysis of the Experiment Results

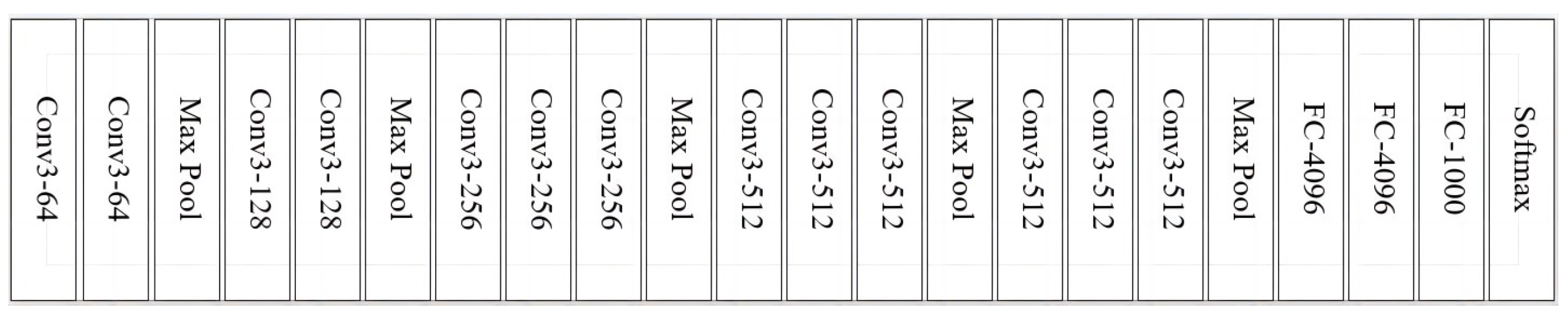

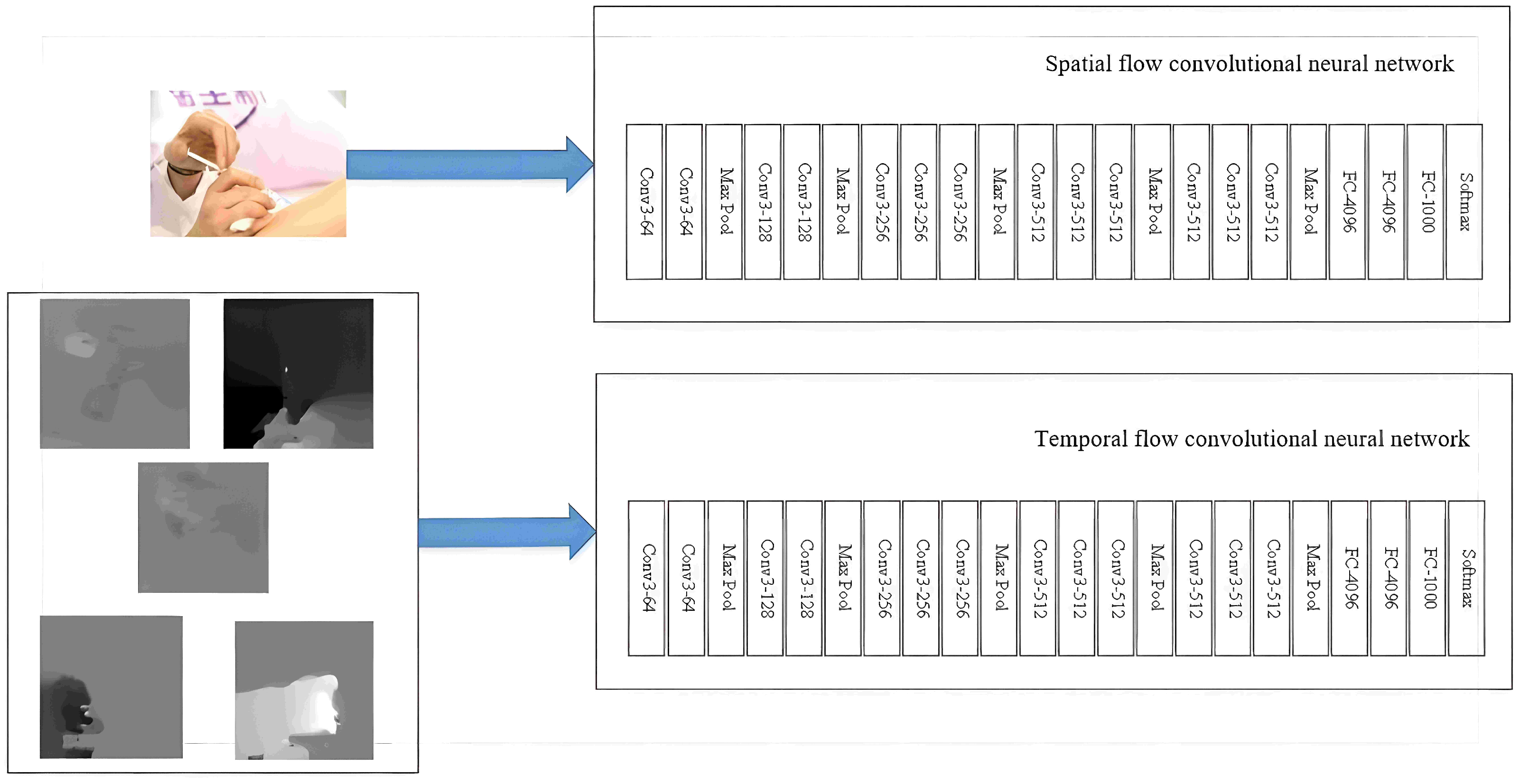

To isolate the effectiveness of the spatial-temporal asynchronous fusion network, the VGG16 network structure is adopted in the fusion network.

(1) Analysis of experiment results of spatial-temporal asynchronous information

The model takes as input a stack of superimposed optical flows of successive frames, whose length is set by the parameter k, including synchronous and asynchronous information on spatial and motion sequences. The effect of different values of k on the model is studied, and the results are shown in Table 4.

The result is best when k = 10, that is, with a superimposed optical flow field from (t − 20) to (t + 20) covering 40 consecutive frames. When k is 15 or 20, too much confusing information is included, which tends to decrease the recognition accuracy.

When training the spatial-temporal fusion network, the input of the image recognition network is a static image frame at time t. The input of the optical flow network is the superimposed optical flow fields from (t − 20) to (t + 20), centered on time t, forming a 2 × 40 = 80 channel optical flow block, which is cut into 20-channel superimposed optical flow blocks by a sliding window with a step size of 5. Because of hardware memory limits, the batch size used for network training is 8, which is equivalent to randomly sampling 8 static image frames in each training iteration together with the optical flow fields of the 20 frames before and after each frame.
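The construction of the 80-channel optical flow block and its division into 20-channel blocks can be sketched as follows. Two points are assumptions made for this example, since the text does not specify them: the horizontal and vertical flow components are interleaved along the channel axis, and the step size of 5 is counted in frames (10 channels). The function names are hypothetical.

```python
import numpy as np


def stack_flow(flow_x, flow_y):
    """Interleave x and y flow fields: two (T, H, W) arrays -> (2T, H, W)."""
    t, h, w = flow_x.shape
    stacked = np.empty((2 * t, h, w), dtype=flow_x.dtype)
    stacked[0::2] = flow_x  # even channels: horizontal component
    stacked[1::2] = flow_y  # odd channels: vertical component
    return stacked


def sliding_blocks(stack, window_frames=10, step_frames=5):
    """Cut a (2T, H, W) stack into blocks of 2 * window_frames channels."""
    n_frames = stack.shape[0] // 2
    blocks = []
    for start in range(0, n_frames - window_frames + 1, step_frames):
        blocks.append(stack[2 * start:2 * (start + window_frames)])
    return blocks


# 40 flow frames around time t, each with an x and a y component.
flow_x = np.zeros((40, 8, 8), dtype=np.float32)
flow_y = np.ones((40, 8, 8), dtype=np.float32)
stack = stack_flow(flow_x, flow_y)   # 2 x 40 = 80 channels
blocks = sliding_blocks(stack)       # 20-channel blocks
```

Under these assumptions the 80-channel stack yields 7 overlapping blocks; if the step were instead counted in channels, the number of blocks would differ.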

Because actions are strongly asynchronous in space and time, this paper integrates the asynchronous information of spatial and temporal features to improve accuracy. The effects of the spatial-temporal information on the recognition accuracy are shown in Table 5.

(2) Analysis of the experiment results of the fusion methods

In this paper, spatial-temporal features are fused in a convolutional layer inside the two-stream fusion VGG16 model. In the experiments, the spatial-temporal feature fusion is performed at the convolutional layer Conv3 of the two-stream structure. The paper compares the separate spatial-stream network, the separate temporal-stream network, and the two-stream networks. The action recognition accuracy of the different fusion methods is shown in Table 6.

As can be seen from Table 6, fusing the spatial-temporal asynchronous information at the convolutional layer Conv3 gives the best result. Unlike fusion at the fully connected layer, which loses information, fusion at the convolutional layer better retains the mid-level temporal and spatial information and achieves higher accuracy.

(3) Experiment comparison analysis of the action recognition for the spatial-temporal fusion CNN

To verify the effectiveness of the spatial-temporal fusion CNN in action recognition, the proposed method is compared with TSN, the two-stream network, and the two-stream network + Bi-LSTM, as shown in Table 7. It can be seen that the proposed method improves recognition accuracy.

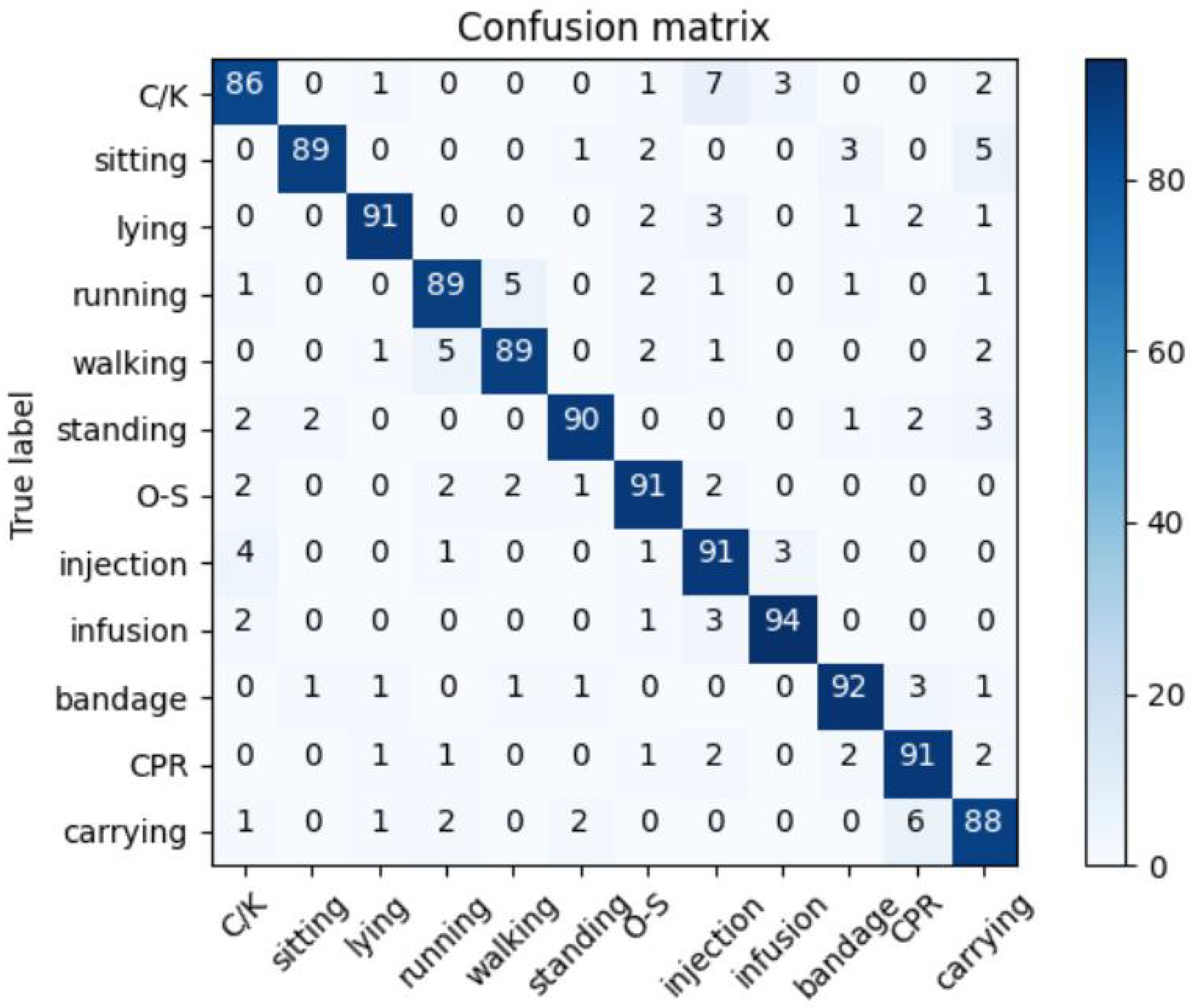

To present the model performance more intuitively, a confusion matrix is used to show the degree of confusion between the predicted and actual categories, as shown in Figure 11.

In Figure 11, C/K represents crouching/kneeling, and O-S indicates oxygen supply. As can be seen from the confusion matrix in Figure 11, among the confusable actions, carrying has a 7% probability of being identified as walking, because carrying overlaps to some extent with walking and running; other actions behave similarly. CPR has a 6% probability of being identified as C/K, since CPR in emergency rescue situations is mostly performed in a kneeling position. Injection and infusion can be confused with each other: 5% of injections are predicted as infusions and vice versa. Other actions are misclassified with a small probability. Among daily actions, walking has a 3% probability of being recognized as running, a 1% probability of being identified as standing, and a 4% probability of being recognized as carrying, because walking is easily misclassified as carrying when several people gather in one place. C/K has a 6% probability of being identified as sitting. These are the easily confused daily actions and medical rescue actions. Some misclassifications of easily confused actions remain, but they are reduced to some extent, and the model distinguishes confusable actions better.
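The percentages read from a confusion matrix of this kind are row-normalized counts: each row corresponds to an actual category and each entry gives the fraction of that category's samples predicted as the column category. A minimal sketch follows; the labels and predictions are toy values chosen for illustration and are not the paper's results.

```python
from typing import List, Sequence


def confusion_matrix(
    actual: Sequence[str], predicted: Sequence[str], classes: Sequence[str]
) -> List[List[int]]:
    """counts[i][j] = number of samples of class i predicted as class j."""
    index = {c: i for i, c in enumerate(classes)}
    counts = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        counts[index[a]][index[p]] += 1
    return counts


def row_normalize(counts: List[List[int]]) -> List[List[float]]:
    """Convert each row of counts into fractions that sum to 1."""
    rows = []
    for row in counts:
        total = sum(row)
        rows.append([c / total if total else 0.0 for c in row])
    return rows


# Toy example with two categories.
classes = ["carrying", "walking"]
actual = ["carrying"] * 4 + ["walking"] * 2
predicted = ["carrying", "carrying", "carrying", "walking", "walking", "walking"]
counts = confusion_matrix(actual, predicted, classes)
rates = row_normalize(counts)
```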

The recognition results are visualized in Figure 12, Figure 13 and Figure 14. In Figure 12, the optical flow captures the dynamic action sequence information of dressing a wound. Although the injured man is sitting and relatively still, the action sequences of the ambulanceman capture the bandaging, so the recognition result is bandage. Figure 13 shows the recognition results when the persons in the video are all carrying.

For more complex backgrounds, when the persons in the video perform different actions, the recognition result is the action with the highest probability among all actions, i.e., the most important action being performed. Figure 14 gives the recognition results when the people in the video perform different actions, namely standing, C/K, and CPR. The dynamic action of the main person is CPR and the recognition result is CPR, which means that the main action performed in the video is CPR.
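Selecting the action with the highest probability can be sketched as below. Averaging the class scores over several segments before taking the maximum is an assumption borrowed from common segment-based practice and is not stated in the text; the scores are invented for illustration.

```python
from typing import Dict, List, Tuple


def average_scores(segment_scores: List[Dict[str, float]]) -> Dict[str, float]:
    """Average per-class scores over the segments of one video."""
    n = len(segment_scores)
    labels = segment_scores[0].keys()
    return {label: sum(s[label] for s in segment_scores) / n for label in labels}


def predict_video_action(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the label with the highest score and that score."""
    label = max(scores, key=scores.get)
    return label, scores[label]


# Hypothetical scores for a clip containing standing, C/K, and CPR.
segments = [
    {"standing": 0.25, "C/K": 0.25, "CPR": 0.5},
    {"standing": 0.125, "C/K": 0.125, "CPR": 0.75},
]
video_scores = average_scores(segments)
result = predict_video_action(video_scores)
```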

Moreover, to verify the effectiveness of the proposed spatial-temporal fusion model, experiments are also conducted on the mainstream dataset UCF101 to compare the proposed method with the classical and advanced methods.

This paper compares single-stream CNN methods and various improved methods based on the two-stream CNN, including the C3D-based algorithm, the traditional recognition algorithm based on two-stream convolutional networks (Two-stream Convnet), the recognition algorithm based on Long-Term Recurrent Convolutional Networks (LRCN), the recognition algorithm fusing a two-stream network with LSTM (Two-stream + LSTM), the recognition algorithm fusing a two-stream network and LSTM at the convolutional layer (Two-stream + LSTM + ConvFusion), the improved human action recognition algorithm fusing Spatial Transformer Networks (STN) and CNN [31], and the two-stream 3D Convnet fusion action recognition algorithm [15]. The comparison results are shown in Table 8.

The comparison results show that the proposed spatial-temporal fusion method achieves the best recognition performance. It accurately recognizes the human actions in the videos, which verifies the effectiveness of the method.

In terms of speed, on the UCF101 public dataset, the time complexity of the proposed method is determined by TSN and Bi-LSTM, and it runs at 197.2 fps. The time complexity of C3D is determined by the convolutional layers, and it runs at 313 fps. The time complexity of the two-stream network is also determined by the convolutional layers, and it runs at 33.3 fps. The LRCN method has a simpler structure than the C3D network, has few parameters, is easy to train, and runs faster than the C3D network. The time complexity of reference [32] is determined by the two-stream network together with LSTM, and it runs at 29.7 fps. The time complexity of references [33], [31], and [15] is determined by the convolutional layers and LSTM. Reference [33] runs at two speeds: 6 fps when the input is an optical flow image and 30 fps when the input is an RGB frame. The time complexity of reference [31] is determined jointly by STN and CNN, and it runs at 37 fps. Reference [15] also runs at two speeds: 186.6 fps when the input is an RGB frame and 185.9 fps when the input is an optical flow image.

As can be seen from Table 8, the proposed method is slower than both C3D and LRCN, mainly because of the high time complexity introduced by Bi-LSTM. It is faster than the other methods. Overall, the proposed method has the highest recognition accuracy and a relatively fast speed.

In recent years, human action recognition methods have focused on deep learning. At present, the latest methods mainly include the TS-PVAN action recognition model based on attention mechanism [34], skeleton-based ST-GCN for human action recognition with extended skeleton graph and partitioning strategy [35], human action recognition based on 2D CNN and Transformer [36], linear dynamical system approach for human action recognition with two-stream deep features [37], and hybrid handcrafted and learned feature framework for human action recognition [38]. Comparative analysis with the latest methods is as follows.

(1) The attention-based TS-PVAN action recognition model extracts spatial features adequately and has some generalization ability, but its temporal network cannot efficiently model long-range temporal structure or extract rich long-term temporal information. This paper introduces Bi-LSTM to model long-term motion and fully mine the long-term temporal information.

(2) The human action recognition method that combines skeleton-based ST-GCN with an extended skeleton graph and partitioning strategy extracts the relationships between non-adjacent joints in human skeleton images, and its partition strategy divides the input graph of the Graph Convolutional Network (GCN) into five types of fixed-length tensors so as to include the maximum motion dependency. However, this method does not consider temporal features. The proposed method extracts temporal information using the temporal network of the two-stream network.

(3) 2D CNN is currently one of the mainstream methods for human action recognition. A 2D CNN-based framework is lightweight, offers fast inference, and operates on short segments sparsely sampled from whole videos. However, 2D CNN still suffers from insufficient representation of some action features and a lack of temporal modeling capability. The human action recognition method based on 2D CNN and Transformer adopts a 2D CNN architecture with a channel-spatial attention mechanism to extract spatial features within frames, uses a Transformer to extract complex temporal information between frames, and improves the recognition accuracy. However, the Transformer extracts spatial and temporal features sequentially, and as the number of frames increases, the number of parameters also increases substantially, which adds to the computational burden. This paper adopts a two-stream network structure to extract the spatial-temporal information, so the spatial and temporal features are extracted in parallel, and the TSN sparse sampling strategy is used to avoid the greater computational burden caused by an increase in the number of frames.

(4) The human action recognition method that combines a linear dynamical system approach with two-stream deep features captures the spatial-temporal features of human action using a two-stream structure. The method operates directly on video sequences, so the longer the video sequence is, the more time is consumed. The presented method adopts the temporal segmentation strategy of TSN to randomly sample snippets and speed up the operation.

(5) The human action recognition method based on a hybrid handcrafted and learned feature framework uses a two-dimensional wavelet transform to decompose video frames into separable frequency and directional components to extract motion information. The dense trajectory method is used to extract feature points and track them across consecutive frames. However, this method can only deal with videos with clear action boundaries, which is also a disadvantage of the proposed method.
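The TSN sparse sampling strategy referred to in items (3) and (4) divides a video into equal-length segments and randomly samples one snippet from each, so the cost per video does not grow with its length. The following is a minimal sketch of that idea; the function name, the default of 3 segments, and the snippet length are assumptions for illustration, not values taken from the paper.

```python
import random
from typing import List, Optional


def sample_snippet_indices(
    num_frames: int,
    num_segments: int = 3,
    snippet_len: int = 1,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Return the start frame of one random snippet per equal-length segment."""
    rng = rng or random.Random()
    seg_len = num_frames // num_segments
    if seg_len < snippet_len:
        raise ValueError("video too short for the requested segments")
    starts = []
    for s in range(num_segments):
        # randint is inclusive on both ends, so the snippet stays in segment s.
        offset = rng.randint(0, seg_len - snippet_len)
        starts.append(s * seg_len + offset)
    return starts


# A 300-frame video always yields 3 snippets, whatever its length.
indices = sample_snippet_indices(300, num_segments=3, rng=random.Random(0))
```

Because exactly one snippet is drawn per segment, a 300-frame and a 3000-frame video are both reduced to the same number of network inputs.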