Time Synchronization and Space Registration of Roadside LiDAR and Camera

Abstract

1. Introduction

2. Related Work

3. Data Collection and Methods

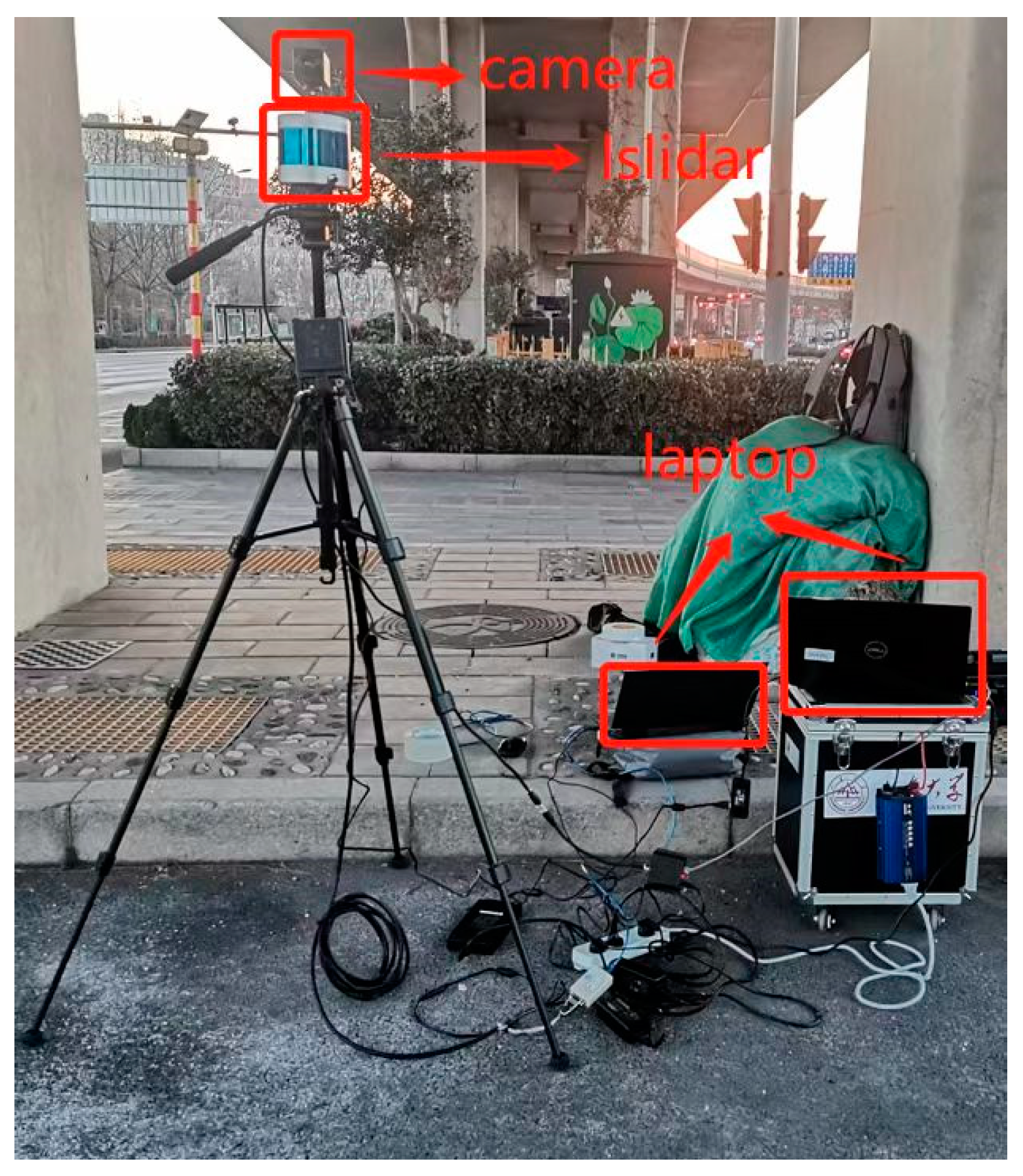

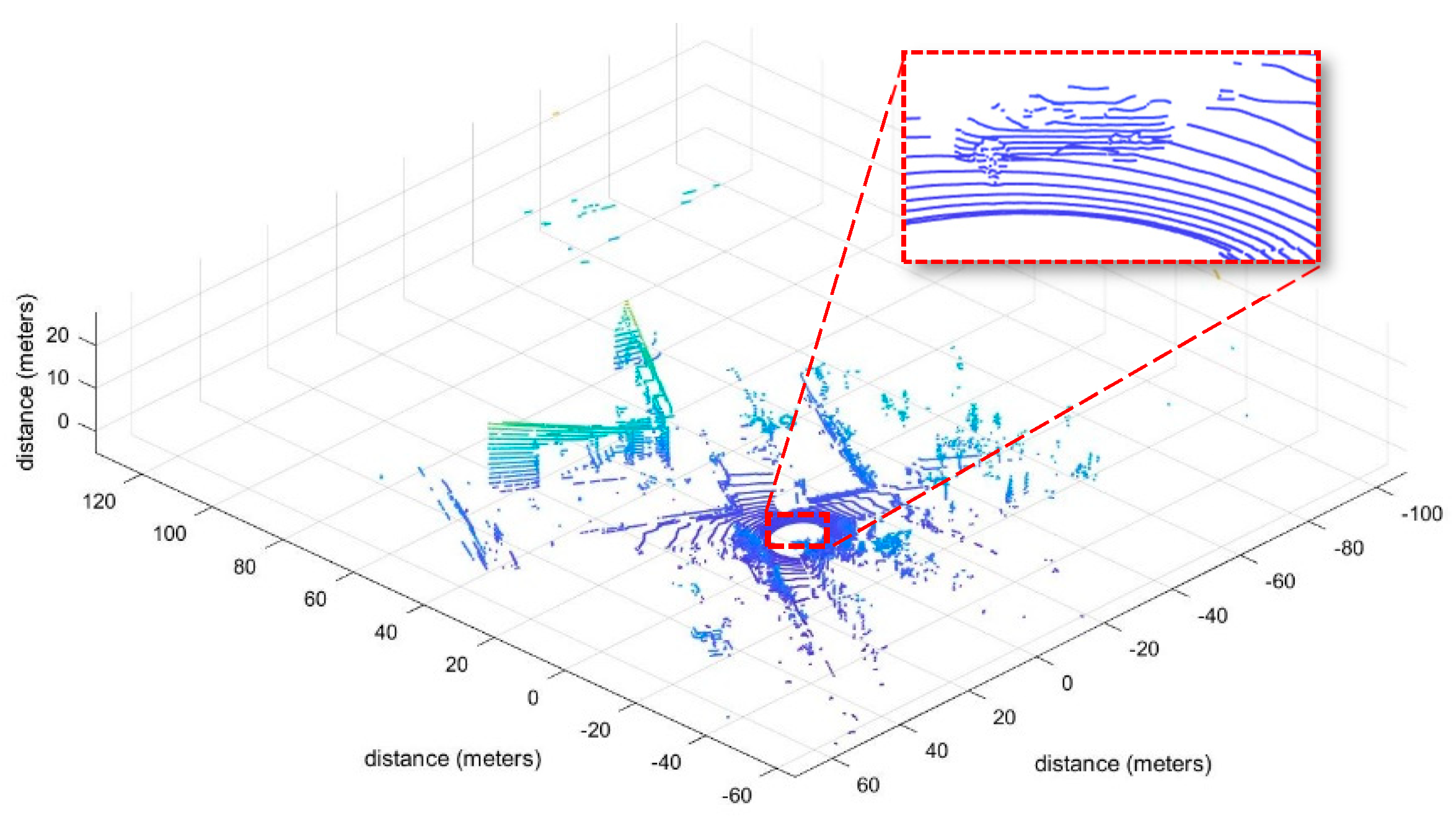

3.1. Data Collection

3.2. Time Synchronization

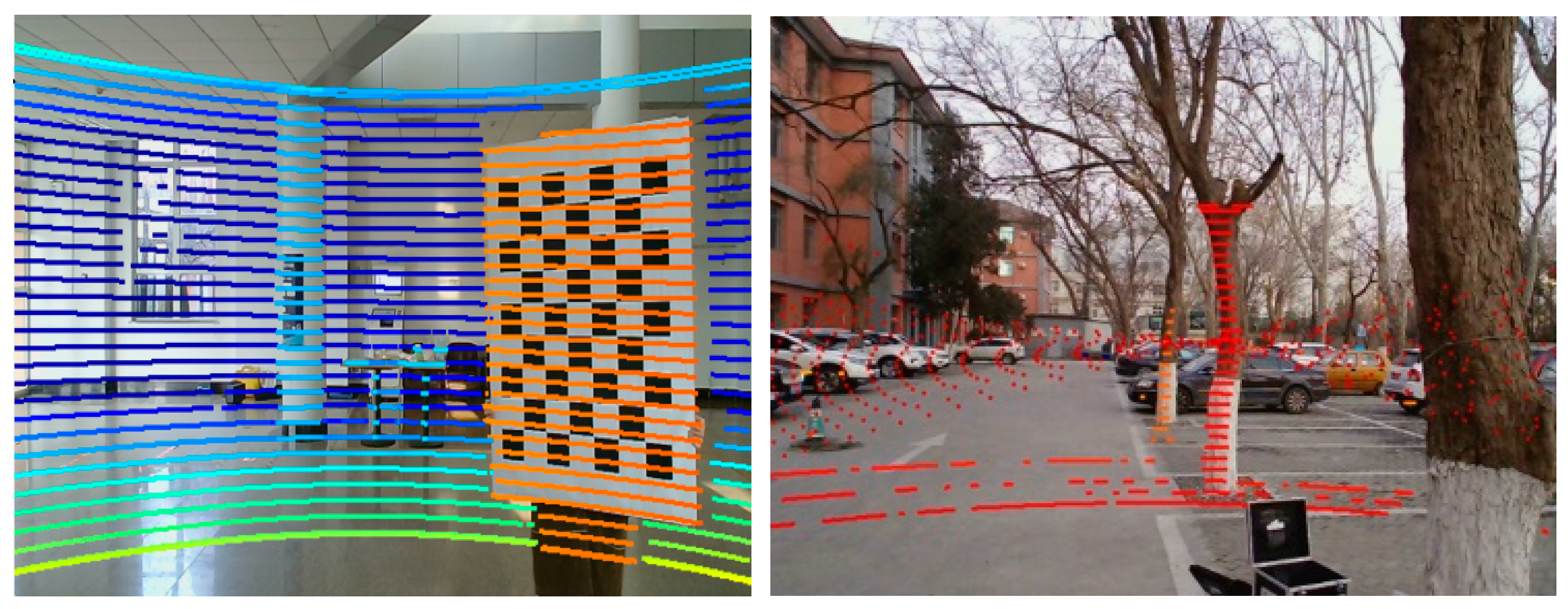

3.3. Space Registration

4. Experimental Analysis

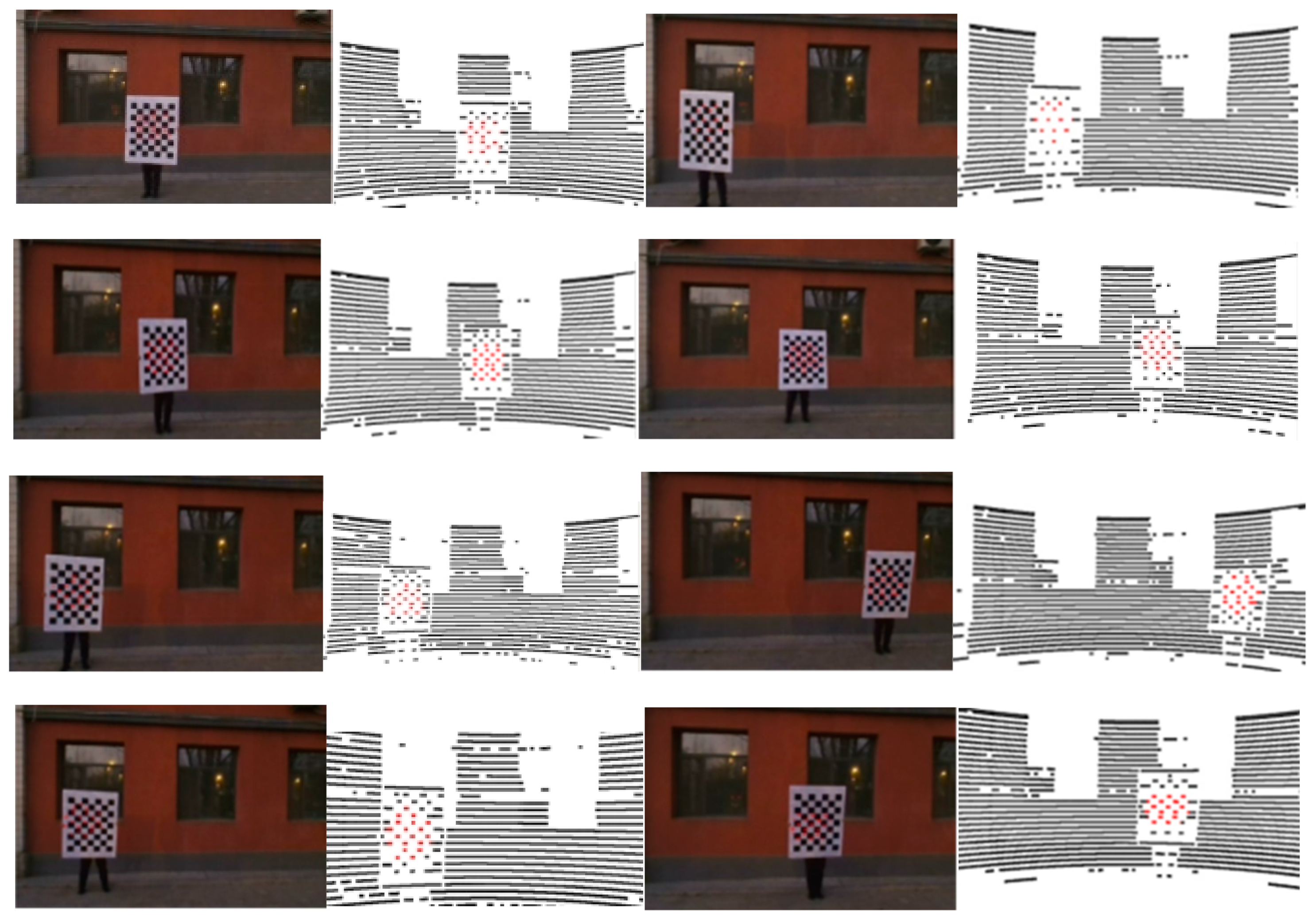

4.1. Time Synchronization Verification Method

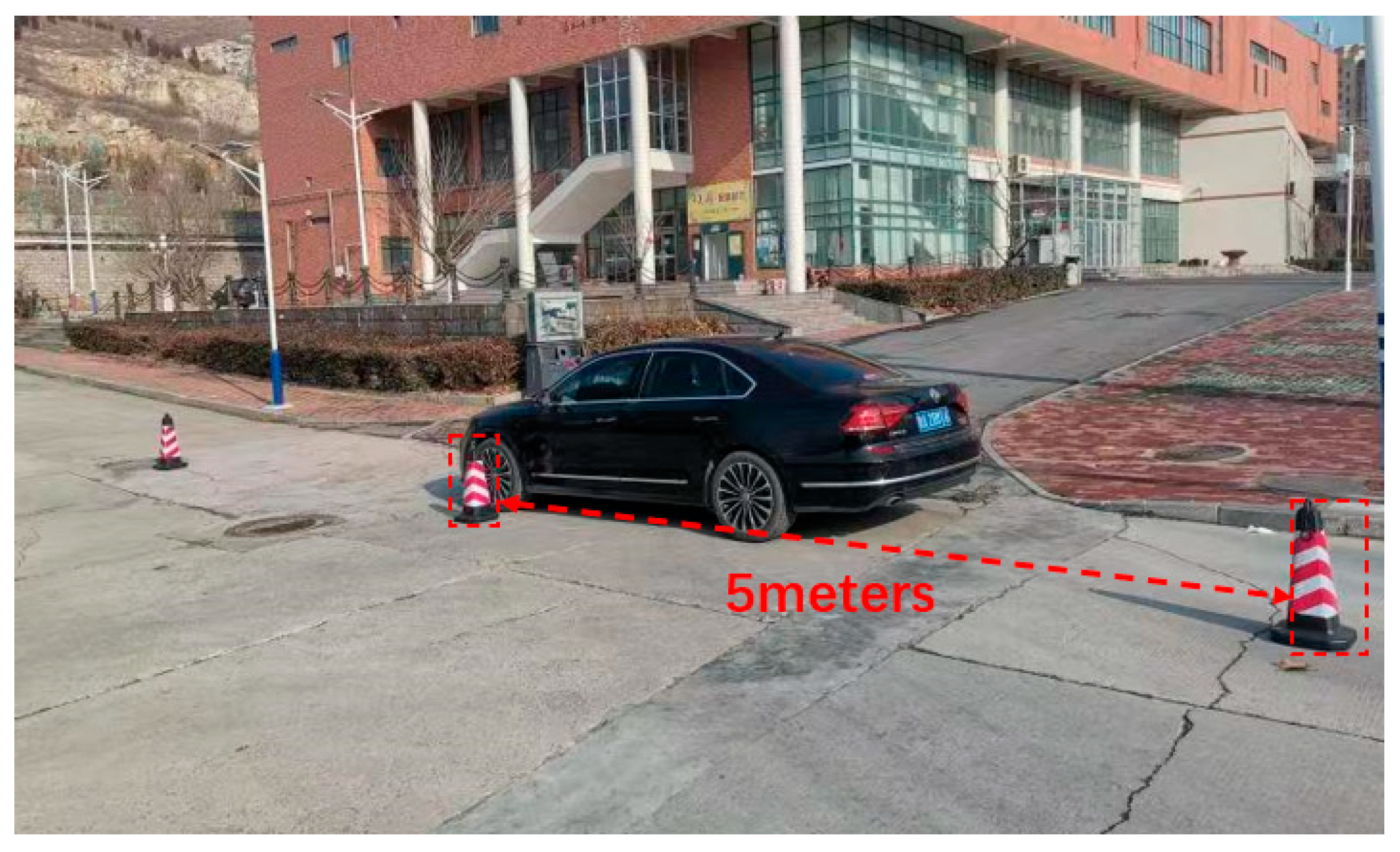

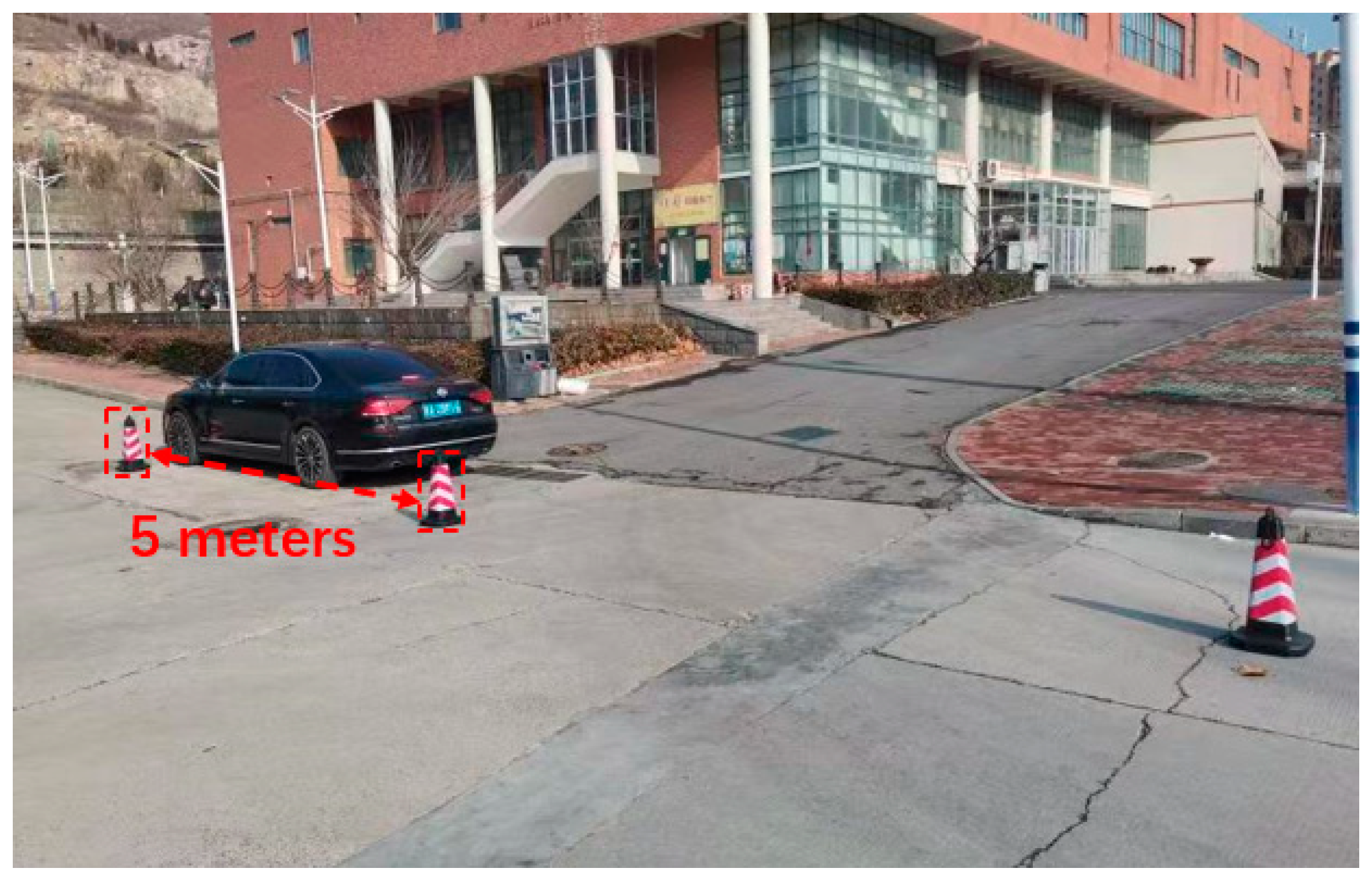

4.2. Space Registration Verification Method

5. Results and Discussions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Indicator | Value |
|---|---|
| Laser beams | 32 |
| Scan FOV | 40° × 360° |
| Vertical angle resolution | 0.33° |
| Rotation rate (r/min) | 300/600/1200 |
| Laser wavelength | 905 nm |
| Vertical field of view | −16°~+15° |
| Operating temperature | −20~60 °C |
| Single echo data rate | 650,000 points/s |
| Measuring range | 100 m~200 m |
| Communication interface | PPS/UDP |
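
As a rough illustration of what the LiDAR parameters above imply for time synchronization, the following minimal sketch converts each listed rotation rate into a revolution period and an approximate point count per revolution. It assumes that the single echo data rate stays constant at every rotation rate and that one full revolution corresponds to one point cloud frame; neither assumption is stated in the table, and the function name `frame_stats` is hypothetical.

```python
# Values copied from the LiDAR parameter table above.
ROTATION_RATES_RPM = (300, 600, 1200)
SINGLE_ECHO_POINTS_PER_S = 650_000


def frame_stats(rpm, points_per_s=SINGLE_ECHO_POINTS_PER_S):
    """Return (revolution period in ms, points per revolution).

    Assumes a constant point rate and one frame per revolution
    (illustrative assumptions, not taken from the source).
    """
    revs_per_s = rpm / 60.0
    period_ms = 1000.0 / revs_per_s
    points_per_rev = points_per_s / revs_per_s
    return period_ms, points_per_rev


for rpm in ROTATION_RATES_RPM:
    period_ms, points = frame_stats(rpm)
    print(f"{rpm:>5} r/min: {period_ms:6.1f} ms per revolution, "
          f"about {points:,.0f} points")
```

Under these assumptions, a faster rotation rate shortens the interval between frames while reducing the number of points in each frame.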

| Height (cm) | Horizontal Distance (cm) | Reprojection Error (pixel) |
|---|---|---|
| 10 | 50 | 0.159981 |
| 10 | 100 | 0.166632 |
| 10 | 150 | 0.263361 |
| 10 | 200 | 0.339633 |
| 20 | 50 | 0.169532 |
| 20 | 100 | 0.176923 |
| 20 | 150 | 0.294632 |
| 20 | 200 | 0.369654 |
| 30 | 50 | 0.219987 |
| 30 | 100 | 0.321463 |
| 30 | 150 | 0.322134 |
| 30 | 200 | 0.329786 |
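
The reprojection errors above can be summarized with a few lines of arithmetic. The sketch below is only an illustrative aggregation of the tabulated values (mean error per height, overall mean, and the worst case); the variable and function names are hypothetical, and it does not reproduce any computation described by the authors.

```python
from collections import defaultdict
from statistics import mean

# (height cm, horizontal distance cm, reprojection error px),
# copied from the table above.
MEASUREMENTS = [
    (10, 50, 0.159981), (10, 100, 0.166632), (10, 150, 0.263361), (10, 200, 0.339633),
    (20, 50, 0.169532), (20, 100, 0.176923), (20, 150, 0.294632), (20, 200, 0.369654),
    (30, 50, 0.219987), (30, 100, 0.321463), (30, 150, 0.322134), (30, 200, 0.329786),
]


def mean_error_by_height(rows):
    """Group the errors by height and return {height_cm: mean error in px}."""
    grouped = defaultdict(list)
    for height, _distance, error in rows:
        grouped[height].append(error)
    return {height: mean(errors) for height, errors in grouped.items()}


by_height = mean_error_by_height(MEASUREMENTS)
overall_mean = mean(error for _, _, error in MEASUREMENTS)
worst = max(MEASUREMENTS, key=lambda row: row[2])

for height, err in sorted(by_height.items()):
    print(f"height {height} cm: mean error {err:.4f} px")
print(f"overall mean: {overall_mean:.4f} px")
print(f"largest error: {worst[2]} px at height {worst[0]} cm, distance {worst[1]} cm")
```

In this table every entry stays below 0.37 pixel, and within each height the error does not decrease as the horizontal distance grows.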

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, C.; Liu, S.; Wang, X.; Lan, X. Time Synchronization and Space Registration of Roadside LiDAR and Camera. Electronics 2023, 12, 537. https://doi.org/10.3390/electronics12030537