Radar Signal Recognition Based on Bagging SVM

Abstract

1. Introduction

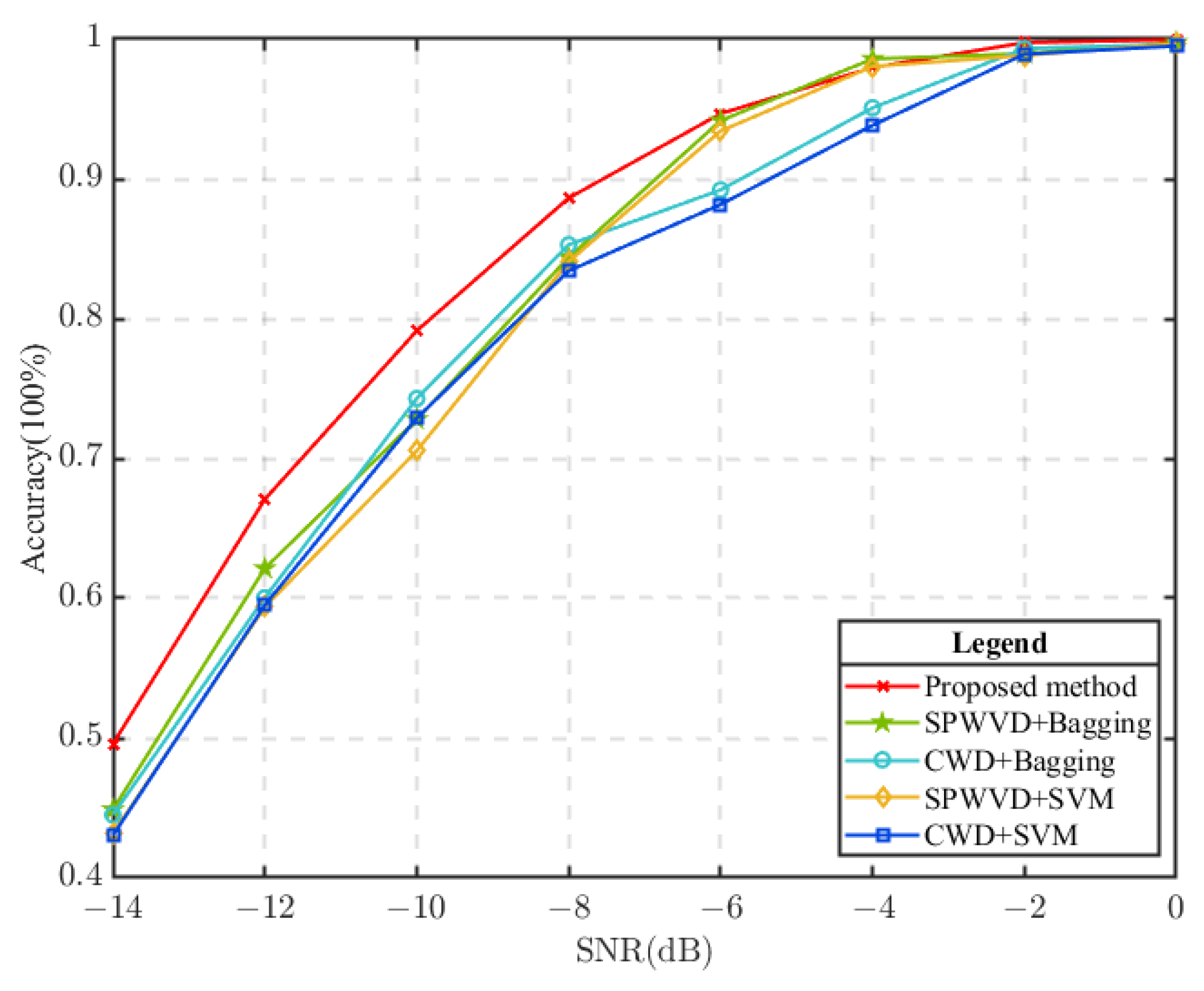

- We present a new radar signal recognition method based on bagging support vector machines (SVMs), which improves on the predictive performance of a single classifier and achieves higher overall accuracy;

- The high time–frequency energy concentration of the Choi–Williams distribution (CWD) and the cross-term suppression of the smoothed pseudo Wigner–Ville distribution (SPWVD) are combined to improve the accuracy and robustness of radar signal recognition;

- Extensive experiments on 12 types of radar signals demonstrate that the proposed method significantly outperforms traditional methods and achieves recognition accuracy competitive with several deep learning methods.

2. The Proposed Method

2.1. Time–Frequency Transformation

2.1.1. Choi–Williams Distribution
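
A minimal sketch of a discrete CWD in Python with NumPy, assuming the standard exponential Choi–Williams kernel applied in the time-lag domain. The function name `choi_williams`, the scaling factor `sigma` and the kernel normalization are illustrative choices, not the authors' implementation. The kernel smooths the instantaneous autocorrelation x[u+m]·x*[u−m] over time with a Gaussian whose width grows with the lag, and an FFT over the lag yields the time–frequency image.

```python
import numpy as np


def choi_williams(x, sigma=1.0, n_fft=None):
    """Discrete Choi-Williams distribution of a complex (analytic) signal.

    Returns a real array of shape (n_fft, len(x)): rows are frequency bins,
    columns are time samples. Because the lag product x[u+m] * conj(x[u-m])
    spans 2m samples, bin k corresponds to k * fs / (2 * n_fft).
    """
    x = np.asarray(x, dtype=complex)
    n = x.size
    n_fft = n_fft or n
    # Largest lag that keeps u - m and u + m inside the signal and fits the FFT.
    max_lag = min((n - 1) // 2, (n_fft - 1) // 2)
    u = np.arange(n)
    tfr = np.zeros((n_fft, n))
    for t in range(n):
        acf = np.zeros(n_fft, dtype=complex)  # kernel-weighted local autocorrelation
        acf[0] = abs(x[t]) ** 2  # the kernel collapses to a delta at zero lag
        for m in range(1, max_lag + 1):
            valid = u[m:n - m]  # time indices u with u - m >= 0 and u + m <= n - 1
            # Exponential CWD kernel in (u - t) whose width grows with the lag m.
            kernel = np.exp(-sigma * (valid - t) ** 2 / (4.0 * m ** 2))
            kernel /= kernel.sum()  # discrete stand-in for sqrt(sigma / (4 pi m^2))
            r = np.sum(kernel * x[valid + m] * np.conj(x[valid - m]))
            acf[m] = r
            acf[-m] = np.conj(r)  # Hermitian in the lag, so the FFT is real
        tfr[:, t] = np.fft.fft(acf).real
    return tfr


# Example: a 12.5 MHz tone sampled at 100 MHz concentrates in bin 2 * 12.5 / 100 * 128 = 32.
fs = 100e6
x = np.exp(2j * np.pi * 12.5e6 * np.arange(128) / fs)
cwd = choi_williams(x, sigma=1.0)
peak_bins = np.argmax(cwd, axis=0)
```

Smaller `sigma` values widen the time smoothing and suppress cross-terms more strongly, at the cost of blurring the auto-terms.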

2.1.2. Smoothed Pseudo Wigner–Ville Distribution
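
A minimal SPWVD sketch under similar assumptions: the separable smoothing uses a Hamming time window g and a Hamming lag window h, and the window lengths and the name `spwvd` are hypothetical choices rather than the paper's settings. Because the kernel is separable, time smoothing and frequency smoothing can be tuned independently.

```python
import numpy as np


def spwvd(x, time_win=15, lag_win=63, n_fft=None):
    """Smoothed pseudo Wigner-Ville distribution of a complex (analytic) signal.

    time_win: odd length of the Hamming time-smoothing window g.
    lag_win:  odd length of the Hamming lag window h (frequency smoothing).
    Returns a real array of shape (n_fft, len(x)); bin k maps to k * fs / (2 * n_fft).
    """
    x = np.asarray(x, dtype=complex)
    n = x.size
    n_fft = n_fft or n
    g = np.hamming(time_win)
    h = np.hamming(lag_win)
    half_g, half_h = time_win // 2, lag_win // 2
    max_lag = min(half_h, (n_fft - 1) // 2)
    p = np.arange(-half_g, half_g + 1)  # time-smoothing offsets
    tfr = np.zeros((n_fft, n))
    for t in range(n):
        acf = np.zeros(n_fft, dtype=complex)
        for m in range(max_lag + 1):
            a, b = t + p + m, t + p - m  # sample pairs separated by 2m
            ok = (b >= 0) & (a <= n - 1)  # offsets whose sample pair lies inside x
            if not ok.any():
                continue
            gw = g[ok] / g[ok].sum()  # renormalize g over the offsets that fit
            r = h[half_h + m] * np.sum(gw * x[a[ok]] * np.conj(x[b[ok]]))
            acf[m] = r
            if m > 0:
                acf[-m] = np.conj(r)  # h is symmetric, so the lag sequence is Hermitian
        tfr[:, t] = np.fft.fft(acf).real
    return tfr


# Example: the same 12.5 MHz tone at fs = 100 MHz should peak in bin 32.
fs = 100e6
x = np.exp(2j * np.pi * 12.5e6 * np.arange(128) / fs)
tfr = spwvd(x)
```

Lengthening `time_win` or shortening `lag_win` strengthens cross-term suppression but lowers time or frequency resolution, respectively.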

2.2. HOG Feature Extraction

2.2.1. Gradient Calculation
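
A sketch of the gradient step, assuming the usual Dalal–Triggs choice of centered [−1, 0, 1] difference kernels applied to the time–frequency image, with unsigned orientations folded into [0°, 180°). Border pixels are simply left at zero gradient, and `image_gradients` is a hypothetical helper name.

```python
import numpy as np


def image_gradients(img):
    """Per-pixel gradient magnitude and unsigned orientation (degrees in [0, 180))."""
    img = np.asarray(img, dtype=float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]  # centered [-1, 0, 1] kernel along columns
    gy[1:-1, :] = img[2:, :] - img[:-2, :]  # centered [-1, 0, 1]^T kernel along rows
    magnitude = np.hypot(gx, gy)
    # Fold opposite directions together: only the edge orientation matters.
    orientation = np.rad2deg(np.arctan2(gy, gx)) % 180.0
    return magnitude, orientation


# Example on a synthetic image whose intensity increases from left to right.
ramp = np.tile(np.arange(16, dtype=float), (16, 1))
mag, ori = image_gradients(ramp)
```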

2.2.2. Gradient Direction Histogram Construction
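
The histogram step can be sketched as follows, assuming 8 × 8-pixel cells and 9 unsigned orientation bins (the Dalal–Triggs defaults; the cell size and bin count used in the paper are assumptions here). Each pixel votes its gradient magnitude into the two nearest bins with linear interpolation, wrapping around at 0°/180°. `cell_histograms` is a hypothetical name.

```python
import numpy as np


def cell_histograms(magnitude, orientation, cell=8, n_bins=9):
    """Magnitude-weighted orientation histograms on a grid of cell x cell pixel cells.

    Each pixel splits its vote linearly between the two nearest bin centres
    (10, 30, ..., 170 degrees for 9 bins); pixels outside full cells are ignored.
    """
    n_rows = magnitude.shape[0] // cell
    n_cols = magnitude.shape[1] // cell
    mag = magnitude[: n_rows * cell, : n_cols * cell]
    ori = orientation[: n_rows * cell, : n_cols * cell]
    bin_width = 180.0 / n_bins
    pos = ori / bin_width - 0.5  # position measured from the bin centres
    lower = np.floor(pos).astype(int)
    frac = pos - lower  # share of the vote given to the upper bin
    b0 = lower % n_bins  # wrap so that 0 and 180 degrees are neighbours
    b1 = (lower + 1) % n_bins
    # Cell index of every pixel, as full (H, W) arrays.
    cell_r, cell_c = np.meshgrid(
        np.arange(mag.shape[0]) // cell, np.arange(mag.shape[1]) // cell, indexing="ij"
    )
    hist = np.zeros((n_rows, n_cols, n_bins))
    np.add.at(hist, (cell_r, cell_c, b0), mag * (1.0 - frac))  # unbuffered accumulation
    np.add.at(hist, (cell_r, cell_c, b1), mag * frac)
    return hist


# Example: unit magnitudes with every gradient at 0 degrees, which lies halfway
# between the 170-degree and 10-degree bin centres.
hist = cell_histograms(np.ones((16, 16)), np.zeros((16, 16)))
```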

2.2.3. Histogram Normalization of Overlapping Blocks
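
A sketch of overlapping-block normalization, assuming 2 × 2-cell blocks moved one cell at a time and the L2-Hys scheme of Dalal and Triggs (L2 normalization, clipping at 0.2, renormalization); these settings are assumptions, and `normalize_blocks` is a hypothetical name. Because neighboring blocks share cells, each cell histogram contributes to several normalized blocks, which makes the descriptor less sensitive to local intensity changes in the time–frequency image.

```python
import numpy as np


def normalize_blocks(hist, block=2, eps=1e-5, clip=0.2):
    """L2-Hys normalization of overlapping block x block cell groups (stride: one cell).

    hist has shape (n_rows, n_cols, n_bins); returns the concatenated HOG vector.
    """
    n_rows, n_cols, _ = hist.shape
    features = []
    for i in range(n_rows - block + 1):
        for j in range(n_cols - block + 1):
            v = hist[i : i + block, j : j + block, :].ravel()
            v = v / np.sqrt(np.sum(v ** 2) + eps ** 2)  # L2 normalization
            v = np.minimum(v, clip)  # clip dominant components ("Hys")
            v = v / np.sqrt(np.sum(v ** 2) + eps ** 2)  # renormalize after clipping
            features.append(v)
    return np.concatenate(features)


# Example: a 4 x 4 grid of 9-bin cell histograms gives 3 x 3 blocks of 36 values each.
rng = np.random.default_rng(0)
hog_vector = normalize_blocks(rng.random((4, 4, 9)))
```

scikit-image's `skimage.feature.hog(image, orientations=9, pixels_per_cell=(8, 8), cells_per_block=(2, 2), block_norm='L2-Hys')` bundles gradient computation, cell histograms and block normalization in one call, although its voting details may differ from this sketch.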

2.3. Signal Recognition via Bagging SVM
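
A minimal sketch of bagging SVMs with scikit-learn, assuming an RBF-kernel `SVC` as the base learner and 10 bootstrap replicas. The kernel, `C`, the ensemble size and the synthetic 12-class data (standing in for HOG feature vectors of the 12 waveform classes) are all assumptions, not the paper's settings. Each member SVM is trained on a bootstrap sample of the training set; because `SVC` without `probability=True` exposes no `predict_proba`, `BaggingClassifier` combines the members by majority vote.

```python
from sklearn.datasets import make_blobs
from sklearn.ensemble import BaggingClassifier
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Synthetic stand-in for 324-dimensional HOG feature vectors: 12 classes, 100 samples each.
X, y = make_blobs(n_samples=1200, n_features=324, centers=12, cluster_std=5.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

bagged_svm = BaggingClassifier(
    SVC(kernel="rbf", C=1.0, gamma="scale"),  # base learner, passed positionally
    n_estimators=10,  # number of bootstrap replicas, i.e. member SVMs
    bootstrap=True,  # draw each member's training set with replacement
    random_state=0,
)
bagged_svm.fit(X_train, y_train)
accuracy = bagged_svm.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

Passing the base estimator positionally sidesteps the `base_estimator` to `estimator` keyword rename across scikit-learn versions.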

3. Experiments

3.1. Dataset Description

3.2. Performance Comparison and Analysis

3.3. Model Efficiency Comparison

3.4. Ablation Experiment

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Sample Availability

| Radar Waveform | Simulation Parameter | Range |
|---|---|---|
| All | Sampling frequency (fs) | 100 MHz |
| | Center frequency | U(fs/6, fs/5) |
| LFM | Bandwidth | U(fs/20, fs/16) |
| COSTAS | Fundamental frequency | U(fs/30, fs/24) |
| | Code length | [3, 4, 5, 6] |
| BPSK | Code length | [7, 11, 13] |
| FRANK | Cycles per phase code | [3, 4, 5] |
| | Number of frequency steps | [6, 7, 8] |
| P1 | Cycles per phase code | [3, 4, 5] |
| | Number of frequency steps | [6, 7, 8] |
| P2 | Cycles per phase code | [3, 4, 5] |
| | Number of frequency steps | [6, 8] |
| P3 | Cycles per phase code | [3, 4, 5] |
| | Number of sub-codes | [36, 49, 64] |
| P4 | Cycles per phase code | [3, 4, 5] |
| | Number of sub-codes | [36, 49, 64] |
| T1 | Number of segments | [4, 5, 6] |
| T2 | Number of phase states | 2 |
| T3 | Bandwidth | U(fs/20, fs/10) |
| | Number of segments | [4, 5, 6] |
| T4 | Bandwidth | U(fs/20, fs/10) |
| | Number of segments | [4, 5, 6] |
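
To illustrate how the parameter table can be read, a sketch that draws LFM and Frank pulses with NumPy, treating U(a, b) as a uniform draw and bracketed lists as sets of discrete choices. The pulse length `n_samples = 1024`, the interpretation of "cycles per phase code" as carrier cycles per sub-code, and the helper names are assumptions; the table does not specify them.

```python
import numpy as np

FS = 100e6  # sampling frequency from the table (100 MHz)


def lfm_pulse(rng, n_samples=1024):
    """Complex LFM pulse; n_samples is an assumed pulse length."""
    fc = rng.uniform(FS / 6, FS / 5)  # center frequency ~ U(fs/6, fs/5)
    bw = rng.uniform(FS / 20, FS / 16)  # bandwidth ~ U(fs/20, fs/16)
    t = np.arange(n_samples) / FS
    rate = bw / (n_samples / FS)  # chirp rate in Hz/s
    # Instantaneous frequency sweeps from fc - bw/2 to fc + bw/2 over the pulse.
    phase = 2 * np.pi * ((fc - bw / 2) * t + 0.5 * rate * t ** 2)
    return np.exp(1j * phase), fc, bw


def frank_phases(m):
    """Frank code phases 2*pi*(i-1)*(j-1)/M for i, j = 1..M, flattened row by row."""
    i, j = np.meshgrid(np.arange(m), np.arange(m), indexing="ij")
    return (2 * np.pi / m * i * j).ravel()


def frank_pulse(rng):
    """Frank-coded pulse with M^2 sub-codes, each lasting a few carrier cycles."""
    m = rng.choice([6, 7, 8])  # number of frequency steps
    cycles = rng.choice([3, 4, 5])  # carrier cycles per phase code (assumed meaning)
    fc = rng.uniform(FS / 6, FS / 5)
    samples_per_chip = int(round(cycles * FS / fc))
    phases = np.repeat(frank_phases(m), samples_per_chip)
    t = np.arange(phases.size) / FS
    return np.exp(1j * (2 * np.pi * fc * t + phases)), m, samples_per_chip


rng = np.random.default_rng(0)
lfm, fc, bw = lfm_pulse(rng)
frank, m, samples_per_chip = frank_pulse(rng)
```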

| Method | Test Duration (s) |
|---|---|
| Proposed method | 63 |
| CWT+CNN | 36 |
| AlexNet+SVM | 174 |
| LPI-net | 181 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yu, K.; Qi, Y.; Shen, L.; Wang, X.; Quan, D.; Zhang, D. Radar Signal Recognition Based on Bagging SVM. Electronics 2023, 12, 4981. https://doi.org/10.3390/electronics12244981