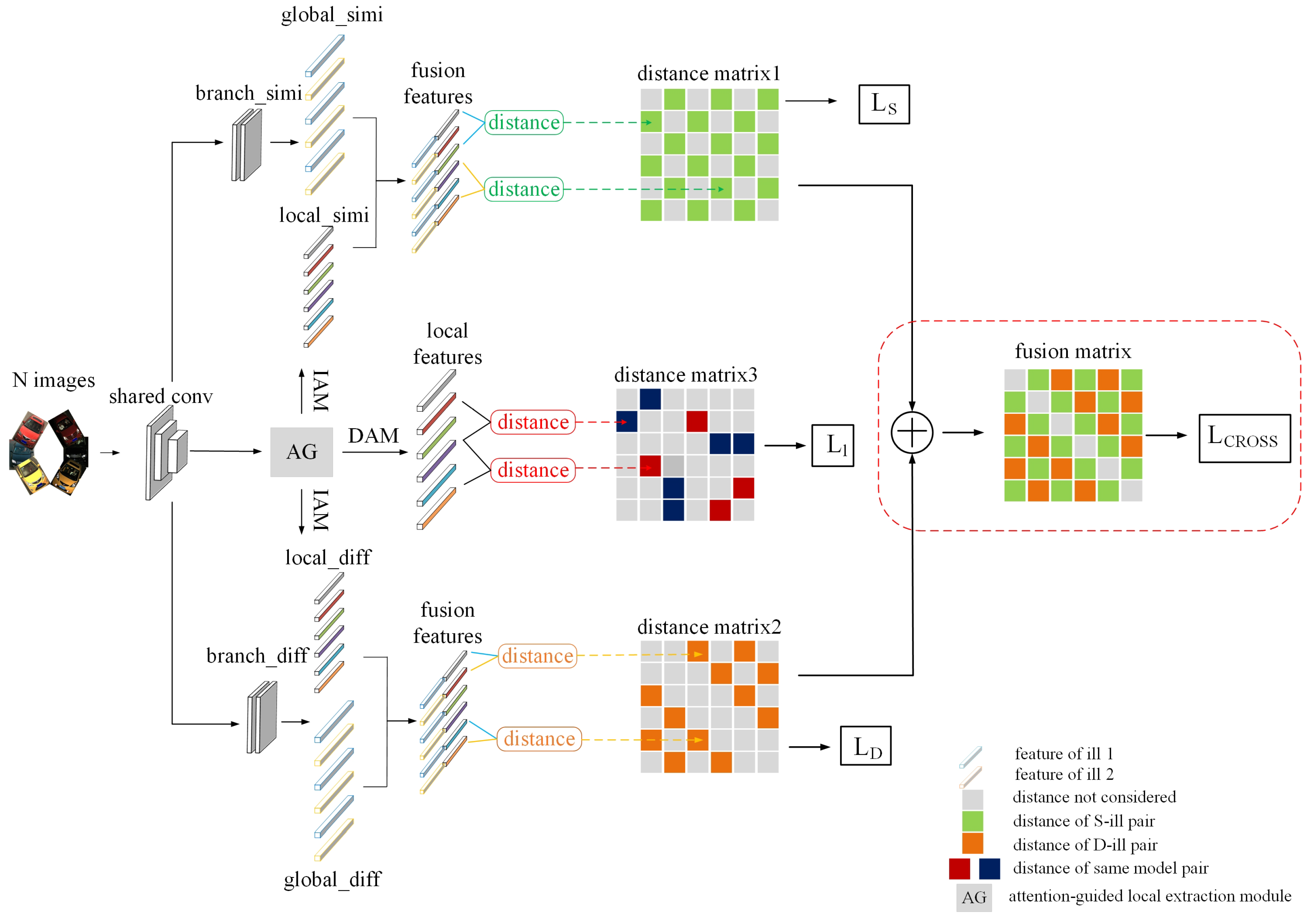

The network architecture of the proposed algorithm is illustrated in Figure 3. The framework comprises a two-stage progressive deep learning process that accounts for illumination conditions and vehicle attributes. In the first stage (Section 3.2), we established an illumination-aware network (IANet) consisting of two branches with identical structures and applied it to both S-IL and D-IL image pairs. This two-branch network used coarse-grained illumination labels to supervise the learning of illumination-specific features, which enabled the Stage I model (IANet) to retrieve samples captured under different lighting conditions. In the second stage (Section 3.3), we introduced a guided local feature extraction process comprising an illumination-aware local feature extraction module (IAM) and a detail-aware local feature extraction module (DAM). Under the supervision of fine-grained model labels, this attention mechanism learned features that distinguish different vehicles with similar appearances. The Stage II model was therefore designed to extract discriminative features from local areas to distinguish among vehicles of similar types. Triplet loss served as the metric learning baseline, as discussed in Section 3.1.

3.1. Metric Learning Baseline

We adopted triplet loss to construct a metric learning baseline. Given an image pair $(x_i, x_j)$, distances were calculated using $D(x_i, x_j) = \lVert f(x_i) - f(x_j) \rVert_2$, where $x_i$ and $x_j$ represent images from the dataset $X$, $D$ denotes the Euclidean distance between features, and the function $f$ maps raw images to their respective features. For example, consider three samples $x$, $x^{+}$, and $x^{-}$, where $x$ and $x^{+}$ belong to the same class (i.e., the same vehicle ID), while $x$ and $x^{-}$ belong to different classes. A positive pair $P = (x, x^{+})$ and a negative pair $N = (x, x^{-})$ can then be formed, for which the triplet loss is defined as follows:

$$\mathcal{L}_{tri} = \left[ D(P) - D(N) + \alpha \right]_{+} \tag{1}$$

where $[\cdot]_{+} = \max(\cdot, 0)$ and $\alpha$ is a margin enforced between positive and negative pairs. Equation (1) aims to minimize the distance between samples with the same ID while maximizing the distance between samples with different IDs.
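As a minimal sketch of Equation (1), the following Python computes the hinge triplet loss on plain feature vectors. It assumes the features have already been produced by $f$; the helper names and the margin value `alpha=0.3` are illustrative assumptions, not settings reported for this method.

```python
import math


def euclidean(u, v):
    """D(x_i, x_j) = ||f(x_i) - f(x_j)||_2 for two precomputed feature vectors."""
    return math.dist(u, v)


def triplet_loss(f_anchor, f_pos, f_neg, alpha=0.3):
    """Hinge triplet loss [D(x, x+) - D(x, x-) + alpha]_+ ."""
    d_pos = euclidean(f_anchor, f_pos)
    d_neg = euclidean(f_anchor, f_neg)
    return max(d_pos - d_neg + alpha, 0.0)


# The negative is far enough away, so the margin is satisfied and the loss is zero.
print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0]))  # 0.0
```

The loss becomes positive only when the negative is not at least `alpha` farther from the anchor than the positive.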

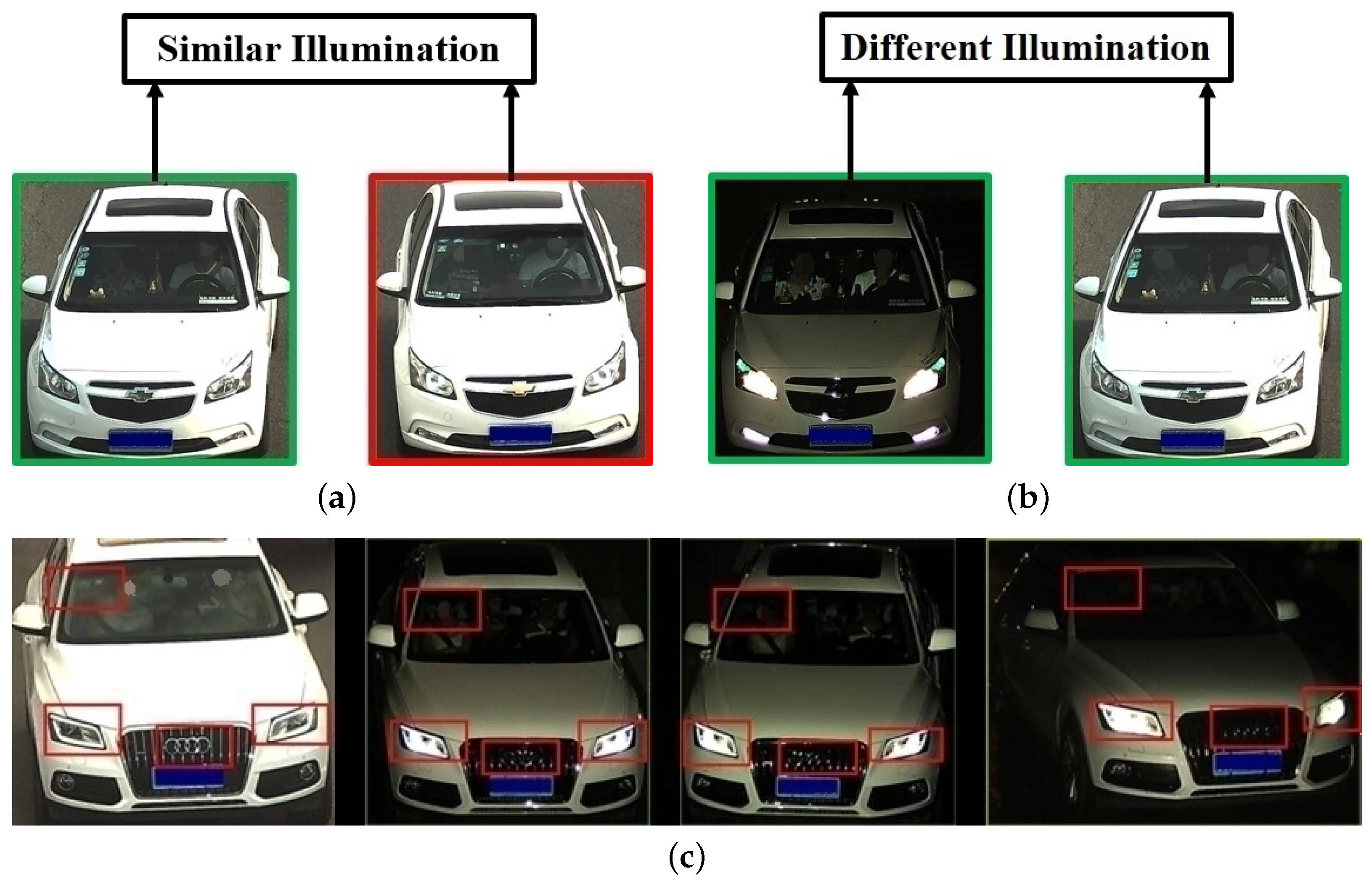

3.2. Illumination-Aware Metric Learning

Inspired by previous work [

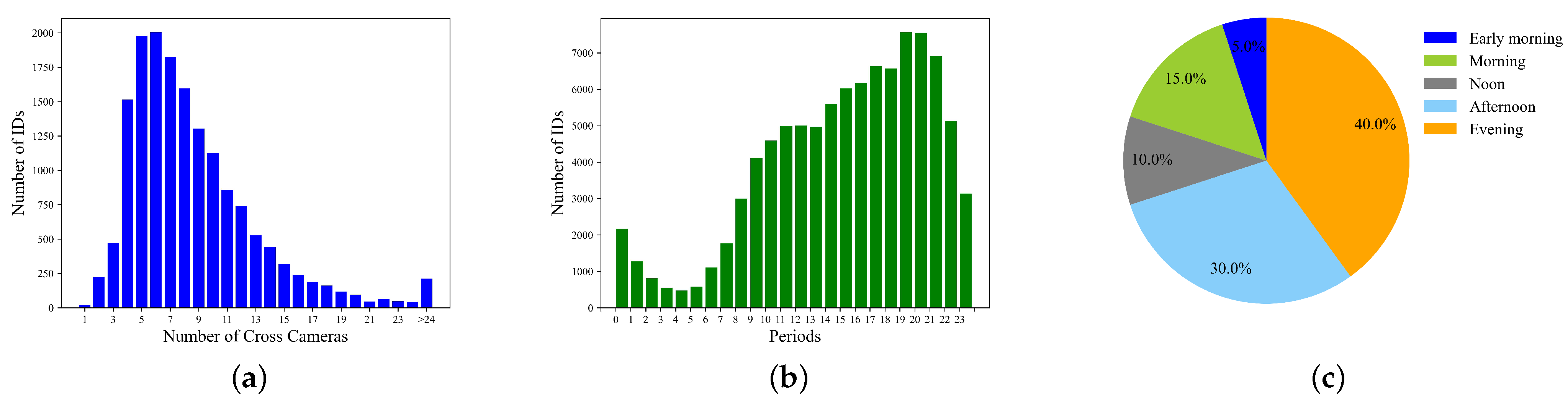

25], we propose an illumination-aware network that learns two separate deep metrics for S-IL and D-IL samples. A coarse-grained classification was included to divide the images into two distinct illumination types. We then employed IANet to learn illumination-specific metrics via the explicitly modeling of lighting conditions. Since it is difficult to manually annotate real-world environments with fine-grained labels, coarse-grained labels were assigned to the images (i.e., daytime and nighttime). Images including annotated timestamps ranging from 06:00 to 18:00 were labeled daytime, and those spanning from 18:00 to 06:00 were labeled nighttime. Datasets lacking a timestamp were categorized using an illumination predictor trained on VERI-DAN samples. Images with the same illumination label were denoted as S-IL pairs, and those with different labels were denoted as D-IL pairs. This convention produced four types of image pairs:

(S-IL positive),

(D-IL positive),

(S-IL negative), and

(D-IL negative).
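The timestamp labeling rule and the resulting four-way pair taxonomy can be sketched as follows. Treating daytime as the half-open interval [06:00, 18:00) is an assumption, since the text does not say how the boundary times are assigned, and the function names are hypothetical. Images without timestamps would instead go through the illumination predictor, which is not modeled here.

```python
from datetime import time


def illumination_label(t: time) -> str:
    """Coarse label from a capture timestamp (boundary handling is an assumption)."""
    return "daytime" if time(6, 0) <= t < time(18, 0) else "nighttime"


def pair_type(label_i: str, label_j: str, id_i: int, id_j: int) -> str:
    """Classify an image pair as S-IL/D-IL and positive/negative."""
    illum = "S-IL" if label_i == label_j else "D-IL"
    polarity = "positive" if id_i == id_j else "negative"
    return f"{illum} {polarity}"


day = illumination_label(time(9, 30))     # "daytime"
night = illumination_label(time(22, 15))  # "nighttime"
print(pair_type(day, night, 7, 7))  # D-IL positive
```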

Images were mapped into two distinct illumination-specific feature spaces using two convolutional branches with identical structures that did not share any parameters. Each branch could be viewed as a function for illumination-specific feature extraction (i.e., $f_s$ and $f_d$). For each image $x$ in a mini-batch, IANet generated two distinct features, $f_s(x)$ and $f_d(x)$, as illustrated in Figure 3. Pair-wise distances in the S-IL feature space were then calculated as $D_s(x_i, x_j) = \lVert f_s(x_i) - f_s(x_j) \rVert_2$, and distances in the D-IL feature space as $D_d(x_i, x_j) = \lVert f_d(x_i) - f_d(x_j) \rVert_2$. Equation (1) was decomposed into two types of constraints: within-space and cross-space constraints. The within-space constraints expect $D_s(P^{s})$ to be smaller than $D_s(N^{s})$ in the S-IL feature space, and $D_d(P^{d})$ to be smaller than $D_d(N^{d})$ in the D-IL feature space. The cross-space constraints expect $D_s(P^{s})$ to be smaller than $D_d(N^{d})$, and $D_d(P^{d})$ to be smaller than $D_s(N^{s})$.

Within-space constraints: Two triplet loss terms were introduced, one in each of the S-IL and D-IL feature spaces, to ensure that positive pairs were closer than negative pairs. The triplet loss in the S-IL feature space was defined as follows:

$$\mathcal{L}_{s} = \left[ D_s(P^{s}) - D_s(N^{s}) + \alpha \right]_{+} \tag{2}$$

and in the D-IL feature space as follows:

$$\mathcal{L}_{d} = \left[ D_d(P^{d}) - D_d(N^{d}) + \alpha \right]_{+} \tag{3}$$

The within-space constraints were then implemented as the sum of $\mathcal{L}_{s}$ and $\mathcal{L}_{d}$:

$$\mathcal{L}_{within} = \mathcal{L}_{s} + \mathcal{L}_{d} \tag{4}$$

Within each illumination-specific feature space, the corresponding loss function operated solely on illumination-specific samples. In other words, only S-IL pairs were used to calculate $\mathcal{L}_{s}$, while $\mathcal{L}_{d}$ was optimized solely on D-IL pairs.
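A minimal sketch of the within-space constraints follows: the S-IL branch features are used only with S-IL pairs, and the D-IL branch features only with D-IL pairs. The text does not specify how triplets are mined within a mini-batch, so averaging the hinge over all valid triplets is an assumption, as are the function names and the margin value.

```python
import math


def space_loss(feats, ids, illum, same_illum, alpha=0.3):
    """Mean hinge loss over triplets whose pairs all have one illumination type.

    same_illum=True  -> S-IL space: positive and negative pairs are S-IL pairs.
    same_illum=False -> D-IL space: positive and negative pairs are D-IL pairs.
    Averaging over every valid triplet is an assumed mining scheme.
    """
    losses = []
    n = len(feats)
    for a in range(n):
        for p in range(n):
            # positive: same ID, and the pair's illumination relation must match
            if p == a or ids[p] != ids[a] or (illum[p] == illum[a]) != same_illum:
                continue
            for q in range(n):
                # negative: different ID, same illumination relation
                if ids[q] == ids[a] or (illum[q] == illum[a]) != same_illum:
                    continue
                d_pos = math.dist(feats[a], feats[p])
                d_neg = math.dist(feats[a], feats[q])
                losses.append(max(d_pos - d_neg + alpha, 0.0))
    return sum(losses) / len(losses) if losses else 0.0


def within_space_loss(feats_s, feats_d, ids, illum, alpha=0.3):
    """S-IL term on the S-IL branch features plus D-IL term on the D-IL branch features."""
    return (space_loss(feats_s, ids, illum, True, alpha)
            + space_loss(feats_d, ids, illum, False, alpha))
```

Because `feats_s` and `feats_d` come from two branches that share no parameters, the same image can occupy different positions in the two spaces, and each term only sees the pairs it is responsible for.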

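Cross-space constraints: Focusing solely on individual feature spaces risks underestimating the complexity of illumination variability, which in turn could limit re-ID accuracy. We therefore further proposed cross-space constraints between ($D_s(P^{s})$, $D_d(N^{d})$) and ($D_d(P^{d})$, $D_s(N^{s})$), which were implemented using the following triplet loss function:

$$\mathcal{L}_{cross} = \left[ D_s(P^{s}) - D_d(N^{d}) + \alpha \right]_{+} + \left[ D_d(P^{d}) - D_s(N^{s}) + \alpha \right]_{+} \tag{5}$$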

Loss functions in the Stage I model: The total triplet loss enforced in the first stage can then be expressed as follows:

$$\mathcal{L}_{\mathrm{I}} = \mathcal{L}_{within} + \mathcal{L}_{cross} \tag{6}$$
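Given the four pair distances (S-IL positive and negative distances measured in the S-IL space, D-IL positive and negative distances measured in the D-IL space), the Stage I objective can be sketched as the within-space and cross-space hinge terms summed together. The sketch assumes a single shared margin `alpha` for every term and scalar distances already computed by the two branches; the function names are hypothetical.

```python
def hinge(z):
    return max(z, 0.0)


def cross_space_loss(ds_pos, ds_neg, dd_pos, dd_neg, alpha=0.3):
    """Compare S-IL positives with D-IL negatives, and D-IL positives with S-IL negatives."""
    return hinge(ds_pos - dd_neg + alpha) + hinge(dd_pos - ds_neg + alpha)


def stage1_loss(ds_pos, ds_neg, dd_pos, dd_neg, alpha=0.3):
    """Within-space terms plus cross-space terms.

    ds_* are distances in the S-IL space; dd_* are distances in the D-IL space.
    """
    within = hinge(ds_pos - ds_neg + alpha) + hinge(dd_pos - dd_neg + alpha)
    return within + cross_space_loss(ds_pos, ds_neg, dd_pos, dd_neg, alpha)


# Within-space terms: 0.0 and 0.8; cross-space terms: 0.3 and 0.0.
print(round(stage1_loss(1.0, 2.0, 1.5, 1.2, alpha=0.5), 6))  # 1.1
```

In this example the D-IL pair violates the margin in its own space and against the nearest D-IL negative, while the S-IL terms are already satisfied.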

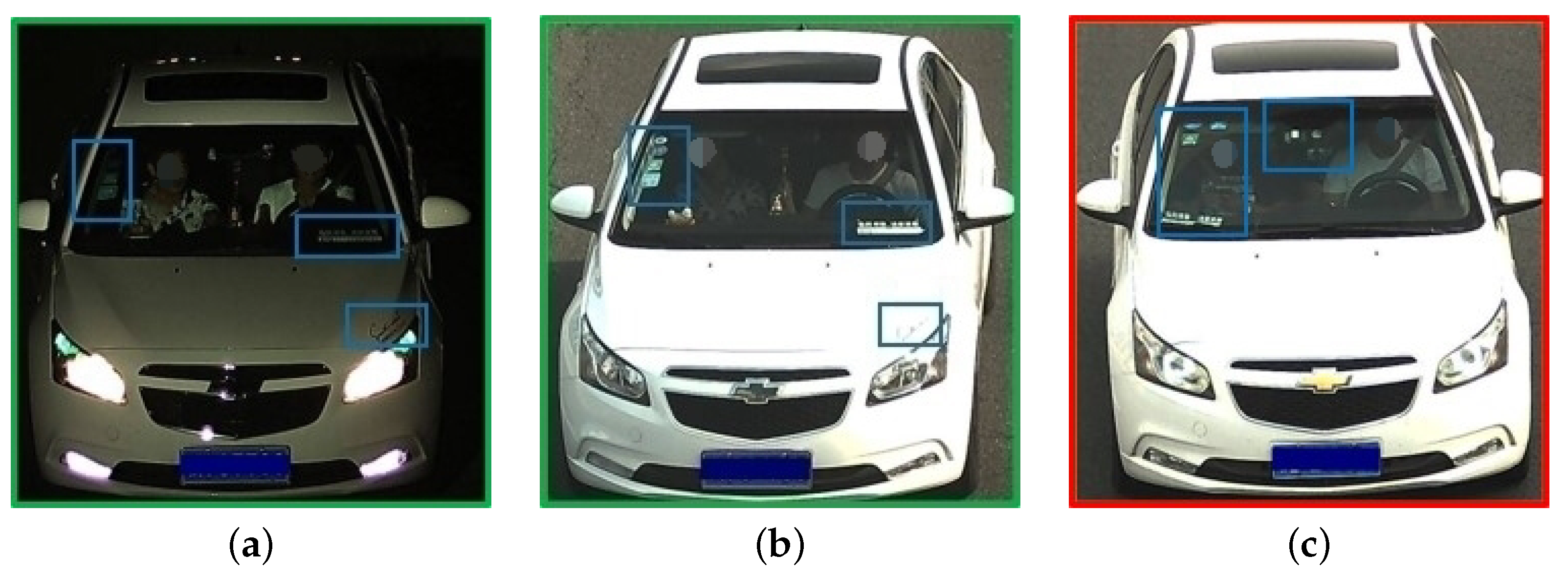

3.3. Detail-Aware Discriminative Feature Learning

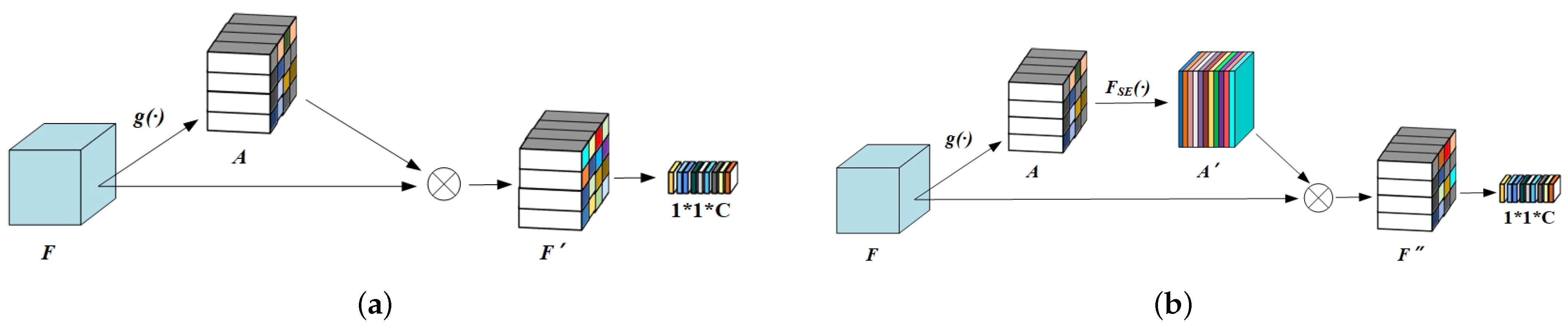

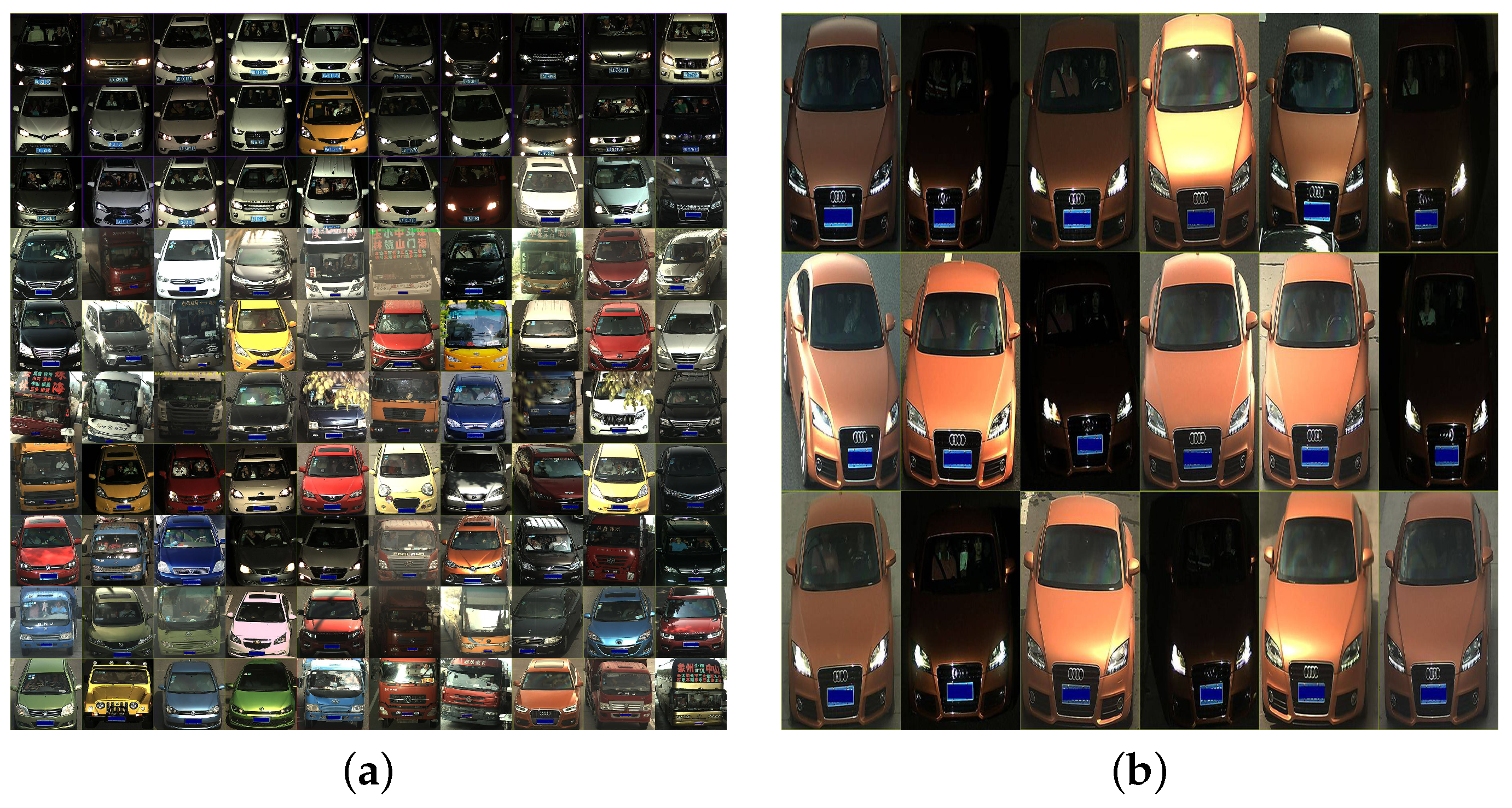

We observed that vehicles with similar appearances often differ in localized regions, such as windshield decorations, as depicted in Figure 2. We therefore hypothesized that re-ID accuracy could be improved in the second stage by enhancing the algorithm's capacity to capture these distinctive local details. Neural networks encode images in a progressive process [31,32], beginning with fine-grained details and gradually expanding to local and global information. Mid-level features from the middle layers of the network are thus well suited to extracting local area features of the vehicle. Based on this observation, we proposed a detail-aware discriminative feature learning process for vehicle re-ID. This process incorporated a local feature extraction module with attention mechanisms to extract local features. Local constraints were then introduced to guide the generation of these features, and an illumination-balanced sampling strategy was devised to optimize the local constraints.

Attention-guided local feature extraction module (AG): Different vehicle parts play different roles in distinguishing among vehicles that are similar in appearance. Specifically, areas that differ markedly between individual vehicles, such as a car logo or windshield, are more important than common parts, such as doors and hoods. To this end, we introduced an attention mechanism to learn from these distinctive areas. This attention-guided process consisted of a detail-aware local feature extraction module (DAM) and an illumination-aware local feature extraction module (IAM), as illustrated in Figure 4.

The DAM generated detail-aware discriminative local features to enforce local constraints, as shown in Figure 4a. Mid-level features are denoted in the figure by $F$, with dimensions $H \times W \times C$, where $H$, $W$, and $C$ represent the height, width, and number of channels of the feature layer, respectively. The attention local feature map $F_{l}$ was then generated with the following equation:

$$F_{l} = F \otimes \sigma(\mathbf{K} \ast F) \tag{7}$$

where $\mathbf{K}$ is a convolution kernel, $\ast$ denotes convolution, $\sigma$ is the sigmoid function, and $\otimes$ denotes element-wise multiplication between two tensors. Global max pooling was then applied to $F_{l}$ to produce the final local feature vector (i.e., $f_{l}$ in Figure 3). Each channel of $F_{l}$ represents a specific vehicle region, and the spatial points within each channel indicate the significance of that region. In this way, the attention map guides the network's focus toward the significant areas of each vehicle.
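The DAM attention step (a sigmoid-gated convolution of the mid-level map, element-wise multiplication with that map, then global max pooling) can be sketched in plain Python on an H × W × C nested list. Using a 1×1 convolution, i.e. a C × C weight matrix, is an assumption because the kernel size is not stated, and `dam_local_feature` is a hypothetical name.

```python
import math


def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dam_local_feature(F, kernel):
    """Attention-gated local feature vector from a mid-level feature map.

    F: H x W x C nested list; kernel: C x C weights of an assumed 1x1 convolution.
    Returns a length-C vector after global max pooling.
    """
    H, W, C = len(F), len(F[0]), len(F[0][0])
    gated = [[[0.0] * C for _ in range(W)] for _ in range(H)]
    for i in range(H):
        for j in range(W):
            x = F[i][j]
            for c in range(C):
                # 1x1 convolution at (i, j): weighted sum over input channels
                z = sum(kernel[c][k] * x[k] for k in range(C))
                # element-wise product of F with its sigmoid attention map
                gated[i][j][c] = x[c] * sigmoid(z)
    # global max pooling over all spatial positions, channel by channel
    return [max(gated[i][j][c] for i in range(H) for j in range(W)) for c in range(C)]


# With an all-zero kernel every attention weight is sigmoid(0) = 0.5.
F = [[[1.0, -2.0]], [[3.0, 0.5]]]  # H=2, W=1, C=2
print(dam_local_feature(F, [[0.0, 0.0], [0.0, 0.0]]))  # [1.5, 0.25]
```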

As shown in Figure 4b, IAM generated two types of local features that were discriminative in the S-IL and D-IL feature spaces, respectively. The appearance of certain distinguishing areas, such as headlights, changes significantly with illumination; in other words, specific visual cues may become more or less significant in different feature spaces. To this end, we further introduced squeeze-and-excitation modules [33] to identify illumination-specific local features for the S-IL and D-IL spaces. The corresponding feature map $F_{l}^{k}$ is obtained as follows:

$$F_{l}^{k} = \mathrm{SE}_{k}(F_{l}), \quad k \in \{s, d\} \tag{8}$$

where $\mathrm{SE}_{k}(\cdot)$ denotes the squeeze-and-excitation block of feature space $k$. Consequently, IAM yields two different local features (i.e., $f_{l}^{s}$ and $f_{l}^{d}$), as shown in Figure 3. We then used the union of the illumination-specific local features and the global features (extracted from the branch network) to enforce the within-space and cross-space constraints. To distinguish this formulation from that in Section 3.2, we use $\mathcal{L}'_{s}$, $\mathcal{L}'_{d}$, and $\mathcal{L}'_{cross}$ to denote the corresponding loss terms calculated from the fusion features.
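A plain-Python sketch of a squeeze-and-excitation block [33], and of how two independently parameterized copies could yield separate S-IL and D-IL local feature maps, follows. The reduction ratio, the absence of biases, and feeding the same input map to both copies are assumptions for illustration; `se_block` and `iam_local_features` are hypothetical names.

```python
import math


def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def se_block(F, W1, W2):
    """Squeeze-and-excitation on an H x W x C nested list (biases omitted).

    W1: (C/r) x C and W2: C x (C/r) fully connected weights, r = reduction ratio.
    """
    H, W, C = len(F), len(F[0]), len(F[0][0])
    # squeeze: global average pooling per channel
    z = [sum(F[i][j][c] for i in range(H) for j in range(W)) / (H * W) for c in range(C)]
    # excitation: FC -> ReLU -> FC -> sigmoid gives one weight in (0, 1) per channel
    h = [max(sum(w * v for w, v in zip(row, z)), 0.0) for row in W1]
    s = [sigmoid(sum(w * v for w, v in zip(row, h))) for row in W2]
    # scale: reweight every channel of the input map
    return [[[F[i][j][c] * s[c] for c in range(C)] for j in range(W)] for i in range(H)]


def iam_local_features(F, params_s, params_d):
    """Two SE blocks with separate weights: one for the S-IL space, one for D-IL."""
    return se_block(F, *params_s), se_block(F, *params_d)


F = [[[2.0, 4.0]]]  # H=1, W=1, C=2, reduction to 1 hidden unit
f_s, f_d = iam_local_features(
    F,
    ([[1.0, 1.0]], [[0.0], [0.0]]),     # S-IL copy: neutral weights (0.5 each)
    ([[1.0, 1.0]], [[10.0], [-10.0]]),  # D-IL copy: keeps channel 0, suppresses channel 1
)
print(f_s)  # [[[1.0, 2.0]]]
```

Because the two copies share no weights, each can emphasize different channels (vehicle regions), which is the behavior the illumination-specific local features rely on.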

Detail-aware local constraints: In real-world scenarios, differences between vehicles of the same model are concentrated primarily in regions such as inspection marks and personalized decorations. Training a triplet loss with hard negatives from the same vehicle model therefore acts as a guiding mechanism that directs network attention toward the relevant discriminative local regions. In the following notation, $x^{m}$ denotes a vehicle belonging to model $m$. A typical triplet in the local constraints is denoted by $(x^{m}, x^{m+}, x^{m-})$, where $x^{m}$ and $x^{m+}$ share the same ID, while $x^{m-}$ has a different ID but shares a model type with $x^{m}$ and $x^{m+}$. Following these definitions, $P^{m}$ (same-model positive pair) and $N^{m}$ (same-model negative pair) are denoted as $(x^{m}, x^{m+})$ and $(x^{m}, x^{m-})$, respectively. Formally, the local constraints were enforced through the following triplet loss:

$$\mathcal{L}_{local} = \left[ D_{l}(P^{m}) - D_{l}(N^{m}) + \beta \right]_{+} \tag{9}$$

where $D_{l}$ denotes the Euclidean distance between local features and $\beta$ is a margin enforced between positive and negative pairs. All negative samples in the proposed local constraints share a model type with the anchor, which guides the generation of discriminative local features. Note that $\mathcal{L}_{local}$ is an advanced version of $\mathcal{L}'_{cross}$, since different vehicles of the same model tend to produce the hardest negatives in both the S-IL and D-IL feature spaces. We therefore removed $\mathcal{L}'_{cross}$ from the final model after introducing the local constraints.
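The same-model hard-negative rule can be sketched as a triplet loss whose negatives are restricted to other IDs of the anchor's vehicle model. Averaging over all such triplets, the margin `beta=0.3`, and the function name are assumptions; the local features are taken as given vectors.

```python
import math


def local_constraint_loss(local_feats, ids, models, beta=0.3):
    """Mean [D_l(anchor, pos) - D_l(anchor, neg) + beta]_+ over same-model triplets.

    Positives share the anchor's ID; negatives have a different ID but the same model.
    """
    losses = []
    n = len(local_feats)
    for a in range(n):
        for p in range(n):
            if p == a or ids[p] != ids[a]:
                continue
            for q in range(n):
                # only same-model vehicles with a different ID are used as negatives
                if ids[q] == ids[a] or models[q] != models[a]:
                    continue
                d_pos = math.dist(local_feats[a], local_feats[p])
                d_neg = math.dist(local_feats[a], local_feats[q])
                losses.append(max(d_pos - d_neg + beta, 0.0))
    return sum(losses) / len(losses) if losses else 0.0


feats = [[0.0, 0.0], [0.0, 1.0], [0.0, 0.5], [0.0, 0.1]]
ids = [1, 1, 2, 3]
models = ["A", "A", "A", "B"]  # the last image is close but belongs to another model
print(round(local_constraint_loss(feats, ids, models), 6))  # 0.8
```

The last image never acts as a negative even though it is nearest to the anchor, which is exactly how the local constraints differ from an unrestricted triplet loss.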

Loss functions in the Stage II model: The total triplet loss function in the Stage II model can be expressed as follows:

$$\mathcal{L}_{\mathrm{II}} = \mathcal{L}'_{s} + \mathcal{L}'_{d} + \mathcal{L}_{local} \tag{10}$$

Illumination-balanced sampling strategy: To train an illumination-aware network that is robust to lighting variations, S-IL and D-IL pairs must be balanced within each mini-batch. However, in most cases the number of daytime images in a mini-batch is much larger than the number of nighttime images. As a result, the network may tend to learn mainly from daytime images and fail to capture a robust correlation among samples with different illumination. To address this issue, we designed a sampling function that ensured each vehicle contributed an equal number of images under both lighting conditions. Specifically, the algorithm selected N daytime images and N nighttime images for each vehicle ID in a mini-batch. If a vehicle ID had fewer than N daytime images, the algorithm duplicated these samples to obtain N images. The effectiveness of this balanced sampling strategy is demonstrated in Section 5.4.2.
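The balanced sampling rule can be sketched as follows. Random selection without replacement when at least N images exist, cyclic duplication when fewer exist, applying the duplication rule to nighttime images as well, and raising an error when an ID has no images of one type are all assumptions; the text only specifies the daytime duplication case. `sample_id` and `balanced_batch` are hypothetical names.

```python
import random


def sample_id(day_imgs, night_imgs, n, rng=random):
    """Return n daytime images followed by n nighttime images for one vehicle ID."""
    def pick(imgs):
        if not imgs:
            raise ValueError("this ID has no images of one illumination type")
        if len(imgs) >= n:
            return rng.sample(imgs, n)
        # too few images: duplicate the available ones until n are collected
        return [imgs[i % len(imgs)] for i in range(n)]

    return pick(day_imgs) + pick(night_imgs)


def balanced_batch(images_by_id, vehicle_ids, n, rng=random):
    """images_by_id maps an ID to (daytime_list, nighttime_list)."""
    batch = []
    for vid in vehicle_ids:
        day, night = images_by_id[vid]
        batch.extend((vid, img) for img in sample_id(day, night, n, rng))
    return batch


gallery = {7: (["d1"], ["n1", "n2", "n3"]), 9: (["d4", "d5"], ["n6", "n7"])}
batch = balanced_batch(gallery, [7, 9], n=2, rng=random.Random(0))
print(len(batch))  # 8: two daytime and two nighttime images per ID
```

Passing a seeded `random.Random` instance keeps mini-batch construction reproducible across runs.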

3.4. Training and Inference

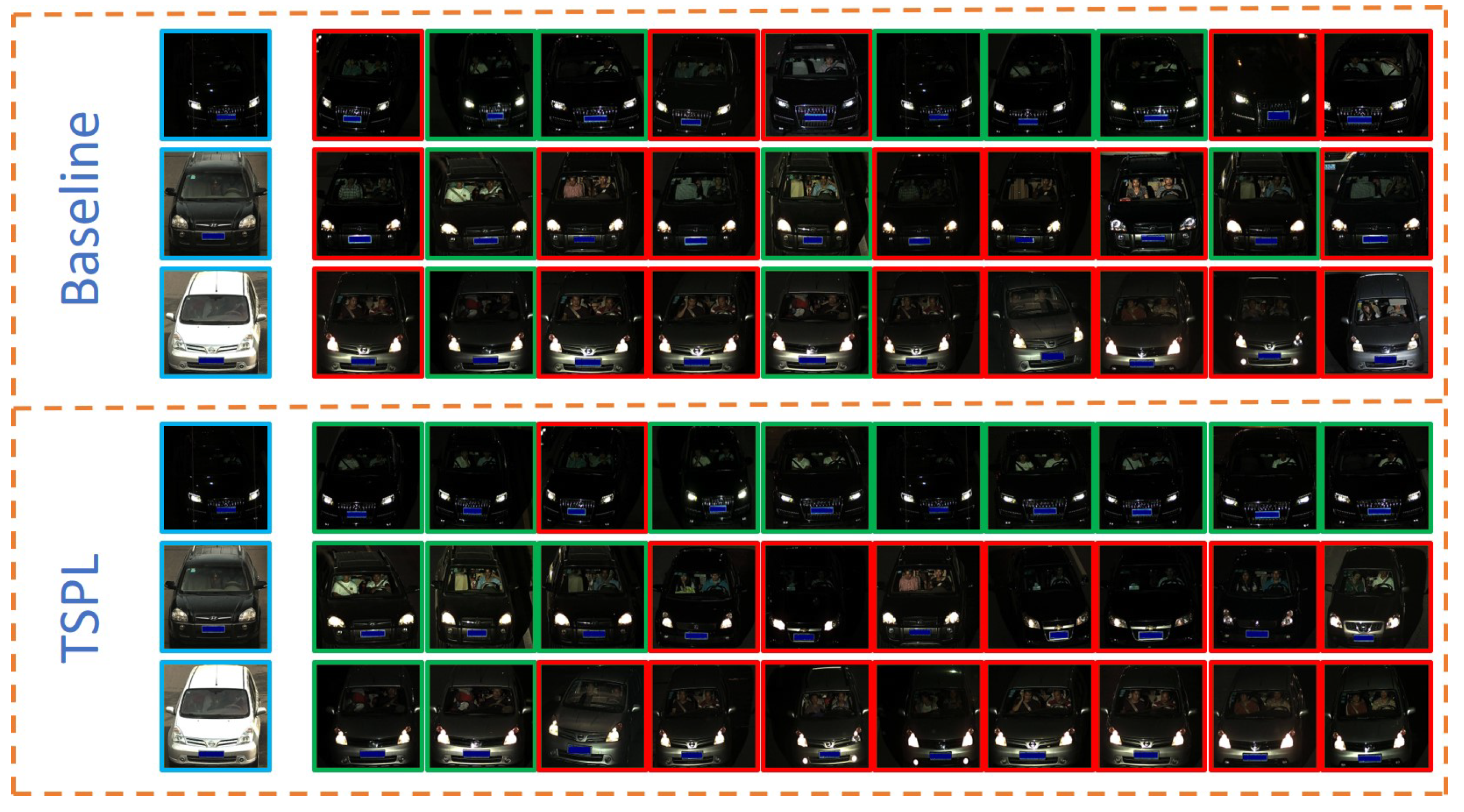

TSPL used the fusion of local features with the global features extracted from “branch_conv” as joint vehicle features, as shown in Figure 3. During training, “branch_conv” output dual global features for each image in the two feature spaces (i.e., $f_s(x)$ in S-IL and $f_d(x)$ in D-IL). In addition, IAM output dual illumination-specific local features that were combined with the global features to form joint representations (i.e., $[f_s(x), f_{l}^{s}(x)]$ and $[f_d(x), f_{l}^{d}(x)]$). DAM then output detail-aware local features to optimize the local constraints. Given $N$ input images, TSPL generated two illumination-specific distance matrices, each containing $N \times N$ distance values (i.e., matrix1 and matrix2). Only the S-IL pair distances in matrix1 and the D-IL pair distances in matrix2 (denoted by the green and brown cells in Figure 3) contributed to the $\mathcal{L}'_{s}$ and $\mathcal{L}'_{d}$ losses, respectively. TSPL also generated a local distance matrix (matrix3) containing $N \times N$ distance values calculated from local features. Distances between images of the same model (denoted by the colored cells in matrix3) were then used to calculate the triplet loss $\mathcal{L}_{local}$ and to enforce the detail-aware local constraints.
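The three N × N distance matrices, and the cells that feed each loss, can be sketched with boolean masks. Which cells are selected is inferred from the text (S-IL pairs in matrix1, D-IL pairs in matrix2, same-model pairs in matrix3); excluding the diagonal and the function names are assumptions, and a single feature set stands in for the branch-specific features in the example.

```python
import math


def distance_matrix(feats):
    """N x N Euclidean distances between all feature vectors in a mini-batch."""
    return [[math.dist(u, v) for v in feats] for u in feats]


def loss_masks(illum, models):
    """Boolean masks selecting the cells of each matrix that enter a loss term."""
    n = len(illum)
    s_il = [[i != j and illum[i] == illum[j] for j in range(n)] for i in range(n)]  # matrix1
    d_il = [[illum[i] != illum[j] for j in range(n)] for i in range(n)]  # matrix2
    same_model = [[i != j and models[i] == models[j] for j in range(n)] for i in range(n)]  # matrix3
    return s_il, d_il, same_model


def masked_values(matrix, mask):
    """Flatten the distances whose mask cell is True."""
    return [d for row, mrow in zip(matrix, mask) for d, keep in zip(row, mrow) if keep]


illum = ["day", "day", "night"]
models = ["A", "B", "A"]
s_il, d_il, same_model = loss_masks(illum, models)
m1 = distance_matrix([[0.0, 0.0], [3.0, 4.0], [6.0, 8.0]])
print(masked_values(m1, s_il))  # [5.0, 5.0]: only the two day-day cells
```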

Note that $\mathcal{L}'_{cross}$ was not incorporated into the final model but was evaluated in the ablation study. The generation of $\mathcal{L}'_{cross}$ is illustrated by the red dashed box in Figure 3. In $\mathcal{L}'_{cross}$, the green cells correspond to S-IL pair distances in matrix1, while the brown cells correspond to D-IL pair distances in matrix2. During the testing phase, the procedure depended on the illumination conditions of the query and gallery images. If they formed an S-IL pair, their distance was calculated by $D_s$ using the S-IL branch; otherwise, $D_d$ was computed through the D-IL branch. Distances were also calculated from local features, and the union of these results provided the joint distance between the query and gallery images.
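The test-time branch selection can be sketched as below. The dictionary keys, the use of each branch's joint feature, and combining the branch distance with the local-feature distance as a weighted sum are assumptions; the text states only that the union of these results gives the joint distance.

```python
import math


def joint_distance(query, gallery, local_weight=1.0):
    """Distance between a query and a gallery image under the branch-selection rule.

    Each image is a dict with hypothetical keys:
      "illum": coarse illumination label,
      "f_s", "f_d": joint features from the S-IL and D-IL branches,
      "f_local": detail-aware local feature.
    """
    if query["illum"] == gallery["illum"]:
        d_branch = math.dist(query["f_s"], gallery["f_s"])  # S-IL pair: S-IL branch
    else:
        d_branch = math.dist(query["f_d"], gallery["f_d"])  # D-IL pair: D-IL branch
    d_local = math.dist(query["f_local"], gallery["f_local"])
    return d_branch + local_weight * d_local


def rank_gallery(query, gallery_list):
    """Gallery indices sorted from most to least similar to the query."""
    return sorted(range(len(gallery_list)), key=lambda k: joint_distance(query, gallery_list[k]))


q = {"illum": "day", "f_s": [0, 0], "f_d": [0, 0], "f_local": [0, 0]}
g1 = {"illum": "day", "f_s": [3, 4], "f_d": [0, 1], "f_local": [0, 1]}
g2 = {"illum": "night", "f_s": [3, 4], "f_d": [0, 1], "f_local": [0, 1]}
print(rank_gallery(q, [g1, g2]))  # [1, 0]
```

Here `g1` is compared in the S-IL space (distance 5 + 1) and `g2` in the D-IL space (distance 1 + 1), so `g2` ranks first.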