Abstract

Link prediction is a central problem in complex network research, and mapping nodes to low-dimensional vectors through network embedding is a key technique for it. Most existing methods model the data as “node–edge”-structured networks and learn node embeddings from them. In this paper, we first introduce the Clique structure to enhance the existing model and investigate how introducing two Clique structures (LECON: Learning Embedding based on Clique Of the Network) rather than nine motifs (LEMON: Learning Embedding based on Motif Of the Network) affects experimental performance. Subsequently, we model the network as a hypergraph and reconstruct it by mapping hypermotifs to two structures: open hypermotifs and closed hypermotifs. We then introduce these hypermotifs as supernodes to capture the structural similarity between nodes in the network (HMRLH: HyperMotif Representation Learning on Hypergraph). After that, taking into account both the connectivity and the structural similarity of the nodes involved, we propose the Depth and Breadth Motif Random Walk method to acquire node sequences, and we apply it to the LEMON (LEMON-DB: LEMON-Depth and Breadth Motif Random Walk) and HMRLH (HMRLH-DB: HMRLH-Depth and Breadth Motif Random Walk) algorithms. Experimental results on four datasets indicate that, compared with LEMON, LECON improves performance while reducing time complexity. HMRLH, which uses hypernetwork modeling, proves more effective in extracting node features. LEMON-DB and HMRLH-DB, which incorporate the new random walk, outperform the original methods in link prediction in most cases. Compared with state-of-the-art baselines, the proposed approach effectively enhances link prediction accuracy.

1. Introduction

Networks are an abstract representation of complex systems throughout the sciences. Studying complex networks can reveal numerous potential associations between elements. Traditional network models represent individual elements as nodes and capture the relationships between elements through links connecting the nodes [1,2,3]. In this way, the relationships between pairs of individuals can be represented as links in a social network, the functions of pairs of proteins can be abstracted as links in a protein network, and an email network can also serve as a representation of interactions among pairs of colleagues or other people [4,5,6,7]. However, the representation through pairs alone cannot adequately describe systems that involve higher-order interactions between more than two entities. We can observe several relevant examples. For instance, people often interact within social groups, associative relations among proteins frequently involve the function of multiple proteins, rather than just pairs, and people also frequently send group emails.

Link prediction is an important research topic in complex networks, which deals with the task of predicting the likelihood of two nodes in a graph being connected at some point in the future [8,9]. Predicting unknown links often relies on the known ones in the network. Traditional network models only describe the relationships between pairs of nodes. Therefore, some classic algorithms often extract only low-order features of the nodes from the network, such as the hub depressed index (HDI) [10], Adamic-Adar [11] based on common neighbors, and resource allocation indicators (RA) [12], among others. In addition, graph embedding, which aims to learn the features of nodes, has emerged as an effective methodology for many downstream tasks, such as link prediction [13,14,15,16], community detection [17,18], etc. The fundamental concept of these algorithms is to acquire node sequences through random walks in the network and subsequently learn the embedding of each node using a skip-gram model [19,20]. This approach characterizes the higher-order structure features of nodes within the network. However, many previous works have employed connectivity or structural similarity as measures of node similarity, such as DeepWalk [21], graph2vec [22], and node2vec [23]. Connectivity reflects the similarity between nodes in the network where links exist. For example, when two nodes have more common neighbors, it is highly likely that a link will exist between them. On the other hand, structural similarity measures whether two nodes have similar local structures in the global network. For instance, in a social network, if both managers of two departments are local hub nodes, it is more likely that a link will exist between them. Most known representation techniques only learn representations for nodes from the perspective of one kind of similarity. 
LEMON [24] introduces motifs as supervertices in the network and captures the structural similarity of nodes by letting random walks pass through these supervertices. However, this approach introduces nine types of motifs and thus considers too many structural features in the second and third orders, which might lead to overfitting when learning node embeddings. Additionally, more motifs imply higher time complexity, making the model less suitable for large-scale networks. Moreover, this approach, which considers both connectivity and structural similarity, is built on traditional networks, which can only represent relationships between pairs of nodes. A significant amount of information, such as group emails, student classes, and multi-protein interactions, is discarded when such a network is generated. Therefore, how to characterize the higher-order interaction features of nodes in a network is a problem that must be addressed.

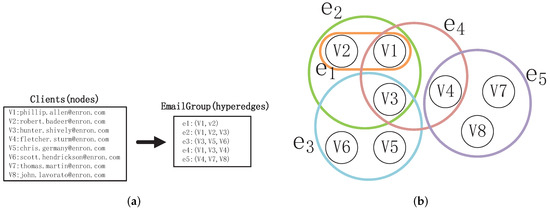

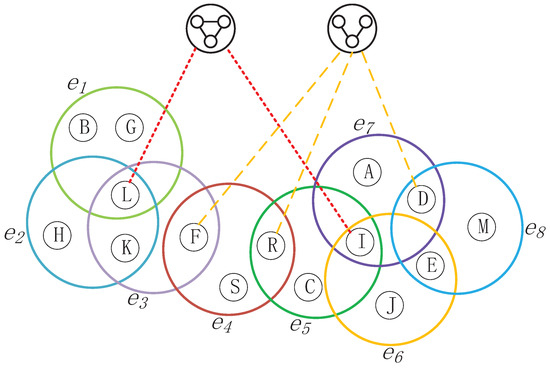

Hypergraphs [25], which consist of nodes and hyperedges, can tackle the aforementioned problem. Each hyperedge can connect any number of nodes and thus represents higher-order interactions within groups of nodes [26,27,28]. For instance, the interaction relationships shown in Figure 1a are naturally represented by the hypergraph depicted in Figure 1b. Each hyperedge corresponds to an email, and the nodes in the hyperedge represent the Enron employees who are senders or recipients of that email. One hyperedge represents the interaction between two employees, while another describes the interaction among three employees. This demonstrates that a hypergraph can distinguish pairwise relationships from higher-order interaction relationships. Additionally, a hyperedge that contains exactly two nodes represents a pairwise relationship; thus, traditional “node–edge”-structured networks can be regarded as a special form of hypergraph.
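
The difference between the two representations can be illustrated with a minimal sketch. The toy emails and the helper name `project_to_pairwise` below are hypothetical and are not taken from the Enron data; the sketch only shows that expanding hyperedges into node pairs loses the information about which nodes interacted as a group.

```python
from itertools import combinations

# Hypothetical toy data: each hyperedge is the set of people on one email.
hyperedges = [
    {"A", "B"},        # a two-person email (a pairwise relationship)
    {"A", "C", "D"},   # a three-person group email
    {"C", "D"},        # a separate two-person email
]

def project_to_pairwise(hyperedges):
    """Expand every hyperedge into node pairs (the 'node-edge' view)."""
    edges = set()
    for he in hyperedges:
        for u, v in combinations(sorted(he), 2):
            edges.add((u, v))
    return edges

edges = project_to_pairwise(hyperedges)
print(sorted(edges))
# [('A', 'B'), ('A', 'C'), ('A', 'D'), ('C', 'D')]
```

In the projected edge set, the group email among A, C, and D can no longer be told apart from three independent two-person emails, whereas the hyperedge list keeps that distinction.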

Figure 1.

An example of a hypergraph: (a) email interactions; (b) the hypergraph that represents these email interactions.

In this paper, to address the overfitting and excessively high time complexity of the LEMON method, we propose the Learning Embedding based on Clique Of the Network method (LECON), which uses Clique structures instead of motifs as supernodes to reconstruct the network. The node sequences obtained by random walks on the reconstructed network are then input into the skip-gram model to learn node embedding vectors. Subsequently, to address the limitation of traditional “node–edge”-structured networks in capturing group relationships, we present the HyperMotif Representation Learning on Hypergraph (HMRLH) method, which employs hypergraphs to model the network and incorporates hypermotifs as supernodes for assessing the structural similarity among nodes. Additionally, to acquire node sequences that capture node characteristics more accurately, we introduce a Depth and Breadth Motif Random Walk method, which uses two hyperparameters, p and q, to regulate the walking pattern. We apply this walking technique to the LEMON and HMRLH methods separately to assess its efficacy.

This paper is organized as follows: Section 1 reviews the current state of research and the shortcomings of existing algorithms. Section 2 introduces the prior work on which this paper builds. Section 3 provides a detailed description of our proposed methods. In Section 4, we conduct experiments to validate the effectiveness and feasibility of the proposed methods. Finally, we summarize and discuss the work presented in this paper.

2. Preliminaries

2.1. LEMON

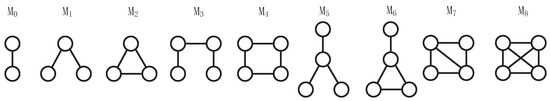

Higher-order interactions are prevalent in the real world, and traditional "node–edge" structures in networks fail to capture the intricate connections between entities. For instance, multiple authors collaborating on a research paper in a coauthorship network, or the recipients of a group email in an email network, cannot be effectively represented using the conventional "node–edge" structure. Motifs [29] are crucial structures for characterizing higher-order network structures and can depict higher-order interactions between nodes in traditional networks. The LEMON framework introduces nine types of 2-, 3-, and 4-node motifs to characterize higher-order structures in the network, as shown in Figure 2.

Figure 2.

2-, 3-, and 4-node undirected motifs.

Unlike traditional approaches that implicitly utilize motifs when learning node embeddings [30], LEMON explicitly introduces motifs as supernodes into the network to characterize the structural similarity between nodes. To use the supernodes for learning node vectors, the LEMON framework introduces a motif-step random walk on the network reconstructed with supernodes. Starting from node u, the next node of the walk is selected as described in Formula (1).

where q represents the probability of walking to a supernode, with a higher q value indicating a greater likelihood of walking to a supernode. The formula also involves the number of neighboring nodes of node u in the regular network and the weight of the structural edge between node u and a supernode, which is calculated as follows:

where the calculation uses the number of motifs of type i that contain node u, and V represents the set of nodes in the network.

After the node sequences are obtained, they are fed into the skip-gram model [31], which maps the nodes to low-dimensional embedding vectors.
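
As a rough illustration of how walk sequences become skip-gram training data, the sketch below extracts (center, context) pairs with a sliding window. The function name `skipgram_pairs`, the window size, and the toy walk are assumptions made for illustration; the actual training in LEMON relies on a full skip-gram implementation, which is not reproduced here.

```python
def skipgram_pairs(walks, window):
    """Collect (center, context) pairs from node sequences.

    Each node in a walk is paired with every node at most `window`
    positions away from it in the same walk.
    """
    pairs = []
    for walk in walks:
        for i, center in enumerate(walk):
            lo = max(0, i - window)
            hi = min(len(walk), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    pairs.append((center, walk[j]))
    return pairs

# Hypothetical walk of three nodes with a window of one step.
pairs = skipgram_pairs([["u", "a", "b"]], window=1)
print(pairs)
# [('u', 'a'), ('a', 'u'), ('a', 'b'), ('b', 'a')]
```

Nodes that co-occur frequently within the window are pushed toward similar embedding vectors, which is why the design of the random walk determines which notion of similarity the embedding captures.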

2.2. Clique

Clique structures [32] are local, fully connected subgraphs within a network and are one of the fundamental components of complex networks. They are widely found in real networks such as protein–protein interaction networks, social networks, research collaboration networks, and drug–drug interaction networks. Clique structures serve as important tools for studying network characteristics and capturing interactions among multiple entities. A Clique is a special type of motif that differs from typical motifs in that it refers specifically to a fully connected structure among nodes. Figure 3 illustrates 3- and 4-Cliques.
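
To make the notion of Cliques around a center node concrete, the following brute-force sketch enumerates the k-node Cliques that contain a given node. The function name `cliques_containing` and the toy graph are hypothetical, and the enumeration is written for clarity rather than efficiency.

```python
from itertools import combinations

def cliques_containing(adj, center, k):
    """Return all k-node Cliques (as frozensets) that contain `center`."""
    found = []
    for others in combinations(sorted(adj[center]), k - 1):
        # Every pair among the chosen neighbors must also be linked.
        if all(b in adj[a] for a, b in combinations(others, 2)):
            found.append(frozenset((center, *others)))
    return found

# Hypothetical toy graph: nodes 0-3 are fully connected, node 4 hangs off node 0.
edge_list = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3), (0, 4)]
adj = {}
for u, v in edge_list:
    adj.setdefault(u, set()).add(v)
    adj.setdefault(v, set()).add(u)

print(len(cliques_containing(adj, 0, 3)))  # 3
print(len(cliques_containing(adj, 0, 4)))  # 1
```

Node 0 lies in three triangles and one 4-Clique, while node 4, which has a single neighbor, lies in none.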

Figure 3.

Illustration of 3- and 4-Clique structures when the green node is the center node.

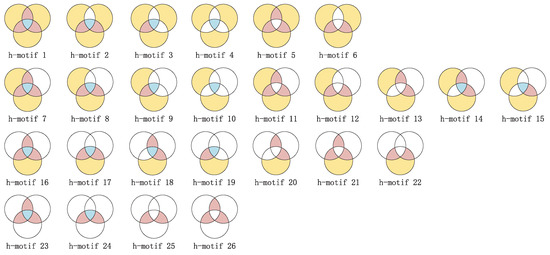

2.3. Hypermotif

In traditional networks, motifs are often used to directly characterize higher-order interactions between nodes, while in hypernetworks, hypermotifs [33] study the interactions between hyperedges. Taking into account the time complexity, we focus on studying third-order hypermotifs in this context. Based on the overlapping relationships among three hyperedges, we can categorize the interactions between these three hyperedges into 26 different types, as illustrated in Figure 4.

Figure 4.

Third-order hypermotifs. Yellow represents the set of nodes unique to one hyperedge, pink indicates the set of nodes shared by two adjacent hyperedges, blue represents the set of nodes shared by all three hyperedges, and a blank area indicates that the corresponding set contains no nodes.

3. Approach

First, we examine how considering only two fully connected motifs (Cliques), among the nine motifs introduced by LEMON, affects the algorithm’s experimental performance. Subsequently, we introduce a novel representation learning method called HMRLH, which builds upon LEMON and uses hypergraphs to relate connectivity and structural similarity. Finally, we introduce a Depth and Breadth Motif Random Walk method to capture node sequences and apply it to both the LEMON and HMRLH methods.

3.1. LECON

LEMON extracts the structural information of nodes through motifs. However, it introduces nine types of motifs, including second-order, third-order, and fourth-order motifs. More motifs imply higher time complexity, which may make it unsuitable for large-scale networks. Additionally, the use of multiple motifs in learning node features can potentially lead to overfitting.

Based on the aforementioned issues, we conducted a comparative study by considering only two fully connected motifs (Cliques) among the nine motifs introduced by LEMON. This modified approach is referred to as LECON (Learning Embedding based on Clique Of the Network).

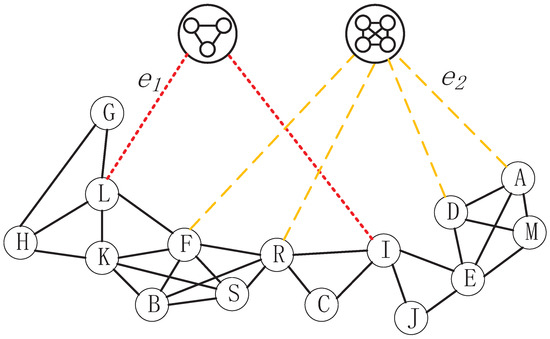

As shown in Figure 5, LECON introduces only 3-Cliques and 4-Cliques as supernodes. If a node v is part of one of these Cliques, a structural edge is established between node v and the respective Clique supernode. In the "node–edge" network, node L is connected to nodes G, H, K, and F. Nodes L, G, and H form a 3-Clique, so a structural edge is generated between node L and the 3-Clique supernode. Similarly, nodes A, D, M, and E constitute a 4-Clique, so a structural edge is generated between node A and the 4-Clique supernode.

Figure 5.

Schematic of the reconstructed network after incorporating Cliques as supernodes. The red dashed lines represent a 3-Clique structure formed by the node and its neighboring nodes, which results in an edge, referred to as a structural edge, connecting the node to the 3-Clique supernode. Similarly, the yellow dashed lines indicate a 4-Clique structure formed by the node and its neighboring nodes, which generates a structural edge between the node and the 4-Clique supernode.

3.2. HMRLH

While the LEMON algorithm considers the motif structure of traditional "node–edge"-structured networks, such networks can only represent pairwise relationships between nodes. When higher-order interactions are present, "node–edge"-structured networks exhibit limited expressiveness. To enhance the expressiveness of the model, we propose using a hypergraph to model the network and introducing hypermotifs as supernodes. However, in the third order alone, there are 26 types of hypermotifs, and treating all of them as supernodes would significantly increase the model’s time complexity. The findings in Section 4.4.1 show that when LECON considers only two fully connected motifs, there is minimal loss, and in some cases even an improvement, in modeling effectiveness, while time complexity is reduced. Thus, to address the challenge of high time complexity, we categorize the 26 hypermotifs into two types: open and closed hypermotifs.

In this study, we introduce mapped third-order hypermotifs as supernodes in the hypernetwork to construct the HMRLH (HyperMotif Representation Learning on Hypergraph) model. We categorize a third-order hypermotif as closed when its three hyperedges share one or more common nodes, or when every pair of the three hyperedges has a common node. Conversely, if only one of the three hyperedges shares a common node with each of the other two, while those two share no node with each other, we consider the three hyperedges to form an open hypermotif.

As shown in Figure 6, three hyperedges share the common node L, so we map them to a closed hypermotif. Furthermore, a structural edge is generated between each node that belongs to these hyperedges and the closed hypermotif supernode. For another triple of hyperedges in the figure, one hyperedge shares a common node with each of the other two, while those two do not share a common node. This triple is mapped to an open hypermotif, as described above.
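
The mapping rule can be sketched as a small classification function. The name `classify_triple` and the toy hyperedges are assumptions for illustration; the rule itself follows the closed and open definitions given in this section, with any triple that is not connected through overlaps left unclassified.

```python
def classify_triple(e1, e2, e3):
    """Return 'closed', 'open', or None for three hyperedges given as sets."""
    overlaps = [bool(e1 & e2), bool(e1 & e3), bool(e2 & e3)]
    # A node common to all three hyperedges also makes every pair overlap,
    # so checking the three pairwise overlaps covers both closed cases.
    if all(overlaps):
        return "closed"
    # Exactly two overlapping pairs: one hyperedge touches both of the
    # others, and those two share no node with each other.
    if sum(overlaps) == 2:
        return "open"
    return None

print(classify_triple({"L", "G"}, {"L", "H"}, {"L", "K"}))  # closed
print(classify_triple({"A", "B"}, {"B", "C"}, {"C", "D"}))  # open
```

Collapsing the 26 third-order hypermotifs into these two classes means that only the pattern of pairwise overlaps matters, not the size of each overlap region.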

Figure 6.

Schematic of the reconstructed network modeled using a hypergraph with hypermotifs introduced as supernodes. The red dashed lines represent a closed hypermotif formed by the hyperedge containing the node and the surrounding hyperedges, which results in an edge, referred to as a structural edge, connecting the node to the closed hypermotif supernode. Similarly, the yellow dashed lines indicate an open hypermotif formed by the hyperedge containing the node and the surrounding hyperedges, which generates a structural edge between the node and the open hypermotif supernode.

Unlike LEMON, in the HMRLH model, the weight of the structural edge between node u and a supernode is defined as shown below:

where the count used in the formula is the number of hypermotifs of the i-th type that contain a given hyperedge.

As can be seen from Figure 6, three hyperedges construct a closed hypermotif; hence, the closed hypermotif count of each of these hyperedges is equal to 1. For node H, which belongs to only one of these hyperedges, the count is equal to 1. However, for node L, which belongs to all three hyperedges, the count is equal to the sum of the counts of the three hyperedges.

3.3. Depth and Breadth Motif Random Walk

The motif-step random walk used by LEMON considers only whether two nodes are neighbors when the walk moves between normal nodes. Previous work, such as node2vec [23], demonstrated that sequences obtained by combining depth-first and breadth-first exploration better reflect the structural properties of the nodes. Therefore, inspired by node2vec, this work introduces parameters p and q into the motif-step random walk to control the walk between neighboring nodes. Starting from node u, the random walk proceeds according to the following formula:

where c indicates the probability that the walk travels from a normal node to a supernode, while the probability of traveling on a normal edge is equal to 1 - c. When traveling on normal edges, that is, when moving to a first-order neighboring node in the network at the next step, the probability of going from node v to x is weighted by the search bias in the formula below. We first assume that the random walk has just traversed the edge (t, v), and then decide which node x to walk to next.

$$\alpha_{pq}(t, x) = \begin{cases} 1/p, & d_{tx} = 0 \\ 1, & d_{tx} = 1 \\ 1/q, & d_{tx} = 2 \end{cases}$$

where $d_{tx}$ represents the distance between node t and the next node x, and p and q are two hyperparameters. The parameter p controls the likelihood of revisiting the previous node t. If p is set to a high value (> max(q, 1)), we are less likely to sample an already visited node in the next step. The parameter q controls the preference for BFS-like or DFS-like walking. If q > 1, the random walk is biased towards the first-order neighbors of node t, approximating BFS, so that sampling focuses on nodes within a small local range. Conversely, if q < 1, the walk is more likely to visit second-order neighbors of node t, that is, nodes reached through node v, approximating DFS.
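
The walking rule can be sketched as follows. This is a simplified illustration and not the authors' implementation: the function names, the dictionary `struct` of structural-edge weights, the way the walk leaves a supernode, and the omission of supernodes from the output sequence are all assumptions, and the bias for normal edges follows the standard node2vec scheme based on the distance between the previous node t and the candidate x.

```python
import random

def search_bias(adj, t, x, p, q):
    """node2vec-style bias for stepping to x when the previous node was t."""
    if x == t:           # distance 0: return to the previous node
        return 1.0 / p
    if x in adj[t]:      # distance 1: stay close to t (BFS-like)
        return 1.0
    return 1.0 / q       # otherwise: move away from t (DFS-like)

def next_node(adj, struct, t, v, p, q, c, rng):
    """One step of the walk from v, having arrived from t (t may be None)."""
    # With probability c, hop through a supernode attached to v, if any.
    if struct.get(v) and rng.random() < c:
        supers = list(struct[v])
        s = rng.choices(supers, weights=[struct[v][m] for m in supers])[0]
        # Assumption: leave the supernode towards a node attached to it,
        # chosen in proportion to the structural-edge weight.
        members = [u for u in struct if s in struct[u]]
        return rng.choices(members, weights=[struct[u][s] for u in members])[0]
    # Otherwise walk on a normal edge, weighted by the search bias.
    nbrs = sorted(adj[v])
    if t is None:
        return rng.choice(nbrs)
    weights = [search_bias(adj, t, x, p, q) for x in nbrs]
    return rng.choices(nbrs, weights=weights)[0]

def walk(adj, struct, start, length, p, q, c, seed=0):
    """Generate one node sequence of the given length."""
    rng = random.Random(seed)
    seq, t, v = [start], None, start
    while len(seq) < length:
        x = next_node(adj, struct, t, v, p, q, c, rng)
        seq.append(x)
        t, v = v, x
    return seq

# Hypothetical toy network: a triangle 0-1-2 with a pendant node 3,
# and one supernode "tri" attached to the triangle's nodes.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
struct = {0: {"tri": 1.0}, 1: {"tri": 1.0}, 2: {"tri": 1.0}}
print(walk(adj, struct, start=0, length=10, p=1.0, q=0.5, c=0.2, seed=1))
```

Setting c to 0 reduces the sketch to a plain node2vec-style walk on the normal edges, which makes the separate roles of c (structural similarity through supernodes) and p, q (connectivity through neighbors) easy to inspect.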

3.4. LEMON-DB and HMRLH-DB

In order to verify the effectiveness of our proposed Depth and Breadth Motif Random Walk method, we incorporated it into the LEMON and HMRLH methods for experimental validation. These modified methods are referred to as LEMON-DB and HMRLH-DB, respectively.

4. Results

4.1. Datasets

Since the method proposed in this paper places more emphasis on the higher-order structure within the network, we chose networks with varying densities for separate comparative experiments. We utilized the following datasets for conducting our experiments:

- Email-Enron [34]: Each hyperedge corresponds to an email, with the nodes within the hyperedge representing Enron employees who either sent or received the email. The dataset was downloaded from https://snap.stanford.edu/data/email-Enron.html (accessed on 27 May 2023).

- TCM: This dataset comprises 100 ancient classical prescriptions provided by the State Administration of Traditional Chinese Medicine (SATCM) in China.

- TFD: The dataset consists of formulas contained in the classical Chinese medical text “Treatise on Febrile Diseases”.

- SPGC: This dataset is sourced from the first 25 volumes of the classical Chinese medical text “Synopsis of Prescriptions of the Golden Chamber”.

Among them, the TCM, TFD, and SPGC datasets were constructed by our team members who collected and organized relevant documents.

As shown in Table 1, we present some statistics of the datasets.

Table 1.

Statistics of the datasets: the number of nodes in the network, the number of edges in the “node–edge” network, and the number of hyperedges in the hypernetwork.

It can be observed that the Email-Enron dataset comprises 143 nodes, 2585 edges, 1542 hyperedges, and an average degree of 36.13. These statistics underscore the higher network density and the more pronounced higher-order structure within the Email-Enron dataset. In contrast, we utilize a TCM dataset characterized by a greater number of nodes, fewer edges, half the average degree of the Email-Enron dataset, relatively low network density, and fewer higher-order features. In addition, to further investigate the impact of the network’s higher-order structure on algorithm performance, we introduced the TFD and SPGC datasets. Compared with the TCM dataset, these two datasets increase the network’s average node degree by adding more nodes and edges, respectively, resulting in denser networks with more prominent higher-order features.

4.2. Evaluation Metrics

This paper evaluates the performance of the algorithm using the area under the ROC curve (AUC) and precision. The AUC is employed to assess the overall performance of the algorithm, whereas precision is focused on evaluating the accuracy of the top-ranked edges in the prediction results.
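
The two metrics can be sketched as follows, assuming the definitions commonly used in link prediction: AUC is the fraction of comparisons in which a held-out link receives a higher score than a nonexistent link (ties count one half), and precision is the share of the top-L ranked candidate pairs that are held-out links. The function names and the toy scores are hypothetical.

```python
def auc(test_scores, nonexistent_scores):
    """Fraction of (test, nonexistent) score pairs ranked correctly."""
    wins = 0.0
    for s_pos in test_scores:
        for s_neg in nonexistent_scores:
            if s_pos > s_neg:
                wins += 1.0
            elif s_pos == s_neg:
                wins += 0.5
    return wins / (len(test_scores) * len(nonexistent_scores))

def precision_at(scored_pairs, test_links, top_l):
    """Share of the top_l highest-scored pairs that are held-out links."""
    ranked = sorted(scored_pairs, key=lambda item: item[1], reverse=True)
    hits = sum(1 for pair, _ in ranked[:top_l] if pair in test_links)
    return hits / top_l

# Hypothetical scores for two held-out links and three nonexistent links.
print(auc([0.9, 0.4], [0.5, 0.4, 0.1]))  # 0.75

scored = [(("a", "b"), 0.9), (("a", "c"), 0.5),
          (("b", "c"), 0.4), (("c", "d"), 0.1)]
print(precision_at(scored, {("a", "b"), ("b", "c")}, top_l=2))  # 0.5
```

AUC depends on the whole ranking, whereas precision depends only on the head of the ranking, which is why the two metrics can disagree on the same prediction list.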

4.3. Experimental Environment

The experiments in this paper were conducted on a Windows 10 operating system with 16 GB of RAM and an i7-7600U 2.90 GHz CPU. The programming language used was Python, version 3.8.8. The construction of the adjacency matrix was implemented using NumPy, version 1.19.5, and the traditional network was built using NetworkX, version 2.3.

4.4. Experimental Results

In order to better validate the performance of the method proposed in this paper, we conducted five sets of comparison experiments with other related algorithms on each of the four datasets mentioned above. We utilize cosine similarity to measure the similarity between nodes and rank the predicted edges by score.
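
The scoring step can be sketched as follows. The toy embedding vectors and the function names are hypothetical; the sketch only shows how cosine similarity between learned node vectors turns into a ranked list of candidate links.

```python
import math
from itertools import combinations

def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def rank_candidates(embeddings, known_edges):
    """Rank unobserved node pairs by the cosine similarity of their vectors."""
    candidates = [
        (u, v)
        for u, v in combinations(sorted(embeddings), 2)
        if (u, v) not in known_edges
    ]
    return sorted(
        candidates,
        key=lambda e: cosine(embeddings[e[0]], embeddings[e[1]]),
        reverse=True,
    )

# Hypothetical two-dimensional embeddings for three nodes.
embeddings = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
print(rank_candidates(embeddings, known_edges={("a", "b")}))
# [('b', 'c'), ('a', 'c')]
```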

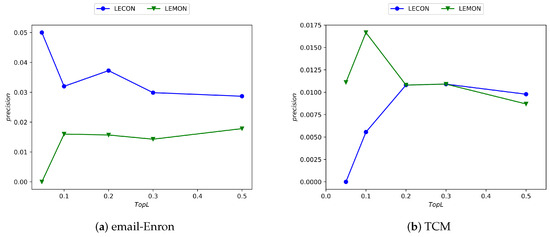

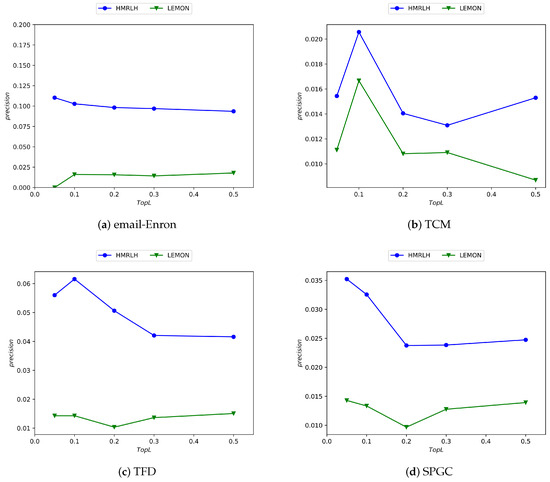

4.4.1. LECON and LEMON

LEMON incorporates nine types of 2-, 3-, and 4-node motifs as supernodes. To address time complexity concerns, we propose the LECON algorithm, which retains only the two fully connected structures among them. The performance of both algorithms on the four datasets is shown below.

As depicted in Figure 7, the horizontal axis corresponds to the percentage of top-L-ranked edges by precision score, while the vertical axis indicates the precision value. It is evident from the figure that the LECON algorithm exhibits improved performance across nearly all datasets compared with the LEMON algorithm, despite the reduction in time complexity.

Figure 7.

Experimental results comparing the LECON and LEMON methods across four datasets.

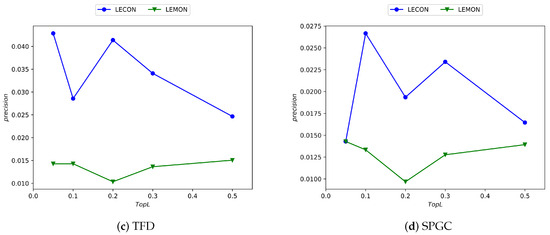

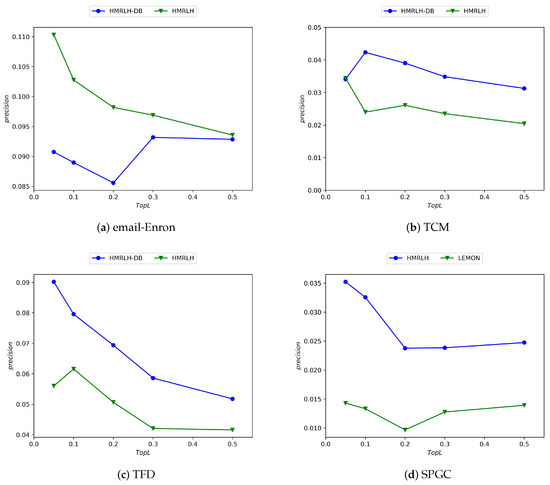

4.4.2. LEMON-DB and LEMON

To validate the effectiveness of the Depth and Breadth Motif Random Walk method, we incorporated it into the LEMON algorithm and conducted comparative experiments. The experimental results are as follows.

Figure 8 clearly illustrates that the performance of the LEMON-DB method, following the incorporation of the Depth and Breadth Motif Random Walk, surpasses that of the original LEMON algorithm. This demonstrates that the Depth and Breadth Motif Random Walk method effectively captures the network node features.

Figure 8.

Experimental results comparing the LEMON-DB and LEMON methods across four datasets.

4.4.3. HMRLH and LEMON

In this section, to validate the effectiveness of hypergraph modeling in characterizing node features, we compare the HMRLH method, which uses hypergraphs and hypermotifs, with the LEMON method. The experimental results are presented in Figure 9.

Figure 9.

Experimental results comparing the HMRLH and LEMON methods across four datasets.

From the experimental results, it can be concluded that the HMRLH method, which incorporates hypergraph and hypermotif modeling, outperforms the LEMON algorithm on the datasets used in this paper. This further underscores the advantages of hypergraph modeling in capturing higher-order interaction structures compared with traditional network structures.

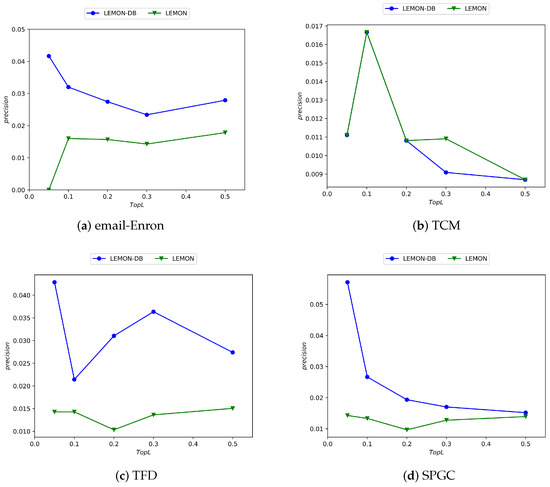

4.4.4. HMRLH-DB and HMRLH

Similarly, to further validate the performance of the Depth and Breadth Motif Random Walk method, we introduced it into HMRLH, resulting in the HMRLH-DB approach. Below are the comparative experimental results between HMRLH-DB and HMRLH.

From Figure 10, it can be observed that only in the Email-Enron dataset is the performance of the HMRLH-DB method slightly inferior to that of HMRLH. However, in the other three datasets, the HMRLH-DB method outperforms HMRLH. This further demonstrates the effectiveness and feasibility of the Depth and Breadth Motif Random Walk method.

Figure 10.

Experimental results comparing the HMRLH-DB and HMRLH methods across four datasets.

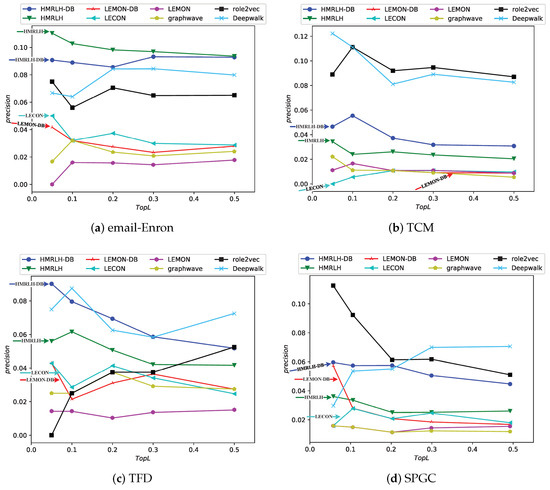

4.4.5. Comparison with Baselines

In this section, we compare the four proposed methods with the following baselines.

- LEMON [24]: The LEMON method, which constructs a network by introducing nine types of motifs as supernodes and then learns node features through random walks, serves as the primary reference approach in our work.

- DeepWalk [21]: DeepWalk extends recent developments in language modeling and unsupervised feature learning, originally applied to word sequences, to the domain of graphs. It leverages local information extracted from truncated random walks, treating these walks as analogous to sentences, to acquire latent representations.

- GraphWave [35]: GraphWave captures the network neighborhood of each node through a low-dimensional embedding, utilizing heat wavelet diffusion patterns.

- Role2Vec [36]: The Role2Vec framework employs the versatile concept of attributed random walks, providing a foundation for extending current techniques, such as DeepWalk, node2vec, and numerous others, that make use of random walks.

The experimental results are depicted in Figure 11.

Figure 11.

Experimental results of the proposed methods compared with state-of-the-art baselines on four datasets in this paper.

From the experimental results, it can be observed that in the Email-Enron and TFD datasets, the performance of the proposed HMRLH and its related algorithms is superior to the compared baselines. In the SPGC dataset, its performance is slightly inferior to that of Role2Vec. However, the performance in the TCM dataset is not as promising, which may be attributed to the fact that the HMRLH algorithm proposed in this paper primarily focuses on learning node features by considering higher-order structural information within the network. As indicated by the previous analysis, the TCM dataset lacks prominent higher-order structural characteristics. Consequently, the HMRLH algorithm does not outperform other methods when it comes to learning node features on the TCM dataset.

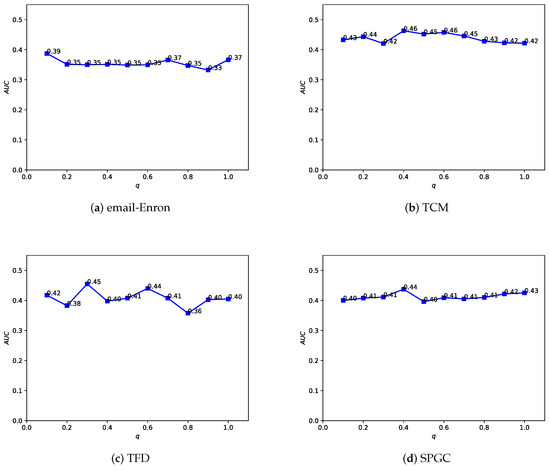

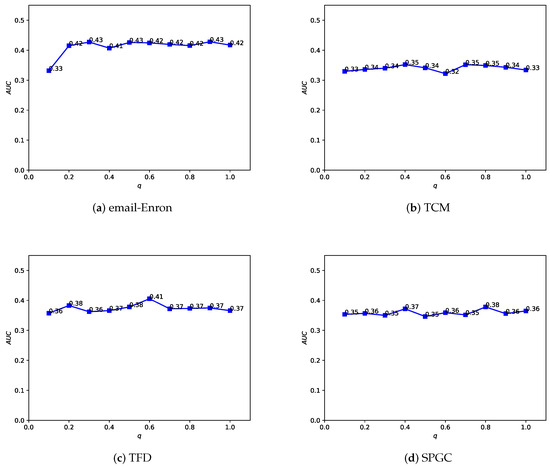

4.5. Parameter Sensitivity

In the Depth and Breadth Motif Random Walk method, we introduced two hyperparameters, p and q. Parameter p controls the probability of returning to the previous node; following the original paper, we keep p fixed. Parameter q controls whether the random walk on the regular network leans more toward BFS (breadth-first search) or DFS (depth-first search). BFS tends to emphasize learning the structural similarity of nodes in the network, whereas DFS places more emphasis on reflecting node connectivity during traversal. In the HMRLH method, the structural similarity of nodes is already represented by the supernodes. Therefore, during the random walk, we focus more on learning the connectivity of nodes. With the other parameters fixed, we conducted a sensitivity analysis on q values within the range of 0 to 1, as shown in Figure 12 and Figure 13. The algorithm’s performance changes as q varies, and the experimental results indicate that the algorithm performs relatively well when q is set to 0.4 or 0.6.

Figure 12.

AUC of the LEMON-DB method as the hyperparameter q varies.

Figure 13.

AUC of the HMRLH-DB method as the hyperparameter q varies.

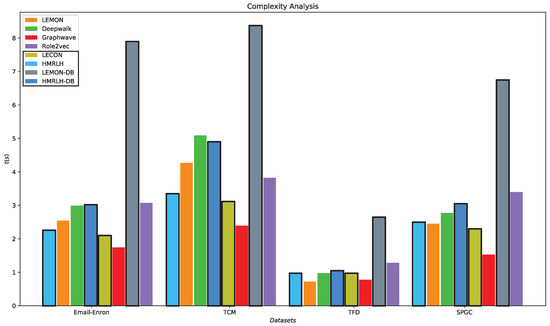

4.6. Time Complexity Analysis

The HMRLH method consists of two steps. First, it extracts hypermotifs from the network and reconstructs the network using these hypermotifs as supernodes. Then, it utilizes Depth and Breadth Motif Random Walk to obtain node sequences and learn node embeddings. The algorithm for extracting hypermotifs from the network is as follows (Algorithm 1):

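Algorithm 1: Closed and Opened Hypermotif Count

Input: hypergraph G = (V, E)

Output: the number of open hypermotifs and closed hypermotifs corresponding to each node

- For each hyperedge in E, enumerate the pairs of hyperedges adjacent to it.

- If the two hyperedges of a pair are distinct, classify the triple formed with the current hyperedge as a closed or an open hypermotif (Section 3.2) and update the corresponding count of the hyperedge.

- For each node in V and each hyperedge in E, if the node belongs to the hyperedge, add the counts of the hyperedge to the counts of the node.

- Return the closed and open hypermotif counts of each node.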

G represents the input network, and V and E represent the sets of nodes and hyperedges, respectively. For each hyperedge, the algorithm records the number of closed hypermotifs and the number of open hypermotifs formed by that hyperedge. For each node, it records the number of closed hypermotifs and the number of open hypermotifs formed by the hyperedges containing that node.
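
The counting procedure can be sketched in a few lines. This is an illustrative brute-force version that enumerates all triples of hyperedges instead of using adjacency vectors, so it does not have the complexity of Algorithm 1; the function name `count_hypermotifs` and the toy hypergraph are assumptions.

```python
from itertools import combinations

def count_hypermotifs(nodes, hyperedges):
    """Return per-node closed and open hypermotif counts.

    `hyperedges` is a list of sets of nodes. Each hyperedge first collects
    the number of closed and open triples it takes part in, and each node
    then sums the counts of the hyperedges that contain it.
    """
    m = len(hyperedges)
    closed_e = [0] * m
    open_e = [0] * m
    for i, j, k in combinations(range(m), 3):
        overlaps = [
            bool(hyperedges[i] & hyperedges[j]),
            bool(hyperedges[i] & hyperedges[k]),
            bool(hyperedges[j] & hyperedges[k]),
        ]
        if all(overlaps):
            target = closed_e      # every pair overlaps: closed
        elif sum(overlaps) == 2:
            target = open_e        # one hyperedge bridges the other two: open
        else:
            continue               # the triple is not connected
        for idx in (i, j, k):
            target[idx] += 1
    closed_v = {v: 0 for v in nodes}
    open_v = {v: 0 for v in nodes}
    for v in nodes:
        for idx, he in enumerate(hyperedges):
            if v in he:
                closed_v[v] += closed_e[idx]
                open_v[v] += open_e[idx]
    return closed_v, open_v

# Hypothetical hypergraph: three hyperedges that all contain node L.
toy_edges = [{"L", "G"}, {"L", "H"}, {"L", "K"}]
closed_v, open_v = count_hypermotifs(["G", "H", "K", "L"], toy_edges)
print(closed_v)  # {'G': 1, 'H': 1, 'K': 1, 'L': 3}
```

In this toy case, node H belongs to one hyperedge of the closed hypermotif and receives a count of 1, while node L belongs to all three hyperedges and receives the sum of their counts.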

Analyzing Algorithm 1, we can see that the time complexity of extracting hypermotifs is determined by the number of hyperedges, the average length L of the adjacency vectors, and the number of nodes in the network. In the embedding phase, the Depth and Breadth Motif Random Walk introduces two additional parameters, p and q, for calculating the probabilities of moving to each neighboring node when selecting the next node. Its time complexity is determined by the number of nodes, the number n of random walks, and the length l of each random walk. The runtime of each algorithm in the embedding phase on the various datasets is illustrated in Figure 14.

Figure 14.

Comparison chart of the runtime for each algorithm across the four datasets.

From Figure 14, it can be observed that the runtime of the HMRLH method is generally slightly lower than that of the LEMON method. This is likely because the HMRLH method introduces only two types of supernodes, resulting in a relatively smaller selection when generating random walk sequences. On the other hand, algorithms LEMON-DB and HMRLH-DB, which incorporate the Depth and Breadth Motif Random Walk method, have relatively longer runtimes. This is primarily due to the higher time complexity of the Depth and Breadth Motif Random Walk method, which requires more time to generate walk sequences. However, in terms of algorithmic complexity, the HMRLH method, which incorporates hypergraph modeling, exhibits relatively lower time complexity compared with all the baselines.

5. Conclusions

In this paper, to address the high time complexity and potential overfitting of the LEMON method, we propose a network representation learning approach based on the Clique structure, named LECON. The experimental results indicate that, compared with the LEMON method, the LECON approach not only reduces time complexity but also enhances link prediction performance. Subsequently, to address the limitation that “node–edge”-structured networks may not effectively capture group relationships, we model the data using a hypernetwork. Introducing hypermotifs as supernodes, we propose the HMRLH method. Through experiments on various datasets, we validate that hypernetwork modeling exhibits certain advantages in link prediction compared with traditional “node–edge”-structured modeling. Finally, we introduce a motif random walk method that considers both depth-first and breadth-first strategies. We incorporate this method into the LEMON and HMRLH approaches and compare the resulting methods with the originals. The experimental results demonstrate that the motif random walk introduced in the LEMON-DB and HMRLH-DB methods effectively enhances link prediction accuracy.

6. Discussion

This paper introduces the LECON and HMRLH methods, which are based on the Clique structure and the hypergraph, respectively. Building upon these methods, we further incorporate a depth–breadth motif random walk approach to enhance the link prediction performance of the original methods. Compared with traditional methods, we take a more comprehensive approach to the impact of higher-order characteristics in the network on node features, and we validate this in our experiments. However, in the HMRLH method, the random walk approach we employ still samples between individual nodes. Exploring a random walk method that better captures hyperedge relationships remains an area for further investigation. Furthermore, while the Depth and Breadth Motif Random Walk method proposed in this paper enhances experimental performance to some extent, it also comes with a higher time complexity. This limits the applicability of such random walk methods to large-scale networks.

Author Contributions

The research was planned by K.F., G.Y. and H.L. The data acquisition was carried out by K.F., H.L. and W.C. The data analysis was conducted by K.F. and J.L. The manuscript was written by K.F. and W.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant nos. 62062049 and 62366028), the Natural Science Foundation for Young Scientists of Gansu Province (Grant no. 22JR5RA595), the Gansu Provincial Science and Technology Plan Project (Grant no. 21ZD8RA008), the Scientific Research and Innovation Fund Project of Gansu University of Chinese Medicine (Grant no. 2021KCYB-10), the Special Funds for Guiding Local Scientific and Technological Development by the Central Government (Grant no. 22ZY1QA005), and the Support Project for Youth Doctor in Colleges and Universities of Gansu Province (Grant no. 2023QB-038).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

Thanks to the reviewers and editors for their valuable comments and suggestions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zuo, X.N.; He, Y.; Betzel, R.F.; Colcombe, S.; Sporns, O.; Milham, M.P. Human connectomics across the life span. Trends Cogn. Sci. 2017, 21, 32–45.

- Lü, L.; Chen, D.; Ren, X.L.; Zhang, Q.M.; Zhang, Y.C.; Zhou, T. Vital nodes identification in complex networks. Phys. Rep. 2016, 650, 1–63.

- Wachs-Lopes, G.A.; Rodrigues, P.S. Analyzing natural human language from the point of view of dynamic of a complex network. Expert Syst. Appl. 2016, 45, 8–22.

- Aziz, F.; Slater, L.T.; Bravo-Merodio, L.; Acharjee, A.; Gkoutos, G.V. Link prediction in complex network using information flow. Sci. Rep. 2023, 13, 14660.

- Del Sol, A.; Fujihashi, H.; O’Meara, P. Topology of small-world networks of protein–protein complex structures. Bioinformatics 2005, 21, 1311–1315.

- Guimera, R.; Danon, L.; Diaz-Guilera, A.; Giralt, F.; Arenas, A. Self-similar community structure in a network of human interactions. Phys. Rev. E 2003, 68, 065103.

- Hua, Z.; Jing, X.; Martínez, L. Consensus reaching for social network group decision making with ELICIT information: A perspective from the complex network. Inf. Sci. 2023, 627, 71–96.

- Aiello, L.M.; Barrat, A.; Schifanella, R.; Cattuto, C.; Markines, B.; Menczer, F. Friendship prediction and homophily in social media. ACM Trans. Web (TWEB) 2012, 6, 1–33.

- Buccafurri, F.; Lax, G.; Nocera, A.; Ursino, D. Discovering missing me edges across social networks. Inf. Sci. 2015, 319, 18–37.

- Ravasz, E.; Somera, A.L.; Mongru, D.A.; Oltvai, Z.N.; Barabási, A.-L. Hierarchical Organization of Modularity in Metabolic Networks. Science 2002, 297, 1551–1555.

- Adamic, L.A.; Adar, E. Friends and neighbors on the Web. Soc. Netw. 2003, 25, 211–230.

- Zhou, T.; Lv, L.; Zhang, Y.C. Predicting missing links via local information. Eur. Phys. J. B 2009, 71, 623–630.

- Le, T.; Le, N.; Le, B. Knowledge graph embedding by relational rotation and complex convolution for link prediction. Expert Syst. Appl. 2023, 214, 119122.

- Cai, R.; Chen, X.; Fang, Y.; Wu, M.; Hao, Y. Dual-dropout graph convolutional network for predicting synthetic lethality in human cancers. Bioinformatics 2020, 36, 4458–4465.

- Abuoda, G.; De Francisci Morales, G.; Aboulnaga, A. Link Prediction via Higher-Order Motif Features. In Proceedings of the European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases, Wurzburg, Germany, 16–20 September 2019.

- Zhang, Z.; Liu, Q.; Wang, H.; Lu, C.; Lee, C. Motif-based Graph Self-Supervised Learning for Molecular Property Prediction. Adv. Neural Inf. Process. Syst. 2021, 34, 15870–15882.

- Sun, B.J.; Shen, H.; Gao, J.; Ouyang, W.; Cheng, X. A non-negative symmetric encoder-decoder approach for community detection. In Proceedings of the 26th ACM International Conference on Information and Knowledge Management, Singapore, 6–10 November 2017.

- Ye, F.; Chen, C.; Zheng, Z. Deep autoencoder-like nonnegative matrix factorization for community detection. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018.

- Berahmand, K.; Nasiri, E.; Forouzandeh, S.; Li, Y. A preference random walk algorithm for link prediction through mutual influence nodes in complex networks. J. King Saud Univ. Comput. Inf. Sci. 2022, 34, 5375–5387.

- Nasiri, E.; Berahmand, K.; Li, Y. A new link prediction in multiplex networks using topologically biased random walks. Chaos, Solitons Fractals 2021, 151, 111230.

- Perozzi, B.; Al-Rfou, R.; Skiena, S. DeepWalk: Online Learning of Social Representations. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 24–27 August 2014.

- Narayanan, A.; Chandramohan, M.; Venkatesan, R.; Chen, L.; Jaiswal, S. graph2vec: Learning Distributed Representations of Graphs. arXiv 2017, arXiv:1707.05005.

- Grover, A.; Leskovec, J. node2vec: Scalable Feature Learning for Networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016.

- Shao, P.; Yang, Y.; Xu, S.; Wang, C. Network Embedding via Motifs. ACM Trans. Knowl. Discov. Data 2022, 16, 1–20.

- Estrada, E.; Rodríguez-Velázquez, J.A. Subgraph centrality and clustering in complex hyper-networks. Phys. A Stat. Mech. Its Appl. 2006, 364, 581–594.

- Ma, T.; Guo, J. Industry-University-Research Cooperative Hypernetwork for Applying Patent Based on Weighted Hypergraph: A Case of ICT Industry from Shanghai. Syst. Eng. 2018, 36, 13.

- Zhang, Z.; Wei, R.; Feng, S.; Wu, Q.; Mei, Y.; Xu, L. Construction and Empirical Study of Dynamic Optimal Evolution Model for Urban Rail Transit Hyper Networks Based on Allometric Growth Relationship. Authorea Prepr. 2023.

- Sun, X.; Cheng, H.; Liu, B.; Li, J.; Chen, H.; Xu, G.; Yin, H. Self-supervised hypergraph representation learning for sociological analysis. IEEE Trans. Knowl. Data Eng. 2023, 35, 11860–11871.

- Milo, R.; Shen-Orr, S.; Itzkovitz, S.; Kashtan, N.; Chklovskii, D.; Alon, U. Network motifs: Simple building blocks of complex networks. Science 2002, 298, 824–827.

- Zhao, H.; Zhou, Y.; Song, Y.; Lee, D.L. Motif enhanced recommendation over heterogeneous information network. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

- Sizemore, A.E.; Giusti, C.; Kahn, A.; Vettel, J.M.; Betzel, R.F.; Bassett, D.S. Cliques and cavities in the human connectome. J. Comput. Neurosci. 2018, 44, 115–145.

- Lee, G.; Ko, J.; Shin, K. Hypergraph Motifs: Concepts, Algorithms, and Discoveries. In Proceedings of the VLDB Endowment, Online, 31 August–4 September 2020; pp. 2256–2269.

- Benson, A.R.; Abebe, R.; Schaub, M.T.; Jadbabaie, A.; Kleinberg, J. Simplicial closure and higher-order link prediction. Proc. Natl. Acad. Sci. USA 2018, 115, E11221–E11230.

- Donnat, C.; Zitnik, M.; Hallac, D.; Leskovec, J. Learning structural node embeddings via diffusion wavelets. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, London, UK, 19–20 August 2018.

- Ahmed, N.K.; Rossi, R.; Lee, J.B.; Willke, T.L.; Zhou, R.; Kong, X.; Eldardiry, H. Learning role-based graph embeddings. arXiv 2018, arXiv:1802.02896.


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).