Abstract

Millimeter wave (mmWave) and terahertz (THz) massive MIMO architectures are pivotal in the advancement of mobile communications. These systems conventionally utilize codebooks to facilitate initial connection and to manage information transmission tasks. Traditional codebooks, however, are typically composed of numerous single-lobe beams, thus incurring substantial beam training overhead. While neural network-based approaches have been proposed to mitigate the beam training load, they sometimes fail to adequately consider the minority users dispersed across various regions. The fairness of the codebook coverage relies on addressing this problem. Therefore, we propose an imbalanced learning (IL) methodology for beam codebook construction, explicitly designed for scenarios characterized by an imbalanced user distribution. Our method begins with a pre-clustering phase, where user channels are divided into subsets based on their power response to combining vectors across distinct subareas. Then, each subset is refined by a dedicated sub-model, which contributes to the global model within each IL iteration. To facilitate the information exchange among sub-models during global updates, we introduce the focal loss mechanism. Our simulation results substantiate the efficacy of our IL framework in enhancing the performance of mmWave and THz massive MIMO systems under the conditions of imperfect channel state information and imbalanced user distribution.

1. Introduction

Millimeter wave (mmWave) and terahertz (THz) multiple-input multiple-output (MIMO) communication systems are crucial in driving the progress of fifth generation (5G) mobile communication and future Beyond 5G systems. These systems incorporate large-scale antenna arrays, providing notable beamforming gains and adequate signal reception capability. Nonetheless, fully digital transceiver architectures, which require an RF chain for each antenna, are limited by the cost and power consumption of high-frequency mixed-signal circuits [1]. As a result, mmWave and THz systems frequently adopt either fully analog or hybrid analog/digital architectures for their combining operations [2,3]. In these configurations, transceivers utilize networks of phase shifters. Due to the challenges associated with channel estimation and feedback, they commonly employ predefined single-lobe beamforming codebooks, such as DFT codebooks [4,5]. These classical predefined beamforming/beamsteering codebooks typically encompass a multitude of single-lobe beams, each steering the signal in a specific direction, and they encounter two primary issues. Firstly, because they cover all possible directions, they include redundant beams and thus incur extra beam training overhead. Secondly, they accommodate specific scenarios, such as non-line-of-sight (NLOS) conditions or imbalanced user distribution, only poorly.

Recent developments have focused on enhancing the adaptability of beam codebooks to user distributions in both line-of-sight (LOS) and NLOS conditions using neural network (NN)-based approaches [6,7]. Moreover, reinforcement learning has been utilized to adapt the beam patterns to the surrounding environment [8]. Nevertheless, the challenges posed by imbalanced or non-uniform user distribution, which is crucial for 5G deployment [9,10], remain largely unexplored. Typically, in an imbalanced user distribution, the majority users significantly outnumber the minority users. This is related to the imbalanced long-tail data challenge in machine learning, which hampers a neural network’s ability to generalize to minority classes [11]. In this case, an imbalanced user distribution can lead to the minority users being overlooked or receiving a poor combining gain, especially when NN-based methods are applied to codebook design. Ensuring equitable codebook coverage therefore requires an effective approach to the data imbalance problem. Outside the realm of wireless communication, imbalanced learning (IL)-based solutions typically involve resampling the different classes [12,13] or re-weighting, i.e., adjusting the losses calculated from the majority and minority classes [14,15,16]. Furthermore, ensemble learning, a technique that aims to enhance the performance of a single classifier by integrating multiple complementary classifiers, has demonstrated its effectiveness in adapting to and generalizing from imbalanced data [17,18]. However, directly applying these strategies in mmWave and THz communications overlooks the rich geometric and physical attributes of the training data, i.e., the channels. Consequently, it is natural to propose an IL-based codebook specifically designed for imbalanced user distributions in mmWave and THz communication systems, which meets the following criteria.
Firstly, the IL method aims to fully exploit the physical features of the channels. This is achieved by pre-clustering, which resembles a reverse process of DFT codebook-based combining. Secondly, the method inherits the superior feature extraction capability of NN-based approaches. This is accomplished by using multiple sub-models to deal with different classes of channels. Each of these sub-models contributes to the global model, which is similar in essence to ensemble learning.

In this paper, our work centers on codebook design enhanced by the IL framework, particularly in scenarios where the user distribution is notably imbalanced. The proposed approach commences with a pre-clustering of user channels based on the power responses of distinct combining vectors across various subregions, which enables a distinction between the channels associated with majority and minority users. This classification creates multiple subsets. We then introduce an IL architecture to process the identified subsets. Each subset is associated with a phase shift sub-model, which contributes to the global model after undergoing sub-forward and sub-backpropagation passes. To bolster information exchange across sub-models, we employ the focal loss function during global updating. Ultimately, iterative IL updating rounds for both the global model and the sub-models allow the IL-based codebook to adapt to the imbalanced user distribution under imperfect channel conditions and to outperform the NN-based baseline in terms of achievable rate.

Throughout this paper, vectors and matrices are represented by lower-case and upper-case boldface letters, respectively. The sets and denote the real and complex spaces of dimensions . The Hermitian transpose and the transpose of a matrix are indicated by and , respectively. We denote an identity matrix by and an zero matrix by . A complex Gaussian random vector is characterized as , where and represent the mean vector and the covariance matrix, respectively. The notation denotes the n-norm of a vector.

The rest of this paper is organized as follows: Section 2 details the system model. Section 3 provides the problem description and defines the optimization objective. In Section 4, we introduce the proposed IL scheme. Section 5 describes the experimental setup and discusses the simulation results. Finally, Section 6 concludes the paper.

2. System Model

2.1. Channel Model

This study investigates a mmWave and THz massive MIMO communication system in which a base station (BS) equipped with a uniform linear array (ULA) of M antennas serves U single-antenna users dispersed over F subregions. The single-cluster geometric channel vector between the uth single-antenna user and the M-antenna BS can be written as [19]

where is the normalization factor, denotes the complex gain of the lth path, and there are L paths in total. Also, the term represents the angle of arrival (AOA) of the lth path in the angle domain . The array response vector is given by the ULA geometric structure of the M-antennas as

where is the wavelength and d signifies the spacing between antennas. To comprehensively characterize the channel states of all U users, we introduce the concept of a channel set denoted as . It is formally defined as and hence .
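As a minimal illustration of the channel model in this subsection, the Python sketch below builds a ULA array response and sums L paths into a channel vector. The exact expressions are not reproduced in this text, so the phase convention (2π(d/λ)m sin φ), the half-wavelength spacing, and the √(M/L) normalization are assumptions made for illustration, and the function names are hypothetical.

```python
import cmath
import math

def array_response(phi, M, d_over_lambda=0.5):
    # Assumed ULA convention: element m has phase 2*pi*(d/lambda)*m*sin(phi).
    return [cmath.exp(2j * math.pi * d_over_lambda * m * math.sin(phi))
            for m in range(M)]

def geometric_channel(gains, aoas, M, d_over_lambda=0.5):
    # Sum of L paths, each a complex gain times an array response.
    # The sqrt(M / L) scaling is an assumed normalization factor.
    L = len(gains)
    scale = math.sqrt(M / L)
    h = [0j] * M
    for alpha, phi in zip(gains, aoas):
        a = array_response(phi, M, d_over_lambda)
        h = [hm + scale * alpha * am for hm, am in zip(h, a)]
    return h

# Two-path example with an 8-antenna array (values are illustrative only).
h = geometric_channel(gains=[1.0 + 0.0j, 0.3 - 0.2j], aoas=[0.4, 0.5], M=8)
print(len(h), abs(h[0]))
```

Under these assumptions every entry of the array response has unit modulus, so the per-antenna channel magnitude is governed by the path gains and the normalization alone.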

2.2. User Distribution

To streamline the representation of user distribution, we divide the channel set into F subsets. This can be expressed as: . Here, each user channel group encompasses the channels of designated users belonging to class f (). In more detail, by employing the mean AOA of the user’s channel as the feature of a cluster [19], the channel subset comprises channels with the same mean AOA values that fall within the interval . Here, is partitioned into F non-overlapping subregions. Subsequently, an imbalanced user distribution is manifested by a substantial difference in the cardinality between one subset and the others, i.e., . Consequently, we can interpret as , representing the channel set of the majority of users. The channels not encompassed by are collectively categorized under , which denotes the channel subsets corresponding to the minority user groups.
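The partition described above can be sketched in a few lines of Python. The sketch assumes an angle domain of [0, π) split into F equal-width subregions and uses hypothetical helper names; it only counts how many channels fall into each subset and identifies the majority subset by cardinality.

```python
import math

def subregion_index(mean_aoa, F, lo=0.0, hi=math.pi):
    # Map a mean AOA to one of F equal-width, non-overlapping subregions.
    # The angle domain [lo, hi) is an assumption for this sketch.
    width = (hi - lo) / F
    f = int((mean_aoa - lo) // width)
    return min(max(f, 0), F - 1)

def subset_sizes(mean_aoas, F):
    # Cardinality of each channel subset, indexed by subregion.
    sizes = [0] * F
    for phi in mean_aoas:
        sizes[subregion_index(phi, F)] += 1
    return sizes

# Illustrative imbalance: most users sit in the last of F = 4 subregions.
mean_aoas = [0.2, 1.0, 1.1] + [2.9] * 40
sizes = subset_sizes(mean_aoas, F=4)
majority = max(range(4), key=lambda f: sizes[f])
print(sizes, majority)
```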

2.3. Uplink Transmission

In the uplink data transmission stage, the uth user sends the uplink symbol to the BS. After combining at the BS, the processed signal can be represented as

where the symbol has a mean power limitation . Also, the noise vector at the BS follows . Next, the combining vector is the th beam of the N-beam codebook , i.e., , , and . In particular, is given as

where represents the phase shift applied at the mth BS antenna. Importantly, is only responsible for modifying the phase of the signal and does not affect the processed signal power. To this end, it is possible to optimize the configuration of .

3. Problem Definition

In this paper, our primary goal is to devise a design for an N-beam codebook, referred to as , which can adeptly accommodate the imbalanced user distribution outlined in Section 2.2. Given the substantial generalization and adaptability attributes inherent in NN architectures, we selected this approach as the backbone network to create an adaptive codebook. Hence, we aim to reframe the construction of codebook into an optimization problem based on NNs in this section.

First of all, our aim is to formulate a beamforming codebook with the target of optimizing the combining gain. This gain is defined as [6]. To this end, the optimal is determined by exhaustively searching through in the codebook, i.e.,

where signifies the most suitable codeword selected from the codebook . Following this, the optimization problem is formulated. Specifically, the task is to maximize the combining gain across the entire dataset of user channels with the designed codebook. The optimal codebook can then be expressed as

The above-mentioned restriction is introduced to ensure adherence to power limitations imposed by the combining vectors.
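The objective of this section can be sketched in Python as an exhaustive search over a codebook followed by an average over the user channels. The sketch assumes the combining gain of a unit-norm codeword w on a channel h is |wᴴh|², and it uses a small set of steering vectors with half-wavelength spacing as a stand-in codebook; all function names are hypothetical.

```python
import cmath
import math

def steering_vector(phi, M):
    # Unit-norm single-lobe beam; half-wavelength ULA spacing is assumed.
    return [cmath.exp(1j * math.pi * m * math.sin(phi)) / math.sqrt(M)
            for m in range(M)]

def combining_gain(w, h):
    # |w^H h|^2 for one codeword and one channel.
    return abs(sum(wm.conjugate() * hm for wm, hm in zip(w, h))) ** 2

def best_beam(codebook, h):
    # Exhaustive search over the codebook for the largest combining gain.
    gains = [combining_gain(w, h) for w in codebook]
    n = max(range(len(codebook)), key=lambda i: gains[i])
    return n, gains[n]

def average_gain(codebook, channels):
    # Objective value: mean of the best-beam gain over all user channels.
    return sum(best_beam(codebook, h)[1] for h in channels) / len(channels)

M = 8
codebook = [steering_vector(phi, M) for phi in (-0.6, 0.0, 0.6)]
# Channel with unit-modulus entries, aligned with the third beam.
h = [math.sqrt(M) * x for x in steering_vector(0.6, M)]
print(best_beam(codebook, h), average_gain(codebook, [h]))
```

Because each codeword has constant-modulus entries scaled by 1/√M, the power constraint holds by construction and only the phases remain to be optimized.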

Furthermore, a prominent challenge in solving this problem lies in the imbalanced distribution of users, notably the substantial imbalance between the majority and minority subsets. This disproportion leads to only a marginal combining gain improvement for minority user channels when conventional NN-based methods are employed. The reason is that the loss values computed from the minority user channels contribute insignificantly to the overall loss during batch updates in traditional NN-based methods. Consequently, the combining gains of the minority users remain almost unchanged after these updates. In the subsequent section, we analyze the underlying causes of the limited contribution of the minority user losses and propose an IL-based solution to mitigate this issue.

4. Imbalanced Learning

In this section, we employ IL to construct a more adaptive codebook in scenarios characterized by imbalanced user distribution, as compared to the NN-based approach. This adaptability arises from the independent updates of sub-models and the fair aggregation process applied to the global model. To achieve this, we introduce an initial pre-clustering method that capitalizes on the channel properties to effectively differentiate between and , subsequently assigning them to separate subsets. Following this, we propose an IL framework to attain the desired beam patterns for these diverse subsets. Finally, we present a global model structure ensuring the equitable integration of the sub-models.

4.1. Model Architecture

4.1.1. Pre-Clustering Process

According to the user distribution elucidated in Section 2.2, our primary objective is to categorize users and their associated channels into distinct clusters. This process results in distinguishing between and the corresponding . Additionally, segmenting into clusters can be likened to partitioning into discrete regions. This segmentation effectively characterizes each cluster by the median angle, denoted by , of subregion ( [20]. Consequently, by defining the median angle of each cluster as a distinctive feature, we can express the pre-clustered index as follows

where the pre-clustering power vector , the power response to each combining vector is defined as and the pre-clustering codebook matrix . Also, the function signifies the array response for a given AOA. This further signifies that the channels in share analogous power responses to the respective featured combining vector within the th subregion. To implement the above equation in the form of NN, the complex matrix multiplication, power computation, and argmax can be mapped into the basic NN components. These components will be introduced in the next subsection. Then, the pre-clustered channels, which belong to the subset , will be stored in the th subset register waiting to be processed by the corresponding sub-model. After partitioning into distinct types, the next step involves deploying a model to acquire the features of . Specifically, this paper adopts a focal loss and cross entropy loss combined IL model improved from the original NN-based self-supervised method outlined in [7]. The details of the IL forward pass architecture will be elaborated in the subsequent parts.

Remark 1.

The pre-clustering process draws inspiration from the DFT codebook , denoted by . Given that the angle domain Ψ is defined as the range , it is divided into M subareas, each with an equal interval of . Consequently, when a channel’s mean AOA is congruent with a combining vector for a specific interval within Ψ, a superior power response is realized. In essence, the DFT codebook covers the entire angular space, which ensures that there is a combining vector catering to the specific channel, and thus the highest power response is attained among the pre-defined combining vectors. However, the best power response within the pre-defined combining vectors may not be optimal and requires the more adaptive NN-based method to optimize.

Informed by the exhaustive angular coverage of the DFT codebook, the initial step of our method involves calculating the power response for each channel across the subdivided angular sectors. Through this assessment, we identify the superior combining vector that results in the highest power among the pre-defined combining vectors. This process facilitates the determination of a channel’s pre-clustering class where the channels share similar power responses.
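The pre-clustering step can be sketched as follows. For each channel, the sketch computes the power response to one combining vector per subregion (steered to that subregion's median angle) and assigns the channel to the subregion with the largest response. The array response convention, the median angles, and the helper names are assumptions made for illustration.

```python
import cmath
import math

def array_response(phi, M):
    # Assumed half-wavelength ULA response for an AOA phi (radians).
    return [cmath.exp(1j * math.pi * m * math.sin(phi)) for m in range(M)]

def pre_cluster_index(h, median_angles):
    # Power response of h to one combining vector per subregion,
    # followed by an argmax over the subregions.
    M = len(h)
    powers = []
    for phi in median_angles:
        a = array_response(phi, M)
        y = sum(am.conjugate() * hm for am, hm in zip(a, h)) / math.sqrt(M)
        powers.append(abs(y) ** 2)
    return max(range(len(powers)), key=lambda f: powers[f])

def pre_cluster(channels, median_angles):
    # Route each channel to the subset of its best-responding subregion.
    subsets = [[] for _ in median_angles]
    for h in channels:
        subsets[pre_cluster_index(h, median_angles)].append(h)
    return subsets

M = 16
median_angles = [-0.9, -0.3, 0.3, 0.9]      # illustrative subregion medians
channels = [array_response(phi, M) for phi in (0.28, 0.33, 0.88, -0.85)]
subsets = pre_cluster(channels, median_angles)
print([len(s) for s in subsets])
```

With single-path channels as in this toy example the assignment follows the AOA directly; with multipath or noisy channels some channels can land in a neighboring subset.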

4.1.2. Complex-Valued Fully Connected and Power Computation Layer

The layer is designed to perform complex-valued multiplicative and additive operations in the neural network backbone. In this context, the signal post-combining is represented by the resultant inner product, i.e.,

where signifies the received signal vector after combining and denotes a channel from the subset . Also, the term denotes the th sub-model codebook matrix. Moreover, unlike the conventional NN approaches in computer vision, the elements of the codebook matrix do not function as parameters of the fully connected layer. Instead, the codebook matrices are generated based on the real NN weights [6]. This operation is conducted through the phase-to-complex conversion, scaled by , which is given as

where denotes the phase shift matrix and represents a phase shift vector.

Following this, the power received from each combining vector is determined by evaluating the square modulus of every complex value in the received signal vector. This leads to the formulation of the received signal power , which is defined as
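A minimal Python sketch of this layer is given below. It converts a real phase shift matrix into a constant-modulus complex codebook, applies the codebook to a channel, and takes the squared modulus of each output. The 1/√M scaling and the function names are assumptions; the numbers are illustrative only.

```python
import cmath
import math

def phase_to_codebook(theta):
    # theta: M x N real phase shifts (list of rows). Returns the M x N
    # complex codebook with constant-modulus entries; the 1/sqrt(M) scale
    # is assumed so that every column (beam) has unit norm.
    M = len(theta)
    return [[cmath.exp(1j * t) / math.sqrt(M) for t in row] for row in theta]

def combine_and_power(theta, h):
    # y = W^H h, then element-wise squared modulus.
    W = phase_to_codebook(theta)
    N = len(W[0])
    y = [sum(W[m][n].conjugate() * h[m] for m in range(len(h)))
         for n in range(N)]
    return [abs(yn) ** 2 for yn in y]

theta = [[0.0, 0.3], [0.5, -0.2], [1.0, 0.8], [-0.4, 0.1]]   # M = 4, N = 2
h = [1, 1j, -1, -1j]
print(combine_and_power(theta, h))
```

Only the real phases in `theta` would be trainable in such a layer; the complex codebook is recomputed from them on every forward pass.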

4.1.3. Softmax and Argmax Layer

This layer commences with a softmax operation to estimate the alignment ’probability’ between a combining vector and the current channel , which is indicated by the received power . Accordingly, the softmax vector, represented by , is formulated as

On the other hand, the argmax layer is tasked with constructing the one-hot vector, denoted by , and it is given as

where the operation is to identify the position of the maximum value within the softmax vector. Additionally, the self-generated label vector plays a crucial role in adjusting and updating the phase shifts in the codebook. This adaptation is achieved through the utilization of the cross-entropy loss during sub-updating and the focal loss during global updating. Also, it is worth noting that a similar operation is also required in the pre-clustering process to determine the class index associated with the optimal power response.
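The two operations of this layer can be sketched in Python as follows. The softmax is written in its numerically stable form (subtracting the maximum before exponentiation), which is a common implementation choice rather than something stated in the text; the function names and power values are illustrative.

```python
import math

def softmax(powers):
    # Numerically stable softmax over the received powers.
    peak = max(powers)
    exps = [math.exp(p - peak) for p in powers]
    total = sum(exps)
    return [e / total for e in exps]

def one_hot_label(probs):
    # Self-generated label: 1 at the position of the largest probability.
    n_star = max(range(len(probs)), key=lambda n: probs[n])
    return [1 if n == n_star else 0 for n in range(len(probs))]

powers = [0.60, 0.04, 2.10]      # illustrative received powers for N = 3 beams
s = softmax(powers)
z = one_hot_label(s)
print(s, z)
```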

Transitioning to the process of learning the IL-based codebook, the subsequent subsection will delve into the details of sub-backpropagation and the comprehensive IL solution.

4.2. Learning the IL-Based Codebooks

After establishing the architecture for the forward pass of the sub-model, the subsequent phase entails the application of sub-backpropagation to enhance the performance of the sub-model . Following the sub-backpropagation, the sub-models are aggregated to assess the overall performance of the global model, which then performs the global updating through the focal loss. Ultimately, the global model is partitioned into sub-models to initiate the next round of IL.

4.2.1. Sub-Backpropagation

The corresponding sub-model undergoes a series of forward-backward updating iterations based on . Within each iteration, as delineated in the preceding section, the forward processes obtain the softmax vectors and one-hot labels for a batch of channel vectors. In each of these iterations, the efficacy of the codebook is evaluated by quantifying the divergence between the obtained softmax vector and the target one-hot vector , utilizing the cross-entropy loss function, i.e.,

where and represent the nth element of the bth tuple , in a batch with the size of B. Moreover, in the NN-based approach, the channels of a single batch are drawn indiscriminately from both and , which together constitute the comprehensive dataset . In contrast, an IL-based batch exclusively comprises channels from either or .
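For concreteness, a batch cross-entropy between softmax vectors and their one-hot labels can be sketched in Python as below. The averaging over the batch size and the small constant inside the logarithm are assumptions of this sketch, and the function name is hypothetical.

```python
import math

def cross_entropy(batch, eps=1e-12):
    # batch: list of (softmax_vector, one_hot_label) tuples of equal length N.
    # Returns the batch-averaged cross-entropy; eps guards against log(0).
    total = 0.0
    for s, z in batch:
        total -= sum(zn * math.log(sn + eps) for sn, zn in zip(s, z))
    return total / len(batch)

batch = [([0.7, 0.2, 0.1], [1, 0, 0]),
         ([0.1, 0.3, 0.6], [0, 0, 1])]
print(cross_entropy(batch))
```

Since the label is one-hot, only the softmax entry at the labeled position contributes to each sample's loss.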

Particularly, the aforementioned loss function considers the one-hot encoded label as the target distribution for the model. Its objective is to minimize this value through the adjustment of phase shift vectors with the aim of minimizing the discrepancy between and to the utmost degree. Subsequently, the backpropagation gradient of phase shift vectors is computed through the chain rule, i.e., [7]

Hence, the stochastic gradient descent (SGD) based method can be employed to update the sub-model phase matrix , which is given as

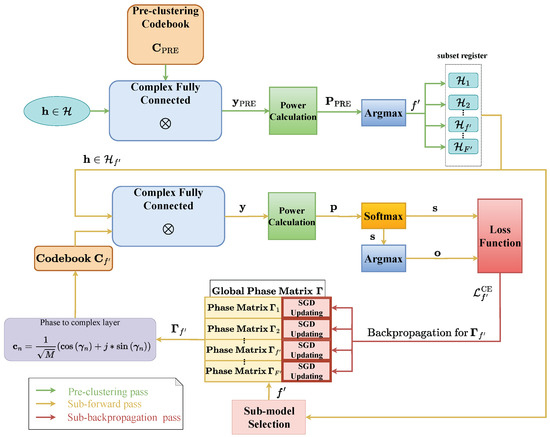

where denotes the learning rate of the SGD-based process. In conclusion, the pre-clustering process and sub-model updating are demonstrated in Figure 1. After the sub-model updates, the next stage will introduce the process of global aggregation and updating.

Figure 1. The pre-clustering process and sub-model updating.
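One sub-model update step can be sketched in Python as below. To keep the sketch short, a central finite-difference gradient stands in for the analytic chain-rule gradient of [7]; this substitution, the 1/√M scaling, the learning rate of 0.01, and all names are assumptions made for illustration. The self-generated label is computed once in the forward pass and held fixed while the phases are updated.

```python
import cmath
import math

def loss(theta, h, label=None):
    # Forward pass: phases -> powers -> softmax -> cross-entropy.
    M, N = len(theta), len(theta[0])
    p = [abs(sum(cmath.exp(-1j * theta[m][n]) * h[m] for m in range(M))) ** 2 / M
         for n in range(N)]
    peak = max(p)
    e = [math.exp(x - peak) for x in p]
    total = sum(e)
    s = [x / total for x in e]
    if label is None:                      # self-generated one-hot position
        label = max(range(N), key=lambda n: s[n])
    return -math.log(s[label]), label

def sgd_step(theta, h, lr=0.01, delta=1e-6):
    # Numerical gradient of the loss with respect to every phase shift,
    # followed by one gradient-descent update of the phase matrix.
    before, label = loss(theta, h)
    grad = [[0.0] * len(theta[0]) for _ in theta]
    for m in range(len(theta)):
        for n in range(len(theta[0])):
            up = [row[:] for row in theta]
            dn = [row[:] for row in theta]
            up[m][n] += delta
            dn[m][n] -= delta
            grad[m][n] = (loss(up, h, label)[0] - loss(dn, h, label)[0]) / (2 * delta)
    new = [[t - lr * g for t, g in zip(tr, gr)] for tr, gr in zip(theta, grad)]
    return new, before, loss(new, h, label)[0]

theta = [[0.0, 0.3], [0.5, -0.2], [1.0, 0.8], [-0.4, 0.1]]   # M = 4, N = 2
h = [1, 1j, -1, -1j]
new_theta, before, after = sgd_step(theta, h)
print(before, after)
```

In a practical implementation the gradient would be obtained analytically or by automatic differentiation, and the update would be averaged over a batch of channels from a single subset.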

4.2.2. Global Updating

After the sub-model updating epochs, the phase shift matrices are fused into the global model. As previously explained, consists of distinct phase shift vectors, each corresponding to the number of combining vectors within the correlated codebook. Our objective is to evenly integrate the model across the various distributions, thereby addressing the challenge of imbalanced combining gain elevation in the NN-based approach. Driven by the specified objective, the global model is constructed by concatenating the phase shift matrices of varying dimensions to create a unified model, which is expressed by

where ( represents the global phase shift matrix model and denotes the corresponding global codebook. The above equation ensures the specialization of different sub-models.

Nonetheless, concatenation by itself does not provide an effective exchange of information among the different sub-models, which is potentially sub-optimal. Here, information exchange refers to the fact that a small proportion of the channels from a subset tend to have better power responses on combining vectors in an adjacent sub-model after global updating than on those of their own sub-model . Consequently, to adapt to the global user distribution within , the global model should undergo updates akin to those of the sub-models. However, the cross-entropy loss function is replaced with the more adaptive focal loss function of [21]. This adaptive loss automatically rectifies the imbalance in the loss calculation that stems from the unequal numbers of two types of channels: those seeking to change their assigned sub-models and those already well adapted to their sub-models. Specifically, the focal loss mechanism accounts for the lower received power (reflected in the softmax probabilities) of the exchanged channels, compared with the received power of a sub-model’s originally well-adapted channels. To this end, the mechanism yields a more balanced loss function by increasing the proportion of the loss contributed by the low-received-power channels. Therefore, the focal loss function is utilized and given as [21]

where the modulating factor is applied to the cross-entropy loss, with a tunable focusing parameter . In scenarios where the received power is relatively high (i.e., ), the modulating factor approaches zero. Conversely, for channels with lower received power, the modulating factor tends towards unity. As a result, when contrasted with the standard cross-entropy loss, the focal loss maintains its effectiveness for channels with lower received power while reducing the loss for those with higher received power. In essence, this adjustment amplifies the relative contribution to the loss function of the channels with lower received power that seek to alter their serving sub-model.
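The effect of the modulating factor can be illustrated with a short Python sketch. It assumes the standard focal loss form, in which each cross-entropy term is multiplied by (1 − s)^γ with s the softmax value at the labeled position; the batch averaging, the small constant in the logarithm, and the example probabilities are assumptions of the sketch.

```python
import math

def focal_loss(batch, gamma=2.0, eps=1e-12):
    # batch: list of (softmax_vector, one_hot_label) tuples.
    # Each cross-entropy term is scaled by the modulating factor (1 - s)^gamma.
    total = 0.0
    for s, z in batch:
        total -= sum(zn * (1.0 - sn) ** gamma * math.log(sn + eps)
                     for sn, zn in zip(s, z))
    return total / len(batch)

well_adapted = ([0.9, 0.05, 0.05], [1, 0, 0])   # high received power on its beam
exchanged = ([0.4, 0.35, 0.25], [1, 0, 0])      # low received power on its beam
print(focal_loss([well_adapted]), focal_loss([exchanged]))
```

Setting `gamma=0` recovers the plain cross-entropy, which makes it easy to compare how strongly the well-adapted sample is down-weighted relative to the exchanged one.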

To initiate the subsequent IL iteration, is separated at the columns corresponding to the prior concatenation, so that the sub-models revert to their pre-fusion dimensions. This step can be considered the reverse operation of Equation (16). Algorithm 1 summarizes the overall IL process.
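The concatenation of sub-model phase matrices and the reverse split can be sketched in Python as below. Phase matrices are stored as lists of M rows, the concatenation is column-wise, and the column widths are recorded so that the split restores the original dimensions. The function names are hypothetical.

```python
def concatenate(sub_models):
    # sub_models: list of M x N_f phase matrices (lists of rows).
    # Returns the M x sum(N_f) global matrix and the per-model widths.
    M = len(sub_models[0])
    widths = [len(theta[0]) for theta in sub_models]
    global_theta = [[t for theta in sub_models for t in theta[m]]
                    for m in range(M)]
    return global_theta, widths

def split(global_theta, widths):
    # Inverse of concatenate: cut the global matrix at the recorded columns.
    sub_models, start = [], 0
    for w in widths:
        sub_models.append([row[start:start + w] for row in global_theta])
        start += w
    return sub_models

theta_1 = [[0.1, 0.2], [0.3, 0.4]]      # M = 2, two beams
theta_2 = [[0.5], [0.6]]                # M = 2, one beam
global_theta, widths = concatenate([theta_1, theta_2])
print(global_theta, widths)
print(split(global_theta, widths))
```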

Algorithm 1. Imbalanced Learning.

Remark 2.

While the loss function in the NN-based approach effectively quantifies the disparity between the current model and the desired response when users are equally distributed, its primary limitation becomes apparent when it is applied in a batch-based context. Even though only the best-performing combining vectors associated with the current channel are updated based on the one-hot label, it is quite common for the same combining vector to serve both majority and minority users during a single batch. For instance, this arises when the codebook size is relatively small, the previous batch update contains only majority user channels, and the current batch update contains minority user channels. As a result, when the number of users is imbalanced across different regions, the loss associated with channels from might be unfairly averaged and consequently downplayed.

To illustrate this, it is reasonable to consider a NN-based updating process for a phase shift matrix based on the global dataset . Let the number of channels from in a batch be denoted by and the number of channels from as , with and . In such scenarios, the loss function for NN with a batch size of B can be represented as follows

If , the equation above is approximated as

This insight reveals a tendency of the NN-based method to underestimate the calculated loss in specific regions when the size of subsets in those regions is significantly smaller than the region with the predominant users. In the IL-based approaches, the shortcomings of the loss function are effectively mitigated. During the update phase of the phase shift matrix in NN, the enhancement in combining gain for minority users can be measured by the contribution of to the overall loss function, expressed as

where denotes the fraction of the loss derived from in the cumulative loss. For a comparative perspective, by assuming the update processes of all sub-models for are considered, the proportional contribution in the IL framework can be given as

where exceeds is evident in the stipulated scenario. Consequently, from Equations (20) and (21), it is inferred that minority users contribute more significantly to the loss function within the proposed framework. This ensures a more pronounced combining gain enhancement for minority users in comparison to the NN approach.
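The argument of this remark can be made concrete with a small numerical sketch in Python. It assumes that each class contributes its channel count times a mean per-sample loss to the summed batch loss, and it reuses the dataset proportions of Section 5.1 (250 minority versus 10,000 majority realizations) with a mixed batch of 1000; the function name and the equal mean losses are assumptions of the sketch.

```python
def minority_loss_share(b_min, b_maj, mean_loss_min=1.0, mean_loss_maj=1.0):
    # Fraction of the summed batch loss that comes from minority channels,
    # assuming each class contributes its count times its mean per-sample loss.
    minority = b_min * mean_loss_min
    return minority / (minority + b_maj * mean_loss_maj)

# Mixed NN-style batch drawn in proportion to 250 minority vs 10,000 majority.
B = 1000
b_min = round(B * 250 / 10250)          # about 24 minority channels
print(b_min, minority_loss_share(b_min, B - b_min))

# A sub-model batch in the IL scheme holds channels of a single subset only.
print(minority_loss_share(10, 0))
```

Under these assumptions the minority channels account for only a few percent of a mixed batch loss, whereas a batch drawn from a minority subset alone is driven entirely by minority channels.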

5. Simulation Results

In this section, we begin by providing an in-depth exposition of the dataset generation, model training, and testing configurations for the proposed algorithms. The simulation was conducted in a setting with imperfect channel conditions and a notably imbalanced user distribution.

5.1. Scenarios, Datasets, and Training Parameters

To assess the validity of the IL method in addressing the challenges posed by the imbalance within the channel set , we create a scenario featuring 10 non-overlapping and equally spaced subregions. The numbers of pre-clustering subsets, BS antennas, and paths are set to 5, 64, and 5, respectively. The AOA within each subregion follows a Laplacian distribution, all sharing the same mean angle and an angle spread of 10 degrees. More precisely, the mean AOAs in subregion n fall within the interval . We generate a total of 10,000 realizations of in the 10th subregion, representing the majority of users. In contrast, the minority group is represented by 250 realizations of , with subregions 1, 3, 4, and 5 containing 100, 50, 50, and 50 realizations, respectively. The average achievable rate for all users is denoted by and the term is the received SNR. For assessing the performance under imperfect channel conditions, we introduce synthetic additive white Gaussian noise to each channel’s data and define [20]. For simulations, is set as 10 dB. To assess the efficacy of our proposed method, we compare it with the N-beam DFT codebook and with the self-supervised NN solution outlined in prior work [7]. In addition, we define the upper bound equal-gain combining vector as . This upper bound vector is defined based on the corresponding received power for each user, given as [22]. To simplify the IL model training, we maintain uniform codebook sizes across all sub-models. In a given training instance, the batch sizes during the sub-model and global updates are set to 10 and 1000, respectively. Similarly, the numbers of epochs are set to 20 for sub-model updating and 5 for global updating. Moreover, the learning rate, the focal loss focusing parameter , and the validation rate are set to 0.001, 2, and 0.1, respectively.
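The equal-gain combining upper bound and the rate metric can be sketched in Python as below. The sketch assumes that the equal-gain combiner matches the phase of every channel entry with a 1/√M scale, and that the average achievable rate is the mean of log2(1 + SNR · gain) over users; both expressions and all names are assumptions, since the exact formulas are not reproduced in this text.

```python
import cmath
import math

def egc_vector(h):
    # Equal-gain combiner: constant modulus, phases matched to the channel.
    M = len(h)
    return [cmath.exp(1j * cmath.phase(hm)) / math.sqrt(M) for hm in h]

def gain(w, h):
    # Combining gain |w^H h|^2.
    return abs(sum(wm.conjugate() * hm for wm, hm in zip(w, h))) ** 2

def average_rate(gains, snr_db):
    # Assumed rate expression: mean of log2(1 + SNR * gain) over users.
    snr = 10 ** (snr_db / 10)
    return sum(math.log2(1 + snr * g) for g in gains) / len(gains)

h = [1 + 1j, 0.5j, -2.0, 0.3 - 0.4j]
g_egc = gain(egc_vector(h), h)
print(g_egc, average_rate([g_egc], snr_db=5))
```

Because the equal-gain combiner aligns every antenna's phase with the channel, no constant-modulus codeword can exceed its gain, which is why it serves as the upper bound in the comparison.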

5.2. Performance Analysis

5.2.1. Pre-Clustering Result

As delineated in Section 5.1, the constructed dataset comprises 10,000 realizations for region 10, while regions 1, 3, 4, and 5 together contribute 250 realizations. After pre-clustering, the channel sets , , , and encompass 125, 49, 95, and 9981 channels, respectively. These outcomes suggest that certain non-ideal conditions, such as constrained subregion segmentation, imperfect channel state information, and angle spacing, may impede precise clustering. However, the distinction between and remains generally discernible.

5.2.2. Achievable Rate and Model Convergence

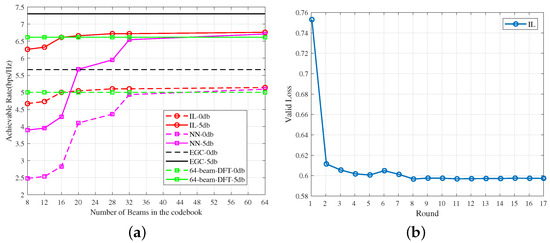

Figure 2a depicts the average achievable rate against the codebook size at received SNRs of 0 dB and 5 dB. The equal-gain receiver serves as the performance upper bound. The results indicate that a larger codebook leads to higher gains and achievable rates, which implies enhanced feature extraction during updates. Notably, the achievable rate saturates at a codebook size of 64 beams, with only marginal gains when transitioning from 32 to 64 beams. The proposed IL method surpasses the NN-based approach in the achievable rate for all cases except the 64-beam codebook. Specifically, with smaller codebooks (sizes of 8, 12, or 16 beams), the IL method demonstrates superior adaptability to and the imbalanced user distribution. For codebooks exceeding 16 beams, the IL method’s performance is commensurate with that of the 64-beam DFT codebook, whereas the NN-based codebooks lag behind. Moreover, Figure 2b depicts the validation losses for the 16-beam IL codebook over the IL rounds, each comprising sub-model and global updating cycles. The validation loss is close to saturation by the 8th round.

Figure 2. Achievable rate comparison of different methods and model convergence of the IL-based method: (a) average achievable rate versus the number of beams in the codebook for different methods; and (b) validation loss versus the IL round.

5.2.3. Beam Pattern

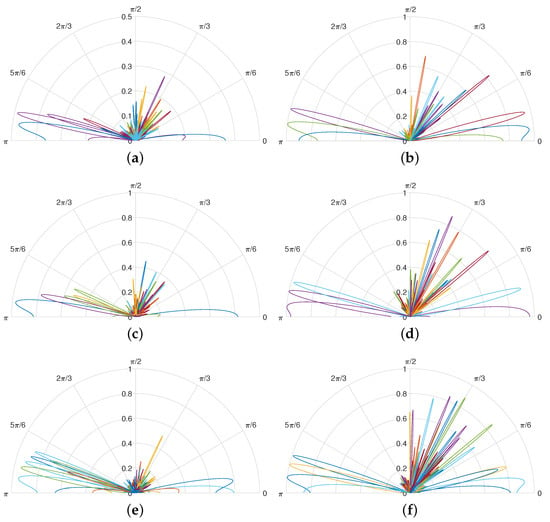

Figure 3 showcases the beam patterns of six learned codebooks of sizes 20, 32, and 64, which demonstrate the learning outcomes of both the proposed and NN-based methods. Notably, unlike the DFT codebook, these patterns reveal that the beams do not span the entire azimuth plane but are instead oriented toward user locations. However, the NN codebook tends to overlook the minority channel group in region 1, and the magnitude of the beams for user channels in regions 3, 4, and 5 is not significantly enhanced after updating. This is particularly evident in the 64-beam NN codebook, where most beams cater to the majority user channels. Consequently, the NN codebook fails to capture the desired beam pattern, which corroborates the analysis presented in Remark 2. In contrast, the IL-based method either adapts flexibly to the minority user channels in multipath scenarios using multi-lobe beams or aligns precisely with the minority users’ orientation with a magnitude exceeding 0.7.

Figure 3. Beam patterns for the codebooks with 20, 32, and 64 beams learned by the self-supervised NN and the IL solution in a multipath setting: (a) 20-beam NN codebook; (b) 20-beam IL codebook; (c) 32-beam NN codebook; (d) 32-beam IL codebook; (e) 64-beam NN codebook; and (f) 64-beam IL codebook.

6. Conclusions

This paper addressed IL-aided codebook design for mmWave and THz massive MIMO communication systems with imbalanced user distribution. Its primary contributions to machine learning-enhanced mmWave and THz massive MIMO communication are twofold: revealing how the imbalanced datasets that arise in practical communication degrade the overall performance of NN-based codebook design, and proposing an IL-based method to handle the imbalanced samples. We began by pre-clustering the user channels based on their power responses to distinct combining vectors across various subregions, which allowed us to differentiate between channels associated with majority and minority users and yielded multiple subsets. We then introduced an IL architecture to process these subsets. Each subset was associated with a phase-shift sub-model, which contributed to the global model after its sub-forward and sub-backpropagation passes. To enhance the information exchange across sub-models, we used the focal loss function, which improved the balance during global updating. Compared with the NN-based method in previous work, the IL process improved the combining gain during updates by increasing the contribution of minority user channels to the overall loss function in each batch. Through iterative IL updating rounds for both the global model and the sub-models, our IL-based approach adapted to the imbalanced user distribution under imperfect channel conditions and surpassed the NN-based baseline in terms of achievable rate. The beam patterns further confirmed the method’s effectiveness in accommodating minority user channels.

In future work, we plan to extend our methodology to hybrid beamforming, which will involve integrating additional modules into the backbone network for designing baseband precoders. Additionally, to validate the proposed IL framework in a more practical scenario, we will consider hardware impairments affecting the antenna array geometry, which are prevalent in real-world mmWave and THz massive MIMO systems.
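
As a rough illustration of why a focal-style loss raises the weight of poorly served (minority) channels within a batch, the sketch below applies the standard focal loss form, FL(p) = -(1 - p)^γ log(p), to a normalized combining gain in (0, 1]. This is a minimal sketch under stated assumptions and not the exact loss used in this paper: the function name `focal_weighted_loss`, the use of a normalized gain in place of the class probability, the value γ = 2, and the example gains are all hypothetical.

```python
import math

def focal_weighted_loss(gains, gamma=2.0, eps=1e-12):
    """Per-sample focal-style loss for normalized combining gains in (0, 1].

    Assumption: each gain g takes the place of p_t in the standard focal
    loss FL(p_t) = -(1 - p_t)^gamma * log(p_t), so samples with a small
    gain receive a larger modulating weight (1 - g)^gamma.
    """
    losses = []
    for g in gains:
        g = min(max(g, eps), 1.0)  # clamp to avoid log(0)
        losses.append(-((1.0 - g) ** gamma) * math.log(g))
    return losses

# Hypothetical batch: three well-served majority users, one minority user.
gains = [0.9, 0.85, 0.8, 0.2]

plain = [-math.log(g) for g in gains]            # gamma = 0: plain log loss
focal = focal_weighted_loss(gains, gamma=2.0)    # focal-style weighting

# Fraction of the batch loss attributable to the minority user.
minority_share_plain = plain[-1] / sum(plain)
minority_share_focal = focal[-1] / sum(focal)

print(f"minority share, plain log loss: {minority_share_plain:.3f}")
print(f"minority share, focal loss:     {minority_share_focal:.3f}")
```

In this toy batch, the minority user's share of the total loss is larger under the focal weighting than under the plain log loss, which is the qualitative effect described above; the actual magnitudes depend on the gain normalization and on γ.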

Author Contributions

Conceptualization, Z.C. and P.L.; methodology, Z.C.; software, Z.C.; validation, Z.C.; formal analysis, Z.C.; investigation, Z.C. and P.L.; writing – original draft preparation, Z.C. and P.L.; writing – review and editing, Z.C., P.L., and K.W.; supervision, P.L. and K.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China under Grant 2021YFB3901503, in part by the National Natural Science Foundation of China under Grant 62001336 and Grant 62371353, in part by the open research fund of Key Lab of Broadband Wireless Communication and Sensor Network Technology (Nanjing University of Posts and Telecommunications), Ministry of Education under Grant JZNY202105, in part by the Knowledge Innovation Program of Wuhan-Shuguang Project under Grant 2023010201020316, and in part by the China Scholarship Council (CSC) under Grant 202306950052.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Sohrabi, F.; Yu, W. Hybrid digital and analog beamforming design for large-scale antenna arrays. IEEE J. Sel. Top. Signal Process. 2016, 10, 501–513.

2. Heath, R.W.; Gonzalez-Prelcic, N.; Rangan, S.; Roh, W.; Sayeed, A.M. An overview of signal processing techniques for millimeter wave MIMO systems. IEEE J. Sel. Top. Signal Process. 2016, 10, 436–453.

3. Alkhateeb, A.; Mo, J.; Gonzalez-Prelcic, N.; Heath, R.W. MIMO precoding and combining solutions for millimeter-wave systems. IEEE Commun. Mag. 2014, 52, 122–131.

4. Hur, S.; Kim, T.; Love, D.J.; Krogmeier, J.V.; Thomas, T.A.; Ghosh, A. Millimeter wave beamforming for wireless backhaul and access in small cell networks. IEEE Trans. Commun. 2013, 61, 4391–4403.

5. Wang, J.; Lan, Z.; Pyo, C.w.; Baykas, T.; Sum, C.s.; Rahman, M.A.; Gao, J.; Funada, R.; Kojima, F.; Harada, H.; et al. Beam codebook based beamforming protocol for multi-Gbps millimeter-wave WPAN systems. IEEE J. Sel. Areas Commun. 2009, 27, 1390–1399.

6. Zhang, Y.; Alrabeiah, M.; Alkhateeb, A. Learning beam codebooks with neural networks: Towards environment-aware mmWave MIMO. In Proceedings of the 2020 IEEE 21st International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), Atlanta, GA, USA, 26–29 May 2020; pp. 1–5.

7. Alrabeiah, M.; Zhang, Y.; Alkhateeb, A. Neural networks based beam codebooks: Learning mmWave massive MIMO beams that adapt to deployment and hardware. IEEE Trans. Commun. 2022, 70, 3818–3833.

8. Zhang, Y.; Alrabeiah, M.; Alkhateeb, A. Reinforcement learning of beam codebooks in millimeter wave and terahertz MIMO systems. IEEE Trans. Commun. 2021, 70, 904–919.

9. Li, C.; Yongacoglu, A.; D’Amours, C. Downlink coverage probability with spatially non-uniform user distribution around social attractors. In Proceedings of the 2017 24th International Conference on Telecommunications (ICT), Limassol, Cyprus, 3–5 May 2017; pp. 1–5.

10. Ye, J.; Ge, X.; Mao, G.; Zhong, Y. 5G ultradense networks with nonuniform distributed users. IEEE Trans. Veh. Technol. 2017, 67, 2660–2670.

11. Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

12. Pouyanfar, S.; Tao, Y.; Mohan, A.; Tian, H.; Kaseb, A.S.; Gauen, K.; Dailey, R.; Aghajanzadeh, S.; Lu, Y.H.; Chen, S.C.; et al. Dynamic sampling in convolutional neural networks for imbalanced data classification. In Proceedings of the 2018 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR), Miami, FL, USA, 10–12 April 2018; pp. 112–117.

13. He, H.; Garcia, E.A. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

14. Cui, Y.; Jia, M.; Lin, T.Y.; Song, Y.; Belongie, S. Class-balanced loss based on effective number of samples. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9268–9277.

15. Huang, C.; Li, Y.; Loy, C.C.; Tang, X. Deep imbalanced learning for face recognition and attribute prediction. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2781–2794.

16. Cao, K.; Wei, C.; Gaidon, A.; Arechiga, N.; Ma, T. Learning imbalanced datasets with label-distribution-aware margin loss. Adv. Neural Inf. Process. Syst. 2019, 32.

17. Chawla, N.V.; Lazarevic, A.; Hall, L.O.; Bowyer, K.W. SMOTEBoost: Improving prediction of the minority class in boosting. In Proceedings of the Knowledge Discovery in Databases: PKDD 2003: 7th European Conference on Principles and Practice of Knowledge Discovery in Databases, Dubrovnik, Croatia, 22–26 September 2003; Springer: Berlin/Heidelberg, Germany, 2003; pp. 107–119.

18. Zhu, Z.; Wang, Z.; Li, D.; Zhu, Y.; Du, W. Geometric Structural Ensemble Learning for Imbalanced Problems. IEEE Trans. Cybern. 2020, 50, 1617–1629.

19. Forenza, A.; Love, D.J.; Heath, R.W. Simplified spatial correlation models for clustered MIMO channels with different array configurations. IEEE Trans. Veh. Technol. 2007, 56, 1924–1934.

20. Elbir, A.M.; Coleri, S. Federated learning for hybrid beamforming in mm-wave massive MIMO. IEEE Commun. Lett. 2020, 24, 2795–2799.

21. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

22. Love, D.J.; Heath, R.W. Equal gain transmission in multiple-input multiple-output wireless systems. IEEE Trans. Commun. 2003, 51, 1102–1110.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).