Saliency Detection Based on Low-Level and High-Level Features via Manifold-Space Ranking

Abstract

1. Introduction

2. Related Work

Graph-Based Manifold Ranking
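As a point of orientation for this section, graph-based manifold ranking is usually written in closed form as $f^{*} = (D - \alpha W)^{-1} y$, where $W$ is the affinity matrix of the superpixel graph, $D$ is its degree matrix, and $y$ marks the query (seed) nodes. The sketch below illustrates that generic formulation only. It is not taken from this article: the three-node chain graph, the value `alpha=0.99`, and the helper names `solve_linear` and `manifold_rank` are all illustrative assumptions.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the caller's data is left untouched.
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution on the upper-triangular system.
    x = [0.0] * n
    for r in reversed(range(n)):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def manifold_rank(w, y, alpha=0.99):
    """Rank graph nodes against the queries in y via (D - alpha * W) f = y.

    w     : symmetric affinity matrix (list of lists), zero diagonal
    y     : indicator vector, 1 for query/seed nodes and 0 otherwise
    alpha : assumed trade-off parameter, 0 < alpha < 1
    """
    n = len(w)
    degree = [sum(row) for row in w]
    system = [
        [(degree[i] if i == j else 0.0) - alpha * w[i][j] for j in range(n)]
        for i in range(n)
    ]
    return solve_linear(system, y)


# Hypothetical chain graph 0 - 1 - 2 with node 0 as the only query.
w = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
scores = manifold_rank(w, [1, 0, 0])
```

On this toy graph the ranking score decays with graph distance from the query node, which is the behaviour that seed-based saliency ranking relies on. A practical implementation would build `w` from superpixel feature distances and use a linear-algebra library rather than the hand-written solver.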

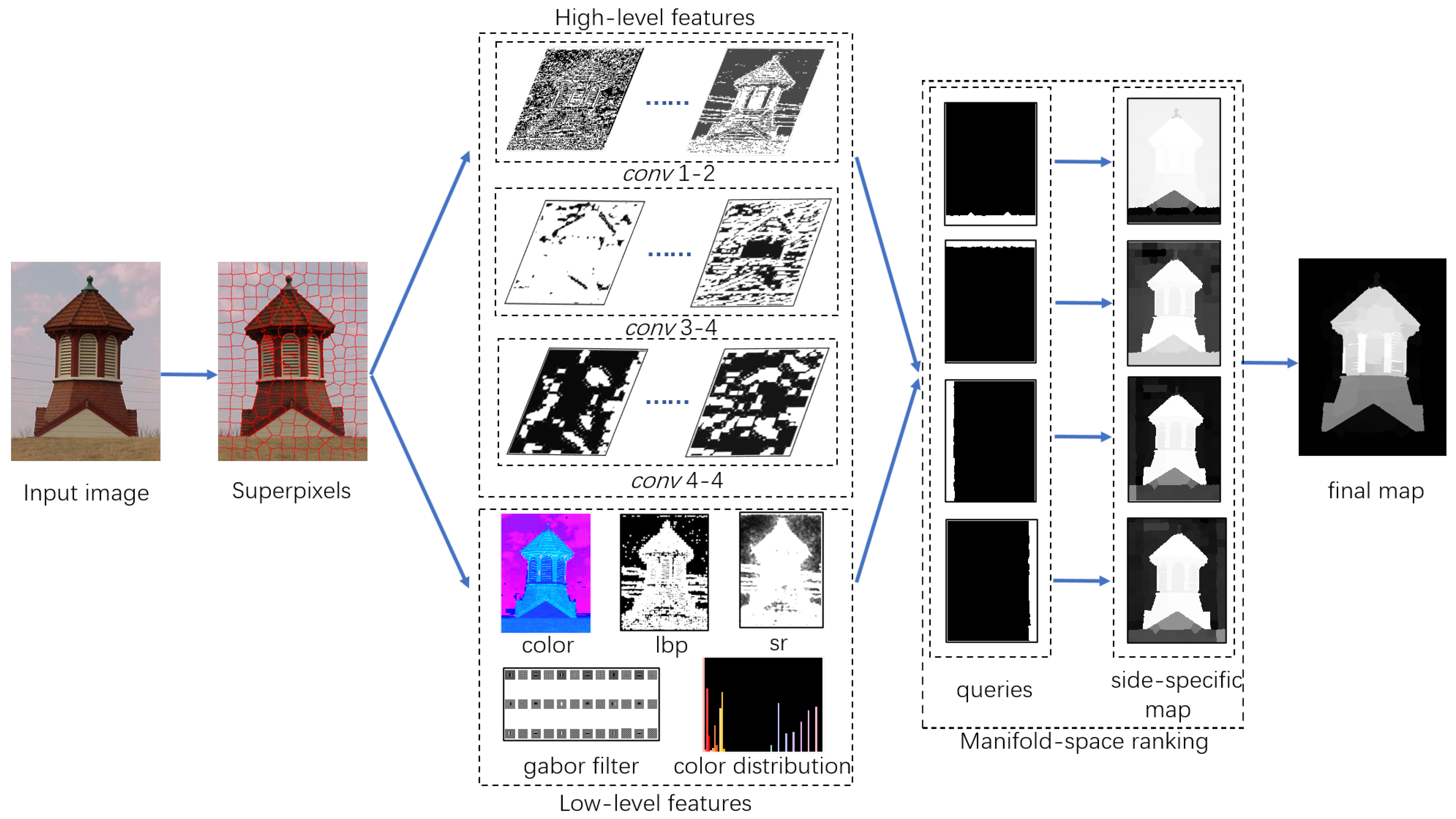

3. The Proposed Method

3.1. Low-Level Feature Extraction

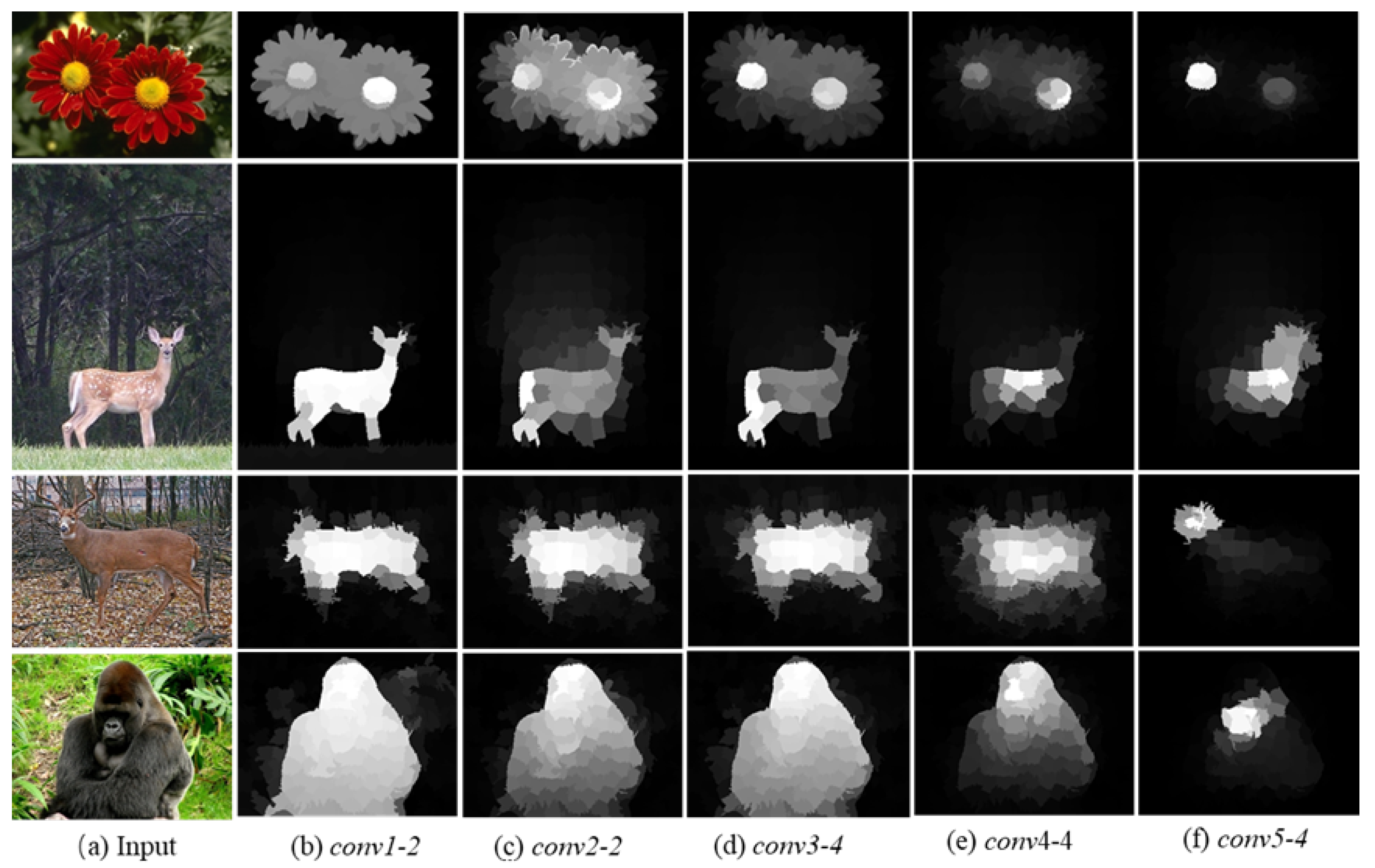

3.2. High-Level Feature Extraction

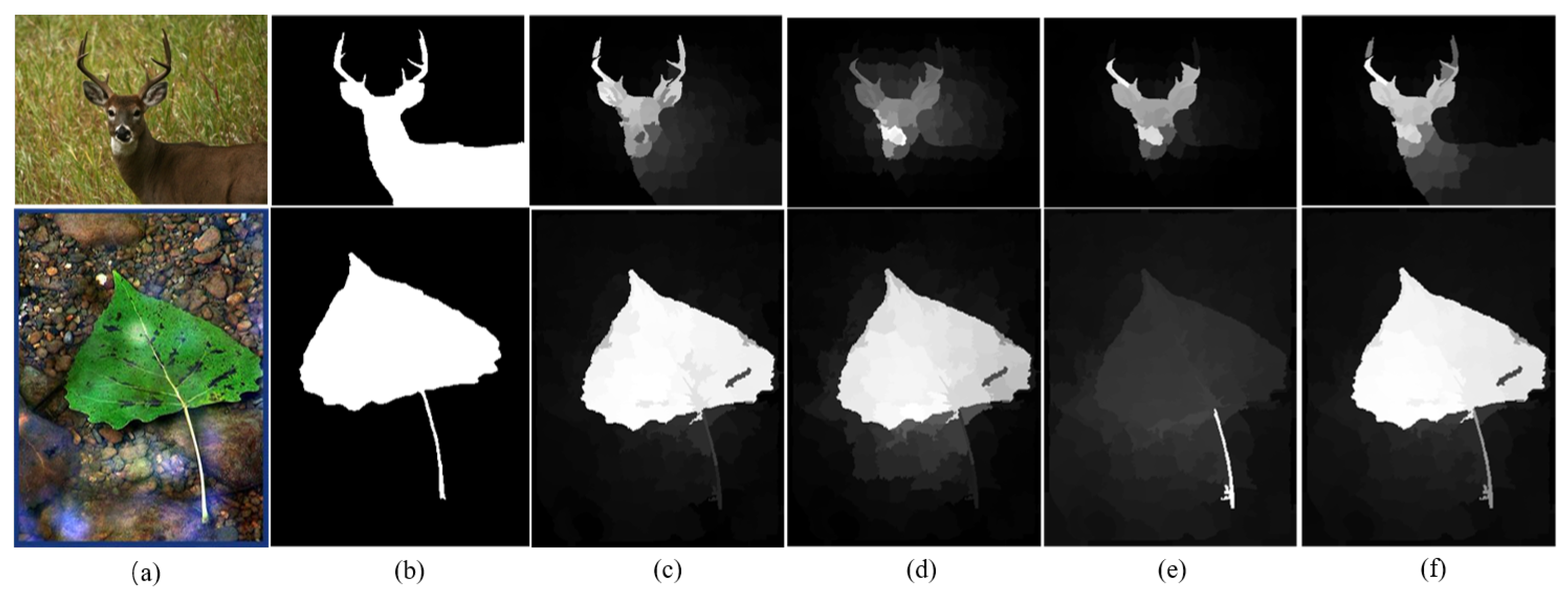

3.3. Saliency Detection in Two Stages

3.3.1. Ranking with Background Seeds

3.3.2. Ranking with Foreground Seeds

4. Experimental Validation and Analysis

4.1. Experiment Setup

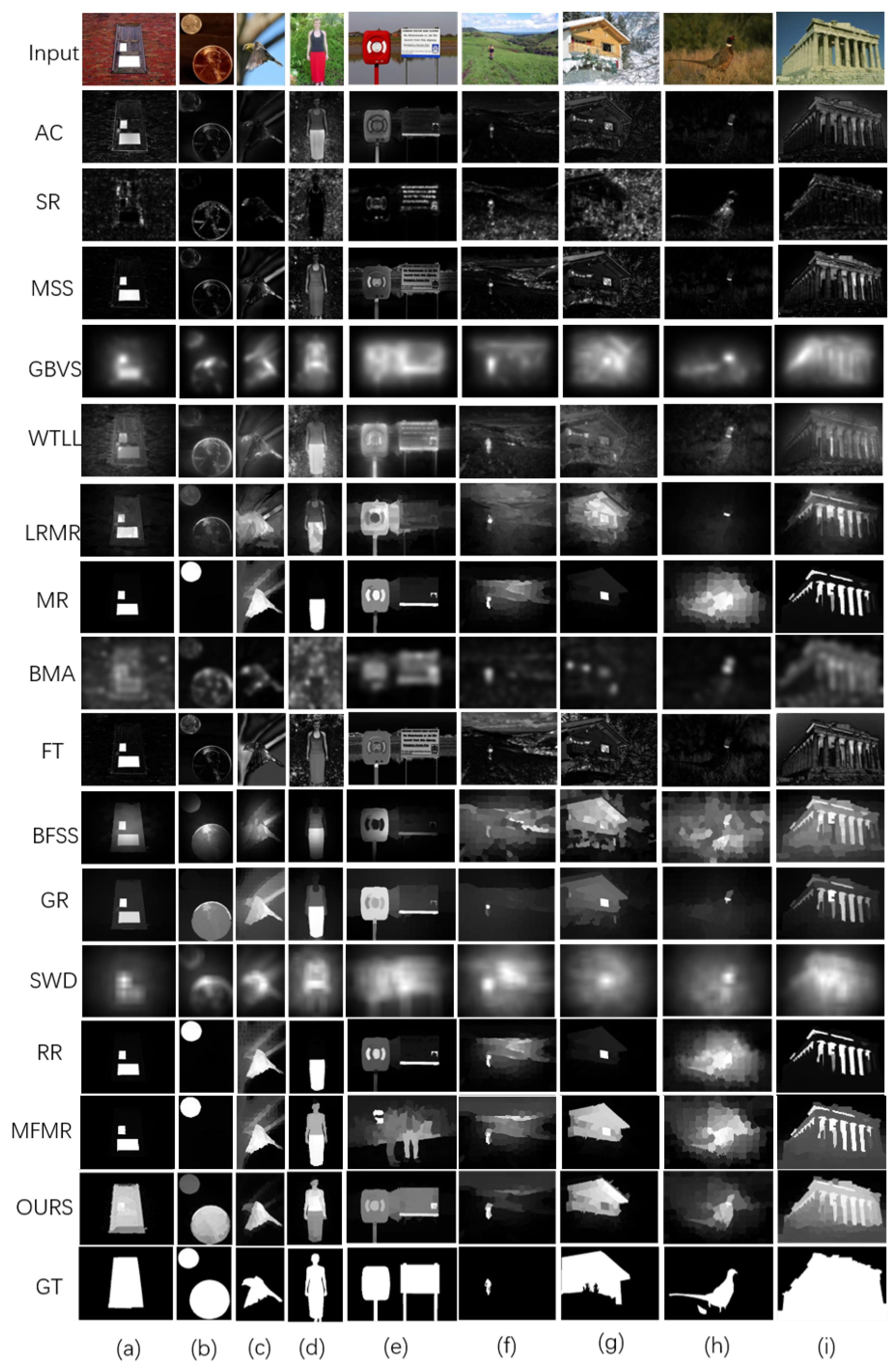

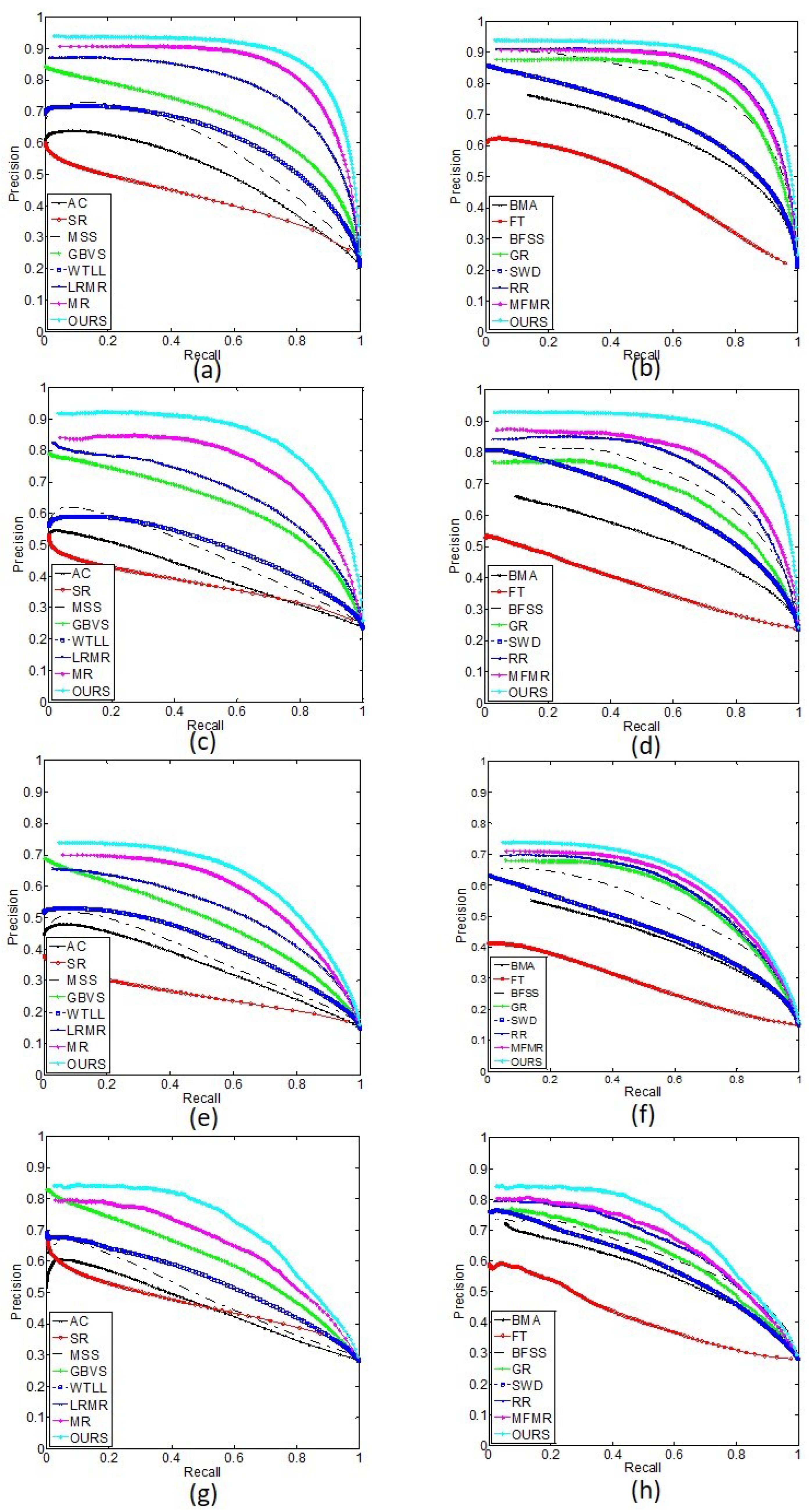

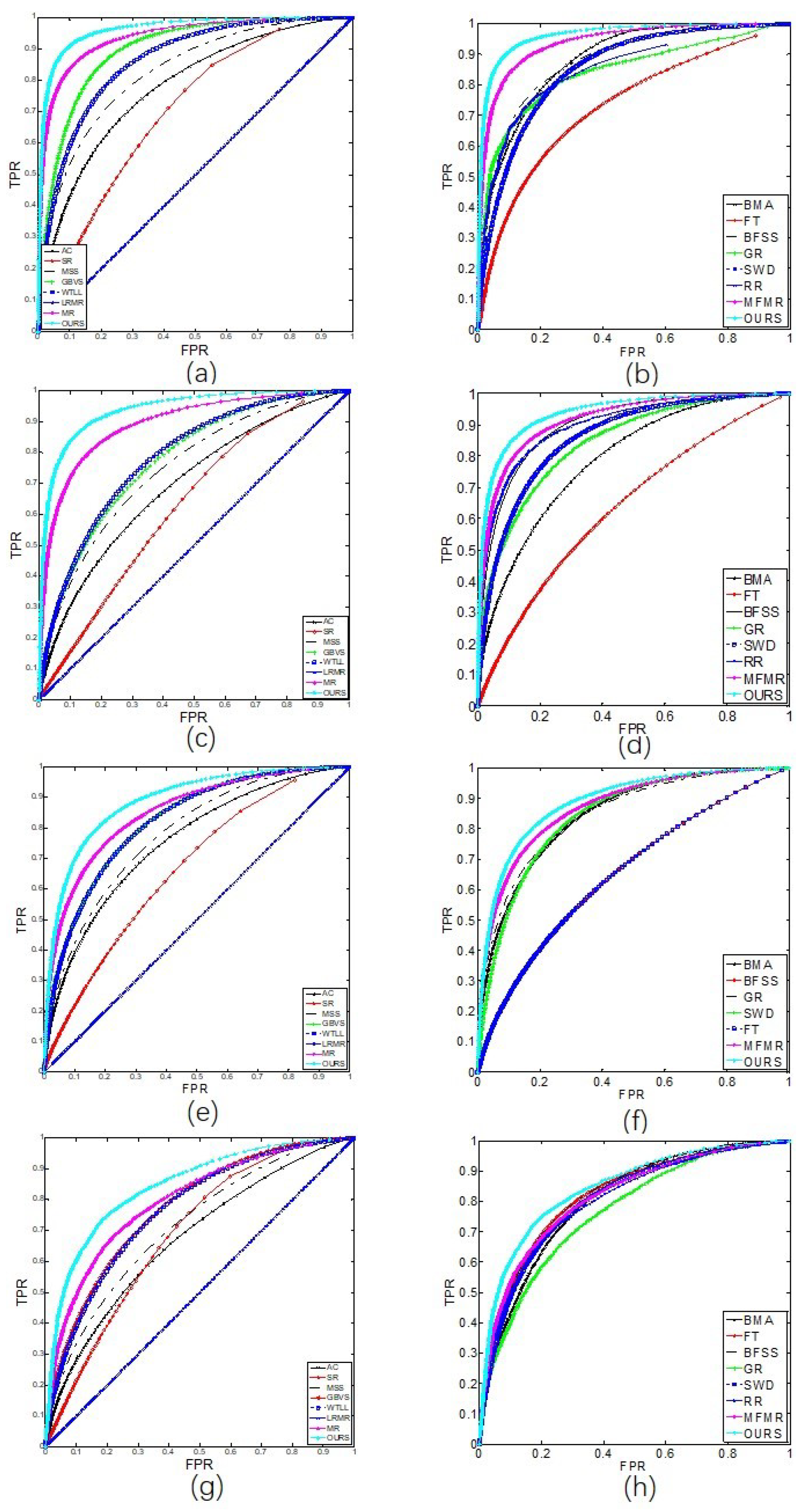

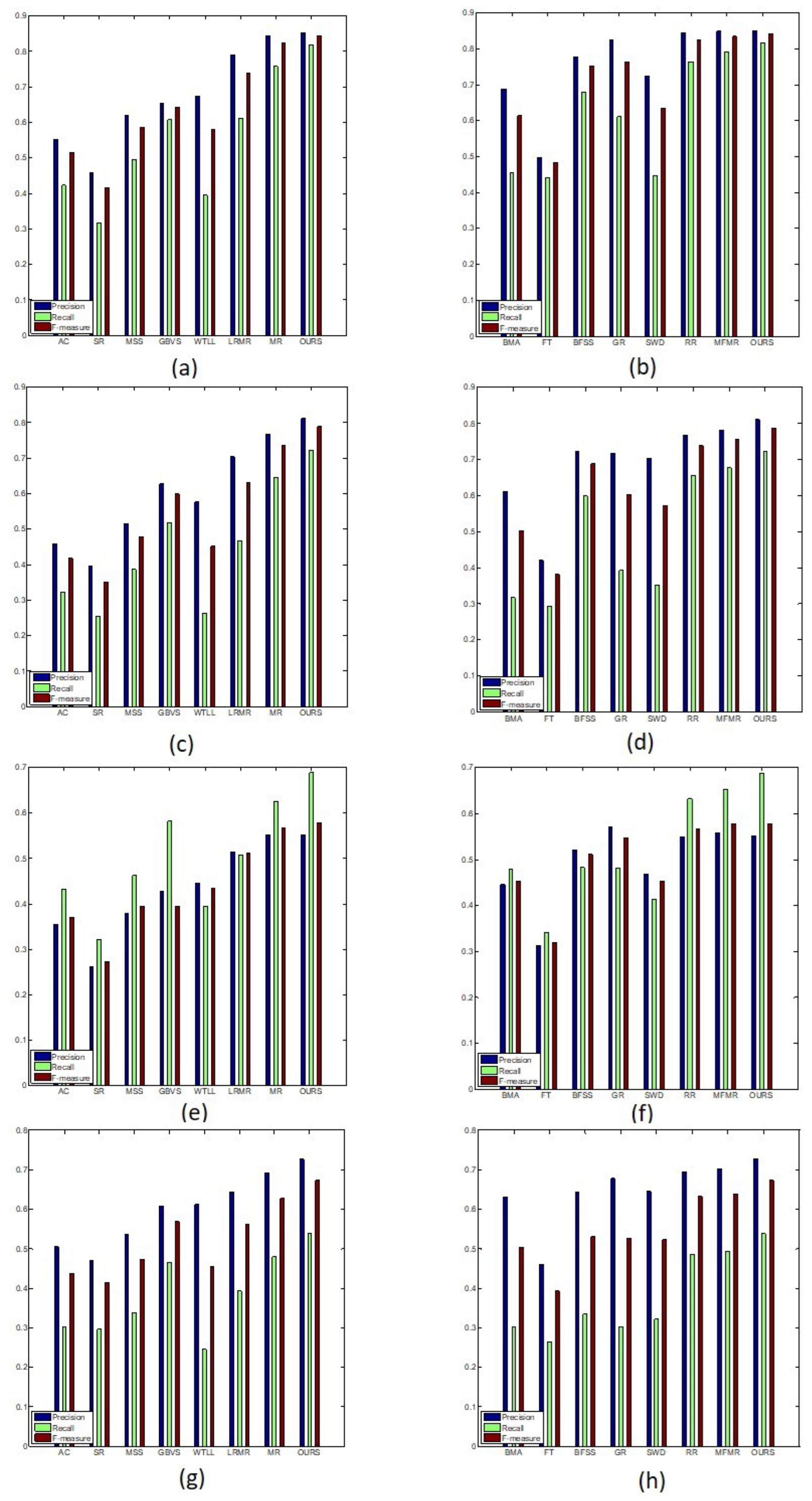

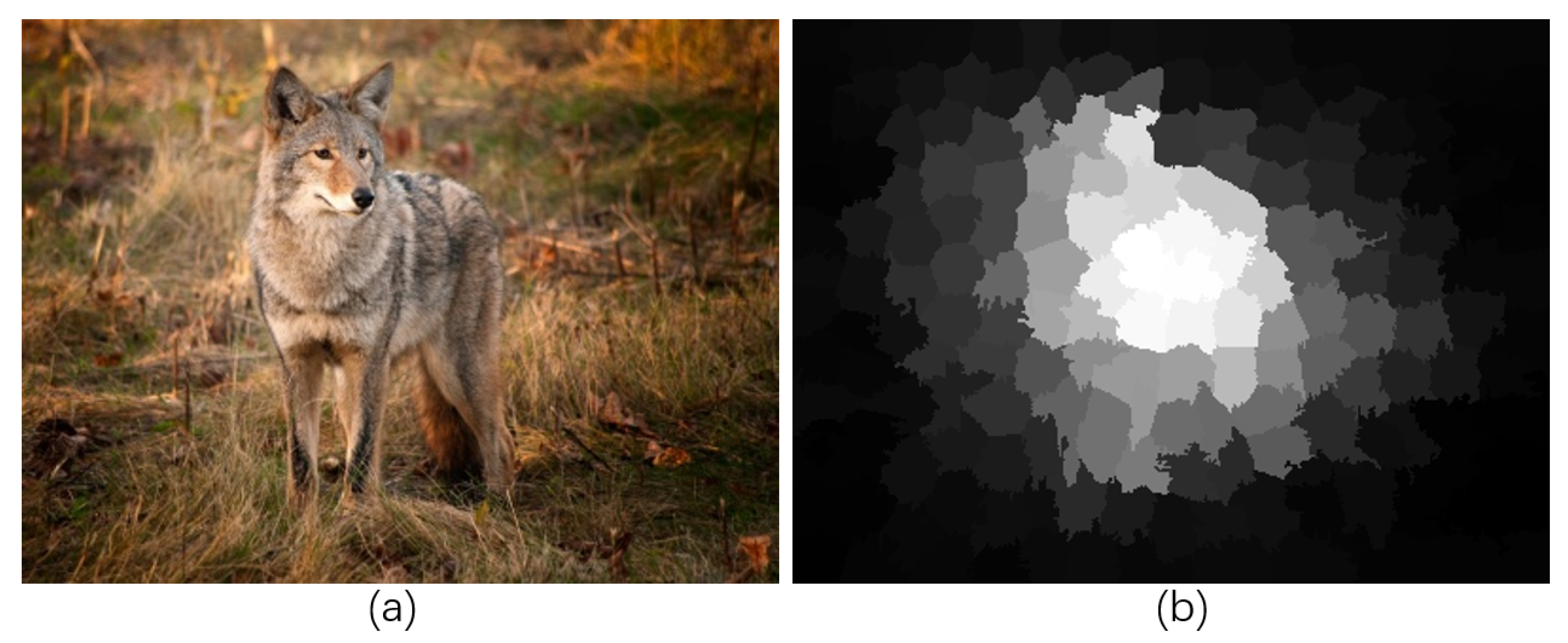

4.2. Visual Performance Comparison

4.3. Quantitative Performance Comparison

4.4. Limitation and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Features of a Superpixel | Dimension of Feature Space |
|---|---|
| Average color value | 3 |
| Color histograms | 32 |
| Gabor filters | 36 |
| Local binary patterns | 1 |
| Spectral residual | 1 |
| High-level features | 832 |

| Method | MSRA-5000 P | MSRA-5000 R | MSRA-5000 F | MSRA-5000 AUC | ECSSD P | ECSSD R | ECSSD F | ECSSD AUC | DUT-OMRON P | DUT-OMRON R | DUT-OMRON F | DUT-OMRON AUC | SOD P | SOD R | SOD F | SOD AUC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AC | 0.5519 | 0.4234 | 0.5158 | 0.7766 | 0.4582 | 0.3217 | 0.4173 | 0.6766 | 0.3552 | 0.4322 | 0.3704 | 0.7342 | 0.5047 | 0.3019 | 0.4370 | 0.6502 |
| SR | 0.4581 | 0.3172 | 0.4155 | 0.6982 | 0.3955 | 0.2554 | 0.3511 | 0.4794 | 0.2608 | 0.3214 | 0.2727 | 0.6623 | 0.4701 | 0.2967 | 0.4142 | 0.4844 |
| MSS | 0.6185 | 0.4954 | 0.5850 | 0.8273 | 0.5157 | 0.3866 | 0.4788 | 0.6080 | 0.3778 | 0.4621 | 0.3944 | 0.6016 | 0.5376 | 0.3386 | 0.4734 | 0.5312 |
| GBVS | 0.6542 | 0.6080 | 0.6429 | 0.8736 | 0.6279 | 0.5184 | 0.5987 | 0.7629 | 0.4276 | 0.5813 | 0.4554 | 0.8000 | 0.6091 | 0.4651 | 0.5685 | 0.7629 |
| WTLL | 0.6731 | 0.3956 | 0.5793 | 0.8624 | 0.5757 | 0.2629 | 0.4517 | 0.7836 | 0.4460 | 0.3954 | 0.4332 | 0.8205 | 0.6113 | 0.2448 | 0.4544 | 0.7667 |
| LRMR | 0.7876 | 0.6123 | 0.7388 | 0.4999 | 0.7032 | 0.4665 | 0.6295 | 0.4995 | 0.5128 | 0.5078 | 0.5116 | 0.4996 | 0.6434 | 0.3925 | 0.5607 | 0.4986 |
| MR | 0.8445 | 0.7569 | 0.8225 | 0.9388 | 0.7675 | 0.6447 | 0.7352 | 0.7412 | 0.5512 | 0.6242 | 0.5665 | 0.8509 | 0.6911 | 0.4792 | 0.6271 | 0.6571 |
| BMA | 0.6878 | 0.4540 | 0.6147 | 0.8394 | 0.6108 | 0.3171 | 0.5032 | 0.7751 | 0.4457 | 0.4779 | 0.4527 | 0.8287 | 0.6313 | 0.3013 | 0.5039 | 0.7828 |
| FT | 0.4976 | 0.4413 | 0.4834 | 0.7306 | 0.4204 | 0.2927 | 0.3820 | 0.6048 | 0.3131 | 0.3406 | 0.3191 | 0.6285 | 0.4598 | 0.2642 | 0.3927 | 0.7999 |
| BFSS | 0.7776 | 0.6793 | 0.7525 | 0.7415 | 0.7212 | 0.5984 | 0.6886 | 0.7525 | 0.5208 | 0.4829 | 0.5115 | 0.6285 | 0.6437 | 0.3344 | 0.5305 | 0.7999 |
| GR | 0.8236 | 0.6112 | 0.7624 | 0.9087 | 0.7177 | 0.3932 | 0.6029 | 0.8334 | 0.5709 | 0.4815 | 0.5474 | 0.8390 | 0.6780 | 0.3018 | 0.5266 | 0.7582 |
| SWD | 0.7250 | 0.4474 | 0.6342 | 0.8935 | 0.7036 | 0.3526 | 0.5722 | 0.8567 | 0.4672 | 0.4128 | 0.4534 | 0.8420 | 0.6459 | 0.3205 | 0.5233 | 0.8053 |
| RR | 0.8447 | 0.7643 | 0.8247 | 0.6600 | 0.7689 | 0.6545 | 0.7391 | 0.7491 | 0.5486 | 0.6317 | 0.5658 | 0.7218 | 0.6944 | 0.4860 | 0.6319 | 0.6649 |
| MFMR | 0.8487 | 0.7905 | 0.8346 | 0.9527 | 0.7829 | 0.6770 | 0.7556 | 0.9152 | 0.5578 | 0.6511 | 0.5769 | 0.8699 | 0.7023 | 0.4925 | 0.6395 | 0.8140 |
| OURS | 0.8517 | 0.8174 | 0.8435 | 0.9636 | 0.8108 | 0.7229 | 0.7886 | 0.9382 | 0.5515 | 0.6878 | 0.5779 | 0.8883 | 0.7273 | 0.5380 | 0.6721 | 0.8450 |
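The F column above appears consistent with the weighted F-measure that is conventional in salient object detection, $F_{\beta} = \frac{(1+\beta^{2})\,P\,R}{\beta^{2}P + R}$ with $\beta^{2} = 0.3$. The formula and the value of $\beta^{2}$ are not stated in the text shown here, so they are an assumption inferred from the tabulated numbers; the function name `f_measure` is likewise illustrative. The sketch recomputes F from P and R for three MSRA-5000 rows of the table.

```python
def f_measure(precision, recall, beta_sq=0.3):
    """Weighted F-measure; beta_sq = 0.3 is the assumed conventional weight."""
    denom = beta_sq * precision + recall
    if denom == 0:
        return 0.0  # both precision and recall are zero
    return (1 + beta_sq) * precision * recall / denom


# (P, R, reported F) copied from the MSRA-5000 columns of the table.
msra_rows = {
    "AC": (0.5519, 0.4234, 0.5158),
    "MR": (0.8445, 0.7569, 0.8225),
    "OURS": (0.8517, 0.8174, 0.8435),
}

# Recompute F for each method and compare with the reported value.
recomputed = {
    name: round(f_measure(p, r), 4) for name, (p, r, _) in msra_rows.items()
}
for name, (_, _, reported) in msra_rows.items():
    print(f"{name}: recomputed F = {recomputed[name]:.4f}, reported F = {reported:.4f}")
```

For these three rows the recomputed values agree with the reported F to four decimal places, which supports the assumed weighting. Because $\beta^{2} < 1$, precision is weighted more heavily than recall.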

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Liu, Y.; Zhao, H. Saliency Detection Based on Low-Level and High-Level Features via Manifold-Space Ranking. Electronics 2023, 12, 449. https://doi.org/10.3390/electronics12020449