Micro-Expression Spotting Based on a Short-Duration Prior and Multi-Stage Feature Extraction

Abstract

1. Introduction

- Existing micro-expression (ME) spotting datasets are very small; without abundant data, a deep neural network tends to overfit, absorbing too much information specific to the training set, which degrades performance on the test set.

- Because ME movements are subtle, it is difficult to locate complete MEs in long video sequences; in other words, it is difficult to determine the boundaries of MEs.

- The temporal extent of expressions varies far more dramatically than the sizes of objects in an image (from 0.04 s to 4 s). This wide variation in duration also makes it very challenging to locate both MEs and macro-expressions (MaEs).

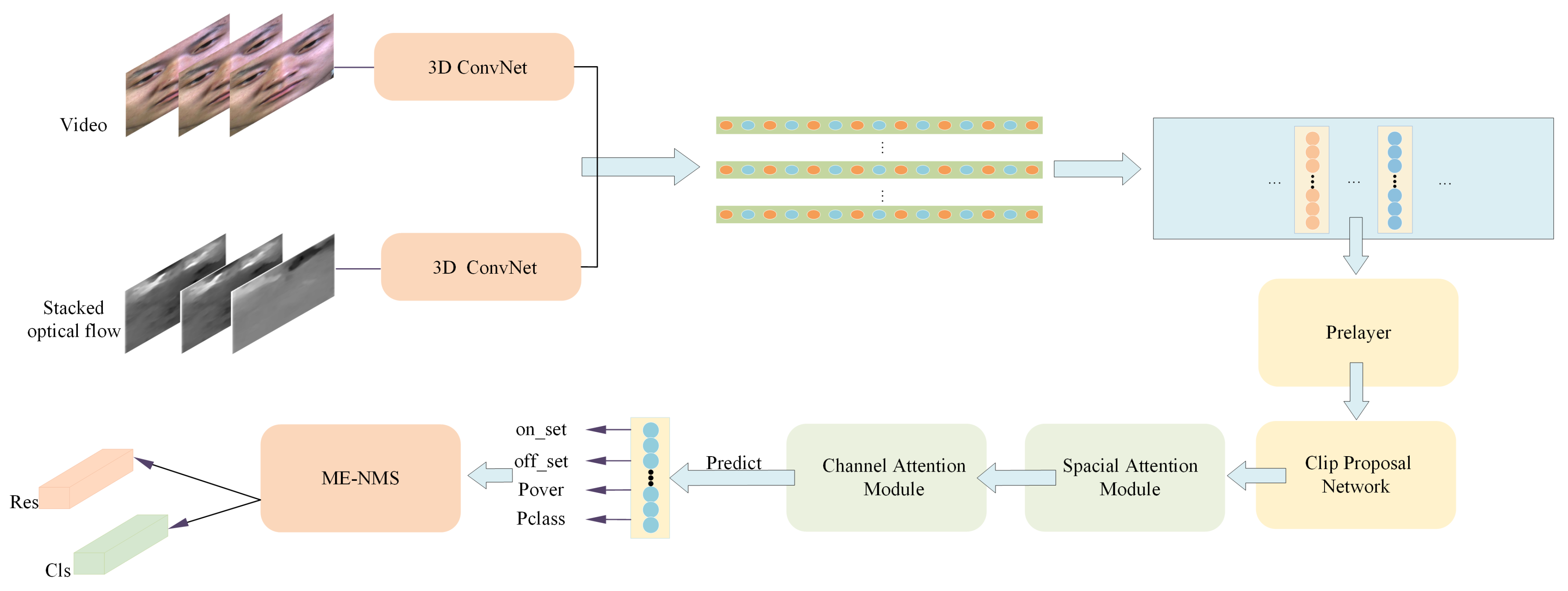

- We propose a multi-stage channel feature extraction module, named Prelayer, which fuses the optical-flow modality with RGB information and alleviates the problem of insufficient samples.

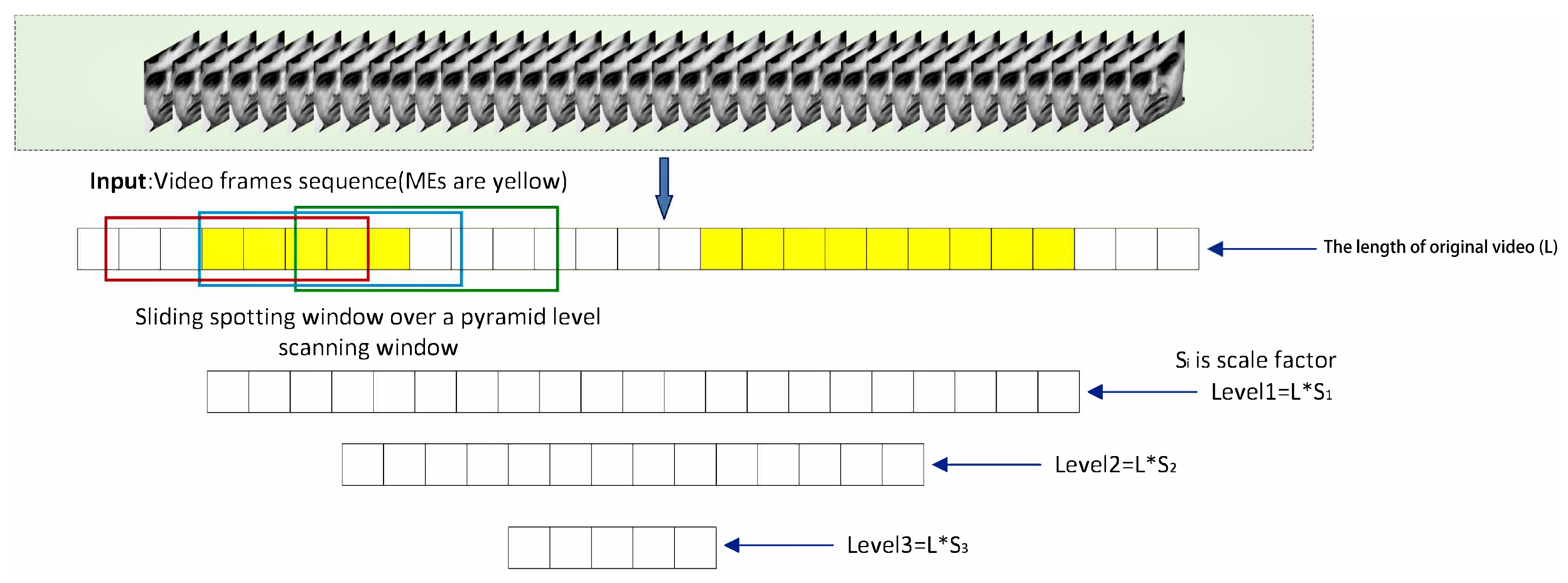

- We develop a multi-scale network that fuses features at multiple scales and uses an anchor-free mechanism to locate each ME’s boundaries accurately.

- A new post-processing network for MEs, named ME-NMS (ME non-maximum suppression), is used to improve the detection accuracy for extremely short fragments.
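For orientation, the conventional greedy NMS that ME-NMS is compared against can be sketched for 1D temporal proposals as follows. This is only the standard baseline, not the ME-NMS variant proposed in this paper; the function names, the `(start, end, score)` tuple layout, and the default threshold of 0.5 are illustrative assumptions.

```python
from typing import List, Tuple

# Hypothetical proposal layout: (start_frame, end_frame, confidence_score)
Proposal = Tuple[float, float, float]


def temporal_iou(a: Proposal, b: Proposal) -> float:
    """Intersection over union of two 1D intervals."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def temporal_nms(proposals: List[Proposal], iou_threshold: float = 0.5) -> List[Proposal]:
    """Standard greedy NMS: keep the best-scoring proposal, drop overlapping ones, repeat."""
    remaining = sorted(proposals, key=lambda p: p[2], reverse=True)
    kept: List[Proposal] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        # Discard every proposal that overlaps the kept one too strongly.
        remaining = [p for p in remaining if temporal_iou(best, p) <= iou_threshold]
    return kept


proposals = [(10, 20, 0.9), (12, 22, 0.8), (40, 45, 0.7)]
kept = temporal_nms(proposals)
print(kept)  # [(10, 20, 0.9), (40, 45, 0.7)]
```

In this toy input the second proposal overlaps the first with an IoU of 8/12, so it is suppressed, while the short third proposal survives because it overlaps nothing. For very short intervals a shift of a few frames changes the IoU sharply, which is one reason a fixed threshold can be a poor fit for brief fragments.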

2. Related Work

2.1. Action Localization

2.2. Micro-Expression Spotting

2.3. Non-Maximum Suppression

3. Our Method

3.1. Network Architecture

3.1.1. Multi-Stage Channel Feature Extraction

Algorithm 1. ME-NMS loss calculating procedure.

Output: Correct process

3.2. Modules

3.2.1. Clip Proposal Network

3.2.2. Spatio-Temporal Attention Block

3.2.3. ME-NMS Module

4. Experiments

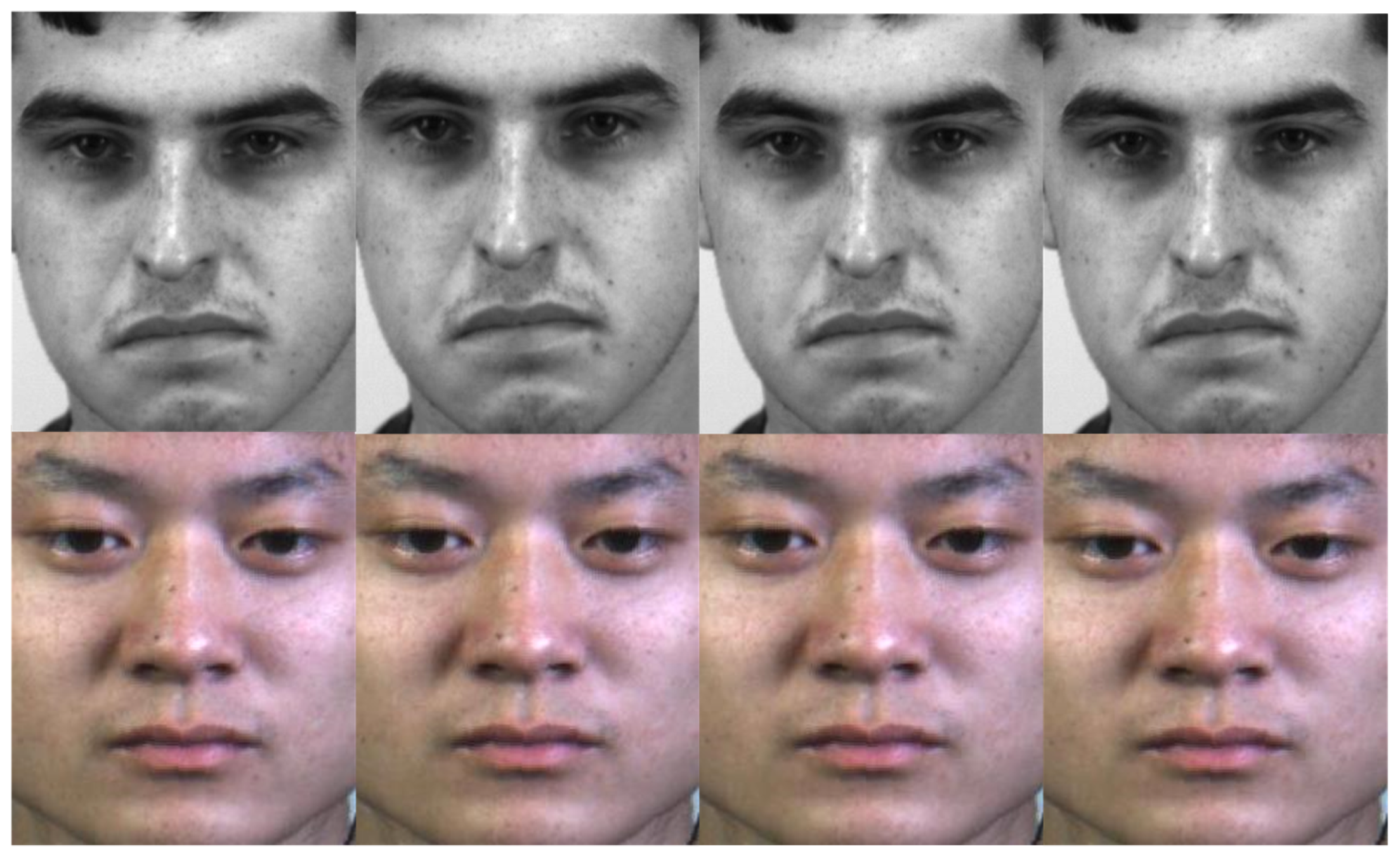

4.1. Database

4.2. Method

4.3. Loss Function Settings (Videos of Different Lengths)

- Anchor-free classification loss: based on the predicted classification scores, AEM-Net computes this loss with the standard cross-entropy loss.

- Anchor-free regression loss: the located temporal fragments are regressed to the start and end frames of micro- and macro-expressions. The regression loss adopts the smooth L1 loss [33].

- Anchor-based classification loss: calculated via the cross-entropy loss.

- Anchor-based overlap loss: the overlap is the intersection over union between a predicted instance and its closest ground truth; it is evaluated with the mean-square error loss.

- Anchor-based regression loss: given the regression target and the prediction, the anchor-based regression loss is defined as follows:

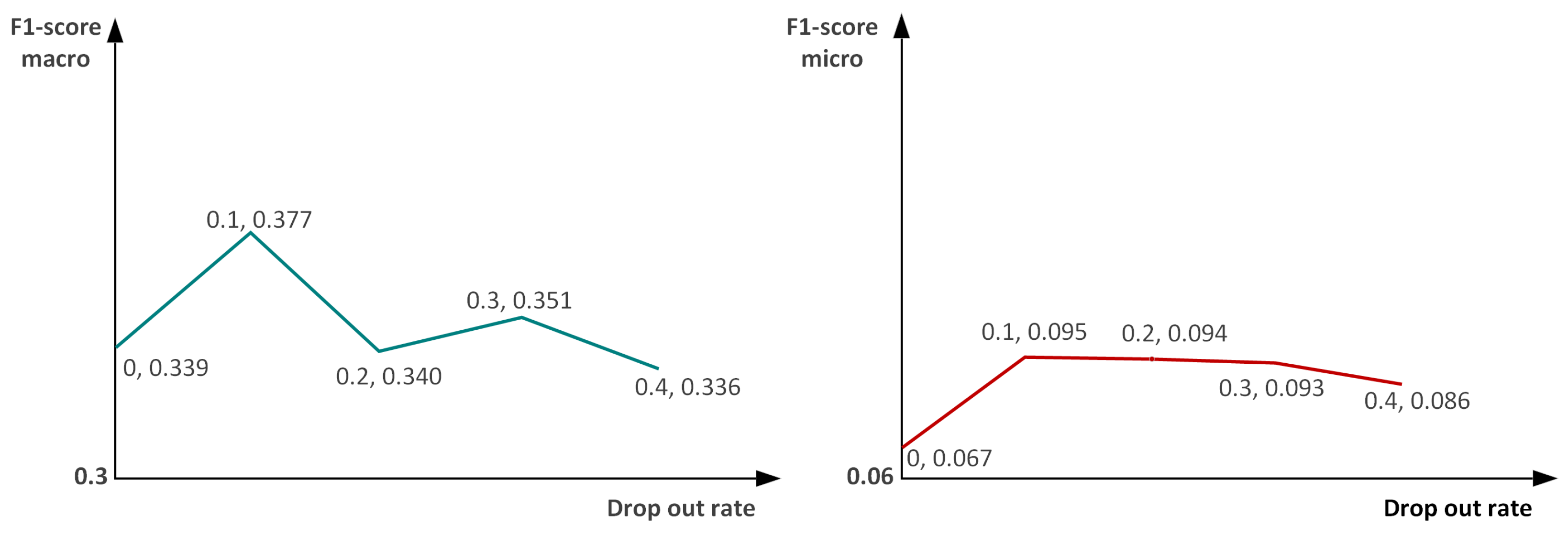
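The loss terms listed above can be illustrated with a minimal Python sketch. It assumes the textbook forms of cross-entropy and of the smooth L1 loss (taking the "smooth" regression loss to be that common form, with a hypothetical transition point `beta`), and it treats the overlap loss as the squared error between a predicted overlap score and the actual temporal IoU. All function names are illustrative and are not taken from the AEM-Net implementation; the anchor-based regression loss is left out because its exact form is given only by the formula above.

```python
import math
from typing import Sequence, Tuple

Segment = Tuple[float, float]  # (start_frame, end_frame)


def cross_entropy(probs: Sequence[float], target: int) -> float:
    """Cross-entropy for one sample, given class probabilities and the true class index."""
    eps = 1e-12  # guards against log(0)
    return -math.log(max(probs[target], eps))


def smooth_l1(pred: float, target: float, beta: float = 1.0) -> float:
    """Smooth L1: quadratic for small errors, linear for large ones."""
    diff = abs(pred - target)
    if diff < beta:
        return 0.5 * diff * diff / beta
    return diff - 0.5 * beta


def temporal_iou(pred: Segment, gt: Segment) -> float:
    """Intersection over union of two temporal segments."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0


def overlap_loss(pred_overlap: float, pred_seg: Segment, gt_seg: Segment) -> float:
    """Squared error between the predicted overlap score and the actual IoU."""
    return (pred_overlap - temporal_iou(pred_seg, gt_seg)) ** 2


def boundary_regression_loss(pred_seg: Segment, gt_seg: Segment) -> float:
    """Smooth L1 summed over the start-frame and end-frame errors."""
    return smooth_l1(pred_seg[0], gt_seg[0]) + smooth_l1(pred_seg[1], gt_seg[1])


print(boundary_regression_loss((10.5, 23.0), (10.0, 20.0)))  # 0.125 + 2.5 = 2.625
```

The example call shows the two regimes of smooth L1: the half-frame start error falls in the quadratic region, while the three-frame end error is penalized linearly, so a single badly placed boundary does not dominate the gradient.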

4.4. Experimental Parameters and Configurations

4.5. Results and Comparisons

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ben, X.; Ren, Y.; Zhang, J.; Wang, S.J.; Kpalma, K.; Meng, W.; Liu, Y.J. Video-based Facial Micro-Expression Analysis: A Survey of Datasets, Features and Algorithms. IEEE Trans. Pattern Anal. Mach. Intell. 2021.

- Li, X.; Wei, G.; Wang, J.; Zhou, Y. Multi-scale joint feature network for micro-expression recognition. Comput. Vis. Media 2021, 7, 407–417.

- Cai, L.; Li, H.; Dong, W.; Fang, H. Micro-expression recognition using 3D DenseNet fused Squeeze-and-Excitation Networks. Appl. Soft Comput. 2022, 119, 108594.

- Zhou, L.; Mao, Q.; Huang, X.; Zhang, F.; Zhang, Z. Feature refinement: An expression-specific feature learning and fusion method for micro-expression recognition. Pattern Recognit. 2022, 122, 108275.

- Porter, S.; Brinke, L. Reading between the lies: Identifying concealed and falsified emotions in universal facial expressions. Psychol. Sci. 2008, 19, 508–514.

- Davison, A.K.; Yap, M.H.; Costen, N.; Tan, K.; Lansley, C.; Leightley, D. Micro-facial movements: An investigation on spatiotemporal descriptors. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 111–123.

- Liong, S.-T.; See, J.; Phan, R.C.-W.; Le Ngo, A.C.; Oh, Y.-H.; Wong, K. Subtle expression recognition using optical strain weighted features. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014; pp. 644–657.

- Wu, J.; Xu, J.; Lin, D.; Tu, M. Optical Flow Filtering-Based Micro-Expression Recognition Method. Electronics 2020, 9, 2056.

- Wang, S.J.; Yan, W.J.; Li, X.; Zhao, G.; Fu, X. Micro-expression recognition using dynamic textures on tensor independent color space. Pattern Recognit. 2014, 4678–4683.

- Lin, T.; Zhao, X.; Shou, Z. Single shot temporal action detection. In Proceedings of the ACM Multimedia Conference, Mountain View, CA, USA, 23–27 October 2017; pp. 988–996.

- Xie, Z.H.; Cheng, S.J.; Liu, X.Y.; Fan, J.W. Adaptive enhanced micro-expression spotting network based on multi-stage features extraction. In Proceedings of the Chinese Conference on Biometric Recognition (CCBR), Beijing, China, 11–13 November 2022; Lecture Notes in Computer Science. Volume 13628, pp. 289–296.

- Chao, Y.W.; Vijayanarasimhan, S.; Seybold, B.; Ross, D.A.; Deng, J.; Sukthankar, R. Rethinking the faster R-CNN architecture for temporal action localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2018; pp. 1130–1139.

- Le, Y.; Houwen, P.; Dingwen, Z.; Jianlong, F.; Junwei, H. Revisiting anchor mechanisms for temporal action localization. IEEE Trans. Image Process. 2020, 29, 8535–8548.

- Zhang, Z.; Chen, T.; Meng, H.; Liu, G.; Fu, X. Smeconvnet: A convolutional neural network for spotting spontaneous facial micro-expression from long videos. IEEE Access 2018, 6, 143–171.

- Antti, M.; Guoying, Z.; Matti, P. Spotting rapid facial movements from videos using appearance-based feature difference analysis. In Proceedings of the International Conference on Pattern Recognition, Stockholm, Sweden, 24–28 August 2014; pp. 1722–1727.

- Adrian, D.K.; Moi, Y.H.; Cliff, L. Micro-facial movement detection using individualised baselines and histogram based descriptors. In Proceedings of the International Conference on Systems, Man, and Cybernetics, San Antonio, TX, USA, 2–5 October 2015; pp. 1864–1869.

- Adrian, D.; Walied, M.; Cliff, L.; Choon, N.C.; Moi, Y.H. Objective micro-facial movement detection using facs-based regions and baseline evaluation. In Proceedings of the International Conference on Automatic Face and Gesture Recognition (FG), Xi’an, China, 15–19 May 2018; pp. 642–649.

- Devangini, P.; Guoying, Z.; Matti, P. Spatiotemporal integration of optical flow vectors for micro-expression detection. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Auckland, New Zealand, 10–14 February 2015; pp. 369–380.

- Thuong, T.K.; Xiaopeng, H.; Guoying, Z. Sliding window based micro-expression spotting: A benchmark. In Proceedings of the International Conference on Advanced Concepts for Intelligent Vision Systems, Antwerp, Belgium, 18–21 September 2017; pp. 542–553.

- Sujing, W.; Shuhuang, W.; Xingsheng, Q.; Jingxiu, L.; Xiaolan, F. A main directional maximal difference analysis for spotting facial movements from long-term videos. Neurocomputing 2017, 230, 382–389.

- Genbing, L.; See, J.; Laikuan, W. Shallow optical flow three-stream CNN for macro- and micro-expression spotting from long videos. In Proceedings of the 2021 IEEE International Conference on Image Processing, Anchorage, AK, USA, 19–22 September 2021; pp. 2643–2647.

- Guo, Y.; Li, B.; Ben, X.; Ren, Y.; Zhang, J.; Yan, R.; Li, Y. A Magnitude and Angle Combined Optical Flow Feature for Microexpression Spotting. IEEE Multimed. 2021, 28, 29–39.

- Wangwang, Y.; Jingwen, J.; Yongjie, L. LSSNET: A two-stream convolutional neural network for spotting macro-and micro-expression in long videos. In Proceedings of the ACM Conference on Multimedia, Virtual, 24 October 2021; pp. 4745–4749.

- Xiaolong, W.; Girshick, R.; Gupta, A.; Kaiming, H. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. SoftNMS–Improving Object Detection with One Line of Code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- Songtao, L.; Di, H.; Yunhong, W. Adaptive NMS: Refining pedestrian detection in a crowd. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6459–6468.

- Xin, H.; Zheng, G.; Zequn, J.; Yoshie, O. NMS by Representative Region: Towards Crowded Pedestrian Detection by Proposal Pairing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10750–10759.

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

- Gaidon, A.; Harchaoui, Z.; Schmid, C. Temporal localization of actions with actoms. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2782–2795.

- Qu, F.; Wang, S.J.; Yan, W.J.; Li, H.; Wu, S.; Fu, X. CAS(ME)2: A database for spontaneous macro-expression and micro-expression spotting and recognition. IEEE Trans. Affect. Comput. 2017, 9, 424–436.

- Yap, C.; Kendrick, C.; Yap, M. Samm long videos: A spontaneous facial micro-and macro-expressions dataset. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition, Buenos Aires, Argentina, 16–20 November 2020; pp. 194–199.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Tian, Z.; Shen, C.; Chen, H.; He, T. FCOS: Fully convolutional one-stage object detection. In Proceedings of the IEEE Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Yap, C.; Yap, M.; Davison, A.; Cunningham, R. 3D-CNN for Facial Micro- and Macro-expression Spotting on Long Video Sequences using Temporal Oriented Reference Frame. arXiv 2021, arXiv:2105.06340.

- Sujing, W.; Ying, H.; Jingting, L.; Xiaolan, F. MESNet: A convolutional neural network for spotting multi-scale micro-expression intervals in long videos. IEEE Trans. Image Process. 2021, 3956–3969.

- Zhang, L.; Li, J.; Wang, S.; Duan, X.; Yan, W.; Xie, H.; Huang, S. Spatio-temporal fusion for macro-and micro-expression spotting in long video sequences. In Proceedings of the 2020 15th IEEE International Conference on Automatic Face and Gesture Recognition, Buenos Aires, Argentina, 16–20 November 2020; pp. 245–252.

| Models | CAS(ME)² MaE | CAS(ME)² ME | CAS(ME)² ALL | SAMM-LV MaE | SAMM-LV ME | SAMM-LV ALL |
|---|---|---|---|---|---|---|
| Yap [34] | 0.216 | 0.025 | 0.142 | 0.192 | 0.043 | 0.107 |
| MESNet [35] | - | - | 0.036 | - | - | 0.088 |
| Zhang [36] | 0.213 | 0.022 | 0.140 | 0.07 | 0.133 | 0.100 |
| LSSNet [23] | 0.368 | 0.050 | 0.314 | 0.314 | 0.191 | 0.286 |
| AEM-Net | 0.377 | 0.095 | 0.325 | 0.311 | 0.297 | 0.300 |
| AEM-SE | 0.315 | 0.104 | 0.286 | 0.260 | 0.315 | 0.264 |
| AEM-F | 0.293 | 0.129 | 0.289 | 0.355 | 0.303 | 0.313 |
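As a reading aid for the F1 columns, the sketch below computes the F1 score from true-positive, false-positive, and false-negative counts. It assumes that the ALL column pools the detection counts of both expression types before computing F1, which is common practice in spotting benchmarks but is not stated in the table itself; the counts used are hypothetical and are not taken from the experiments.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN); returns 0.0 when there is nothing to score."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts, chosen only to illustrate the arithmetic.
mae = {"tp": 30, "fp": 50, "fn": 40}
me = {"tp": 2, "fp": 30, "fn": 20}

f1_mae = f1_score(**mae)
f1_me = f1_score(**me)
# Pooled score: sum the counts first, then compute F1 (assumed meaning of "ALL").
f1_all = f1_score(mae["tp"] + me["tp"], mae["fp"] + me["fp"], mae["fn"] + me["fn"])

print(round(f1_mae, 3), round(f1_me, 3), round(f1_all, 3))  # 0.4 0.074 0.314
```

Under this pooling assumption the overall score is not the average of the two class scores: the class with more instances dominates, which is consistent with the ALL values in the table lying much closer to the MaE column than to the ME column.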

| Method | F1_Score_Macro | F1_Score_Micro | F1_Score |
|---|---|---|---|
| Anchor-free (Af) | 0.219 | 0.000 | 0.194 |
| Anchor-based (Ab) | 0.341 | 0.060 | 0.295 |
| Ab+Af | 0.351 | 0.093 | 0.315 |

| Method | F1_Score_Macro | F1_Score_Micro | F1_Score |
|---|---|---|---|
| Prelayer | 0.351 | 0.093 | 0.315 |
| Non_pre | 0.325 | 0.049 | 0.275 |

| Method | F1_Score_Macro | F1_Score_Micro | F1_Score |
|---|---|---|---|
| NMS | 0.351 | 0.086 | 0.312 |
| ME-NMS | 0.351 | 0.093 | 0.315 |
| NMS-Nom | 0.312 | 0.051 | 0.272 |

| Threshold | 0.3 | 0.35 | 0.4 | 0.45 | 0.5 | 0.55 | 0.6 |
|---|---|---|---|---|---|---|---|
| MaE | 0.367 | 0.356 | 0.362 | 0.377 | 0.365 | 0.354 | 0.342 |
| ME | 0.085 | 0.073 | 0.096 | 0.095 | 0.078 | 0.073 | 0.077 |
| ALL | 0.321 | 0.325 | 0.307 | 0.325 | 0.313 | 0.311 | 0.295 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, Z.; Cheng, S. Micro-Expression Spotting Based on a Short-Duration Prior and Multi-Stage Feature Extraction. Electronics 2023, 12, 434. https://doi.org/10.3390/electronics12020434
