Human Activity Recognition Based on Continuous-Wave Radar and Bidirectional Gate Recurrent Unit

Abstract

1. Introduction

- We analyze realistic, continuous sequences of human activities rather than isolated, pre-segmented activities. In such sequences, an activity can begin at any time and last for an arbitrary duration, and the body repositions itself between activities to prepare for the next one.

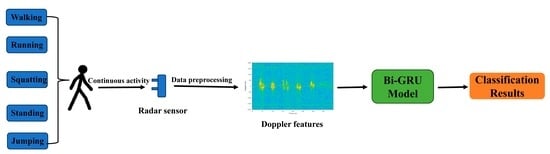

- We extract Doppler features from continuous-wave (CW) radar data and use stacked bidirectional gated recurrent unit (Bi-GRU) networks, a deep learning (DL) model, to classify these continuous activity sequences. Bi-GRUs suit this task because they capture temporal correlations in the radar data in both the forward and backward directions. We also examine how data-processing choices and key hyperparameters affect performance.

- We base our analysis on experimental data collected with a CW radar from three participants performing different combinations of five activities. We then design three train/test permutations, shown in the table in Section 4.3, in which the model is always tested on a participant who was not used for training, which makes the evaluation more credible.
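To make the bidirectional idea in the second contribution concrete, the following is a minimal NumPy sketch of a stacked Bi-GRU forward pass that labels every frame of a Doppler sequence. It is an illustration under stated assumptions, not the authors' implementation: weights are random and untrained, biases are omitted, and the names `GRUCell`, `bi_gru_layer`, and `stacked_bi_gru` as well as the sizes (20 frames, 128 Doppler bins, 16 hidden units, 2 layers) are hypothetical. Only the five output classes come from the text.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUCell:
    """Single GRU cell with random, untrained weights (biases omitted)."""

    def __init__(self, input_size, hidden_size, rng):
        scale = 1.0 / np.sqrt(hidden_size)
        shape = (input_size + hidden_size, hidden_size)
        self.W_z = rng.uniform(-scale, scale, shape)  # update gate
        self.W_r = rng.uniform(-scale, scale, shape)  # reset gate
        self.W_h = rng.uniform(-scale, scale, shape)  # candidate state
        self.hidden_size = hidden_size

    def step(self, x_t, h_prev):
        xh = np.concatenate([x_t, h_prev])
        z = sigmoid(xh @ self.W_z)
        r = sigmoid(xh @ self.W_r)
        h_cand = np.tanh(np.concatenate([x_t, r * h_prev]) @ self.W_h)
        # Blend the previous state and the candidate state via the update gate.
        return (1.0 - z) * h_prev + z * h_cand


def run_gru(cell, seq):
    """Run one GRU over a (time, features) array; return all hidden states."""
    h = np.zeros(cell.hidden_size)
    outputs = []
    for x_t in seq:
        h = cell.step(x_t, h)
        outputs.append(h)
    return np.stack(outputs)


def bi_gru_layer(fwd_cell, bwd_cell, seq):
    """Concatenate a forward pass and a time-reversed pass, frame by frame."""
    out_f = run_gru(fwd_cell, seq)
    out_b = run_gru(bwd_cell, seq[::-1])[::-1]  # re-align to original order
    return np.concatenate([out_f, out_b], axis=1)


def stacked_bi_gru(seq, hidden_size, num_layers, num_classes, seed=0):
    """Stack Bi-GRU layers, then apply a per-frame softmax classifier."""
    rng = np.random.default_rng(seed)
    x = seq
    for _ in range(num_layers):
        in_size = x.shape[1]
        fwd = GRUCell(in_size, hidden_size, rng)
        bwd = GRUCell(in_size, hidden_size, rng)
        x = bi_gru_layer(fwd, bwd, x)
    W_out = rng.uniform(-0.1, 0.1, (x.shape[1], num_classes))
    logits = x @ W_out
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


# Hypothetical input: 20 time frames, each with 128 Doppler bins.
spectrogram = np.random.default_rng(1).standard_normal((20, 128))
probs = stacked_bi_gru(spectrogram, hidden_size=16, num_layers=2, num_classes=5)
print(probs.shape)
```

Because the backward pass reads the sequence from the end, the output at an early frame already depends on later frames, which is what lets the classifier use the context on both sides of a transition between activities.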

2. Related Works

3. Proposed Bi-GRU Algorithm

3.1. System Description and Data Processing

3.2. Optimal Parameters for Human Activity Classification

- (1) Number of Bi-GRU layers

- (2) Number of Bi-GRU neurons

- (3) Learning rate
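One simple way to explore these three parameters is an exhaustive grid search. The sketch below is only an illustration: the candidate values, the helper names `grid` and `select_best`, and the toy scoring function are all hypothetical, and the source does not state here which search procedure or values were used. In practice `evaluate` would train a Bi-GRU with the given configuration and return its validation accuracy.

```python
from itertools import product

# Hypothetical candidate values for the three tuned parameters.
search_space = {
    "num_layers": [1, 2, 3],
    "num_neurons": [32, 64, 128],
    "learning_rate": [1e-2, 1e-3, 1e-4],
}


def grid(space):
    """Yield every combination of the candidate values as a dict."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


def select_best(space, evaluate):
    """Return the configuration with the highest evaluate(config) score."""
    return max(grid(space), key=evaluate)


def toy_score(config):
    """Stand-in for a real train-and-validate run (assumption, not real data)."""
    return -(
        abs(config["num_layers"] - 2)
        + abs(config["num_neurons"] - 64) / 64
        + abs(config["learning_rate"] - 1e-3)
    )


best = select_best(search_space, toy_score)
print(best)
```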

4. Experiment and Results

4.1. Measurement Hardware and Its Parameters

4.2. Experiment Scenario Setup and Data Collection

4.3. Training and Testing Set Composition

4.4. Performance Analysis

4.5. Performance Comparison

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest


| Parameter | Value |
|---|---|
| Radar type | CW (1 Tx and 1 Rx) |
| CW frequency | 24,000 MHz |
| Sampling period | 0.0004 s |
| Number of samples | 128 |
| Sampling time | 0.0512 s |
| Velocity resolution | 0.122 m/s |
| Low-pass filter (theoretical) | 1250 Hz |
| Low-pass filter (current hardware) | 915 Hz |
| High-pass filter (theoretical) | 19.53 Hz |
| High-pass filter (current hardware) | 20 Hz |
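The theoretical entries in this table can be reproduced from the carrier frequency, the sampling period, and the number of samples. The sketch below assumes the standard CW Doppler relation f_d = 2v/λ and reads the theoretical low-pass cutoff as the Nyquist frequency and the theoretical high-pass cutoff as the width of one Doppler bin. That reading is our assumption, not something the table states, and the function name `derive_radar_parameters` is hypothetical. The hardware cutoffs (915 Hz and 20 Hz) are measured properties and are not derived here.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def derive_radar_parameters(carrier_hz, sampling_period_s, n_samples):
    """Compute dependent radar quantities from the three independent ones."""
    wavelength_m = SPEED_OF_LIGHT / carrier_hz
    sampling_rate_hz = 1.0 / sampling_period_s
    frame_time_s = n_samples * sampling_period_s
    # One FFT bin over a frame of length T spans 1/T hertz.
    doppler_resolution_hz = 1.0 / frame_time_s
    # f_d = 2 v / wavelength, so v = f_d * wavelength / 2.
    velocity_resolution_mps = doppler_resolution_hz * wavelength_m / 2.0
    nyquist_hz = sampling_rate_hz / 2.0
    return {
        "wavelength_m": wavelength_m,
        "frame_time_s": frame_time_s,
        "doppler_resolution_hz": doppler_resolution_hz,
        "velocity_resolution_mps": velocity_resolution_mps,
        "nyquist_hz": nyquist_hz,
    }


params = derive_radar_parameters(
    carrier_hz=24e9, sampling_period_s=0.0004, n_samples=128
)
for name, value in params.items():
    print(f"{name}: {value:.4f}")
```

Under these assumptions the frame time, the Doppler bin width, the velocity resolution, and the Nyquist frequency come out at the tabulated 0.0512 s, 19.53 Hz, 0.122 m/s, and 1250 Hz after rounding.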

| No. | Gender | Age (yr) | Weight (kg) | Height (cm) |
|---|---|---|---|---|
| 1 | Male | 26 | 81 | 177 |
| 2 | Male | 23 | 60 | 177 |
| 3 | Male | 31 | 72 | 170 |

| No. | Train Set | Test Set |
|---|---|---|
| 1 | 2nd target, 3rd target | 1st target |
| 2 | 1st target, 3rd target | 2nd target |
| 3 | 1st target, 2nd target | 3rd target |
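The three permutations in this table amount to a leave-one-participant-out scheme: each participant is held out for testing exactly once while the other two are used for training. A minimal sketch of how such splits can be generated follows; the function name `leave_one_participant_out` is hypothetical, and the integers stand for the participant numbers in the table.

```python
def leave_one_participant_out(participants):
    """Yield (train, test) lists, holding out each participant once."""
    for held_out in participants:
        train = [p for p in participants if p != held_out]
        yield train, [held_out]


splits = list(leave_one_participant_out([1, 2, 3]))
for number, (train, test) in enumerate(splits, start=1):
    print(f"Permutation {number}: train on {train}, test on {test}")
```

Splitting by participant rather than by recording keeps every test subject unseen during training, so the reported accuracy reflects generalization to a new person.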

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, J.; Sun, C.; Jang, K.; Yang, S.; Kim, Y. Human Activity Recognition Based on Continuous-Wave Radar and Bidirectional Gate Recurrent Unit. Electronics 2023, 12, 4060. https://doi.org/10.3390/electronics12194060
