Enhancing LAN Failure Predictions with Decision Trees and SVMs: Methodology and Implementation

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

- Feature selection: The decision tree algorithm begins by selecting the most relevant features (network parameters) from the dataset. These features are chosen based on their ability to discriminate between LAN failure instances and non-failure instances.

- Splitting criteria: The algorithm then decides on a splitting criterion for each node in the tree. It chooses the feature and threshold that best separates the data into distinct groups. Common splitting criteria include Gini impurity, entropy, or mean-squared error, depending on the type of problem (classification or regression).

- Recursive splitting: The decision tree recursively splits the dataset at each node based on the selected splitting criterion. It continues this process until certain stopping conditions are met, such as reaching a maximum tree depth or having too few samples in a node.

- Leaf node assignment: Once the tree is built, each leaf node represents a prediction. For LAN failure prediction, leaf nodes are labeled as either “Failure” or “Non-failure” based on the majority class of instances within the node.

- Predictions: To make predictions, the algorithm follows the tree’s decision path from the root node to a leaf node based on the input features of a LAN instance. The prediction is the class label associated with the reached leaf node.
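The splitting step described above can be illustrated with a minimal sketch that searches one feature for the threshold giving the lowest weighted Gini impurity. The CPU-load readings and labels are hypothetical, and the helper names `gini` and `best_threshold` are illustrative rather than taken from the authors' code.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels (0.0 for a pure node)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_threshold(values, labels):
    """Return (threshold, weighted_gini) for the best binary split on one feature."""
    pairs = sorted(zip(values, labels))
    best = (None, gini(labels))  # start from the unsplit node
    for i in range(1, len(pairs)):
        low, high = pairs[i - 1][0], pairs[i][0]
        if low == high:
            continue  # no threshold fits between equal values
        threshold = (low + high) / 2
        left = [y for x, y in pairs if x <= threshold]
        right = [y for x, y in pairs if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(pairs)
        if score < best[1]:
            best = (threshold, score)
    return best


# Hypothetical CPU-load readings (%) with failure labels (1 = failure).
cpu_load = [12.0, 25.0, 31.0, 78.0, 85.0, 92.0]
failure = [0, 0, 0, 1, 1, 1]

threshold, impurity = best_threshold(cpu_load, failure)
print(threshold, impurity)
```

A full decision tree repeats this search over every feature at every node and recurses on the two resulting subsets until a stopping condition is met.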

- Feature selection and transformation: SVM begins by selecting relevant features and potentially transforming them into a higher-dimensional space using kernel functions (e.g., RBF kernel). This transformation helps make complex decision boundaries more linear.

- Hyperplane selection: SVM aims to find a hyperplane that best separates LAN failure instances from non-failure instances. It selects the hyperplane that maximizes the margin, which is the distance between the hyperplane and the nearest data points from both classes (support vectors).

- Margin maximization: SVM strives to maximize the margin while minimizing classification errors. Instances that fall within the margin or on the wrong side of the hyperplane are penalized. This creates a robust decision boundary.

- Kernel trick: If a kernel function is used, SVM applies it to map the data into a higher-dimensional space, where a linear hyperplane can effectively separate the classes. The choice of the kernel function can influence the model’s capacity to capture complex patterns.

- Predictions: To make predictions, SVM evaluates the input LAN instance in the transformed space and determines which side of the hyperplane it falls on. Depending on the side, the instance is classified as “Failure” or “Non-failure”.
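As a rough illustration of the kernel step, the sketch below fits a linear and an RBF support vector classifier to synthetic data in which the "Failure" class lies outside a circle, so no straight line separates the classes in the original feature space. The data, the value `C=1.0`, and the choice `gamma="scale"` are assumptions made for the example only.

```python
import numpy as np
from sklearn.svm import SVC

rng = np.random.default_rng(0)

# Synthetic two-feature data: class 1 ("Failure") lies outside a circle of radius 0.6.
X = rng.uniform(-1.0, 1.0, size=(200, 2))
y = (np.hypot(X[:, 0], X[:, 1]) > 0.6).astype(int)

linear_svm = SVC(kernel="linear", C=1.0).fit(X, y)
rbf_svm = SVC(kernel="rbf", C=1.0, gamma="scale").fit(X, y)

print("linear training accuracy:", linear_svm.score(X, y))
print("rbf training accuracy:   ", rbf_svm.score(X, y))
print("support vectors per class (rbf):", rbf_svm.n_support_)

# The sign of the decision function shows on which side of the boundary a point falls.
sample = np.array([[0.0, 0.0], [0.9, 0.9]])
print(rbf_svm.decision_function(sample), rbf_svm.predict(sample))
```

The parameter `C` controls the penalty for points inside the margin or on the wrong side of the boundary: a larger value tolerates fewer violations at the cost of a narrower margin.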

- A dataset was collected featuring attributes such as CPU load, memory usage, temperature, network traffic, and a binary label indicating device failure (1 for failure, 0 for normal operation).

- The data were pre-processed, which involved cleaning, normalizing, and encoding categorical variables if necessary.

- The data preprocessing process began by identifying missing values in the dataset. Network monitoring data can sometimes have missing entries due to network interruptions or data collection issues. Several strategies can be employed to handle missing values. In our case, for numeric features, missing values were replaced by the mean or median of the available data in the same feature. However, for categorical features, missing values were replaced by the mode (the most frequent category) of that feature.

- For nominal categorical variables (where there is no inherent order), one-hot encoding was typically used. Each category was transformed into a binary column (0 or 1) representing its presence or absence. This ensured that the model did not assume any ordinal relationships among categories.

- The dataset was divided into training and testing sets (50% for each one).

- A decision tree classifier was trained on the training dataset using a machine learning library, such as scikit-learn in Python.

- The model’s performance was evaluated; adjustments were made by tweaking parameters and pruning the tree to optimize performance.

- The trained model was used to predict future LAN failures based on the collected data.
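The workflow listed above (imputation, one-hot encoding, a 50/50 split, and training a decision tree with scikit-learn) might look roughly like the following. The toy data and the column names `cpu_load`, `temperature`, `device_type`, and `failure` are hypothetical, and `max_depth=3` is only one of the depth limits mentioned in the text.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Hypothetical toy data with gaps; 1 = failure, 0 = normal operation.
df = pd.DataFrame({
    "cpu_load": [10.0, 15.0, np.nan, 80.0, 90.0, 85.0, 12.0, 95.0],
    "temperature": [40.0, 41.0, 39.0, 70.0, np.nan, 72.0, 38.0, 75.0],
    "device_type": ["switch", "router", None, "switch",
                    "switch", "router", "switch", "router"],
    "failure": [0, 0, 0, 1, 1, 1, 0, 1],
})

# Numeric gaps are filled with the column mean, categorical gaps with the mode.
for column in ["cpu_load", "temperature"]:
    df[column] = df[column].fillna(df[column].mean())
df["device_type"] = df["device_type"].fillna(df["device_type"].mode()[0])

# One-hot encode the nominal column so that no ordering is implied.
X = pd.get_dummies(df.drop(columns="failure"), columns=["device_type"])
y = df["failure"]

# 50% of the rows are held out for testing.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=0
)

tree = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)
print("test accuracy:", tree.score(X_test, y_test))
```

With so few rows the reported accuracy is not meaningful; the sketch only shows how the steps fit together.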

3.1. Input Data

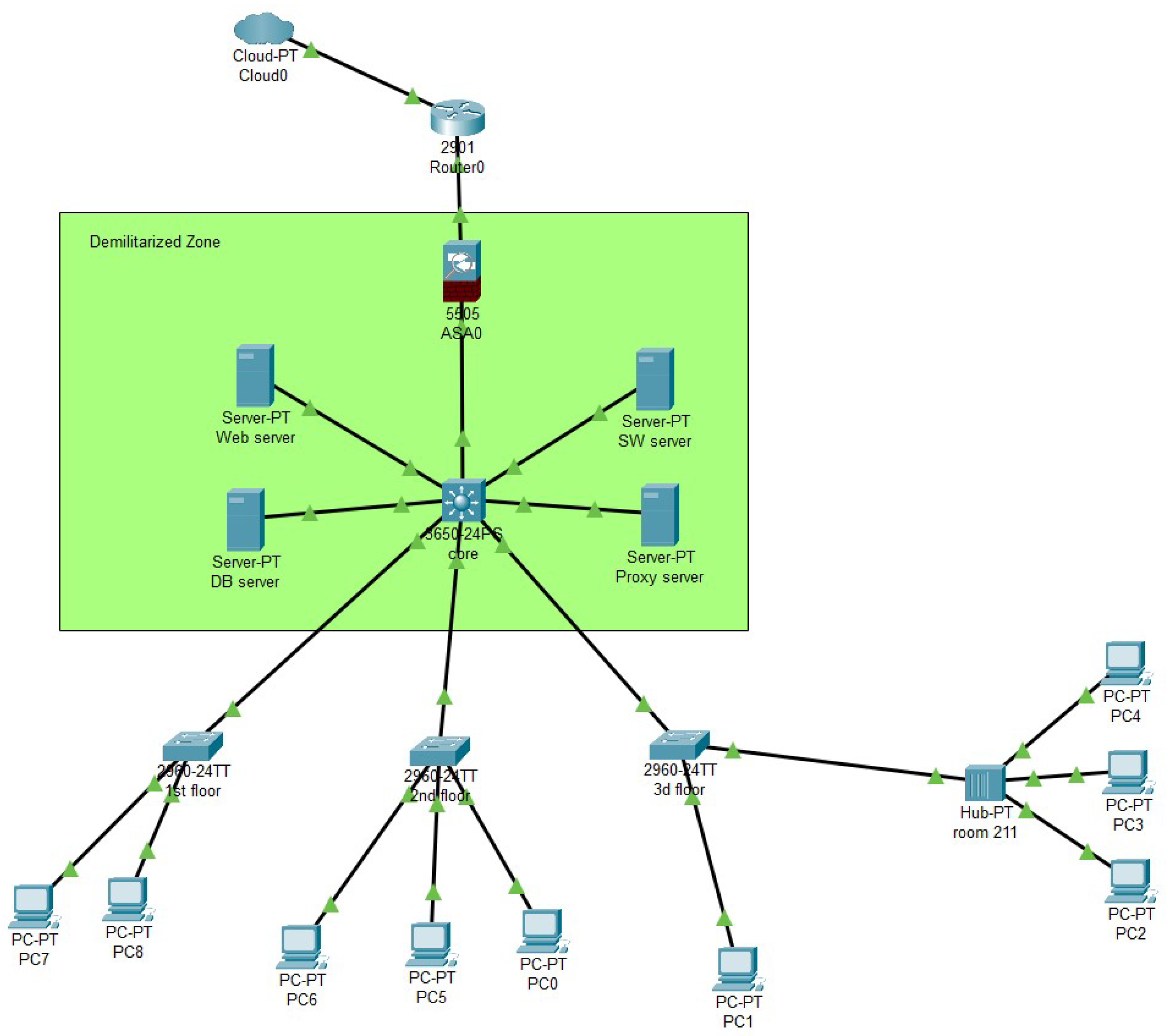

- The data are gathered from various sources such as logs, alarms, and other informational channels. The monitoring system plays a vital role in this process, cataloging defect occurrences and offering comprehensive data about these flaws. The collected data are then stored in a database system, paving the way for the crucial step of data analytics. This step encompasses advanced data analytics, data mining, machine learning, and cloud computing technologies. Data collection from network devices using a network monitoring program was carried out through the SNMP protocol.

- SNMP configuration on network devices: SNMP (simple network management protocol) is a standard network management protocol that allows network administrators to monitor and manage devices within a network. To collect data from network devices, SNMP was configured on each device. This involved setting SNMP parameters, such as the device address, the port used for SNMP, and the SNMP protocol version.

- Network monitoring program installation: After SNMP was configured on the devices, a network monitoring program (PRTG Network Monitor in our case) was installed. It used the SNMP protocol to collect data from the devices. The program can be installed on a computer or server located within the same network as the monitored devices.

- Monitoring rule creation: Monitoring rules determine which data are collected from each device within the network. The final training dataset consisted of a feature matrix of indicators collected by the monitoring system: CPU load, memory usage, temperature of network equipment components, network traffic, number of alarms, packet loss, and equipment availability. A binary target vector was created, equal to 1 for an equipment breakdown and equal to 0 for normal operation.

- Monitoring initiation: After creating rules and starting the monitoring process, the PRTG network monitor collected and recorded data. Finally, data were saved in the program’s database.

- Data analysis: After data collection, analysis can be conducted using the monitoring program. The program can provide charts and diagrams that help analyze metric changes over time. Additionally, the program can alert administrators to potential network issues based on established metric threshold values. In our case, we limited ourselves to exporting data in “.csv” format for each individual network device.
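A minimal sketch of how per-device “.csv” exports could be combined into one table is given below. In-memory strings stand in for the exported files, and the device names and column headers are hypothetical; a real export from the monitoring program may use different headers and separators.

```python
import io

import pandas as pd

# Stand-ins for per-device ".csv" exports (hypothetical names and columns).
# With real files, pass each file path to pd.read_csv instead.
exports = {
    "switch-01": io.StringIO(
        "Date,CPU load,Temperature\n"
        "10.12.2020,8.12,42.0\n"
        "11.12.2020,9.40,43.5\n"
    ),
    "switch-02": io.StringIO(
        "Date,CPU load,Temperature\n"
        "10.12.2020,35.00,51.0\n"
    ),
}

frames = []
for device, handle in exports.items():
    frame = pd.read_csv(handle)
    frame["device"] = device  # remember which device each row came from
    frames.append(frame)

dataset = pd.concat(frames, ignore_index=True)
print(dataset)
```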

3.2. Dataset

- Date: The date on which the equipment status message was recorded. The date format used was “DD.MM.YYYY”, for example, “10.12.2020”. This column was subsequently removed as it did not offer any informative value.

- Uptime: The number of days the equipment functioned without any failures. The recorded format is numerical; for example, “95.00” indicates the switch operated for 95 consecutive days without interruptions or failures.

- Downtime: A metric denoting the period during which a specific switch or device in the network is either inaccessible or not functioning properly. This could occur due to various reasons, such as hardware failures, software bugs, or connectivity issues. The recorded format is percentage-based; for example, 0% indicates 24 h of uninterrupted operation, whereas 0.1% indicates a downtime of 0.1% of a 24 h period, which equates to about 1.4 min (0.001 × 1440 min).

- CPU load: The CPU load or utilization of the switch, recorded in a numerical format. For example, “8.12” signifies a CPU load of 8.12% of its full 100% capacity.

- CPU 1: In Catalyst 6500/6000 switches, there are two CPUs. One is the network management processor (NMP) or switch processor (SP), and the other is the multi-layer switch feature card (MSFC) or routing processor (RP). This entry captures the load on the Switch Processor (SP), which performs the following functions:

- Participates in the learning and aging of MAC addresses. Note: MAC address learning is also known as path setup.

- Initiates protocols and processes for network management, such as the spanning tree protocol (STP), Cisco discovery protocol (CDP), VLAN trunking protocol (VTP), dynamic trunking protocol (DTP), and port aggregation protocol (PAgP).

- Handles network management traffic directed to the switch’s CPU, such as Telnet, HTTP, and SNMP traffic. The recorded format is numerical, for example, “8.76”.

- Available memory for Processor 1: This refers to the numerical value representing the available memory for Processor 1 at the time of measurement. The data are recorded in a numerical format, with the unit of measurement being megabytes (MB). For instance, a value of “52.00” signifies that 52 MB of memory is available for Processor 1.

- Percentage of available memory for Processor 1: This metric quantifies the availability of memory for Processor 1 as a percentage of the total 100%. The data are recorded in a percentage format, for example, “37%”, which indicates that 37% of the processor’s memory is available for computational tasks. During data preprocessing, the percentage symbol was removed because the predictive model was unable to interpret this character.

- Available memory for Processor 2: This metric presents data on the available memory for the second processor, recognizing that not all switches have dual processors. The routing processor (RP) performs several tasks:

- Constructs and updates Layer 3 routing tables and the address resolution protocol (ARP).

- Generates the forwarding information base (FIB) for Cisco express forwarding (CEF) and adjacency tables and loads them onto the policy feature card (PFC).

- Manages network control traffic directed toward the RP, such as Telnet, HTTP, and SNMP traffic. The data are recorded in a numerical format, with the unit of measurement being megabytes (MB). For instance, a value of “1.73” signifies that 1.73 MB of memory is available for processing tasks.

- Percentage of available memory for Processor 2: This variable indicates the percentage of available memory for Processor 2 as a fraction of 100%. The data are recorded in a percentage format. For example, a value of “43%” signifies that 43% of the processor’s memory is available for task execution. During the data cleansing phase, the percentage symbol was removed due to incompatibilities with the predictive model.

- Overall available memory: This parameter measures the overall memory availability in percentage terms. For instance, a reading of “100%” implies that all of the memory or RAM capacity is available for utilization. The percentage symbol was removed during data preprocessing due to model limitations. Subsequently, the numerical value was simplified from 100 to 1 to facilitate data handling.

- Response time index (RTI): This is a metric employed to measure the response time of a network device or application. It serves as a vital indicator of the quality of service (QoS) within a network, particularly in scenarios where latency is a critical factor, such as voice or video conferencing. Response time is the duration between the sending of a request and the receipt of a corresponding response. This time encompasses network transmission delays, device processing delays, and other contributing factors. The RTI can be utilized for monitoring and analyzing network performance; a high RTI value may signal network issues that warrant investigation. The measurement format is numerical, recorded in milliseconds (ms). This metric captures the time required for a packet to travel from the sender to the receiver and back, and may include various types of delays such as transmission and processing delays. For example, a value of “70” would imply a 70-ms response time.

- Optimal RTI: An RTI value of 20 ms is generally considered excellent for the majority of applications.

- Average RTI: An RTI of 100 ms may be acceptable for certain types of traffic but could result in noticeable real-time delays for voice and video applications.

- High RTI: If the RTI reaches 300 ms or more, this is likely indicative of significant network latency issues that could adversely impact the performance of network applications.

- CPU load index: This metric provides information reflecting the current load on the central processing unit (CPU). It is a quantitative assessment of the extent to which CPU time is being utilized and serves as an important indicator of overall device performance and health. In Cisco switches, the CPU load index is generally measured as a percentage ranging from 1% to 100%, in the following bands:

- 1–30%: This range is usually considered a normal load, indicating that the device is operating healthily.

- 31–70%: This is a moderate load that may necessitate additional monitoring. It could be related to a temporary increase in traffic or a task consuming more resources.

- 71–100%: This signifies a high or critical load, potentially indicating a problem. This could be due to misconfiguration, an attack, hardware defects, or other issues that could have a negative impact on the overall network operation.

- Traffic index: This metric is used for the quantitative assessment of the volume of traffic flowing through a switch over a specified period of time.

- Bandwidth analysis: This entails determining the data transfer rate through a specific port or interface of the switch. For instance, the traffic index might reveal that a certain port is being utilized at 70% of its maximum bandwidth capacity. In our context, data were recorded from TRUNK ports, which are channel ports connecting the switch to other network switches. The recording format is numerical. For example, a value of “9.32” means that the channel port operated at an average speed of 9.32 MB/s.

- Alarms: These are records from an alerting system designed to notify network administrators about various events, problems, or anomalous states that may require attention or intervention. The recording format is numerical. For example, “1” means that there was one alarm from the system, and “3” means there were three alarms from the system.

- Temperature: Most Cisco switches are equipped with built-in temperature sensors that continuously measure the temperature at various points within the device. In our case, the measurement format was numerical and in degrees Celsius; e.g., a reading of “42.0” signified that, at the time of measurement, the equipment’s temperature was around 42 °C. During data cleaning, the “C” symbol was removed because the forecasting model could not interpret that symbol.
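The cleaning steps mentioned in the column descriptions (dropping the date, stripping the “%” and “C” symbols, and rescaling overall memory from 100 to 1) can be sketched with pandas as follows. The column names and sample values are hypothetical stand-ins for the exported data.

```python
import pandas as pd

# Hypothetical raw export rows; the real column names may differ.
raw = pd.DataFrame({
    "Date": ["10.12.2020", "11.12.2020"],
    "Mem1 %": ["37%", "41%"],
    "Overall memory": ["100%", "80%"],
    "Temperature": ["42.0 C", "45.5 C"],
})

# The date column carried no predictive information, so it is dropped.
clean = raw.drop(columns=["Date"])

# Strip the percent sign so the values can be parsed as numbers.
clean["Mem1 %"] = clean["Mem1 %"].str.rstrip("%").astype(float)

# Overall memory is additionally rescaled from 0-100 to 0-1.
clean["Overall memory"] = (
    clean["Overall memory"].str.rstrip("%").astype(float) / 100.0
)

# Remove the unit symbol from the temperature readings.
clean["Temperature"] = (
    clean["Temperature"].str.replace("C", "", regex=False).str.strip().astype(float)
)

print(clean.dtypes)
print(clean)
```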

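The information gain of a split is

$$IG(T, A) = H(T) - \sum_{v \in \mathrm{Values}(A)} \frac{|T_v|}{|T|} \, H(T_v),$$

where

- $IG(T, A)$ is the information gain for attribute $A$ on dataset $T$.

- $H(T)$ is the entropy of dataset $T$.

- $|T_v|$ is the number of samples in the subset $T_v$, created by splitting $T$ on attribute $A$.

- $H(T_v)$ is the entropy of the subset $T_v$.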
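To make the information gain definition concrete, the following sketch computes the entropy of a label set and the gain obtained by splitting it on a categorical attribute. The attribute names and values are hypothetical, and Shannon entropy with base-2 logarithms is assumed.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(labels, attribute_values):
    """Entropy of the whole set minus the size-weighted entropy of its subsets."""
    n = len(labels)
    subsets = {}
    for value, label in zip(attribute_values, labels):
        subsets.setdefault(value, []).append(label)
    remainder = sum(len(subset) / n * entropy(subset) for subset in subsets.values())
    return entropy(labels) - remainder


# Hypothetical example: 1 = failure, 0 = normal operation.
labels = [1, 1, 0, 0]
alarm_state = ["high", "high", "low", "low"]  # separates the classes perfectly
port_parity = ["odd", "even", "odd", "even"]  # carries no information

print(information_gain(labels, alarm_state))
print(information_gain(labels, port_parity))
```

An attribute that separates the classes perfectly recovers the full entropy of the parent set, while an uninformative attribute yields a gain of zero.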

- Maximum depth: The tree depth was limited to 3 and 4;

- Minimum samples per node;

- Minimum information gain.

3.3. Pre-Modeling

- Logistic regression.

- Random forest.

- Gradient boosted trees.

- SVM (support vector machine).

- Naïve Bayes.

- kNN (k-nearest neighbor).

- Linear regression.

- Gradient boosted regression.

- Neural network.

3.4. Proposed Model

- Maximum depth (max depth): Setting a maximum depth for the decision tree restricts how many levels it can grow. If a tree reaches this depth during construction, it stops splitting and becomes a leaf node. This helps prevent overfitting by limiting the tree’s complexity.

- Minimum samples per leaf: This criterion specifies the minimum number of samples required in a leaf node. If a node has fewer samples than this threshold after a split, the split is not allowed. It prevents the creation of very small leaf nodes, which can capture noise in the data.

- Minimum samples per split: Similar to the minimum samples per leaf, this criterion specifies a minimum number of samples required to perform a split at a node. If there are fewer samples than this threshold, the split is not considered, preventing further growth of the tree on small subsets of data.

- Maximum features: This parameter limits the number of features considered for each split. It can be set to a fixed number or a percentage of total features. Limiting the features considered at each node helps control tree complexity and prevents overfitting.

- Impurity threshold (minimum impurity decrease): Nodes are split only if the impurity decrease (e.g., Gini impurity or entropy) resulting from the split exceeds a certain threshold. If the decrease in impurity is below this threshold, the split is not performed, which helps avoid splitting into noisy or irrelevant features.

- Pruning techniques: After growing a full tree, pruning techniques can be applied to remove branches that do not contribute significantly to improving predictive accuracy. Pruning involves evaluating the impact of removing subtrees and keeping only those that improve the tree’s generalization performance on validation data.

- Cross-validation: Cross-validation techniques, such as k-fold cross-validation, can be used to estimate the optimal tree depth and other hyperparameters by assessing a tree’s performance on different validation subsets of the data. This helps find the right trade-off between model complexity and predictive accuracy.
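The stopping criteria and pruning options above map onto parameters of scikit-learn's `DecisionTreeClassifier`, and k-fold cross-validation can be used to choose among them. The sketch below runs a small grid search on synthetic data; the grid values are illustrative assumptions, apart from the depth limits of 3 and 4 mentioned earlier.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the monitoring dataset.
X, y = make_classification(
    n_samples=300, n_features=8, n_informative=4, random_state=0
)

param_grid = {
    "max_depth": [3, 4],                   # maximum depth
    "min_samples_leaf": [1, 5],            # minimum samples per leaf
    "min_samples_split": [2, 10],          # minimum samples per split
    "max_features": [None, "sqrt"],        # features considered at each split
    "min_impurity_decrease": [0.0, 0.01],  # impurity threshold
    "ccp_alpha": [0.0, 0.01],              # cost-complexity pruning strength
}

search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    cv=5,  # 5-fold cross-validation
    scoring="accuracy",
)
search.fit(X, y)

print(search.best_params_)
print("mean cross-validated accuracy:", round(search.best_score_, 3))
```

Here `ccp_alpha` enables minimal cost-complexity pruning, which is the post-pruning method scikit-learn provides; other pruning schemes would need to be implemented separately.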

- The script starts by importing the Python libraries used for data manipulation, visualization, and machine learning, such as numpy, pandas, sklearn, and matplotlib.

- Data loading: The authors take some of the collected data from the monitoring systems and prepare them for further manipulation. The next step is “data preprocessing”:

- (a) Histograms: The script contains a block enclosed in triple quotes (and therefore not executed) that plots overlapping histograms for each specified feature. When enabled, it allows the distributions to be compared for cases where “Breaking” is “Yes” or “No”.

- (b) Conversion to binary: The “Breaking” column is the target variable, and it is converted from “Yes”/“No” to a binary format (1/0) using a dictionary mapping.

- (c) Feature selection: Some columns are dropped from the DataFrame “ds” (as specified in cat_feet), and the modified DataFrame only contains numerical features.

- Train–test split: The dataset is split into a training set (X_train, Y_train) and a test set (X_test, Y_test). The test set size is set to 50% of the total data. Setting random_state=0 ensures that the splits are reproducible.

- Modeling. A pipeline is created with two steps:

- (a) “StandardScaler()”: Standardization of a dataset is a common requirement for many machine learning estimators. It standardizes features by removing the mean and scaling to unit variance.

- (b) “SVC(gamma='auto', probability=True)”: SVC stands for support vector classification, which is a type of SVM. Setting “gamma='auto'” sets the gamma parameter to 1/n_features. Setting “probability=True” enables the classifier to provide probability estimates. Finally, the pipeline is fitted on the training data.

- Prediction: Predictions (“clf_pred”) are made on the test data. Probabilities (“clf_pred_proba”) are also calculated for each prediction. This is possible because “probability=True” is set in the SVC classifier.

- Model evaluation: Several metrics are printed out for evaluating the model:

- (a) Accuracy: This is the percentage of correct predictions. It is calculated using “accuracy_score(clf_pred, Y_test)”, comparing the predicted values against the actual values from the test set.

- (b) Cross-validation score: This is another way to measure the effectiveness of the trained model, which works by dividing the dataset into “k” groups or folds, repeatedly training the model on k-1 folds while evaluating it on the held-out fold.

- (c) Classification report: This report displays the precision, recall, F1-score, and support for the model.

- Alerts: Finally, the script prints “Alarm!” for every instance in the test set that is predicted as class 1 (“Breaking” = Yes). This could be interpreted as an alert system where an alarm is raised if the model predicts a system failure.
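The script described above can be approximated by the following sketch. It is not the authors' code: the data are synthetic, the feature columns and the rule that generates the “Breaking” labels are invented for illustration, and only the pipeline settings (`StandardScaler`, `SVC(gamma='auto', probability=True)`, a 50% test split, `random_state=0`) and the variable names quoted in the text are taken from the description.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score, classification_report
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the monitoring data (hypothetical columns and rule).
rng = np.random.default_rng(0)
n = 200
ds = pd.DataFrame({
    "cpu_load": rng.uniform(1, 100, n),
    "temperature": rng.uniform(30, 80, n),
    "alarms": rng.integers(0, 5, n),
})
ds["Breaking"] = np.where(
    (ds["cpu_load"] > 70) & (ds["temperature"] > 55), "Yes", "No"
)

# Convert the target from "Yes"/"No" to 1/0 with a dictionary mapping.
ds["Breaking"] = ds["Breaking"].map({"Yes": 1, "No": 0})
X = ds.drop(columns=["Breaking"])
Y = ds["Breaking"]

# Hold out 50% of the rows; random_state=0 makes the split reproducible.
X_train, X_test, Y_train, Y_test = train_test_split(
    X, Y, test_size=0.5, random_state=0
)

# Two-step pipeline: standardize the features, then fit the SVM classifier.
clf = make_pipeline(StandardScaler(), SVC(gamma="auto", probability=True))
clf.fit(X_train, Y_train)

clf_pred = clf.predict(X_test)
clf_pred_proba = clf.predict_proba(X_test)

# scikit-learn's documented argument order is (y_true, y_pred);
# accuracy itself is the same in either order.
print("accuracy:", accuracy_score(Y_test, clf_pred))
print("5-fold cross-validation accuracy:", cross_val_score(clf, X, Y, cv=5).mean())
print(classification_report(Y_test, clf_pred))

# Raise an alert for every test row predicted as class 1 ("Breaking" = Yes).
for row_index, label in zip(X_test.index, clf_pred):
    if label == 1:
        print(f"Alarm! (row {row_index})")
```

Placing the scaler inside the pipeline means it is refitted on each training fold during cross-validation, which avoids leaking statistics from the held-out fold.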

3.5. Results and Discussion

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rzayeva, L.; Myrzatay, A.; Abitova, G.; Sarinova, A.; Kulniyazova, K.; Saoud, B.; Shayea, I. Enhancing LAN Failure Predictions with Decision Trees and SVMs: Methodology and Implementation. Electronics 2023, 12, 3950. https://doi.org/10.3390/electronics12183950
