A Comparison of Summarization Methods for Duplicate Software Bug Reports

Abstract

1. Introduction

- We apply and evaluate several text summarization methods for duplicate bug reports;

- We compare and analyze the effectiveness of existing text summarization methods on duplicate bug reports;

- We analyze the impact of using duplicate bug reports on bug report summarization.

2. Related Work

2.1. Extractive Summarization for Bug Reports

2.1.1. Extractive Supervised Summarization for Bug Reports

2.1.2. Extractive Unsupervised Summarization for Bug Reports

2.2. Abstractive Summarization for Bug Reports

2.3. Summarization with Duplicate Bug Reports

3. Six Summarization Methods for Duplicate Bug Reports

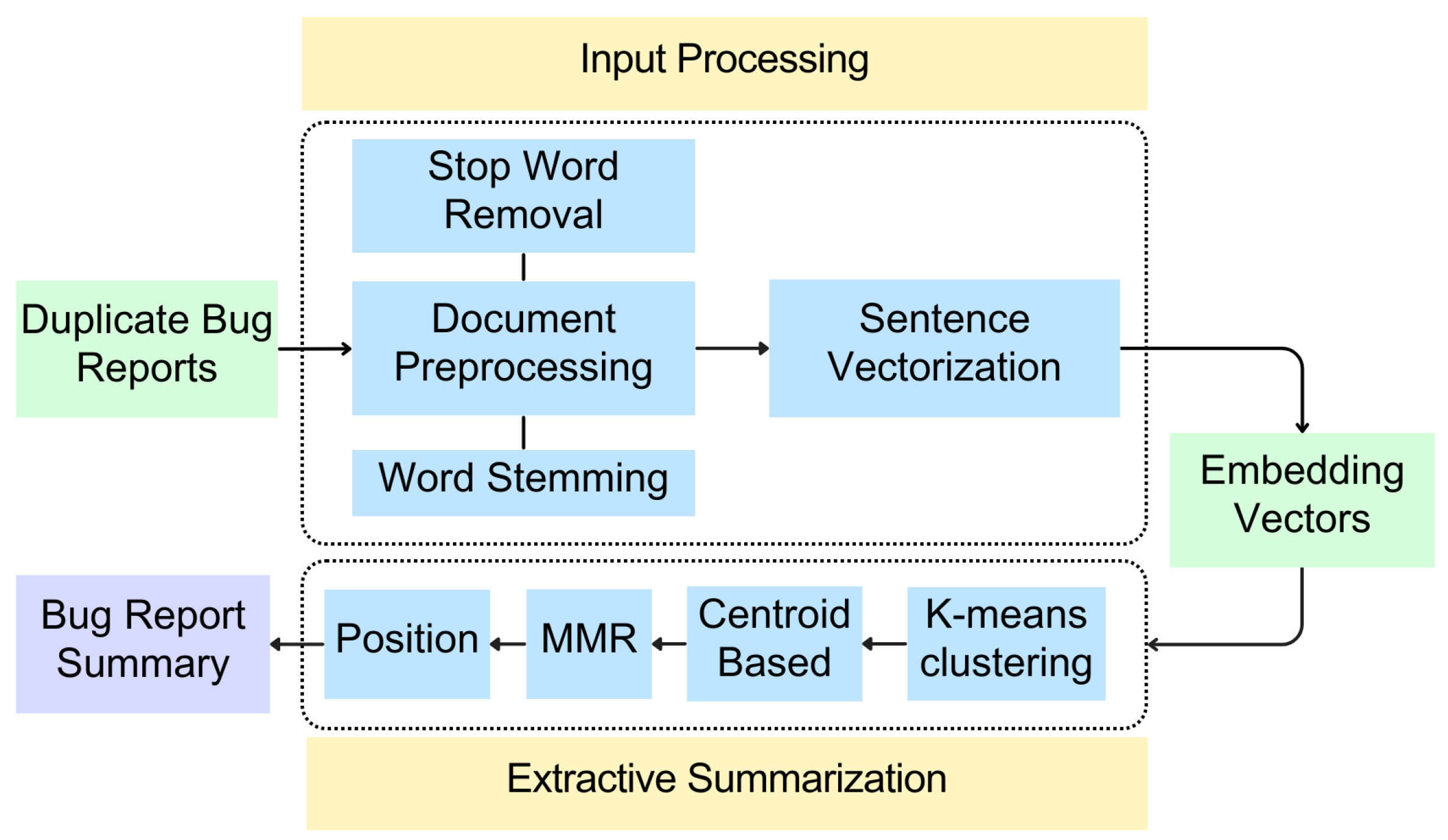

3.1. K-Means, Centroid-Based, MMR, and Sentence Position (KCMS)

3.1.1. Input Processing

3.1.2. Extractive Summarization
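
KCMS combines K-means clustering, centroid-based scoring, Maximal Marginal Relevance (MMR), and sentence position. The MMR step is the part that matters most for duplicate reports, because several reports tend to restate the same sentence. The following is a minimal sketch of greedy MMR selection only, not the KCMS implementation. It assumes bag-of-words cosine similarity, uses the centroid of all pooled sentences as the relevance target, and sets the trade-off `lam=0.5` as an illustrative default. The function names `bag_of_words`, `cosine`, and `mmr_select` are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Lower-cased word counts; punctuation is ignored."""
    return Counter(re.findall(r"\w+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mmr_select(sentences: list[str], k: int, lam: float = 0.5) -> list[str]:
    """Greedy Maximal Marginal Relevance selection (illustrative sketch).

    Relevance is similarity to the centroid of all sentences pooled from
    every duplicate report; redundancy is the highest similarity to any
    sentence already chosen. `lam` (an assumed default) trades them off.
    """
    vectors = [bag_of_words(s) for s in sentences]
    centroid = Counter()
    for v in vectors:
        centroid.update(v)

    selected: list[int] = []
    remaining = list(range(len(sentences)))
    while remaining and len(selected) < k:
        def mmr_score(i: int) -> float:
            relevance = cosine(vectors[i], centroid)
            redundancy = max(
                (cosine(vectors[i], vectors[j]) for j in selected), default=0.0
            )
            return lam * relevance - (1 - lam) * redundancy

        best = max(remaining, key=mmr_score)
        selected.append(best)
        remaining.remove(best)

    # Emit the chosen sentences in their original order for readability.
    return [sentences[i] for i in sorted(selected)]


pooled = ["view locations are reset", "view locations are reset", "restart the window"]
print(mmr_select(pooled, k=2))
```

With `lam=1.0` the same call reduces to pure relevance ranking and returns the duplicated sentence twice. That is exactly the redundancy MMR is meant to suppress when several reports describe the same bug.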

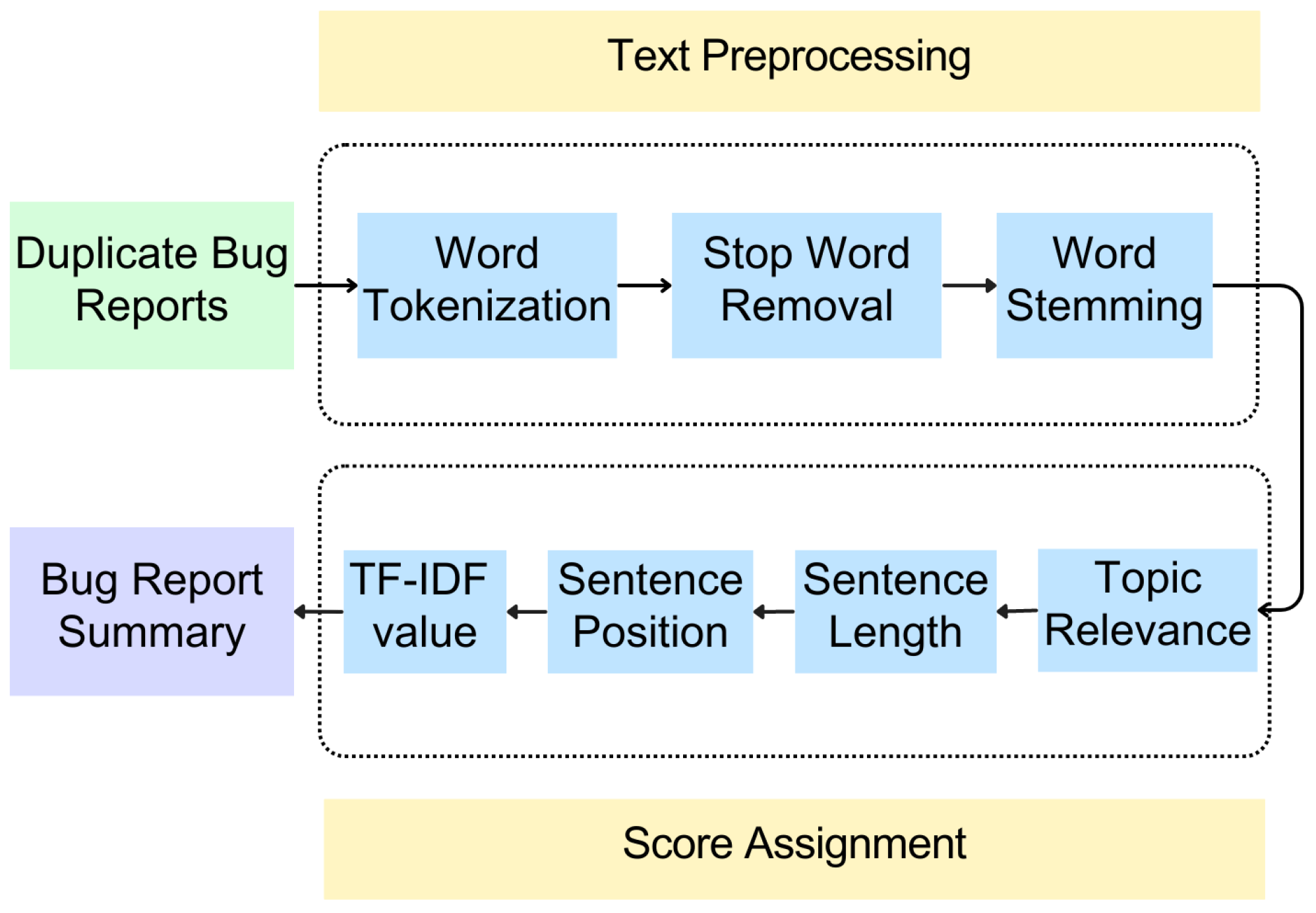

3.2. Multi-Doc News Summarizer (MDNS)

3.2.1. Text Preprocessing

3.2.2. Score Assignment
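
MDNS's actual scoring rules are not given in this section. As a hedged, generic illustration of per-sentence score assignment in a multi-document extractive summarizer, the sketch below pools sentences from all duplicate reports and ranks each sentence by the average normalized frequency of its non-stopword terms. The stopword list, the sentence splitter, and the formula are assumptions made for illustration. They are not MDNS's implementation. `score_sentences` is a hypothetical name.

```python
import re
from collections import Counter

# Assumed, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "is", "are", "to", "of", "and", "in", "i", "that", "this"}


def tokenize(text: str) -> list[str]:
    """Lower-case alphanumeric tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS]


def score_sentences(documents: list[str]) -> list[tuple[float, str]]:
    """Rank sentences from all documents by mean normalized term frequency."""
    sentences = [
        s
        for doc in documents
        for s in re.split(r"(?<=[.!?])\s+", doc.strip())
        if s
    ]
    # Term frequencies are pooled across every document (duplicate report).
    freq = Counter(w for s in sentences for w in tokenize(s))
    top = max(freq.values(), default=1)

    scored = []
    for s in sentences:
        words = tokenize(s)
        score = sum(freq[w] / top for w in words) / len(words) if words else 0.0
        scored.append((score, s))
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


reports = ["View locations reset. Restart the window.", "View locations are not updated."]
for score, sentence in score_sentences(reports):
    print(f"{score:.3f}  {sentence}")
```

Terms that recur across duplicates ("view", "locations") raise the score of every sentence containing them, which is the intuition behind pooling duplicates before scoring.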

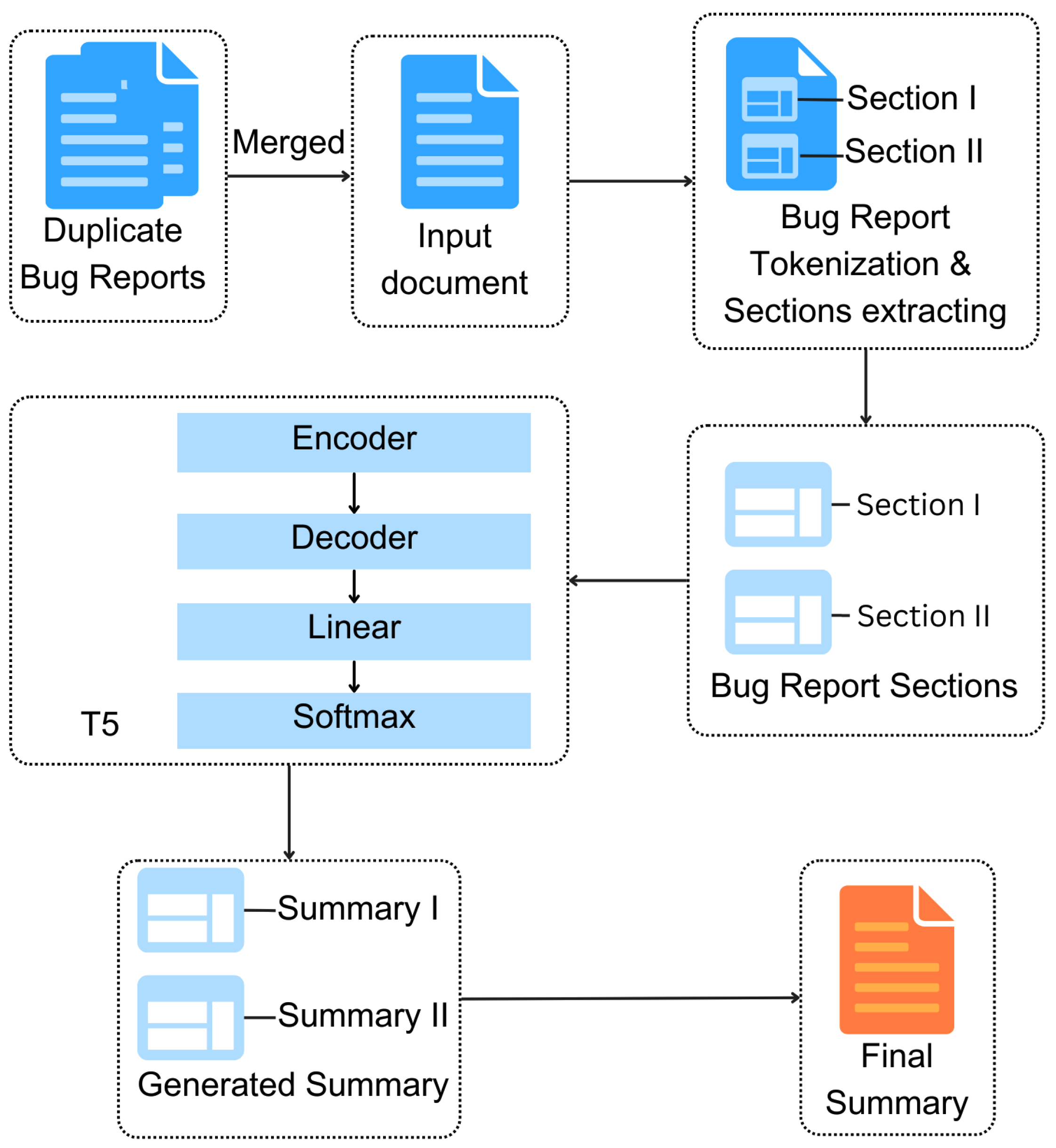

3.3. T5

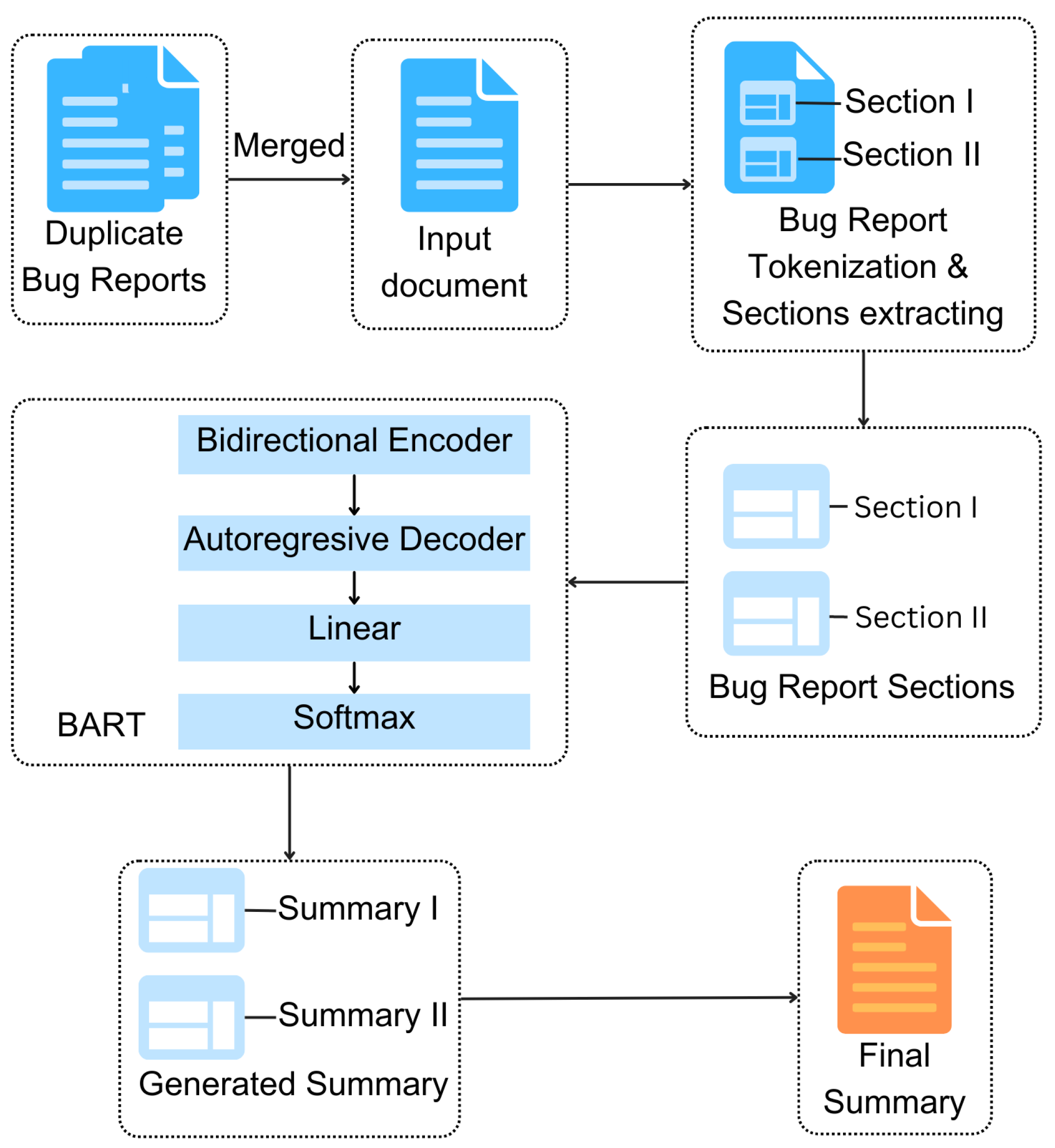

3.4. BART

3.5. PEGASUS

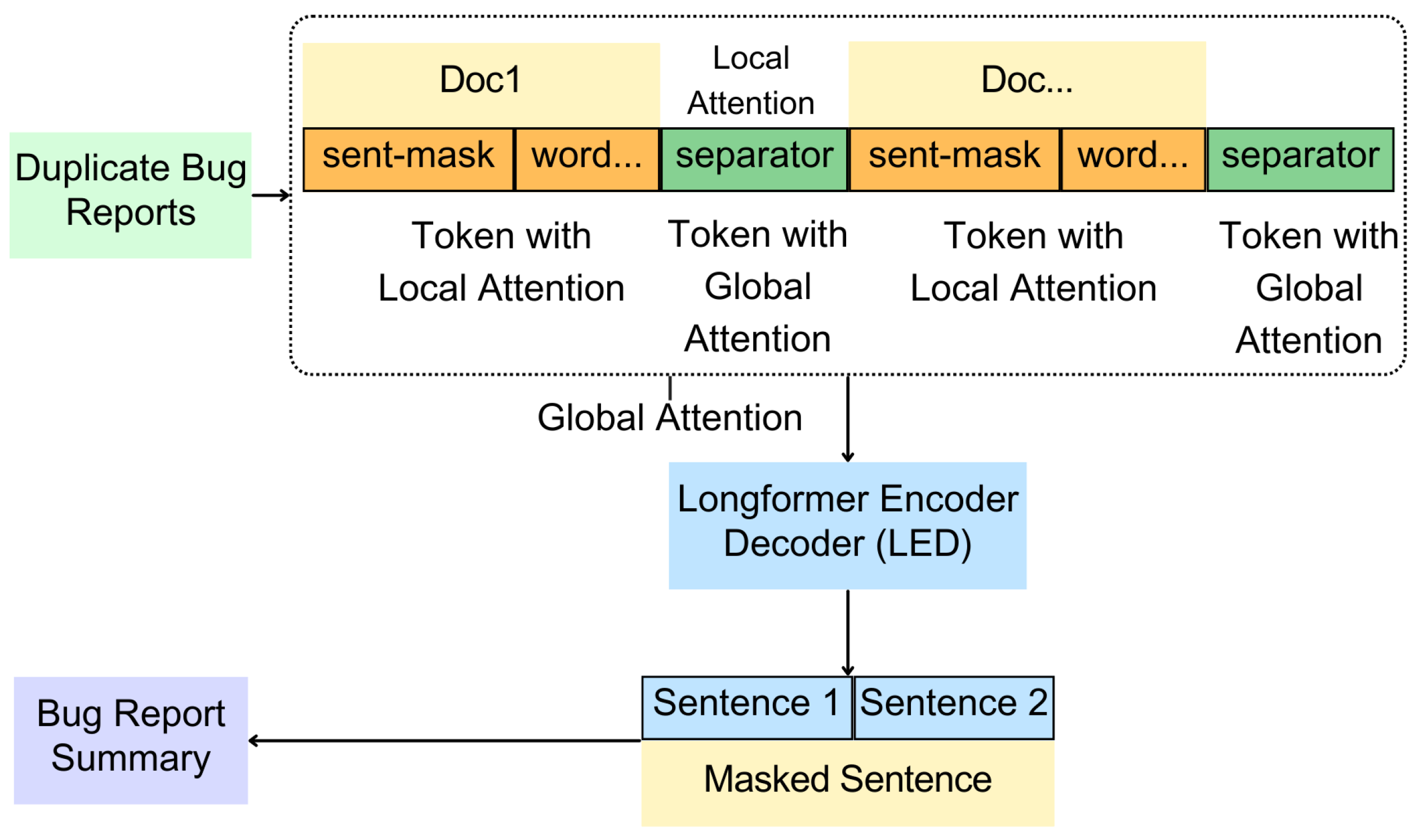

3.6. PRIMERA

4. Experimental Setup

4.1. Research Questions

- RQ1—Which approach yields the highest performance for duplicate bug report summarization?

- RQ2—How well does each approach comprehensively summarize the key information in bug reports?

4.2. Datasets

4.3. Data Preprocessing

4.4. Annotation of Bug Reports

4.5. Evaluation Metrics
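
ROUGE (Recall-Oriented Understudy for Gisting Evaluation) compares a generated summary with a reference summary by counting shared n-grams. The specific ROUGE variants and settings are not restated here, so the following is only a minimal ROUGE-N sketch. It assumes lower-cased whitespace tokenization with no stemming or stopword removal. Established ROUGE implementations apply additional normalization, so their scores can differ from this sketch.

```python
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    """Multiset of contiguous n-grams."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate: str, reference: str, n: int = 1) -> dict[str, float]:
    """ROUGE-N precision, recall, and F1 using clipped n-gram overlap."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # min count of each shared n-gram
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge_n("view locations are reset", "view locations have been reset"))
# → precision 0.75, recall 0.6, f1 ≈ 0.667
```

Three of the four candidate unigrams appear in the five-word reference, giving precision 3/4 and recall 3/5. Recall is the component the metric's name emphasizes: it rewards a summary for covering the reference content.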

5. Results

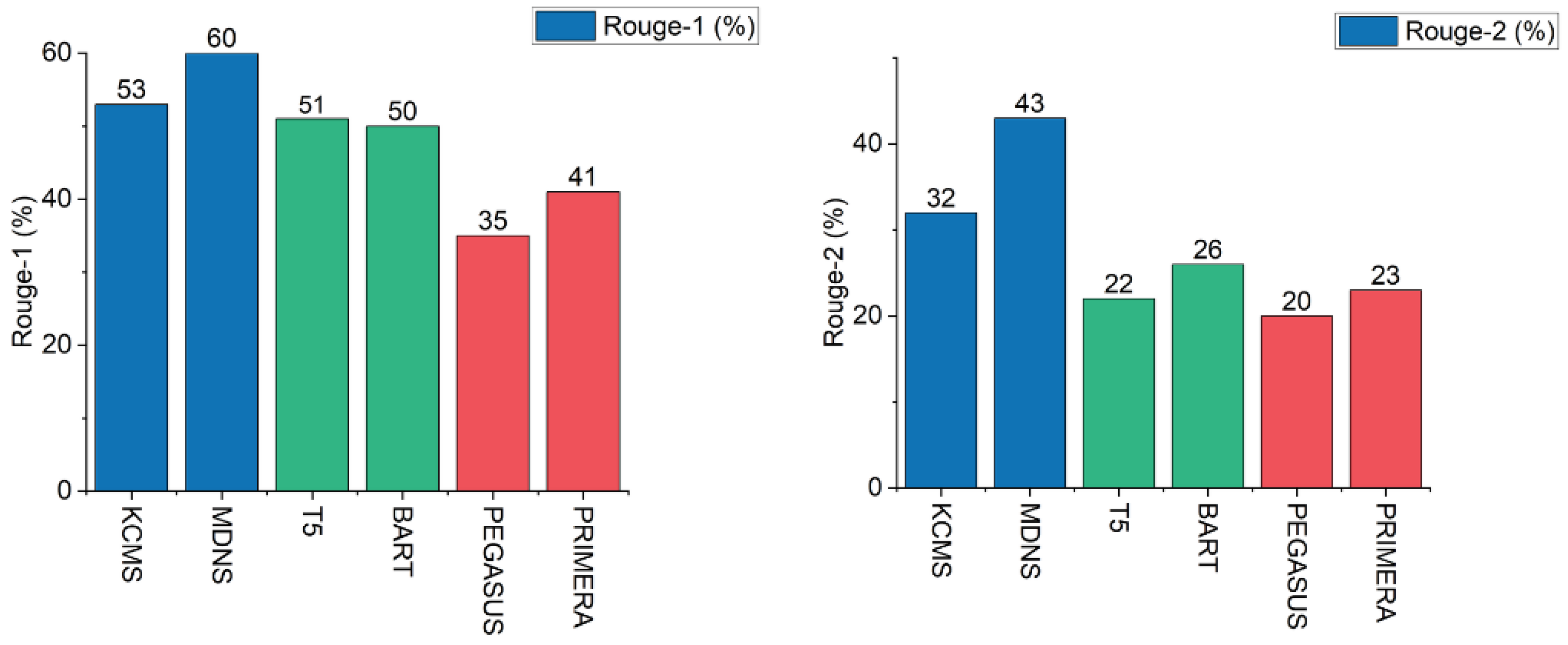

5.1. Research Question 1

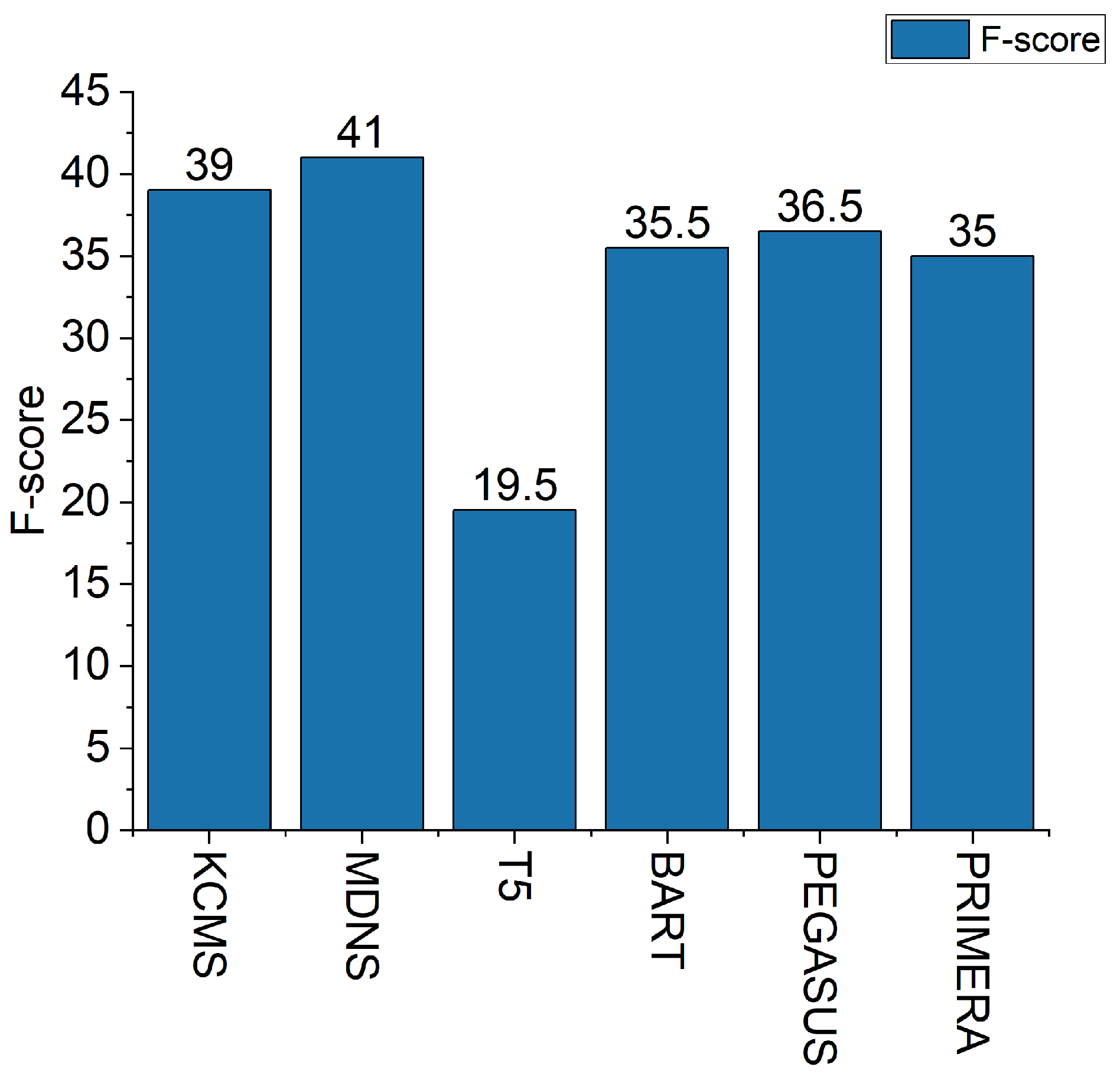

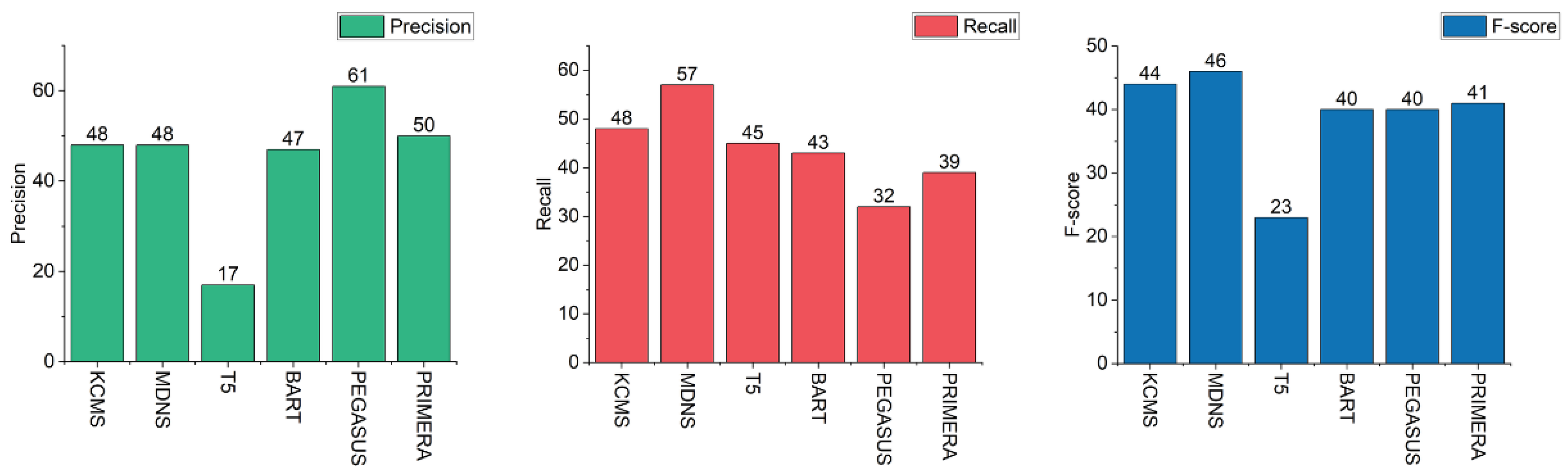

5.2. Research Question 2

6. Discussion

6.1. Implication

6.2. Threats to Validity

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| ADS | Authorship Dataset |

| BART | Bidirectional and Auto-Regressive Transformers |

| BoW | Bag of Words |

| BRC | Bug Report Corpus |

| KCMS | K-means, Centroid-based, MMR, and Sentence Position |

| MDNS | Multi-Doc News Summarizer |

| MMR | Maximal Marginal Relevance |

| ROUGE | Recall-Oriented Understudy for Gisting Evaluation |

| SDS | Summary Dataset |

| T5 | Text-To-Text Transfer Transformer |

Appendix A. The Entire Summary Generated by Each Model

| Model | Summary |

|---|---|

| KCMS | Enable profiles in settings<br>Restart<br>In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile<br>See that your new profile gets used in just that window<br>In that same window run “Settings Profiles: Switch” and choose your default profile See that your default profile is applied, but view locations are reset<br>/duplicate<br>Thanks for the steps.<br>root cause is the order of storage events.<br>Reloading does not fix my issue.<br>Found an easy repro:<br>Have 2 windows open (not sure if this step is needed)<br>Disable profiles in settings (not sure if this step is needed)<br>Restart.<br>This happens during storage change with views cache storage change comes first followed by view containers cache storage change. |

| MDNS | Views location is not getting updated when switching profiles<br>Enable Settings Profiles<br>Create an empty profile P1 and move Timeline and Outline views to secondary sidebar as separate view containers<br>Create an empty profile P2<br>Switch from P2 to P1<br>Timeline and Outline views are not moved to secondary sidebar and remain in explorer<br>Refreshing the window fixes it. View locations reset<br>I think this happened because I was testing profiles. Similar to #153655<br>interesting, looks like this is specific to generated view containers, as moving an entire container from activity bar to secondary side bar is reflected correctly<br>yes. My view locations have been reset. This happens during storage change with views cache storage change comes first followed by view containers cache storage change. Found an easy repro:<br>Have 2 windows open (not sure if this step is needed)<br>Disable profiles in settings (not sure if this step is needed)<br>Restart. Enable profiles in settings<br>Restart<br>In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile<br>See that your new profile gets used in just that window<br>In that same window run “Settings Profiles: Switch” and choose your default profile<br>See that your default profile is applied, but view locations are reset<br>/duplicate<br>Thanks for the steps. I think you might be hitting this—#154090<br>@sandy081 I took a look at the steps in the duplicate issue, and it says that reloading fixes the issue. I am planning to look at #154090 next milestone and during then I will cover this too. |

| Model | Summary |

|---|---|

| T5 | I think this happened because I was testing profiles. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart./nI think this happened because I was testing profiles. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart. Restart./nI think this happened because I was testing profiles. Reloading does not fix my issue. I think this happened because I was testing profiles. Reloading does not fix my issue. Reloading does not fix my issue. Reloading does not fix my issue. Reloading does not fix my issue./nI think this happened because I was testing profiles. Reloading does not fix my issue. I think this happened because I was testing profiles. Reloading does not fix my issue. Reloading does not fix my issue. Reloading does not fix my issue. Reloading does not fix my issue./nI am planning to look at #154090 next milestone and during then I will cover this too. root cause is the order of storage events. This happens during storage change with views cache storage change comes first followed by view containers cache storage change …. My view locations have been reset. I think this happened because I was testing profiles. My view locations have been reset. in. in.. Reload. Re.. This happens This/n |

| BART | View locations reset I think this happened because I was testing profiles. I have Gitlens installed, and/nEnable profiles in settings. Restart. Create a new empty profile./nI think you might be hitting this—#154090. I took a look at the steps in the/nViews location is not getting updated when switching profiles. Timeline and Outline views are not moved to secondary sidebar/nyes. root cause is the order of storage events. This happens during storage change with views cache storage change comes/n |

| PEGASUS | Enable profiles in settings Restart In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile See that your new profile gets used in just that window In that same window run “Settings Profiles: Switch” and choose your default profile See that your default profile is applied, but view locations are reset/duplicate Thanks for the steps. Views location is not getting updated when profiles switching Enable Settings Profiles Create an empty profile P1 and move Timeline and Outline views to secondary sidebar as separate view containers Create an empty profile P2 Switch from P2 to P1 Timeline and Outline views are not moved to secondary sidebar and remain in explorer Refreshing the window fixes it./n |

| PRIMERA | View locations reset I think this happened because I was testing profiles.My view locations have been reset. I have Gitlens installed, and I had a few of the gitlens views in their own view container. Now, they are back in their default view container (scm). fixmeMay I know if you are able to reproduce? If so can you please provide steps? Zooming in and out of the view containers is not possible when switching profiles./n |


| Group | Model Name | Summarization Type | Input Type |

|---|---|---|---|

| Alteration for Bug Reports | KCMS | Extractive | Multi-document |

| Alteration for Bug Reports | MDNS | Extractive | Multi-document |

| Modified Version | T5 | Abstractive | Single-document |

| Modified Version | BART | Abstractive | Single-document |

| State-of-the-art Model | PEGASUS | Abstractive | Single-document |

| State-of-the-art Model | PRIMERA | Abstractive | Multi-document |

| ID | Title | Number of Duplicates | Issue Numbers |

|---|---|---|---|

| 1 | Git Bash is not visible when try to select default terminal profile | 2 | #126023, #158627 |

| 2 | VS Code was lost on shutdown with pending update | 2 | #52855, #161019 |

| 3 | Loses text when maximizing the integrated terminal | 2 | #134448, #159703 |

| 4 | The terminal cannot be opened when multiple users use remote-ssh | 2 | #157611, #159519 |

| 5 | GitHub Commit error after June VS Code update | 4 | #154449, #154463, #154504, #154837 |

| 6 | Ansi Foreground colors not applied correctly when background color set in terminal | 2 | #155856, #146168 |

| 7 | View locations reset | 2 | #156315, #154090 |

| 8 | Webview Panels Dispose Bug | 2 | #158839, #98603 |

| 9 | Open file and then search opens the wrong find widget | 2 | #156853, #155924 |

| 10 | Sticky Scroll: Go to definition jumps to definitions but stay hidden behind sticky scroll layout | 2 | #157225, #157175 |

| 11 | bash: printf: ‘C’: invalid format character error in bash terminal | 2 | #157278, #157226 |

| 12 | New rebase conflicts resolution completely broken. Diff worse than before. | 3 | #157735, #156608, #157827 |

| 13 | Underscores (_) in integrated terminal are surrounded by black box | 2 | #158522, #158497 |

| 14 | Searching keyboard shortcuts with ‘Record Keys’ does not include chords using that keybinding | 2 | #158799, #88122 |

| 15 | Trust dialog prompt appears even when disabled in settings | 2 | #159823, #156183 |

| 16 | Monolithic structure, multiple project settings | 2 | #32693, #155017 |

| Model | Bug Description | Reproduction Step | Possible Solution |

|---|---|---|---|

| KCMS | O | X | |

| MDNS | O | O | O |

| T5 | X | X | |

| BART | O | X | X |

| PEGASUS | X | X | |

| PRIMERA | X | X | |

Views location is not getting updated when switching profiles. I think this happened because I was testing profiles. My view locations have been reset. Found an easy repro: In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile. See that your new profile gets used in just that window. In that same window run “Settings Profiles: Switch” and choose your default profile. See that your default profile is applied, but view locations are reset …

| Model | Summary (excerpt) |

|---|---|

| KCMS | Enable profiles in settings<br>Restart<br>In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile<br>See that your new profile gets used in just that window<br>In that same window run “Settings Profiles: Switch” and choose your default profile See that your default profile is applied, but view locations are reset … |

| MDNS | Views location is not getting updated when switching profiles<br>Enable Settings Profiles<br>Create an empty profile P1 and move Timeline and Outline views to the secondary sidebar as separate view containers<br>Create an empty profile P2<br>Switch from P2 to P1 … |

| T5 | I think this happened because I was testing profiles. Restart. Restart. Restart. Restart. … |

| BART | View locations reset I think this happened because I was testing profiles. I have Gitlens installed, and/nEnable profiles in settings. Restart. Create a new empty profile./nI think you might be hitting this—#154090. I took a look at the steps in the/nViews location is not getting updated when switching profiles … |

| PEGASUS | Enable profiles in settings Restart In one of the windows run “Settings Profiles: Create an Empty Settings Profile” and create a new empty profile See that your new profile gets used in just that window In that same window run “Settings Profiles: Switch” and choose your default profile See that your default profile is applied, but view locations are reset/duplicate Thanks for the steps … profiles … |

| PRIMERA | View locations reset I think this happened because I was testing profiles.My view locations have been reset. I have Gitlens installed, and I had a few of the gitlens views in their own view container. Now, they are back in their default view container (scm). fixmeMay I know if you are able to reproduce? … |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mukhtar, S.; Primadani, C.C.; Lee, S.; Jung, P. A Comparison of Summarization Methods for Duplicate Software Bug Reports. Electronics 2023, 12, 3456. https://doi.org/10.3390/electronics12163456


Chicago/Turabian Style: Mukhtar, Samal, Claudia Cahya Primadani, Seonah Lee, and Pilsu Jung. 2023. "A Comparison of Summarization Methods for Duplicate Software Bug Reports" Electronics 12, no. 16: 3456. https://doi.org/10.3390/electronics12163456
