Exploring Zero-Shot Semantic Segmentation with No Supervision Leakage

Abstract

1. Introduction

2. Related Work

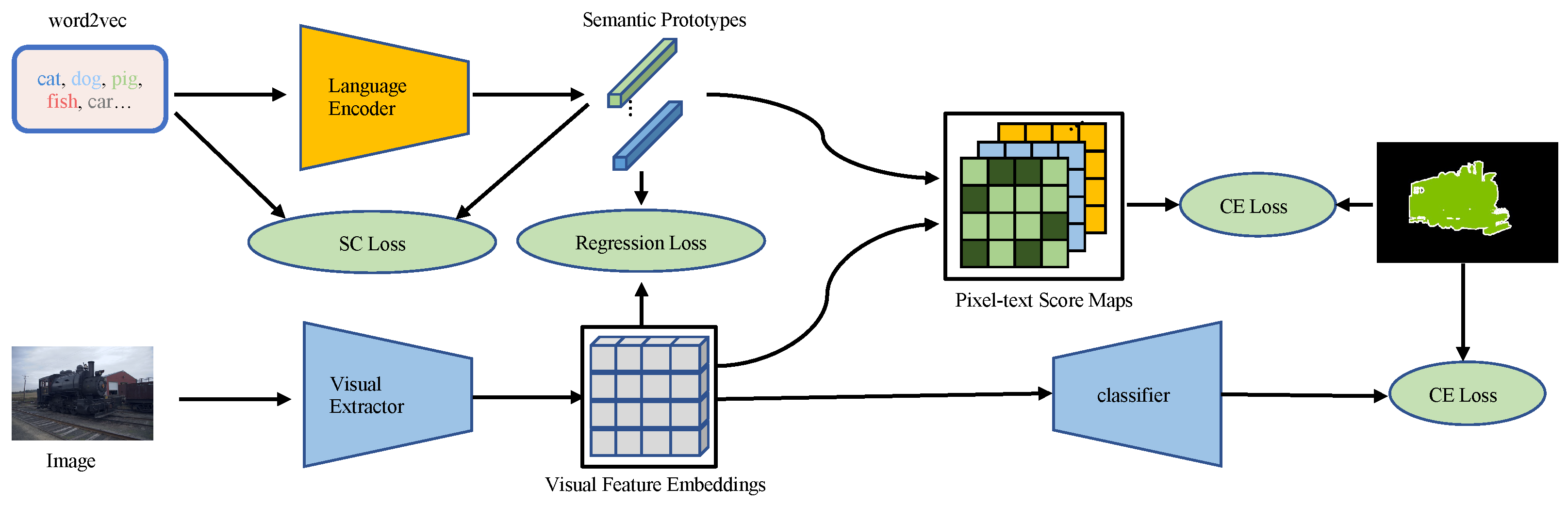

3. Method

3.1. Motivations

3.2. Overview

3.3. Transformer Backbone

3.4. Network Training

3.5. Network Inference

4. Experiments

4.1. Implementation Details

4.2. Ablation Study and Results

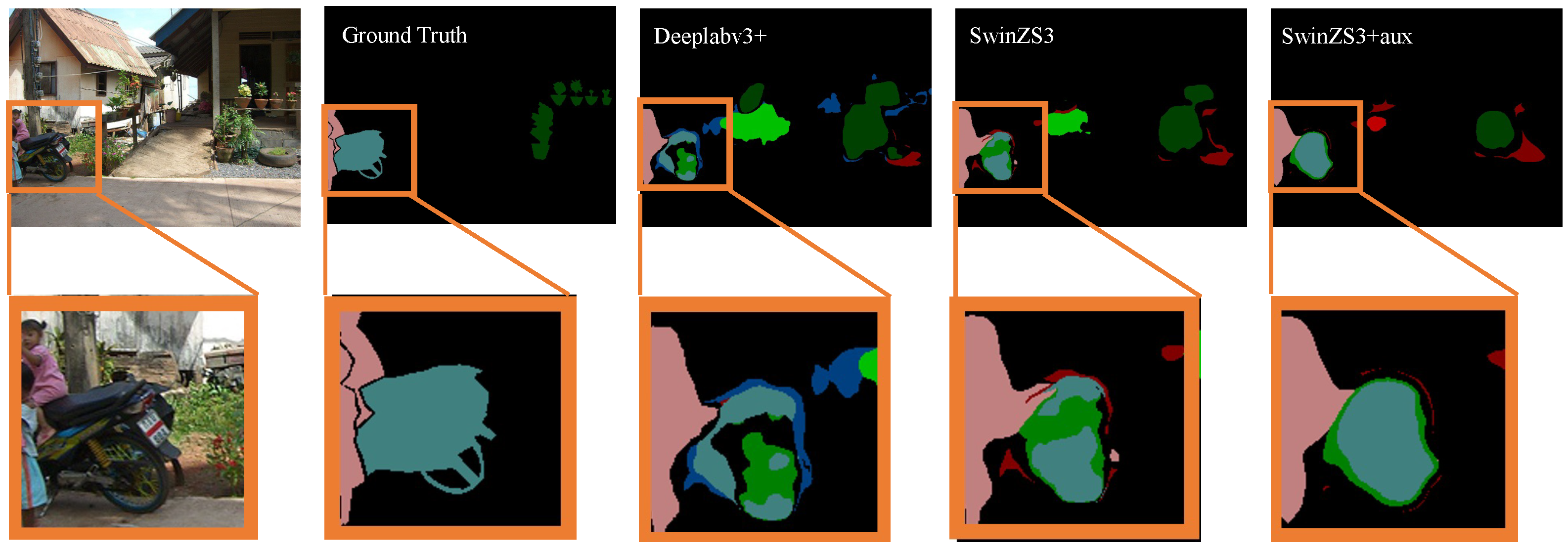

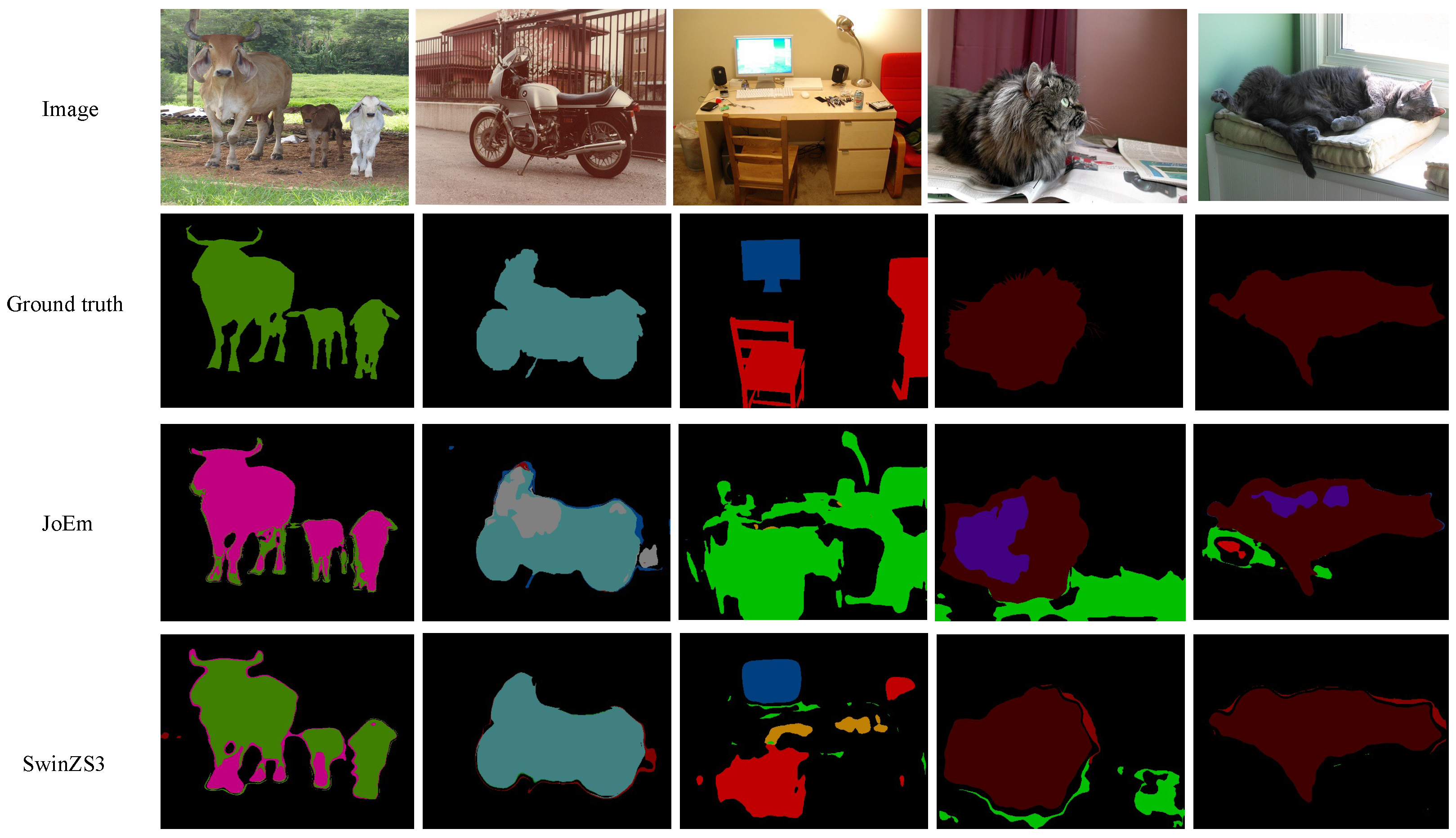

4.3. Qualitative Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | Enabled components | Seen mIoU | Unseen mIoU | hIoU |
|---|---|---|---|---|
| Deeplabv3+ | ✓ ✓ ✓ | 33.4 | 8.4 | 13.4 |
| Deeplabv3+ | ✓ ✓ ✓ | 36.2 | 23.2 | 28.3 |
| Deeplabv3+ | ✓ ✓ ✓ ✓ | 37.7 | 25.0 | 30.2 |
| SwinZS3 | ✓ ✓ ✓ | 25.8 | 12.0 | 16.4 |
| SwinZS3 | ✓ ✓ ✓ | 37.1 | 24.3 | 29.3 |
| SwinZS3 | ✓ ✓ ✓ ✓ | 39.3 | 26.2 | 31.4 |

| Datasets | K | Method | VOC seen mIoU | VOC unseen mIoU | VOC hIoU |
|---|---|---|---|---|---|
| Supervision leakage | | | | | |
| WebImageText 400M | 5 | Zegformer | 86.4 | 63.6 | 73.3 |
| | | zsseg | 83.5 | 72.5 | 77.5 |
| | | ZegCLIP | 91.9 | 77.8 | 84.3 |
| No supervision leakage | | | | | |
| ImageNet wo VOC | 2 | DeViSE | 68.1 | 3.2 | 6.1 |
| | | SPNet | 71.8 | 34.7 | 46.8 |
| | | ZS3Net | 72.0 | 35.4 | 47.5 |
| | | CSRL | 73.4 | 45.7 | 56.3 |
| | | JoEm | 68.9 | 43.2 | 53.1 |
| | | Ours | 69.2 | 45.8 (+2.6) | 55.3 |
| ImageNet wo VOC | 4 | DeViSE | 64.3 | 2.9 | 5.5 |
| | | SPNet | 67.3 | 21.8 | 32.9 |
| | | ZS3Net | 66.4 | 23.2 | 34.4 |
| | | CSRL | 69.8 | 31.7 | 43.6 |
| | | JoEm | 67.0 | 33.4 | 44.6 |
| | | Ours | 68.9 | 34.4 (+1.0) | 45.7 (+1.1) |
| ImageNet wo VOC | 6 | DeViSE | 39.8 | 2.7 | 5.1 |
| | | SPNet | 64.5 | 20.1 | 30.6 |
| | | ZS3Net | 47.3 | 24.2 | 32.0 |
| | | CSRL | 66.2 | 29.4 | 40.7 |
| | | JoEm | 63.2 | 30.5 | 41.1 |
| | | Ours | 62.6 | 31.6 (+1.1) | 42.0 (+0.9) |
| ImageNet wo VOC | 8 | DeViSE | 35.7 | 2.0 | 3.8 |
| | | SPNet | 61.2 | 19.9 | 30.0 |
| | | ZS3Net | 29.2 | 22.9 | 25.7 |
| | | CSRL | 62.4 | 26.9 | 37.6 |
| | | JoEm | 58.5 | 29.0 | 38.8 |
| | | Ours | 60.2 | 29.6 (+0.6) | 39.9 (+1.1) |
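
For the rows sampled below, the third score in the VOC table above agrees with the harmonic mean of the first two scores. The following is a minimal Python sketch of that check. It assumes the first two numeric columns are the seen-class and unseen-class mIoU, as is conventional in zero-shot segmentation benchmarks; the function name `harmonic_mean` and the `rows` dictionary are illustrative and not taken from the paper.

```python
def harmonic_mean(seen: float, unseen: float) -> float:
    """Harmonic mean of two scores; returns 0.0 when both are zero."""
    total = seen + unseen
    return 2.0 * seen * unseen / total if total > 0 else 0.0


# (first score, second score, reported third score), copied from table rows.
# Assumption: the first two scores are seen and unseen mIoU.
rows = {
    "DeViSE, K=2": (68.1, 3.2, 6.1),
    "CSRL, K=2": (73.4, 45.7, 56.3),
    "JoEm, K=4": (67.0, 33.4, 44.6),
}

for name, (seen, unseen, reported) in rows.items():
    computed = harmonic_mean(seen, unseen)
    print(f"{name}: computed {computed:.1f}, reported {reported}")
```

The harmonic mean is dominated by the smaller of its two inputs, which is why a method with a high first score but a very low second score (such as DeViSE) ends up with a low combined score.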

| Datasets | K | Method | Context seen mIoU | Context unseen mIoU | Context hIoU |
|---|---|---|---|---|---|
| Supervision leakage | | | | | |
| WebImageText 400M | 5 | Zegformer | - | - | - |
| | | zsseg | - | - | - |
| | | ZegCLIP | 46.0 | 54.6 | 49.9 |
| No supervision leakage | | | | | |
| ImageNet context | 2 | DeViSE | 35.8 | 2.7 | 5.0 |
| | | SPNet | 38.2 | 16.7 | 23.2 |
| | | ZS3Net | 41.6 | 21.6 | 28.4 |
| | | CSRL | 41.9 | 27.8 | 33.4 |
| | | JoEm | 38.2 | 32.9 | 35.3 |
| | | Ours | 39.8 | 33.5 (+0.6) | 36.3 (+1.0) |
| ImageNet context | 4 | DeViSE | 33.4 | 2.5 | 4.7 |
| | | SPNet | 36.3 | 18.1 | 24.2 |
| | | ZS3Net | 37.2 | 24.9 | 29.8 |
| | | CSRL | 39.8 | 23.9 | 29.9 |
| | | JoEm | 36.9 | 30.7 | 33.5 |
| | | Ours | 38.7 | 33.5 (+2.8) | 35.1 (+1.6) |
| ImageNet context | 6 | DeViSE | 31.9 | 2.1 | 3.9 |
| | | SPNet | 31.9 | 19.9 | 24.5 |
| | | ZS3Net | 32.1 | 20.7 | 25.2 |
| | | CSRL | 35.5 | 22.0 | 27.2 |
| | | JoEm | 36.2 | 23.2 | 28.3 |
| | | Ours | 39.3 (+3.1) | 26.2 (+3.0) | 31.4 (+3.1) |
| ImageNet context | 8 | DeViSE | 22.0 | 1.7 | 3.2 |
| | | SPNet | 28.6 | 14.3 | 19.1 |
| | | ZS3Net | 20.9 | 16.0 | 18.1 |
| | | CSRL | 31.7 | 18.1 | 23.0 |
| | | JoEm | 32.4 | 20.2 | 24.9 |
| | | Ours | 35.0 (+2.6) | 21.4 (+1.2) | 26.6 (+1.7) |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Tian, Y. Exploring Zero-Shot Semantic Segmentation with No Supervision Leakage. Electronics 2023, 12, 3452. https://doi.org/10.3390/electronics12163452