Abstract

Triplet extraction is a key technology for automatically constructing knowledge graphs, and extracting triplets of mechanical equipment fault relationships is of great significance for building a fault diagnosis knowledge graph for mine hoists. Pipeline triplet extraction methods suffer from error accumulation and information redundancy, while existing joint learning methods cannot handle fault texts with many overlapping relationships and ignore the particularity of professional knowledge in the field of complex mechanical equipment faults. Therefore, based on the Chinese pre-trained language model BERT Whole Word Masking (BERT-wwm), this paper proposes MHlinker (Mine Hoist linker), a joint entity and relation extraction model for the mine hoist fault domain. The method uses BERT-wwm as the underlying encoder. In the entity recognition stage, a classification matrix is constructed with the multi-head extraction paradigm, which effectively solves the problem of nested entities. The results show that this method enhances the model's overall ability to extract fault relationships. On a small-scale, manually labeled mine hoist fault text data set, the extraction of entities and relationships is significantly better than that of several baseline models.

1. Introduction

As the “throat” of mine production, the mine hoist transports ore and lifts staff and equipment, and the relationships among its components are complex. Its running state and working reliability directly affect mining production [1], and once the hoist fails, serious safety accidents can result. Traditional fault diagnosis of mine hoists relies mainly on maintenance manuals and the experience of operation and maintenance experts [2]. These conventional operation and maintenance modes can no longer keep up with equipment faults: fault maintenance is complicated and fault handling is inefficient, which makes fault analysis and diagnosis difficult and hinders the realization of intelligent fault diagnosis for mine hoists [3].

With advances in information technology, applying it to fault diagnosis and analysis has become mainstream. A knowledge graph is a technique that describes concepts in the physical world and their relationships by constructing a graph model [4]. It offers large scale, high quality, and a rich structure, and as an emerging technology in artificial intelligence it can organize multi-source, heterogeneous data to provide better services and applications for downstream tasks [5]. Fault knowledge graphs have clear advantages in fault diagnosis and have gradually become an essential means of diagnosis and troubleshooting. Entity-relation extraction (ERE), the critical technology in knowledge graph construction, automatically extracts the semantic relationships between entity pairs from unstructured text [6]. Traditional information extraction uses pipeline extraction, which involves two steps: entities are first recognized in the input sentence, and the relationships between the extracted entities are then classified [7]. Because the two steps are independent, an entity recognition error directly causes a relation extraction error, leading to cumulative errors and information redundancy. Compared with pipeline extraction, joint extraction combines entity extraction and relation extraction, shares the underlying network parameters, and uses a specific tagging strategy to complete both subtasks in a single model, thus reducing the number of extraction steps [8].

In summary, accurately extracting fault information such as fault causes, fault phenomena [9], and maintenance measures from large amounts of redundant, unstructured text, while solving the problems of error propagation and overlapping relation extraction, is the core issue and main challenge in constructing a mine hoist fault knowledge graph. To address these problems, this paper builds on the entity-relation joint extraction model TPLinker [10] and introduces the BERT-wwm pre-trained language model to propose MHlinker (Mine Hoist linker), a joint entity-relation extraction model for mine hoist faults. The model is verified on a self-built mine hoist fault data set, and the experimental results show that it effectively alleviates the relation overlap problem.

Compared with previous work, the main contributions and innovations of this paper are summarized as follows:

- A joint extraction model based on the BERT-wwm pre-trained language model is proposed and tested; BERT-wwm generates feature vectors that encode contextual semantic information. By modeling triples, MHlinker makes full use of the interdependence between entities and relations and effectively handles the overlapping relations in fault text. Compared with other joint extraction models, MHlinker achieves a substantially higher F1 score.

- Because there is currently no public data set for mine hoist faults, and in particular none for the joint task of entity and relation extraction, this study combined a mining company’s relevant materials with the literature, carried out manual annotation, and constructed a data set of mine hoist faults, providing a reference for research in this field.

2. Related Work

2.1. Pipeline Extraction

The task of named entity recognition was first proposed by Rau [11]. It can be described as follows: given a natural language text, each segment (composed of one or more words) is assigned a label indicating its entity category. Relation classification (RC) automatically identifies the semantic relationship between entity pairs by modeling text information, thereby extracting useful semantic knowledge [12]. Entity-relation extraction is a core task and an important link in many fields. Pipeline extraction first recognizes entities, then extracts the relationships between the recognized entities, and finally outputs triples of entities and their relationship as the prediction [13].

In a recurrent neural network (RNN) [14], the neural units at the same level are connected to one another. Computation proceeds sequentially, in time or position order, and the added recurrent (loop) path lets information flow through the network, giving the RNN a memory. However, RNNs struggle to label long sequences. Huang et al. [15] first proposed a model that uses a bidirectional long short-term memory (BiLSTM) network as the encoder and a conditional random field (CRF) as the sequence labeling layer; it captures both past and future dependencies over long spans of words and learns the context features of sentences. Google then released the pre-trained model BERT (Bidirectional Encoder Representations from Transformers) [16], which uses a bidirectional Transformer structure. BERT became a breakthrough in natural language processing, and many researchers have since studied pre-trained models and their downstream tasks.

Pipeline extraction usually has two problems. The first is cumulative error: the entities identified in the named entity recognition step are not always correct, and relation extraction based on wrong entities propagates and accumulates these errors, so entity recognition errors degrade the subsequent relation extraction [17]. The second is redundant information: because entity pairs are identified first and then classified, the result depends heavily on entity extraction. If the data are seriously imbalanced, the error rate and computational complexity increase, further reducing the model's performance [18].
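The two pipeline problems can be made concrete with a toy sketch. It is not the authors' code: the entities are taken from the annotated sample sentence in Section 4.3, and the dictionary `gold_relations` is a hypothetical stand-in for a trained relation classifier. The relation stage has to score every ordered pair of recognized entities, n(n − 1) candidates of which most carry no relation, and an entity that the recognizer misses silently removes every triple involving it.

```python
from itertools import permutations


def pipeline_candidates(entities):
    """Relation stage of a pipeline: every ordered pair of recognized
    entities becomes a candidate, giving n * (n - 1) pairs."""
    return list(permutations(entities, 2))


def pipeline_extract(recognized, relation_of):
    """Classify each candidate pair. `relation_of` is a hypothetical lookup
    standing in for a trained relation classifier (None = no relation)."""
    triples = []
    for subj, obj in pipeline_candidates(recognized):
        rel = relation_of.get((subj, obj))
        if rel is not None:
            triples.append((subj, rel, obj))
    return triples


# Hypothetical relation table for the Section 4.3 sample sentence.
gold_relations = {
    ("裂纹", "齿轮"): "LocateAt",
    ("裂纹", "热处理工艺不当"): "ResultFrom",
}

perfect_ner = ["齿轮", "裂纹", "热处理工艺不当"]
faulty_ner = ["齿轮", "热处理工艺不当"]  # the recognizer missed "裂纹"

print(len(pipeline_candidates(perfect_ner)))          # 6 candidates for 2 true triples
print(pipeline_extract(perfect_ner, gold_relations))  # both triples recovered
print(pipeline_extract(faulty_ner, gold_relations))   # [] : the NER error propagates
```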

Constructing a knowledge graph for hoist faults faces two main problems. First, there is no fault corpus for mine hoist equipment faults. Second, the fault texts collected from enterprises and professional books contain many overlapping entities and relationships: different triples in a sentence may share entities, as shown in Table 1 [19].

Table 1.

Examples of normal, EPO, and SEO overlapping problems.
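Following the usual categorization behind Table 1, a sentence is Normal when no two triples share an entity, EPO (EntityPairOverlap) when two or more triples share the same entity pair, and SEO (SingleEntityOverlap) when two different entity pairs share an entity; a sentence can be both EPO and SEO. The sketch below labels a sentence's triples accordingly. Comparing entity pairs as ordered (subject, object) tuples is an assumption (some preprocessing scripts treat pairs as unordered), and the EPO example uses placeholder entity and relation names.

```python
from collections import Counter


def overlap_types(triples):
    """Overlap categories of one sentence, given (subject, relation, object) triples.

    EPO: at least two triples share the same (subject, object) pair.
    SEO: two different entity pairs share at least one entity.
    Normal: neither of the above.
    """
    pairs = [(s, o) for s, _, o in triples]
    labels = set()
    if len(pairs) != len(set(pairs)):
        labels.add("EPO")
    # Count how many distinct pairs each entity takes part in.
    entity_counts = Counter(e for pair in set(pairs) for e in set(pair))
    if any(count > 1 for count in entity_counts.values()):
        labels.add("SEO")
    return labels or {"Normal"}


normal = [("裂纹", "LocateAt", "齿轮")]
seo = [("裂纹", "LocateAt", "齿轮"), ("裂纹", "ResultFrom", "热处理工艺不当")]
epo = [("E1", "Rel1", "E2"), ("E1", "Rel2", "E2")]  # placeholder entities
print(overlap_types(normal), overlap_types(seo), overlap_types(epo))
```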

2.2. Joint Extraction

With the advent of big data, many scholars have studied joint entity-relation extraction. For example, Zheng et al. [20] proposed a new tagging scheme that transforms joint extraction into a sequence labeling problem. Fu et al. [21] used a graph convolutional network (GCN) to build an end-to-end relation extraction model, using relation weighting to capture the interactions between entity types and relation types in a sentence. Nayak et al. [22] proposed a joint entity-relation extraction method with an encoder-decoder architecture that significantly improved the F1 score. Yu et al. [23] proposed a decomposition strategy with a span-based tagging scheme that splits joint extraction into two interrelated subtasks, head-entity (HE) extraction and tail-entity-and-relation (TER) extraction, and achieved significant performance gains in the tested scenarios. Wei et al. [24] proposed CasRel, a cascade binary tagging framework that decomposes triple extraction into three levels: subject, relation, and object. Katiyar et al. [25] first combined the attention mechanism with a bidirectional LSTM for the joint extraction of entities and relations. The work in [26] introduced a neural attention mechanism into relation extraction, which proved more effective than structured-perceptron joint models. The authors of [27] first addressed overlapping relation extraction: they divided sentences into three categories according to the degree of entity overlap and designed an end-to-end model based on sequence-to-sequence learning. Because the model includes a copy mechanism, entities can be copied directly from the input sentence, which provides valuable ideas for further research.
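To see why overlap defeats a one-tag-per-token labeling approach, here is a simplified sketch in the spirit of the tagging scheme of Zheng et al. [20]: each character receives exactly one tag, either `O` or position-relation-role, with position in {B, I, E, S} and role 1 (subject) or 2 (object). The helper names are our own, and dropping a triple whose characters are already tagged is a simplification used here to make the conflict visible. On the Section 4.3 sample, the second triple reuses the subject 裂纹 and cannot be encoded.

```python
def find_span(text, word):
    """(start, end) character span of the first occurrence, end inclusive."""
    start = text.index(word)
    return start, start + len(word) - 1


def position_tag(i, start, end):
    """B/I/E for multi-character spans, S for single-character spans."""
    if start == end:
        return "S"
    if i == start:
        return "B"
    return "E" if i == end else "I"


def one_tag_per_token(text, triples):
    """Every character gets one tag, 'O' or '<B|I|E|S>-<relation>-<1|2>'
    (1 = subject, 2 = object). A triple whose characters are already tagged
    cannot be encoded and is returned in `dropped`."""
    tags = ["O"] * len(text)
    dropped = []
    for subj, rel, obj in triples:
        spans = [(find_span(text, subj), "1"), (find_span(text, obj), "2")]
        if any(tags[i] != "O" for (s, e), _ in spans for i in range(s, e + 1)):
            dropped.append((subj, rel, obj))
            continue
        for (s, e), role in spans:
            for i in range(s, e + 1):
                tags[i] = f"{position_tag(i, s, e)}-{rel}-{role}"
    return tags, dropped


text = "齿轮常见的一种故障就是裂纹问题,是由于热处理工艺不当"
triples = [("裂纹", "LocateAt", "齿轮"), ("裂纹", "ResultFrom", "热处理工艺不当")]
tags, dropped = one_tag_per_token(text, triples)
print(dropped)  # the ResultFrom triple: 裂纹 already carries a LocateAt tag
```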

Entity-relation joint extraction technology for typical domains has gradually matured. However, problems remain, such as exposure bias, error propagation, entity redundancy, and cumulative error [28], and research on information extraction still has a long way to go. Fault data for mine hoists are scarce and highly specialized, so defining them accurately and extracting useful features is a great challenge. Moreover, knowledge graphs in vertical domains differ from those in the general domain, and the theory and methods of entity-relation extraction for mine hoist faults are still in their infancy, offering little theoretical basis for subsequent research in this field.

3. Model

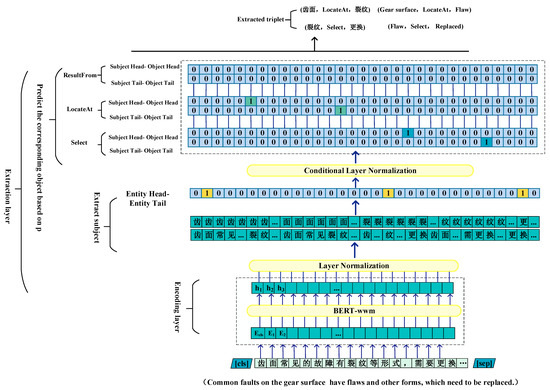

The MHlinker model extracts triple information by combining the BERT-wwm encoder with the multi-head extraction paradigm. As illustrated in Figure 1, the architecture has two layers: the encoding layer and the extraction layer. First, BERT-wwm encodes each input character; then, the multi-head extraction paradigm extracts entities and relations to obtain complete triples. For example, for the input “common faults on the gear surface include cracks and other forms, which need to be replaced”, the triples are <Gear surface, LocateAt, Crack> and <Crack, Select, Replaced>. The length of this text fragment is 18 characters, and we assume the relation set contains only these two relations. “齿面 (gear surface)” is the subject of the first triple, so we mark “1” for “gear surface” in the start position vector and “0” for the other characters; the encoder module of MHlinker is used to detect the subject's position. The sentence contains two relational triples; therefore, “裂纹 (crack)” and “更换 (replaced)” are marked with “1” in the start and end position vectors of “LocateAt” and “Select”, respectively. Note that the position vectors of all other relation objects are marked with “0”.

Figure 1.

An overview of the MHlinker model. Example input: “齿面常见的故障有裂纹等形式,需要更换” (common faults on the gear surface include cracks and other forms, which need to be replaced).
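The start/end position vectors described for Figure 1 can be sketched as follows for the listed triples <齿面, LocateAt, 裂纹> and <裂纹, Select, 更换>. This is an illustrative sketch rather than MHlinker's implementation: marking a subject end vector in addition to the start vector, and locating each word by its first occurrence, are assumptions. Relations with no object for the given subject keep all-zero vectors, matching the note that other relation objects are marked 0.

```python
def mark_span(text, word):
    """Binary start and end vectors for the first occurrence of `word`."""
    n = len(text)
    start_vec, end_vec = [0] * n, [0] * n
    i = text.index(word)
    start_vec[i] = 1
    end_vec[i + len(word) - 1] = 1
    return start_vec, end_vec


def subject_object_vectors(text, subject, objects_by_relation, relations):
    """Subject start/end vectors plus, for every relation, object start/end
    vectors conditioned on that subject (all zeros if the relation is absent)."""
    subj_start, subj_end = mark_span(text, subject)
    obj_vectors = {}
    for rel in relations:
        if rel in objects_by_relation:
            obj_vectors[rel] = mark_span(text, objects_by_relation[rel])
        else:
            obj_vectors[rel] = ([0] * len(text), [0] * len(text))
    return subj_start, subj_end, obj_vectors


text = "齿面常见的故障有裂纹等形式,需要更换"
relations = ["LocateAt", "Select"]
# <齿面, LocateAt, 裂纹>: only LocateAt has an object for subject 齿面.
s1 = subject_object_vectors(text, "齿面", {"LocateAt": "裂纹"}, relations)
# <裂纹, Select, 更换>
s2 = subject_object_vectors(text, "裂纹", {"Select": "更换"}, relations)
print(len(text), s1[0].index(1), s1[2]["LocateAt"][0].index(1))  # 18 0 8
```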

3.1. Encoder

BERT-wwm

Pre-trained models (PTMs) can provide better results for downstream tasks. The Chinese pre-trained model BERT-wwm [29] is better suited to processing Chinese text. The reason is that English words are separated by spaces, whereas Chinese has no spaces between words. If the original BERT is used directly, the masked language model (MLM) randomly masks and replaces individual Chinese characters, so a word may be only partly masked. BERT-wwm is an improved version of BERT: instead of selecting WordPiece tokens for masking at random, it masks all tokens belonging to the same word, so that the model must restore whole words during MLM pre-training rather than isolated WordPiece tokens [30]. Given the characteristics of mine hoist fault data, this study uses BERT-wwm, a Chinese pre-trained model based on whole word masking, as the encoder to accelerate convergence and improve data processing efficiency. For example, take the input sentence “The main reason for brake failure is insufficient braking torque.” Table 2 shows the difference between the original masking method and the whole word masking method, where [M] denotes a masked token.

Table 2.

Whole-word mask generation example.

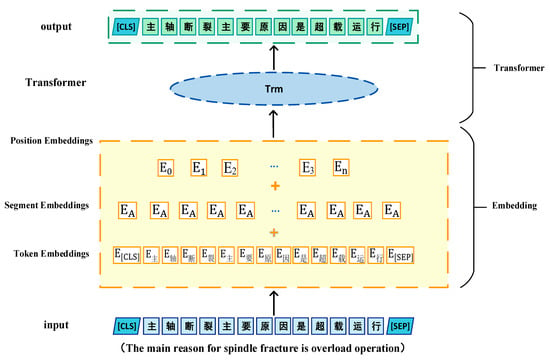
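The difference illustrated in Table 2 can be reproduced with a small sketch. The word segmentation below is our own hypothetical Chinese rendering of the example sentence, not the content of Table 2, and the masking is simplified: real BERT pre-training selects about 15% of tokens and replaces 80% of those with the mask symbol, 10% with a random token, and leaves 10% unchanged, whereas here every selected unit becomes [M]. The point is the unit of selection: single characters in one case, whole words in the other.

```python
import random


def char_level_mask(words, ratio, rng):
    """Original Chinese BERT: every character is its own token and is selected
    independently, so a word may end up only partly masked."""
    chars = [c for w in words for c in w]
    k = max(1, round(len(chars) * ratio))
    picked = set(rng.sample(range(len(chars)), k))
    return ["[M]" if i in picked else c for i, c in enumerate(chars)]


def whole_word_mask(words, ratio, rng):
    """BERT-wwm: once a word is selected, all of its characters are masked."""
    k = max(1, round(len(words) * ratio))
    picked = set(rng.sample(range(len(words)), k))
    out = []
    for i, w in enumerate(words):
        out.extend(["[M]"] * len(w) if i in picked else list(w))
    return out


# Hypothetical segmentation of "制动失灵的主要原因是制动力矩不足".
words = ["制动", "失灵", "的", "主要", "原因", "是", "制动", "力矩", "不足"]
print("".join(char_level_mask(words, 0.3, random.Random(1))))
print("".join(whole_word_mask(words, 0.3, random.Random(1))))
```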

The BERT-wwm model has two stages: the embedding stage and the Transformer stage. Let n denote the length of the input sentence. First, a [CLS] token is inserted at the beginning of the sentence as its semantic representation, and a [SEP] token is appended at the end as the sentence boundary. Then, in the embedding stage, the representation of each token is the sum of its token embedding, segment embedding, and position embedding. The token embedding is a fixed-length vector representing the word; the position embedding encodes the word's position in the sequence; and the segment embedding indicates which sentence the token belongs to. This yields an n × m matrix, where m is the embedding dimension. Finally, the Transformer layers extract features to produce the contextual encoding of the sentence, which is passed to the entity-relation joint extraction layer. Figure 2 shows the structure of the BERT-wwm model.

Figure 2.

An overview of the BERT-wwm model. Example input: “主轴断裂主要原因是超载运行” (The main reason for spindle fracture is overload operation).
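The embedding stage can be sketched in a few lines of plain Python. This is a toy illustration, not BERT-wwm itself: the embedding tables are random stand-ins for learned parameters, the embedding size is a toy value, and the LayerNorm and dropout that BERT applies after the sum are omitted. Because [CLS] and [SEP] are added, the matrix here has n + 2 rows of dimension m.

```python
import random


def bert_input_embeddings(text, dim, seed=0):
    """Sketch of BERT's embedding stage for one Chinese sentence: add
    [CLS]/[SEP], then sum token, segment and position embeddings per position.
    Random tables stand in for learned parameters."""
    rng = random.Random(seed)

    def random_row():
        return [rng.uniform(-0.1, 0.1) for _ in range(dim)]

    seq = ["[CLS]"] + list(text) + ["[SEP]"]          # one token per character
    vocab = {tok: idx for idx, tok in enumerate(dict.fromkeys(seq))}
    token_table = [random_row() for _ in vocab]
    segment_table = [random_row() for _ in range(2)]   # segment A / segment B
    position_table = [random_row() for _ in range(len(seq))]
    segment_ids = [0] * len(seq)                       # single sentence: all segment A
    embeddings = [
        [t + s + p for t, s, p in zip(token_table[vocab[tok]],
                                      segment_table[segment_ids[pos]],
                                      position_table[pos])]
        for pos, tok in enumerate(seq)
    ]
    return seq, embeddings


seq, emb = bert_input_embeddings("主轴断裂主要原因是超载运行", dim=8)
print(len(seq), len(emb), len(emb[0]))   # 15 15 8
```

The repeated character 主 (positions 1 and 5) receives the same token embedding but different position embeddings, so its two input vectors differ.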

3.2. Multi-Head Paradigm Extraction Layer

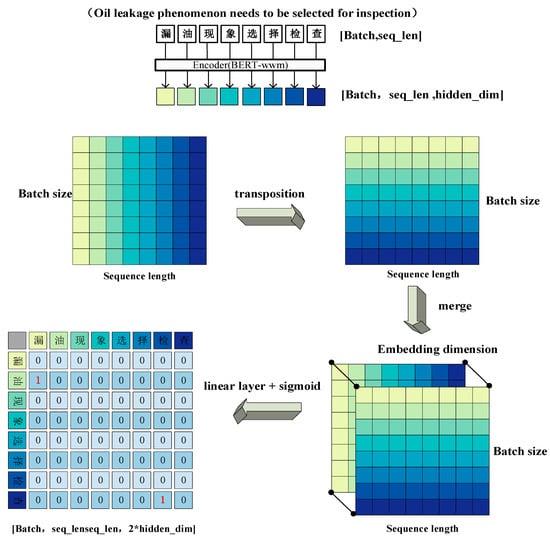

The main task of the entity extraction layer is named entity recognition. The most commonly used annotation method is sequence labeling, but it is less effective for complex extraction problems such as nested entities and type confusion. This study uses EH2ET (entity head to entity tail) tags to annotate entities, and SH2OH (subject head to object head) and ST2OT (subject tail to object tail) tags to mark the relationships between entities [10]. Because an entity's head position never comes after its tail position, all EH2ET tags lie in the upper triangle of the entity tag matrix. Because the object entity may appear before the subject entity, the SH2OH and ST2OT relation tags may lie in the lower triangle, which would leave the lower half of the matrix sparse. To improve computational efficiency, the multi-head paradigm applies a flip operation: such tags are transposed into the upper triangle and their label is changed from 1 to 2, which avoids the sparse-matrix problem.

Figure 3 shows the structure of the multi-head paradigm. First, a sentence of shape [batch, seq_len] is encoded by BERT-wwm into a tensor of shape [batch, seq_len, hidden]. This tensor is repeated seq_len times along a new axis to obtain a tensor of shape [batch, seq_len, seq_len, hidden], which is transposed over its two sequence axes and concatenated with the transpose, giving a feature of dimension 2 × hidden for every token pair. These features are classified with a linear layer followed by a sigmoid, giving an output of shape [batch, seq_len, seq_len, num_label]. The final output is the entity recognition matrix, in which “1” marks an identified entity.

Figure 3.

An overview of the multi-head model structure. Example sentence: “Selection and inspection of oil leakage phenomenon”.
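The tensor flow of the multi-head layer can be sketched without a deep learning library. This is a simplified illustration under stated assumptions: the batch axis is dropped, the combination step is read as concatenation (consistent with the stated feature size of 2 × hidden), the linear weights are random placeholders, 0.5 is an assumed decision threshold, and the full seq_len × seq_len grid is computed even though, after the flip operation, only cells with i ≤ j are needed.

```python
import math
import random


def pair_features(hidden):
    """hidden: [seq_len][hidden_dim] encodings of one sentence (batch omitted).
    Repeat along a new axis, transpose the two sequence axes, and concatenate,
    so that pairs[i][j] = hidden[i] + hidden[j] (list concatenation, 2 * hidden_dim)."""
    n = len(hidden)
    tiled = [[hidden[i] for _ in range(n)] for i in range(n)]         # tiled[i][j] = h_i
    transposed = [[tiled[j][i] for j in range(n)] for i in range(n)]  # transposed[i][j] = h_j
    return [[tiled[i][j] + transposed[i][j] for j in range(n)] for i in range(n)]


def classify_pairs(pairs, weight, bias):
    """Linear layer followed by a sigmoid: [n][n][2d] -> [n][n][num_label]."""
    def sigmoid(x):
        return 1.0 / (1.0 + math.exp(-x))
    return [[[sigmoid(sum(w * f for w, f in zip(w_row, feat)) + b)
              for w_row, b in zip(weight, bias)]
             for feat in row]
            for row in pairs]


rng = random.Random(0)
seq_len, hidden_dim, num_label = 4, 3, 2
hidden = [[rng.gauss(0, 1) for _ in range(hidden_dim)] for _ in range(seq_len)]
weight = [[rng.gauss(0, 0.5) for _ in range(2 * hidden_dim)] for _ in range(num_label)]
bias = [0.0] * num_label
probs = classify_pairs(pair_features(hidden), weight, bias)
tags = [[[int(p > 0.5) for p in cell] for cell in row] for row in probs]  # assumed threshold
```

In a real implementation the same steps would be tensor operations (repeat, transpose, concatenate) on the encoder output rather than nested Python lists.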

3.3. Loss Function

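In this paper, a cross-entropy loss function is adopted. Its general multi-label form is:

$$\mathcal{L} = \log\left(1 + \sum_{i \in N} e^{s_i}\right) + \log\left(1 + \sum_{j \in P} e^{-s_j}\right) \tag{1}$$

where P (positive) and N (negative) are the sets of positive and negative classes, respectively, and $s_i$ is the score the model assigns to class i. Computing this dense multi-label loss requires building a very large label matrix, which makes training expensive. Therefore, the “sparse” version of multi-label cross-entropy is used: only the indices of the positive labels are passed in each time. Because there are far fewer positive classes than negative ones, the label matrix becomes very small, which improves training efficiency. For the “sparse” multi-label cross-entropy, let A = P ∪ N be the set of all classes; the negative term can then be rewritten as:

$$\log\left(1 + \sum_{i \in N} e^{s_i}\right) = \log\left(1 + \sum_{i \in A} e^{s_i} - \sum_{j \in P} e^{s_j}\right) \tag{2}$$

If $a = \log\left(1 + \sum_{i \in A} e^{s_i}\right)$ and $b = \log \sum_{j \in P} e^{s_j}$, the “sparse” multi-label cross-entropy loss can be written as:

$$\mathcal{L} = a + \log\left(1 - e^{b - a}\right) + \log\left(1 + \sum_{j \in P} e^{-s_j}\right) \tag{3}$$

Thus, the loss of the negative classes is obtained from P and A alone, without enumerating N, while the loss of the positive classes remains unchanged.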
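A quick numerical check of the loss derivation: the pure-Python sketch below computes the dense multi-label cross-entropy from P and N, and the sparse version from A, a, and b, and confirms the two agree. The function names and the small `eps` clamp are our own choices; in training, the same computation would run on framework tensors rather than Python lists.

```python
import math


def logsumexp(xs):
    """Numerically stable log(sum(exp(x))); -inf for an empty list."""
    if not xs:
        return -math.inf
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))


def dense_multilabel_ce(scores, positive):
    """Dense form: needs the full split of classes into P and N."""
    positive = set(positive)
    pos = [s for i, s in enumerate(scores) if i in positive]
    neg = [s for i, s in enumerate(scores) if i not in positive]
    # log(1 + sum e^x) is written as logsumexp over [0] + x
    return logsumexp([0.0] + neg) + logsumexp([0.0] + [-s for s in pos])


def sparse_multilabel_ce(scores, positive_idx, eps=1e-7):
    """Sparse form: only the positive indices are passed; N is never built."""
    pos = [scores[i] for i in positive_idx]
    a = logsumexp([0.0] + list(scores))   # log(1 + sum over A of e^{s_i})
    b = logsumexp(pos)                    # log(sum over P of e^{s_j})
    # Negative-class loss a + log(1 - e^{b - a}); eps keeps the log argument positive.
    neg_loss = a + math.log(max(1.0 - math.exp(b - a), eps))
    pos_loss = logsumexp([0.0] + [-s for s in pos])
    return neg_loss + pos_loss


scores = [2.0, -1.0, 0.5, -3.0]   # toy scores for four classes
positive = [0, 2]                 # indices of the positive classes
print(dense_multilabel_ce(scores, positive), sparse_multilabel_ce(scores, positive))
```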

4. Proposed Dataset

4.1. Data Sources

Mine hoist faults are complex [31]. The data for the mine hoist fault knowledge graph come mainly from the fault point inspection tables and daily maintenance reports of a mining company. These are supplemented with printed books, e-books, and other related documents, all converted to PDF format for convenient data management and annotation. With reference to the current national standards for mine hoists and the essential attributes of common faults and fault relationships, we define five entity types for mine hoist faults: fault phenomenon, fault influence, maintenance measures, fault cause, and fault location. See Appendix A for descriptions of these types.

4.2. Data Processing

In the preliminary work of this study, the data were investigated and analyzed, the conceptual model of the knowledge graph was designed, and the ontology was defined. A large amount of unstructured text was also obtained through online queries, after which the relationships were defined. Five relation types are defined among the five entity types: LocateAt, Select, BelongTO, ResultFrom, and ConsistOf (see Table A1 and Table A2 in Appendix A for descriptions).

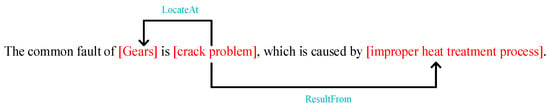

This data set not only pioneers research on mine hoist faults but also reflects the complexity of the contexts in which relationships occur in real text. The annotation was done manually: after preprocessing such as sentence segmentation and punctuation removal, entities and relationships were annotated with the annotation tool Label Studio. This preliminary construction produced a data set for mechanical equipment faults on which the model can be trained and evaluated. An example sentence is: “A common fault in gears is cracking caused by improper heat treatment.”

4.3. Data Features

Compared with the general domain named entity recognition task, named entity recognition in a specific field is more specific and complex [32], which is embodied in the following aspects:

(1) Lexical boundaries are hard to recognize. For named entities in a specific domain, boundary recognition is especially difficult because it requires domain knowledge and an understanding of the domain's particular conventions. Since recognition quality depends on dividing lexical boundaries correctly, boundary recognition is key to overall recognition performance.

(2) Domain vocabulary recognition. In specific fields, entity names often have irregular grammatical structure, informal abbreviations, and polysemy. To handle these problems, the model needs more knowledge: beyond simple lexical information, it must consider context and have a dynamic semantic representation ability so that vocabulary is understood and expressed correctly. In addition, deep integration of domain expertise is essential for improving the model's recognition of out-of-vocabulary and low-frequency words, helping it better understand and handle rare terms and entity names.

(3) Labeled data in the domain are scarce. Training deep neural networks usually relies on high-quality, large-scale data sets, and labeling data requires considerable financial and material resources. Because specific fields are highly specialized, training corpora are lacking.

The data sample is: {"text": "齿轮常见的一种故障就是裂纹问题,是由于热处理工艺不当<A common fault of gears is cracking, which is due to an improper heat treatment process>", "spo_list": [{"predicate": "LocateAt", "object_type": "故障位置<fault location>", "object": "齿轮<gear>", "subject_type": "故障现象<fault phenomenon>", "subject": "裂纹<crack>"}, {"predicate": "ResultFrom", "object_type": "故障原因<fault cause>", "object": "热处理工艺不当<improper heat treatment process>", "subject_type": "故障现象<fault phenomenon>", "subject": "裂纹<crack>"}]}. An example of the annotation results is shown in Figure 4.

Figure 4.

Data example.
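Applied to the sample above, the EH2ET, SH2OH, and ST2OT tags of Section 3.2 can be sketched as sparse dictionaries. This is an illustration in the style of TPLinker [10], not MHlinker's code; the helper names are hypothetical, and spans are located by first occurrence. In the LocateAt triple the object 齿轮 precedes the subject 裂纹, so both of its relation tags are flipped into the upper triangle and labelled 2. `flat_index` shows how the upper triangle can be stored as a sequence of n(n + 1)/2 cells.

```python
def find_span(text, word):
    """(head, tail) character positions of the first occurrence, tail inclusive."""
    head = text.index(word)
    return head, head + len(word) - 1


def handshaking_tags(text, spo_list):
    """Sparse EH2ET / SH2OH / ST2OT tags for one sentence.

    eh2et: {(head, tail): 1}; always upper-triangular because head <= tail.
    sh2oh, st2ot: {relation: {(i, j): label}}. Label 1 if the subject position
    is not after the object position; otherwise the cell is flipped to (j, i)
    and labelled 2, so every stored cell satisfies i <= j.
    """
    eh2et, sh2oh, st2ot = {}, {}, {}
    for spo in spo_list:
        sh, st = find_span(text, spo["subject"])
        oh, ot = find_span(text, spo["object"])
        eh2et[(sh, st)] = 1
        eh2et[(oh, ot)] = 1
        for table, (s, o) in ((sh2oh, (sh, oh)), (st2ot, (st, ot))):
            cells = table.setdefault(spo["predicate"], {})
            if s <= o:
                cells[(s, o)] = 1
            else:
                cells[(o, s)] = 2
    return eh2et, sh2oh, st2ot


def flat_index(i, j, n):
    """Index of upper-triangle cell (i, j), i <= j, when the n * (n + 1) // 2
    cells are stored row by row."""
    return i * n - i * (i - 1) // 2 + (j - i)


text = "齿轮常见的一种故障就是裂纹问题,是由于热处理工艺不当"
spo_list = [
    {"predicate": "LocateAt", "subject": "裂纹", "object": "齿轮"},
    {"predicate": "ResultFrom", "subject": "裂纹", "object": "热处理工艺不当"},
]
eh2et, sh2oh, st2ot = handshaking_tags(text, spo_list)
print(sh2oh["LocateAt"], st2ot["LocateAt"])   # {(0, 11): 2} {(1, 12): 2}
```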

Compared with other Chinese data sets, our fault data set has the following advantages. First, it is a pioneering data set for mine hoist faults, filling a gap in this field. Second, given the complexity of fault text, the corpus provides rich features, including part-of-speech tags, dependencies, and entity types. Third, the labeled corpus is preprocessed to improve the accuracy of model recognition. Fourth, we created a reasonable fault relationship classification system that covers various fault relationships and provides sufficient data support for downstream tasks.

After manual labeling and sorting, the data set contains 3388 samples with 4272 relationships and 8545 entities. The 3388 samples are divided into training, validation, and test sets at a ratio of 8:1:1 [33].
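The paper does not specify how the 8:1:1 split is shuffled or rounded. One reproducible way is sketched below, assuming a seeded shuffle (the seed value is arbitrary) and floor rounding with the remainder assigned to the test set; with 3388 samples this gives 2710, 338, and 340 samples.

```python
import random


def split_8_1_1(samples, seed=42):
    """Seeded shuffle, then train = floor(0.8 n), validation = floor(0.1 n),
    and test = the remaining samples."""
    items = list(samples)
    random.Random(seed).shuffle(items)
    n = len(items)
    n_train = n * 8 // 10
    n_val = n // 10
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


train_set, val_set, test_set = split_8_1_1(range(3388))
print(len(train_set), len(val_set), len(test_set))   # 2710 338 340
```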

5. Experiment

5.1. Experimental Parameters

The model for this study is built with the PyTorch framework. The underlying encoder is the Chinese pre-trained language model BERT-wwm, and Adam is used as the optimizer. After parameter tuning experiments on the validation set, the parameters were set as shown in Table 3 and Table 4.

Table 3.

Model preset parameters.

Table 4.

Experimental environment parameters.

5.2. Evaluation Metrics

Precision (P), Recall (R), and F1-score were used as evaluation metrics in this experiment. They are calculated as shown in Formulas (4)–(6):

$$P = \frac{TP}{TP + FP} \tag{4}$$

$$R = \frac{TP}{TP + FN} \tag{5}$$

$$F_1 = \frac{2 \times P \times R}{P + R} \tag{6}$$

Here, TP is the number of triples in the test set that the model predicts correctly, FP is the number of predicted triples that are incorrect, and FN is the number of gold triples that the model fails to predict. A strict evaluation criterion is adopted: because the model performs joint extraction, a triple is counted as correctly predicted only when its head entity, relation, and tail entity are all predicted correctly.
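The strict criterion can be sketched as exact set matching of (head, relation, tail) triples. Accumulating TP, FP, and FN over all test sentences before dividing (micro-averaging) is an assumption, since the paper does not state how counts are aggregated. In the usage example, a predicted tail entity with the wrong boundary counts as both a false positive and a false negative.

```python
def strict_prf(pred_sentences, gold_sentences):
    """Strict triple-level precision, recall and F1, micro-averaged over sentences.
    A predicted (head, relation, tail) counts as correct only on an exact match."""
    tp = fp = fn = 0
    for pred, gold in zip(pred_sentences, gold_sentences):
        pred, gold = set(pred), set(gold)
        tp += len(pred & gold)   # correctly predicted triples
        fp += len(pred - gold)   # predicted triples that are wrong
        fn += len(gold - pred)   # gold triples that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


gold = [[("裂纹", "LocateAt", "齿轮"), ("裂纹", "ResultFrom", "热处理工艺不当")]]
pred = [[("裂纹", "LocateAt", "齿轮"), ("裂纹", "ResultFrom", "热处理")]]  # wrong tail boundary
print(strict_prf(pred, gold))   # (0.5, 0.5, 0.5)
```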

5.3. Comparative Experiments

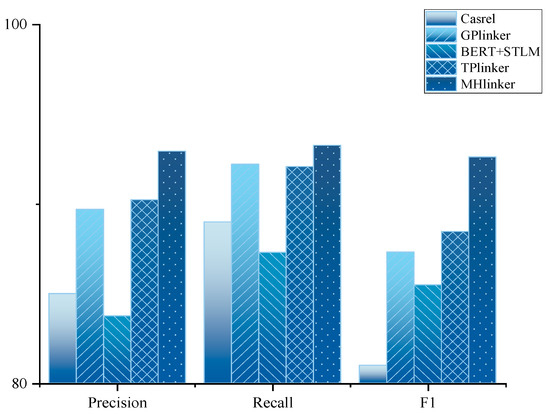

To verify the effectiveness of MHlinker on the fault triple extraction task, this paper selects mainstream extraction models such as CasRel [24] and GPLinker [34] as baselines and conducts comparative experiments on the self-built mine hoist fault data set. The experimental results are shown in Table 5.

Table 5.

Experimental results of different models.

The experimental results show that, on the mine hoist fault data set built in this paper, MHlinker better handles entity-relation extraction and relation overlap in the mine hoist fault domain and effectively extracts hoist fault triples. Its precision, recall, and F1-score are higher than those of the other baseline models. We attribute this mainly to BERT-wwm: its bidirectional Transformer encoder learns contextual features that can be fine-tuned to mine the text information as fully as possible, which yields a noticeable improvement even with a small amount of data [35]. As shown in Figure 5, using MHlinker to recognize entity-relation triples improves the efficiency of triple extraction, strengthens the connection between entity recognition and relation extraction, and supports the integration and use of fault information, providing technical support for fault diagnosis.

Figure 5.

Results of different models.

6. Conclusions

Information extraction is an indispensable part of knowledge graph construction. In this paper, a data set of mine hoist faults containing many overlapping triples is built. To address exposure bias and relation overlap in joint entity-relation extraction, MHlinker, a dependency-based multi-head paradigm annotation model for Chinese overlapping entity relations, is proposed. On the mine hoist information extraction task, the model outperforms the compared models, which provides a reference for applying knowledge graphs in the mechanical field and for constructing fault diagnosis knowledge graphs. The proposed method still has limitations; for example, the amount of unstructured data available for extraction is small, so more data should be used to improve the performance of the information extraction model. In future work, we will continue to improve the model and move from language-only and multilingual research to multimodal research to enhance its practical value.

Author Contributions

Conceptualization, X.D. (Xiaochao Dang), H.D., and X.D. (Xiaohui Dong); methodology, X.D. (Xiaochao Dang) and H.D.; software, H.D. and L.W.; validation, X.D. (Xiaochao Dang), H.D., and X.D. (Xiaohui Dong); formal analysis, X.D. (Xiaochao Dang), H.D., and L.W.; investigation, X.D. (Xiaochao Dang); resources, X.D. (Xiaochao Dang) and H.D.; data curation, X.D. (Xiaochao Dang), H.D., F.L., and Z.Z.; writing (original draft preparation), H.D. and L.W.; writing (review and editing), X.D. (Xiaochao Dang) and H.D.; visualization, X.D. (Xiaochao Dang) and X.D. (Xiaohui Dong); supervision, X.D. (Xiaochao Dang); project administration, X.D. (Xiaochao Dang) and X.D. (Xiaohui Dong); funding acquisition, X.D. (Xiaochao Dang). All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant 62162056), Industrial Support Foundations of Gansu (Grant No. 2021CYZC-06) by X.D. (Xiaochao Dang).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Entity type.

| Entity Type | Description |
|---|---|
| Fault phenomenon | External manifestations of faults |
| Repair measures | Various measures taken to restore the fault to its original usable state |
| Fault impact | The result of a failure mode on the use, function, or state of a product |
| Cause of fault | Factors related to design, manufacturing, use, and maintenance that cause malfunctions |
| Fault location | The location where the fault occurred |

Table A2.

Relation type distribution.

| Relationship Type | Description |
|---|---|
| LocateAt | The relationship between a fault phenomenon and the location of the fault |
| Select | The relationship between a fault phenomenon and the selected maintenance measures |
| BelongTO | The attribution relationship between a fault phenomenon and its corresponding fault phenomenon |
| ConsistOf | The level to which a fault phenomenon belongs |
| ResultFrom | The causal relationship between a fault phenomenon and its cause |

References

- Feng, K.; Ji, J.; Ni, Q.; Beer, M. A review of vibration-based gear wear monitoring and prediction techniques. Mech. Syst. Signal Process. 2023, 182, 109605. [Google Scholar] [CrossRef]

- Li, F.; Sun, R.; Fu, W.; Qiao, X. Hoist Wire Rope Fault Detection System Based on Wavelet Threshold and SVD Denoising. In Proceedings of the 5th International Conference on Information Technologies and Electrical Engineering 2022, Online, 25–27 March 2022; pp. 469–476. [Google Scholar]

- Zhu, J.; Jiang, Q.; Shen, Y.; Qian, C.; Xu, F.; Zhu, Q. Application of recurrent neural network to mechanical fault diagnosis: A review. J. Mech. Sci. Technol. 2022, 36, 527–542. [Google Scholar]

- Chen, X.; Jia, S.; Xiang, Y. A review: Knowledge reasoning over knowledge graph. Expert Syst. Appl. 2020, 141, 112948. [Google Scholar]

- Golshan, P.N.; Dashti, H.A.R.; Azizi, S.; Safari, L. A study of recent contributions on information extraction. arXiv 2018, arXiv:1803.05667. [Google Scholar]

- Li, X.; Yin, F.; Sun, Z.; Li, X.; Yuan, A.; Chai, D.; Zhou, M.; Li, J. Entity-relation extraction as multi-turn question answering. arXiv 2019, arXiv:1905.05529. [Google Scholar]

- Xiao, S.; Song, M. A text-generated method to joint extraction of entities and relations. Appl. Sci. 2019, 9, 3795. [Google Scholar]

- Li, X.; Luo, X.; Dong, C.; Yang, D.; Luan, B.; He, Z. TDEER: An efficient translating decoding schema for joint extraction of entities and relations. In Proceedings of the Conference on Empirical Methods in Natural Language Processing 2021, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 8055–8064. [Google Scholar]

- Xu, M.; Pi, D.; Cao, J.; Yuan, S. A novel entity joint annotation relation extraction model. Appl. Intell. 2022, 52, 12754–12770. [Google Scholar] [CrossRef]

- Wang, Y.; Yu, B.; Zhang, Y.; Liu, T.; Zhu, H.; Sun, L. TPLinker: Single-stage joint extraction of entities and relations through token pair linking. arXiv 2020, arXiv:2010.13415. [Google Scholar]

- Rau, L.F. Extracting company names from text. In Proceedings of the Seventh IEEE Conference on Artificial Intelligence Application, Miami Beach, FL, USA, 24–28 February 1991; pp. 29–30.

- E, H.-H.; Zhang, W.-J.; Xiao, S.Q.; Cheng, R.; Hu, Y.X.; Zhou, X.S.; Niu, P.Q. Survey of entity relationship extraction based on deep learning. Ruan Jian Xue Bao J. Softw. 2019, 30, 1793–1818.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the COLING 2014, the 25th International Conference on Computational Linguistics: Technical Papers 2014, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344.

- Grossberg, S. Recurrent neural networks. Scholarpedia 2013, 8, 1888.

- Huang, Z.; Xu, W.; Yu, K. Bidirectional LSTM-CRF models for sequence tagging. arXiv 2015, arXiv:1508.01991.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Gao, C.; Zhang, X.; Liu, H.; Jiang, J. A joint extraction model of entities and relations based on relation decomposition. Int. J. Mach. Learn. Cybern. 2022, 13, 1833–1845.

- Fuhai, L.; Rujiang, B.; Qingsong, Z. A Hybrid Semantic Information Extraction Method for Scientific Research Papers. Libr. Inf. Serv. 2013, 57, 112.

- Tuo, M.; Yang, W.; Wei, F.; Dai, Q. A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging. Electronics 2023, 12, 1013.

- Zheng, S.; Wang, F.; Bao, H.; Hao, Y.; Zhou, P.; Xu, B. Joint extraction of entities and relations based on a novel tagging scheme. arXiv 2017, arXiv:1706.05075.

- Fu, T.J.; Li, P.H.; Ma, W.Y. GraphRel: Modeling text as relational graphs for joint entity and relation extraction. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics 2019, Florence, Italy, 28 July–2 August 2019; pp. 1409–1418.

- Nayak, T.; Ng, H.T. Effective modeling of encoder-decoder architecture for joint entity and relation extraction. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 8528–8535.

- Yu, B.; Zhang, Z.; Shu, X.; Liu, T.; Wang, Y.; Wang, B.; Li, S. Joint extraction of entities and relations based on a novel decomposition strategy. arXiv 2019, arXiv:1909.04273.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A novel cascade binary tagging framework for relational triple extraction. arXiv 2019, arXiv:1909.03227.

- Katiyar, A.; Cardie, C. Investigating LSTMs for joint extraction of opinion entities and relations. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 2016, Berlin, Germany, 7–12 August 2016; pp. 919–929.

- Katiyar, A.; Cardie, C. Going out on a limb: Joint extraction of entity mentions and relations without dependency trees. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 2017, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 917–928.

- Zeng, X.; Zeng, D.; He, S.; Liu, K.; Zhao, J. Extracting relational facts by an end-to-end neural model with copy mechanism. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) 2018, Melbourne, Australia, 15–20 July 2018; pp. 506–514.

- Hamdi, A.; Pontes, E.L.; Sidere, N.; Coustaty, M.; Doucet, A. In-depth analysis of the impact of OCR errors on named entity recognition and linking. Nat. Lang. Eng. 2023, 29, 425–448.

- Cui, Y.; Che, W.; Liu, T.; Qin, B.; Yang, Z. Pre-training with whole word masking for Chinese BERT. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3504–3514.

- Wu, Z.; Jiang, D.; Wang, J.; Zhang, X.; Du, H.; Pan, L.; Hsieh, C.-Y.; Cao, D.; Hou, T. Knowledge-based BERT: A method to extract molecular features like computational chemists. Brief. Bioinform. 2022, 23, bbac131.

- Gu, J.; Peng, Y.; Lu, H.; Cao, S.; Cao, B. Fault diagnosis of spindle device in hoist using variational mode decomposition and statistical features. Shock Vib. 2020, 2020, 8849513.

- Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural architectures for named entity recognition. arXiv 2016, arXiv:1603.01360.

- Li, Y.; Li, P.; Yang, X.; Hsieh, C.-Y.; Zhang, S.; Wang, X.; Lu, R.; Liu, H.; Yao, X. Introducing block design in graph neural networks for molecular properties prediction. Chem. Eng. J. 2021, 414, 128817.

- Su, J.; Murtadha, A.; Pan, S.; Hou, J.; Sun, J.; Huang, W.; Wen, B.; Liu, Y. Global Pointer: Novel Efficient Span-based Approach for Named Entity Recognition. arXiv 2022, arXiv:2208.03054.

- Li, X.; Zhang, W.; Ding, Q.; Sun, J.-Q. Intelligent rotating machinery fault diagnosis based on deep learning using data augmentation. J. Intell. Manuf. 2020, 31, 433–452.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).