Part-of-Speech Tags Guide Low-Resource Machine Translation

Abstract

1. Introduction

- (1) We assign different weights to the different loss functions, which allows us to prioritize the tasks according to the task requirements.

- (2) We use a reconstructor approach to ensure consistency between text features and lexical information.

- (3) Our approach does not increase inference time when introducing multiterminated lexical information.
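Contribution (1) can be pictured as a weighted sum of per-task losses. The following is only a minimal sketch under that assumption: the class `LossWeights`, the function `combined_loss`, and the weight values are hypothetical names and numbers chosen for illustration, not the formulation or settings used in our experiments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossWeights:
    # Hypothetical weights for illustration; not values reported in the paper.
    translation: float = 1.0
    src_pos: float = 0.5
    tgt_pos: float = 0.5


def combined_loss(translation_loss: float,
                  src_pos_loss: float,
                  tgt_pos_loss: float,
                  weights: LossWeights = LossWeights()) -> float:
    """Weighted sum of the translation loss and the two POS-tagging losses."""
    return (weights.translation * translation_loss
            + weights.src_pos * src_pos_loss
            + weights.tgt_pos * tgt_pos_loss)


# Setting a weight to zero switches the corresponding auxiliary task off.
target_only = LossWeights(translation=1.0, src_pos=0.0, tgt_pos=0.5)
total = combined_loss(2.0, 4.0, 1.0, target_only)
```

Because the auxiliary losses only affect training, a scheme of this kind leaves the translation path used at inference unchanged.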

2. Related Work

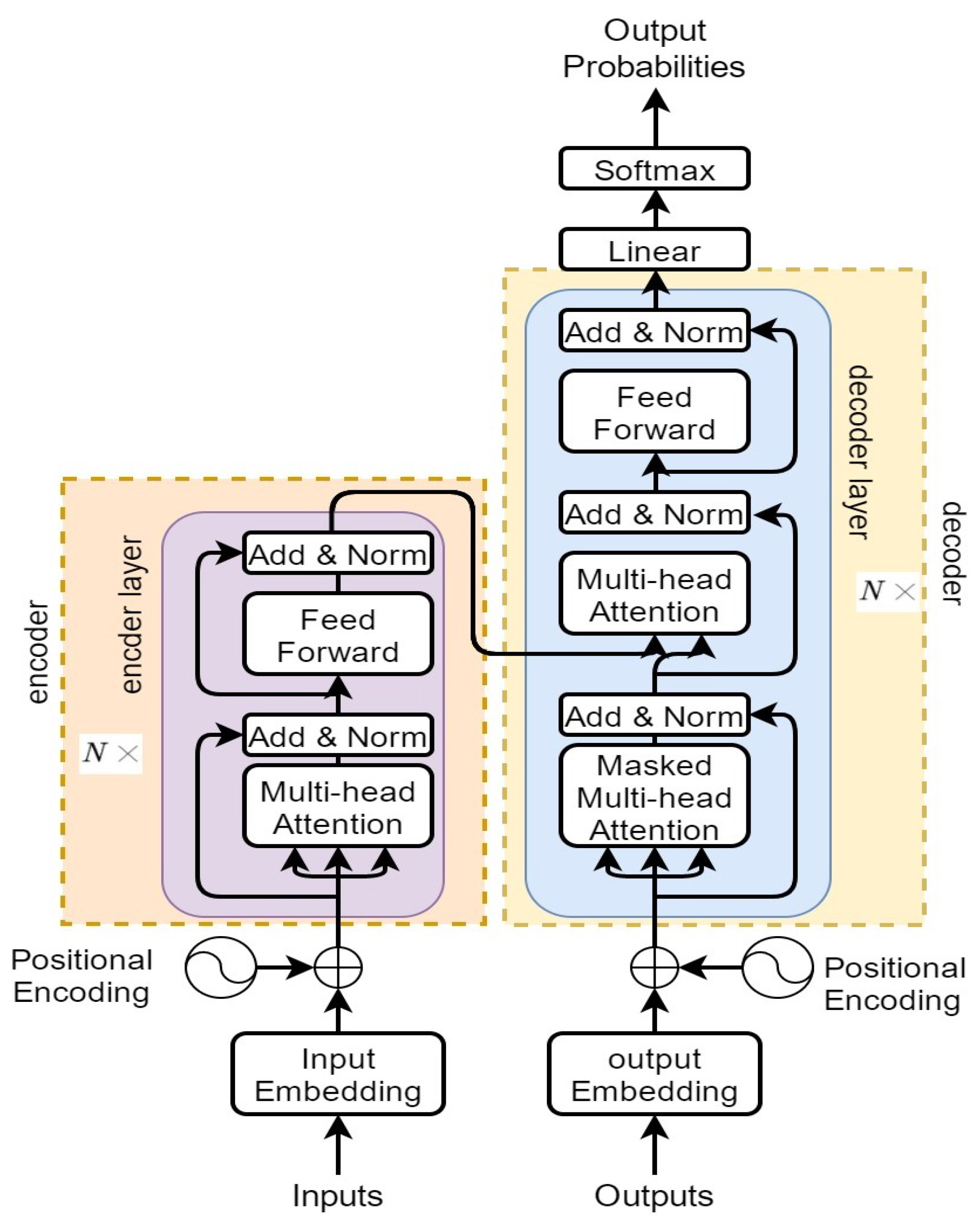

3. Background

3.1. Encoder

3.2. Decoder

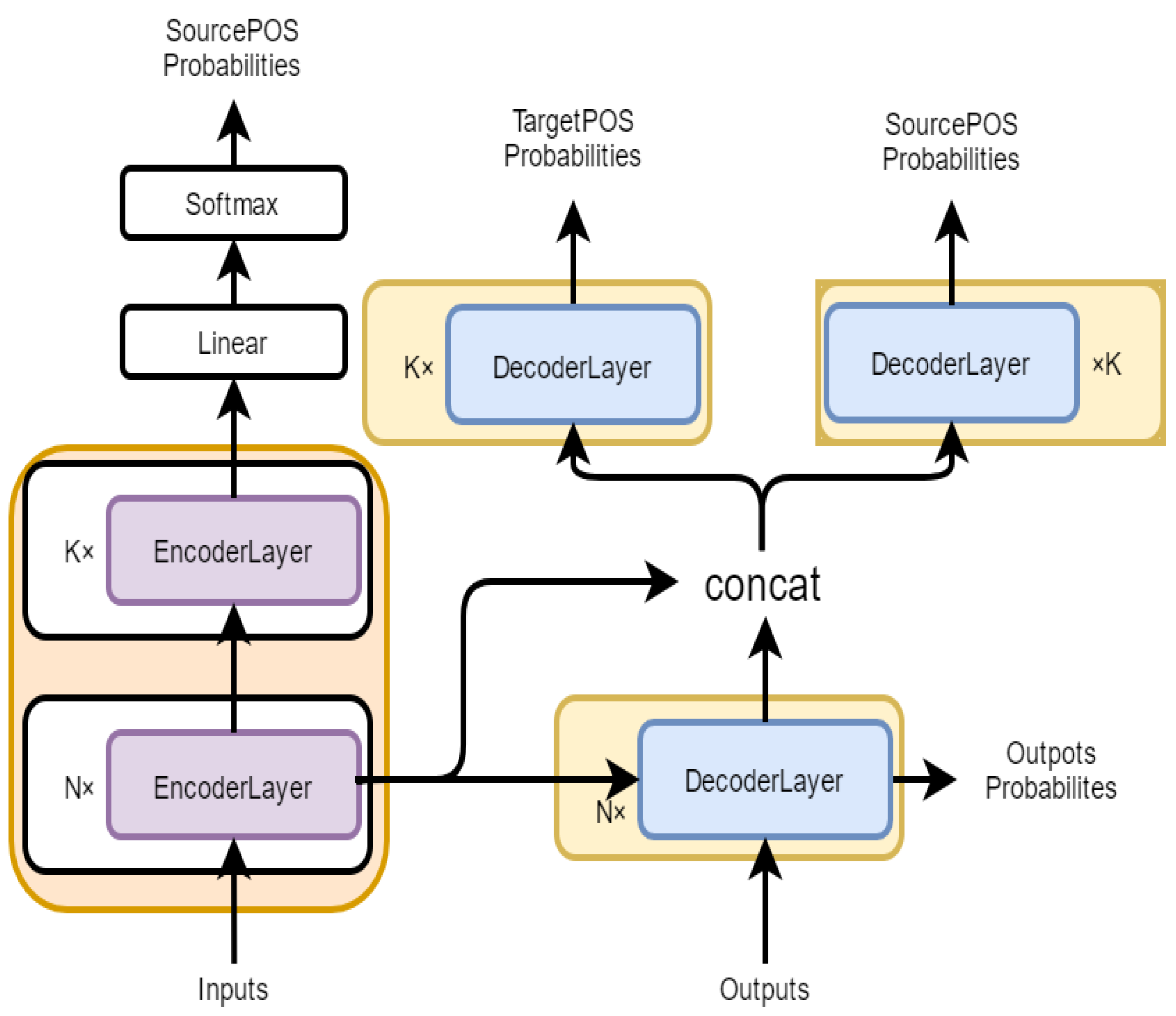

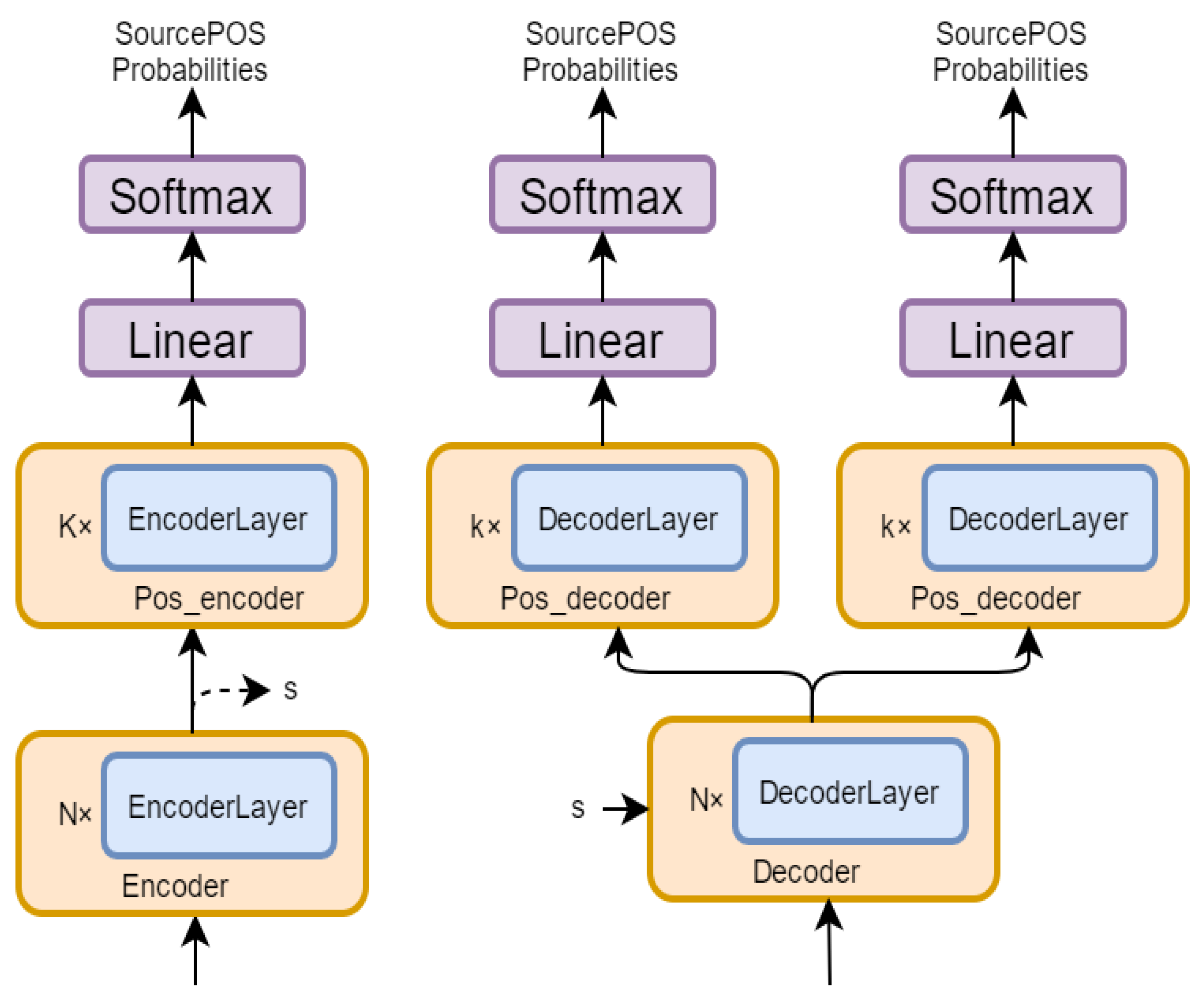

4. Methodology

5. Experiments

5.1. Dataset

5.2. Hyperparameters and Systems

- Multi-head attention uses 8 heads.

- The encoder and the decoder each have 6 layers.

- The word vector dimension and the model hidden state dimension are both 512.

- The dimension of the feed-forward network is 2048.

- The optimizer is Adam [48], with adam_beta1 = 0.9 and adam_beta2 = 0.998.

- The dropout [49] rate is 0.1.

- The warm-up [50] period is 4000 steps.

- The learning rate is 0.0007.

- Label smoothing (label_smoothing) is 0.1.

- base: The baseline system, a Transformer with the base parameter settings.

- Sennrich [8]: The experiments of Sennrich [8] used an RNN machine translation model, so we reproduce the method on the Transformer following the description in Sennrich’s paper and refer to this system as Sennrich. The approach enriches the encoder’s feature information by concatenating POS information with the word vector information.

- joint: Following the previous experimental results and Sennrich’s method, we set both the text’s word vector and the POS vector to 512 dimensions. The two are concatenated into a 1024-dimensional feature, which a linear layer then projects to a 512-dimensional feature (system joint).

- srcPOS: This system uses only the source-side POS loss, which is one part of the loss function in our proposed model.

- tarPOS: This system uses only the target-side POS loss.

- joint + tarPOS: A fusion of the joint system (the modified Sennrich method) and the tarPOS method.

- srcPOS + tarPOS: A fusion of the srcPOS and tarPOS methods.
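The feature fusion in the joint system (512-dimensional word vector plus 512-dimensional POS vector, concatenated to 1024 dimensions and projected back to 512) can be sketched in plain Python. This is an illustrative sketch, not our training code: the helper names `make_linear` and `fuse`, the random initialization, and the placeholder embeddings are all assumptions.

```python
import random


def make_linear(in_dim: int, out_dim: int, seed: int = 0):
    """Return (weight, bias) for a randomly initialised linear layer."""
    rng = random.Random(seed)
    scale = in_dim ** -0.5
    weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)]
              for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def fuse(word_vec, pos_vec, weight, bias):
    """Concatenate word and POS embeddings, then project back to model size."""
    joint = list(word_vec) + list(pos_vec)  # e.g. 512 + 512 = 1024 values
    if len(joint) != len(weight[0]):
        raise ValueError("concatenated size does not match the linear layer")
    return [sum(w * x for w, x in zip(row, joint)) + b
            for row, b in zip(weight, bias)]


D_MODEL = 512
weight, bias = make_linear(2 * D_MODEL, D_MODEL)
word_vec = [0.1] * D_MODEL  # placeholder word embedding (assumption)
pos_vec = [0.2] * D_MODEL   # placeholder POS-tag embedding (assumption)
fused = fuse(word_vec, pos_vec, weight, bias)
print(len(fused))  # 512
```

Projecting back to 512 dimensions keeps the rest of the Transformer encoder unchanged, which is the practical reason for adding the linear layer after concatenation.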

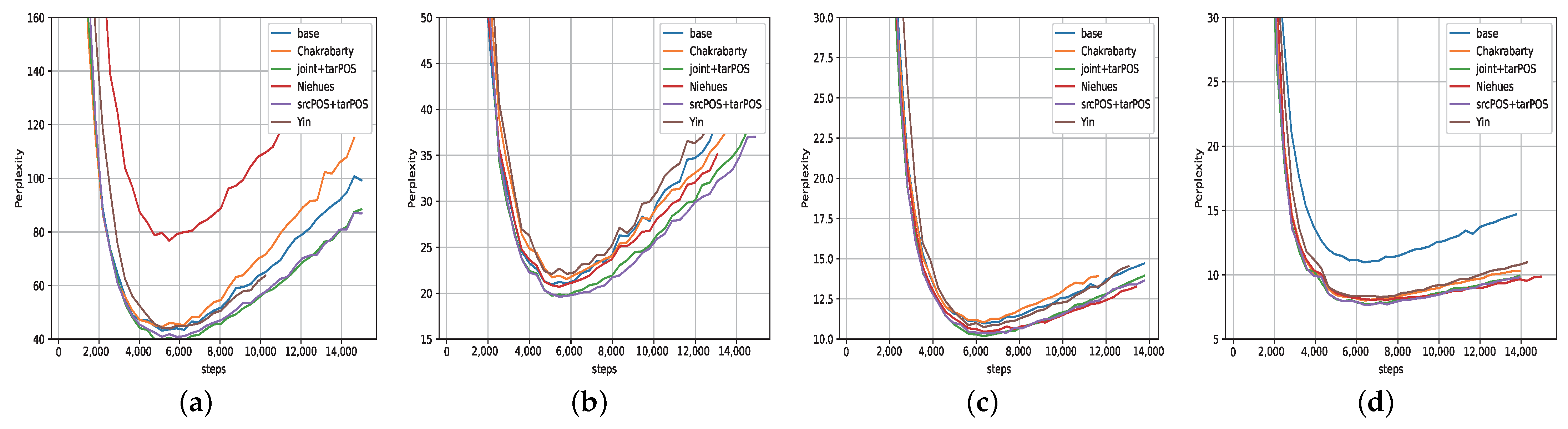
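For reference, the hyperparameters listed in this section can be collected into a single configuration. The sketch below uses illustrative key names, and the learning-rate function assumes linear warm-up followed by inverse-square-root decay, a common choice for Transformer training; the section states only the warm-up length and the learning rate, so the schedule shape is an assumption.

```python
import math

# Values are taken from the hyperparameter list; key names are illustrative.
CONFIG = {
    "attention_heads": 8,
    "encoder_layers": 6,
    "decoder_layers": 6,
    "embed_dim": 512,
    "ffn_dim": 2048,
    "adam_betas": (0.9, 0.998),
    "dropout": 0.1,
    "warmup_steps": 4000,
    "lr": 0.0007,
    "label_smoothing": 0.1,
}


def learning_rate(step: int,
                  peak_lr: float = CONFIG["lr"],
                  warmup: int = CONFIG["warmup_steps"]) -> float:
    """Linear warm-up to peak_lr, then inverse-square-root decay (assumed shape)."""
    if step < 1:
        raise ValueError("step must be >= 1")
    if step <= warmup:
        return peak_lr * step / warmup
    return peak_lr * math.sqrt(warmup / step)


# Per-head dimension implied by the configuration: 512 / 8 = 64.
head_dim = CONFIG["embed_dim"] // CONFIG["attention_heads"]
```

Under this assumed schedule the rate reaches 0.0007 at step 4000 and has halved again by step 16,000.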

5.3. Low-Resource Translation Tasks

5.4. Rich-Resource Translation Tasks

5.5. Discussions

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| NMT | Neural Machine Translation |
| POS | Part of Speech |
| CCMT | China Conference on Machine Translation |
| IWSLT | The International Conference on Spoken Language Translation |
| WMT | Workshop on Machine Translation |

References

1. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems-Volume 2, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.
2. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.
3. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.
4. Wu, F.; Fan, A.; Baevski, A.; Dauphin, Y.; Auli, M. Pay Less Attention with Lightweight and Dynamic Convolutions. arXiv 2019, arXiv:1901.10430.
5. Ranathunga, S.; Lee, E.S.A.; Prifti Skenduli, M.; Shekhar, R.; Alam, M.; Kaur, R. Neural Machine Translation for Low-resource Languages: A Survey. ACM Comput. Surv. 2023, 55, 229.
6. Eriguchi, A.; Hashimoto, K.; Tsuruoka, Y. Tree-to-Sequence Attentional Neural Machine Translation. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 823–833.
7. Shi, X.; Padhi, I.; Knight, K. Does string-based neural MT learn source syntax? In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 1526–1534.
8. Sennrich, R.; Haddow, B. Linguistic Input Features Improve Neural Machine Translation. In Proceedings of the First Conference on Machine Translation: Volume 1, Research Papers, Berlin, Germany, 7–12 August 2016; pp. 83–91.
9. Chen, H.; Huang, S.; Chiang, D.; Chen, J. Improved Neural Machine Translation with a Syntax-Aware Encoder and Decoder. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 1936–1945.
10. Bastings, J.; Titov, I.; Aziz, W.; Marcheggiani, D.; Sima’an, K. Graph Convolutional Encoders for Syntax-aware Neural Machine Translation. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1957–1967.
11. Hashimoto, K.; Tsuruoka, Y. Neural Machine Translation with Source-Side Latent Graph Parsing. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 125–135.
12. Li, J.; Xiong, D.; Tu, Z.; Zhu, M.; Zhang, M.; Zhou, G. Modeling Source Syntax for Neural Machine Translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Vancouver, BC, Canada, 30 July–4 August 2017; pp. 688–697.
13. Wu, S.; Zhang, D.; Zhang, Z.; Yang, N.; Li, M.; Zhou, M. Dependency-to-dependency neural machine translation. IEEE/ACM Trans. Audio Speech Lang. Process. 2018, 26, 2132–2141.
14. Zhang, M.; Li, Z.; Fu, G.; Zhang, M. Syntax-Enhanced Neural Machine Translation with Syntax-Aware Word Representations. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 1151–1161.
15. Bugliarello, E.; Okazaki, N. Enhancing Machine Translation with Dependency-Aware Self-Attention. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1618–1627.
16. Chakrabarty, A.; Dabre, R.; Ding, C.; Utiyama, M.; Sumita, E. Improving Low-Resource NMT through Relevance Based Linguistic Features Incorporation. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 8–13 December 2020; pp. 4263–4274.
17. Wu, G.; Tang, G.; Wang, Z.; Zhang, Z.; Wang, Z. An attention-based BiLSTM-CRF model for Chinese clinic named entity recognition. IEEE Access 2019, 7, 113942–113949.
18. Labeau, M.; Löser, K.; Allauzen, A. Non-lexical neural architecture for fine-grained POS tagging. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 232–237.
19. Rei, M.; Crichton, G.K.; Pyysalo, S. Attending to characters in neural sequence labeling models. arXiv 2016, arXiv:1611.04361.
20. Ren, S.; Zhou, L.; Liu, S.; Wei, F.; Zhou, M.; Ma, S. Semface: Pre-training encoder and decoder with a semantic interface for neural machine translation. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online, 1–6 August 2021; pp. 4518–4527.
21. Caglayan, O.; Kuyu, M.; Amac, M.S.; Madhyastha, P.S.; Erdem, E.; Erdem, A.; Specia, L. Cross-lingual Visual Pre-training for Multimodal Machine Translation. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, Online, 19–23 April 2021; pp. 1317–1324.
22. Niehues, J.; Cho, E. Exploiting Linguistic Resources for Neural Machine Translation Using Multi-task Learning. In Proceedings of the Second Conference on Machine Translation, Copenhagen, Denmark, 7–8 September 2017; pp. 80–89.
23. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI Blog 2019, 1, 9.
24. Yin, Y.; Su, J.; Wen, H.; Zeng, J.; Liu, Y.; Chen, Y. POS tag-enhanced coarse-to-fine attention for neural machine translation. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2019, 18, 1–14.
25. Wang, Y.; Zhai, C.; Hassan, H. Multi-task Learning for Multilingual Neural Machine Translation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 1022–1034.
26. Zhou, J.; Zhang, Z.; Zhao, H.; Zhang, S. LIMIT-BERT: Linguistics Informed Multi-Task BERT. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2020, Online, 16–20 November 2020; pp. 4450–4461.
27. Mao, Z.; Chu, C.; Kurohashi, S. Linguistically Driven Multi-Task Pre-Training for Low-Resource Neural Machine Translation. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022, 21, 1–29.
28. Burlot, F.; Garcia-Martinez, M.; Barrault, L.; Bougares, F.; Yvon, F. Word representations in factored neural machine translation. In Proceedings of the Conference on Machine Translation, Copenhagen, Denmark, 7–11 September 2017; Volume 1, pp. 43–55.
29. Liu, Q.; Kusner, M.; Blunsom, P. Counterfactual data augmentation for neural machine translation. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 187–197.
30. Chen, G.; Chen, Y.; Wang, Y.; Li, V.O. Lexical-constraint-aware neural machine translation via data augmentation. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Yokohama, Japan, 11–17 July 2021; pp. 3587–3593.
31. Edunov, S.; Ott, M.; Auli, M.; Grangier, D. Understanding Back-Translation at Scale. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 489–500.
32. Tu, Z.; Liu, Y.; Shang, L.; Liu, X.; Li, H. Neural machine translation with reconstruction. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31, pp. 3097–3103.
33. Gong, L.; Li, Y.; Guo, J.; Yu, Z.; Gao, S. Enhancing low-resource neural machine translation with syntax-graph guided self-attention. Knowl.-Based Syst. 2022, 246, 108615.
34. Waldendorf, J.; Birch, A.; Haddow, B.; Barone, A.V.M. Improving translation of out of vocabulary words using bilingual lexicon induction in low-resource machine translation. In Proceedings of the 15th biennial conference of the Association for Machine Translation in the Americas (Volume 1: Research Track), Orlando, FL, USA, 12–16 September 2022; pp. 144–156.
35. Sethi, N.; Dev, A.; Bansal, P.; Sharma, D.K.; Gupta, D. Hybridization Based Machine Translations for Low-Resource Language with Language Divergence. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2022.
36. Hlaing, Z.Z.; Thu, Y.K.; Supnithi, T.; Netisopakul, P. Improving neural machine translation with POS-tag features for low-resource language pairs. Heliyon 2022, 8, e10375.
37. Maimaiti, M.; Liu, Y.; Luan, H.; Pan, Z.; Sun, M. Improving Data Augmentation for Low-Resource NMT Guided by POS-Tagging and Paraphrase Embedding. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2021, 20, 1–21.
38. Zheng, W.; Zhou, Y.; Liu, S.; Tian, J.; Yang, B.; Yin, L. A Deep Fusion Matching Network Semantic Reasoning Model. Appl. Sci. 2022, 12, 3416.
39. Zheng, W.; Liu, X.; Yin, L. Sentence Representation Method Based on Multi-Layer Semantic Network. Appl. Sci. 2021, 11, 1316.
40. Shi, X.; Yu, Z. Adding Visual Information to Improve Multimodal Machine Translation for Low-Resource Language. Math. Probl. Eng. 2022, 2022, 5483535. Available online: https://www.hindawi.com/journals/mpe/2022/5483535/ (accessed on 4 May 2022).
41. Xu, M.; Hong, Y. Sub-word alignment is still useful: A vest-pocket method for enhancing low-resource machine translation. arXiv 2022, arXiv:2205.04067.
42. Burchell, L.; Birch, A.; Heafield, K. Exploring diversity in back translation for low-resource machine translation. arXiv 2022, arXiv:2206.00564.
43. Oncevay, A.; Rojas, K.D.R.; Sanchez, L.K.C.; Zariquiey, R. Revisiting Syllables in Language Modelling and their Application on Low-Resource Machine Translation. arXiv 2022, arXiv:2210.02509.
44. Atrio, À.R.; Popescu-Belis, A. On the interaction of regularization factors in low-resource neural machine translation. In Proceedings of the 23rd Annual Conference of the European Association for Machine Translation, Ghent, Belgium, 1–3 June 2022.
45. Li, Z.; Sun, M. Punctuation as implicit annotations for Chinese word segmentation. Comput. Linguist. 2009, 35, 505–512.
46. Sennrich, R.; Haddow, B.; Birch, A. Neural Machine Translation of Rare Words with Subword Units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 1715–1725.
47. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.
48. Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.
49. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
50. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
51. Freitag, M.; Al-Onaizan, Y. Beam Search Strategies for Neural Machine Translation. In Proceedings of the First Workshop on Neural Machine Translation, Vancouver, BC, Canada, 4 August 2017; pp. 56–60.

| Language Pair | Source | Train Data Size | Valid Data Size | Test Data Size |
|---|---|---|---|---|
| zh-uy | CCMT2019 | 170,054 | 1,000 | 1,000 |
| zh-en | IWSLT2017 | 231,230 | 879 | 1,000 |
| en-ru | WMT2014 | 1,558,847 | 3,000 | 3,000 |
| de-en | WMT2016 | 4,500,966 | 2,169 | 2,999 |

| System | zh2uy | uy2zh | zh2en | en2zh |
|---|---|---|---|---|
| base [3] | 22.71 | 27.36 | 21.25 | 19.33 |
| Sennrich [8] | 22.87 | 26.92 | 21.26 | 19.23 |
| Niehues [22] | 23.34 | 27.86 | 21.70 | 19.73 |
| Yin [24] | 23.12 | 27.75 | 21.62 | 19.60 |
| Chakrabarty [16] | 23.34 | 26.96 | 21.67 | 19.45 |
| joint | 23.43 | 28.14 | 21.82 | 19.94 |
| srcPOS | 23.36 | 27.72 | 21.86 | 19.79 |
| tarPOS | 23.47 | 28.20 | 21.86 | 19.62 |
| joint + tarPOS | 23.70 | 28.39 | 23.00 | 20.26 |
| srcPOS + tarPOS | 23.88 | 28.37 | 22.27 | 20.46 |

| System | en2ru | ru2en | de2en |
|---|---|---|---|
| base [3] | 29.65 | 30.17 | 35.47 |
| Sennrich [8] | 28.87 | 28.90 | 35.55 |
| Niehues [22] | 30.10 | - | 35.86 |
| Yin [24] | 29.01 | 28.95 | 35.58 |
| Chakrabarty [16] | 28.73 | 28.70 | 35.77 |
| joint | 22.68 | - | 35.53 |
| srcPOS | 29.86 | 29.70 | 35.45 |
| tarPOS | 29.78 | 29.44 | 35.87 |
| srcPOS + tarPOS | 30.21 | 30.82 | 35.66 |

| System | 0.2 M | 0.5 M | 1 M | 4.5 M |
|---|---|---|---|---|
| base | 18.07 | 21.71 | 25.10 | 35.47 |
| joint | 18.78 | 21.43 | 24.88 | 35.53 |
| tarPOS | 18.35 | 22.46 | 24.95 | 35.87 |
| srcPOS + tarPOS | 18.82 | 22.33 | 24.92 | 35.66 |
| line | 19.40 | 22.26 | 25.30 | 35.76 |
| line2 | 19.13 | 22.75 | 25.20 | 35.96 |

| System | en2zh |
|---|---|
| base | 19.33 |
| joint | 19.94 |
| srcPOS | 19.77 |
| joint + srcPOS | 19.47 |

| System | zh2en | en2zh |
|---|---|---|
| base | 21.25 | 19.33 |
| srcPOS | 21.86 | 19.79 |
| srcPOS1 | 21.57 | 19.43 |
| srcPOS2 | 21.73 | 19.44 |
| srcPOS3 | 21.51 | 20.23 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kadeer, Z.; Yi, N.; Wumaier, A. Part-of-Speech Tags Guide Low-Resource Machine Translation. Electronics 2023, 12, 3401. https://doi.org/10.3390/electronics12163401
