Adaptive Image Size Padding for Load Balancing in System-on-Chip Memory Hierarchy

Abstract

1. Introduction

- We propose an adaptive image size padding technique with two features. First, the approach takes both the caches and the main memory into account. Second, the system adaptively determines the image pad size at application-invoke time. To develop the adaptive pad sizing algorithm, we conduct a metric analysis and derive the padding condition.

- We describe the design, the performance evaluation, and the overhead analysis. The experiments indicate that the presented design can significantly enhance traffic load balancing and performance. The analysis also indicates that the memory footprint overhead is insignificant, especially for large images.

2. Related Work

2.1. DRAM Address Mapping

2.2. Address Generation

2.3. Image Applications and Deep Learning

2.4. Cache

2.5. Padding

3. Background

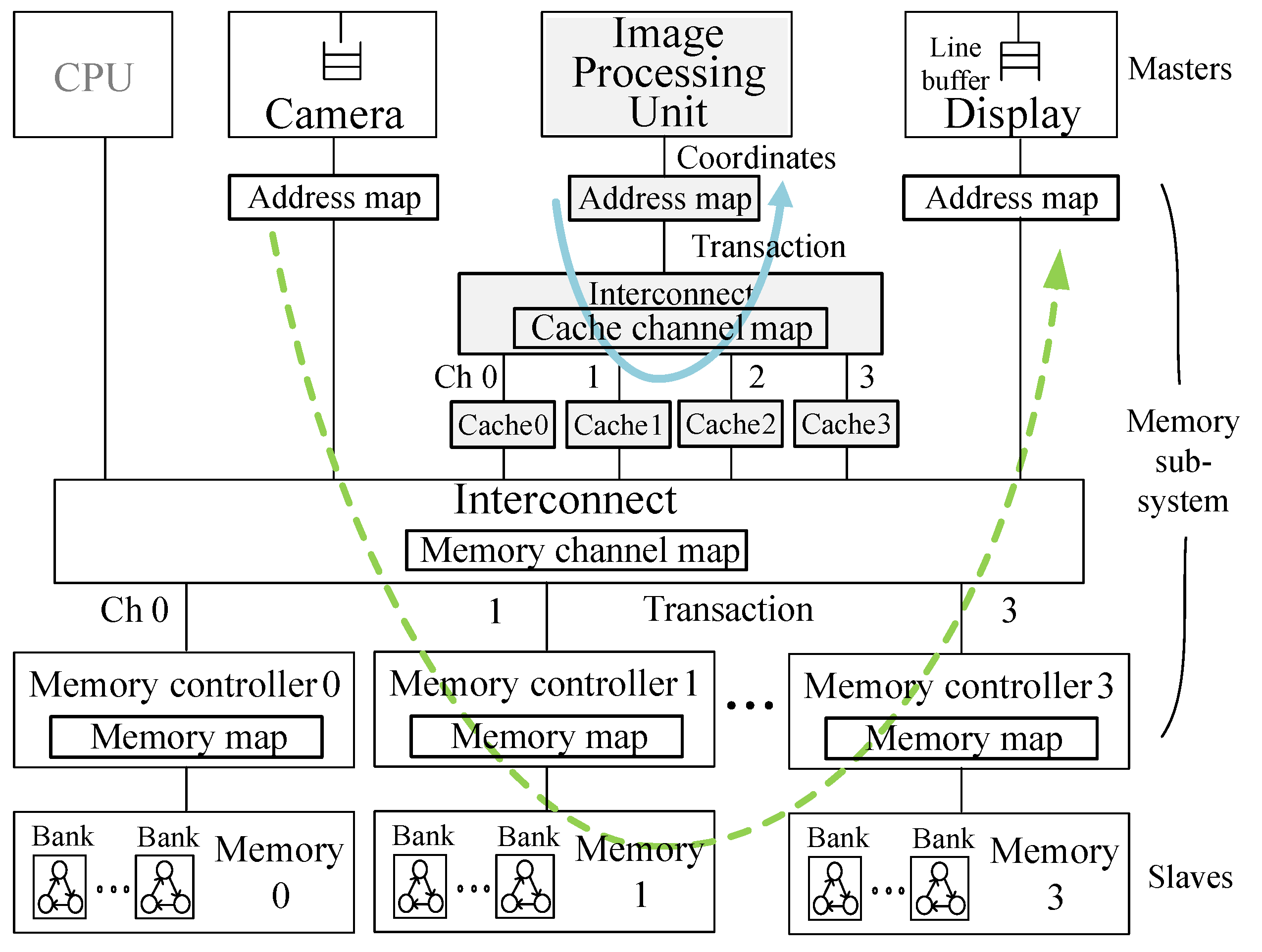

3.1. Transaction and Memory Attributes

3.2. Address Mapping

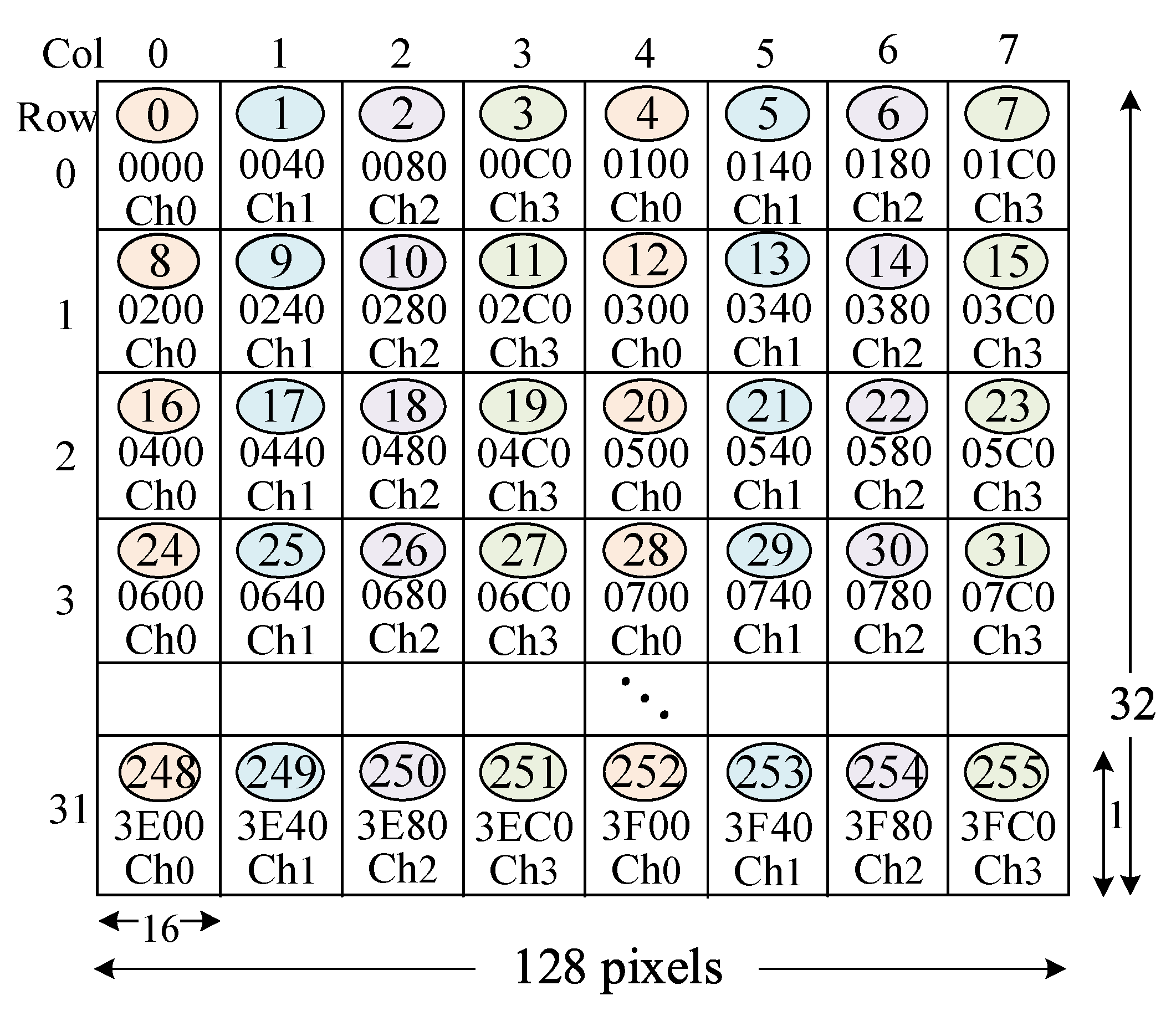

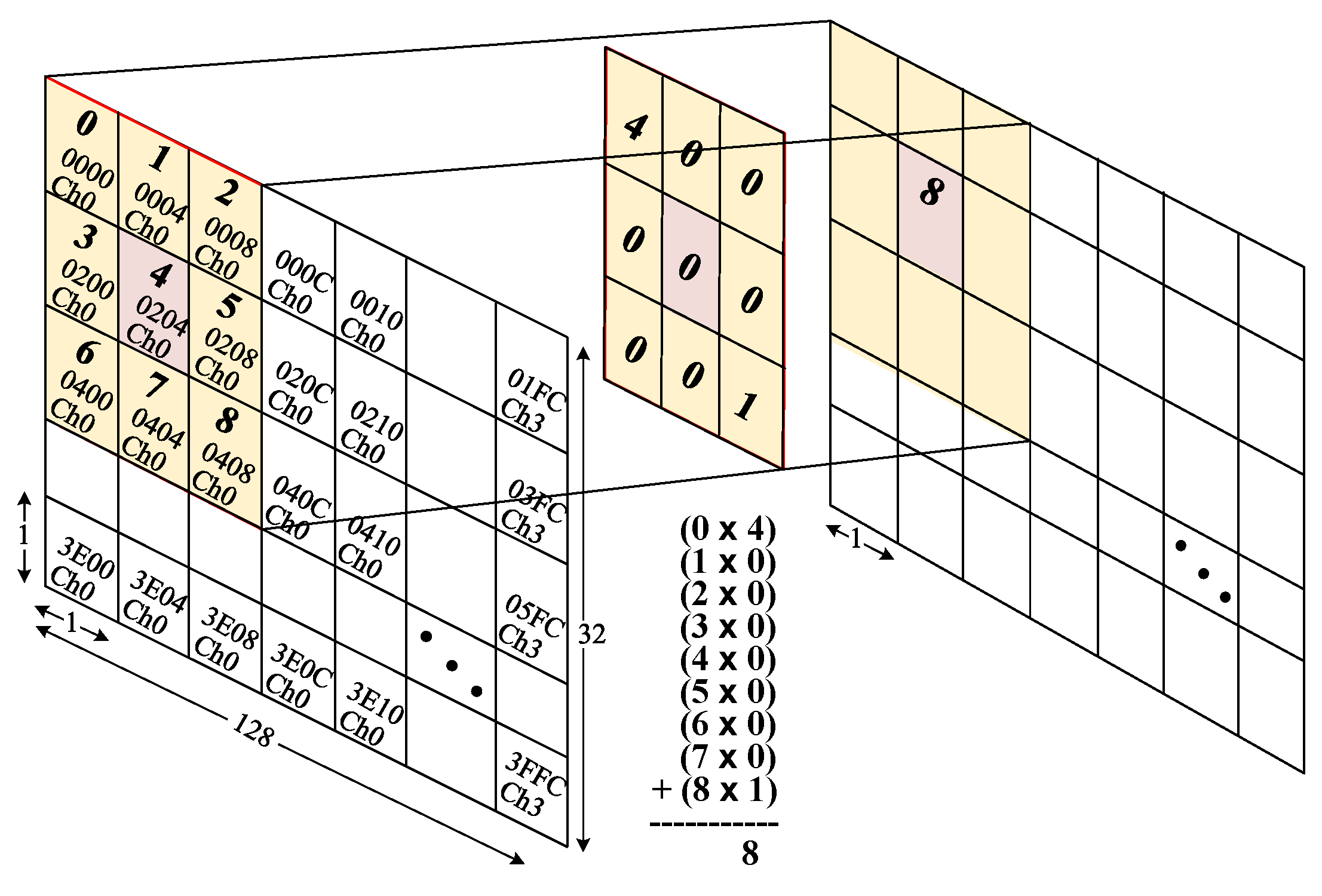

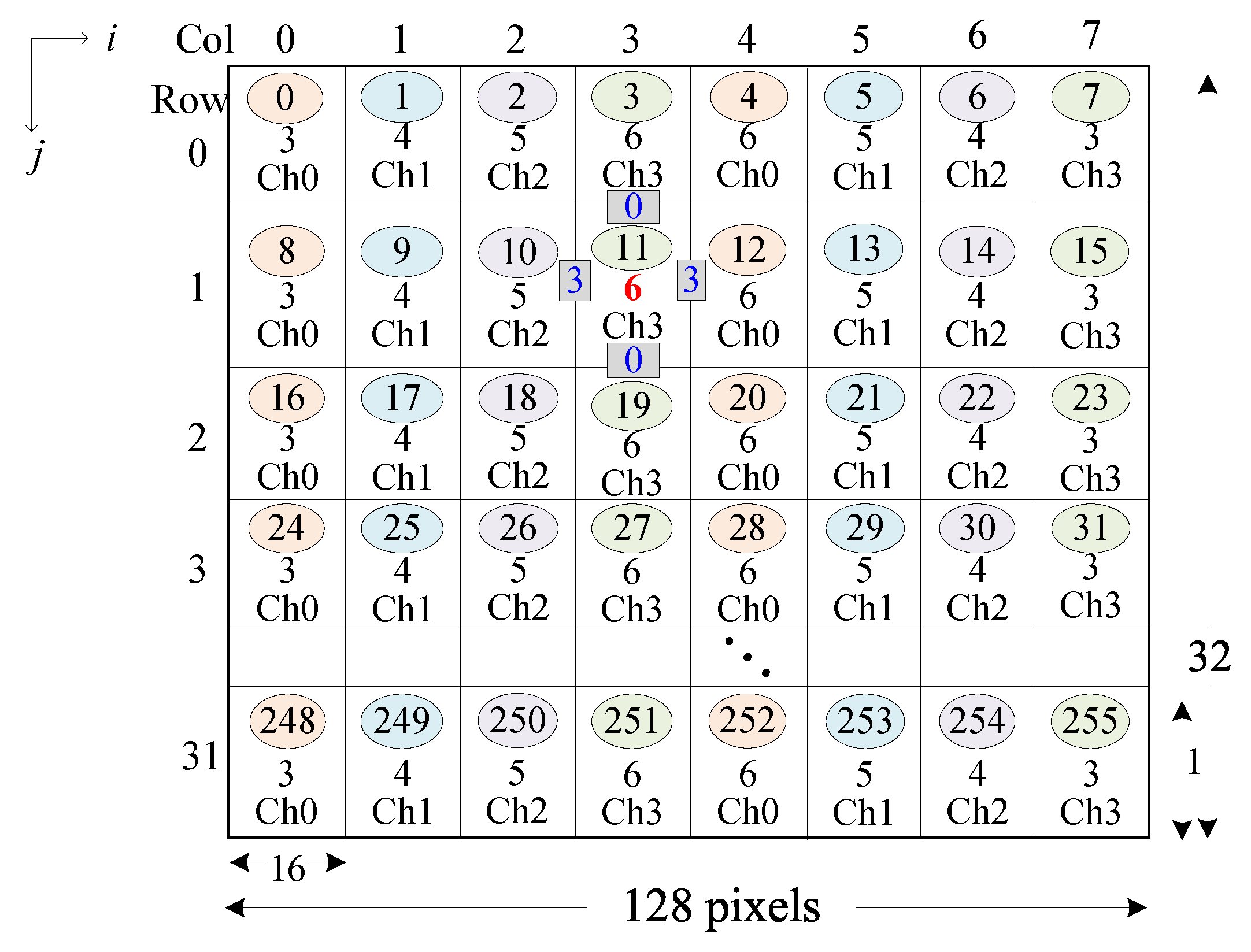

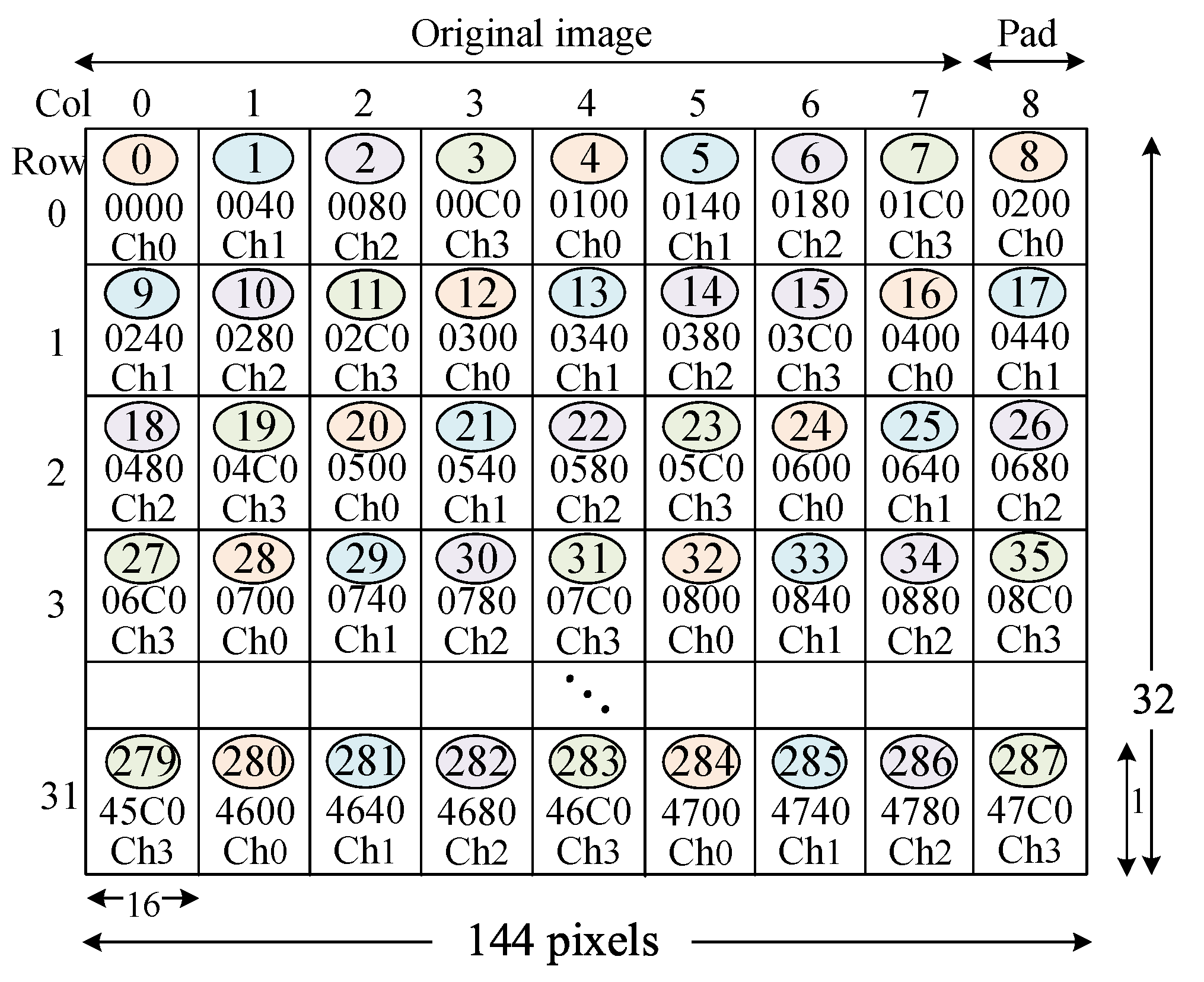

- An address map converts image pixel coordinates to transaction addresses. Figure 2 depicts the linear address map (LIAM) for an image of 128 × 32 pixels. In Figure 2, the transaction addresses increase sequentially in the horizontal direction. This conventional method is widely used in practice because of its simplicity. The number in each circle is the transaction number, which indicates the order of the addresses. A single transaction accesses 64 bytes of data, or 16 RGB pixels. Suppose a master generates transaction ➈ to access the pixel at coordinate (16, 1). Then the transaction address is 240 in hexadecimal (0x240).

- A cache map converts an address to a tag, an index, a channel, and an offset number. Figure 3a depicts an example in which the cache line size is 64 bytes. The address 240 for transaction ➈ is mapped to cache channel 1, denoted by Ch1.

- A memory map converts the transaction address to a DRAM location (a row, a bank, a channel, and a column number). Figure 3b depicts an example. The address 240 for transaction ➈ is mapped to DRAM channel 1.
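The three maps above can be traced end to end for the running example. The following is a minimal sketch, assuming the example values from the text (a 128 × 32 image, 4 bytes per pixel, 64-byte transactions, 4 channels) and assuming that the channel field sits directly above the 64-byte offset field. That assumption is consistent with address 240 mapping to channel 1, but the exact bit layout of Figure 3 is not reproduced here. The function names are hypothetical.

```python
IMG_H = 128       # image width in pixels (example value from the text)
BYTE_PIXEL = 4    # bytes per pixel
TRAN_SIZE = 64    # bytes per transaction (equal to the cache line size here)
NUM_CH = 4        # number of cache or memory channels (example value)


def liam_address(x: int, y: int) -> int:
    """Linear address map: byte address of the pixel at coordinate (x, y)."""
    return y * IMG_H * BYTE_PIXEL + x * BYTE_PIXEL


def transaction_number(addr: int) -> int:
    """Index of the 64-byte transaction that contains the address."""
    return addr // TRAN_SIZE


def channel(addr: int) -> int:
    """Assumption: the channel bits sit directly above the offset bits."""
    return (addr // TRAN_SIZE) % NUM_CH


addr = liam_address(16, 1)
print(hex(addr), transaction_number(addr), channel(addr))  # 0x240 9 1
```

With these assumptions, pixel (16, 1) lands at address 0x240, which is transaction 9 on channel 1, matching the example in the text.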

If the outstanding transactions are ⓪, ➀, ➁, and ➂, then the targeted channels are Ch0, Ch1, Ch2, and Ch3. This means the outstanding transactions access the memory components in the desired interleaved and load-balanced way.

3.3. Motivational Use Cases

The outstanding transactions are ⓪, ➇, ⑯, and so on, and thus the targeted channels are Ch0, Ch0, Ch0, and so on. This means the outstanding transactions all access a single component and cause congestion, because a single component can serve only one transaction at a time. This traffic congestion incurs undesirable delay and degrades memory performance.
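The contrast between the balanced and the congested case can be reproduced with a few lines of arithmetic. This is a minimal sketch, assuming the running example (512-byte image rows, 64-byte transactions, 4 channels, 4 outstanding transactions) and assuming the channel is selected by the transaction number modulo the channel count. The helper name `channels` is hypothetical.

```python
NUM_CH = 4           # number of channels (example value)
TRANS_PER_ROW = 8    # 128 pixels x 4 bytes per pixel / 64 bytes per transaction
M = 4                # multiple outstanding count (example value)


def channels(transactions):
    """Assumption: channel = transaction number modulo the channel count."""
    return [t % NUM_CH for t in transactions]


# Horizontal (raster) access: consecutive transactions 0, 1, 2, 3.
horizontal = list(range(M))

# Vertical access: one transaction per image row, so 0, 8, 16, 24.
vertical = [row * TRANS_PER_ROW for row in range(M)]

print(channels(horizontal))  # [0, 1, 2, 3]
print(channels(vertical))    # [0, 0, 0, 0]
```

The vertical case collapses onto one channel because the row stride, counted in transactions, is a multiple of the channel count.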

4. Proposed Design

4.1. Overview

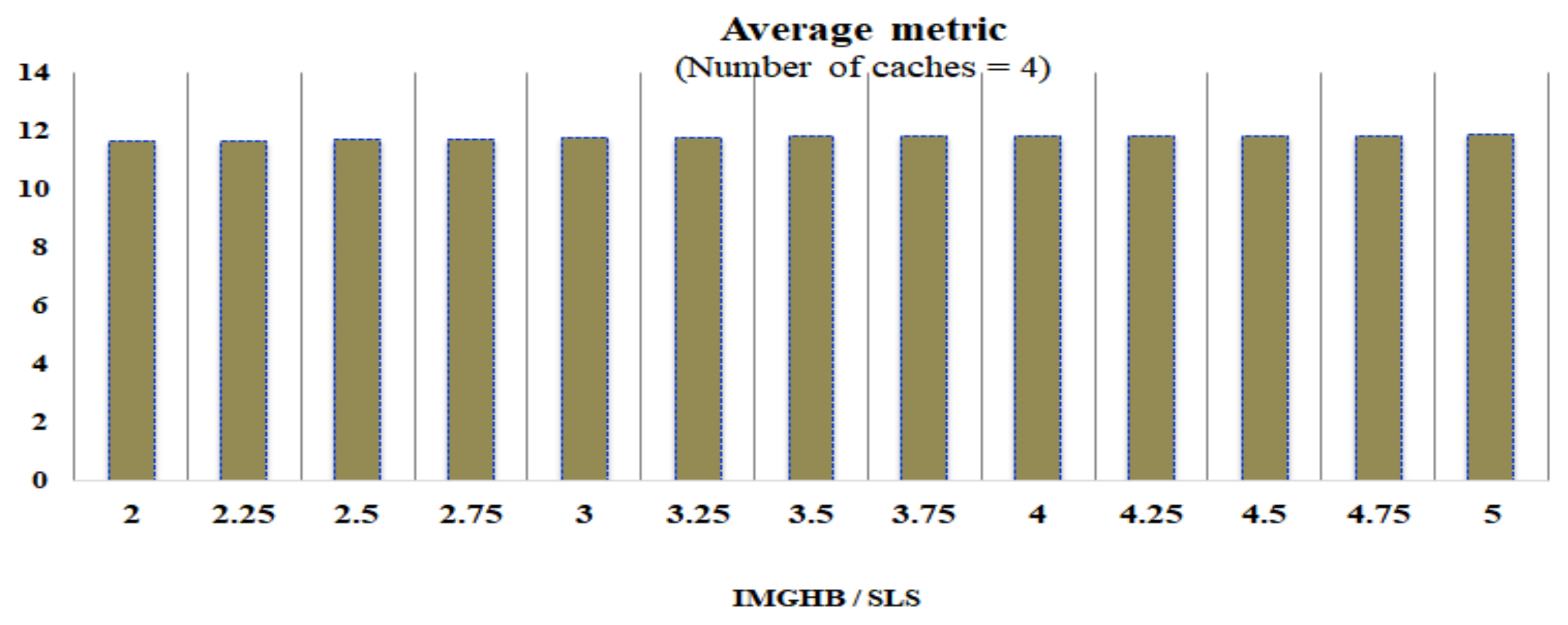

4.2. LIAM Metric Analysis

4.3. Padded Linear Address Map

- Image size padding is applied adaptively, taking both the caches and the main memory into account.

- The system adaptively determines the image pad size at application-invoke time.
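The effect of padding on a vertical access pattern can be illustrated with the same arithmetic as the linear address map. This is a minimal sketch, not the authors' implementation: it assumes a pad of 16 pixels (one 64-byte transaction) appended to each image row, and it assumes the channel is selected by the transaction number modulo the channel count. The function name and its parameters are hypothetical.

```python
def vertical_channels(img_h, pad_pixels, rows=4,
                      byte_pixel=4, tran_size=64, num_ch=4):
    """Channels targeted when reading column 0 of `rows` consecutive rows."""
    # Padding enlarges the row stride; the pixel data itself is unchanged.
    stride = (img_h + pad_pixels) * byte_pixel
    return [(y * stride // tran_size) % num_ch for y in range(rows)]


print(vertical_channels(128, 0))    # [0, 0, 0, 0]  unpadded: one channel
print(vertical_channels(128, 16))   # [0, 1, 2, 3]  padded: interleaved
```

With the pad, the row stride grows from 8 to 9 transactions, so consecutive rows no longer fall on the same channel.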

4.4. Adaptive Pad Sizing

4.5. System Configuration and Operation

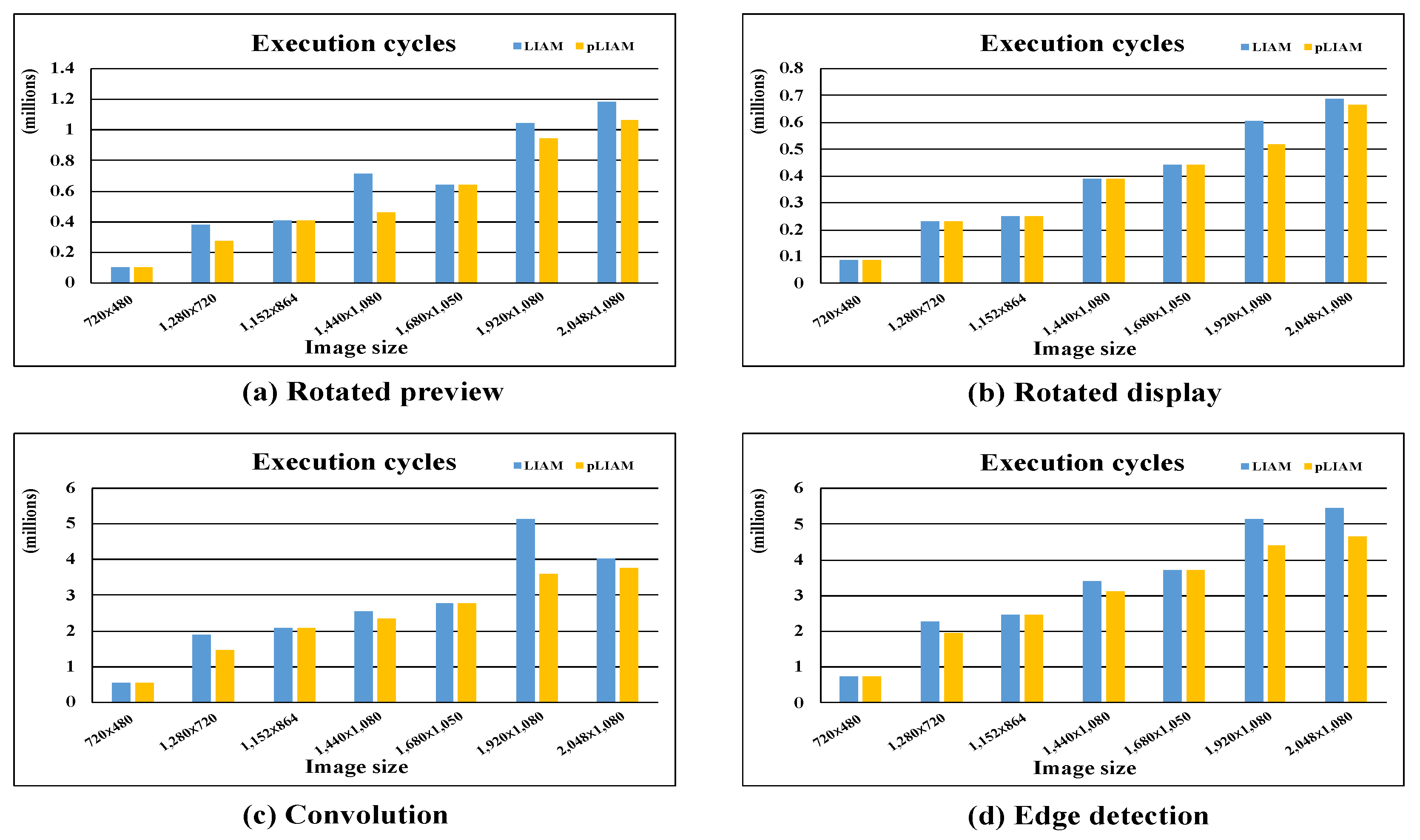

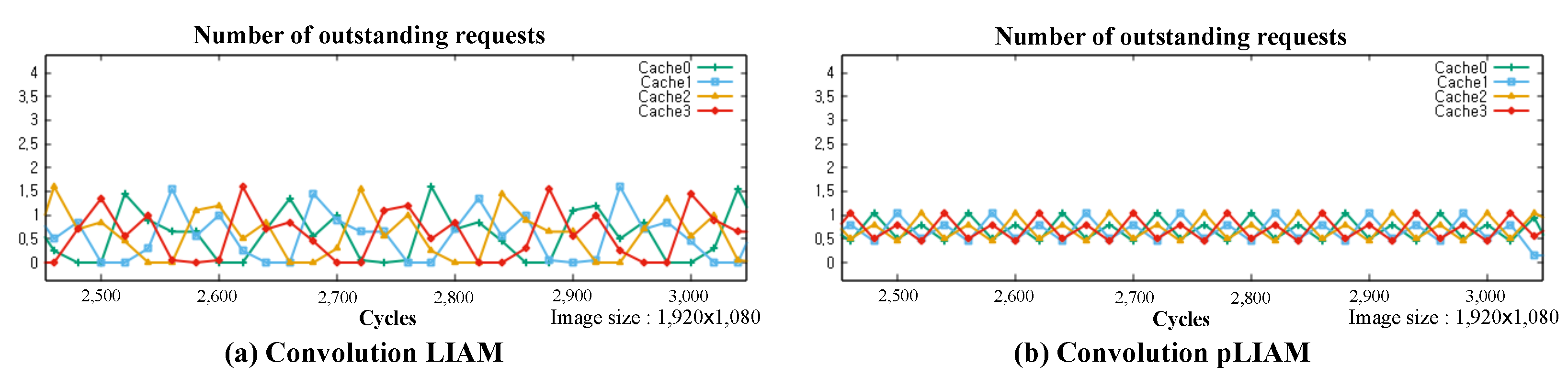

5. Experimental Results

6. Conclusions

- Summary

- Memory utilization and performance

- Overhead, limitation, and future work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameters | Description | Unit | Example |
|---|---|---|---|
| LineSize | Cache line size | bytes | 64 |
| TranSize | Transaction size | bytes | 64 |
| M | Multiple outstanding count | - | 4 |
| NumCacheCh | Number of cache channels | - | 4 |
| NumMemCh | Number of memory channels | - | 4 |
| SLS | Super-line size = LineSize × NumCacheCh or TranSize × NumMemCh | bytes | 256 |
| ImgH | Image horizontal size | pixels | 128 |
| ImgV | Image vertical size | pixels | 32 |
| BytePixel | Bytes per pixel | bytes | 4 (RGB) |
| ImgHB | Image horizontal size = ImgH × BytePixel | bytes | 512 |
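The derived rows of the parameter table (SLS and ImgHB) follow directly from the base parameters. The sketch below restates them as a small data class using the example column as defaults. The class and attribute names are hypothetical and only mirror the table entries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemParams:
    line_size: int = 64      # LineSize, bytes
    tran_size: int = 64      # TranSize, bytes
    num_cache_ch: int = 4    # NumCacheCh
    num_mem_ch: int = 4      # NumMemCh
    img_h: int = 128         # ImgH, pixels
    img_v: int = 32          # ImgV, pixels
    byte_pixel: int = 4      # BytePixel, bytes

    @property
    def sls_cache(self) -> int:
        """Super-line size seen by the caches: LineSize x NumCacheCh."""
        return self.line_size * self.num_cache_ch

    @property
    def sls_mem(self) -> int:
        """Super-line size seen by the memory: TranSize x NumMemCh."""
        return self.tran_size * self.num_mem_ch

    @property
    def img_hb(self) -> int:
        """Image horizontal size in bytes: ImgH x BytePixel."""
        return self.img_h * self.byte_pixel


p = MemParams()
print(p.sls_cache, p.sls_mem, p.img_hb)  # 256 256 512
```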

| Image Size (Pixels) | Equation (5) Value | Equation (5) Condition | Pad Size (Pixels) | Padded Image Size (Pixels) |
|---|---|---|---|---|
| 720 × 480 | 11.25 | Not met | 0 | 720 × 480 |
| 1280 × 720 | 20 | Met | 16 | 1296 × 720 |
| 1152 × 864 | 18 | Met | 16 | 1168 × 864 |
| 1440 × 1080 | 22.5 | Met | 16 | 1456 × 1080 |
| 1680 × 1050 | 26.25 | Not met | 0 | 1680 × 1050 |
| 1920 × 1080 | 30 | Met | 16 | 1936 × 1080 |
| 2048 × 1080 | 32 | Met | 16 | 2064 × 1080 |
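The numeric columns of this table can be cross-checked with simple arithmetic. The sketch below is an inference from the tabulated numbers, not a restatement of Equation (5): it assumes the listed value equals ImgHB / SLS with SLS = 256 bytes and 4 bytes per pixel, and that the pad is one 64-byte transaction (16 pixels) per row whenever the condition is met. The Met / Not met flag is taken from the table as an input rather than computed. All function names are hypothetical.

```python
SLS = 256         # super-line size in bytes (example value)
BYTE_PIXEL = 4    # bytes per pixel
TRAN_SIZE = 64    # bytes per transaction


def ratio(img_h: int) -> float:
    """Assumed tabulated value: image row size in bytes divided by SLS."""
    return img_h * BYTE_PIXEL / SLS


def padded_width(img_h: int, condition_met: bool) -> int:
    """Image width after padding one transaction per row when required."""
    pad = TRAN_SIZE // BYTE_PIXEL if condition_met else 0
    return img_h + pad


def overhead_percent(img_h: int, condition_met: bool) -> float:
    """Extra memory footprint caused by the pad, relative to the image."""
    return 100.0 * (padded_width(img_h, condition_met) - img_h) / img_h


# (width, condition flag) pairs copied from the table.
rows = [(720, False), (1280, True), (1152, True), (1440, True),
        (1680, False), (1920, True), (2048, True)]

for img_h, met in rows:
    print(img_h, ratio(img_h), padded_width(img_h, met),
          round(overhead_percent(img_h, met), 2))
```

Under these assumptions the footprint overhead shrinks as the image gets wider, for example 1.25% for a 1280-pixel row.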

| Components | Item | Configuration |
|---|---|---|
| Cache | Channels | Configurable |
| | Line size | 64 bytes |
| | Organization | 16-way set associative |
| | Mapping | Tag, Index, Channel, Offset |
| | Size | 512 lines |
| | Replacement | Least Recently Used (LRU) |
| Interconnect | Data width | 128 bits |
| | Arbitration | Round-robin |
| | Transaction size | 64 bytes |
| | Multiple outstanding | Max. 16 |
| Memory Controller | Mapping | Row, Bank, Col, Channel, Col |
| | Request queue | 16 entries |
| Memory (DRAM) | Model | DDR3-800 |
| | Timing | t-t-t = 5-5-5 |
| | Channels | Configurable |
| | Scheduling | Bank-hit first |
| | Banks | 4 |

| Workloads | Type | Component | Operation | Access | Pad Size |

|---|---|---|---|---|---|

| Camera preview | NC | Camera | Write | Raster scan | 0 |

| Display | Read | Raster scan | 0 | ||

| Image scaling × 1.5 | NC | Camera | Write | Raster scan | 0 |

| Scaler | Read, Write | Raster scan | 0 | ||

| Display | Read | Raster scan | 0 | ||

| Image blending | NC | Blender | Read, Read, Write | Raster scan | 0 |

| Rotated display | NC | Display | Read | Vertical | 0 or TranSize |

| Rotated preview | NC | Camera | Write | Vertical | 0 or TranSize |

| Display | Read | Raster scan | 0 or TranSize | ||

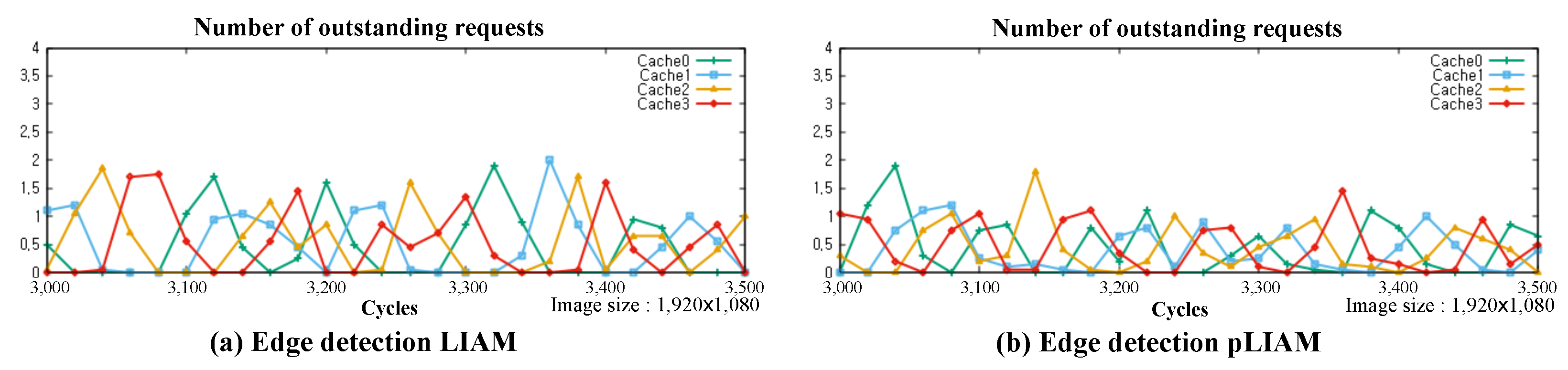

| Edge detection | C | Image processing unit | Read, Read, Write | Block | 0 or LineSize |

| Convolution | C | Image processing unit | Read, Read, Write | Block | 0 or LineSize |

| Workloads | LIAM | pLIAM |
|---|---|---|
| Rotated preview | Channel 0: 4.0 | Channel 0: 2.68 |
| | Channel 1: 4.0 | Channel 1: 2.62 |
| | Channel 2: 4.0 | Channel 2: 2.51 |
| | Channel 3: 4.0 | Channel 3: 2.61 |
| Rotated display | Channel 0: 3.04 | Channel 0: 1.38 |
| | Channel 1: 3.03 | Channel 1: 1.38 |
| | Channel 2: 3.03 | Channel 2: 1.38 |
| | Channel 3: 3.04 | Channel 3: 1.38 |
| Convolution | Cache 0: 0.53 | Cache 0: 0.68 |
| | Cache 1: 0.51 | Cache 1: 0.59 |
| | Cache 2: 0.49 | Cache 2: 0.61 |
| | Cache 3: 0.49 | Cache 3: 0.58 |
| Edge detection | Cache 0: 0.46 | Cache 0: 0.50 |
| | Cache 1: 0.43 | Cache 1: 0.44 |
| | Cache 2: 0.41 | Cache 2: 0.43 |
| | Cache 3: 0.42 | Cache 3: 0.43 |

| Workloads | LIAM | pLIAM | Improvement (%) |
|---|---|---|---|
| Rotated preview | 6.9 | 2.26 | 67.2 |
| Rotated display | 5.33 | 0.25 | 95.3 |
| Convolution | 0.59 | 0.36 | 39.1 |
| Edge detection | 0.51 | 0.47 | 8.8 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, S.-Y.; Hur, J.-Y. Adaptive Image Size Padding for Load Balancing in System-on-Chip Memory Hierarchy. Electronics 2023, 12, 3393. https://doi.org/10.3390/electronics12163393
