Abstract

Analyzing the occlusion relationships between objects in an image is a fundamental problem in computer vision. It requires both the accurate detection of object contours and the prediction of the occlusion orientation of each pixel on those contours. However, the severe imbalance between the edge pixels of an object and the background pixels complicates occlusion relationship reasoning. Although progress has been made using convolutional neural network (CNN)-based methods, the limited coupling between the detection of object occlusion contours and the prediction of occlusion orientation has not yet been effectively exploited in a full network architecture. In addition, both the prediction of occlusion orientations and the detection of occlusion edges depend on the accurate extraction of the local details of contours. We therefore propose a multitask coupling network (MTCN) together with several submodules that address these issues: a low-level feature context integration module (LFCI), a multipath receptive field block (MRFB), a bilateral complementary fusion module (BCFM), and an adaptive multitask loss function. Extensive experiments show that the proposed method surpasses state-of-the-art methods by 2.1% and 2.5% in Boundary-AP and by 3.5% and 2.8% in Orientation-AP on the PIOD and BSDS datasets, respectively.

1. Introduction

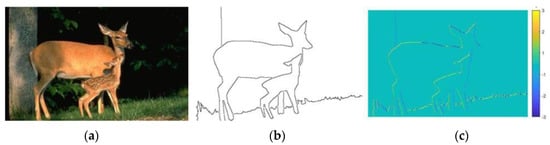

When the objects of a 3D scene are projected onto a 2D image, the occlusion boundaries of objects coincide with the depth discontinuities between different objects (or between an object and the background). Therefore, analysis of the occlusion relationship between objects from monocular images can reveal the relative depth differences between objects in the scene. Figure 1 shows an example from the BSDS300, in which two deer occlude the lawn; their boundaries are considered occlusion edges, while their shadows are not. Inferring occlusion relationships between different objects from a monocular 2D image has been widely applied in many fields, including visual tracking [1,2], mobile robotics [3,4], object detection [5,6,7,8], and segmentation [9,10,11].

Figure 1.

(a) The original image from the BSDS300 dataset; (b) the ground-truth occluded edge map; (c) the ground-truth occlusion orientation map.

A standard strategy employed in the early research on occlusion relationship inferences is to combine machine-learning-based techniques with several features, including convexity, triple points, geometric context, keypoint descriptor, and image features such as Histogram of Oriented Gradient (HOG), and spectral features [12,13,14,15,16,17]. However, these methods mainly use manually designed low-level visual clue features to achieve occlusion contour detection. In addition, owing to the difficulty of defining occlusion edges and occlusion clues, as well as limited training data, the detection performance is nonideal. Recently, with the development of deep learning, convolutional neural networks (CNNs) have been applied to occlusion relationship inferences and occlusion edge detection, and have significantly improved detection performance. The DOC [18] uses an innovative approach to express occlusion relationships, where a closed contour is used to represent an object and the depth discontinuity between different objects (or between objects and the background) is represented by the direction value of contour pixels. In this way, the occlusion relationship inference task is decomposed into occlusion edge detection and occlusion orientation prediction. In addition, two subnetworks are constructed for the two subtasks. The DOOBNet [19] uses an encoder-decoder network structure to obtain multi-scale, multilevel features, where two subnetworks share the features extracted by a backbone network to achieve occlusion orientation and occlusion edge prediction.

Although significant progress has been made in research on occlusion relationship inferences, two main problems have yet to be solved. First, the severe imbalance between edge and non-edge pixels in a sample can lead to the poor prediction accuracy of edges, resulting in coarse occlusion contours that require post-processing with non-maximum suppression (NMS). Second, the coupling relationship between low-level local features and high-level semantic features has not been fully explored and used, which can cause a large amount of noise in the predictions. Low-level local features are the key factors for extracting occlusion boundaries, whereas occlusion orientation prediction relies on both low-level local features and high-level occlusion clues.

Inspired by the DOC [18], DOOBNet [19], OFNet [20], and MT-ORL [21], we propose an innovative multitask coupling network (MTCN) that includes two paths: an occlusion edge extraction path and occlusion orientation prediction path. The proposed model enhances the clarity of occlusion contours by improving edge localization accuracy and suppressing trivial edge noise. In the occlusion edge extraction path, multi-supervision is used, in which each stage of a backbone network has a side output that is used for supervision. During model training, high-level semantic features guide the learning of low-level local features to obtain accurate local spatial information. Furthermore, in the orientation prediction path, we make use of the property that low-level local information represents the basis of high-level occlusion information in deep supervised networks; this allows low-level spatial information to serve as high-level semantic features to obtain high-level occlusion clues through back-propagation, thus improving the accuracy of occlusion orientation prediction. High-level semantic features from deep-side outputs preserve large receptive fields. In the proposed method, the coupling of low-level local spatial information and high-level semantic features is used to obtain multilevel feature maps from various levels, which improves the model’s ability to restore local contour-detail features and remove non-occluded pixels. Moreover, the adaptive context coupling module we designed distinguishes the importance of pixels at different positions, guiding the network toward clearer contours and less surrounding noise and, in turn, further enhancing the model’s ability to obtain clear contours.

In summary, the main contributions of this study are as follows:

- (1) An MTCN model that includes two paths, an occlusion edge extraction path and an occlusion orientation prediction path, is proposed;

- (2) A low-level feature context integration module (LFCI) is proposed that utilizes a self-attention mechanism to distinguish the importance of pixels at different positions and obtain local detail features of objects’ contours;

- (3) A multipath receptive field block (MRFB) is proposed to enhance the receptive field of the side output features of the backbone network;

- (4) A bilateral complementary fusion module (BCFM) is proposed to fuse the feature flows extracted at different scales in the network structure;

- (5) An adaptive multitask loss function is proposed to address the blurred contour detection caused by the severe imbalance between the edge and non-edge pixels in data samples.

2. Related Work

Occlusion relationship reasoning from a single image remains extremely challenging. Early work achieved success in simple domains, such as a block world [22] and line drawings [23]. The 2.1-D Sketch [24] uses a mid-level representation to express occlusion relationships. Teo et al. [3] embedded several different local cues (e.g., HOG and extremal edges) and semi-global grouping cues (e.g., Gestalt-like) into structured random forests (SRF) to detect occlusion relationships. Maire et al. [25] designed and embedded boundary relationships’ representations into the segmentation depth ordering inference.

Recently, deep learning has achieved great success in occlusion relationship reasoning. The DOC [18] determines the occlusion relationships using a binary edge detector and predicts object boundaries and occlusion orientation variables. It learns to use local and nonlocal clues to recover occlusion relationships. The DOOBNet [19] adopts an attention loss function to increase the loss contribution of false-positive and false-negative samples, alleviating the imbalance between edge and non-edge pixels. In addition, it uses an encoder-decoder structure with skip connections to obtain multi-scale and multilevel features. The OFNet [20] employs an encoder-decoder network structure with two side output paths, and it can share occlusion relationships and learn to use high-level semantic clues. Moreover, its multi-rate context learner (MCL) module can learn more clues about occlusion reasoning near the boundary, and its bilateral response fusion (BRF) module can accurately determine and distinguish foreground and background areas. The MT-ORL [21] uses the OPNet network to address the limited coupling between occlusion boundary extraction and occlusion orientation prediction. Furthermore, its orthogonal occlusion representation (OOR) can enhance occlusion relationship expression.

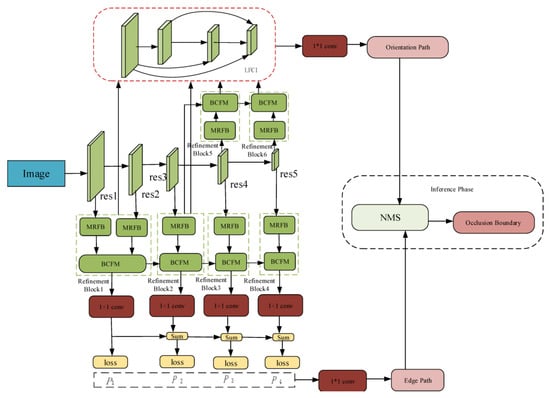

However, the aforementioned methods either use two independent architectures for the boundary extraction and orientation prediction tasks or excessively share semantic information in deep stages; thus, they do not fully use the coupling between local low-level information and high-level occlusion cues. In contrast, MTCN shares features only in the shallow stages of the decoder and adopts a multi-scale structure, as shown in Figure 2.

Figure 2.

The overall structure of the proposed network model.

3. MTCN Model

In this section, the overall structure of the proposed network model and details of each internal module are presented. To address the imbalance between edge and non-edge pixels, we improve the loss function by combining the cross entropy, dice loss, and feature unmixing loss functions to obtain an improved adaptive loss function.

3.1. Network Architecture

In the process of occlusion relationship reasoning, two important tasks are extracting complete and clear object contours and accurately predicting the orientation of occlusion edge pixels. These dense prediction tasks aim to recover pixel-level spatial details and understand high-level occlusion clues. However, there are differences in the utilization of specific contextual features; namely, contour extraction focuses on local detailed information, whereas the occlusion orientation prediction both pays attention to local detailed information and focuses on the spatial positional relationships between objects. Therefore, to consider the connection and difference between the two tasks, the two tasks share low-level local detail features and separate high-level semantic occlusion clues, reducing the mutual interference between the two tasks due to excessive coupling.

As shown in Figure 2, MTCN consists of an encoder and a decoder. The encoder uses a ResNet50 [26] backbone network, and the network is composed of two mutually coupled paths: an edge detection path and an orientation prediction path. As in previous studies, the ResNet50 [26,27] is pre-trained on the ImageNet dataset. The decoder consists of the edge detector and orientation predictor, which mainly include the LFCI, the BCFM, and the MRFB. The proposed model fully exploits the multi-scale features generated at different stages of the network on the two paths while avoiding excessive coupling between low-level spatial positional information and high-level occlusion clues in the two subtasks.

3.1.1. Edge Extraction Path

In the edge detection process, extracting a clear, accurate edge is the basis for an occlusion relationship inference. The occlusion edge represents an object’s position and defines the boundary position between bilateral regions. The occlusion edge requires the preserved resolution of the original image and a larger receptive field to achieve accurate positioning and perceive the mutual constraint relationship of the boundary pixels.

The structure of the edge extraction path is shown in Figure 3, in which the input image is propagated from the shallow stages to the deep stages along the backbone network ResNet50. Three residual modules, denoted by rest1, rest2, and rest3, output low-level feature clues, which are used to encode rich spatial information. The side outputs of the shallow stages have higher resolution and fewer channels, qualities that are more conducive to learning low-level information for a specific task. The side outputs of the deep stages (i.e., rest4 and rest5) represent high-level semantic features. These deep stages contain fewer parameters and spatial details but provide rich abstract and global information for use in downstream tasks. The side output feature maps of the different resolutions generated by the encoder are input into the corresponding refinement blocks. The MRFB and BCFM are connected in a cascaded manner. The side output of each intermediate BCFM is passed through a 1 × 1 convolution to obtain the multi-scale predicted edges. Finally, all of the edges predicted by the intermediate layers are fused to construct the boundary map. The proposed structure can integrate feature maps of different scales generated in the encoding process, and semantic edges can be obtained through fusion. In the model training process, the multi-scale feature maps of the side outputs can be fully used for deep supervision learning. The generated boundary map contains both low- and high-level features, ensuring the consistency and accuracy of occlusion edges. Specifically, the edge detection path provides a complete and continuous contour, which delimits the object region.

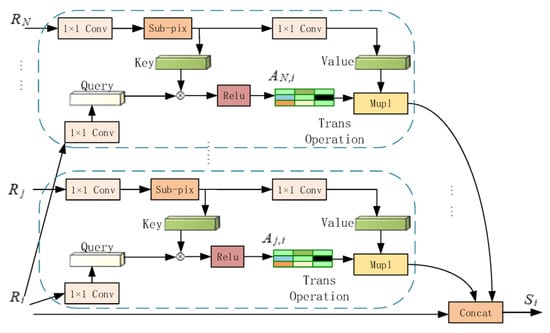

Figure 3.

The structural diagram of the LFCI.

3.1.2. Orientation Prediction Path

Unlike the edge extraction path, the orientation path predicts the orientation of contour pixels to describe the occlusion relationship between the object regions. In this study, we posit that accurately obtaining contour pixels is the basis for orientation prediction. Therefore, we present a fully connected structure designed from the low level to the high level to achieve position- and stage-adaptive context aggregation. As shown in Figure 3, the output features of refinement blocks 1, 2, 5, and 6 are fed to the LFCI, which enhances features at each stage with a context adaptively selected from the lower stages through cross-stage self-attention. Refinement blocks 1 and 2 receive dual supervision from both the edge path and the orientation path. Therefore, they can learn low-level semantic features that contain more spatial and positional information than features learned through single supervision from the edge path. Refinement blocks 5 and 6 output features that focus more on restoring relationships between different object regions. Therefore, in the proposed model, the decoder has two sub-paths that share the shallow layers and separate the deep layers. This structure avoids excessive sharing between the two sub-paths, which can cause mutual interference, and thus helps improve the accuracy of orientation prediction.

3.1.3. Submodule Structure

- (1) Low-level Feature Context Integration Module

Inspired by [28], we propose a low-level feature context integration module that enhances features from all low-level stages by adaptively selecting the context using a cross-stage self-attention mechanism. Then, the enhanced features are up-sampled from all stages to the lowest-stage resolution and fused. The fused features contain rich, adaptively selected context information for occlusion contour restoration while also preserving high-resolution detail information.

The feature map aggregation process in the context of stage s presented in Figure 3, where the feature map of stage is denoted as . Feature of the lower stages, ,, where represents the total number of stages and is used as a source of spatial position information. The adaptive context aggregation represents the concatenation of all context information found from all lower-level features and , which can be mathematically expressed as

where has the same resolution as , and the Trans operation is adopted to fuse the cross-stage features and , which are expressed in Equation (2).

The Trans operation is presented in the dashed box in Figure 4, which shows that first, a 1 × 1 convolution and up-sampling are performed on to transform it into as a key with the same resolution as . Next, is transformed into as a query using a 1 × 1 convolution. Then, the dot product is used to calculate the similarity between the elements of and , and the result is processed by the function to obtain . The scale of is jointly determined by the size values of and . As the network depth increases, contains larger receptive fields and more occlusion clues. Therefore, as the value of increases, the size of decreases. Finally, the dot product of and provides , where is obtained by performing two consecutive 1 × 1 convolution and sub-pixel operations on .

Figure 4.

The structural diagram of the BCFM.

For all enhanced features , up-sampling is performed using sub-pixels, and the features are summed to obtain the final output feature , which can be mathematically expressed as follows:

where represents the sub-pixel convolution for up-sampling and has the same resolution as the first-stage data (i.e., half the size of the original image).

The proposed LFCI allows class-agnostic edges to attach appropriate occlusion clues directly, enabling the occlusion contours to stand out from the general edges. In addition, the Trans operation can integrate all spatial detail information from lower stages, thus providing sufficient local information for the orientation prediction of edge pixels.
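The cross-stage attention idea behind the Trans operation can be illustrated with a minimal, framework-free sketch. It assumes that every spatial position of one stage issues a query, that another stage (already resampled to a common resolution) supplies the keys and values, and that dot-product similarities are normalized with a softmax. The learned 1 × 1 convolutions and sub-pixel layers are omitted, and all function names are hypothetical rather than taken from the authors' implementation.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def cross_stage_attention(queries, keys, values):
    """For each query vector, return the attention-weighted sum of the values.

    queries, keys, values: lists of per-position feature vectors
    (positions are flattened; keys and values have the same length).
    """
    dim = len(values[0])
    context = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        context.append(
            [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
        )
    return context

# Toy example: two positions with two channels each (values are illustrative).
queries = [[1.0, 0.0], [0.0, 1.0]]
keys = [[5.0, 0.0], [0.0, 5.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
context = cross_stage_attention(queries, keys, values)
```

Each output vector is a convex combination of the value vectors, so a position mostly inherits context from the positions whose keys best match its query.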

- (2) BCFM

Inspired by [28,29] and the observations below, we designed the BCFM to fuse the feature flows extracted at different scales in the network structure. This module not only restores missing local edge details but also removes cluttered, non-occluded pixels. The main idea is to exploit the complementarity of the feature information from two stages to fully fuse the bilateral edge responses and enhance the unified occlusion orientation fusion map. The backbone network ResNet50 [26,27] is divided into five stages, each of which feeds an MRFB to obtain side output features, as shown in Figure 2. The side output features of the MRFBs are cascaded and fed to the BCFM’s input. Although the two stages in each group are similar in terms of characteristics, they have complementary clues owing to the step-by-step encoding in the CNN structure. Given a set of features containing two stages, the BCFM compensates for the details ignored in the higher-stage features due to down-sampling and dilated convolution, and for the restricted receptive field of the lower stages.

In particular, residual connections are used inside the BCFM, as shown in Figure 4. Assuming that a set of side outputs of the receptive field is given and denoted by , where and are inputs that are fed to the Relu activation function to obtain and , then input needs to first be passed through the sub-pixel convolution layer to convert the low-resolution feature map into a high-resolution one. Furthermore, to compensate for the potential response lost in and , we can calculate and . Then, these values can be separately multiplied by and and passed through the 1 × 1 convolution layer. The calculation results can then be fused with the feature map from the shortcut path to obtain a set of enhanced features . Finally, and can be concatenated into , which is output. By aggregating the two responses in the same module using the BCFM, we can obtain the enhanced feature . The corresponding mathematical formulas are as follows:

where represents the function, represents the 1 × 1 convolution layer, and represents the sub-pixel upsampling operation.

However, in some cases, there may be no response even when considering the complementary information from the two stages. In addition, such a well-designed convolution block eliminates the gridding artifacts caused by the dilated convolution in high-level layers [30].
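The sub-pixel up-sampling step used before fusion can be sketched as a pure rearrangement of channels into space. The example below follows the common pixel-shuffle layout, in which `r * r` consecutive channels fill one `r × r` block of the output; the preceding convolution that produces those channels is omitted, and the function name is hypothetical.

```python
def pixel_shuffle(x, r):
    """Rearrange a nested list of shape (C*r*r, H, W) into (C, H*r, W*r)."""
    channels_in = len(x)
    if channels_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = channels_in // (r * r)
    h, w = len(x[0]), len(x[0][0])
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):          # row offset inside each r x r block
            for j in range(r):      # column offset inside each r x r block
                src = x[c * r * r + i * r + j]
                for row in range(h):
                    for col in range(w):
                        out[c][row * r + i][col * r + j] = src[row][col]
    return out

# Four 1x1 channels become one 2x2 channel when the scale factor is 2.
low_res = [[[1.0]], [[2.0]], [[3.0]], [[4.0]]]
high_res = pixel_shuffle(low_res, 2)
```

Because no values are interpolated, the operation doubles the resolution without blurring, which is one reason it is often preferred over bilinear up-sampling when fine edge detail matters.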

- (3)

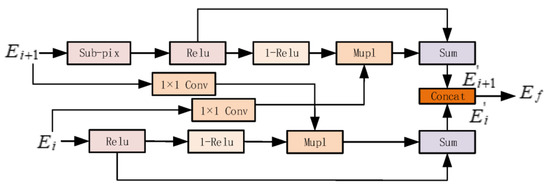

- Multipath Receptive Field Block

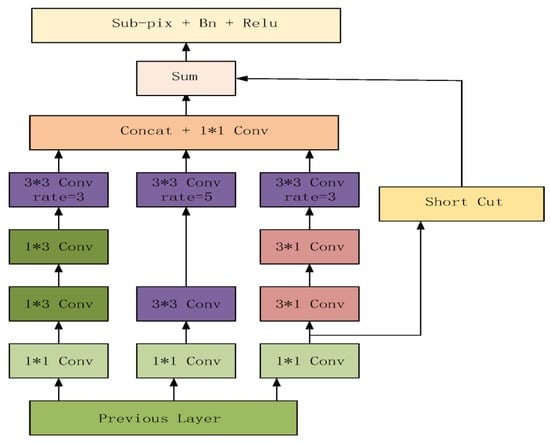

Reasoning about occlusion relationships between different objects requires accurate order prediction of the foreground and background regions. Therefore, a receptive field enhancement module is embedded into the network structure to perceive information on object edges. Inspired by the work presented in [31], we propose the MRFB. The MRFB structure includes several parts: a multibranch convolution layer using different convolution kernels, a dilation convolution layer at the end of each branch (used to form constraints between the contour pixels), and a shortcut branch, as shown in Figure 5.

Figure 5.

The structural diagram of the MRFB.

Specifically, in each branch, a bottleneck structure consisting of a 1 × 1 convolution layer is used to integrate various channel clues and promote bilateral feature aggregation across channels at the same position. The central branch consists of two 3 × 3 convolution modules, and the other two branches consist of two 1 × 3 and two 3 × 1 strip convolution modules. The two strip convolution paths simultaneously increase the receptive field and feature weight of the central pixel in the orthogonal directions, thus accumulating more orthogonal signals with more information for the subsequent prediction of the occlusion direction. At the end of each branch, dilation convolution with a different ratio is used, which can capture richer contextual information while maintaining the same number of parameters. In addition, the shortcut path design from ResNet [26] is used. Finally, the feature maps output by the branches are concatenated and then passed through a 1 × 1 convolutional layer. The resulting feature map is then fused with the feature maps from the shortcut path.
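The claim that dilation enlarges the receptive field without adding parameters can be checked with simple arithmetic. The sketch below assumes stride-1 layers, reads the two strip branches as one branch of two 1 × 3 convolutions and one branch of two 3 × 1 convolutions, and uses a hypothetical dilation rate of 3, since the actual rates are not stated in the text.

```python
def effective_kernel(k, dilation):
    """Extent covered by a kernel of size k with the given dilation."""
    return k + (k - 1) * (dilation - 1)

def receptive_field(layers):
    """Receptive field along one axis for stride-1 layers.

    layers: list of (kernel_size, dilation) pairs along that axis.
    """
    rf = 1
    for k, d in layers:
        rf += effective_kernel(k, d) - 1
    return rf

def conv_weights(kh, kw, c_in, c_out):
    """Weight count of a convolution; dilation does not appear in it."""
    return kh * kw * c_in * c_out

RATE = 3  # hypothetical dilation rate of the final 3 x 3 convolution

# Central branch: 1x1 bottleneck, two 3x3 convolutions, dilated 3x3 convolution.
central = [(1, 1), (3, 1), (3, 1), (3, RATE)]
# 1x3 strip branch: extent 3 along the width, extent 1 along the height.
strip_width = [(1, 1), (3, 1), (3, 1), (3, RATE)]
strip_height = [(1, 1), (1, 1), (1, 1), (3, RATE)]

rf_central = receptive_field(central)
rf_strip = (receptive_field(strip_height), receptive_field(strip_width))
same_cost = conv_weights(3, 3, 64, 64)  # identical for any dilation rate
```

Under these assumptions the strip branch reaches the same extent as the central branch along its own axis while staying narrow along the other, which matches the stated goal of emphasizing orthogonal directions around the central pixel.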

- (4) Multitask Loss Function

For the proposed CNN-based multitask separation network model, it is necessary to achieve accurate extraction of occlusion contours and prediction of the tangential directions of contour pixels simultaneously. Therefore, the loss function should be derived considering the following three aspects: the fusion of multitask loss functions, the accuracy of detection results, and the severe imbalance between the contour and non-contour pixels. Inspired by previous work [32,33], we propose a multitask loss function. The total loss $L$ is the weighted sum of the losses of the two paths:

$$L = \lambda_B L_B + \lambda_O L_O$$

where $L_B$ and $L_O$ represent the loss functions of the contour extraction path and the orientation prediction path, respectively, and $\lambda_B$ and $\lambda_O$ represent the weights of $L_B$ and $L_O$, respectively.

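In the contour extraction path, the network outputs four side contour feature maps $P_1, \dots, P_4$ and a fused contour feature map $P_{fuse}$. The contour extraction loss function $L_B$ is defined as follows:

$$L_B = w_{fuse}\,\ell(P_{fuse}, Y) + w_{side} \sum_{i=1}^{4} \ell(P_i, Y)$$

where $i = 1, \dots, 4$ indexes the side outputs; $w_{fuse}$ and $w_{side}$ are the weights of the fused contour loss function and the side contour loss functions, respectively; $P$ represents the predicted contour feature map; $Y$ is the ground-truth label value; and $\ell$ is the contour loss applied to a single map.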

To obtain accurate occlusion contours, we use an adaptive loss function of the BMRN proposed in [29], which is defined as ; is the dice coefficient loss function of the LPCB proposed by Deng et al. [31]; and is the standard cross-entropy loss function of the HED proposed in [32]. All loss functions calculate all pixels in the corresponding feature map. Furthermore, is the loss function of the orientation path, which calculates only the ground-truth boundary pixels; is the orthogonal orientation regression loss (OOR) defined in the MT-ORL [21], which is defined as follows:

where and represent the predicted values of the network model, and 𝜃 is the ground truth of the occlusion orientation.
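The ingredients named in this subsection can be illustrated with a simplified sketch. It assumes a class-balanced cross-entropy in the style of HED, a standard dice loss, and an orientation term that regresses a predicted pair (a, b) toward (cos θ, sin θ) with a smooth-L1 penalty on ground-truth boundary pixels only. The feature unmixing term, the side outputs, and the exact forms used in BMRN, LPCB, and MT-ORL are not reproduced here, and all names and weights are hypothetical.

```python
import math

EPS = 1e-7

def balanced_bce(pred, target):
    """Cross-entropy in which the rare edge class is up-weighted."""
    n = len(target)
    beta = (n - sum(target)) / n  # fraction of non-edge pixels
    loss = 0.0
    for p, y in zip(pred, target):
        p = min(max(p, EPS), 1.0 - EPS)
        loss -= beta * y * math.log(p) + (1.0 - beta) * (1 - y) * math.log(1.0 - p)
    return loss / n

def dice_loss(pred, target):
    """One minus the dice coefficient between prediction and label."""
    inter = sum(p * y for p, y in zip(pred, target))
    denom = sum(p * p for p in pred) + sum(y * y for y in target)
    return 1.0 - 2.0 * inter / (denom + EPS)

def smooth_l1(x):
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5

def orientation_loss(pred_ab, theta, target):
    """Average regression error of (a, b) toward (cos, sin) on edge pixels."""
    terms = [
        smooth_l1(a - math.cos(t)) + smooth_l1(b - math.sin(t))
        for (a, b), t, y in zip(pred_ab, theta, target)
        if y == 1
    ]
    return sum(terms) / max(len(terms), 1)

def total_loss(edge_pred, ab_pred, edge_gt, theta_gt, lam_b=1.0, lam_o=1.0):
    """Weighted sum of the edge-path and orientation-path losses."""
    edge = balanced_bce(edge_pred, edge_gt) + dice_loss(edge_pred, edge_gt)
    ori = orientation_loss(ab_pred, theta_gt, edge_gt)
    return lam_b * edge + lam_o * ori

# Four flattened pixels, one of which is an edge whose arrow points along +y.
edge_gt = [1, 0, 0, 0]
theta_gt = [math.pi / 2, 0.0, 0.0, 0.0]
good = total_loss([0.9, 0.1, 0.1, 0.1], [(0.0, 1.0)] + [(0.0, 0.0)] * 3, edge_gt, theta_gt)
bad = total_loss([0.2, 0.6, 0.5, 0.4], [(1.0, 0.0)] + [(0.0, 0.0)] * 3, edge_gt, theta_gt)
```

Restricting the orientation term to ground-truth boundary pixels keeps the large number of non-edge pixels from dominating the orientation path, while the balancing weight and the dice term play the analogous role for the edge path.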

4. Results and Discussion

The performance of the proposed network model was verified by a large number of experiments. In addition, the design choices of the network model were validated through ablation experiments.

4.1. Datasets and Implementation Details

The proposed model was evaluated on two public occlusion relationship datasets, the PIOD [23] and BSDS [22] datasets. The PIOD dataset includes 9175 training images and 925 test images; in this dataset, each original image corresponds to two labels: the object occlusion contour label and the occlusion orientation label. The BSDS ownership dataset contains 100 training images and 100 test images. Similar to the PIOD dataset, each original image in the BSDS dataset corresponds to two labels: the occlusion object contour label and the occlusion orientation label. During training, the images of both datasets were randomly cropped to a resolution of 320 × 320 px, whereas the original image size was kept unchanged during testing.

- (1) Implementation Details

The proposed network model was implemented in PyTorch, and AdamW was used as the optimizer for training. The backbone encoder was the ResNet50 [26,27] architecture pre-trained on the ImageNet dataset. The other hyper-parameters were set as follows: the mini-batch size was 10, the global learning rate was 3 × 10⁻⁴, and the momentum was 0.9. The PIOD and BSDS datasets were trained for 40,000 and 20,000 iterations, respectively, with a 10-fold decrease in the learning rate every 1000 iterations. The remaining five hyper-parameters were set to 1.1, 2.1, 1.7, 1.0, and 1.0, respectively.

- (2) Evaluation Metrics

During the testing phase, the precision and recall of the proposed model were calculated. For edge extraction and occlusion orientation prediction, the boundary precision-recall (BPR) and orientation precision-recall (OPR) were used to represent the precision and recall of the edge and occlusion orientation, respectively. Three standard measures were computed from the precision-recall curves: the F-measure at a fixed contour threshold for the whole dataset (ODS), the F-measure at the best threshold of each image (OIS), and the average precision (AP). In the comparison experiments, the B- and O-metrics denote the metrics related to the BPR and OPR, respectively. Note that only the correctly detected contour pixels were counted for the OPR.
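The difference between ODS and OIS can be made concrete with a small sketch. It assumes that, for every image and every threshold, the numbers of matched pixels, predicted pixels, and ground-truth pixels are already available from a matching step that is not shown; the data layout, function names, and counts are hypothetical, and AP is omitted.

```python
def f_measure(precision, recall):
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def prf(tp, n_pred, n_gt):
    """Precision, recall, and F-measure from pixel counts."""
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gt if n_gt else 0.0
    return precision, recall, f_measure(precision, recall)

def ods(counts):
    """Best F-measure when one threshold is shared by the whole dataset."""
    best = 0.0
    for t in range(len(counts[0])):
        tp = sum(image[t][0] for image in counts)
        n_pred = sum(image[t][1] for image in counts)
        n_gt = sum(image[t][2] for image in counts)
        best = max(best, prf(tp, n_pred, n_gt)[2])
    return best

def ois(counts):
    """F-measure when each image uses its own best threshold."""
    tp = n_pred = n_gt = 0
    for image in counts:
        best = max(image, key=lambda c: prf(*c)[2])
        tp += best[0]
        n_pred += best[1]
        n_gt += best[2]
    return prf(tp, n_pred, n_gt)[2]

# counts[image][threshold] = (matched, predicted, ground truth); toy values.
counts = [
    [(8, 10, 10), (5, 5, 10)],
    [(4, 10, 10), (6, 8, 10)],
]
ods_score = ods(counts)
ois_score = ois(counts)
```

For the orientation metrics, the same bookkeeping would be applied after discarding boundary pixels that were not correctly detected, in line with the note on the OPR above.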

4.2. Ablation Experiments

We analyzed the effect of each proposed submodule on the performance of the overall network model through ablation experiments, using the BSDS500 training and validation sets to train the network model and the test set to evaluate model performance. Table 1 shows the impact of the different modules on performance. The LFCI had the greatest impact on the overall model performance, while the BCFM had the smallest.

Table 1.

Ablation analysis of the impact of the LFCI, MRFB, and BCFM modules on the overall network performance. All numbers are percentages.

4.3. Comparison Experiments

The proposed method was compared with several recent methods, including DOC-HED [18], DOC-DMLFOV [18], DOOBNet [19], OFNet [20], MT-ORL [21], and SRF-OCC [3].

- (1)

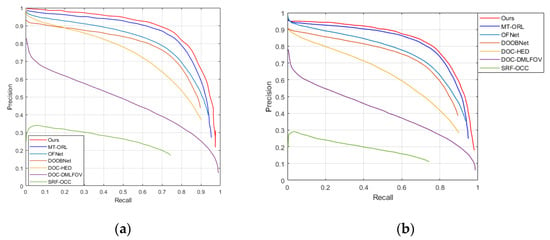

- Performance Comparison on BSDS Dataset

Training the model on the challenging BSDS dataset, with limited training samples, is difficult. Still, on this dataset, the proposed method could achieve better performance than the other methods in terms of the BPR and OPR, as shown in Figure 6a and Figure 6b, respectively. Furthermore, as shown in Table 2, the proposed method achieved the best performance in terms of ODS, OIS, and AP among all methods, improving the B-AP and O-AP values by 2.1% and 5.2%, respectively, compared with the state-of-the-art methods. Moreover, our running efficiency was also the highest, reaching 33.2 FPS. The results validate the effectiveness of the proposed method in both occlusion orientation prediction and contour extraction.

Figure 6.

The precision-recall curves (PRC) of different methods on the BSDS ownership dataset. (a) PRC of boundary. (b) PRC of occlusion.

Table 2.

Quantitative comparison results of different methods on the BSDS test dataset. All numbers are percentages. ₤ refers to GPU running time. † indicates training boundary extraction branch only. ‡ indicates training orientation prediction branch only.

- (2)

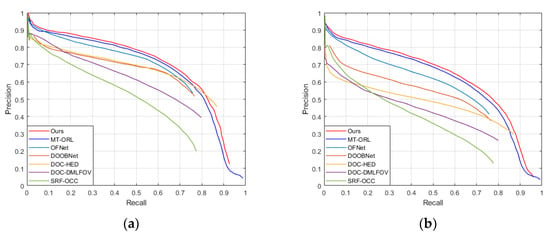

- Performance Comparison on PIOD Dataset

The precision-recall curves of different methods in the contour extraction and occlusion orientation prediction tasks on the PIOD dataset are shown in Figure 7a and Figure 7b, respectively. The results show that the proposed method achieved significant improvements in both precision and recall compared with the other methods. The experimental results of the ODS, OIS, and AP metrics of different methods are presented in Table 3, which shows that the proposed method outperformed the MT-ORL method. Furthermore, the proposed method achieved the shortest running time and the highest efficiency (34.6 FPS) on the PIOD test set.

Figure 7.

The precision-recall curves (PRC) of different methods on the PIOD ownership dataset. (a) PRC of boundary. (b) PRC of occlusion.

Table 3.

Quantitative comparison results of different methods on the PIOD test set. All numbers are percentages. ₤ refers to GPU running time. † indicates training the contour extraction branch only. ‡ indicates training the orientation prediction branch only.

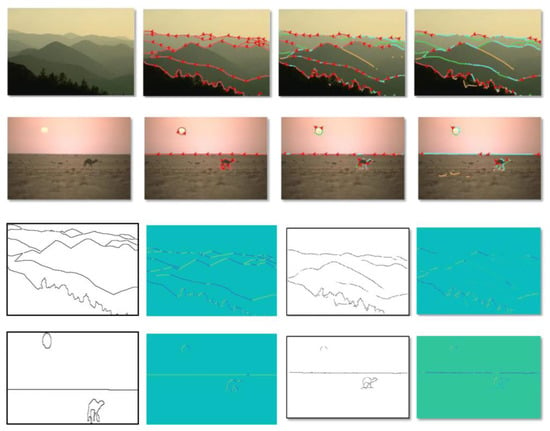

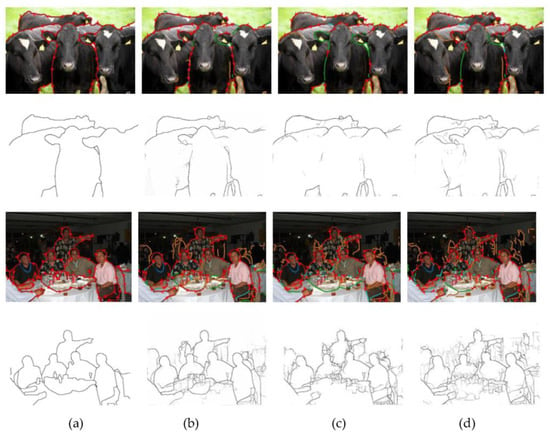

Finally, the contour and occlusion relationship maps were constructed. The results of the experiments on multiple images from the BSDS and PIOD datasets show that the contours extracted by the proposed method were more complete and clearer than those of the DOOBNet [19] and MT-ORL [21] methods, as shown in Figure 8 and Figure 9. In addition, as shown in Figure 8, in scenes containing large solid-colored areas, the proposed method had a sufficiently large receptive field to perceive these areas and correctly predict their occlusion relationships, whereas a small receptive field makes this difficult. Furthermore, in scenes containing many foreground objects, where the edge occlusion relationships were complex and difficult to distinguish (Figure 9), the proposed method could still accurately detect the occluded edges, showing stronger generalization potential compared with the other methods.

Figure 8.

Qualitative comparison results of different methods on the BSDS test set. The first and second rows, from left to right, are the original image, the ground-truth occlusion relationship map, and the occlusion relationship maps obtained by the proposed method and MT-ORL method, respectively. The third and fourth rows, from left to right, are the edge and orientation maps obtained by the proposed method and MT-ORL method, respectively.

Figure 9.

The predicted occlusion relationship and edge maps obtained by different methods on the PIOD test set. From left to right, (a) denotes the ground-truth occlusion relationship and edge maps, and (b–d) denote the occlusion relationship and edge maps predicted by the proposed method, MT-ORL method, and DOOBNet method.

In summary, the proposed method could significantly outperform other methods in edge extraction and occlusion direction prediction, thus validating its effectiveness. In Figure 8 and Figure 9, the pixels on the right side of the arrows represent the background, and those on the left side represent the foreground. Red arrows indicate correctly predicted occlusion orientations, whereas cyan pixels mark boundaries that were detected correctly but assigned an incorrect occlusion orientation. Moreover, green pixels indicate false-negative boundaries, and orange pixels denote false-positive boundaries.
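The arrow convention used in the figures (foreground on the left of the arrow, background on the right) can be expressed as a small geometric helper. The sketch assumes a mathematical coordinate frame with the y axis pointing up and an orientation θ measured counterclockwise from the x axis; in image coordinates with the y axis pointing down, the vertical components would change sign. The function names are hypothetical.

```python
import math

def foreground_direction(theta):
    """Unit vector pointing to the left of an arrow with orientation theta."""
    dx, dy = math.cos(theta), math.sin(theta)
    return (-dy, dx)

def background_direction(theta):
    """Unit vector pointing to the right of the same arrow."""
    fx, fy = foreground_direction(theta)
    return (-fx, -fy)

# An arrow pointing along +x has its foreground on the +y side.
fg = foreground_direction(0.0)
bg = background_direction(0.0)
```

Rotating the arrow by 180 degrees swaps the two sides, which is why a correctly localized boundary can still carry a wrong occlusion relationship.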

5. Conclusions

This paper proposed an innovative network model, called MTCN, that addresses two subtasks, extracting occluded object contours and predicting occlusion directions, by constructing a corresponding sub-path for each. The two sub-paths share the shallow stages of the network and separate in the deep stages. Moreover, the BCFMs, the LFCI, and a multipath receptive field module are incorporated into the proposed network structure. During training, the two sub-paths can be trained either separately or jointly. Experiments verified the proposed method, and the results show its superiority over state-of-the-art methods on the BSDS and PIOD datasets.

Author Contributions

Conceptualization, S.B.; methodology, S.B.; validation, S.B. and J.X.; Investigation and resources, S.B. and G.X.; writing—original draft preparation, S.B.; writing—review and editing, S.B. and Y.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61772033.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhou, Y.; Bai, X.; Liu, W.; Latecki, L. Fusion with Diffusion for Robust Visual Tracking; NIPS: Lake Tahoe, NV, USA, 2012.

2. Zhou, Y.; Ming, A. Human action recognition with skeleton induced discriminative approximate rigid part model. Pattern Recognit. Lett. 2016, 83, 261–267.

3. Teo, C.L.; Fermuller, C.; Aloimonos, Y. Fast 2D Border Ownership Assignment. In Proceedings of the CVPR 2015, Boston, MA, USA, 7–12 June 2015.

4. Calderero, F.; Caselles, V. Recovering relative depth from low-level features without explicit t-junction detection and interpretation. Int. J. Comput. Vis. 2013, 104, 38–68.

5. Ayvaci, A.; Soatto, S. Detachable Object Detection: Segmentation and Depth Ordering from Short-Baseline Video. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 1942–1951.

6. Gao, T.; Packer, B.; Koller, D. A Segmentation-Aware Object Detection Model with Occlusion Handling. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1361–1368.

7. Fan, D.-P.; Lin, Z.; Ji, G.-P.; Zhang, D.; Fu, H.; Cheng, M.-M. Taking a Deeper Look at Co-Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2919–2929.

8. Pang, Y.; Zhao, X.; Zhang, L.; Lu, H. Multi-Scale Interactive Network for Salient Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 9413–9422.

9. Sargin, M.E.; Bertelli, L.; Manjunath, B.S.; Rose, K. Probabilistic occlusion boundary detection on spatio-temporal lattices. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 560–567.

10. Marshall, J.A.; Burbeck, C.A.; Ariely, D.; Rolland, J.P.; Martin, K.E. Occlusion edge blur: A cue to relative visual depth. JOSA A 1996, 13, 681–688.

11. Alatise, M.B.; Hancke, G.P. A review on challenges of autonomous mobile robot and sensor fusion methods. IEEE Access 2020, 8, 39830–39846.

12. Saxena, A.; Chung, S.; Ng, A. Learning Depth from Single Monocular Images. In Proceedings of the NIPS, Vancouver, BC, Canada, 5–8 December 2005.

13. Jia, Z.; Gallagher, A.; Chang, Y.-J.; Chen, T. A Learning-Based Framework for Depth Ordering. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 294–301.

14. Hoiem, D.; Efros, A.A.; Hebert, M. Recovering Occlusion Boundaries from an Image. In Proceedings of the ICCV 2007, Rio de Janeiro, Brazil, 14–20 October 2007.

15. Hoiem, D.; Efros, A.A.; Hebert, M. Recovering occlusion boundaries from an image. Int. J. Comput. Vis. 2011, 91, 328–346.

16. Zhou, Y.; Bai, X.; Liu, W.; Latecki, L. Similarity Fusion for Visual Tracking. Int. J. Comput. Vis. 2016, 118, 337–363.

17. Avola, D.; Bernardi, M.; Cascio, M.; Cinque, L.; Foresti, G.L.; Massaroni, C. A New Descriptor for Keypoint-Based Background Modeling. In Image Analysis and Processing–ICIAP 2019; Ricci, E., Rota Bulò, S., Snoek, C., Lanz, O., Messelodi, S., Sebe, N., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11751.

18. Wang, P.; Yuille, A. DOC: Deep OCclusion Estimation from a Single Image. In Computer Vision–ECCV 2016; Lecture Notes in Computer Science; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2016; Volume 9905.

19. Wang, G.; Wang, X.; Li, F.W.B.; Liang, X. DOOBNet: Deep Object Occlusion Boundary Detection from an Image. In Computer Vision–ACCV 2018; Lecture Notes in Computer Science; Jawahar, C., Li, H., Mori, G., Schindler, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11366.

20. Lu, R.; Xue, F.; Zhou, M.; Ming, A.; Zhou, Y. Occlusion-Shared and Feature-Separated Network for Occlusion Relationship Reasoning. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 10342–10351.

21. Feng, P.; She, Q.; Zhu, L.; Li, J.; Zhang, L.; Feng, Z.; Wang, C.; Li, C.; Kang, X.; Ming, A.; et al. MT-ORL: Multitask Occlusion Relationship Learning. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9344–9353.

22. Ren, X.; Fowlkes, C.C.; Malik, J. Figure/Ground Assignment in Natural Images; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; LNCS; Volume 3952, pp. 614–627.

23. Cooper, M.C. Interpreting line drawings of curved objects with tangential edges and surfaces. Image Vis. Comput. 1997, 15, 263–276.

24. Nitzberg, M.; Mumford, D. The 2.1-D sketch. In Proceedings of the Third International Conference on Computer Vision, Osaka, Japan, 4–7 December 1990; pp. 138–144.

25. Maire, M.; Narihira, T.; Yu, S.X. Affinity CNN: Learning Pixel-Centric Pairwise Relations for Figure/Ground Embedding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 174–182.

26. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

27. He, T.; Zhang, Z.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 558–567.

28. Bo, Q.; Ma, W.; Lai, Y.-K.; Zha, H. All-Higher-Stages-In Adaptive Context Aggregation for Semantic Edge Detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 6778–6791.

29. Bao, S.-S.; Huang, Y.-R.; Xu, G.-Y. Bidirectional Multiscale Refinement Network for Crisp Edge Detection. IEEE Access 2022, 10, 26282–26293.

30. Yu, F.; Koltun, V.; Funkhouser, T. Dilated Residual Networks. In Proceedings of the CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; Volume 3, p. 4.

31. Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. In Computer Vision–ECCV 2018; Lecture Notes in Computer Science; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: Berlin/Heidelberg, Germany, 2018; Volume 11215.

32. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

33. Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).