Abstract

In recent years, significant progress has been made in applying federated learning (FL) to various aspects of cyberspace security, such as intrusion detection, privacy protection, and anomaly detection. However, federated learning is not robust against malicious attacks (such as adversarial attacks, backdoor attacks, and poisoning attacks), and the unfair allocation of resources leads to slow convergence and inefficient communication in FL models. Additionally, the scarcity of malicious samples during FL model training and the heterogeneity of data result in a lack of personalization in FL models. These challenges pose significant obstacles to the application of federated learning in the field of cyberspace security. To address these issues, the introduction of meta-learning into federated learning has been proposed, resulting in the development of federated meta-learning models. These models aim to train personalized models for each client, reducing performance discrepancies across different clients and enhancing model fairness. In order to advance research on federated meta-learning and its applications in the field of cyberspace security, this paper first introduces the algorithms of federated meta-learning. Based on different usage principles, these algorithms are categorized into client-level personalization algorithms, network algorithms, prediction algorithms, and recommendation algorithms, and are thoroughly presented and analyzed. Subsequently, the paper divides current cyberspace security issues into three branches: information content security, network security, and information system security. For each branch, the application research methods and achievements of federated meta-learning are elucidated and compared, highlighting the advantages and disadvantages of federated meta-learning in addressing different cyberspace security issues. Finally, the paper concludes with an outlook on the deep application of federated meta-learning in the field of cyberspace security.

1. Introduction

The rapid development of internet services has led to a significant increase in network attacks, with cyber threats becoming increasingly complex and automated. Security incidents occur frequently in cyberspace, resulting in the compromise of personal privacy, substantial economic losses for enterprises, and threats to national security. Consequently, many traditional cyberspace security methods have become ineffective in dealing with new network threats. Additionally, the field of cyberspace security often involves a large amount of data dispersed across different locations, which poses security risks. Therefore, there is a need for secure collaborative learning and cooperative defense to protect data privacy. Federated learning (FL) is a distributed learning framework in machine learning. Its core idea is to shift the model training process from a central server to the client side, where each client performs model training locally using its own data. This approach aims to safeguard data privacy and reduce communication costs. Federated learning, a research hotspot in recent years, has made significant progress in the field of cyberspace security thanks to its ability to ensure data privacy and enable model sharing. Campos et al. [1] evaluated an FL intrusion detection method based on multi-class classifiers, which considered the data distribution when detecting different attacks in Internet of Things (IoT) scenarios. Pei et al. [2] proposed a personalized federated anomaly detection framework for network traffic anomaly detection, where data were aggregated under privacy protection and personalized models were constructed through fine-tuning. Manoharan et al. [3] introduced a novel system for detecting poisoning attacks on the training set in the context of federated learning with generative adversarial networks. This system detects vulnerabilities and identifies the poisoned regions that are more likely to induce network vulnerabilities.

However, the application of federated learning in the field of cyberspace security faces the following challenges: (1) poor robustness against malicious attacks; (2) inefficient model communication due to unfair resource allocation; (3) imbalanced data distribution, with a scarcity of malicious samples compared to normal samples; and (4) varying data sources and quantities among different clients, resulting in significant performance discrepancies in the global model on different clients and a lack of personalization. To address the aforementioned issues, scholars have incorporated meta-learning into federated learning by employing a shared meta-learner in place of the conventional shared global model. The algorithm is maintained on the server, which then distributes the algorithm parameters to the clients for model training. Subsequently, the clients upload their test results to the server for algorithm updates, thereby creating the federated meta-learning (FML) framework. In FML, each client is considered as a task, and meta-learning is leveraged to rapidly adapt to new tasks and train personalized models for the clients. This approach utilizes meta-learning algorithms to rapidly adapt to new poisoning tasks, enhances the robustness of federated learning against malicious attacks, and protects data privacy. Furthermore, federated meta-learning facilitates fair resource allocation, improves communication efficiency, and accelerates convergence speed. Leveraging the ability of meta-learning to learn quickly from a small number of samples, it can also enable real-time detection of fraudulent behavior.
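The FML loop described above can be sketched in a few lines. The following is a minimal illustration only, assuming a one-parameter linear model, a first-order approximation of the meta-gradient, and hypothetical helper names (`client_meta_gradient`, `server_meta_update`, `make_task`); the source does not prescribe a specific meta-learning algorithm. Each client adapts the shared parameter on a local support set, evaluates the adapted model on a local query set, and uploads only the resulting gradient.

```python
def grad_mse(w, data):
    """Gradient of the mean squared error of y ≈ w * x with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def client_meta_gradient(theta, support, query, inner_lr=0.1):
    """Adapt theta on the support set, then report the query-set gradient.

    Assumption: first-order approximation (the gradient is taken at the
    adapted parameter and not back-propagated through the inner step).
    """
    adapted = theta - inner_lr * grad_mse(theta, support)
    return grad_mse(adapted, query)

def server_meta_update(theta, client_tasks, outer_lr=0.1):
    """Average the clients' reported gradients and update the meta-parameter."""
    grads = [client_meta_gradient(theta, s, q) for s, q in client_tasks]
    return theta - outer_lr * sum(grads) / len(grads)

def make_task(slope):
    """Hypothetical client task: data generated by y = slope * x."""
    support = [(x, slope * x) for x in (0.5, 1.0)]
    query = [(x, slope * x) for x in (1.5, 2.0)]
    return support, query

# Three hypothetical clients whose local tasks differ in slope.
tasks = [make_task(s) for s in (1.0, 2.0, 3.0)]
theta = 0.0
for _ in range(100):
    theta = server_meta_update(theta, tasks)
print(theta)
```

In this toy setting the meta-parameter settles at an initialization from which every client can reach its own slope with a few local gradient steps, which is the sense in which each client obtains a personalized model.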

Based on the problems to be addressed, federated meta-learning algorithms can be classified into four categories: client personalization algorithms, network algorithms, prediction algorithms, and recommendation algorithms. Client personalization algorithms aim to address the issue of non-independent and identically distributed data and improve the performance and adaptability of models on each client while safeguarding data privacy and enhancing resource utilization efficiency. Network algorithms aim to tackle challenges in real-world graph-based problems, such as data collection, labeling, and privacy protection. They also address the problem of efficient learning and quick adaptation to different tasks on nodes with limited resources, thereby achieving rapid adaptation and efficient model updates. Prediction algorithms focus on challenges in click-through rate (CTR) prediction and wireless traffic prediction, enabling personalized and efficient model updates while reducing communication costs. Recommendation algorithms aim to address privacy protection, personalization, and efficiency issues in recommendation systems. By utilizing distributed training, model parameter sharing, and meta-learning strategies, these algorithms provide personalized recommendation results while protecting user privacy.

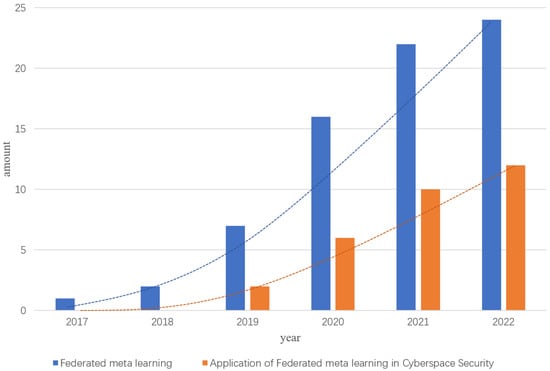

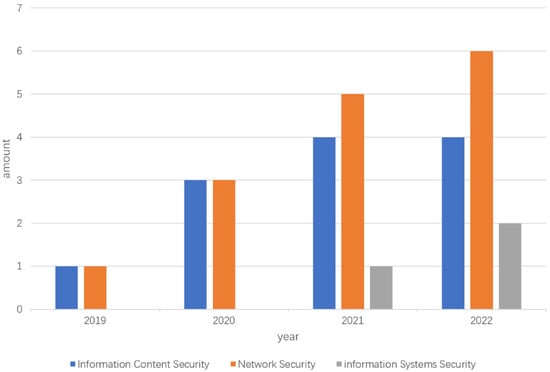

Federated meta-learning has significant implications for privacy and security of user data, as well as for enhancing model performance and efficiency in the field of cyberspace security. The application of federated meta-learning in the realm of cyberspace security has witnessed exponential growth, as depicted in Figure 1, which displays the number of research studies conducted in recent years, organized by year. These studies can be classified into three categories based on their application domains: information content security, network security, and information system security. The quantity of literature produced in each domain is presented in Figure 2.

Figure 1.

Exponential growth chart of the number of relevant research papers.

Figure 2.

Number of research papers in each application direction.

However, it is worth noting that there is a dearth of comprehensive review articles on the topic of federated meta-learning and its application in the field of cyberspace security in the existing research literature. A comprehensive analysis and comparison of the current state of federated meta-learning have a significant positive impact on driving technological advancement, efficient resource utilization, data privacy protection, enhancing cybersecurity, and fostering cooperation and sharing. Such analysis and comparison offer crucial guidance and support for the development and application of this technology. Amidst the ever-growing demands in the realm of cybersecurity, the adoption of federated meta-learning will aid in addressing issues like data privacy and resource constraints, thereby further elevating the level of cybersecurity. Consequently, in order to facilitate further research and promote the application of federated meta-learning in the domain of cyberspace security, while enabling a deeper understanding of this technology and its applications among researchers and enterprises, this paper aims to analyze and compare the current state of research on federated meta-learning, both domestically and internationally. Specifically, it will delve into three branches of its application in cyberspace security: information content security, network security, and information system security, providing substantial insights to support future studies.

The primary contributions of this paper are as follows:

- We provide a comprehensive overview of the concepts, classification, challenges, and applications of federated learning and meta-learning, as presented in Section 2.

- We systematically define federated meta-learning and categorize the algorithms into four types: client personalization algorithms, network algorithms, prediction algorithms, and recommendation algorithms. Each type is introduced in detail in Section 3.

- We conduct an in-depth analysis and exploration of the application research progress in federated meta-learning in subdomains of cybersecurity, including information content security, network security, and information system security. This analysis is covered in Section 4.

- We summarize the challenges faced by federated meta-learning and its application in cyberspace security. Furthermore, we outline future research prospects, as discussed in Section 5.

2. Overview of Federated Learning

2.1. Federated Learning

2.1.1. The Concept of Federated Learning

In the era of booming artificial intelligence and big data technologies, massive amounts of data are being generated. The extraction of useful information from such a vast volume of data and harnessing its potential value for the benefit of human society have given rise to the field of artificial intelligence and machine learning [4,5]. Looking back at the development of machine learning, it can be divided into three major stages: centralized learning, distributed on-site learning, and federated learning [6]. A comparative illustration of these three stages is shown in Figure 3.

Figure 3.

Comparison of three stages of machine learning.

Centralized learning, which is currently the most widely used learning mode, follows the fundamental idea of “model stationary, data moving” [7]. In this mode, data from all terminals need to be transmitted to the central server, where the machine learning tasks are executed based on the collected data. Centralized learning concentrates all the data on the central server, which provides a broader scope of potential data knowledge. Consequently, the learned model is more reflective of the underlying value of the data, resulting in relatively better performance [8]. However, centralized learning still faces potential risks in data security despite using encryption during data transmission [9]. As a result, the centralized learning mode no longer meets the requirements of social development, and machine learning has entered the stage of distributed on-site learning.

Distributed on-site learning addresses data security and privacy protection concerns and is primarily realized through edge computing [10]. Edge computing is one implementation approach of distributed on-site learning, which confines the data requiring analysis to the edge environment of devices for on-site learning. The final learning outcomes are then aggregated and stored on the central server. By distributing a centralized learning task to different device edges for on-site learning, sensitive data no longer need to be transmitted to the central server, reducing the circulation rate of sensitive information and effectively protecting the data privacy of all parties involved. However, this approach also leads to the phenomenon of “data islands” due to the lack of data communication among the parties. The inability to exchange and integrate data within systems results in a narrow perspective and a lack of global and generalizable knowledge in the learned models [11]. To address the data privacy issues of centralized learning and the data isolation problem of distributed on-site learning, federated learning was proposed, marking a new stage in the development of machine learning [12].

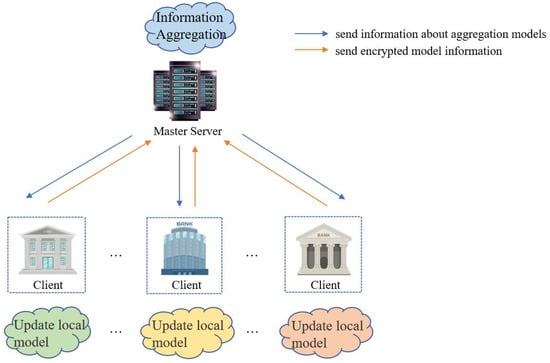

Federated learning, first introduced by McMahan et al., was initially used for the Google Input Method System to enable candidate word prediction [9,13,14,15]. Federated learning is a distributed learning framework in machine learning. Its core idea is to shift the model training process from a central server to client devices, enabling multiple client datasets to collaborate in model training, thereby safeguarding data privacy and reducing communication costs. In contrast to the “model stationary, data moving” approach of centralized learning, federated learning adopts a “data stationary, model moving” learning mode [16]. The central server plays a crucial role in coordinating and controlling the entire process of federated learning, following predefined learning strategies and rules of the federated learning algorithm. Before the commencement of federated learning, the central server is responsible for initializing the global model and distributing it to participating client devices. Each client device then conducts model training locally using its own data. During the training process, the clients do not exchange sample data; they only share intermediate data related to the model. Upon the completion of each round of federated learning, the client devices upload their locally trained model parameters to the central server. The central server is responsible for aggregating these model parameters to update the global model. Model aggregation is a core step in federated learning, typically employing weighted averaging or other aggregation strategies to merge model parameters from different clients and update the global model. Subsequently, the central server provides the updated global model back to the clients, who use the aggregated model information to update their respective models. 
This process effectively ensures the security and privacy of sensitive data on each client device, while achieving knowledge aggregation from multiple client data sources and preserving privacy [17,18,19]. In simple terms, the essence of federated learning is to achieve model sharing while preserving data privacy.
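The round structure described above (initialize, distribute, train locally, upload, aggregate) can be sketched as follows. This is a minimal illustration, assuming a one-parameter linear model and sample-count-weighted averaging as the aggregation rule; the function names and the synthetic client data are hypothetical.

```python
import random

def local_train(w, data, lr=0.2, epochs=5):
    """Client side: a few gradient-descent epochs on y ≈ w * x using local data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def fedavg_round(global_w, client_datasets):
    """Server side: broadcast global_w, collect local models, average by sample count."""
    local_ws = [local_train(global_w, d) for d in client_datasets]
    total = sum(len(d) for d in client_datasets)
    return sum(w * len(d) for w, d in zip(local_ws, client_datasets)) / total

random.seed(0)
# Three hypothetical clients holding different amounts of data from y = 3x.
clients = [
    [(x, 3.0 * x) for x in (random.uniform(-1, 1) for _ in range(n))]
    for n in (20, 50, 30)
]

w = 0.0  # the server initializes the global model
for _ in range(30):
    w = fedavg_round(w, clients)
print(w)
```

Only the scalar model parameter crosses the network in this sketch; the `(x, y)` samples never leave the client lists.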

2.1.2. Classification of Federated Learning

Based on the distribution of feature space and sample space in the participating datasets, federated learning can be categorized into three types: horizontal federated learning, vertical federated learning, and federated transfer learning [20,21].

- Horizontal Federated Learning

Horizontal federated learning refers to the situation where two datasets have a high overlap in terms of sample features but differ in their respective IDs. For example, consider two sets of customer data from different banks operating in distinct regions. Generally, banks manage similar data features, but the customers themselves are different. This scenario allows us to employ horizontal federated learning to train models. The prerequisite for implementing horizontal federated learning is that each client possesses labeled data. Figure 4 illustrates the architecture of horizontal federated learning.

Figure 4.

Horizontal federated learning architecture.

In this learning mode, clients construct local models based on their own datasets. Each client then encrypts its model information, such as gradients, and sends the encrypted information to the central server [22]. The central server performs secure aggregation of the clients’ model information, commonly using algorithms such as Federated Averaging (FedAvg) [23] and FedProx [24]. In FedAvg, the central server receives model parameters from the clients and computes the global model parameters by averaging them, typically weighting each client by its number of local samples. FedProx targets heterogeneous federated settings: it adds a proximal term to each client’s local objective so that local updates do not drift too far from the global model. The aggregated information is then distributed to all clients by the central server. Clients decrypt the received aggregated information and update their local models accordingly. Therefore, during the training of federated models, no intermediate computation results need to be exchanged between clients. Instead, the central server performs model aggregation, and each party updates its local model based on the latest aggregated model information, enabling knowledge exchange among parties without revealing other clients’ source data. However, horizontal federated learning is not suitable for cross-domain federated learning scenarios with significant differences in feature space between clients. Therefore, vertical federated learning has been proposed [25,26].
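To make the difference between the two local objectives concrete, the sketch below implements a FedProx-style local update for a one-parameter linear model. It is an illustration under stated assumptions: the function name, the learning rate, and the toy data are hypothetical, and setting `mu=0` reduces the update to plain local gradient descent as used in FedAvg.

```python
def fedprox_local_update(w_global, data, mu=0.1, lr=0.1, steps=20):
    """Minimize local MSE of y ≈ w * x plus the proximal term (mu / 2) * (w - w_global)**2."""
    w = w_global
    for _ in range(steps):
        grad_loss = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        grad_prox = mu * (w - w_global)  # pulls w back toward the global model
        w -= lr * (grad_loss + grad_prox)
    return w

# Hypothetical client whose local optimum (w = 5) is far from the global model (w = 0).
data = [(1.0, 5.0), (2.0, 10.0)]
w_plain = fedprox_local_update(0.0, data, mu=0.0)  # no proximal term
w_prox = fedprox_local_update(0.0, data, mu=5.0)   # strong proximal term
print(w_plain, w_prox)
```

A larger `mu` keeps the local model closer to the global one, which limits client drift on heterogeneous data at the cost of fitting the local data less tightly.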

Horizontal federated learning is applicable to multiple devices with the same feature space but different data distributions. It facilitates model generalization across diverse devices, thereby enhancing overall performance. By employing joint learning to merge models from different devices, horizontal federated learning mitigates the challenges arising from disparate data distributions. Nevertheless, due to the variations in data distribution among devices, accommodating data changes may necessitate increased communication and computational resources. Additionally, the heterogeneity of data among devices may pose difficulties in model updating within horizontal federated learning.

- Vertical Federated Learning

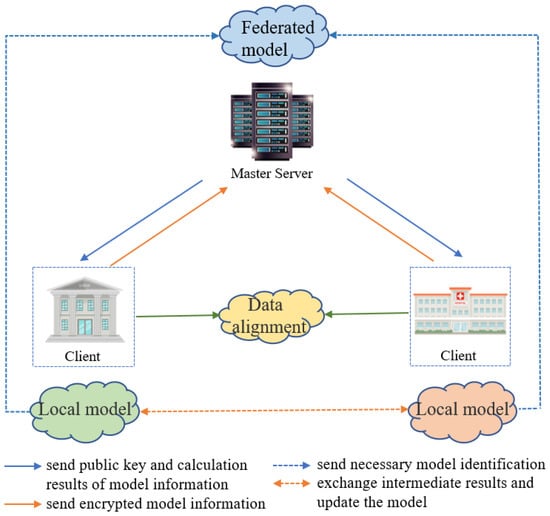

Vertical federated learning refers to the scenario where two datasets have a high degree of overlap in their IDs but differ in their features. For instance, consider banks and hospitals within the same region. In this case, the samples in the bank and hospital datasets are likely to have a high degree of personnel overlap, while their features differ. The bank dataset may include attributes such as deposits and loans, whereas the hospital dataset may involve physiological indicators, among others. When training a model using these datasets, it is referred to as vertical federated learning. The prerequisite for vertical federated learning is that only one client’s data possess a label space, while the data from other clients lack a label space. Figure 5 illustrates the architecture of vertical federated learning.

Figure 5.

Vertical federated learning architecture.

In this learning mode, the first step is data alignment. Data alignment aims to identify common samples while protecting the privacy and data security of each client. A common method for data alignment is the algorithm proposed by Sahu et al. [27]. Subsequently, the main server sends a public key to each client. At the same time, based on the common samples, clients construct their initial local models. Encrypted model information, such as gradients and loss values, is then sent to the main server [28]. The main server decrypts the client’s model information while calculating the necessary computational results for updating the client’s model, which are then transmitted back to the client [29]. The client updates its local model based on the main server’s computation results. Additionally, intermediate computational results are shared among the participants to assist in calculating gradients, loss values, and other model information [30]. For some vertical federated learning algorithms, the client also sends the model identifier of its local model to the main server for storage. This allows the main server to know which clients to send new data to for federated prediction. However, in certain vertical federated learning algorithms that require the participation of all clients in prediction, such as secure federated linear regression, the clients do not need to inform the main server of the necessary model identifiers [31]. In summary, in vertical federated learning, each party possesses different features. Therefore, during the training of the federated model, the parties need to exchange intermediate results to help each other learn the features they possess [32].
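The data alignment step can be illustrated with a deliberately simplified sketch. It assumes that the two parties already share a secret salt and compare salted SHA-256 digests of their sample IDs; this is not the alignment algorithm cited above and not a secure private set intersection protocol, since salted hashes can be brute-forced when the ID space is small. The function names and IDs are hypothetical.

```python
import hashlib

def blind(ids, salt):
    """Map each salted SHA-256 digest back to the raw ID it was derived from."""
    return {hashlib.sha256((salt + i).encode()).hexdigest(): i for i in ids}

def align(ids_a, ids_b, salt="shared-secret"):
    """Return the IDs of party A that also appear at party B, comparing digests only."""
    digests_a = blind(ids_a, salt)
    digests_b = set(blind(ids_b, salt))  # party B would send only these digests
    return sorted(digests_a[d] for d in digests_a if d in digests_b)

bank_ids = ["u1", "u2", "u3"]
hospital_ids = ["u2", "u3", "u4"]
common = align(bank_ids, hospital_ids)
print(common)
```

After alignment, each party keeps only the rows for the common IDs, in the same order, so that the feature columns held by different parties refer to the same samples.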

Vertical federated learning is suitable for scenarios where multiple devices share the same sample IDs but possess distinct features. It facilitates cross-device model collaboration while preserving data privacy. Through secure federated learning, vertical federated learning avoids direct sharing of raw data, thus safeguarding data privacy. Nevertheless, the limited data volume on each device may restrict the model’s generalization ability. Additionally, since data are not centralized on a central server, the model aggregation process may incur higher communication costs.

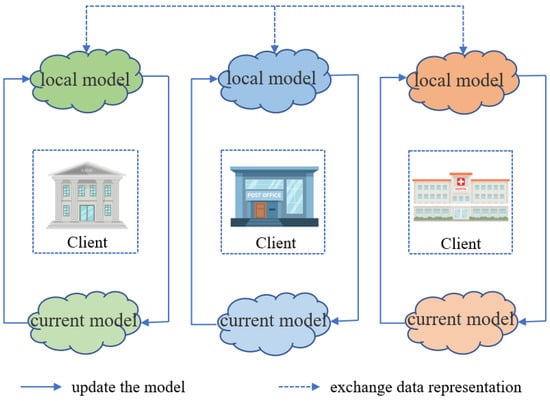

- Federated Transfer Learning

Federated transfer learning refers to the scenario where there is limited overlap in both sample IDs and features, such as different banks and hospitals in various regions. The core of federated transfer learning lies in the concept of “transfer learning”. Transfer learning utilizes the similarities between data, tasks, or models to apply a model learned in a source domain to a target domain. The prerequisites for federated transfer learning are the same as those for vertical federated learning: only one participant possesses labeled data. The learning process can be summarized as follows: the well-trained model parameters from a specific client in the current iteration are transferred to another client to assist in a new round of model training. Figure 6 illustrates the architecture of federated transfer learning.

Figure 6.

Federated transfer learning architecture.

In this learning framework, clients construct local models based on their own datasets. Subsequently, each client independently executes their local model, obtaining data representations and a set of intermediate results. These results are encrypted and sent to the respective counterpart. The counterpart utilizes the received intermediate results to compute the encrypted gradients and loss values of the model, incorporating a mask, and sends them back to the original client. After decrypting the received information, all parties send the decrypted model information back to the counterpart, and then each party updates their respective models using the decrypted information. In this process, each client effectively utilizes the counterpart’s current model and the potential data representations, thereby updating their local models and achieving a federated learning framework with transfer learning, referred to as federated transfer learning [33].

During federated transfer learning, protecting intermediate results and gradients is of paramount importance to ensure the privacy and security of user data. Two families of mechanisms are commonly used for this purpose.

(1) Encryption algorithms. Secure multi-party computation (SMPC) [34] is a widely used technique that enables computation among different devices while keeping the data encrypted, avoiding direct sharing of raw data. SMPC ensures the privacy of the computation because no device can access the private data of others. Homomorphic encryption [35] allows computation to be performed on encrypted data without the need for decryption. Devices can therefore send encrypted data to a central server, which performs computations on the ciphertext and returns encrypted results, thus avoiding the risk of data exposure.

(2) Masking techniques. Differential privacy [36] is a technique that allows data analysis while preserving privacy. In federated transfer learning, devices can apply random perturbation to their gradients to prevent the leakage of individual information. By adding noise to the gradients, the privacy of individual data can be protected.
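The masking idea can be illustrated with pairwise additive masks, a building block commonly used in secure aggregation. The sketch below is a simplification under stated assumptions: a single seeded generator stands in for the pairwise shared secrets that real protocols derive through key agreement, updates are scalars, and the function name is hypothetical.

```python
import random

def masked_updates(updates, seed=0):
    """Add a random mask to client i and subtract the same mask from client j, for every pair."""
    rng = random.Random(seed)  # assumption: stands in for pairwise shared secrets
    masked = list(updates)
    n = len(updates)
    for i in range(n):
        for j in range(i + 1, n):
            m = rng.uniform(-100.0, 100.0)
            masked[i] += m
            masked[j] -= m
    return masked

updates = [0.5, -1.25, 2.0]        # hypothetical per-client gradient values
masked = masked_updates(updates)   # what the server would actually receive
print(sum(masked), sum(updates))
```

Each masked value on its own reveals little about the underlying update, but the masks cancel in the sum, so the server still recovers the aggregate it needs.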

Federated transfer learning combines the strengths of federated learning and transfer learning, enabling model transfer among devices with different data distributions. It facilitates the transfer of models trained on one device to others for personalized learning, accelerating the adaptation of models to new device data. However, federated transfer learning may encounter the problem of insufficiently labeled data, leading to potentially less accurate models learned on target devices. Furthermore, effective model selection and tuning strategies are crucial for successful federated transfer learning as inadequate choices can lead to a decline in performance.

2.1.3. Challenges Faced by Federated Learning

The challenges of federated learning initially appear similar to classical problems in fields such as machine learning, yet they possess unique characteristics distinguishing them from other classical problems. For instance, in problems involving expensive communication in machine learning, numerous methods have been proposed to address them. However, these methods often fall short in handling the scale of federated networks, let alone the challenges arising from data heterogeneity. Similarly, while privacy is an essential aspect of many machine learning algorithms, privacy preservation methods in machine learning may not be applicable due to statistical variations in data. Moreover, the implementation of these methods may become even more difficult due to system constraints on each device and potential large-scale networks. This section aims to delve into these challenges in greater detail.

- Communication Requirements

Communication is a crucial bottleneck in federated networks. Privacy concerns about transmitting raw data compound the problem because they require data to remain local on each device. In fact, federated networks can comprise a multitude of devices, such as millions of smartphones, where network communication can be orders of magnitude slower than local computation [37,38]. Therefore, to accommodate data generated by devices within a federated network, it is imperative to develop communication-efficient methods that iteratively send small messages or model updates as part of the training process rather than transmitting the entire dataset over the network. To further reduce communication in such scenarios, two key aspects need to be considered: reducing the total number of communication rounds and decreasing the size of the messages transmitted in each round.

In federated learning, quantization, compression, and sparsification are vital communication optimization techniques that reduce data transmission and lower communication overhead. Quantization involves converting model parameters or gradients from high-precision representations to low-precision ones. Transmitting high-precision parameters or gradients requires more bits, resulting in increased communication costs. By applying quantization techniques, the values of parameters or gradients can be restricted to a smaller range, enabling representation with fewer bits. Common quantization methods include fixed-point quantization, floating-point quantization, and vector quantization. While quantization significantly reduces data transmission, it may introduce some information loss, necessitating a balance between quantization precision and communication overhead. Compression, unlike quantization, involves using more efficient encoding methods to represent model parameters or gradients, thus reducing the number of bits required for transmission. Compression techniques offer more flexibility in encoding, such as encoding algorithms and dictionary learning. Common compression methods include Huffman coding and run-length encoding. These compression techniques effectively reduce data transmission without introducing additional information loss. Sparsification entails setting elements in model parameters or gradients that are close to zero to zero, thereby reducing data transmission. In deep learning, model parameters are often high-dimensional and dense, but, during training, many parameters may approach zero and have minimal impact. Sparsification techniques zero out these insignificant parameters and transmit only the non-zero ones. Common sparsification methods include L1 regularization and pruning. 
These communication optimization techniques prove highly valuable in federated learning as they help to decrease communication overhead and improve the efficiency and performance of the federated learning process. However, selecting appropriate techniques depends on the specific application scenario and model requirements.
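Two of these techniques can be sketched on a toy gradient vector: uniform fixed-point quantization and magnitude-based (top-k) sparsification. The sketch is illustrative only; the function names, the 4-bit setting, and the example gradient are assumptions rather than values from the surveyed work.

```python
def quantize(values, bits=8):
    """Uniformly map floats onto integer codes in [0, 2**bits - 1]."""
    lo, hi = min(values), max(values)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels or 1.0  # avoid a zero scale when all values are equal
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale

def dequantize(codes, lo, scale):
    """Reconstruct approximate floats from the integer codes."""
    return [lo + c * scale for c in codes]

def top_k_sparsify(values, k):
    """Keep only the k largest-magnitude entries as an {index: value} map."""
    keep = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)[:k]
    return {i: values[i] for i in sorted(keep)}

grad = [0.02, -1.5, 0.001, 0.7, -0.03]
codes, lo, scale = quantize(grad, bits=4)
approx = dequantize(codes, lo, scale)
sparse = top_k_sparsify(grad, k=2)
print(codes, sparse)
```

Quantization sends every entry with fewer bits and a reconstruction error of at most half a quantization step, whereas sparsification sends only a few entries at full precision; the two are often combined.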

- Non-Independent and Identically Distributed (Non-IID) Data

Due to variations in identity, personality, and environment, the datasets generated by users may differ significantly. Consequently, the training samples are not uniformly and randomly distributed across data nodes [39,40]; that is, the data are non-independent and identically distributed (non-IID). Such imbalanced data distribution can lead to substantial biases in model performance across devices. In FL, the two common data distribution scenarios are IID and non-IID, and they affect the functioning of federated learning differently, as shown in Table 1. Several methods can alleviate the impact of data imbalance and optimize model aggregation under non-IID data.

(1) Local training strategies. Clients in federated learning can adopt various local training strategies to cope with data imbalance. For instance, employing class-balanced sampling on each client ensures sufficient training data for every class. Moreover, a class-weighted loss function assigns greater weight to rare classes, which improves learning of these classes.

(2) Aggregation strategies. During model aggregation, different strategies can be employed to optimize the process in non-IID environments. A prevalent method is the weighted average, where each client’s model parameters are assigned distinct weights based on its data distribution. This enables clients with more balanced data distributions to have a more significant impact on the aggregation result. Another approach involves using approximate federated learning methods, such as FedAvg+, which introduces a client importance evaluation factor based on weighted averages to further optimize the aggregation process.

(3) Model personalization. Data imbalance in non-IID environments can lead to inferior model performance on certain clients. To address this issue, model personalization can be applied: allowing each client to fine-tune the global model on its own data can improve performance on that client.
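As an example of a local training strategy, the sketch below computes inverse-frequency class weights and applies them to per-sample losses. It is a minimal illustration; the weighting formula is one common heuristic, and the function names, label names, and loss values are hypothetical.

```python
from collections import Counter

def class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def weighted_mean_loss(losses, labels, weights):
    """Average per-sample losses, scaling each by the weight of its class."""
    total_weight = sum(weights[y] for y in labels)
    return sum(weights[y] * l for l, y in zip(losses, labels)) / total_weight

# Hypothetical client with few malicious samples.
labels = ["normal"] * 95 + ["attack"] * 5
weights = class_weights(labels)

# Suppose the model fits normal traffic well but attack traffic poorly.
losses = [0.1] * 95 + [2.0] * 5
print(weights, weighted_mean_loss(losses, labels, weights))
```

With these weights the rare class contributes as much to the objective as the frequent one, so errors on malicious samples are no longer drowned out by the many normal samples.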

Table 1.

How federated learning works under IID and non-IID data.

- 3. Privacy Protection

Privacy is a fundamental attribute of federated learning, which achieves data protection by sharing model updates, such as gradient information, rather than raw data generated on each device [41,42,43]. However, transmitting model updates throughout the training process can potentially leak sensitive information to third parties or central servers [44]. While there are various methods for privacy protection during data exchange, these approaches often come at the cost of compromising model performance or system efficiency. Striking a balance between privacy and performance is a significant challenge for federated learning systems, both in theory and practice. For example, one approach is to protect user data privacy during machine learning by employing encryption mechanisms for parameter exchange [7]. Encryption is a method of transforming data into ciphertext to ensure it remains secure during transmission and storage. In federated learning, encryption techniques can be used to encrypt model parameters or updates, ensuring the confidentiality of sensitive information. However, the encryption and decryption processes may introduce computational and communication overhead, affecting system efficiency. Another method is to use differential privacy to safeguard data [34,44,45,46]. Differential privacy involves adding noise during data processing to protect individual data privacy. In the context of federated learning, differential privacy can be applied to protect local model updates or gradient information on the clients, effectively preventing privacy attacks targeting individual clients. However, the addition of noise may have an impact on the accuracy of the model, especially when dealing with small datasets.
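As a rough illustration of how noise can be added to a local model update, the sketch below clips the update to a maximum L2 norm and then adds Gaussian noise. The names `clip_norm` and `noise_multiplier` are assumptions made for this example, and the sketch does not compute the resulting privacy budget, so it should not be read as a complete differential privacy mechanism.

```python
import math
import random


def privatize_update(update, clip_norm, noise_multiplier, rng):
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    clipped = [u * scale for u in update]
    sigma = noise_multiplier * clip_norm  # noise scale tied to the clipping bound
    return [c + rng.gauss(0.0, sigma) for c in clipped]


rng = random.Random(0)
update = [3.0, 4.0]  # hypothetical local update with L2 norm 5
clipped_only = privatize_update(update, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
noisy = privatize_update(update, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

A larger `noise_multiplier` gives stronger protection but perturbs the update more, which is the accuracy trade-off noted above.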

- 4. Intermittent Behavior of Remote Clients

In federated learning (FL) technology, intermittent behavior of remote clients refers to situations where participating clients may intermittently fail to engage stably in model training. This intermittence may occur due to the following reasons: (1) unstable network connections: remote clients may operate in environments with unstable or low-bandwidth network connections, which can impede their timely communication with the central server for model parameter uploading and downloading. (2) Energy constraints: mobile devices and other remote clients are often limited by energy constraints, particularly when using wireless networks. Frequent data transmission and model training can consume a significant amount of power, restricting their participation frequency. (3) Limited computational capabilities: some remote clients may possess lower computational power, hindering their ability to perform complex model training tasks quickly. (4) Privacy and security concerns: certain remote clients may hold sensitive personal data, and, due to privacy and security considerations, they may choose to limit data interactions with the central server.

Intermittent behavior can significantly affect the performance of federated learning in the following ways: (1) reduced convergence speed: intermittent client participation may lead to missed training rounds, resulting in the discontinuity of model updates. The lack of continuity in model parameters can lead to unstable updates of the global model, requiring more training rounds to achieve convergence and consequently slowing down the convergence speed. (2) Unstable model performance: the intermittent participation of clients can cause fluctuations in model performance. When certain clients remain offline or do not participate in training for extended periods, their local data information may not be timely integrated into the global model, leading to unstable model performance. (3) Limited generalization ability: if intermittent clients have different data distributions compared to other clients, it may cause issues with data distribution imbalance, thus affecting the model’s generalization ability. (4) Load imbalance: the existence of intermittent clients may lead to load imbalance within the system, with some clients handling more computational and communication tasks, consequently impacting the overall efficiency and performance of the system.

Given the intermittent behavior of remote clients in federated learning, addressing such instability becomes crucial. Several potential solutions include (1) model update strategies: the central server can adopt flexible model update strategies to promptly update models when remote clients are available and perform certain levels of model parameter interpolation and imputation when they are not accessible. (2) Priority settings: for clients with lower participation rates, priority settings can be established to ensure higher-participating clients contribute more significantly to model updates. (3) Incremental learning: model training can be divided into multiple steps, communicating with as many clients as possible in each step to better adapt to intermittent behavior. (4) Local learning and aggregation: certain federated learning algorithms allow some degree of learning and model updates on local devices before aggregating model parameters on the central server. In conclusion, federated learning technology needs to consider the intermittent behavior of remote clients and adopt appropriate strategies to optimize the model training and parameter aggregation process, thereby improving the overall efficiency and performance of the system.

2.2. Meta-Learning

2.2.1. The Concept of Meta-Learning

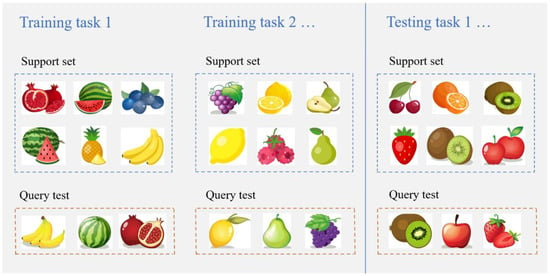

The success of machine learning is driven by handcrafted feature design [47,48]. It typically involves the use of manually designed fixed learning algorithms that are trained from scratch for specific tasks. Deep learning has achieved feature representation learning [49] and has shown significant improvements in performance across numerous tasks [50,51,52]. However, it also exhibits some evident limitations [52]. For instance, deep learning training often requires a large amount of data, rendering it impractical for applications with scarce or costly data [53] or when computational resources are limited [54]. Additionally, training for related tasks necessitates starting from scratch, which incurs substantial time and resource expenses. Meta-learning (ML) aims to replace previous handcrafted learners with algorithms that can “learn to learn” [55,56,57,58]. It involves extracting experience from multiple learning tasks, typically encompassing the distribution of related tasks, and utilizing this experience to enhance future learning performance. In meta-learning, the training set and the test set are referred to as the meta-training set and the meta-test set, respectively. The meta-training set consists of a support set and a query set, while the meta-test set also contains both a support set and a query set. During meta-training, a model is trained using the support set from the meta-training set, and its performance is evaluated using the query set. In the training of multiple tasks, the model’s performance is assessed by calculating the overall loss across multiple tasks, and both model parameters and algorithm parameters are simultaneously updated to obtain an algorithm that can be applied to various tasks. In meta-testing, the algorithm is updated on the support set for several steps to obtain a model capable of handling new tasks, and this model makes final predictions on the query set, as illustrated in Figure 7.

Figure 7.

Schematic diagram of meta-learning training.
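The support/query structure described above can be sketched as follows. The example assumes the common N-way K-shot setup, in which each task draws a few classes and splits their samples into a support set and a query set; the function `sample_episode` and the toy integer dataset are hypothetical.

```python
import random


def sample_episode(data_by_class, n_way, k_shot, q_queries, rng):
    """Build one task: returns (support, query) as lists of (example, label)."""
    classes = rng.sample(sorted(data_by_class), n_way)
    support, query = [], []
    for label in classes:
        examples = rng.sample(data_by_class[label], k_shot + q_queries)
        support += [(x, label) for x in examples[:k_shot]]   # used to adapt the model
        query += [(x, label) for x in examples[k_shot:]]     # used to evaluate the adapted model
    return support, query


rng = random.Random(0)
# Toy dataset: 5 classes with 10 integer "examples" each.
data = {c: [c * 100 + i for i in range(10)] for c in range(5)}
support, query = sample_episode(data, n_way=3, k_shot=2, q_queries=4, rng=rng)
```

Because support and query samples are drawn without replacement, no example appears in both sets of the same task.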

Meta-learning is a driving force in contemporary deep learning, particularly because it has the potential to alleviate several key issues in modern deep learning [59], such as data efficiency, knowledge transfer, and unsupervised learning. Meta-learning has proven useful in both multi-task and single-task scenarios. In multi-task scenarios, task-agnostic knowledge is extracted from a series of tasks and used to improve learning for new tasks in the series [55,60]. In single-task scenarios, a single problem is repeatedly solved and improved across multiple iterations [57,61,62,63]. Meta-learning has been successfully applied in various domains, including few-shot image recognition [60,64], unsupervised learning [58], data efficiency [65,66], autonomous reinforcement learning (RL) [67], hyperparameter optimization [61], and neural architecture search (NAS) [62,68,69].

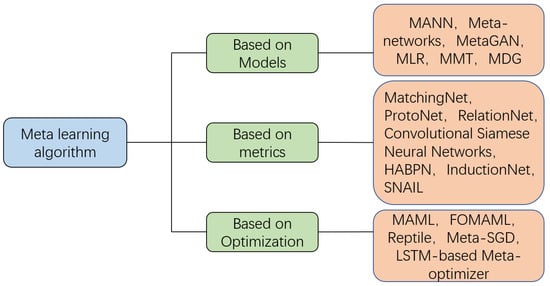

2.2.2. The Methods and Applications of Meta-Learning

Most existing meta-learning research can be classified into three main categories based on the methods employed: model-based, metric-based, and optimization-based, as shown in Figure 8.

Figure 8.

Meta-learning algorithm classification diagram.

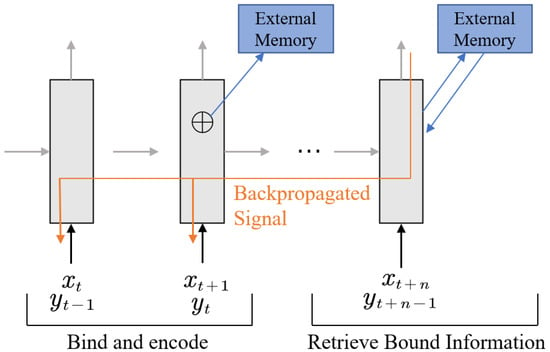

Based on Models: This category of meta-learning involves the direct generation of models. It relies on the use of recurrent networks with external or internal memory to generate a model prior based on previous task experiences, which is then used for learning new tasks. One example of model-based meta-learning is meta-learning with memory-augmented neural network (MANN) [70], which incorporates external memory to remember the previous data inputs without label information. This allows establishing the connection between input data and labels during the backpropagation process after completing the next input, enabling subsequent input data to be compared with relevant historical data retrieved from the external memory. Consequently, the external memory accumulates a wealth of historical experiences, which empowers the model to rapidly learn new tasks, as depicted in Figure 9. Other notable achievements in model-based meta-learning include meta-networks, meta-learning of generative adversarial network based on data enhancement (MetaGAN) [71], multi-response linear regression (MLR), multi-model trees (MMT), and meta decision tree (MDT) [72].

Figure 9.

MANN schematic diagram. Adapted from Santoro et al. (2016) [70].

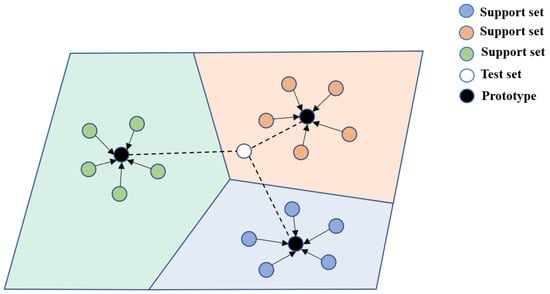

Based on Metrics: This approach models the meta-learning problem as a metric learning problem. In metric-based meta-learning, algorithms infer the direction of model parameter updates by learning how to measure the similarity between tasks. Typically, previous task experiences are used to learn distance metrics (such as Euclidean distance [73] or cosine similarity [74]), which are then applied to new tasks to compare similarities. One metric-based meta-learning method is MatchingNet [75], proposed by Vinyals et al.: after feature extraction, the support set is projected onto an embedding space and compared using cosine similarity, enabling classification based on the computed matching degree of the test samples. Another method is ProtoNet [64], introduced by Snell et al.: the support set is projected into a metric space, where a prototype for each class is obtained as the mean vector of that class’s embedded support samples. The Euclidean distances between the test samples and each prototype are then computed to achieve classification, as shown in Figure 10. Sung et al. proposed RelationNet [76], where a relation module replaces the cosine similarity and Euclidean distance measurements used in MatchingNet and ProtoNet, making it a nonlinear classifier that learns to determine relationships for classification. Additionally, there have been new advancements, such as convolutional Siamese neural networks, HABPN [77], InductionNet [78], and SNAIL [79].

Figure 10.

ProtoNet schematic diagram. Adapted from Snell et al. (2017) [64].

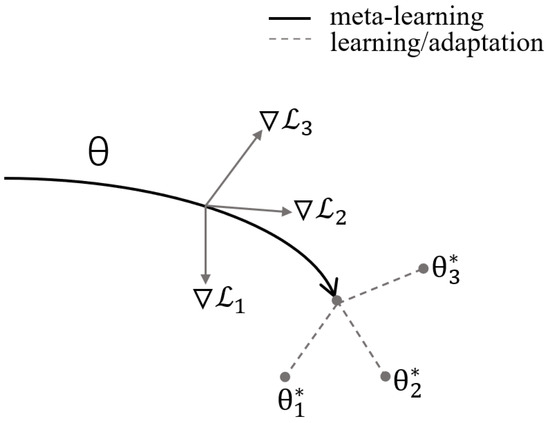
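The prototype-based classification used by ProtoNet can be sketched in a few lines. The example assumes the embeddings have already been produced by some feature extractor, which is omitted here; the two-dimensional vectors and the labels "benign" and "attack" are purely illustrative.

```python
def prototypes(support):
    """support: list of (embedding, label). Returns {label: mean embedding}."""
    sums, counts = {}, {}
    for emb, label in support:
        acc = sums.setdefault(label, [0.0] * len(emb))
        for j, v in enumerate(emb):
            acc[j] += v
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(query_emb, protos):
    """Assign the label of the nearest prototype under squared Euclidean distance."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(protos, key=lambda label: sq_dist(query_emb, protos[label]))


support = [([0.0, 0.0], "benign"), ([0.2, 0.0], "benign"),
           ([1.0, 1.0], "attack"), ([1.2, 0.8], "attack")]
protos = prototypes(support)
pred = classify([0.9, 1.1], protos)
```

Only the class means need to be stored, so adding a new class requires no retraining of the classifier head, only a new prototype.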

Based on Optimization: This approach transforms the meta-learning problem into an optimization problem. In optimization-based meta-learning, algorithms enhance the generalization ability of models by learning how to adjust the model’s initial state or parameter update rules. By optimizing the learning algorithm itself, the model becomes capable of adapting to new tasks and achieving better performance. Typically, model parameters are optimized using gradient descent or reinforcement learning. One notable algorithm of this kind is model-agnostic meta-learning (MAML), proposed by Finn et al. [60]. MAML directly optimizes the model initialization by iteratively sampling different tasks during training to update the model’s parameters. This allows the model to learn a well-suited initial parameter θ, facilitating faster convergence when adapting to new tasks. Specifically, the training process of MAML consists of two stages. In the first stage, model parameters are initialized randomly, and the model is trained on multiple tasks, improving its performance on each task using gradient descent. In the second stage, for each new task, the parameters learned in the first stage serve as the starting point, and a few gradient descent steps are taken to update the model parameters, enabling adaptation to the new task, as depicted in Figure 11. The advantage of MAML is that it requires no prior knowledge about new tasks, as it only needs to learn a well-suited initial parameter during the training phase. Additionally, MAML is applicable to various types of deep learning models since it does not rely on any specific model architecture, hence the term “model-agnostic”. MAML is now one of the most mature meta-learning algorithms available. 
Apart from MAML, other optimization-based meta-learning methods include first-order model-agnostic meta-learning (FOMAML) [80], Reptile [80], Meta-SGD [81], and more. Furthermore, following long short-term memory (LSTM) technology [82], Ravi et al. introduced an LSTM-based meta-optimizer [56], which has gained significant research attention and application.

Figure 11.

MAML schematic diagram. Adapted from Finn et al. (2017) [60].
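The MAML update can be written compactly as follows. The step sizes α and β, the task loss L, and the task distribution p(T) follow the usual notation of Finn et al. and are introduced here for illustration, while θ is the initial parameter discussed above.

```latex
% Inner (task-level) adaptation for each sampled task T_i:
\theta_i' = \theta - \alpha \, \nabla_{\theta} \mathcal{L}_{T_i}(f_{\theta})

% Outer (meta-level) update of the shared initialization:
\theta \leftarrow \theta - \beta \, \nabla_{\theta} \sum_{T_i \sim p(T)} \mathcal{L}_{T_i}\bigl(f_{\theta_i'}\bigr)
```

The outer gradient is taken with respect to θ through the inner step, which is why the full algorithm involves second-order derivatives; first-order variants such as FOMAML drop that term.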

The three meta-learning methods each possess distinct characteristics, suitable for different problems and application scenarios. Model-based meta-learning methods exhibit high internal dynamism and broader applicability compared to metric-based meta-learning methods while also achieving better performance. However, they typically require substantial computational resources and sample data and exhibit strong dependence on network structures. The design of network structures relies on the characteristics of the tasks at hand, necessitating a redesign for tasks with significant variations. On the other hand, optimization-based meta-learning methods are relatively simple and computationally efficient. Nevertheless, they may be sensitive to initial states and parameter update rules. When using gradient descent to update weights, due to limitations imposed by the choice of optimizer (e.g., SGD, Adam) and learning rate settings, multiple steps are usually required to reach an optimal point. Consequently, the learning process is slow when the model encounters new tasks. Additionally, in the case of training with small sample sizes, the weight updating process is prone to overfitting. Metric-based meta-learning methods typically demonstrate good generalization capabilities, making them suitable for scenarios involving few-shot learning and zero-shot learning. When the number of tasks is small, the network does not require specific task adjustments, resulting in fast predictions. However, these methods exhibit low robustness and are relatively picky about the dataset. Moreover, when the number of tasks is large, pairwise comparisons become expensive, and they heavily rely on labels, limiting their applicability to supervised environments.

3. Overview of Federated Meta-Learning

3.1. The Concept of Federated Meta-Learning

Federated learning is a distributed learning approach that safeguards data privacy and security. It involves training a shared data model by solely exchanging model parameters without sharing node data [83,84]. However, in federated learning, the non-uniform distribution of training samples among different data nodes, due to potential differences between datasets, can lead to significant performance biases across devices. This creates a substantial demand for addressing the training of heterogeneous data in federated learning. Meta-learning aims to learn and extract transferrable knowledge from previous tasks to train a well-initialized model capable of quickly adapting to new tasks, thereby preventing overfitting and facilitating rapid adaptation to the inconsistent data distributions in federated learning. Consequently, employing meta-learning to train a personalized federated learning model becomes a viable solution. Meta-learning achieves this by training separate data models for different data nodes, allowing for direct capture of the imbalanced data relationships between clients, making it well-suited for addressing the data imbalance issue in federated learning.

In federated learning, the utilization of meta-learning algorithms to train personalized models for each client, reducing the performance disparities of the models across different clients and enhancing model fairness, is referred to as federated meta-learning [80]. Federated meta-learning treats each client as a task and trains a well-initialized model that can quickly adapt to new tasks on the client side through a few simple steps of gradient descent. Due to its ability to rapidly adapt to new tasks, meta-learning exhibits tremendous potential in addressing the systemic and statistical challenges posed by federated settings [85].

3.2. Federated Meta-Learning Algorithm

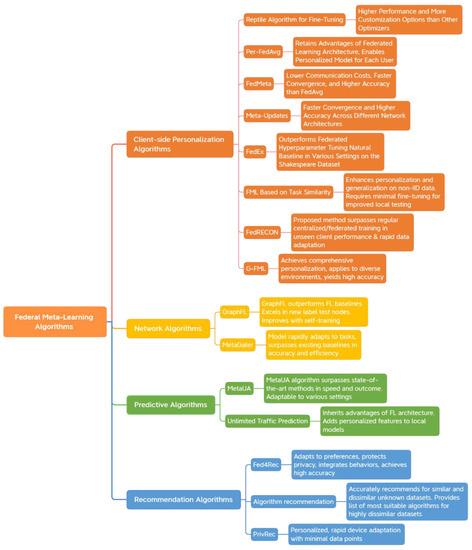

Federated learning aims to train a global model by leveraging data from different clients while protecting the privacy of each client. However, there are significant variations in the local data across different clients, making it impractical to rely on a single global model that satisfies all client needs. Furthermore, traditional federated learning methods have limitations in handling heterogeneous data, new label domains, and unlabeled data. These methods are unable to adapt quickly to different tasks and require retraining from scratch, which is both time-consuming and costly. To address these challenges, several federated meta-learning algorithms have been developed. In this section, we categorize federated meta-learning algorithms into four types: client-personalized algorithms, network algorithms, prediction algorithms, and recommendation algorithms. Figure 12 illustrates the classification framework of federated meta-learning algorithms and highlights the advantages of each algorithm.

Figure 12.

FML algorithm classification framework diagram.

3.2.1. Client-Side Personalization Algorithms

Although utilizing data from multiple clients can enhance the performance of models compared to using only local data for training, in the context of federated learning, different clients may experience significant variations in their local data due to geographical differences and cultural influences, resulting in non-identical and imbalanced data distributions. These disparities in data sources and quantities can lead to significant performance biases across different clients, making the globally trained model unsuitable for all clients. Moreover, clients have their own objectives and interests, making a single global model inadequate for meeting all their needs. To address the issue of data heterogeneity in federated learning, personalized models need to be provided for different clients through federated meta-learning. Personalized algorithms for clients involve tailored model training based on specific data distributions and task requirements. This approach maximizes the utilization of local data characteristics, enhancing model performance and adaptability on each client. The aim is to improve both the personalization capability and overall performance of federated learning, enabling customization of model training based on the unique data characteristics of each client while safeguarding data privacy and improving resource utilization efficiency.

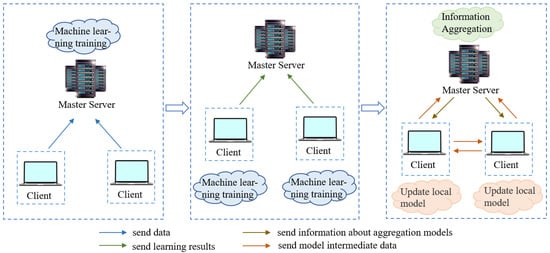

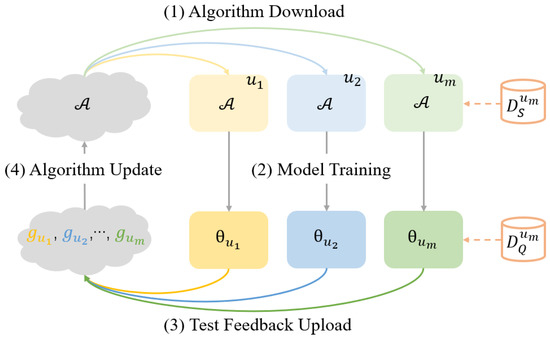

Jiang et al. [86] proposed a solution in 2019 that inserts a Reptile [80] fine-tuning phase after the federated averaging algorithm. By carefully fine-tuning the global model, its accuracy can be improved, making it more amenable to personalization. This solution consists of two stages: training and fine-tuning, with personalized optimization performed using the same client optimizer. The main difference between this method and other federated meta-learning algorithms is that it introduces meta-learning as a fine-tuning phase after the federated learning process rather than incorporating the meta-learning algorithm as part of the entire federated learning process. The experimental results demonstrate that this approach outperforms other optimizers. Subsequently, Chen et al. [85] proposed the first federated meta-learning framework, called FedMeta. In this framework, each client is treated as a task, aiming to train a well-initialized global model rather than a globally optimal model. The algorithm is maintained on the server and distributed to clients for model training. In each round of meta-training, a batch of sampled clients receives the algorithm’s parameters and performs model training on the support set, after which the test results on the query set are uploaded to the server for algorithm updates. The workflow is depicted in Figure 13. FedMeta utilizes a shared meta-learner in place of a shared global model in federated learning, allowing different meta-learning algorithms to be applied flexibly in the federated learning system. Experiments demonstrate that, compared to federated averaging (FedAvg), FedMeta reduces the required communication costs by 2.82–4.33 times, achieves faster convergence, and improves accuracy by 3.23–14.84%.

Figure 13.

FedMeta framework workflow. Adapted from Chen et al. (2019) [85].
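The round structure described above (sample clients, adapt on the support set, evaluate on the query set, update on the server) can be sketched on a deliberately tiny problem. This is an illustrative assumption rather than the FedMeta implementation: the model is a single scalar, each client's loss is 0.5 times the mean of (θ − x)², and the names `client_meta_gradient` and `server_round` are hypothetical.

```python
import random


def client_meta_gradient(theta, support, query, alpha):
    """One MAML-style step for a scalar model with loss 0.5 * mean((theta - x)^2)."""
    s_mean = sum(support) / len(support)
    q_mean = sum(query) / len(query)
    adapted = theta - alpha * (theta - s_mean)  # inner step on the support set
    query_grad = adapted - q_mean               # gradient of the query loss at the adapted model
    return (1.0 - alpha) * query_grad           # chain rule: d(adapted)/d(theta) = 1 - alpha


def server_round(theta, clients, alpha, beta, rng, batch_size):
    """Sample clients, collect their meta-gradients, and update the shared initialization."""
    sampled = rng.sample(clients, batch_size)
    grads = [client_meta_gradient(theta, s, q, alpha) for s, q in sampled]
    return theta - beta * sum(grads) / len(grads)


rng = random.Random(0)
clients = []
for center in [-2.0, 0.0, 1.0, 5.0]:  # heterogeneous (non-IID) client data
    pts = [center + rng.gauss(0.0, 0.1) for _ in range(20)]
    clients.append((pts[:10], pts[10:]))  # (support set, query set)

theta = 10.0
for _ in range(200):
    theta = server_round(theta, clients, alpha=0.5, beta=0.5, rng=rng, batch_size=2)
```

Only meta-gradients leave the clients in this sketch; the raw support and query samples stay local.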

Fallah et al. [87] conducted a study in 2020 on a personalized variant of federated learning called Per-FedAvg. The objective was to find an initial shared model that could be easily adapted to their local dataset by current or new users through performing one or several gradient descent steps on their own data. This approach retains all the advantages of the federated learning architecture while providing a more personalized model for each user. Per-FedAvg was investigated within the MAML framework and evaluated based on the gradient norm of non-convex loss functions. The experimental results demonstrate that Per-FedAvg offers a more personalized solution compared to FedAvg. Subsequently, Yao et al. [88] employed gradient descent tracking and gradient evaluation strategies to reduce the gradient bias caused by local updates. They introduced a meta-update process that facilitates optimization towards the target distribution using a controlled meta-training set. The experimental results indicate that this method achieves faster convergence and higher accuracy in various federated learning settings. Inspired by the neural architecture search (NAS) technique of weight sharing, Khodak et al. [89] proposed a practical method for federated hyperparameter optimization called FedEx, which is based on extended gradient updates. This method simultaneously optimizes multiple neural networks with the same parameters, accelerating the adjustment of algorithms such as FedAvg in the context of personalized federated learning. FedEx is applicable to several popular federated learning algorithms, and its performance is measured using the final test accuracy from five random experiments. The algorithm outperforms the federated hyperparameter tuning baseline by 1.0–1.9% in several different settings on the Shakespeare dataset. In the same year, Zhang et al. 
[90] introduced an alternative approach called FedFoMo, where each client collaborates only with other relevant clients to obtain a more specific target model for each client. To achieve this personalization, FedFoMo no longer learns a single average model but calculates the inter-client model relationships and determines the best model combination based on the influences among clients. Different meta-models are then sent to client groups using n server meta-models. FedFoMo allows each client to optimize for any desired target distribution, providing greater flexibility for personalization. This method outperforms existing alternatives and introduces new capabilities for personalized federated learning, including transfer beyond local data distributions.
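For reference, the Per-FedAvg idea of finding a shared initialization that performs well after one local gradient step can be stated as an optimization problem. The notation below (n clients, local loss f_i, step size α, shared parameters w) is introduced here for illustration.

```latex
\min_{w} \; F(w) := \frac{1}{n} \sum_{i=1}^{n} f_i\bigl(w - \alpha \nabla f_i(w)\bigr)
```

Setting α = 0 recovers the standard federated averaging objective, which makes the personalization term explicit.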

Balakrishnan et al. [91] developed a task-similarity-based federated meta-learning solution that allows for training model initializers in a resource-efficient manner when dealing with a range of client data distributions. The resulting model initializers significantly improve the local testing accuracy of clients with only a few fine-tuning steps. This approach serves as an effective initialization example for federated learning, enhancing the personalization and generalization of non-IID data models. The performance of this method surpasses that of well-known federated averaging algorithms and their variants on benchmark federated learning datasets. Similarly, in the same year, Singhal et al. [92] proposed a model-agnostic local federated reconstruction algorithm, FedRECON, where model parameters are divided into global parameters and sensitive local parameters. Local parameters are not shared outside the client during the training process, thus preserving client data privacy. This method utilizes meta-learning to train global parameters capable of quickly reconstructing local parameters, which are then reconstructed on the client side using the global parameters. The experimental results demonstrate that FedRECON outperforms standard centralized training methods and federated methods in terms of performance for unseen clients, quickly adapting to individual client data, matching the performance of other federated personalization techniques, and minimizing communication overhead.
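The split between shared global parameters and private local parameters can be sketched on a toy linear model. This is a simplified illustration under stated assumptions, not the FedRECON implementation: the model is y = g·x + b, where the slope g is global and the offset b is local, and all function names and hyperparameters are hypothetical.

```python
def reconstruct_local(g, support, steps, lr):
    """Rebuild the private offset b from scratch with the global slope g frozen."""
    b = 0.0
    for _ in range(steps):
        grad_b = sum(g * x + b - y for x, y in support) / len(support)
        b -= lr * grad_b
    return b


def global_gradient(g, b, query):
    """Gradient of 0.5 * mean squared error with respect to g, with b held fixed."""
    return sum((g * x + b - y) * x for x, y in query) / len(query)


def client_update(g, support, query, steps=50, lr=0.5):
    b = reconstruct_local(g, support, steps, lr)  # b never leaves the client
    return global_gradient(g, b, query)           # only this value is sent to the server


def make_client(offset):
    """Hypothetical client: shared slope 2.0, private offset."""
    xs = [-2.0, -1.0, 0.0, 1.0, 2.0, 3.0]
    pts = [(x, 2.0 * x + offset) for x in xs]
    return pts[::2], pts[1::2]  # (support set, query set)


clients = [make_client(-3.0), make_client(4.0)]
g = 0.0
for _ in range(300):
    grads = [client_update(g, s, q) for s, q in clients]
    g -= 0.1 * sum(grads) / len(grads)  # server averages the global-parameter gradients
```

In this toy setting the server recovers the shared slope even though it never sees either client's offset, which is the privacy property the paragraph above describes.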

In 2023, Yang et al. [93] proposed G-FML, a group-based federated meta-learning framework. This framework adaptively divides clients into different groups based on the similarity of their data distributions and obtains personalized models within each group through meta-learning. The grouping mechanism ensures that each group consists of clients with similar data distributions, thereby achieving overall personalization. This framework can be extended to highly heterogeneous environments and has been effectively validated on three heterogeneous benchmark datasets. The experimental results show that G-FML achieves a 13.15% improvement in model accuracy compared to state-of-the-art federated meta-learning approaches.

3.2.2. Network Algorithms

The federated meta-learning network algorithm combines meta-learning techniques within the framework of federated learning to achieve rapid adaptation and efficient model updates. Federated learning addresses the challenges of distributed data privacy and data collection, while meta-learning focuses on fast adaptation to new tasks. The aim of the federated meta-learning network algorithm is to address data collection, labeling, and privacy protection challenges in real-world graph-based problems, as well as efficient learning and rapid adaptation to different tasks on resource-constrained nodes.

The existing centralized approaches face difficulties in addressing data collection, labeling, and privacy protection issues in semi-supervised node classification problems on graphs. At the same time, traditional federated learning methods have limitations in handling non-IID data across clients, data with new label domains, and unlabeled data. To tackle these challenges, Wang et al. proposed GraphFL [94] in 2020, the first FL framework for semi-supervised node classification on graphs. In this framework, two MAML-inspired GraphFL methods were introduced to handle heterogeneous data and data with new label domains, and self-training was used to exploit unlabeled data. For heterogeneous data, MAML was integrated into FL because it does not require the data to be IID. This method consists of two stages. First, a global model is learned on the server by following the training scheme of MAML, which helps to mitigate the issues caused by non-IID graph data. Then, existing FL methods are used to further update the global model, enabling good generalization on test nodes. For data with new label domains, MAML was reformulated within the FL framework, and a new objective function, different from those of existing FL methods, was defined. This allows a shared global model to be learned on the server for all clients and rapidly adapted to test nodes whose label domains differ from those of the training nodes. For unlabeled data, local models are trained on each client using the GraphSSC method with the client’s labeled nodes, and these local models are then used to predict the client’s unlabeled nodes. The unlabeled nodes with the most confident predictions are selected, and their predicted labels are used as additional labeled nodes to train the GraphSSC method. 
The experimental results demonstrate that the GraphFL framework significantly outperforms the compared FL baselines and exhibits better capability in handling test nodes with new label domains. Additionally, GraphFL with self-training achieves improved performance.
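The self-training step, in which the most confident predictions on unlabeled nodes become additional labels, can be sketched generically. The function `select_pseudo_labels`, the `top_k` cutoff, and the toy probabilities are assumptions for illustration; the selection rule actually used in GraphFL may differ.

```python
def select_pseudo_labels(predictions, top_k):
    """predictions: {node_id: [class probabilities]}.

    Returns {node_id: predicted class} for the top_k most confident nodes.
    """
    scored = []
    for node, probs in predictions.items():
        label = max(range(len(probs)), key=probs.__getitem__)
        scored.append((probs[label], node, label))
    scored.sort(reverse=True)  # highest confidence first
    return {node: label for _, node, label in scored[:top_k]}


# Hypothetical local-model outputs for four unlabeled nodes.
preds = {"n1": [0.55, 0.45], "n2": [0.05, 0.95], "n3": [0.90, 0.10], "n4": [0.50, 0.50]}
pseudo = select_pseudo_labels(preds, top_k=2)
```

The selected nodes would then be merged into the client's labeled set before the next round of local training.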

The deployment of deep learning on resource-constrained nodes faces significant challenges due to the enormous model size and intensive computational requirements, and most existing techniques cannot rapidly adapt to different tasks. To enable efficient learning on resource-constrained nodes, where subnetworks for each new task can quickly adapt and incur minimal training cost, Lin et al. developed a federated meta-learning method called MetaGater [95] in 2021. This method leverages the model similarity between learning tasks across different nodes to collectively learn good meta-initializations for the backbone network and gating module. In this way, the meta-gating module can effectively capture important filters of the meta-backbone network, enabling the conditional channel gating network to rapidly adapt to specific tasks. In summary, MetaGater can quickly acquire task-specific subnetworks with higher accuracy and improve the differences between task models after rapid adaptation. In other words, MetaGater jointly learns a task-sensitive meta-backbone network and meta-gating module, leading to efficient inference through the rapid adaptation of model parameters. This method demonstrates clear superiority over existing baselines in terms of accuracy and efficiency.

3.2.3. Predictive Algorithms

The federated meta-learning prediction algorithm combines federated learning and meta-learning techniques to address the challenges in click-through rate (CTR) prediction and wireless traffic prediction. It achieves personalized and efficient model updates while reducing communication costs.

In federated learning for CTR prediction, client devices train locally and only communicate model changes to the server, without sharing user data, to ensure privacy protection. However, this approach faces two main challenges: (1) the heterogeneity of client devices slows down the progress of FL algorithms that use weighted averaging to aggregate model parameters, leading to suboptimal learning outcomes. (2) Adjusting the server learning rate using a trial-and-error method is extremely difficult due to the significant computation time and resources required for each experiment. To tackle these challenges, Liu et al. proposed an online meta-learning method called MetaUA in 2022 [96]. It is a learning strategy that aggregates local model updates in CTR prediction federated models. This strategy adaptively weighs the importance of client devices based on their attributes and adjusts the update step size. MetaUA adjusts in an online manner suitable for FL communication rounds, without requiring additional communication from clients. The experimental results demonstrate that MetaUA outperforms previous state-of-the-art methods in terms of convergence speed and final results under various settings.

Wireless traffic prediction [97,98] is one of the key elements in 6G networks [99,100] as proactive resource allocation and green communication heavily rely on accurate predictions of future traffic states. To avoid training from scratch and construct personalized models for geographically distributed heterogeneous datasets, Zhang et al. introduced MAML into the FL framework for wireless traffic prediction in 2022 [101]. This enables efficient wireless traffic prediction at the edge. Specifically, a sensitive initial model is trained, which can quickly adapt to heterogeneous scenarios in different regions. The global model undergoes only one or a few fine-tuning steps on local datasets to effectively adapt to heterogeneous local scenarios. Additionally, a distance-based weighted model aggregation is proposed and integrated to capture dependencies between different regions, achieving better spatiotemporal prediction. This approach inherits all the advantages of the FL architecture while ensuring personalized features for each local model. Extensive simulation results demonstrate that this approach outperforms traditional federated learning methods and other commonly used traffic prediction benchmarks.
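The local adaptation step described above, in which a shared initial model takes one or a few gradient steps on a region's own data, can be sketched as follows. The one-dimensional linear model, the learning rate, and the toy data are assumptions chosen for illustration; they are not taken from the cited work, and the distance-based aggregation is not shown.

```python
def mse(w, b, data):
    # Mean squared error of the linear predictor y = w * x + b.
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, steps=3):
    # A few plain gradient-descent steps starting from the shared initialization.
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

# Hypothetical shared initialization and one region's local traffic samples.
global_w, global_b = 1.0, 0.0
region_data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2)]

local_w, local_b = fine_tune(global_w, global_b, region_data)
loss_before = mse(global_w, global_b, region_data)
loss_after = mse(local_w, local_b, region_data)
```

Only the adaptation is shown; in a MAML-style scheme the shared initialization itself would be trained so that these few steps are as effective as possible.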

3.2.4. Recommendation Algorithms

The federated meta-learning recommendation algorithm combines federated learning and meta-learning techniques to address issues such as privacy protection, personalization, and efficiency in recommendation systems. By leveraging distributed training, model parameter sharing, and meta-learning strategies, the recommendation algorithm can provide personalized recommendation results while safeguarding user privacy and achieving good performance in terms of accuracy and efficiency.

In 2020, Zhao et al. proposed Fed4Rec [102], a privacy-preserving framework for page recommendation based on federated learning (FL) and model-agnostic meta-learning (MAML). It allows machine learning models to be trained on data collected from both public users, who share data with the server, and private users, who do not. Fed4Rec computes recommendations for public users on the server and recommendations for private users on their local devices. FL is employed to train on the local data, while Fed4Rec shares only a portion of the locally computed model parameters with the server. MAML is used for joint training on the shared data and the model parameters from private users. Fed4Rec can quickly adapt to user preferences, protect user privacy, and integrate various user behaviors. The experimental results demonstrate that Fed4Rec outperforms the baseline in terms of recommendation accuracy.

In the same year, Arambakam et al. proposed an algorithm recommendation method [103]. It takes a dataset as input and outputs the best-performing algorithm and hyperparameters for solving the task. Implementing it requires obtaining meta-features from the dataset and using the k-nearest-neighbor algorithm together with historical performance data. For previously unseen but similar datasets, the recommended algorithm is accurate, but the hyperparameters need to be re-optimized for the dataset. For previously unseen and highly dissimilar datasets, a list of algorithms believed to be most suitable for the dataset is recommended based on prior knowledge.
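A nearest-neighbor lookup over dataset meta-features can be sketched as follows. This is only an illustration of the general idea: the two meta-features (number of samples, number of features), the history records, and the algorithm names are hypothetical, and the federated aspects of the cited method are not modeled.

```python
import math
from collections import Counter

def recommend(meta_features, history, k=3):
    # Rank past datasets by Euclidean distance in meta-feature space,
    # then return the algorithm that performed best on most of the k nearest.
    ranked = sorted(history, key=lambda rec: math.dist(meta_features, rec[0]))
    votes = Counter(algo for _, algo in ranked[:k])
    return votes.most_common(1)[0][0]

# Hypothetical history: (meta-features, best-performing algorithm).
history = [
    ((100.0, 5.0), "logistic_regression"),
    ((120.0, 6.0), "logistic_regression"),
    ((5000.0, 50.0), "random_forest"),
    ((5200.0, 55.0), "random_forest"),
    ((4800.0, 45.0), "random_forest"),
]

choice = recommend((110.0, 5.5), history, k=3)
```

In practice the meta-features would need to be normalized before distances are computed, since raw scales such as sample counts would otherwise dominate.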

In 2021, Wang et al. introduced a DNN-based recommendation model called PrivRec [104], which operates in a distributed federated learning environment to ensure that user data remain exclusively on their personal devices while facilitating the training of an accurate model. To address the data heterogeneity in FL, a first-order meta-learning approach is introduced, which enables fast personalization on devices with minimal data points. Furthermore, to prevent potential malicious users from posing significant security threats to other users, a user-level differential privacy model, namely DP-PrivRec, is developed, making it impossible for attackers to identify any specific user from the trained model.
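User-level differential privacy in federated training is commonly obtained by bounding each user's contribution and adding noise to the aggregate. The sketch below shows that generic clip-and-noise pattern; it is an assumption that DP-PrivRec follows this pattern, since the mechanism is not detailed here, and the calibration of `noise_std` to a privacy budget is deliberately left out. All names are illustrative.

```python
import math
import random

def clip(update, max_norm):
    # Scale the update down so its L2 norm is at most max_norm.
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]

def private_average(updates, max_norm, noise_std, rng):
    # Clip every user's update, average, then add Gaussian noise per coordinate.
    clipped = [clip(u, max_norm) for u in updates]
    dim = len(updates[0])
    avg = [sum(u[j] for u in clipped) / len(clipped) for j in range(dim)]
    return [a + rng.gauss(0.0, noise_std) for a in avg]

rng = random.Random(0)
user_updates = [[3.0, 4.0], [0.0, 0.0]]

clipped_first = clip(user_updates[0], max_norm=1.0)
noiseless = private_average(user_updates, max_norm=1.0, noise_std=0.0, rng=rng)
noisy = private_average(user_updates, max_norm=1.0, noise_std=0.1, rng=rng)
```

Clipping bounds how much any single user can move the average, which is what allows the added noise to mask an individual user's presence.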

4. Application of Federated Meta-Learning in Cyberspace Security

Cyberspace security, also referred to as information security or network security, emphasizes the spatial concept parallel to land, sea, air, and space. In comparison to information security, network security and cyberspace security reflect a more three-dimensional, extensive, multilayered, and diverse understanding of information security. They better capture the characteristics of cyberspace and demonstrate greater permeation and integration with other security domains [105].

The main research directions in the discipline of cyberspace security include cryptography, network security, information system security, information content security, and information warfare [106,107,108]. As the threats to cyberspace security continue to increase, researchers are exploring various methods to address these challenges. Federated meta-learning, an emerging field in machine learning, has witnessed numerous remarkable research achievements in recent years. Researchers in the field of machine learning have undertaken cutting-edge explorations into the integration of cyberspace security and federated meta-learning. This paper focuses on the organic integration of cyberspace security and federated meta-learning and provides a comprehensive study of the current application status and progress of federated meta-learning in cyberspace security.

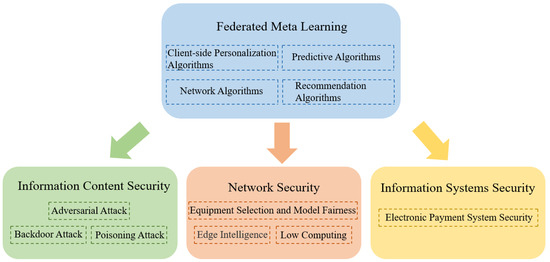

Based on the current research status, this paper will separately review the research progress and core ideas of different federated meta-learning algorithms in the field of cyberspace security. Currently, federated meta-learning has been specifically applied and researched in the domains of information content security, network security, and information system security in cyberspace security. Subsequently, the application research of corresponding federated meta-learning models in these three domains will be categorized and summarized, as shown in Figure 14. This section will introduce the specific applications of federated meta-learning in recent years, focusing on these three domains.

Figure 14. Research framework of federated meta-learning in cyberspace security.

4.1. Federated Meta-Learning Applied to Information Content Security

Information security is the core aspect of cyberspace security, encompassing the protection and manipulation of data and information within the internet. The academic community currently holds varying perspectives on the concept of information content security. Broadly speaking, information content security encompasses the confidentiality of information, protection of intellectual property rights, information hiding, and privacy preservation [109]. In federated learning, the protection of client privacy prevents servers from arbitrarily inspecting the data sent by clients. This restriction makes federated learning more susceptible to malicious attacks. Therefore, it is essential to enhance the robustness of federated learning against such attacks and to safeguard data privacy by employing meta-learning algorithms. This section summarizes the recent advancements in federated meta-learning in the context of adversarial attacks, backdoor attacks, and poisoning attacks, as shown in Table 2.

Table 2. FML applied to information content security.

4.1.1. Adversarial Attack

References [116,117] have already indicated that meta-learning algorithms, such as MAML, are susceptible to adversarial attacks, resulting in a significant decline in the performance of locally adapted models at the target when presented with perturbed input data. In light of this, Lin et al. [110] proposed a federated meta-learning framework called Robust FedML, which is built upon distributed robust optimization, balancing both robustness and accuracy. In this framework, the federated meta-learning model is initially trained on a group of edge nodes and then quickly adapts to new tasks at the target node using only a few samples. The framework achieves algorithm robustness by constructing adversarial data. It employs an adversarial data generation process where, during iterations, each edge node constructs adversarial data samples using gradient ascent and adds them to its own adversarial dataset. Each node first updates the parameters with its training set,

and then uses the test set and the constructed adversarial dataset to locally update the parameters again.

After completing the local updates, the updated parameters are transmitted to the central server. By pretraining on each edge node, both model accuracy and resilience against adversarial perturbations on the test set are ensured. Models trained in this meta-learning manner resist adversarial attacks without significantly sacrificing the target edge nodes’ rapid adaptive learning accuracy. Experimental results demonstrate that Robust FedML outperforms FedML in scenarios with higher levels of perturbed data.
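The adversarial data generation step, in which a node perturbs a sample by gradient ascent on the loss, can be illustrated with a small sketch. A logistic-regression model is assumed here purely for simplicity, and the step size and iteration count are arbitrary. The sketch omits any robustness penalty on the perturbation size that the Robust FedML formulation may include.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    # Binary cross-entropy of a logistic-regression model on one sample.
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def adversarial_example(w, b, x, y, step=0.1, iters=5):
    # Gradient ascent on the input: move x in the direction that increases the loss.
    x_adv = list(x)
    for _ in range(iters):
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x_adv)) + b)
        grad_x = [(p - y) * wi for wi in w]  # d(loss)/d(x) for logistic regression
        x_adv = [xi + step * g for xi, g in zip(x_adv, grad_x)]
    return x_adv

# Hypothetical model parameters and one correctly classified sample.
w, b = [2.0, -1.0], 0.0
x, y = [1.0, 0.5], 1

x_adv = adversarial_example(w, b, x, y)
clean_loss = loss(w, b, x, y)
adv_loss = loss(w, b, x_adv, y)
```

Samples produced this way would be added to the node's adversarial dataset and used in the second local update, so that the model is trained on inputs that are harder than the original data.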

4.1.2. Backdoor Attack

Due to the independence and confidentiality of each client, FL cannot guarantee the honesty of all clients, making FL models vulnerable to adversarial attacks. One form of such attacks is the backdoor attack. The adversary’s objective is to maintain the model’s good performance on the primary task while causing it to produce attacker-chosen outputs on the targeted (backdoor) task. Existing research primarily focuses on static backdoor attacks, where the injected poison pattern remains unchanged. However, FL is an online learning framework, allowing attackers to choose new attack targets at any time. Traditional algorithms require learning new target tasks from scratch, incurring high computational costs and demanding a large number of adversarial training examples.

To address this issue, Huang et al. (2020) proposed a novel framework called symbiosis network [111]. This framework aims to train a versatile model that can simultaneously adapt to the target task and the main task while also quickly adapting to new target tasks. The setting is defined as follows: each client stores a dataset on its local device. For a malicious client, this local dataset consists of two components, a clean dataset and an adversarial (poisoned) dataset, which should adhere to the following conditions:

In order to achieve high performance on both the primary task and the backdoor target task, the goal is to train model parameters such that the model makes good predictions on both the clean and the poisoned datasets. Therefore, the objective function for the client is as follows:

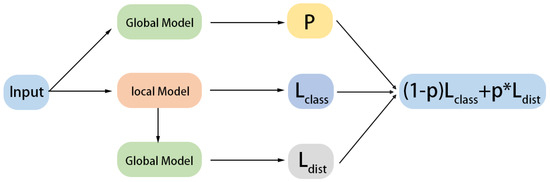

The right-hand side of Equation (4) can be decomposed into two parts. The first part represents training on the clean dataset; optimizing it leads to good performance on the main task. The second part involves training on the poisoned dataset, whose samples carry the label chosen by the attacker; optimizing it improves the performance of the targeted backdoor task. This approach bridges meta-learning and backdoor attacks in the federated learning (FL) setting. For benign clients, the training process is the same as in conventional federated learning. For malicious clients, the symbiosis network framework is used to carry out the backdoor attack task, and meta-optimization is employed as the aggregation method to enhance the model’s adaptability, enabling it to quickly adapt to new poisoned tasks, as shown in Figure 15. Taking classification as an example, for malicious clients, both the local and global models are maintained, and the training objective function for the local model is modified as follows:

Figure 15. Symbiosis network architecture diagram. Adapted from Huang et al. (2020) [111].

The two components of this objective play different roles: the first captures the accuracy of both the primary task and the backdoor task, while the second computes the distance between the local model and the global model. To balance their contributions, a weighting factor is introduced. One viable approach is to set this factor to the performance of the global model, which, for classification tasks, can be measured by its classification accuracy. The global model aims to learn internal features that are broadly applicable to all tasks rather than to a single task. This objective can be achieved by minimizing the total loss over tasks sampled from the task distribution, as shown in Equation (6):

Here, the optimal parameters for each task are those determined by Equation (4). The global model parameters should closely align with the optimal parameters for each task. By employing the Euclidean distance metric, the following new loss function can be derived:

The optimal parameter updates obtained are as follows:

The update involves the total number of tasks selected in this round. In order to ensure compatibility between Equation (8) and the objective, an amplification strategy is employed, resulting in the modification of Equation (8) as follows:

Experimental findings demonstrate that symbiosis network training generally outperforms manual configuration methods in terms of accuracy in backdoor attacks. Furthermore, this advantage can be sustained as the iterations progress, indicating the method’s persistence and robustness without significantly affecting the primary task. The algorithm exhibits excellent performance across three datasets, providing evidence of its effectiveness in addressing dynamic backdoor attacks.
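The structure of the malicious client's local objective described above (a loss on clean data, a loss on poisoned data relabeled with the attacker's target, and a distance term weighted by the global model's accuracy) can be sketched for analysis purposes as follows. The squared Euclidean distance, the scalar linear model, and all names are assumptions made for illustration; the sketch does not reproduce the exact equations of the cited work.

```python
def squared_distance(local_w, global_w):
    # Squared Euclidean distance between local and global parameters (assumed form).
    return sum((a - b) ** 2 for a, b in zip(local_w, global_w))

def malicious_objective(sample_loss, local_w, global_w, clean, poisoned,
                        target_label, global_accuracy):
    # Clean term: ordinary loss with the true labels (main task).
    clean_loss = sum(sample_loss(local_w, x, y) for x, y in clean)
    # Poisoned term: same inputs scored against the attacker-chosen label.
    backdoor_loss = sum(sample_loss(local_w, x, target_label) for x, _ in poisoned)
    # Distance term, weighted by the global model's accuracy as suggested in the text.
    distance = squared_distance(local_w, global_w)
    return clean_loss + backdoor_loss + global_accuracy * distance

def squared_error(w, x, y):
    # Hypothetical per-sample loss for a scalar linear model y = w[0] * x.
    return (w[0] * x - y) ** 2

total = malicious_objective(
    squared_error,
    local_w=[1.0],
    global_w=[0.5],
    clean=[(1.0, 1.0), (2.0, 2.0)],
    poisoned=[(3.0, 3.0)],
    target_label=0.0,
    global_accuracy=0.8,
)
```

Seen this way, the weighting factor makes the local model stay closer to the global model as the global model becomes more accurate, which is relevant when designing defenses that monitor the distance between local updates and the global model.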

Subsequently, inspired by the matching network, Chen et al. proposed a defense mechanism [112]. In this approach, users employ the classification mechanism of the matching network to classify inputs based on the distance between the input sample features and the features of the samples in the support set. Through this local decision-making mechanism, the success rate of backdoor attacks was reduced from over 90% to less than 20% (Omniglot validation) and from 50% to 20% (mini-ImageNet validation) within a few iterations. While this local defense mechanism significantly reduces the success rate of backdoor attacks and enhances the robustness of federated meta-learning against such attacks, it also decreases the accuracy of the main task.
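The local decision rule described above, classifying an input by comparing its features with those of a support set, can be sketched in the style of a matching network. The use of cosine similarity with a softmax over the support samples is the standard matching-network formulation and is assumed here; the feature vectors and labels are hypothetical, and feature vectors are assumed to be non-zero.

```python
import math
from collections import defaultdict

def cosine(a, b):
    # Cosine similarity between two non-zero feature vectors.
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def classify(query, support):
    # Attention over the support set: softmax of similarities, summed per label.
    sims = [cosine(query, feat) for feat, _ in support]
    m = max(sims)
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    scores = defaultdict(float)
    for e, (_, label) in zip(exps, support):
        scores[label] += e / total
    return max(scores, key=scores.get)

# Hypothetical trusted support set held locally by the user.
support = [([1.0, 0.0], "cat"), ([0.9, 0.1], "cat"), ([0.0, 1.0], "dog")]
label = classify([0.8, 0.2], support)
```

Because the final label depends on a support set the user controls rather than on a classification head learned through aggregation, a poisoned global model has less direct influence on the prediction.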