Abstract

Semantic segmentation is a crucial and widely applied task in computer vision. It has significant implications for scene comprehension and decision-making in unmanned systems, in domains such as autonomous driving, unmanned aerial vehicles, robotics, and healthcare. Consequently, there is a growing demand for high precision in semantic segmentation, particularly in these contexts. This paper introduces DPNet, a novel image semantic segmentation method based on the Deeplabv3 plus architecture. (1) DPNet utilizes ResNet-50 as the backbone network to extract feature maps at various scales. (2) Our proposed method employs the BiFPN (Bi-directional Feature Pyramid Network) structure to fuse multi-scale information, in conjunction with the ASPP (Atrous Spatial Pyramid Pooling) module, to handle information at different scales, forming a dual pyramid structure that fully leverages the effective features obtained from the backbone network. (3) The Shuffle Attention module is employed to suppress the propagation of irrelevant information and enhance the representation of relevant features. Experimental evaluations on the Cityscapes dataset and the PASCAL VOC 2012 dataset demonstrate that our method achieves higher semantic segmentation accuracy than the compared approaches.

1. Introduction

Semantic segmentation has found extensive applications in various fields [1], such as autonomous driving [2,3,4,5,6,7] and medical image processing [8,9,10], and is also an important technique for UAV remote sensing image analysis [11,12,13]. A robust segmentation model should not only accurately capture the semantic information of objects but also enhance the extraction of neighboring object boundaries. Deeplabv3 plus [14] has emerged as a classical architecture that leverages dilated convolutions to extract multi-scale features, thereby avoiding redundant upsampling operations and achieving a balance between model accuracy and computational efficiency. The ASPP module plays a vital role in aggregating contextual information from diverse regions, facilitating the exploration of global image characteristics. It is worth noting that the adoption of conventional single-scale convolutional kernels may limit the effective scope of feature extraction.

Based on the Deeplabv3 plus baseline model, a novel semantic segmentation method with a dual feature pyramid and attention mechanism is proposed. This method fully utilizes the feature information obtained from the backbone network, enhancing the model’s generalization ability and enabling better handling of the multi-scale and boundary issues noted above.

1.1. Semantic Segmentation

FCN (Fully Convolutional Network) [15] revolutionized the field of semantic segmentation by introducing the use of convolutional neural networks. It replaces the last fully connected layer of the classification network with convolutional layers and enables end-to-end pixel-wise prediction of class labels in images. However, its effectiveness is hindered by the limited receptive field, which restricts the efficient utilization of multi-scale contextual information. Recently, some algorithms, such as UPerNet [16], have been developed based on the FPN (Feature Pyramid Network) structure, utilizing top-down lateral connections to fuse multi-scale information. HRNet [17] is designed to maintain high resolution even as the network grows deeper, highlighting the importance of high-resolution feature maps for semantic segmentation. DANet [18] introduces a dual attention module that combines spatial attention and channel attention to improve feature representation, effectively integrating local and global features.

1.2. Multi-Scale Information Fusion

Previous works have discussed how to integrate multi-scale information and demonstrated the benefits of incorporating multi-scale information for semantic segmentation. Similar to the parallel aggregation in PSPNet [19], the Deeplab series [14,20,21] introduced the ASPP module to capture contextual information. It utilizes atrous convolution with different rates to construct multi-scale semantic information, allowing for a larger receptive field to capture multi-scale information.

In the shallow layers of the network, feature maps contain detailed information that effectively distinguishes small objects. However, due to the limited receptive field, they face difficulties in differentiating larger objects. On the other hand, in the deep layers of the network, feature maps undergo multiple downsampling operations, posing challenges for distinguishing small objects. Nevertheless, deep-level feature maps often contain rich semantic information that enables the discrimination of larger objects.

Zhu et al. [22] used a non-local method to fuse features from different scales. They proposed a Feature Pyramid Transformer for multi-scale feature fusion, transforming all feature maps to the same size or scale for fusion, abandoning the traditional top-down pathway. CHASPP (Cascaded Hierarchical Atrous Spatial Pyramid Pooling) module introduced a new hierarchical structure consisting of multiple convolutional layers [23], increasing density and effectively addressing the weak representation issue caused by sparse sampling in the ASPP module.

Jiang et al. [24] proposed MSCB (Multi-Scale Context Block) to aggregate features from different scales. Chen et al. [25] proposed a method to preserve multi-scale features by learning spatial localization through multiple parallel paths. Dai et al. [26] designed a parallel, dual-branch network to extract information at different scales. Tan et al. [27] proposed a weighted bidirectional pyramid structure with discriminative fusion of different input features. Ou et al. [28] proposed a pyramid decoder structure to obtain multi-scale feature maps generated by the ASPP module at different stages. Lin et al. [29] introduced a multi-path semantic segmentation structure that integrates semantic information from three pathways.

These approaches and techniques demonstrate various strategies for integrating multi-scale information and leveraging it for improved semantic segmentation.

1.3. Attention Module

The attention mechanism imitates the cognitive process of the human brain, which has limited processing capacity for the information presented. Therefore, it requires a focus on specific regions to acquire more crucial information while filtering out irrelevant data. In certain mobile devices, such as UAVs [11], small robots [30], and augmented reality devices [31], it is not feasible to incorporate large computational devices. In medical image analysis, the datasets often have a large volume and exhibit high resolution and complexity [32]. The inclusion of attention mechanisms can enhance the efficiency of extracting critical lesion areas in these datasets. In neural networks, particularly in scenarios with limited computational resources, extracting relatively more significant and valuable information assumes great importance [33]. This ability aids the model in achieving enhanced performance in the respective tasks. Notably, this mechanism has found successful applications across diverse computer vision tasks.

Existing attention modules, such as SENet [34] (Squeeze and Excitation Net), ECANet [35] (Efficient Channel Attention Net), and CBAM [36] (Convolutional Block Attention Module), have been developed. The SENet module is a representative channel attention architecture that employs GAP (Global Average Pooling) and fully connected layers to recalibrate feature responses across channels, thereby reshaping the interdependencies among channels. However, the SE module solely focuses on capturing variations in pixel importance across different channels, disregarding distinctions in pixel importance within the same channel. ECANet, built upon SENet, introduces a one-dimensional convolutional filter to generate channel weights, replacing the fully connected layer and reducing module complexity. Additionally, differing from the aforementioned two structures, Woo et al. proposed CBAM, a convolutional block attention network that combines channel and spatial attention. CBAM incorporates a structure of maximum pooling to further enhance the model’s performance.

Integrating spatial and channel attention introduces challenges such as increased computational burden and convergence difficulties. To address this issue, an efficient module called Shuffle Attention [37] has recently been proposed. The Shuffle Attention module divides the feature map into multiple groups of sub-features along the channel dimension and leverages the Shuffle Unit to integrate complementary channel and spatial attention for each sub-feature. This approach offers a lightweight and efficient solution.

Our contribution:

- (1) We propose an improved semantic segmentation model based on Deeplabv3 plus, called DPNet. DPNet is a dual-pyramid semantic segmentation network.
- (2) We introduce a method that leverages a dual-feature pyramid to integrate feature maps at various resolutions, effectively amalgamating contextual information and enhancing accuracy. The model’s capacity to capture multi-scale information and fuse diverse-scale information is strengthened while fully exploiting the effective features extracted by the backbone network.
- (3) We incorporate the Shuffle Attention module, which effectively combines spatial attention and channel attention, suppressing the transmission of irrelevant information and enhancing the representational capacity of meaningful features.
- (4) The proposed algorithm achieves promising experimental results with mIoU values of 78.69% and 79.51% on the validation sets of the Cityscapes and Pascal VOC 2012, respectively.

Compared with previous methods, our method improves on the classic Deeplabv3 plus algorithm by processing the feature map through two feature pyramids. Different from the method proposed by Ou et al. [28], our method uses two feature pyramids as the encoder, which forms a dual-branch structure. While DANet [18] also employs a dual-branch structure, our method differs in that we do not separately compute spatial attention and channel attention. Instead, we utilize a lightweight attention module.

2. Methods

Deeplabv3 plus is an extension of the Deeplabv3 architecture, featuring a simplified and efficient decoding module that refines feature information and enhances semantic segmentation performance. In the encoder component, an improved Xception model serves as the backbone network, leveraging depthwise separable convolutions to extract image features. Subsequently, the ASPP module employs three parallel atrous convolutions with dilation rates of 6, 12, and 18, along with global average pooling, to capture high-level semantic information. In the decoder component, the low-level features extracted from a shallow layer of the backbone network have their channel dimension reduced using convolutions and are fused with the high-level features obtained from the encoder. Multiple convolutions are then applied to restore spatial information within the feature maps, followed by bilinear upsampling to refine the boundaries of the target objects and produce the segmentation result.
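To make the effect of the ASPP rates of 6, 12, and 18 concrete, the following minimal sketch computes the spatial extent covered by a dilated kernel along one axis. It assumes 3 × 3 kernels, which the text above does not state explicitly; the function name is illustrative.

```python
def effective_kernel_size(kernel_size: int, rate: int) -> int:
    """Spatial extent (in pixels) covered by a dilated kernel along one axis.

    A dilation rate r inserts (r - 1) gaps between neighbouring kernel taps.
    """
    return kernel_size + (kernel_size - 1) * (rate - 1)


# Rates quoted for the ASPP module; the 3x3 kernel size is an assumption.
aspp_rates = [6, 12, 18]
extents = [effective_kernel_size(3, rate) for rate in aspp_rates]
print(extents)  # [13, 25, 37]
```

Each branch therefore samples context over a different window with the same number of weights, which is how the parallel branches gather multi-scale information.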

In this paper, the proposed algorithm uses ResNet as the backbone network because of its strong feature extraction capability. Extending the Deeplabv3 plus framework, it introduces a dual-path, dual-feature pyramid structure for multi-scale feature fusion, which makes effective use of the feature maps at different scales obtained from the backbone network.

In comparison to the Xception network model, ResNet introduces a residual structure with identity mapping, enabling smooth gradient propagation from shallow to deep layers. With this residual structure, it becomes feasible to train very deep neural networks, enhancing the capability of feature extraction and capturing the fine details and characteristics of input data. The ResNet network model with residual mechanisms can better retain the features of different scales for the later part of the network model.

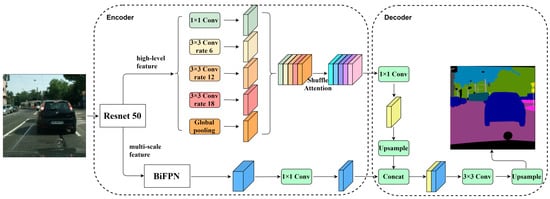

The overall structure of the parallel dual pyramid is illustrated in Figure 1. In the algorithm proposed in this paper, ResNet is employed to obtain four layers of feature maps. The highest-level feature map is fed into the ASPP module, while the remaining three shallow feature maps undergo BiFPN to effectively enhance the network model’s capability for multi-scale feature fusion.

Figure 1. The overall framework of DPNet.

The BiFPN module and ASPP module are integrated as parallel dual-branch structures, serving as the encoder of the overall architecture. The decoder part follows a similar structure to Deeplabv3 plus: the high-level feature map from the ASPP module is upsampled to match the size of the feature map output by the BiFPN module, and the two are stacked together. Two depthwise separable convolutions are then applied to obtain the final effective feature map, which serves as a condensed representation of the entire image. Finally, the feature map is upsampled to the size of the input image.

2.1. Multi-Scale Information Feature Fusion in the Dual-Pyramid Structure

In the original algorithm of Deeplabv3 plus, the decoder part simply used a single low-level feature map to combine with the high-level features from the ASPP module for multi-scale feature fusion. This led to the underutilization of the multi-scale information obtained from the backbone network. To address this issue, we introduce the BiFPN module as a component for processing shallow features. It incorporates a weight selection mechanism that helps preserve more relevant and valuable features.

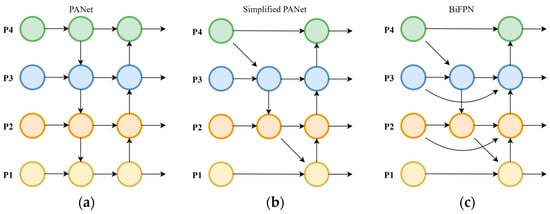

BiFPN is a structure proposed by the Google team in 2020 for fusing multi-scale information, originally introduced in the EfficientDet model [27]. The inspiration for BiFPN comes from the PANet [38] structure (Figure 2).

Figure 2. (a) PANet. (b) Simplified PANet. (c) BiFPN.

BiFPN is a weighted bi-directional feature pyramid network consisting of a top-down pathway to propagate semantic information from higher layers and a bottom-up pathway to convey positional information from lower layers. It differs from the PANet structure in several aspects. Firstly, nodes with only one input edge are removed since they contribute minimally to the overall fusion of multi-scale features. This removal simplifies the bi-directional network structure. Secondly, if an original input node and an output node are in the same layer, an additional edge is inserted between them. This allows the combination of more features without significantly increasing the data volume. Unlike traditional feature fusion approaches that often rely on simple feature map concatenation or shortcut operations, BiFPN takes into account the varying resolutions and contributions of feature maps from different layers, for which simple stacking is not the optimal solution. In the BiFPN structure [27], learnable weights are introduced to determine the importance of different input features. Each input feature only needs to be multiplied by a learnable weight, and the weights are normalized in a way similar to softmax so that they are scaled to the range [0, 1], as shown in Equation (1):

$$O = \sum_{i} \frac{w_i}{\epsilon + \sum_{j} w_j} \cdot I_i \tag{1}$$

In Equation (1), $I_i$ is the $i$-th input feature, $O$ is the fused output, and $w_i$ is a learnable weight. The ReLU activation function is applied to each $w_i$ to ensure that its value is greater than or equal to 0. The small value $\epsilon$ is used to prevent numerical instability.
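The weighted fusion described for Equation (1) can be sketched in a few lines. This is a minimal illustration on flat Python lists rather than feature-map tensors; the function name and the default `eps=1e-4` are assumptions, not values taken from this paper.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-length feature vectors with non-negative, normalized weights.

    features: list of equally sized lists of floats (stand-ins for feature maps)
    weights:  one raw learnable weight per feature
    eps:      small constant for numerical stability (assumed value)
    """
    # ReLU keeps every weight >= 0, as described for Equation (1).
    relu_weights = [max(0.0, w) for w in weights]
    total = eps + sum(relu_weights)
    # Each normalized weight falls in [0, 1].
    norm_weights = [w / total for w in relu_weights]
    fused = [
        sum(nw * feat[i] for nw, feat in zip(norm_weights, features))
        for i in range(len(features[0]))
    ]
    return fused, norm_weights


fused, norm_weights = fast_normalized_fusion(
    [[1.0, 2.0], [3.0, 4.0]], weights=[1.0, 1.0], eps=0.0
)
print(fused, norm_weights)  # [2.0, 3.0] [0.5, 0.5]
```

Unlike a softmax, this normalization avoids exponentials, and a weight driven below zero simply switches its input off.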

In the proposed structure of this paper, three groups of features at different scales are obtained by the backbone feature extraction network, and their channel numbers are adjusted using convolutional operations. In the top-down pathway, a deeper feature map is upsampled to match the scale of the next shallower feature map, and the upsampled result is stacked and convolved with that shallower feature. The intermediate calculation involves computing the weight selection via Equation (1), which is used to learn the importance of different input features.

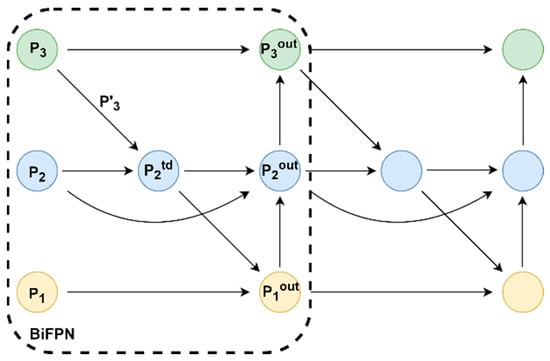

As shown in Figure 3, taking an intermediate node and its corresponding output node as examples, their expressions are given by Equations (2) and (3). The intermediate weight mechanism determines which of the input features to focus on more. After the weight mechanism selection, the other features undergo similar stacking and weight selection operations to form a BiFPN module. Repeatedly stacking BiFPN modules can improve accuracy but also increases the data volume, so in the proposed structure the module is stacked only twice, which already achieves a good improvement.

Figure 3. Structure of the BiFPN module.
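For reference, the general form of an intermediate node and its output node in the EfficientDet formulation of BiFPN [27] can be sketched as follows. The level indices ($P_3$, $P_4$, $P_5$) and weight names are assumptions chosen for illustration and are not notation taken from this paper; $\mathrm{Resize}$ denotes up- or downsampling to the target resolution, and the weights are normalized as in Equation (1).

```latex
% Illustrative three-level case; level indices and weight names are assumed.
\begin{align*}
P_4^{td}  &= \mathrm{Conv}\!\left(
  \frac{w_1 \cdot P_4^{in} + w_2 \cdot \mathrm{Resize}(P_5^{in})}
       {w_1 + w_2 + \epsilon}\right) \\
P_4^{out} &= \mathrm{Conv}\!\left(
  \frac{w'_1 \cdot P_4^{in} + w'_2 \cdot P_4^{td} + w'_3 \cdot \mathrm{Resize}(P_3^{out})}
       {w'_1 + w'_2 + w'_3 + \epsilon}\right)
\end{align*}
```

The first line is the top-down step, which mixes a level with the upsampled deeper level; the second is the bottom-up step, which additionally reuses the original input through the extra same-level edge.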

By fully utilizing the feature maps of different scales obtained from the backbone network, the shallow low-level feature maps in Deeplabv3 plus are replaced with the fusion results of the BiFPN structure. This enhances the network’s representation capability for features of different resolutions, resulting in improved pixel classification and more accurate details.

2.2. Shuffle Attention Module

For different computer vision tasks, attention modules are used to build the interdependencies between features. There are two widely used types: channel attention and spatial attention. The former aggregates information along the channel dimension of the feature map, while the latter aggregates information within each channel’s spatial dimensions. These attention mechanisms can enhance the model’s representation capability and improve its accuracy.

The most commonly used ones are the SE attention block and the CBAM. In recent years, the SE attention block has been widely used in semantic segmentation tasks. However, the SE block only considers the influence of channel relationships on features, ignoring spatial positional information. While the CBAM block takes into account both spatial and channel dimensions, it introduces additional parameters, thereby increasing the network’s parameter count. Moreover, it requires the calculation of additional attention scores, which adds to the model’s complexity and computational cost. As a result, it may not be suitable for scenarios with limited computing resources. Additionally, the performance of the CBAM module highly depends on the input of convolutional layers, making it less effective for special or irregular image inputs, leading to no improvement or even a decrease in segmentation performance.

In our approach, we integrated the lightweight attention mechanism called Shuffle Attention, which was originally proposed in SA-Net [37]. This attention mechanism efficiently combines both spatial and channel attention mechanisms to enhance the representation of features in our method.

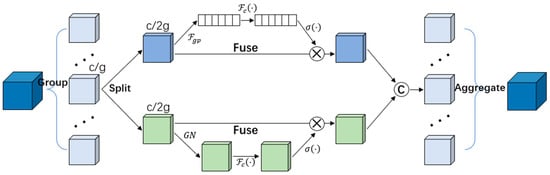

The structure of the Shuffle Attention module is illustrated in Figure 4. The overall structure is divided into four sub-modules:

Figure 4. Structure of the Shuffle Attention Module.

(1) Feature grouping, which divides the input feature map $X \in \mathbb{R}^{C \times H \times W}$ into $G$ groups of sub-feature maps along the channel dimension. Here, $C$ represents the number of channels in the feature map, while $W$ and $H$ represent the width and height of the feature map, respectively. It can be expressed as Equation (4).

$$X = [X_1, \ldots, X_G], \quad X_k \in \mathbb{R}^{C/G \times H \times W} \tag{4}$$

Next, each group of sub-feature maps is further divided along the channel dimension into two branches, $X_{k1}, X_{k2} \in \mathbb{R}^{C/2G \times H \times W}$. These branches correspond to channel attention and spatial attention, respectively. The channel attention branch utilizes the relationships between feature channels to generate channel attention maps, while the other branch utilizes the spatial relationships between features to generate spatial attention maps.

(2) Channel Attention: For the channel attention branch $X_{k1}$, the input is first subjected to global average pooling to obtain channel-wise statistics. Subsequently, a linear transformation function is employed to enhance the feature representation. The resulting features are then activated using a sigmoid function. Finally, element-wise multiplication is performed between the activated features and the original input, enabling the incorporation of global information. This process generates a feature representation with channel attention weights, denoted as $X'_{k1}$, thereby enhancing the features. The detailed calculation process is defined by Equation (5).

$$s = \mathcal{F}_{gp}(X_{k1}) = \frac{1}{H \times W} \sum_{i=1}^{H} \sum_{j=1}^{W} X_{k1}(i, j), \qquad X'_{k1} = \sigma(\mathcal{F}_c(s)) \cdot X_{k1} = \sigma(W_1 s + b_1) \cdot X_{k1} \tag{5}$$

where $\mathcal{F}_c$ denotes the linear function, $\sigma$ denotes the sigmoid activation function, $s$ denotes the average pooled feature, and the two parameters $W_1$ and $b_1$ are linear transformation parameters used to scale and translate $s$.

(3) Spatial Attention: Spatial attention can be seen as complementary information to channel attention, focusing on the spatial aspects of the features. For the spatial attention branch $X_{k2}$, the features are first normalized using the GN (Group Norm) function to obtain spatial statistics. Then, a linear transformation function is applied to enhance the feature representation. The resulting features are activated using a sigmoid function and element-wise multiplied with the original features, yielding the spatial attention-weighted features $X'_{k2}$. This process highlights the importance of specific regions by emphasizing their contribution. The specific calculation procedure is defined by Equation (6).

$$X'_{k2} = \sigma(W_2 \cdot GN(X_{k2}) + b_2) \cdot X_{k2} \tag{6}$$

where $GN$ denotes the group norm normalization function, and the two parameters $W_2$ and $b_2$ are linear transformation parameters.

(4) Aggregation: To combine the channel attention and spatial attention features, they are weighted and concatenated to form the aggregated features $X'_k$. This is achieved by connecting the two branches and ensuring that the resulting feature has the same number of channels as the input. It can be expressed as Equation (7).

$$X'_k = [X'_{k1}, X'_{k2}] \in \mathbb{R}^{C/G \times H \times W} \tag{7}$$

Then, once the sub-features have been computed via channel attention and spatial attention, all of them are fused. Finally, a “Channel Shuffle” operation rearranges the channels, enabling information interaction between different groups of channels. This enhances the model’s expressive power.
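The channel shuffle step can be illustrated on channel indices alone. The sketch below is a standard-library illustration of the usual reshape, transpose, and flatten pattern; the function name and the choice of two groups in the example are assumptions rather than details given in this paper.

```python
def channel_shuffle(channels, groups):
    """Interleave channels from different groups.

    Equivalent to reshaping (groups, channels_per_group), transposing, and
    flattening, so that neighbouring output channels come from different groups.
    """
    if len(channels) % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = len(channels) // groups
    grouped = [
        channels[g * per_group:(g + 1) * per_group] for g in range(groups)
    ]
    return [grouped[g][i] for i in range(per_group) for g in range(groups)]


shuffled = channel_shuffle(list(range(8)), groups=2)
print(shuffled)  # [0, 4, 1, 5, 2, 6, 3, 7]
```

After the shuffle, every contiguous pair of channels mixes the two original halves, so a later grouped operation sees information from both.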

The Shuffle Attention module is an efficient attention mechanism based on channel shuffling. Unlike global attention mechanisms, it offers improved performance without significantly increasing computational costs. This makes it particularly advantageous when dealing with a large number of channels, as it achieves better results with fewer resources. Its implementation is straightforward, requiring only convolution operations on the reshuffled feature maps. In this paper, the Shuffle Attention module is positioned after the ASPP module, leveraging the multi-scale features fused by the ASPP module while further enhancing the representation capabilities via the Shuffle Attention module. Moreover, this approach prevents premature compression of the features strengthened by the Shuffle Attention module.

3. Experiments and Results

In this section, we first introduce the configuration of the experimental environment, then present the dataset used for evaluation, and finally present a series of experimental results obtained on each of the two datasets.

3.1. Experimental Environment

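The experimental platform in this paper is Ubuntu 18.04; the GPU used is an Nvidia RTX 3090 with 24 GB of memory; the deep learning framework is PyTorch 1.8. We use ResNet as the backbone network, initialized with weights pre-trained on ImageNet, and train the network with SGD (Stochastic Gradient Descent) with a momentum of 0.9 and a weight decay of $1 \times 10^{-4}$. We employ the typical “poly” learning rate strategy, in which the learning rate (lr) is calculated by Equation (8).

$$lr = lr_{base} \times \left(1 - \frac{iter}{iter_{max}}\right)^{power} \tag{8}$$

where $lr_{base}$ is the initial learning rate, $iter$ is the current iteration, $iter_{max}$ is the total number of iterations, and $power$ controls the shape of the decay.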
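The “poly” schedule can be sketched as a small function. The base learning rate in the example and the default `power=0.9` are hypothetical values chosen for illustration; neither is stated in the text.

```python
def poly_lr(base_lr, cur_iter, max_iter, power=0.9):
    """Polynomial ("poly") learning rate decay.

    base_lr and power are hypothetical here; the decay reaches 0 at max_iter.
    """
    return base_lr * (1.0 - cur_iter / max_iter) ** power


# Learning rate at a few points of a 100-iteration run (illustrative values).
schedule = [poly_lr(0.01, it, 100) for it in (0, 25, 50, 75, 100)]
print(schedule)
```

With `power=1.0` the schedule reduces to a linear decay, while smaller powers keep the learning rate higher for longer before dropping near the end of training.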

We trained for 100 epochs with a mini-batch size of 8 on both the Cityscapes dataset [39] and the Pascal VOC 2012 dataset [40].

We compared our proposed method with several state-of-the-art networks, which have been open-sourced, on the Cityscapes dataset and the Pascal VOC 2012 dataset. For fair comparison, we set the backbone network of all these methods to ResNet-50 to minimize the influence of the backbone network on the experiments. We also conducted ablation experiments on the Cityscapes dataset to further evaluate our approach.

3.2. Datasets

3.2.1. Cityscapes

Cityscapes is a popular dataset for semantic segmentation tasks. It consists of high-resolution images and corresponding semantic annotations from 50 cities in Germany. The dataset includes 19 classes and covers various urban scenes such as roads, pedestrians, vehicles, and buildings. It is commonly used for semantic segmentation in the context of autonomous driving. The dataset contains 5000 finely annotated images and 19,998 coarsely annotated images. The finely annotated images are split into three subsets: 2975 images for training, 500 images for validation, and 1525 images for testing. We evaluated our method on the Cityscapes validation set only.

3.2.2. Pascal VOC 2012

The Pascal VOC 2012 dataset consists of 21 common classes, such as cars, people, cats, dogs, and more. It contains 1464 training images, 1449 validation images, and 1456 test images. Additionally, an augmented set of 10,582 images is available for training purposes. The Pascal VOC 2012 dataset is also a popular general-purpose semantic segmentation dataset that can be used to evaluate the performance of algorithms. For the Pascal VOC 2012 dataset, we evaluated our method solely on the validation set.

3.3. Evaluation Metrics

To evaluate DPNet, this study analyzes the experimental results from both subjective and objective perspectives. The subjective evaluation compares certain targets and objects, such as the segmentation performance of smaller objects and object boundaries, based on the visual results of semantic segmentation. For objective evaluation, this study employs two metrics: mIoU (Mean Intersection over Union) and mPA (Average Pixel Accuracy).

In semantic segmentation tasks, the intersection over union for a single class is the ratio of the intersection between the ground truth label and the predicted label to their union. The mIoU is the average of the intersection over union values for each class in the dataset. Equation (9) illustrates the calculation method for mIoU.

$$mIoU = \frac{1}{N} \sum_{i=1}^{N} \frac{TP_i}{TP_i + FP_i + FN_i} \tag{9}$$

Here, $N$ is the number of classes. Specifically, $TP$ represents true positive, indicating the model’s prediction is positive and the ground truth is also positive. $FP$ represents false positive, indicating the model’s prediction is positive while the ground truth is negative. $FN$ represents false negative, indicating the model’s prediction is negative while the ground truth is positive. $TN$ represents true negative, indicating both the model’s prediction and the ground truth are negative.

The mPA calculates the percentage of pixels correctly classified for each class separately, and then these values are summed and averaged as shown in Equation (10).
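Both metrics can be computed from a confusion matrix. The following is a minimal sketch, assuming rows index the ground truth class and columns the predicted class, and assuming that the per-class pixel accuracy is TP / (TP + FN), which is a common definition but is not confirmed by the text. All function names are illustrative.

```python
def per_class_counts(conf):
    """Return (TP, FP, FN) per class from a square confusion matrix.

    conf[r][c] = number of pixels with ground truth r predicted as c (assumed layout).
    """
    n = len(conf)
    counts = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # class c pixels predicted as another class
        fp = sum(conf[r][c] for r in range(n)) - tp  # other pixels predicted as class c
        counts.append((tp, fp, fn))
    return counts


def mean_iou(conf):
    """Average of TP / (TP + FP + FN) over classes that occur."""
    ious = [tp / (tp + fp + fn)
            for tp, fp, fn in per_class_counts(conf) if tp + fp + fn > 0]
    return sum(ious) / len(ious)


def mean_pixel_accuracy(conf):
    """Average of TP / (TP + FN) over classes present in the ground truth (assumed definition)."""
    accs = [tp / (tp + fn)
            for tp, _, fn in per_class_counts(conf) if tp + fn > 0]
    return sum(accs) / len(accs)


toy_conf = [[3, 1],
            [2, 4]]
print(mean_iou(toy_conf), mean_pixel_accuracy(toy_conf))
```

For the toy matrix, the per-class IoU values are 3/6 and 4/7, and the per-class accuracies are 3/4 and 4/6, which are then averaged.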

3.4. Ablation Study on Cityscapes Dataset

We conducted ablation experiments on the Cityscapes dataset, as shown in Table 1. We report (1) the results of the baseline; (2) the results after adding BiFPN; (3) the results after adding Shuffle Attention; and (4) the results of our proposed dual-path dual-feature pyramid semantic segmentation method with both modules. These experiments were performed using ResNet-50 as the backbone network.

Table 1. Ablation study on Cityscapes dataset.

After adding BiFPN, the mIoU increased by 1.24 percentage points over the baseline, reaching 77.83%, which indicates that this module effectively selects and outputs useful features. Similarly, adding Shuffle Attention alone increased the mIoU by 1.2 percentage points, to 77.79%; the attention mechanism combines channel attention and spatial attention and suppresses the transmission of irrelevant features. When both modules were added simultaneously, the mIoU reached 78.69%, a 2.1 percentage point improvement over the baseline model, and the mPA also improved by a small margin. These results demonstrate that the multi-scale fusion method and the attention module each have a positive impact on scene segmentation, and that combining them further improves the segmentation capability of the model.

We also conducted experiments to examine the impact of noise on the model. We preprocessed the validation set images by introducing slight Gaussian noise (with a mean of 0 and a standard deviation of 0.3). The addition of Gaussian noise resulted in blurred edges and details in the images. Figure 5 showcases the images after adding Gaussian noise.

Figure 5. Slight Gaussian noise added to the validation set images. (a) RGB images, (b) RGB images processed with Gaussian noise.
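The noise preprocessing can be sketched as follows. This is a minimal standard-library illustration that assumes pixel values normalized to [0, 1] and clips the result back to that range; the scale on which the standard deviation of 0.3 is applied is not stated in the text, so both the normalization and the clipping are assumptions.

```python
import random


def add_gaussian_noise(pixels, mean=0.0, std=0.3, seed=None):
    """Add i.i.d. Gaussian noise to a flat list of pixel values.

    Assumes values in [0, 1] (not stated in the text) and clips the result
    back to that range.
    """
    rng = random.Random(seed)
    return [min(1.0, max(0.0, p + rng.gauss(mean, std))) for p in pixels]


clean = [0.0, 0.5, 1.0] * 10
noisy = add_gaussian_noise(clean, seed=0)
print(len(noisy), min(noisy), max(noisy))
```

Fixing the seed keeps the corrupted validation set identical across the models being compared.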

In Table 1, the data in the last two columns indicate that compared to the images without preprocessing, the baseline method’s mIoU decreased by 5.66% after adding Gaussian noise, while our proposed method only experienced a 3.91% decrease. This suggests that our method exhibits some performance degradation when dealing with slight noise, but it performs better compared to the baseline algorithm.

In Table 2, we present the changes in memory usage for single-image inference, demonstrating the variations in model size after incorporating different modules. We also calculated the FLOPs (Floating Point Operations) to reflect the changes in computational complexity. As shown in Table 2, adding the BiFPN module increased memory usage by 195 MB, while adding the Shuffle Attention module increased memory usage by 48 MB. When both modules were added simultaneously, the memory usage increased by 242 MB. Regarding FLOPs, our method experienced a 4.4% increase after adding the BiFPN module, while there was only a minor change after adding the Shuffle Attention module. A small increase in computational resources thus yields an overall performance improvement.

Table 2. Results for memory usage and FLOPs.

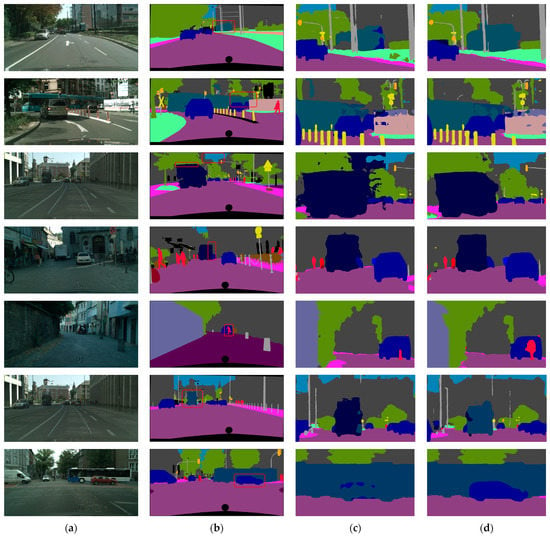

The visualization results show that our method segments some objects more completely, whereas Deeplabv3 plus outputs incomplete objects in some cases, as shown in the first two rows of Figure 6. For some target edges, our proposed method obtains more accurate results and improves the quality of the segmented edges, as shown in the third and fourth rows of Figure 6. For smaller or distant objects, our proposed method clearly obtains better results. However, in terms of segmentation contours, especially for these smaller or distant objects, there is still room for improvement, as shown in the last three rows of Figure 6.

Figure 6. Comparison results of Deeplabv3 plus and our proposed method. (a) RGB Images, (b) Ground Truth, (c) Deeplabv3 plus, (d) Proposed method.

3.5. Results on Cityscapes Dataset

To validate the effectiveness and rationality of our proposed method, we compared our proposed structure with widely used methods such as FCN, Deeplabv3, UPerNet, PSPNet, and DANet. On the Cityscapes dataset, all experiments were conducted with a fixed training input size and the same data augmentation methods. Table 3 presents the comparative experimental results on the Cityscapes dataset.

Table 3. Results obtained by different methods on Cityscapes.

As shown in Table 3, we quantitatively evaluated several methods on the validation set under the same environment and configuration. On the Cityscapes dataset, our method outperforms several other popular methods. In previous work, DANet utilized a self-attention mechanism to capture long-range dependencies, while PSPNet adopted a similar parallel aggregation structure. Different from these methods, our method is based on the parallel structure of the dual pyramid, making full use of the effective feature maps obtained from the backbone network, and the addition of the Shuffle Attention module improves performance further. It can also be observed that several classes in the comparative experiments exhibit poor segmentation performance, such as walls, fences, poles, and riders. Our model still requires further enhancement for thin objects or objects with intricate details.

3.6. Results on Pascal VOC 2012 Dataset

To further validate the effectiveness and rationality of our proposed method, we compared its structure with several widely used methods. On the Pascal VOC 2012 dataset, we set the training input size to and conducted experiments using the same data augmentation methods. Table 4 presents the comparative experimental results on the Pascal VOC 2012 dataset.

Table 4.

Results obtained by different methods on Pascal VOC 2012.

As seen from Table 4, our method achieves the best performance among the compared methods (FCN, HRNet, UPerNet, PSPNet, and Deeplabv3 plus), with a final mIoU of 79.51% and an mPA of 94.77% on the validation set. By comparison, the classic Deeplabv3 plus method reaches an mIoU of 78.26% and an mPA of 94.55%, so our method improves mIoU by 1.25 percentage points and mPA by 0.22 percentage points.
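For reference, both mIoU and mPA can be derived from a class confusion matrix. The following is a minimal sketch, assuming the common definitions (per-class IoU = TP / (TP + FP + FN) and per-class pixel accuracy = TP / (TP + FN), each averaged over the classes that occur). The function names and the toy labels are illustrative assumptions and are not the evaluation code used in the experiments.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """Rows index the ground-truth class, columns index the predicted class."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm


def _mean_defined(values):
    """Average the entries that are not None (classes with a zero denominator)."""
    defined = [v for v in values if v is not None]
    return sum(defined) / len(defined)


def mean_iou(cm):
    """Mean over classes of TP / (TP + FP + FN)."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # ground truth c, predicted otherwise
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, ground truth otherwise
        denom = tp + fp + fn
        ious.append(tp / denom if denom else None)
    return _mean_defined(ious)


def mean_pixel_accuracy(cm):
    """Mean over classes of TP / (TP + FN)."""
    accs = []
    for c in range(len(cm)):
        total = sum(cm[c])
        accs.append(cm[c][c] / total if total else None)
    return _mean_defined(accs)


# Toy example: six flattened pixel labels, three classes (hypothetical data).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
cm = confusion_matrix(y_true, y_pred, num_classes=3)
print(round(mean_iou(cm), 4))             # 0.5
print(round(mean_pixel_accuracy(cm), 4))  # 0.6667
```

In practice the confusion matrix is accumulated over every pixel of the validation set before the two averages are taken, rather than averaged per image.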

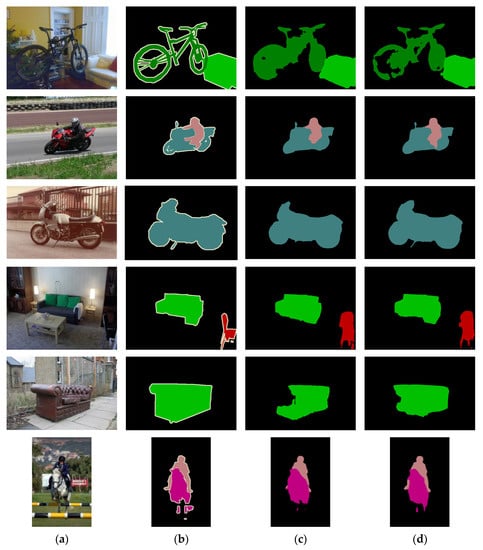

We also show the results of the visualization and make an intuitive comparison, as shown in Figure 7.

Figure 7.

Comparison of Deeplabv3 plus and our proposed method. (a) RGB images, (b) ground truth, (c) Deeplabv3 plus, (d) proposed method.

Figure 7 compares the visualization results of our proposed method with those of Deeplabv3 plus; adding the BiFPN module and the Shuffle Attention module improves the segmentation. For example, in the first row, our method segments the details of the bicycle's rear tire more accurately; in the second row, it segments the human legs more clearly; in the third row, it segments the motorcycle bracket completely. In the last three rows, our method segments object contours more accurately. However, the results for objects such as bicycles, chairs, and plants still need improvement. Although our method shows some improvement over the other methods, accurately segmenting objects with intricate details remains a challenge.

4. Discussion

This paper proposes an improved method based on Deeplabv3 plus that changes the encoder to a double-pyramid structure. While extracting global information, the parallel double-pyramid structure makes full use of the effective feature layers obtained from the backbone, which enriches the information in the feature maps. On this basis, we also added an attention mechanism: the Shuffle Attention module considers both the connections between channels and the relationships between spatial positions. With limited hardware resources, it suppresses the transmission of useless features and preserves the expression of effective ones, which improves the segmentation results. Our method also shows some improvement over the baseline when slight noise is added. For smaller objects, objects with finer details, and distant objects, such as bicycle tires, fences, poles, and people in the distance, our method improves on the other methods, although there is still room for improvement. Experiments show that the proposed DPNet model segments images with high precision and performs well on two public datasets.
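To illustrate how information can flow between channel groups in an attention module of this kind, the sketch below shows a channel shuffle step as it is commonly described for SA-Net: channels are viewed as a (groups, channels per group) grid, transposed, and flattened. This is a simplified illustration on a plain list of channel indices; the function name and the group count are assumptions, and it is not the implementation used in DPNet.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups.

    Equivalent to viewing the channel axis as (groups, per_group),
    transposing it to (per_group, groups), and flattening it again.
    """
    if len(channels) % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = len(channels) // groups
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


# Six channels split into two groups: [0, 1, 2] and [3, 4, 5].
print(channel_shuffle([0, 1, 2, 3, 4, 5], groups=2))  # [0, 3, 1, 4, 2, 5]
```

After the shuffle, each pair of neighbouring channels comes from different groups, so later layers that process contiguous channels see features from both groups.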

Author Contributions

Conceptualization, J.W., T.Y. and A.T.; methodology, J.W.; software, J.W. and X.Z.; validation, J.W. and X.Z.; formal analysis, J.W.; investigation, J.W.; resources, T.Y.; data curation, J.W.; writing—original draft preparation, J.W., T.Y., X.Z. and A.T.; writing—review and editing, J.W. and X.Z.; project administration, T.Y. and A.T.; funding acquisition, T.Y. and A.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Provincial Natural Science Foundation of Zhejiang, grant number LTGG23E090002.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mo, Y.; Wu, Y.; Yang, X.; Liu, F.; Liao, Y. Review the state-of-the-art technologies of semantic segmentation based on deep learning. Neurocomputing 2022, 493, 626–646.

- Feng, D.; Haase-Schutz, C.; Rosenbaum, L.; Hertlein, H.; Glaser, C.; Timm, F.; Wiesbeck, W.; Dietmayer, K. Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Trans. Intell. Transp. Syst. 2021, 22, 1341–1360.

- Li, J.; Jiang, F.; Yang, J.; Kong, B.; Gogate, M.; Dashtipour, K.; Hussain, A. Lane-deeplab: Lane semantic segmentation in automatic driving scenarios for high-definition maps. Neurocomputing 2021, 465, 15–25.

- Wang, H.; Chen, Y.; Cai, Y.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. Sfnet-n: An improved sfnet algorithm for semantic segmentation of low-light autonomous driving road scenes. IEEE Trans. Intell. Transp. Syst. 2022, 23, 21405–21417.

- Zhou, W.; Liu, J.; Lei, J.; Yu, L.; Hwang, J.-N. Gmnet: Graded-feature multilabel-learning network for rgb-thermal urban scene semantic segmentation. IEEE Trans. Image Process. 2021, 30, 7790–7802.

- Emek Soylu, B.; Guzel, M.S.; Bostanci, G.E.; Ekinci, F.; Asuroglu, T.; Acici, K. Deep-learning-based approaches for semantic segmentation of natural scene images: A review. Electronics 2023, 12, 2730.

- Gu, J.; Bellone, M.; Sell, R.; Lind, A. Object segmentation for autonomous driving using iseauto data. Electronics 2022, 11, 1119.

- Heller, N.; Isensee, F.; Maier-Hein, K.H.; Hou, X.; Xie, C.; Li, F.; Nan, Y.; Mu, G.; Lin, Z.; Han, M.; et al. The state of the art in kidney and kidney tumor segmentation in contrast-enhanced ct imaging: Results of the kits19 challenge. Med. Image Anal. 2021, 67, 101821.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet plus plus: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med. Imag. 2020, 39, 1856–1867.

- Chin, C.-L.; Lin, J.-C.; Li, C.-Y.; Sun, T.-Y.; Chen, T.; Lai, Y.-M.; Huang, P.-C.; Chang, S.-W.; Sharma, A.K. A novel fuzzy dbnet for medical image segmentation. Electronics 2023, 12, 2658.

- Jia, J.; Song, J.; Kong, Q.; Yang, H.; Teng, Y.; Song, X. Multi-attention-based semantic segmentation network for land cover remote sensing images. Electronics 2023, 12, 1347.

- Gibril, M.B.A.; Shafri, H.Z.M.; Al-Ruzouq, R.; Shanableh, A.; Nahas, F.; Al Mansoori, S. Large-scale date palm tree segmentation from multiscale uav-based and aerial images using deep vision transformers. Drones 2023, 7, 93.

- Wang, X.; Shu, L.; Han, R.; Yang, F.; Gordon, T.; Wang, X.; Xu, H. A survey of farmland boundary extraction technology based on remote sensing images. Electronics 2023, 12, 1156.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 833–851.

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

- Xiao, T.; Liu, Y.; Zhou, B.; Jiang, Y.; Sun, J. Unified perceptual parsing for scene understanding. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 432–448.

- Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X.; et al. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3349–3364.

- Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the 32nd IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3141–3149.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the 30th IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Zhu, Z.; Xu, M.; Bai, S.; Huang, T.; Bai, X. Asymmetric non-local neural networks for semantic segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 593–602.

- Lian, X.; Pang, Y.; Han, J.; Pan, J. Cascaded hierarchical atrous spatial pyramid pooling module for semantic segmentation. Pattern Recognit. 2020, 110, 107622.

- Jiang, D.; Qu, H.; Zhao, J.; Zhao, J.; Hsieh, M.-Y. Aggregating multi-scale contextual features from multiple stages for semantic image segmentation. Connect. Sci. 2021, 33, 605–622.

- Zhu, Q.; Liao, C.; Hu, H.; Mei, X.; Li, H. Map-net: Multiple attending path neural network for building footprint extraction from remote sensed imagery. IEEE Trans. Geosci. Remote Sens. 2021, 59, 6169–6181.

- Dai, Y.; Wang, J.; Li, J.; Li, J. Pdbnet: Parallel dual branch network for real-time semantic segmentation. Int. J. Control. Autom. Syst. 2022, 20, 2702–2711.

- Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. arXiv 2020, arXiv:1911.09070.

- Ou, X.; Wang, H.; Zhang, G.; Li, W.; Yu, S. Semantic segmentation based on double pyramid network with improved global attention mechanism. Appl. Intell. 2023, 53, 18898–18909.

- Lin, Z.; Sun, W.; Tang, B.; Li, J.; Yao, X.; Li, Y. Semantic segmentation network with multi-path structure, attention reweighting and multi-scale encoding. Vis. Comput. 2023, 39, 597–608.

- Jia, W.K.; Tian, Y.Y.; Luo, R.; Zhang, Z.H.; Lian, J.; Zheng, Y.J. Detection and segmentation of overlapped fruits based on optimized mask r-cnn application in apple harvesting robot. Comput. Electron. Agric. 2020, 172, 105380.

- Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing geometric factors in model learning and inference for object detection and instance segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.

- Sinha, A.; Dolz, J. Multi-scale self-guided attention for medical image segmentation. IEEE J. Biomed. Health Inform. 2021, 25, 121–130.

- Cheng, H.K.; Chung, J.; Tai, Y.-W.; Tang, C.-K. Cascadepsp: Toward class-agnostic and very high-resolution segmentation via global and local refinement. arXiv 2020, arXiv:2005.02551.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. arXiv 2020, arXiv:1910.03151.

- Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the 15th European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zhang, Q.-L.; Yang, Y.-B. SA-Net: Shuffle attention for deep convolutional neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 2235–2239.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the 31st IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 27–30 June 2016; pp. 3213–3223.

- Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).