Abstract

Inadequate illumination often causes severe image degradation, such as noise and artifacts. These types of images do not meet the requirements of advanced visual tasks, so low-light image enhancement is currently a flourishing and challenging research topic. To alleviate the problem of low brightness and low contrast, this paper proposes an improved zero-shot Retinex network, named IRNet, which is composed of two parts: a Decom-Net and an Enhance-Net. The Decom-Net is designed to decompose the raw input into two maps, i.e., illuminance and reflection. Afterwards, the subsequent Enhance-Net takes the decomposed illuminance component as its input, enhances the image brightness and features through gamma transformation and a convolutional network, and fuses the enhanced illumination and reflection maps together to obtain the final enhanced result. Due to the use of zero-shot learning, no previous training is required. IRNet depends on the internal optimization of each individual input image, and the network weights are updated by iteratively minimizing a series of designed loss functions, in which noise reduction loss and color constancy loss are introduced to reduce noise and relieve color distortion during the image enhancement process. Experiments conducted on public datasets and the presented practical applications demonstrate that our method outperforms other counterparts in terms of both visual perception and objective metrics.

1. Introduction

Inadequate lighting during image capture can lead to poor contrast, high noise levels, and a loss of information [1]. Advanced vision tasks such as image segmentation, image recognition, and target detection [2] require high-quality normal-light images, and intelligent applications such as night driving and night photography likewise assume normally lit images or video. Therefore, it is necessary to enhance the brightness of low-light images to restore image contrast and recover more image information [3,4].

In the past, researchers have proposed numerous conventional methods for improving low-light images, most of which are either Histogram Equalization (HE)-based methods [5] or Retinex-based methods [6]. Among the HE-based methods is Local Histogram Equalization (LHE) [7], which enhances the local detail of an image by dividing the image into blocks and processing each block locally. Methods based on Retinex theory estimate the illuminance and reflection components of an image and adjust the pixel dynamic range of the illuminance component to enhance the brightness of the original image. The main methods in this category are Single-Scale Retinex (SSR) [8], Multi-Scale Retinex (MSR) [9], and Multi-Scale Retinex with Chromaticity Preservation (MSRCP) [10]. In recent years, learning-based methods have achieved good results in the field of image processing, and such methods achieve low-light image enhancement through the construction of effective models [11,12]. However, all of these methods have drawbacks: traditional methods increase the brightness of an image but significantly amplify its noise, while most deep-learning-based methods require a large image dataset for training (see Figure 1) [13]. Therefore, a relevant research direction consists of avoiding these problems while simultaneously improving the brightness of the images.

Figure 1.

Examples of enhancement effects of existing low-light image enhancement methods. The upper row shows the inputs, while the lower row displays the corresponding results.

In this paper, we present a new zero-shot learning method for low-light image enhancement. The method is based on Retinex theory and consists of a Decom-Net and an Enhance-Net. Retinex is an image decomposition theory which maintains that the color and brightness of an object perceived by the human eye depend on the reflective characteristics of the object’s surface. Based on this, an image is divided into an illuminance component, which contains the brightness information of the image, and a reflection component, which contains the color information of the image. The Decom-Net decomposes the low-light image into illumination and reflection components. Unlike approaches that perform noise reduction with a dedicated deep learning model, our method introduces a noise suppression loss to suppress the noise in the reflection component. The input provided to the Enhance-Net is the illumination component decomposed from the low-light image, and the network structure avoids a loss of detail while enhancing the illumination component. Finally, the desired enhancement result is obtained by combining the reflection component from the Decom-Net with the illumination component from the Enhance-Net. By designing an effective Decom-Net, an Enhance-Net, and a reasonable loss function, our method can not only effectively enhance image brightness and contrast but also remarkably suppress image noise.

Based on the above analysis, this paper proposes an effective low-light image enhancement method in accordance with Retinex theory, providing the following main contributions:

(1) We propose a new effective low-light image enhancement method that consists of two parts: a Decom-Net and an Enhance-Net. The method incorporates the procedures of decomposition, noise reduction, and enhancement, which can effectively enhance image brightness and contrast while suppressing image noise.

(2) The method reported in this paper is a zero-shot network that neither relies on large-scale image datasets nor requires prior training; it achieves image decomposition and noise suppression through carefully designed loss functions.

(3) The experimental results obtained using paired image datasets as well as real low-light images show that the method reported in this paper provides a significant improvement over existing methods in terms of objective evaluation metrics and subjective visual quality. In a practical application, our method also improves nighttime face detection.

The rest of the paper is organized as follows. Section 2 mainly reviews the deep-learning-based methods for low-light image enhancement. Section 3 describes the network structure and the loss function proposed in this paper. Section 4 provides the experimental results and a discussion. Section 5 concludes the paper.

2. Related Work

For some time now, deep-learning-based methods have been the dominant approach in the field of low-light image enhancement, and several researchers have presented their findings. Deep-learning-based methods for low-light image enhancement can be divided into four main categories: fully supervised learning, semi-supervised learning, unsupervised learning, and zero-shot learning.

Fully supervised learning accounts for the largest number of methods. One example is LLNet [14], the first deep learning network to be used for low-light image enhancement, whose main role is to improve image contrast and remove noise. RetinexNet [15], proposed by Wei et al., is the first fully supervised network to combine deep learning and Retinex theory. The network comprises a Decom-Net, which obtains an estimated illumination component by constraining the reflectance component of a low/normal-light image pair; a denoising step, which uses BM3D [16] for noise reduction; and an enhancement network, which maintains consistency over large regions of an image through multi-scale connectivity. However, there is a noticeable degree of color distortion when using this method for enhancement. Subsequently, more methods based on deep learning and Retinex theory have been proposed, such as R2RNet [17] and Retinex-Net [18], but such methods rely on paired datasets; the available paired datasets are few in number and consist almost entirely of synthetic images, which has a significant impact on the experimental results.

Semi-supervised learning networks have been around for a relatively short period of time and are associated with the fewest approaches in the field of low-light image enhancement. Yang et al. proposed a Deep Recurrent Band Network (DRBN) [19] based on paired image datasets, where each band signal is first learned for recovery, with the main objective of this stage being to guarantee signal fidelity and detail recovery. Band reorganization is then performed on the unpaired dataset for image enhancement. Although semi-supervised learning reduces the need for paired datasets, it is still impossible to completely preclude reliance on these datasets.

A representative unsupervised learning method is EnlightenGAN [20], the first method to introduce unsupervised learning to low-light image enhancement. It uses a convolutional network guided by an attention mechanism as the generator and a double discriminator to guide global and local information, while applying a feature-preserving loss to retain the content features of an image during training. Zhang et al. [21] proposed HEP, which combines HE with unsupervised networks. This method introduces a noise disentanglement module (NDM) that separates the noise in the reflectance map from the image content, and the results show that it separates noise well in relatively dark areas of an image. A new self-calibrating illumination framework (SCI) has been proposed by Ma et al. [22]. The authors use a self-calibrating module to construct a cascaded illumination learning process with weight sharing, achieving convergence between the results of each stage. Since the method contains only three convolutional layers, its computational cost is greatly reduced.

The unique advantage of zero-shot networks is that they do not require paired or unpaired image datasets for training. The enhancement of images is mainly achieved by constructing a series of carefully designed loss functions. For example, Zero-DCE, proposed by Guo et al. [23], is a representative zero-reference low-light image enhancement method that estimates image-specific, pixel-wise curves to adjust the dynamic range of the input and is trained with non-reference loss functions. RRDNet [24] also belongs to the family of zero-shot learning methods and is characterized by a multi-branch network in which one branch extracts image noise for noise reduction purposes.

In contrast to the existing methods, we propose a method that combines zero-shot networks and Retinex theory, consisting of a Decom-Net and an Enhance-Net. In our method, a low-light image is decomposed into illuminance and reflection components via Decom-Net, and noise reduction is accomplished using a designed loss function, while the Enhance-Net is used to enhance the brightness of the illuminance component. Experiments have shown that this method is very adept at enhancing image brightness while achieving contrast enhancement and noise suppression.

3. Methodology

In this section, we comprehensively describe the network structure and loss function of the method proposed in this paper.

3.1. Network Architecture

Our proposed zero-shot network is shown in Figure 2. It contains a decomposition network and an enhancement network. The Decom-Net decomposes the input image into illumination and reflection components and suppresses the noise in the reflection component by means of the proposed noise suppression loss function. The Enhance-Net takes the decomposed illuminance component as an input and enhances its luminance and contrast. As a result, the method can increase the brightness of low-light images, improve image contrast, and suppress image noise, resulting in higher-quality normal-light images.

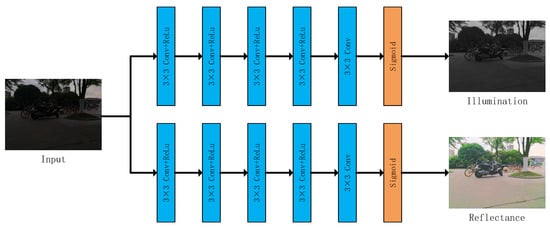

Figure 2.

The overall framework of our proposed IRNet.

Decom-Net: The Decom-Net model is shown in Figure 3. Following the Retinex model, the Decom-Net decomposes the input image (S) into an illumination component (I) and a reflection component (R), as expressed in the following equation:

$$S = R \circ I$$

where $\circ$ denotes element-wise multiplication.

Figure 3.

The proposed Decom-Net architecture. The Decom-Net decomposes an image into illumination and reflection maps.

The Decom-Net adopts a dual-branch fully convolutional network, where each branch consists of five 3 × 3 convolutional layers followed by a sigmoid activation function. The two branches estimate the illumination component and the reflectance component, respectively. The sigmoid function ensures that the intensity values are bounded between 0 and 1. The final decomposition results are obtained by iteratively updating the network weights to minimize the loss function.
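The dual-branch structure described above can be sketched as a plain NumPy forward pass. This is only an illustrative sketch under stated assumptions: the hidden width (8 channels), the ReLU between intermediate layers, the random initialization, the single-channel illumination output, and the helper names `conv3x3`, `make_branch`, and `run_branch` are not taken from the paper. Only the five 3 × 3 layers per branch and the final sigmoid follow the description.

```python
import numpy as np


def conv3x3(x, w, b):
    """Same-size 3x3 convolution; x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="edge")  # replicate borders
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            patch = xp[:, dy:dy + h, dx:dx + wd]            # shifted view, (C_in, H, W)
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], patch)
    return out + b[:, None, None]


def make_branch(rng, c_in, c_out, c_hidden=8, n_layers=5):
    """Random weights for one branch (the hidden width is an assumed value)."""
    chans = [c_in] + [c_hidden] * (n_layers - 1) + [c_out]
    return [(rng.normal(0.0, 0.1, (chans[i + 1], chans[i], 3, 3)),
             np.zeros(chans[i + 1])) for i in range(n_layers)]


def run_branch(x, layers):
    """Five conv layers; ReLU in between is an assumption, sigmoid at the end."""
    for i, (w, b) in enumerate(layers):
        x = conv3x3(x, w, b)
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return 1.0 / (1.0 + np.exp(-x))  # bounds the output between 0 and 1


rng = np.random.default_rng(0)
S = rng.random((3, 16, 16))                      # toy RGB input in [0, 1]
illum_branch = make_branch(rng, c_in=3, c_out=1)
refl_branch = make_branch(rng, c_in=3, c_out=3)
I = run_branch(S, illum_branch)                  # illumination map, (1, 16, 16)
R = run_branch(S, refl_branch)                   # reflectance map, (3, 16, 16)
```

In a zero-shot setting, the weights of both branches would be optimized on the single input image rather than loaded from a pre-trained model; the sketch shows only the forward pass.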

Enhance-Net: The Enhance-Net is shown in Figure 4. Enhancing the illumination component is a central part of low-light image enhancement: once the brightness of the illumination component has been increased, combining it with the decomposed reflection component yields a normally illuminated image. Inspired by the stage-wise illumination optimization proposed in SCI [22], this paper proposes a progressive illumination module called IR. The module consists of convolutional layers, batch normalization, and ReLU layers applied in an iterative manner. The convolutional layers of the IR units all have 64 channels. The IR units make full use of multi-scale fusion, combining the output of the previous IR unit with the input of the current IR unit and thereby reducing the loss of feature information. Additionally, we apply gamma correction to adjust the brightness and contrast of an image, making it clearer. Gamma correction [25] reflects the fact that human perception of image brightness is non-linear. The essence of the gamma transformation is a non-linear operation on the input image that yields a power-law relationship between the grayscale values of the output image and those of the input image. Its purpose is to enhance the details in the dark regions of an image, making the image’s exposure more closely aligned with human perception. Finally, the network output and the gamma correction results are adaptively fused to obtain the enhanced illuminance component.

Figure 4.

The proposed Enhance-Net architecture. The Enhance-Net takes the illumination component as an input and aims to improve its brightness and contrast. (a) The illumination map; (b) The illumination map generated by Enhance-Net.
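The gamma transformation and the fusion step can be illustrated with a short NumPy sketch. The gamma value of 0.5, the fixed fusion weight, the doubling used as a stand-in for the convolutional output, and the function names `gamma_transform` and `fuse` are all assumptions made for illustration; the paper describes the fusion as adaptive and does not give these details here.

```python
import numpy as np


def gamma_transform(illum, gamma=0.5):
    """Power-law mapping; gamma < 1 brightens dark regions of a [0, 1] map."""
    return np.clip(illum, 0.0, 1.0) ** gamma


def fuse(net_out, gamma_out, weight):
    """Convex combination of the two candidate maps (weight in [0, 1])."""
    return weight * net_out + (1.0 - weight) * gamma_out


illum = np.array([[0.04, 0.25], [0.49, 1.00]])
brightened = gamma_transform(illum, gamma=0.5)   # square root of each value
net_out = np.clip(illum * 2.0, 0.0, 1.0)         # stand-in for the CNN output
enhanced = fuse(net_out, brightened, weight=0.5)
```

With gamma = 0.5, a dark value of 0.04 is lifted to 0.2 while a value of 1.0 is left unchanged, which is why the transformation reveals detail in dark regions without clipping bright ones.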

The final image is obtained by fusing the enhanced illuminance component and the decomposed reflection component R through element-wise multiplication, i.e., each pixel of R is multiplied by the corresponding pixel of the enhanced illuminance component. The results of each component and the enhancement effect of our method are shown in Figure 5.

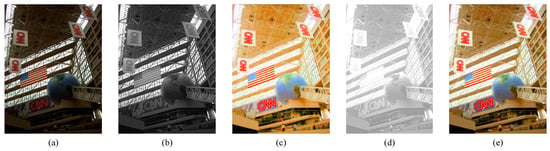

Figure 5.

Example of low-illumination image decomposition. (a) The input image; (b) The illumination map; (c) The reflectance map; (d) The illumination map generated by Enhance-Net; (e) The output image.
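The recombination step can be sketched as follows. This is a minimal illustration, assuming a single-channel illumination map that is broadcast over the three color channels and a toy brightening with gamma = 0.4; the function name `recompose` and all numeric values are hypothetical.

```python
import numpy as np


def recompose(reflectance, illumination):
    """Element-wise product; illumination (H, W) is broadcast over RGB."""
    return np.clip(reflectance * illumination[..., None], 0.0, 1.0)


rng = np.random.default_rng(0)
R = rng.random((4, 4, 3))             # toy reflectance map in [0, 1]
I_low = np.full((4, 4), 0.1)          # dark illumination map
I_enh = I_low ** 0.4                  # toy brightening (gamma = 0.4, assumed)

S_low = recompose(R, I_low)           # simulated low-light input
S_out = recompose(R, I_enh)           # re-illuminated result
```

Because the reflectance map is shared between `S_low` and `S_out`, the colors stay fixed and only the brightness changes, which is the property the Retinex decomposition is meant to provide.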

3.2. Loss Function

In this paper, the network structure is divided into two main parts, namely, the Decom-Net and the Enhance-Net, so the loss function is likewise divided into a decomposition loss and an enhancement loss. The decomposition loss mainly consists of the illuminance component loss (Li) and the reflection component loss (Lr), whose purpose is to achieve the decomposition of low-light images. The expressions for the loss function of the illuminance component and the loss function of the reflection component are as follows:

where x and y denote the horizontal and vertical gradients, respectively; I is the illuminance component; R is the reflection component; and Y is the luminance (Y) component extracted after RGB-to-YCbCr conversion. The per-pixel maximum of the R, G, and B channel intensities is also used, as it often serves as an initial estimate of the illuminance component in other Retinex-based Decom-Nets.
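The quantities named above (the Y component, the per-pixel maximum over the color channels, and the horizontal and vertical gradients) can be computed as in the following NumPy sketch. The ITU-R BT.601 luma weights and the forward-difference gradient are common choices and are assumptions here, as the paper does not state which variants it uses; the function names are hypothetical.

```python
import numpy as np


def luma_y(rgb):
    """Y of YCbCr using ITU-R BT.601 weights; rgb: (H, W, 3) in [0, 1]."""
    return rgb @ np.array([0.299, 0.587, 0.114])


def max_rgb(rgb):
    """Per-pixel maximum over the R, G, and B channels."""
    return rgb.max(axis=-1)


def gradients(img):
    """Forward differences along x (columns) and y (rows), zero at the far edge."""
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, :-1] = img[:, 1:] - img[:, :-1]
    gy[:-1, :] = img[1:, :] - img[:-1, :]
    return gx, gy


rgb = np.array([[[0.2, 0.4, 0.1], [0.6, 0.3, 0.3]]])   # 1 x 2 toy image
Y = luma_y(rgb)
I0 = max_rgb(rgb)                                       # initial illumination estimate
gx, gy = gradients(I0)
```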

The enhancement loss consists of a noise reduction loss function and a color constancy loss function. The noise reduction loss function is used to reduce the noise in the illuminance component so that monotonicity is maintained between adjacent pixels. Therefore, we added a noise reduction loss function to the illuminance component enhancement process using the following expression:

where M is the number of iterations, and x and y denote the horizontal and vertical gradients, respectively.
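As a rough illustration of a gradient-based smoothness penalty, the sketch below uses a total-variation-style loss. This is a stand-in chosen for illustration and is an assumption: it is not the paper's exact noise reduction loss, and the function name `tv_smoothness` and the noise level are hypothetical.

```python
import numpy as np


def tv_smoothness(img):
    """Mean absolute horizontal plus vertical forward difference of a 2-D map."""
    dx = np.abs(img[:, 1:] - img[:, :-1]).mean()
    dy = np.abs(img[1:, :] - img[:-1, :]).mean()
    return dx + dy


rng = np.random.default_rng(0)
smooth = np.tile(np.linspace(0.2, 0.8, 32), (32, 1))     # clean horizontal ramp
noisy = smooth + rng.normal(0.0, 0.05, smooth.shape)     # same ramp plus noise

loss_smooth = tv_smoothness(smooth)
loss_noisy = tv_smoothness(noisy)
```

The noisy map yields a larger penalty than the clean ramp, so minimizing such a term pushes the illumination map toward smooth variation between adjacent pixels.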

According to the color constancy theory [26], the average of each color channel over an image tends toward gray, so a color constancy loss function is introduced to compensate for possible color deviations during decomposition by establishing a relationship between the three color channels. The expression is as follows:

Here, the loss is computed from the average intensity of each color channel b in the image, and (b, d) denotes a pair of color channels.
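A gray-world color constancy penalty over channel pairs can be sketched as below. The squared-difference form over the pairs (R, G), (R, B), and (G, B) follows a formulation commonly used in zero-reference enhancement and is an assumption about the exact expression used in this paper; the function name is hypothetical.

```python
import numpy as np
from itertools import combinations


def color_constancy_loss(rgb):
    """Sum of squared differences between channel means over all channel pairs."""
    means = rgb.reshape(-1, 3).mean(axis=0)          # average intensity per channel
    return sum((means[b] - means[d]) ** 2 for b, d in combinations(range(3), 2))


gray_world = np.full((8, 8, 3), 0.5)                 # equal channel means
color_cast = gray_world * np.array([1.0, 0.8, 0.6])  # reddish cast

loss_gray = color_constancy_loss(gray_world)
loss_cast = color_constancy_loss(color_cast)
```

An image whose channel means are equal incurs no penalty, whereas the color-cast image, with channel means of 0.5, 0.4, and 0.3, incurs a penalty of 0.01 + 0.04 + 0.01 = 0.06.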

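The total loss is the weighted sum of the illuminance component loss, the reflection component loss, the noise reduction loss, and the color constancy loss, where each weight is the coefficient of the corresponding loss function.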
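The weighted combination of loss terms can be sketched as follows. The per-term loss values are invented for illustration, and the assignment of the reported coefficient values (1, 1, 0.2, and 0.2, from the experimental setup in Section 4.1) to particular terms is an assumption, since the mapping is not recoverable from the text.

```python
def total_loss(terms, weights):
    """Weighted sum of named loss terms; both dicts must share the same keys."""
    if terms.keys() != weights.keys():
        raise ValueError("terms and weights must have identical keys")
    return sum(weights[name] * terms[name] for name in terms)


# Hypothetical per-iteration loss values for one input image.
terms = {"illuminance": 0.30, "reflection": 0.20, "noise": 0.50, "color": 0.10}
# Reported coefficient values; their assignment to terms is assumed.
weights = {"illuminance": 1.0, "reflection": 1.0, "noise": 0.2, "color": 0.2}

loss = total_loss(terms, weights)
```

In a zero-shot setting, this scalar would be recomputed and minimized at every iteration for the single image being enhanced.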

4. Experimental Results and Analysis

4.1. Subjective Evaluation

To test the performance of our method, we used an experimental platform consisting of a computer (with 32 GB of RAM, an NVIDIA GeForce RTX 2080 Ti GPU, and an Intel i9-9900 CPU) and PyCharm with an Anaconda Python 3.7 interpreter and the PyTorch 1.12.1 framework. Because this paper presents a zero-shot network approach, preparing training and validation sets was unnecessary. The four loss coefficients were set empirically to 1, 1, 0.2, and 0.2, respectively.

For supervised learning networks, pairs of images are generally selected for training and testing, while GAN networks focus on unpaired image datasets for testing and evaluation. To better demonstrate our method’s effectiveness, the LOL dataset, which is a paired image dataset [15]; the LIME dataset, which is an unpaired image dataset [27]; the NPE dataset [28]; and the DICM [29] dataset were selected for testing and evaluation.

Traditional and deep-learning-based methods were selected for comparison. The traditional methods include HE [5], Retinex [6], and MSRCP [10], while for the deep-learning-based methods, we selected RetinexNet [15], EnlightenGAN [20], RRDNet [24], Zero-DCE [23], IceNet [30], and SCI [22]. For the sake of fairness, the compared methods were implemented using their source code published in the corresponding articles without any modifications.

Figure 6 shows a visual comparison between the existing advanced methods and our method on samples from the LOL dataset. The results of the traditional methods show more serious color distortion than those of the other methods, and there is local color distortion in the image enhanced by EnlightenGAN. The brightness improvement offered by RRDNet is not apparent, leaving much room for improvement. The results of Zero-DCE and SCI differ noticeably from the actual image: the color of the background palette is lighter than in the result obtained by our method, which is closer to the actual image in terms of visual effect.

Figure 6.

Comparison of image enhancement results of different methods applied to the LOL dataset: (a) Input; (b) HE [5]; (c) Retinex [6]; (d) MSRCP [10]; (e) RetinexNet [15]; (f) EnlightenGAN [20]; (g) RRDNet [24]; (h) Zero-DCE [23]; (i) Ice-Net [30]; (j) SCI [22]; (k) IRNet (our method); (l) Normal-light image.

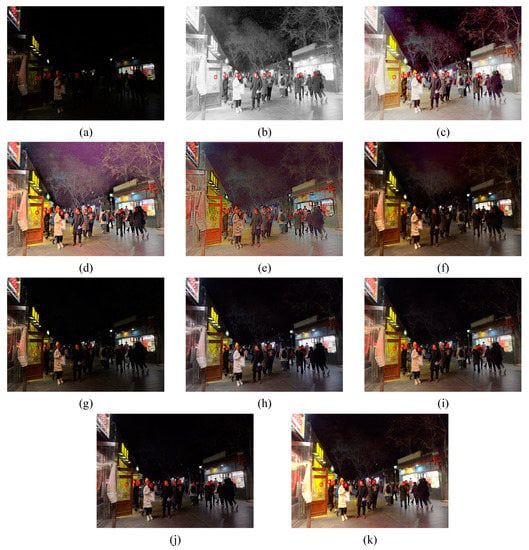

Figure 7 compares the results on the street images in the NPE dataset after enhancement via the different methods. The results of the conventional methods, RetinexNet, and Zero-DCE all show serious noise interference, and some areas of the Retinex image are overexposed. The SCI image presents distorted color and a loss of detail in the sky and white cloud areas, and the RRDNet image shows a lesser degree of brightness enhancement than the proposed method.

Figure 7.

Comparison of image enhancement results of different methods applied to the NPE dataset: (a) Input; (b) HE [5]; (c) Retinex [6]; (d) MSRCP [10]; (e) RetinexNet [15]; (f) EnlightenGAN [20]; (g) RRDNet [24]; (h) Zero-DCE [23]; (i) Ice-Net [30]; (j) SCI [22]; (k) IRNet (our method).

Figure 8 compares the enhancement effect of the advanced methods with that of our method on sample images from the LIME dataset. The images enhanced by RetinexNet and RRDNet are blurred in some areas, which seriously affects their visual quality, and backlighting that should not appear is visible around the buildings in the image enhanced by RRDNet. Compared with the original image, the results of Zero-DCE and SCI render the sky area white. In contrast, our method improves the image saturation and brightness while retaining the image color information.

Figure 8.

Comparison of image enhancement results of different methods applied to the LIME dataset: (a) Input; (b) HE [5]; (c) Retinex [6]; (d) MSRCP [10]; (e) RetinexNet [15]; (f) EnlightenGAN [20]; (g) RRDNet [24]; (h) Zero-DCE [23]; (i) Ice-Net [30]; (j) SCI [22]; (k) IRNet (our method).

Figure 9 compares the existing advanced enhancement methods and our method on image samples from the DICM dataset. It is clear from the figure that all the methods effectively improved the brightness of the images, but the results are very different. The enhancement effect of the traditional methods is poorer than that of the others. Among the deep-learning-based methods, the top area of the mall in the image enhanced by EnlightenGAN shows locally uneven color, and RRDNet and Zero-DCE demonstrated less of an enhancement effect than the other methods. Our method has a better visual effect than the other methods, significantly reducing image noise while retaining image detail. Additionally, the color distribution in the images enhanced by our method is uniform.

Figure 9.

Comparison of image enhancement results of different methods applied to the DICM dataset: (a) Input; (b) HE [5]; (c) Retinex [6]; (d) MSRCP [10]; (e) RetinexNet [15]; (f) EnlightenGAN [20]; (g) RRDNet [24]; (h) Zero-DCE [23]; (i) Ice-Net [30]; (j) SCI [22]; (k) IRNet (our method).

To make the subjective visual evaluation fairer, we selected ten low-light images from our own environment and enhanced them using the methods described above. Ten testers were invited to rate the results of the different methods on a scale from one to five, with five being the best and one being the worst. The testers’ ratings are shown in Figure 10, from which it can be seen that our method scored the best, reflecting its subjective advantage.

Figure 10.

Distribution chart of subjective scoring results. Our method presents better results.

4.2. Objective Evaluation

We evaluated the methods using objective metrics in addition to subjective visual evaluation. The objective metrics we chose are PSNR [31], SSIM [32], LPIPS [33], and VIF [34], which are among the most widely used metrics for evaluating low-light image enhancement. The higher the PSNR value, the higher the quality of the image. SSIM measures the similarity between two images. Instead of comparing errors at the pixel level, it compares the images at three levels, namely, brightness, contrast, and structure, where structure is the main factor. SSIM ranges over [0, 1]; a larger value means a smaller difference between the reference image and the processed image, and a value of 1 means that the two images are identical. The LPIPS metric considers structural and semantic information about an image and is closer to the way the human visual system perceives and recognizes an image; the smaller the LPIPS value, the more perceptually similar the two images are. The VIF metric measures the fidelity of visual information between two images; a higher VIF value means that the two images are closer in appearance and thus that the processed image is of better quality, whereas a lower VIF value means that the two images are more disparate and thus that the quality is lower.
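As a concrete reference for the simplest of these metrics, PSNR can be computed from the mean squared error as in the sketch below. It assumes images scaled to [0, 1] (so the peak value is 1); the function name `psnr` and the toy images are illustrative only.

```python
import numpy as np


def psnr(reference, test, peak=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    if mse == 0:
        return float("inf")        # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


ref = np.full((8, 8), 0.5)
out = ref + 0.1                    # uniform error of 0.1, so MSE = 0.01
value = psnr(ref, out)             # 10 * log10(1 / 0.01) = 20 dB
```

Because PSNR is logarithmic in the mean squared error, halving the error amplitude raises the score by about 6 dB.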

Traditional and deep-learning-based methods were selected for comparison. The traditional methods include HE, Retinex, and MSRCP, while for the deep-learning-based methods, we selected RetinexNet, EnlightenGAN, RRDNet, Zero-DCE, IceNet, and SCI. To ensure fairness, the compared methods were run using the code published with their respective articles without any modifications. Because we used the LOL dataset to evaluate the objective metrics, RetinexNet was trained on the LOL dataset. In contrast, EnlightenGAN and Zero-DCE use unpaired images, so we used the pre-trained models provided by their authors for testing.

The LOL dataset is an image dataset dedicated to low-light image enhancement. It consists of 500 pairs of real low/normal-light images, which Wei et al. divided into 485 training pairs and 15 test pairs. Because it is one of the most widely used datasets, it was chosen for the objective metric comparison in this paper. The results of the comparison are shown in Table 1. As seen from the table, our method achieves the best PSNR, leading the second-place method by 0.6258 dB. Its SSIM score is 0.658, which ranks second among all the methods and trails the first-place method by 0.04. However, RetinexNet is a fully supervised network that needs to be trained on a dataset, whereas our method requires no training dataset and still holds a significant lead over the other methods. Although our method did not finish in the top two in terms of LPIPS, it was ahead of most of the approaches. It also achieves the second-best VIF, showing that the proposed method yields a result that is closer in appearance to the actual image.

Table 1.

Quantitative comparison using the LOL dataset in terms of PSNR, SSIM, and LPIPS (the best result is shown in bold; the second-best result is underlined).

For a fairer objective comparison, we also evaluated the proposed model on several reference-free image datasets, including LIME, DICM, and Fusion, as shown in Table 2. These datasets are widely used to test the generalization capability of low-light image enhancement networks. Because they do not have reference images, we evaluated them using entropy, a reference-free metric that reflects the average amount of information in an image; the higher the entropy value, the more information the image contains.
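The entropy metric can be computed from the gray-level histogram as in the following sketch. It assumes an 8-bit grayscale image and the base-2 Shannon entropy; whether the paper computes entropy on grayscale or per color channel is not stated, so this choice and the function name `image_entropy` are assumptions.

```python
import numpy as np


def image_entropy(gray_u8):
    """Shannon entropy (bits per pixel) of an 8-bit grayscale histogram."""
    hist = np.bincount(gray_u8.ravel(), minlength=256).astype(float)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


flat = np.full((4, 4), 128, dtype=np.uint8)                  # one gray level
two_level = np.array([[0, 255], [255, 0]], dtype=np.uint8)   # two equally likely levels

h_flat = image_entropy(flat)       # 0 bits
h_two = image_entropy(two_level)   # 1 bit
```

A uniform image carries no information and scores 0, while an 8-bit image can score at most 8 bits, which is reached only when all 256 gray levels are equally likely.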

Table 2.

Quantitative comparison using the LIME, MEF, and NPE datasets in terms of entropy (the best result is shown in bold; the second-best result is underlined).

Table 2 shows the entropy values for the proposed method and for all the compared methods. The proposed method achieves the best values on the LIME and Fusion datasets and the second-best value on the DICM dataset. This result indicates that the images enhanced by the proposed method are clearer and carry more image information.

4.3. Low-Light Face Detection Performance

A large part of the purpose of low-light enhancement is to pave the way for advanced vision tasks such as face recognition, path recognition, and intelligent driving [35]. To demonstrate that our method can serve as an effective preprocessing step for advanced vision tasks, we used DSFD [36], a face detection algorithm proposed in 2019. The dataset we used is the DarkFace dataset [37], which consists of images captured at night in various scenarios and is a standard dataset for application-oriented detection in the field of low-light image enhancement. The face detection results are shown in Figure 11.

Figure 11.

Performance of compared enhancement methods regarding face detection in dark environment. (a) Input; (b) HE [5]; (c) Retinex [6]; (d) MSRCP [10]; (e) RetinexNet [15]; (f) EnlightenGAN [20]; (g) RRDNet [24]; (h) Zero-DCE [23]; (i) Ice-Net [30]; (j) SCI [22]; (k) IRNet (our method).

As shown in Figure 11, although all the enhancement methods increase the number of faces detected to some degree, their performances vary significantly. For example, the traditional methods HE and MSRCP both produced detection errors, and some faces were not correctly detected after enhancement by RRDNet. In comparison, our proposed method achieved higher contrast while ensuring better detection.

5. Discussion of Limitations

While our proposed method outperforms the existing methods, further improvements are still needed. For example, in areas of an image containing similar colors, some fine details and textures may be lost, as observed in Figure 7. Our experiments also showed that IRNet may cause overexposure in originally bright areas of an image after enhancement. This may be partly due to the large dynamic range of low-light images, which leads to overexposure when the overall brightness of an image is increased. In our future work, we will explore methods for limiting the dynamic range of image brightness and further enhance the proposed approach. Nevertheless, in practical applications, aside from real-time performance limitations, IRNet can effectively enhance low-light images, particularly in zero-shot learning scenarios.

6. Conclusions

In summary, this paper presents a new framework for low-light image enhancement, namely, IRNet, which decomposes an image into illumination and reflection components based on Retinex theory and re-illuminates low-light images based on the complementary nature of illumination and reflection. It provides results that are superior to those yielded by many existing methods regarding image brightness, contrast, noise suppression, and color information. The advantages are evident both in the subjective visual comparison on multiple datasets and in the objective evaluation metrics PSNR, SSIM, LPIPS, VIF, and entropy. Practical applications show that this method can effectively improve the performance of night-time face detection. In the future, we will continue to study the method and apply it to more scenarios, such as very-low-light images and backlit image enhancement.

Author Contributions

Conceptualization, C.X. and H.T.; methodology, C.X.; software, H.T.; validation, H.Z.; formal analysis, L.F.; investigation, Y.H.; resources, C.X.; data curation, H.T.; writing—original draft preparation, H.T.; writing—review and editing, C.X.; visualization, H.T.; supervision, C.X.; project administration, C.X.; funding acquisition, C.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by the National Natural Science Foundation of China under Grant 61901221 and 62203012, in part by the Postgraduate Research and Practice Innovation Program of Jiangsu Province under Grant SJCX23_0322, in part by the Nanjing Forestry University College Student Practice and Innovation Training Program under Grant 2023NFUSPITP0070, in part by the State Visiting Scholar Program of China Scholarship Council under Grant 202208320239, and in part by the National Key Research and Development Program of China under Grant 2019YFD1100404.

Data Availability Statement

The data presented in this study are openly available at https://github.com/csjcai/SICE (accessed on 15 January 2018), https://ieeexplore.ieee.org/abstract/document/7782813 (accessed on 14 December 2016), https://daooshee.github.io/BMVC2018website/ (accessed on 10 August 2018), and https://flyywh.github.io/IJCV2021LowLight_VELOL/ (accessed on 11 January 2021).

Conflicts of Interest

The authors declare no conflict of interest.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).