1. Introduction

With the falling cost of drones, the civilian drone market has entered a period of rapid development. At the same time, deep-learning-based target detection has made remarkable progress in recent years, bringing drones and target detection technology closer together. Their integration can play an important role in many fields, such as crop detection [1], intelligent transportation [2], and search and rescue [3]. However, most target detection models are designed around natural scene image datasets, and natural scene images differ significantly from drone aerial images. Designing a target detection model specifically suited to the aerial drone perspective is therefore a meaningful and challenging task.

In practical application scenarios, real-time target detection on the unmanned aerial vehicle (UAV) aerial video stream places high demands on the detection speed of the model. Furthermore, unlike natural scene images, aerial images are captured from high altitude and contain a large number of small targets, for which few features can be extracted. In addition, the UAV's actual flight altitude often varies greatly, leading to drastic changes in object scale and low detection accuracy. Finally, complex scenes are often encountered during actual flight, and densely packed small targets may heavily occlude one another or be obscured by other objects or the background. In general, generic feature extractors [4,5,6] downsample the feature maps to reduce spatial redundancy and noise while learning high-dimensional features. However, this processing inevitably causes the representation of small objects to be lost. Additionally, in real-world scenarios, the background is diverse and complex, with varied textures and colors. Consequently, small objects are easily confused with background elements, which makes them harder to detect. In summary, a real-time target detection model for UAV aerial photography that handles dense small-target scenarios is needed to meet practical application requirements.
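As a rough illustration of why downsampling hurts small targets, the sketch below computes how many feature-map cells an object spans per side at different strides. The strides 8, 16, and 32 and the object sizes are assumed values typical of YOLO-style detectors, not figures taken from this paper, and `footprint_cells` is a hypothetical helper.

```python
# Illustrative arithmetic only; strides and object sizes are assumed values.
ASSUMED_STRIDES = (8, 16, 32)  # typical YOLO-style detection-head strides (assumption)


def footprint_cells(object_px: float, stride: int) -> float:
    """Side length of an object, in feature-map cells, after downsampling by `stride`."""
    return object_px / stride


for size in (8, 16, 64):
    cells = [footprint_cells(size, s) for s in ASSUMED_STRIDES]
    print(f"{size}px object -> {cells} cells per side at strides {ASSUMED_STRIDES}")
```

Under these assumptions, an 8-pixel object covers a single cell at stride 8 and only a quarter of a cell per side at stride 32, which is consistent with the argument that deeper, coarser feature maps carry little information about small targets.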

Object detection algorithms based on neural networks can generally be divided into two categories: two-stage detectors and one-stage detectors. Two-stage detection methods [7,8,9] first use region proposal networks (RPNs) to extract object regions, and the detection heads then take the region features as input for further classification and localization. In contrast, one-stage methods directly generate anchor priors on the feature map and then predict classification scores and coordinates. One-stage detectors have higher computational efficiency but often lag behind in accuracy. In recent years, the YOLO series of detection methods has been widely used for object detection in UAV aerial images because of its fast inference speed and good detection accuracy. YOLOv1 [10] was the first YOLO algorithm, and subsequent one-stage detection algorithms that build on it mainly include YOLOv2 [11], YOLOv3 [12], YOLOv4 [13], YOLOv5 [14], YOLOX [15], YOLOv6 [16], YOLOv7 [17], and YOLOv8 [18]. YOLO algorithms directly regress the coordinates and categories of objects, and this end-to-end approach significantly improves detection speed without sacrificing much accuracy, which meets the basic requirements of real-time object detection for unmanned systems.

Previous improvement methods for target detection in UAV aerial images can be categorized into three types: (i) utilizing more shallow feature information, such as adding small target detection layers [19]; (ii) enhancing the feature extraction capability of the target detection network, such as improving the Neck network [20] or introducing attention mechanisms [21]; and (iii) increasing input feature information, such as generating higher resolution images [22], image copying [23], and image cropping [24,25].

Based on the above discussion, we propose YOLOv7-UAV, a high-precision real-time detection algorithm for UAV aerial images. The contributions of this paper are as follows:

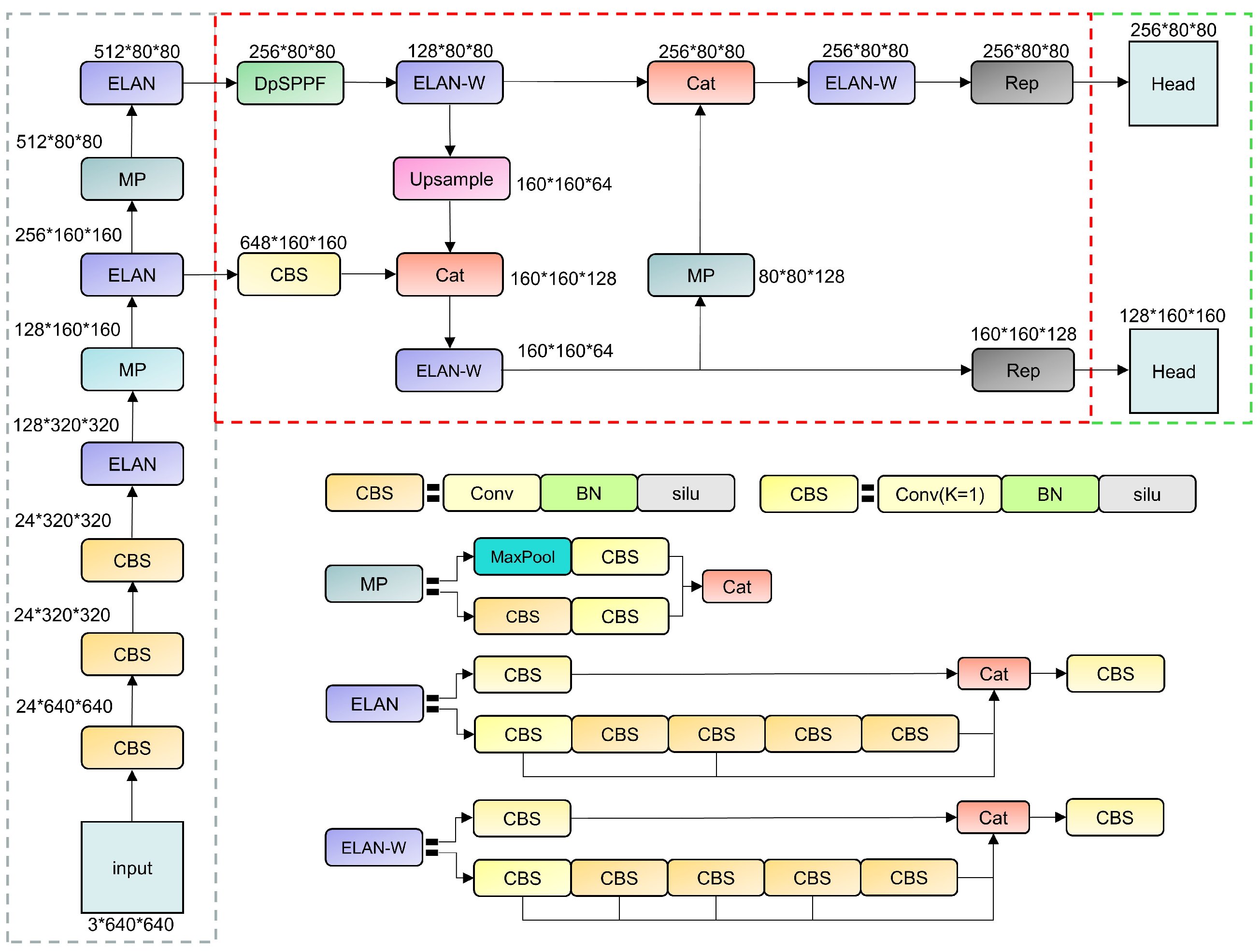

(1) We optimize the overall architecture of the YOLOv7 model by removing the second downsampling layer and eliminating its final neck and detection head. This modification significantly improves how efficiently the detection model uses shallow-level information.

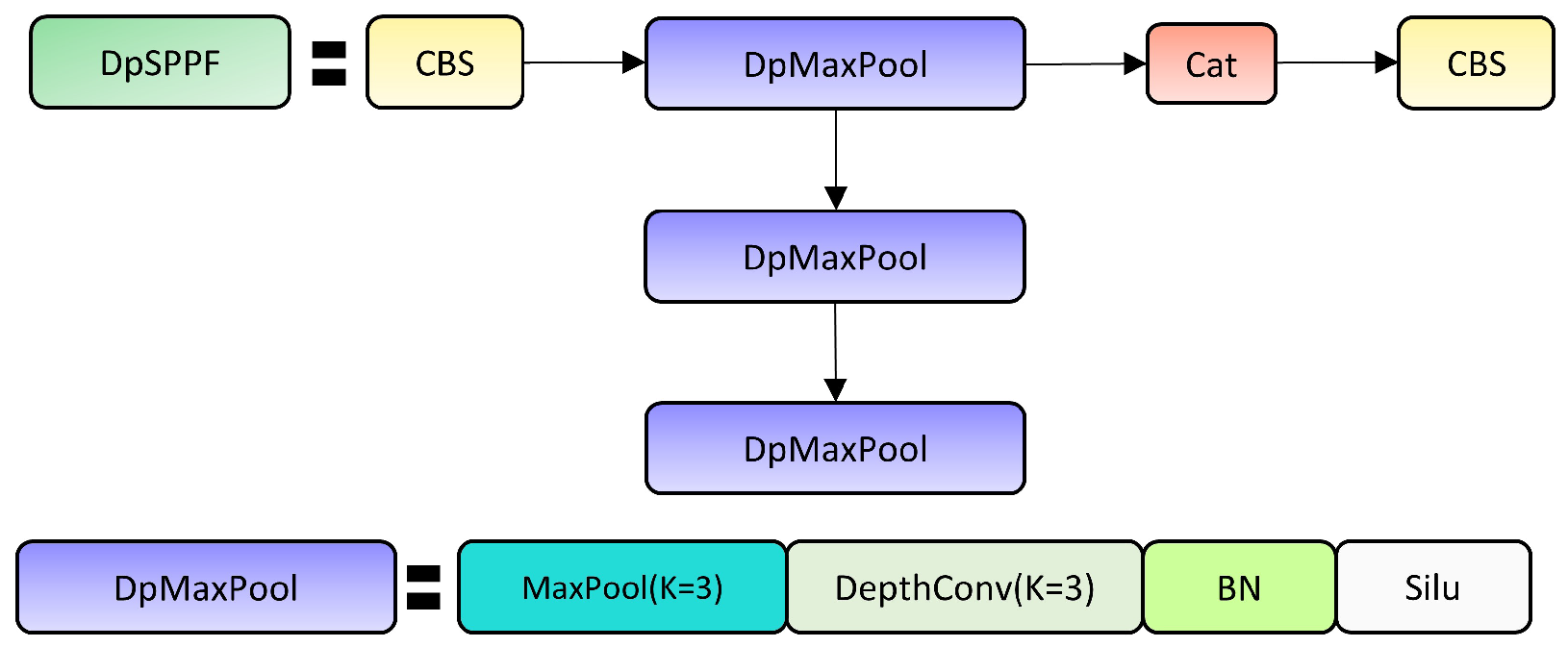

(2) We present the DpSPPF module as an alternative to the SPPF module. It replaces the original max pooling layers with a concatenation of smaller-sized max pooling layers and depth-wise separable convolutions. This design choice enables a more detailed extraction of feature information at different scales.

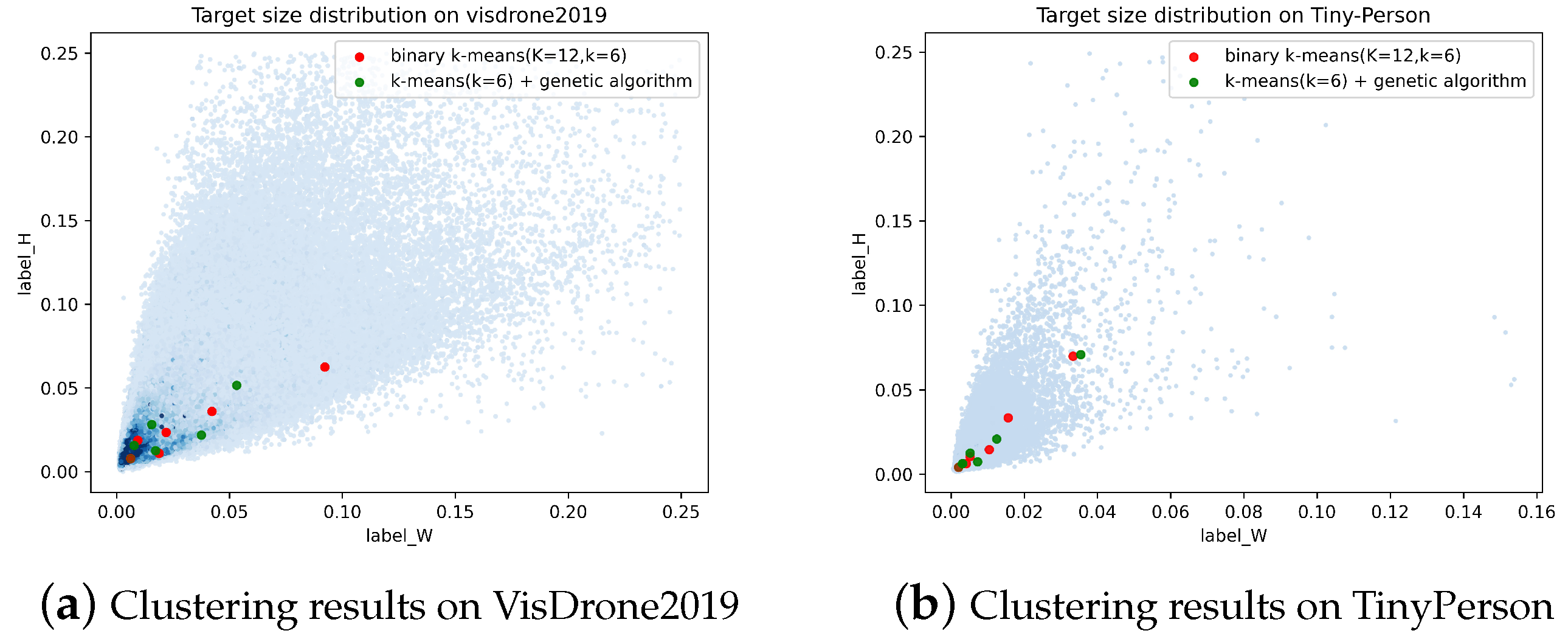

(3) We propose the binary K-means anchor generation algorithm, which avoids the problem of local optimal solutions and increases the focus on sparse-sized targets by reasonably dividing the anchor generation range into intervals and assigning a different number of anchors to each interval.

(4) Extensive experiments were conducted on both the VisDrone dataset and the TinyPerson dataset to validate the superiority of our proposed method over state-of-the-art real-time detection algorithms.
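The interval-based idea in contribution (3) can be illustrated with a minimal sketch. It does not reproduce the binary K-means algorithm itself. It only shows the general pattern of splitting the box-size range into intervals and clustering each interval separately, here using the standard k-means with a 1 - IoU distance commonly applied to anchor generation. The function names, the interval boundaries in `edges`, the per-interval anchor counts in `counts`, and the sample boxes are all hypothetical.

```python
from statistics import mean


def wh_iou(a, b):
    """IoU of two boxes given as (w, h), aligned at a common corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeans_anchors(boxes, k, iters=50):
    """Plain k-means on (w, h) pairs, using 1 - IoU as the distance."""
    boxes = sorted(boxes, key=lambda b: b[0] * b[1])
    # Deterministic init (assumption): k boxes evenly spaced along the area-sorted list.
    centers = [boxes[(2 * i + 1) * len(boxes) // (2 * k)] for i in range(k)]
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for b in boxes:
            # Highest IoU is the same as smallest 1 - IoU distance.
            best = max(range(k), key=lambda i: wh_iou(b, centers[i]))
            clusters[best].append(b)
        new = [
            (mean(w for w, _ in c), mean(h for _, h in c)) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
        if new == centers:  # assignments are stable, so stop early
            break
        centers = new
    return centers


def interval_anchors(boxes, edges, counts):
    """Cluster each size interval separately and merge the resulting anchors.

    edges:  hypothetical interval boundaries on sqrt(w * h), e.g. [16, 48]
    counts: hypothetical number of anchors per interval, len(edges) + 1 entries
    """
    bounds = [0.0] + list(edges) + [float("inf")]
    anchors = []
    for lo, hi, k in zip(bounds, bounds[1:], counts):
        subset = [b for b in boxes if lo <= (b[0] * b[1]) ** 0.5 < hi]
        if subset and k > 0:
            anchors += kmeans_anchors(subset, min(k, len(subset)))
    return sorted(anchors, key=lambda a: a[0] * a[1])


# Made-up (w, h) boxes in pixels, for illustration only.
boxes = [(4, 6), (5, 5), (6, 8), (20, 30), (24, 28), (60, 80), (70, 90), (64, 96)]
print(interval_anchors(boxes, edges=[16, 48], counts=[2, 1, 1]))
```

Because each interval is clustered on its own, a size range that contains few boxes still receives the number of anchors assigned to it, rather than being absorbed by clusters that form around the most common sizes.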

5. Conclusions

Existing detection algorithms struggle to detect the large number of small targets, captured from diverse shooting angles, in unmanned aerial vehicle (UAV) images. This paper proposes YOLOv7-UAV, an algorithm that detects objects in UAV images in real time. First, the algorithm reduces the loss of feature information and improves the model's use of fine-grained feature information by removing the second downsampling layer and the deepest detection head of the YOLOv7 model. Second, it replaces the max pooling layer in the SPPF module with concatenated smaller depth-wise separable convolution and max pooling layers, improving its ability to extract fine-grained feature information while retaining the ability to aggregate multi-scale feature information. Third, the paper proposes a binary K-means anchor generation algorithm that reasonably divides the anchor box generation interval and retains a focus on common sizes to obtain better anchors. Finally, on the TinyPerson dataset, YOLOv7-UAV uses a weighted combination of the normalized Wasserstein distance (NWD) and IoU as the evaluation metric in the label assignment strategy. Results on the VisDrone2019 and TinyPerson datasets demonstrate that YOLOv7-UAV outperforms most popular real-time detection algorithms in detection accuracy, detection speed, and memory consumption.
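To make the weighted NWD and IoU metric more concrete, the following minimal sketch computes both quantities for a pair of axis-aligned boxes and blends them. It assumes the common formulation in which each box is modeled as a 2D Gaussian and NWD is the exponential of the negative Wasserstein distance divided by a constant. The weight `alpha` and the constant `c` are hypothetical placeholders, since the values used by YOLOv7-UAV are not stated in this section.

```python
import math


def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nwd(a, b, c=12.8):
    """Normalized Wasserstein distance between boxes modeled as 2D Gaussians.

    c is a dataset-dependent normalizing constant; 12.8 is a placeholder (assumption).
    """
    acx, acy, aw, ah = (a[0] + a[2]) / 2, (a[1] + a[3]) / 2, a[2] - a[0], a[3] - a[1]
    bcx, bcy, bw, bh = (b[0] + b[2]) / 2, (b[1] + b[3]) / 2, b[2] - b[0], b[3] - b[1]
    # Wasserstein distance between N(center, diag(w^2/4, h^2/4)) Gaussians.
    w2 = math.sqrt(
        (acx - bcx) ** 2 + (acy - bcy) ** 2
        + ((aw - bw) / 2) ** 2 + ((ah - bh) / 2) ** 2
    )
    return math.exp(-w2 / c)


def weighted_similarity(a, b, alpha=0.5, c=12.8):
    """Blend of NWD and IoU; alpha is a hypothetical weight, not the paper's value."""
    return alpha * nwd(a, b, c) + (1 - alpha) * iou(a, b)


# Two 4x4 boxes that touch but do not overlap.
a, b = (0, 0, 4, 4), (4, 0, 8, 4)
print(iou(a, b), nwd(a, b), weighted_similarity(a, b))
```

For the two adjacent 4x4 boxes in the example, IoU is zero while NWD stays positive, which illustrates why a distance-based term can still rank near-miss candidates for tiny targets during label assignment.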

Despite the excellent performance of our proposed method on UAV object detection datasets, some limitations remain. Specifically, the effectiveness of YOLOv7-UAV on low-power platforms, such as embedded devices, requires further testing and optimization. Moreover, the K value in the binary K-means anchor generation algorithm is determined solely from experimental results, without sufficient analysis of the factors that influence K. In real-world UAV tasks, adverse conditions such as fog and darkness may be encountered, for which our method has not been specifically optimized. Additionally, UAVs can be equipped with camera systems that have a larger field of view (FOV), which introduces radial and barrel distortions that significantly impact the detection of small targets, an aspect that our proposed algorithm has not specifically addressed.

In future work, we plan to optimize the performance of YOLOv7-UAV on low-power platforms by employing model compression techniques such as model pruning and distillation. Moreover, we aim to improve the process of generating anchors using the binary K-means algorithm by directly determining an appropriate K value based on the distribution of data within the dataset and the receptive fields of the target detection model. We plan to incorporate advanced generative networks or construct more abundant datasets to enhance the performance of the YOLOv7-UAV algorithm in challenging environments such as those with dense fog or low light conditions. Additionally, we intend to investigate the rectification issues of wide-angle cameras and devise targeted data augmentation methods to enhance the detection performance of object detection algorithms on images captured with a larger FOV.