Abstract

In recent years, human interference in seismic-station environments has degraded the quality and accuracy of seismic signals, complicating data processing. To accurately identify interference caused by personnel and ensure the reliability of the data recorded by seismic-network instruments, the detected individuals must be tracked across consecutive video frames, a task in which deep neural networks have made significant progress. Therefore, an intelligent identification solution for environmental interference at seismic stations is proposed that combines deep learning with multi-object tracking. A centroid-matching tracking algorithm based on Kalman filtering is introduced to identify the entry and exit timestamps and the motion trajectories of interfering individuals, so that the anomalous data they cause in seismic time-series data can be marked. Experimental results demonstrate that this research provides an effective solution for the intelligent identification of environmental interference at seismic stations.

1. Introduction

China is implementing the National Earthquake Intensity Rapid Reporting and Early Warning Project, which has established the world’s largest seismic monitoring network, consisting of more than 10,000 seismic stations. The project aims to detect earthquake signals in the early stages of seismic rupture and deliver rapid predictions of ground-motion intensity to users a few seconds to one minute before the arrival of destructive shear and surface waves, significantly enhancing the country’s capacity for seismic disaster prevention and mitigation. Seismic stations generally consist of various sophisticated instruments and equipment, including vibration sensors, data loggers, communication network routers/switches, and intelligent power supplies. As seismic stations operate continuously over long periods, equipment failures become more frequent and require timely on-site maintenance by personnel; however, this maintenance also introduces interference and disturbances into the observed data [1]. To improve the intelligent monitoring, operation, and maintenance of seismic stations and ensure their safe operation, video surveillance systems have been deployed at seismic stations. These systems provide real-time monitoring, recording, and storage of the environment around instrument rooms, entrances and exits, and indoor and outdoor equipment. This enables centralized video monitoring and operational management of the seismic station network, improving the maintenance of seismic instruments, the verification of abnormal observation data, and the security of instrument rooms [2].

Regarding recent advances in the field, Thomas et al. [3] evaluated the impact of the COVID-19 lockdown on high-frequency (4 to 14 Hz) seismic ambient noise. They collected data from 268 stations in areas that imposed local restrictions during the lockdown period and found a significant reduction in seismic ambient noise at 185 of them. Similarly, Ścisło et al. [4] investigated how the reduction in human activity during the initial COVID-19 lockdown affected ground vibrations in the Large Hadron Collider tunnel of the European Organization for Nuclear Research (CERN). By analyzing ambient seismic noise with probabilistic power spectral density, they demonstrated the long-term impact of human activities on vibrations in the organization’s caverns and tunnels. To mitigate the impact of human activities on observational data, Kislov et al. [5] proposed a preprocessing algorithm that combines the wavelet transform, autoencoders, and other techniques, followed by a deep neural-network architecture that reduces noise levels, eliminates human-induced noise, and reduces data dimensionality. Choudhary et al. [6] introduced a non-intrusive human activity recognition system that uses one-dimensional convolutional neural networks for automatic feature extraction, fusion, and activity recognition in a nine-class classification problem. By combining the vibrations and sounds produced by footsteps in outdoor environments, the system aims to improve on the performance of a single information source, reducing misclassifications and the influence of background noise on model performance. Additionally, the authors of [7] employed seismic sensors for human activity recognition, mapping activities to compact representative descriptors with autoencoders and training an artificial neural-network classifier on the extracted descriptors to achieve high efficiency in complex and noisy environments.
Furthermore, using object detection and tracking to obtain the duration of human interference and annotate abnormal data is also a current research focus, which makes the detection of interfering individuals a crucial problem. The YOLO algorithm [8] is currently one of the most widely used object-detection algorithms. To record the entry and exit times of interfering individuals, target-tracking algorithms are also needed to recover their movement trajectories. Ren et al. [9] proposed a multi-object tracking algorithm based on YOLOv3 and Kalman filtering that associates detection bounding boxes with predicted bounding boxes using intersection over union (IoU) and color histograms. The algorithm iteratively updates and refines each object’s motion trajectory, improving tracking accuracy; however, severe occlusion and large areas of similar background can still cause tracking loss. To address this issue, Gui et al. [10] introduced an improved tracking algorithm based on adaptive Kalman filtering that suppresses interference. It adaptively adjusts the weights of different features, increasing tracking accuracy and stability when the target and background vary. Rohal et al. [11] combined the real-time capability of Kalman filters with the robustness of particle filters and neural networks, proposing a nonlinear motion-estimation method that tracks or estimates object positions in environments with interference and noise. For indoor target tracking, where furniture or building structures may cause occlusion, trackers may produce only short trajectories. To address this, Li et al. [12] proposed a trajectory-matching approach for indoor target tracking.
It uses Hankel matrix completion to estimate missing data and associates trajectories based on the rank of the Hankel matrix. In addition to classical CNN-based methods, target-tracking algorithms based on Siamese network architectures are commonly used. The Siamese framework formulates target tracking as a similarity-learning problem solved by deep neural networks. Bertinet et al. [13] were the first to introduce the Siamese network into object tracking. A Siamese tracker extracts features of the target to be tracked and of candidate search regions in the new frame, convolves the two in feature space, and measures their similarity: positions with higher responses are more similar to the target, and the position with the highest response is taken as the predicted target location. The SiamFC [14] (Fully Convolutional Siamese Networks) framework incorporates semantic features that improve the accuracy of online object tracking. CFNet (Correlation Filter Network) and DCFNet [15] (Deep Correlation Filter Network) build on SiamFC by integrating correlation filtering, demonstrating that lightweight networks can achieve excellent tracking performance. In addition, Gomes et al. [16] proposed a method for counting people and bicycles on an edge AI system based on the Jetson Nano board, using a YOLO network and the V-IOU tracker for real-time counting. Their approach optimizes memory usage and energy consumption while achieving higher frame rates.

To address these problems, this paper proposes a method for the intelligent detection and trajectory tracking of interfering individuals at seismic stations, targeting abnormal interference in seismic time-series data as well as the low detection accuracy and frequent target loss of tracking algorithms. Unlike existing object-detection research, this paper uses detection and tracking primarily to capture the time period of personnel-caused interference. Because human interference produces abnormal values in the collected seismic data, the abnormal data caused by interfering individuals must be labeled to keep them from distorting time-series analysis. The proposed method consists of four steps: detection, prediction, matching, and labeling. First, in the detection stage, the YOLOv5 network is employed for accurate target detection. Next, the centroid-matching algorithm is improved by incorporating Kalman filtering for prediction, enabling real-time tracking of interfering individuals. Then, in the matching stage, the Euclidean distance is used to associate candidate targets by centroid matching, and the entry and exit times of the entire interference process are recorded. Finally, the method is integrated with the corresponding seismic station’s time-series data stream, and the corresponding time points are marked as abnormal data to indicate the presence of interference. By combining detection and tracking, this approach identifies and labels the abnormal data caused by interfering individuals, improving the quality and reliability of seismic data analysis and contributing to the effectiveness and security of seismic station operations.

2. Object Recognition and Tracking Algorithm

2.1. Core Algorithm of Object Recognition and Tracking

Object detection is a crucial task in the field of computer vision, finding widespread applications in various domains such as security, intelligent transportation, and healthcare [17,18]. However, traditional object-detection algorithms face numerous challenges with increasing data volume and model complexity, including slow processing speed, low accuracy, and limited adaptability. In contrast, deep learning-based object detection algorithms offer higher accuracy and faster inference times. Among them, YOLOv5 [19] is an emerging object-detection algorithm that provides advantages such as high accuracy, fast detection, and user-friendliness, significantly improving detection speed while maintaining accuracy. For researchers focusing on object tracking, the motion and deformation of objects present challenges for methods relying solely on low-level features like color and texture, making it difficult to achieve ideal tracking results. Consequently, advanced object-tracking algorithms have been proposed, and one such algorithm is centroid matching [20,21]. The centroid-matching algorithm performs matching and tracking based on the centroids of objects. It evenly distributes pixel weights within the object region, reducing the impact of motion, occlusion, and other factors on object features. Moreover, the centroid-matching algorithm can effectively handle object deformation and rotation because the position and motion of the centroid possess certain invariances, ensuring algorithm stability and accuracy. Furthermore, the centroid-matching algorithm efficiently handles a large number of targets with low computational complexity and fast operation speed, making it suitable for real-time object-tracking scenarios. In practical applications, the centroid-matching algorithm finds extensive use in object tracking, pedestrian counting, traffic-flow monitoring, and other scenarios, delivering high accuracy and reliability.

2.1.1. YOLOv5

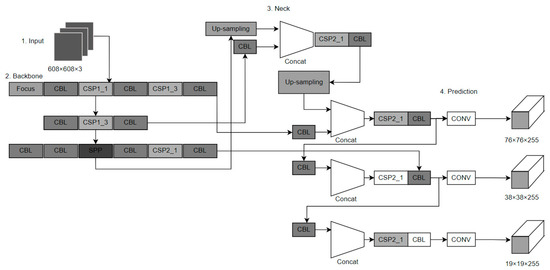

YOLOv5 offers a variety of model sizes and training datasets, allowing users to select the most suitable model for training and applications based on their specific needs. The family includes models of increasing size and complexity: YOLOv5-n (nano), YOLOv5-s, YOLOv5-m, YOLOv5-l, and YOLOv5-x. These models offer different trade-offs between speed and accuracy to suit various computational capabilities and real-time requirements. YOLOv5-s has fewer convolutional layers, faster detection speed, and relatively lower detection accuracy than the larger models, and it has shown good performance in various domains. From YOLOv5-m to YOLOv5-x, network complexity increases, detection speed gradually decreases, and detection accuracy gradually improves. The network architecture is illustrated in Figure 1 and consists of four parts: Input, Backbone, Neck, and Prediction.

Figure 1. YOLOv5 Architecture Diagram.

The Input stage of YOLOv5 performs Mosaic data augmentation, adaptive anchor-box calculation, and adaptive image scaling. The Backbone is the main feature-extraction network; it incorporates Cross-Stage Partial (CSP) structures to reduce computational complexity and a Spatial Pyramid Pooling-Fast (SPPF) module to enhance detection accuracy. The Neck is an intermediate feature-fusion network employing the FPN + PAN structure. Its primary role is to fuse the features extracted by the Backbone and reduce their dimensionality, strengthening both semantic and positional information. The Prediction stage is the output of YOLOv5: it takes the feature maps produced by the Neck and transforms them into the final object-detection predictions.

2.1.2. Centroid Matching

Besides object detection, object tracking is an important computer-vision task that enables the real-time tracking and localization of multiple objects in videos. The centroid-matching algorithm is a simple yet effective tracking method that uses the centroid of each target region for matching. It requires no complex feature extraction or feature matching; instead, it associates each currently detected target with a previously predicted position using the Euclidean distance. The centroid trajectories of the detected targets are obtained through this matching and association process. The procedure is as follows:

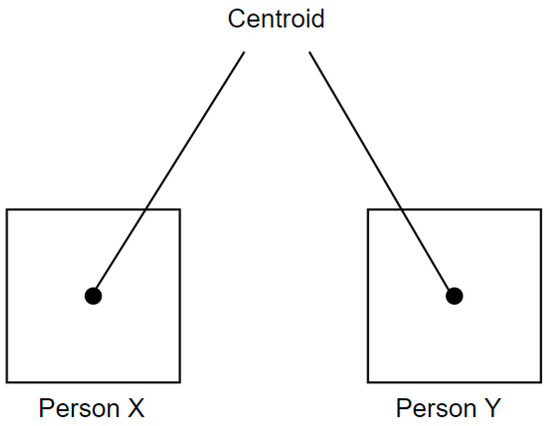

- As shown in Figure 2, the first step is to obtain the detected bounding boxes and calculate the centroids. For a given frame, the bounding box is identified, and its centroid is calculated, resulting in the centroid coordinates (x, y).

Figure 2. Centroid Extraction.

Figure 2. Centroid Extraction.

- 2.

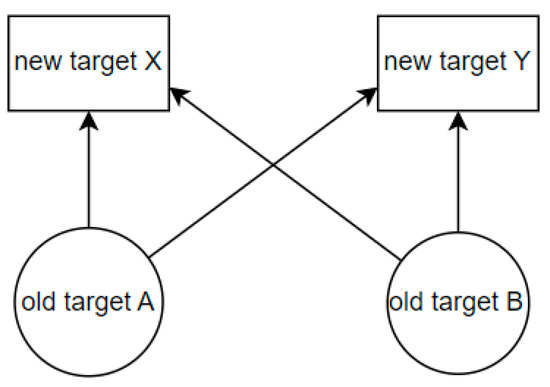

- As shown in Figure 3, the next step is to calculate the Euclidean distance between the centroids of the new and old bounding boxes. In this paper, the detection algorithm is used to obtain the bounding boxes, but individual IDs or labels are not assigned to each detected object. Instead, this method allows new and old targets to be linked.

Figure 3. Target Matching.

Figure 3. Target Matching.

- 3.

- Finally, update the centroid coordinates of existing targets. The premise of the centroid-tracking algorithm is that, for a given target, it will appear in consecutive frames, and the Euclidean distance between the centroids in frame N and frame N + 1 should be smaller than the Euclidean distance between different targets. Therefore, by linking the centroids of bounding boxes in consecutive frames based on the principle of minimum Euclidean distance, we can establish a target X to represent the temporal continuity across these frames and achieve the goal of object tracking. Additionally, when a new target is added, assign it a target ID, store the centroid coordinates of this new target, and calculate the Euclidean distance of the centroid for each frame in the video stream, continuously updating the centroid coordinates. For targets that have disappeared, their IDs are invalidated.
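The three steps above can be sketched in Python as follows. This is a minimal illustration, not the authors' code: it assumes boxes arrive as (x1, y1, x2, y2) pixel tuples, and the names `CentroidTracker`, `max_missing`, and `max_dist` are hypothetical. The minimum-distance rule is applied greedily (closest pairs are linked first); the Hungarian algorithm is a common alternative for the same assignment.

```python
import math

def centroid(box):
    """Step 1: centroid (x, y) of an axis-aligned box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)

def match_centroids(old, new, max_dist=float("inf")):
    """Step 2: link old centroids {id: (x, y)} to new [(x, y)] by minimum distance.

    Returns (matches {id: index into new}, unmatched ids, unmatched new indices).
    """
    # All pairwise Euclidean distances, closest pairs first.
    pairs = sorted((math.dist(c_old, c_new), tid, j)
                   for tid, c_old in old.items()
                   for j, c_new in enumerate(new))
    matches, used_ids, used_new = {}, set(), set()
    for d, tid, j in pairs:
        if d > max_dist:
            break  # every remaining pair is even farther apart
        if tid in used_ids or j in used_new:
            continue
        matches[tid] = j
        used_ids.add(tid)
        used_new.add(j)
    unmatched_ids = [tid for tid in old if tid not in used_ids]
    unmatched_new = [j for j in range(len(new)) if j not in used_new]
    return matches, unmatched_ids, unmatched_new

class CentroidTracker:
    """Hypothetical minimal tracker: match, update, register, retire."""

    def __init__(self, max_missing=5, max_dist=100.0):
        self.next_id = 0
        self.centroids = {}  # target id -> last known centroid
        self.missing = {}    # target id -> consecutive unmatched frames
        self.max_missing = max_missing
        self.max_dist = max_dist

    def update(self, boxes):
        new = [centroid(b) for b in boxes]
        matches, lost, fresh = match_centroids(self.centroids, new, self.max_dist)
        for tid, j in matches.items():   # Step 3: update matched targets
            self.centroids[tid] = new[j]
            self.missing[tid] = 0
        for tid in lost:                 # invalidate IDs unseen for too long
            self.missing[tid] += 1
            if self.missing[tid] > self.max_missing:
                del self.centroids[tid], self.missing[tid]
        for j in fresh:                  # unmatched detections get new IDs
            self.centroids[self.next_id] = new[j]
            self.missing[self.next_id] = 0
            self.next_id += 1
        return dict(self.centroids)
```

The `max_dist` gate keeps a far-away detection from being linked to an existing target; such a detection is registered as a new target instead.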

Although the centroid-matching algorithm performs well in certain specific scenarios, its effectiveness in complex backgrounds can be limited [1]. Therefore, in target-tracking scenarios that require higher accuracy, it is often necessary to combine more sophisticated algorithms to achieve precise object tracking.

2.2. Algorithm Development

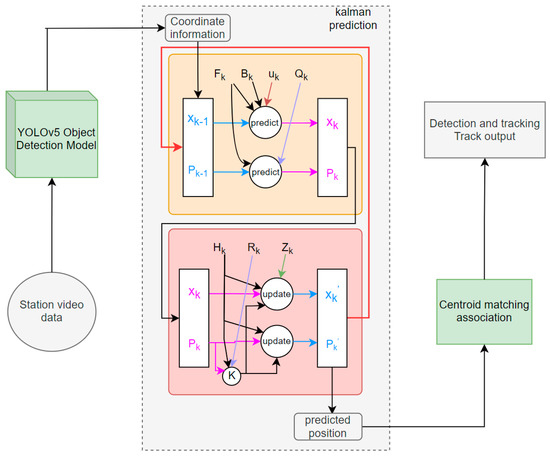

Because the YOLOv5 detector outputs detections continuously, frame by frame, whereas we need only the entry and exit timestamps of the objects and their motion trajectories, we improve the centroid-matching tracking algorithm on top of the YOLOv5 detector. Kalman filtering is used to predict, from the detection results of the previous frame, where each target will be in the current frame, and the centroid-tracking algorithm is used for object matching. This yields the motion trajectories and entry and exit timestamps of interfering objects within the seismic station area and enables target tracking in complex backgrounds. The workflow is illustrated in Figure 4.

Figure 4. Algorithm flow chart.

As shown in Figure 4, this algorithm extends YOLOv5 and includes the following steps:

(1) Station video data: the real-time video data from the seismic stations, which are processed as RTSP streams.

(2) YOLOv5 object detection: the incoming real-time RTSP video stream is passed through the corresponding model for inference, producing an output that includes coordinate information and detection results.

(3) Kalman prediction: this module consists of two parts, prediction and update. It uses the Kalman filter to predict the likely position of the target in the next frame, i.e., the target's coordinates in the next frame.

(4) Centroid-matching association: the actual detection coordinates in the next frame are matched with the coordinates predicted from the previous frame, establishing the associations used to track the targets.

In the Kalman state-prediction part, the next position of each interfering individual is predicted with Kalman filtering. Because the filter weights its model-based prediction against each new measurement according to their respective uncertainties, it can produce reliable estimates even when the detections are noisy. It involves two steps, prediction and update, as follows:

(1) In the prediction step, known information and a prediction model are used to estimate the target’s state at the next time step. Specifically, the predicted state at the next time step is calculated from the current state, the state-transition matrix, and the control input, and the covariance matrix of the prediction error is estimated as well. The calculations are shown in Formulas (1) and (2):

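Predicted state:

$$\hat{x}_k^- = F\hat{x}_{k-1} + Bu_k \qquad (1)$$

Prediction error covariance:

$$P_k^- = FP_{k-1}F^{T} + Q \qquad (2)$$

Here, $\hat{x}_k^-$ is the predicted state value at time k, $F$ is the state-transition matrix, $u_k$ is the control input, $B$ is the control matrix, $P$ is the covariance matrix of the state-estimation error ($P_k^-$ denoting its predicted value), and $Q$ is the covariance matrix of the system noise.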

(2) In the update step, the predicted state and the predicted error covariance from the prediction step are combined with the measurement model and the current measurement to calculate the optimal estimate of the target’s state at this time step and the covariance matrix of its estimation error. The calculations are shown in Formulas (3) and (4):

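Estimated state:

$$\hat{x}_k = \hat{x}_k^- + K_k\left(z_k - H\hat{x}_k^-\right) \qquad (3)$$

Estimated error covariance:

$$P_k = \left(I - K_kH\right)P_k^- \qquad (4)$$

Here, $\hat{x}_k^-$ is the predicted state value at time k and $\hat{x}_k$ is the updated (optimal) estimate, $z_k$ is the measurement value at time k, $H$ is the measurement model, $K_k$ is the Kalman gain, $P_k$ is the covariance matrix of the state-estimation error, and $I$ is the identity matrix.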

Through continuous prediction and update, the Kalman filter can make the target state estimation more accurate, thereby achieving the purpose of target tracking.
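A minimal NumPy sketch of Formulas (1) to (4) for a single centroid may make the two steps concrete. It assumes a constant-velocity state [x, y, vx, vy] with one frame as the time step and no control input (B u_k = 0); the class name, the noise levels `q` and `r`, and the initial covariance are illustrative assumptions, not the authors' settings. The Kalman gain uses the standard expression $K_k = P_k^- H^T (H P_k^- H^T + R)^{-1}$, where R is the measurement-noise covariance, which the text does not specify.

```python
import numpy as np

class CentroidKalman:
    """Constant-velocity Kalman filter for one centroid; state = [x, y, vx, vy]."""

    def __init__(self, x, y, dt=1.0, q=0.01, r=1.0):
        self.x = np.array([x, y, 0.0, 0.0])           # state estimate, velocity starts at 0
        self.P = np.eye(4) * 10.0                      # state-estimation error covariance
        self.F = np.array([[1.0, 0.0, dt, 0.0],        # state-transition matrix
                           [0.0, 1.0, 0.0, dt],
                           [0.0, 0.0, 1.0, 0.0],
                           [0.0, 0.0, 0.0, 1.0]])
        self.H = np.array([[1.0, 0.0, 0.0, 0.0],       # measurement model: observe (x, y)
                           [0.0, 1.0, 0.0, 0.0]])
        self.Q = np.eye(4) * q                         # system-noise covariance (assumed)
        self.R = np.eye(2) * r                         # measurement-noise covariance (assumed)

    def predict(self):
        """Formulas (1) and (2), with no control input."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()                       # predicted centroid

    def update(self, zx, zy):
        """Kalman gain, then Formulas (3) and (4), for a detected centroid (zx, zy)."""
        z = np.array([zx, zy])
        S = self.H @ self.P @ self.H.T + self.R         # innovation covariance
        K = self.P @ self.H.T @ np.linalg.inv(S)        # Kalman gain K_k
        self.x = self.x + K @ (z - self.H @ self.x)     # Formula (3)
        self.P = (np.eye(4) - K @ self.H) @ self.P      # Formula (4)
        return self.x[:2].copy()                        # corrected centroid
```

In a tracking loop, `predict()` supplies the position that is compared with new detections by Euclidean distance, and `update()` is called with the matched detection's centroid.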

3. Construction of an Intelligent Identification Model for Environmental Interference at Seismic Stations

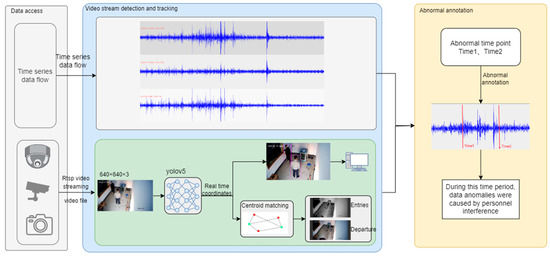

As shown in Figure 5, the workflow of this study primarily involves the integration of video streams and data streams, video stream detection and tracking, and annotation of anomalous data. The following are detailed descriptions of these steps:

Figure 5. Architecture diagram of intelligent identification of environmental interference.

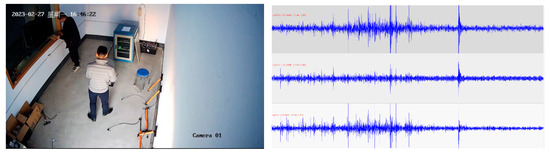

3.1. Data Access

As shown in Figure 6, the video-stream data from each seismic station and the station’s corresponding time-series data are accessed separately. The video data are used to detect the time points of pedestrian interference, and the detected abnormal points are then marked in the station’s time-series data. The seismic-station data consist of three rows, one for each of three component directions, and pedestrian interference mainly affects the vertical component. Therefore, this paper focuses on processing and marking the data in the third row.

Figure 6. Data access (left: video-stream access; right: data-stream access; “星期一” in the image means “Monday”).

3.2. Video-Stream Detection and Tracking

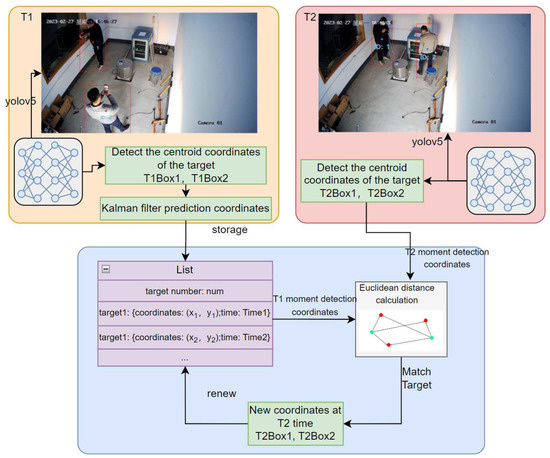

This paper proposes a real-time tracking algorithm based on Kalman filtering and centroid matching, built on the YOLOv5 detection framework, to track identified targets. First, each individual’s position in the next frame is predicted by Kalman filtering from that individual’s previous motion information. Next, once the targets in the next frame are detected, their new positions are obtained. Finally, centroid matching establishes associations between consecutive frames, enabling efficient tracking of interfering targets and fulfilling the fundamental requirements of this study.

As shown in Figure 7, at time T1, the bounding boxes are received and their centroids are calculated: for each bounding box in the frame, the centroid coordinates (x, y) are obtained, and the current number of detected targets is recorded. The Kalman filter then predicts the targets’ positions, and the predicted information is stored in a global list. For a subsequent frame of the video stream, such as T2, the centroid coordinates at T2 are obtained in the same way and the information stored in the list at T1 is retrieved. New and old targets are linked by calculating the Euclidean distance between the coordinates predicted at T1 and the coordinates detected at T2. Finally, the centroid coordinates of the matched targets in the tracking list are updated.

Figure 7. Target recognition and tracking (“星期一” in the image means “Monday”).

Additionally, when a new target appears, i.e., a detection that cannot be matched to any stored target, a new ID is assigned to it, and its centroid coordinates are stored in the list together with the current timestamp. For each subsequent frame of the video stream, the Euclidean distances between centroids are calculated and the coordinates are continuously updated. If, from the Nth frame onward, the number of detected targets remains smaller than the number of targets stored in the list for n consecutive frames, the stored target with the maximum Euclidean distance is identified and removed from the list, and it is considered to have left the scene.
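The track-list rules of this subsection (new IDs with entry timestamps, and removal after n consecutive frames with too few detections) can be sketched as below. This is a hypothetical illustration under stated assumptions: the class and parameter names are invented, unmatched tracks coast on their prediction, and a simple "last position plus last displacement" extrapolation stands in for the Kalman prediction described in Section 2.2 (a Kalman filter could be substituted). We read the maximum-distance rule as applying to tracks left unmatched in the current frame.

```python
import math

class InterferenceTracker:
    """Hypothetical sketch of the track list with entry times and the exit rule."""

    def __init__(self, n_frames=3):
        self.n_frames = n_frames  # n: consecutive "too few detections" frames tolerated
        self.tracks = {}          # id -> {"pos": (x, y), "vel": (vx, vy), "entry": t}
        self.next_id = 0
        self.short_frames = 0     # consecutive frames with fewer detections than tracks
        self.events = []          # finished tracks as (id, entry_time, exit_time)

    @staticmethod
    def _predict(tr):
        # Stand-in for the Kalman prediction: last position plus last displacement.
        (x, y), (vx, vy) = tr["pos"], tr["vel"]
        return (x + vx, y + vy)

    def step(self, t, centroids):
        """Process the detected centroids of one frame taken at time t."""
        preds = {tid: self._predict(tr) for tid, tr in self.tracks.items()}
        pairs = sorted((math.dist(p, c), tid, j)
                       for tid, p in preds.items() for j, c in enumerate(centroids))
        nearest = {tid: math.inf for tid in preds}  # distance to the closest detection
        used_ids, used_dets = set(), set()
        for d, tid, j in pairs:                      # greedy minimum-distance matching
            nearest[tid] = min(nearest[tid], d)
            if tid in used_ids or j in used_dets:
                continue
            (ox, oy), (nx, ny) = self.tracks[tid]["pos"], centroids[j]
            self.tracks[tid]["vel"] = (nx - ox, ny - oy)
            self.tracks[tid]["pos"] = centroids[j]
            used_ids.add(tid)
            used_dets.add(j)
        unmatched = [tid for tid in preds if tid not in used_ids]
        for tid in unmatched:                        # coast on the prediction
            self.tracks[tid]["pos"] = preds[tid]
        if len(centroids) < len(preds):
            self.short_frames += 1
            if self.short_frames >= self.n_frames:
                # Remove the unmatched track farthest from every detection.
                gone = max(unmatched, key=lambda tid: nearest[tid])
                self.events.append((gone, self.tracks.pop(gone)["entry"], t))
        else:
            self.short_frames = 0
        for j, c in enumerate(centroids):            # unmatched detections are new targets
            if j not in used_dets:
                self.tracks[self.next_id] = {"pos": c, "vel": (0.0, 0.0), "entry": t}
                self.next_id += 1
        return {tid: tr["pos"] for tid, tr in self.tracks.items()}
```

Each entry in `events` holds the entry and exit timestamps that are later used to mark the corresponding seismic time-series segment.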

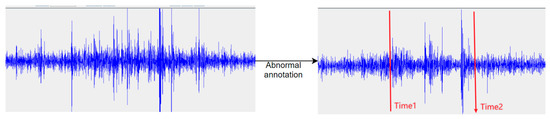

3.3. Abnormal Annotation

As depicted in Figure 8, the video-stream analysis yields the time points at which interference is detected. The corresponding segment of the time-series data stream can then be annotated as abnormal, labeling the interference present in the seismic data collected by the current seismic station. The completed annotations make the interference within the seismic data explicit.

Figure 8. Data Annotations.
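Annotating a time series from detected interference times amounts to converting each (start, end) time into sample indices. The sketch below assumes that video timestamps and the seismic data share one clock in seconds and that the sampling rate is known; `mark_interference` is a hypothetical helper, and it returns a per-sample mask for one channel (for example, the third, vertical-component row).

```python
import math

def mark_interference(n_samples, t0, fs, intervals):
    """Per-sample anomaly mask for one channel.

    n_samples: number of samples; t0: time (s) of sample 0; fs: sampling rate (Hz);
    intervals: list of (start_s, end_s) interference periods from the video analysis.
    """
    mask = [False] * n_samples
    for start, end in intervals:
        i0 = max(0, math.floor((start - t0) * fs))          # first affected sample
        i1 = min(n_samples, math.ceil((end - t0) * fs) + 1)  # one past the last one
        for i in range(i0, i1):
            mask[i] = True
    return mask
```

Intervals that extend past either end of the series are clipped, so events straddling a data-file boundary can be marked file by file.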

In addition, when personnel remain stationary for a long period, for example during maintenance, the interference segment captured by the seismic station may contain a long stretch of normal data. In this case, the data must be annotated using the tracked movement trajectory. We annotate from the time point at which the interference is first detected until the time point at which the interfering personnel have remained motionless for a continuous period. Likewise, we annotate from the time point at which a change in the movement trajectory of the interfering personnel is detected until the time point at which they are detected leaving. This isolates the long stretch of normal data within the captured segment.
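One way to derive these sub-intervals from a tracked trajectory is to find long motionless stretches and keep only the gaps between them. In this sketch, the track is assumed to be a time-ordered list of (t, x, y) centroid samples; the function name and the thresholds `still_px` (pixel radius treated as "not moving") and `min_still_s` (minimum duration of a motionless period) are hypothetical choices, not values from the study.

```python
import math

def active_intervals(track, still_px=5.0, min_still_s=60.0):
    """Split one interference period into sub-intervals in which the person moves.

    track: time-ordered list of (t_seconds, x, y) centroid samples. A stretch in
    which the centroid stays within `still_px` of the stretch's first sample for
    at least `min_still_s` seconds is treated as motionless and excluded.
    """
    if not track:
        return []
    still = []  # (start, end) of long motionless stretches
    a = 0
    while a < len(track):
        b = a
        # Extend the stretch while the centroid stays near its starting point.
        while (b + 1 < len(track)
               and math.dist(track[b + 1][1:], track[a][1:]) <= still_px):
            b += 1
        if track[b][0] - track[a][0] >= min_still_s:
            still.append((track[a][0], track[b][0]))
        a = b + 1
    # Active intervals are the gaps around the motionless stretches.
    intervals, cur = [], track[0][0]
    for s, e in still:
        if s > cur:
            intervals.append((cur, s))
        cur = e
    if track[-1][0] > cur:
        intervals.append((cur, track[-1][0]))
    return intervals
```

For a person who walks in, stands still for several minutes, and walks out, this returns two intervals (arrival and departure), and only those are marked as abnormal.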

4. Experimental Process

4.1. Preparation of Detection Dataset

The quality and reliability of the training dataset directly determine the quality of the trained model and are crucial to personnel-detection performance. This paper uses a pedestrian-detection dataset that combines pedestrian images from seismic stations in various regions with publicly available datasets, covering different weather conditions, lighting conditions, and levels of image clarity. The images are divided into training, testing, and validation sets in an 8:1:1 ratio. Annotation is performed manually with a labeling tool, and the annotations are saved in the YOLO format, as shown in Table 1.

Table 1. Dataset construction.
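The 8:1:1 split and the YOLO label format can be illustrated with a short sketch. In the YOLO format, each object is one text line holding the class index followed by the box center x, center y, width, and height, all normalized by the image width and height. The helper names, the fixed shuffle seed, and class index 0 for "person" are assumptions for illustration.

```python
import random

def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle items and split them into train/test/val lists in the given ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed makes the split reproducible
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_test = len(items) * ratios[1] // total
    return (items[:n_train],
            items[n_train:n_train + n_test],
            items[n_train + n_test:])

def to_yolo_line(cls, box, img_w, img_h):
    """Pixel box (x1, y1, x2, y2) -> YOLO label line 'cls xc yc w h' (normalized)."""
    x1, y1, x2, y2 = box
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{cls} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```

Any remainder from integer division falls into the last (validation) split, so no image is dropped.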

4.2. Experimental Environment and Parameter Settings

The operating system used in this experiment is Windows 11, and the GPU is an NVIDIA GeForce RTX 3050. The programming language is Python 3.9, the deep learning framework is PyTorch 1.7.0, and the CUDA version is 11.6.

4.2.1. Training Settings

The model is trained with stochastic gradient descent (SGD) for 300 epochs, with an input-image size of 640 × 640 and a batch size of 4. The initial learning rate is 0.01, the final learning-rate factor of the one-cycle schedule is 0.1, and the weight decay coefficient is 0.0005. Training begins with a 3-epoch warm-up, during which the SGD momentum is set to 0.8 and the learning rate is adjusted by one-dimensional linear interpolation; afterwards, the learning rate is updated by cosine annealing. During testing, the non-maximum suppression (NMS) threshold for bounding-box selection was set to 0.6, the confidence threshold to 0.001, and the intersection over union (IoU) threshold to 0.5.
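The learning-rate schedule described above can be sketched as a function of the (fractional) epoch. This is a simplified, per-epoch approximation in the style of YOLOv5's one-cycle cosine schedule, not its exact implementation (YOLOv5 warms up per iteration and treats bias parameters separately). The parameter names mirror YOLOv5's `lr0`, `lrf`, `warmup_epochs`, `warmup_momentum`, and `momentum` hyperparameters; the post-warm-up momentum of 0.937 is YOLOv5's default and an assumption here, since the text does not state it.

```python
import math

def lr_at(epoch, lr0=0.01, lrf=0.1, epochs=300, warmup_epochs=3,
          warmup_momentum=0.8, momentum=0.937):
    """Return (learning_rate, sgd_momentum) at a fractional epoch.

    Cosine annealing goes from lr0 at epoch 0 to lr0 * lrf at `epochs`. During
    the first `warmup_epochs`, the rate is linearly interpolated up from 0 and
    the momentum up from `warmup_momentum`.
    """
    cosine = ((1 - math.cos(epoch * math.pi / epochs)) / 2) * (lrf - 1) + 1
    lr = lr0 * cosine
    if epoch < warmup_epochs:
        frac = epoch / warmup_epochs  # 0 at the start of warm-up, 1 at its end
        return lr * frac, warmup_momentum + frac * (momentum - warmup_momentum)
    return lr, momentum
```

With these settings the rate peaks near 0.01 after warm-up, is about 0.0055 at epoch 150, and ends at 0.001.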

4.2.2. Detection Settings

In this experiment, surveillance video from multiple seismic stations, including the Linyi Lanshan Seismic Station, was used. The detection process accesses the RTSP video stream and performs object detection. When a target is first detected, the current time is taken as the start time, and the centroid of the detected target is then updated continuously. When the target leaves the detection range, the current timestamp is recorded. If no target is detected within the following 2 min, one detection task is considered complete and the recorded timestamps are reported.
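The start/stop logic of a detection task can be sketched as a small state machine. It assumes each processed frame provides a timestamp in seconds and the number of detected targets; `DetectionSession` and `quiet_s` are hypothetical names, with `quiet_s` defaulting to the 2 min quiet period used in the experiment.

```python
class DetectionSession:
    """Group per-frame detections into interference events (hypothetical helper).

    An event starts at the first frame with a detection and ends at the last
    frame with a detection; it is reported once no target has been seen for
    `quiet_s` seconds.
    """

    def __init__(self, quiet_s=120.0):
        self.quiet_s = quiet_s
        self.start = None      # time the current event began
        self.last_seen = None  # last time any target was detected

    def update(self, t, n_targets):
        """Feed one frame; return (start, end) when an event closes, else None."""
        if n_targets > 0:
            if self.start is None:
                self.start = t
            self.last_seen = t
            return None
        if self.start is not None and t - self.last_seen >= self.quiet_s:
            event = (self.start, self.last_seen)
            self.start = self.last_seen = None
            return event
        return None
```

Reporting only after the quiet period prevents a person who briefly leaves the camera view from being split into two separate events.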

4.3. Analysis of Experimental Results

In this study, accuracy, precision, and recall were adopted as evaluation metrics for the system. Accuracy measures the proportion of correctly classified samples to the total number of samples. Precision measures the accuracy of the model in predicting positive samples, i.e., the proportion of true positives among all positive predictions. Recall measures the proportion of correctly predicted positive samples to the actual number of positive samples. The calculation formulas for accuracy, precision, and recall are shown as Equations (5), (6) and (7), respectively:

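Accuracy:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (5)$$

Precision:

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (6)$$

Recall:

$$\text{Recall} = \frac{TP}{TP + FN} \qquad (7)$$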

Here, TP (true positives) is the number of positive samples that the model correctly detects as belonging to the target class. FP (false positives) is the number of negative samples that the model incorrectly classifies as the target class, i.e., false detections. TN (true negatives) is the number of negative samples that the model correctly classifies as the non-target class. FN (false negatives) is the number of positive samples that the model incorrectly predicts as negative, i.e., missed detections.
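Equations (5) to (7) translate directly into code. The sketch below is a plain helper (its name is hypothetical); it returns 0.0 instead of dividing by zero when a denominator is empty. The counts in the usage note are illustrative, not the study's data.

```python
def detection_metrics(tp, fp, tn, fn):
    """Accuracy, precision, and recall from confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall
```

For example, 80 TP, 20 FP, 90 TN, and 10 FN give an accuracy of 0.85, a precision of 0.8, and a recall of about 0.889.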

As shown in Table 2, this study tested the YOLOv5 object-detection algorithm in the seismic-station scenario. The model achieved an accuracy of 0.932, indicating a good overall ability to detect interfering personnel; that is, the model correctly identifies pedestrian targets in most cases. The precision of the model is 0.917, indicating that its predictions of interfering personnel are highly reliable, which minimizes false positives and their negative impact on system performance and user experience. However, the recall is 0.832, lower than the accuracy and precision, which suggests that the model may still miss some instances of interfering personnel.

Table 2. Model detection results.

This study also used several metrics to evaluate the target-tracking algorithm: Multiple Object-Tracking Accuracy (MOTA), Multiple Object-Tracking Precision (MOTP), the ID F1 score (IDF1), the number of pedestrian ID switches, the percentage of tracked targets, and the percentage of lost targets. MOTA is the primary evaluation criterion because it accounts for the effects of missed detections, false detections, and tracking errors on the overall performance of a multiple-object tracker. MOTA is calculated as follows (Equation (8)):

$$\text{MOTA} = 1 - \frac{\sum_i \left(FN_i + FP_i + IDSW_i\right)}{\sum_i GT_i} \qquad (8)$$

Here, IDSW is the number of ID switches, i.e., how many times the same target changes its ID during tracking; GT is the number of ground-truth objects; and the subscript i indexes the frames processed since pedestrian detection started. MOTP reflects how accurately the tracker estimates target positions and is calculated as follows (Equation (9)):

$$\text{MOTP} = \frac{\sum_{i,j} d_{i,j}}{\sum_i C_i} \qquad (9)$$

where $d_{i,j}$ is the distance error of the j-th correct target match in frame i and $C_i$ is the number of correct target matches in frame i, so that the denominator is the total number of correct matches. IDF1 compares how consistently different trackers identify targets and is calculated as follows (Equation (10)):

$$\text{IDF1} = \frac{2\,ID_{TP}}{2\,ID_{TP} + ID_{FP} + ID_{FN}} \qquad (10)$$

Here, $ID_{TP}$ is the number of times the tracker’s target identifier matches the ground-truth (GT) identity during tracking, and $ID_{FP}$ and $ID_{FN}$ are the numbers of identity false positives and identity false negatives, respectively.
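Equations (8) to (10) can be computed from per-frame counts as sketched below. The input layout (one dict per frame with `fn`, `fp`, `idsw`, `gt`, and the list of distance errors `dists` of its correct matches) is a hypothetical choice for illustration; toolkits such as py-motmetrics gather these counts from detection-to-ground-truth matching. Note that some benchmarks report MOTP as a bounding-box overlap (higher is better), whereas the distance form of Equation (9) is lower-is-better.

```python
def mot_metrics(frames, id_tp, id_fp, id_fn):
    """MOTA, MOTP, and IDF1 from per-frame counts.

    frames: list of dicts with integer keys "fn", "fp", "idsw", "gt" and a list
    "dists" holding the distance error of each correct match in that frame.
    """
    errors = sum(f["fn"] + f["fp"] + f["idsw"] for f in frames)
    gt = sum(f["gt"] for f in frames)
    matches = sum(len(f["dists"]) for f in frames)
    mota = 1.0 - errors / gt if gt else 0.0
    motp = sum(sum(f["dists"]) for f in frames) / matches if matches else 0.0
    denom = 2 * id_tp + id_fp + id_fn
    idf1 = 2 * id_tp / denom if denom else 0.0
    return mota, motp, idf1
```

For two frames with three ground-truth pedestrians each, three errors in total (one miss, one false detection, one ID switch), and five matches with a summed distance error of 15 px, MOTA is 0.5 and MOTP is 3 px.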

As shown in Table 3, this study compares the Kalman-filter-based centroid-matching algorithm with other tracking algorithms in the seismic-station scenario. The proposed algorithm achieves the best MOTA, MOTP, and IDF1, indicating higher accuracy in pedestrian detection, position estimation, and identity tracking. It also tracks more pedestrian targets and loses fewer of them. Its processing time per frame is slightly longer, but it still runs at more than 20 frames per second.

Table 3.

Model-Tracking Comparison.

4.4. Display of Experimental Results

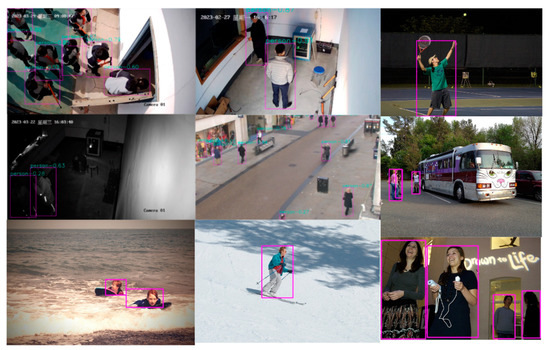

To visually validate the detection performance of the YOLOv5 model in real-world scenarios, we separately verified personnel detection in several different scenes. The detection results are shown in Figure 9 and give a visual impression of how well the model detects pedestrians across these scenes.

Figure 9.

Model-recognition results. (The on-screen text “星期一” means “Monday” and “星期三” means “Wednesday”.)

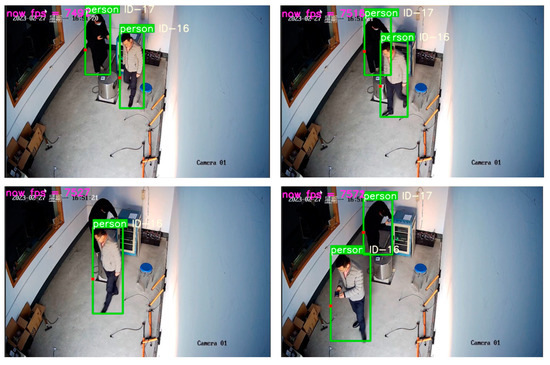

To verify the effectiveness of tracking interfering personnel, we had several people walk around the seismic stations at random. Tracking was tested in scenarios involving target occlusion and target loss; target occlusion is illustrated in Figure 10:

Figure 10.

Target-occlusion tracking: (top left) before occlusion; (top right) targets about to overlap; (bottom left) targets heavily overlapping; (bottom right) target reappears. (The on-screen text “星期一” means “Monday”.)

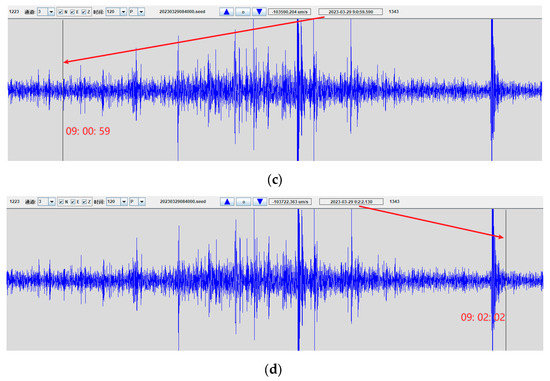

As the figure shows, when the interfering person with ID 17 is temporarily not detected, the algorithm corrects the target's trajectory, so the trajectory does not break or become abnormal. Based on the resulting interference time points and trajectories, the corresponding segments of the time-series data collected by the seismic station are then annotated as abnormal. The annotation result is illustrated in Figure 11:

Figure 11.

Abnormal-data annotation test: (a) entry time of interfering personnel; (b) departure time of interfering personnel; (c) annotation of the entry time; (d) annotation of the departure time.

Figure 11 shows that the identification results from the surveillance video can be used to annotate the corresponding abnormal data in the time series. This helps prevent data anomalies caused by personnel activities from interfering with subsequent analysis. In the figure, “通道” means “channel” and “时间” means “time”; both are display-setting labels.
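The annotation step can be sketched as follows. This is a minimal illustration, not the authors' implementation, and it assumes that the video and the seismic recorder share a synchronized clock (for example, both GNSS- or NTP-disciplined) and that all times are in seconds. The hypothetical `frames_to_interval` converts a track's first and last frame indices into an entry/exit interval, and the hypothetical `annotate_interference` flags every seismic sample inside any interval, optionally widened by a hypothetical `margin`.

```python
from bisect import bisect_left, bisect_right


def frames_to_interval(first_frame, last_frame, fps, video_start):
    """Convert a track's first/last frame indices to (entry, exit) times in seconds."""
    return (video_start + first_frame / fps, video_start + last_frame / fps)


def annotate_interference(timestamps, intervals, margin=0.0):
    """Flag seismic samples recorded while interfering personnel were present.

    timestamps: sorted sample times in seconds, on the same clock as the video.
    intervals:  (entry, exit) time pairs derived from the tracker.
    margin:     optional padding in seconds added on both sides of each interval.
    Returns a list of booleans; True marks a sample as abnormal.
    """
    flags = [False] * len(timestamps)
    for entry, exit_time in intervals:
        # Binary search: first sample >= entry, and one past the last sample <= exit.
        lo = bisect_left(timestamps, entry - margin)
        hi = bisect_right(timestamps, exit_time + margin)
        for k in range(lo, hi):
            flags[k] = True
    return flags
```

For example, a track visible from frame 50 to frame 150 of a 25 fps video that starts at time 0 yields the interval (2.0, 6.0), and every sample in that window would be marked as abnormal.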

5. Results and Discussion

This paper presents an intelligent identification solution for environmental interference at seismic stations, which accurately labels abnormal data caused by personnel in the time-series data collected by the stations. First, YOLOv5 is used to detect interfering personnel. The detected targets are then tracked with the proposed Kalman-filter-based centroid-matching algorithm, which predicts each target's position in the next frame and computes the Euclidean distance between the predicted position and the actually detected position to associate detections with targets. Ultimately, the entry and exit times and motion trajectories of interfering personnel in the seismic stations are obtained, enabling the annotation of abnormal data in the seismic time-series data. Experimental results demonstrate that this method can accurately identify the periods during which interfering personnel affect the seismic time-series data and mark the corresponding abnormal data. Furthermore, in experimental comparisons, the proposed solution achieved the best MOTA, MOTP, and IDF1 among the tracking algorithms tested in the seismic-station scenario.
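The predict-then-match loop summarized above can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' code: each centroid coordinate gets an independent constant-velocity Kalman filter, detections are assigned greedily to the nearest predicted centroid within a hypothetical distance gate (`max_dist`), and a track that misses a detection coasts on its prediction for up to a hypothetical `max_missed` frames. The noise values `q` and `r` and all class names are hypothetical.

```python
import math


class AxisKalman:
    """Constant-velocity Kalman filter for one image axis; state = [position, velocity]."""

    def __init__(self, pos, q=1.0, r=10.0):
        self.x = [pos, 0.0]                # velocity unknown at first, start at 0
        self.P = [[r, 0.0], [0.0, 100.0]]  # large initial velocity variance
        self.q, self.r = q, r              # process / measurement noise (hypothetical values)

    def predict(self, dt=1.0):
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        # P <- F P F^T + q*I, with F = [[1, dt], [0, 1]]
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.x[0]

    def update(self, z):
        (a, b), (c, d) = self.P
        s = a + self.r                     # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s              # Kalman gain
        innov = z - self.x[0]
        self.x = [self.x[0] + k0 * innov, self.x[1] + k1 * innov]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


class Track:
    def __init__(self, track_id, centroid):
        self.id = track_id
        self.kx, self.ky = AxisKalman(centroid[0]), AxisKalman(centroid[1])
        self.missed = 0                    # consecutive frames without a match
        self.history = [tuple(centroid)]   # trajectory, one point per frame

    def predict(self):
        return (self.kx.predict(), self.ky.predict())

    def correct(self, centroid):
        self.kx.update(centroid[0])
        self.ky.update(centroid[1])
        self.missed = 0
        self.history.append((self.kx.x[0], self.ky.x[0]))


class CentroidTracker:
    def __init__(self, max_dist=80.0, max_missed=30):
        self.tracks, self.next_id = [], 1
        self.max_dist, self.max_missed = max_dist, max_missed  # gate in pixels, frames

    def step(self, centroids):
        """Process one frame of detected centroids; return {track_id: position}."""
        preds = [t.predict() for t in self.tracks]
        # Euclidean distance between every predicted and detected centroid
        pairs = sorted((math.dist(p, c), ti, ci)
                       for ti, p in enumerate(preds)
                       for ci, c in enumerate(centroids))
        used_t, used_c = set(), set()
        for dist, ti, ci in pairs:         # greedy nearest-first assignment
            if dist > self.max_dist:
                break
            if ti in used_t or ci in used_c:
                continue
            self.tracks[ti].correct(centroids[ci])
            used_t.add(ti)
            used_c.add(ci)
        for ti, t in enumerate(self.tracks):
            if ti not in used_t:           # missed detection: coast on the prediction
                t.missed += 1
                t.history.append(preds[ti])
        self.tracks = [t for t in self.tracks if t.missed <= self.max_missed]
        for ci, c in enumerate(centroids):
            if ci not in used_c:           # unmatched detection starts a new ID
                self.tracks.append(Track(self.next_id, c))
                self.next_id += 1
        return {t.id: t.history[-1] for t in self.tracks}
```

Coasting on the Kalman prediction is one way to bridge a trajectory when a target is briefly undetected, as with ID 17 in the occlusion test. A greedy assignment keeps the sketch short; an optimal assignment such as the Hungarian algorithm is a common alternative when many people are close together.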

Although the proposed solution performs well compared with other methods, it has limitations when targets are occluded for an extended period. At present, the departure of an interfering person is judged from the target's coordinates relative to the edges of each frame: if a target disappears while not at the image edge, it is considered to still be inside the station until it is detected again. In future work, we will improve this aspect to better meet the requirements of recognizing environmental interference at seismic stations. In addition, given the large number and wide distribution of seismic stations, we will explore combining the proposed solution with edge-cloud cooperation so that intelligent environmental-interference identification can be deployed on edge devices, reducing inference latency and enabling faster, real-time interference detection.
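The edge-coordinate departure rule described above can be expressed as a small decision function. This is a sketch under assumptions: the border width `margin` (40 pixels here), the `Presence` states, and the function name are hypothetical, and the source does not specify how wide the "edge" region is.

```python
from enum import Enum


class Presence(Enum):
    PRESENT = "present"    # detected in the current frame
    OCCLUDED = "occluded"  # lost away from the border: assumed still in the station
    DEPARTED = "departed"  # lost at the border: assumed to have left the station


def classify_presence(detected, last_centroid, frame_size, margin=40):
    """Edge-coordinate departure rule for one track in one frame.

    detected:      whether the track was matched to a detection in this frame.
    last_centroid: last known (x, y) centroid of the track, in pixels.
    frame_size:    (width, height) of the video frame, in pixels.
    margin:        hypothetical border width, in pixels, that counts as "edge".
    """
    if detected:
        return Presence.PRESENT
    width, height = frame_size
    x, y = last_centroid
    at_edge = x < margin or y < margin or x > width - margin or y > height - margin
    return Presence.DEPARTED if at_edge else Presence.OCCLUDED
```

The `OCCLUDED` branch is where the stated limitation shows up: a person hidden behind equipment for a long time keeps the track open, so the exit time can only be fixed once the person reappears and then leaves through the image edge.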

6. Conclusions

Because seismic stations operate continuously, equipment failures occur frequently and require timely on-site repairs. However, these repairs also introduce interference that affects the observed data. The objective of this study is therefore to develop a solution that accurately identifies and labels abnormal data caused by human interference, so that such data do not affect subsequent data-analysis tasks. In this paper, we use YOLOv5 to detect interfering individuals and a Kalman-filter-based centroid-matching algorithm to track their movement trajectories. This allows us to determine when interfering individuals enter and leave the station and to label the corresponding abnormal data in the seismic time-series data, thus mitigating the impact on data analysis. Our research can therefore help improve the operational efficiency and security of seismic stations and, in turn, support earthquake early warning and disaster-reduction capabilities.

Author Contributions

Conceptualization, Y.C.; Formal analysis, P.T.; Methodology, P.T. and Y.C.; Project administration, G.S. and Y.C.; Resources, Y.C.; Software, P.T.; Validation, H.S. and R.L.; Writing—original draft, P.T. and Y.Y.; Writing—review & editing, P.T. and Y.Y. and Y.C. and G.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Informationization and Intelligent Service Team Project of Shandong Earthquake Agency (Grant No. ZD202201); the Technological Small and Medium-sized Enterprise Innovation Ability Enhancement Project (Grant No. 2022TSGC2189); the National Natural Science Foundation of China (Grant No. 61375084); and the Shandong Natural Science Foundation, China (Grant No. ZR2019MF064).

Data Availability Statement

Not applicable.

Conflicts of Interest

The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

1. Jiang, C.; Liu, R. National Earthquake Intensity Rapid Reporting and Early Warning Project—Opportunities and Challenges of Seismic Networks. Eng. Res. Eng. Interdiscip. Perspect. 2016, 8, 250–257.

2. Yang, Y.; Ma, W. Analysis on the perfect and application of basic information of seismic stations. Prog. Earthq. Sci. 2021, 9, 413–420.

3. Lecocq, T.; Hicks, S.P.; Van Noten, K.; Van Wijk, K.; Koelemeijer, P.; De Plaen, R.S.; Massin, F.; Hillers, G.; Anthony, R.E.; Apoloner, M.T.; et al. Global quieting of high-frequency seismic noise due to COVID-19 pandemic lockdown measures. Science 2020, 369, 1338–1343.

4. Ścisło, Ł.; Łacny, Ł.; Guinchard, M. COVID-19 lockdown impact on CERN seismic station ambient noise levels. Open Eng. 2021, 11, 1233–1240.

5. Kislov, K.V.; Gravirov, V.V.; Vinberg, F.E. Possibilities of Seismic Data Preprocessing for Deep Neural Network Analysis. Izv. Phys. Solid Earth 2020, 56, 133–144.

6. Choudhary, P.; Kumari, P.; Goel, N.; Saini, M. An Audio-Seismic Fusion Framework for Human Activity Recognition in an Outdoor Environment. IEEE Sens. J. 2022, 22, 22817–22827.

7. Choudhary, P.; Goel, N.; Saini, M. A Seismic Sensor based Human Activity Recognition Framework using Deep Learning. In Proceedings of the 2021 17th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Washington, DC, USA, 16–19 November 2021; pp. 1–8.

8. Terven, J.; Cordova-Esparza, D. A comprehensive review of YOLO: From YOLOv1 to YOLOv8 and beyond. arXiv 2023, arXiv:2304.00501.

9. Ren, J.; Gong, N.; Han, Z. Multi target tracking algorithm based on YOLOv3 and Kalman filter. Comput. Appl. Softw. 2020, 37, 169–176.

10. Quanan, G.; Xia, Y. Kalman Filter Algorithm for Sports Video Moving Target Tracking. In Proceedings of the 2020 International Conference on Advance in Ambient Computing and Intelligence (ICAACI), Ottawa, ON, Canada, 12–13 September 2020; pp. 26–30.

11. Rohal, P.; Ochodnicky, J. Target Tracking Based on Particle and Kalman Filter Combined with Neural Network. In Proceedings of the 2019 Communication and Information Technologies (KIT), Vysoke Tatry, Slovakia, 9–11 October 2019; pp. 1–6.

12. Li, H.; Xu, Z.; Zhang, Y.; Guo, S.; Cui, G.; Kong, L. Robust Indoor Target Tracking Based on Track Matching. In Proceedings of the 2021 CIE International Conference on Radar (Radar), Haikou, Hainan, China, 15–19 December 2021; pp. 3023–3026.

13. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H. Fully-convolutional siamese networks for object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 850–865.

14. Guo, Q.; Feng, W.; Zhou, C.; Huang, R.; Wan, L.; Wang, S. Learning dynamic siamese network for visual object tracking. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1763–1771.

15. Valmadre, J.; Bertinetto, L.; Henriques, J.; Vedaldi, A.; Torr, P.H. End-to-end representation learning for correlation filter based tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2805–2813.

16. Gomes, H.; Redinha, N.; Lavado, N.; Mendes, M. Counting People and Bicycles in Real Time Using YOLO on Jetson Nano. Energies 2022, 15, 8816.

17. Mu, X.; Lin, Y.; Liu, J.; Cao, Y.; Liu, H. Surface Navigation Target Detection and Recognition based on SSD. In Proceedings of the 2019 3rd International Conference on Electronic Information Technology and Computer Engineering (EITCE), Xiamen, China, 18–20 October 2019; pp. 649–653.

18. Liu, X. Underwater target maneuver detection algorithm based on width learning. In Proceedings of the 2022 IEEE 5th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 16–18 December 2022; pp. 985–989.

19. Feng, Y.; Wei, Y.; Li, K.; Feng, Y.; Gan, Z. Improved Pedestrian Fall Detection Model Based on YOLOv5. In Proceedings of the 2022 IEEE 6th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Beijing, China, 3–5 October 2022; pp. 410–413.

20. Fu, B.; Huang, L. Polygon matching using centroid distance sequence in polar grid. In Proceedings of the 2016 2nd IEEE International Conference on Computer and Communications (ICCC), Chengdu, China, 14–17 October 2016; pp. 733–736.

21. Xu, Y.; Song, Y.; Zhao, H. A Trajectory Tracking Method Combining YOLOv5 and Centroid Matching. Electron. Meas. Technol. 2022, 45, 123–129.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).