1. Introduction

At this very moment, conversations between humans and human/machine interactions are taking place everywhere. Thanks to automatic speech recognition systems and online communication systems, vast amounts of these dialogues can be recorded in text easily [

1,

2,

3]. A succinct summarization helps readers grasp the key points with less time and effort. Hence, the necessity for dialogue summarization arises urgently. Dialogue summarization aims to succinctly compress and refine the content of conversations. My goal is to propose a new algorithmic framework that uncovers and incorporates external anaphora resolution information. The proposed framework aims to effectively assist models in clarifying referential relationships within dialogues and generating more coherent summaries.

Major text summarization works have focused on single-speaker documents like news publications [

4]. Compared to single-speaker documents, people prefer using more personal pronouns to refer to recent characters in conversations. There is an average of 0.08 personal pronouns per token in the widely used dialogue summarization dataset SAMSum [

1], compared to 0.03 personal pronouns per token in the news documents dataset CNN/DailyMail [

4]. However, existing advanced dialogue summarization models fail to understand these personal pronouns. These models often generate summaries that associate one’s actions with a wrong person. An example is shown in

Table 1.

This work presents an example from the test set of the SAMSum dataset, which consists of the original dialogue, supervised summary labels, and the decoding results from the latest state-of-the-art (SOTA) model. Upon reading the original dialogue, it is evident that Hank will be the one taking the child back. However, during the decoding process, the model generates a summary where Don is portrayed as the individual bringing the child. This error directly stems from the original dialogue, which does not explicitly specify who will bring the child and instead uses the pronoun ’I’. In the model’s encoding and inference process, it is crucial for it to fully comprehend and accurately infer the referent of ’I’ in order to generate a summary in the third person correctly.

This problem directly contributes to the challenges of employing the existing state-of-the-art (SOTA) model, Multi-View BART, in practical applications. Despite the fluency of its generated results, there is an inconsistency between the decoding outcome and the factual information present in the source text. Even a SOTA dialogue summarization model (Multi-view BART [

5]) makes 20 referral errors in 100 sampled test conversations, which significantly harm the quality of summarization measured by ROUGE [

6] scores (see

Section 5.3).

How to help models understand this frequently occurring key information in dialogue data has become an important research question. Therefore, this work aims to propose a new algorithmic framework to uncover and inject external coreference resolution information. The framework can effectively assist models in understanding the referential relationships in the dialogue and generating more consistent summaries.

Dialogue summaries have different data formats and characteristics compared to other forms of text summaries. Dialogues vary in their format and content, encompassing various types such as everyday conversations, meetings, customer support Q&A, doctor–patient dialogues, and more. Unlike fluent long texts, dialogues consist of discrete utterances in multiple rounds, and the coherence of the context and consistency of the topic cannot be guaranteed. Additionally, dialogues encompass different stages, frequent instances of complex coreference phenomena, and the utilization of domain-specific terminology, all of which present substantial challenges for dialogue summarization.

Existing document-focused summarization models often face difficulties in handling such issues. Therefore, there is a need for efficient methods that can address these problems and generate high-quality dialogue summaries. Existing solutions often rely on scarce dialogue data, and researchers attempt to enhance the existing summarization models using human priors or external resources. For example, TGDGA [

7] considers the presence of multiple topics in dialogues and models topic transitions explicitly to guide summary generation. In real-world conference dialogues, where speakers are relatively fixed and each speaker has distinct characteristics, HMNet [

3] utilizes these features to improve the generation quality. Furthermore, several works [

3,

7] have focused on modeling the various stages and structures found in dialogues. However, so far, no work has attempted to address the complex coreference relationships and the challenges posed by intricate referring expressions in dialogues, which persistently impact the quality of summary generation.

This paper proposes a framework named WHORU (the abbreviation of “Who are you”) to inject external personal pronoun resolution information into abstractive dialogue summarization models.

Specifically, WHORU appends the references after their corresponding personal pronouns and distinguishes personal pronouns, references, other words with additional tag embeddings. Preliminary experiments have shown that the SOTA personal pronoun resolution method SpanBERT [

8] is time consuming and space consuming.

This work would like to emphasize that there is a strong recency effect observed when humans use personal pronouns. This suggests that the nearest candidate is the most likely reference [

9]. Hence, an additional method called DialoguePPR (short for Dialogue Personal Pronoun Resolution) is proposed. It is a rule-based approach specifically designed to address personal pronoun resolution in dialogues. DialoguePPR efficiently performs a greedy search to identify the closest person or speaker.

The desirable features of the proposed methods can be summarized as follows:

Simple: WHORU is easy to implement since it does not need to modify existing models except adding tag embeddings. Rule-based DialoguePPR either requires any training procedure or personal pronoun resolution datasets.

Efficient: WHORU appends a few words to the original text which slightly increases training and inference time. DialoguePPR is model-free and only needs to run a greedy search algorithm which has linear time complexity.

Generalizable: WHORU can be applied to most of the existing advanced dialogue summarization models, including these built on pretrained models like BERT, BART [

10], etc. WHORU helps different models incorporate external personal pronoun resolution information (

Section 5.3). Moreover, DialoguePPR is accurate on two widely used dialogue summarization dataset from different areas (

Section 5.4).

Effective: Empirical results demonstrate that the performance of strong models is significantly improved on ROUGE evaluation by the proposed methods. More importantly, WHORU achieves new SOTA results on SAMSum and AMI datasets.

3. WHORU

In conversation, people often use personal pronouns as a simple substitution for the proper name of a person for convenience, which can avoid unnecessary repetition and make the conversation more succinct. However, the evidence demonstrated that existing models are often confused with personal pronouns. As a result, these models often generate summaries with referral errors and obtain ordinary ROUGE scores (see

Section 5.3).

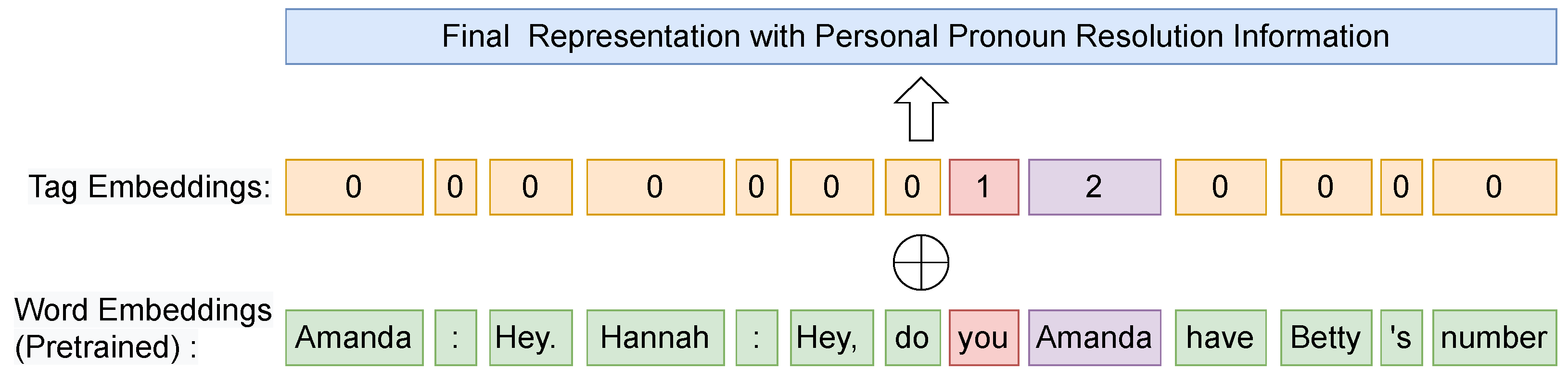

To alleviate this problem, a framework called WHORU is proposed, which explicitly considers the process of personal pronoun resolution. As shown in

Figure 1, my framework includes two steps. First, WHORU injects the references of personal pronouns into original conversations. Second, WHORU uses additional tag embeddings to help the model distinguish the role of personal pronouns and their references.

In

Figure 1, green is used to indicate the original words in the text, red is used to indicate the identified anaphoric words, and purple is used to indicate the additional injected anaphora resolution information. Assuming the anaphora resolution task has been completed and the referents for each personal pronoun have been obtained, in this example, the target pronoun ’you’ is identified, and an anaphora resolution method is employed to determine that it refers to ’Amanda’. For simplicity and ease of understanding, the position embedding is not depicted. The WHORU framework as a whole is lightweight and concise, allowing it to adapt flexibly to different backbone models. The next section introduces the embedding of PPR information into PGN and BART through simple steps.

3.1. Inject Personal Pronoun Resolution

3.1.1. Resolve Personal Pronouns in the Conversation

Personal pronouns are widely used in human conversations. Since there is only a limited number of personal pronouns in a specific language, they can be easily extracted by matching each word in utterances with a pre-defined personal pronoun list (as shown in

Table 2). Formally, given an input conversation

, a list of personal pronouns

can be obtained.

For each recognized personal pronoun

, the corresponding reference

is resolved using personal pronoun resolution methods. This paper considers two PPR methods: (1) SpanBERT [

8], which is pretrained on a large-scale unlabeled corpus and then finetuned on a vanilla coreference resolution dataset, and (2) DialoguePPR, a simple but effective rule-based method specifically designed for PPR in dialogues, which will be described in detail in

Section 4.

3.1.2. Inject PPR Information into Conversations

In the domain of personal pronoun resolution, there exist numerous potential schemes. However, it is crucial to seek a method that not only effectively addresses the task but also remains orthogonal to existing approaches while making minimal modifications to the existing models.

With the objective in mind, a direct modification approach is proposed, involving the injection of the obtained personal pronoun resolution (PPR) information into the input conversation X. Specifically, it is suggested to append the reference r after its corresponding personal pronoun p within the dialogue.

The beauty of my approach lies in its compatibility with Encoder-Decoder models, which share a common need to encode the source text and generate the target based on it. By incorporating the PPR information into the source text, the aim is to ensure seamless integration of this information into any Encoder-Decoder model, eliminating the need for additional adjustments in model encoding. In essence, a method has been devised that achieves the goal of injecting the personal pronoun resolution information by directly modifying the input dialogue X.

Through this innovative approach, the performance of personal pronoun resolution can be enhanced without disrupting the underlying structure and functioning of the existing models. By strategically incorporating the previously extracted PPR information into the input dialogue, the power of Encoder-Decoder architectures can be leveraged to improve the resolution accuracy and coherence of personal pronouns.

Formally, consider one turn of the conversation X as , where is a personal pronoun p, and r is its corresponding reference. The r is appended right after the personal pronoun , resulting in the modified sequence: . In this way, these two words will have close position embedding in Transformer model or time step in LSTM model. Thus the model could learn the relation between them.

3.2. Additional Tag Embeddings

Although, the proposed appending strategy could help the model associate the personal pronoun with its reference. It also introduces noise to the fluent human language. To assist the model in distinguishing between personal pronouns, references, and other words, different labels are assigned. Tag 1 and 2 are used to denote personal pronouns and references, respectively, while 0 is used for other tokens. For the given X, the corresponding labels are as follows: .

To enhance the effectiveness of anaphora resolution, an additional tag embeddings layer is introduced as a crucial step to embed the tag sequence. By incorporating tag embeddings into the input embeddings, a comprehensive and enriched representation of the input is achieved, which is subsequently fed into the encoder for further processing.

To maintain compatibility with pretrained parameters and avoid interference, a learnable embedding layer is chosen instead of fixed embeddings. This enables adaptive adjustments to the tag embeddings during training without impacting the existing pretrained parameters. As a result, the only modification made to the model is the inclusion of the tag embeddings layer.

An intriguing aspect to highlight is the remarkably small parameter size of the Tag Embedding Layer. This indicates that anaphora resolution information can be injected and identified at a significantly low cost. This advantageous characteristic aligns with the need for reduced training data and computational resources, making my approach more practical and efficient.

By seamlessly incorporating the tag embeddings layer into the model architecture, the representation of the input sequence is enriched, empowering the model to effectively capture and utilize anaphora resolution information. This augmentation enables improved performance in anaphora resolution tasks, without compromising the integrity of the pretrained parameters or incurring substantial additional computational overhead.

4. Personal Pronoun Resolution for Dialogues

The existing SOTA PPR method SpanBERT typically requires a large amount of monolingual data to pretrain, which may not be feasible for some low-resource languages. Furthermore, the computation and memory costs are also not beneficial to build a Green AI. To this end, a simple and effective rule-based personal pronoun resolution method, named DialoguePPR, is proposed.

A major step of existing rule-based personal pronoun resolution is identifying speakers [

12]. However, due to the inherent nature of conversation data, the extraction of speakers becomes straightforward once each turn is separated.

For the first personal pronoun, it is straightforward that the reference of it in an utterance is the speaker himself/herself.

Regarding the second personal pronoun, it is understood that the reference should be one of the speakers. Based on the strong recency effect, it is believed that the closest speaker is the most likely reference [

9]. Personal pronouns typically appear after their reference. Consequently, a backward search is performed to find the nearest speaker from the current utterance. If the algorithm does not identify any candidates in the backward search, it will proceed with a forward search to determine the nearest speaker as the reference.

Resolution of the third personal pronoun is a bit more complex: their references could be one of the persons mentioned in the whole conversation. Therefore, the first step is to utilize the name entity recognition tool in NLTK [

13] to extract a list of person entities denoted as

. Since name entity recognition problem has been well studied, the extraction performance can be guaranteed.

Guided by the recency effect, a search procedure similar to that used for the second personal pronoun is employed to locate the reference.

The above procedure is summarized in Algorithm 1, which represents a dedicated, straightforward, and efficient referential resolution strategy specifically designed for dialogue datasets.

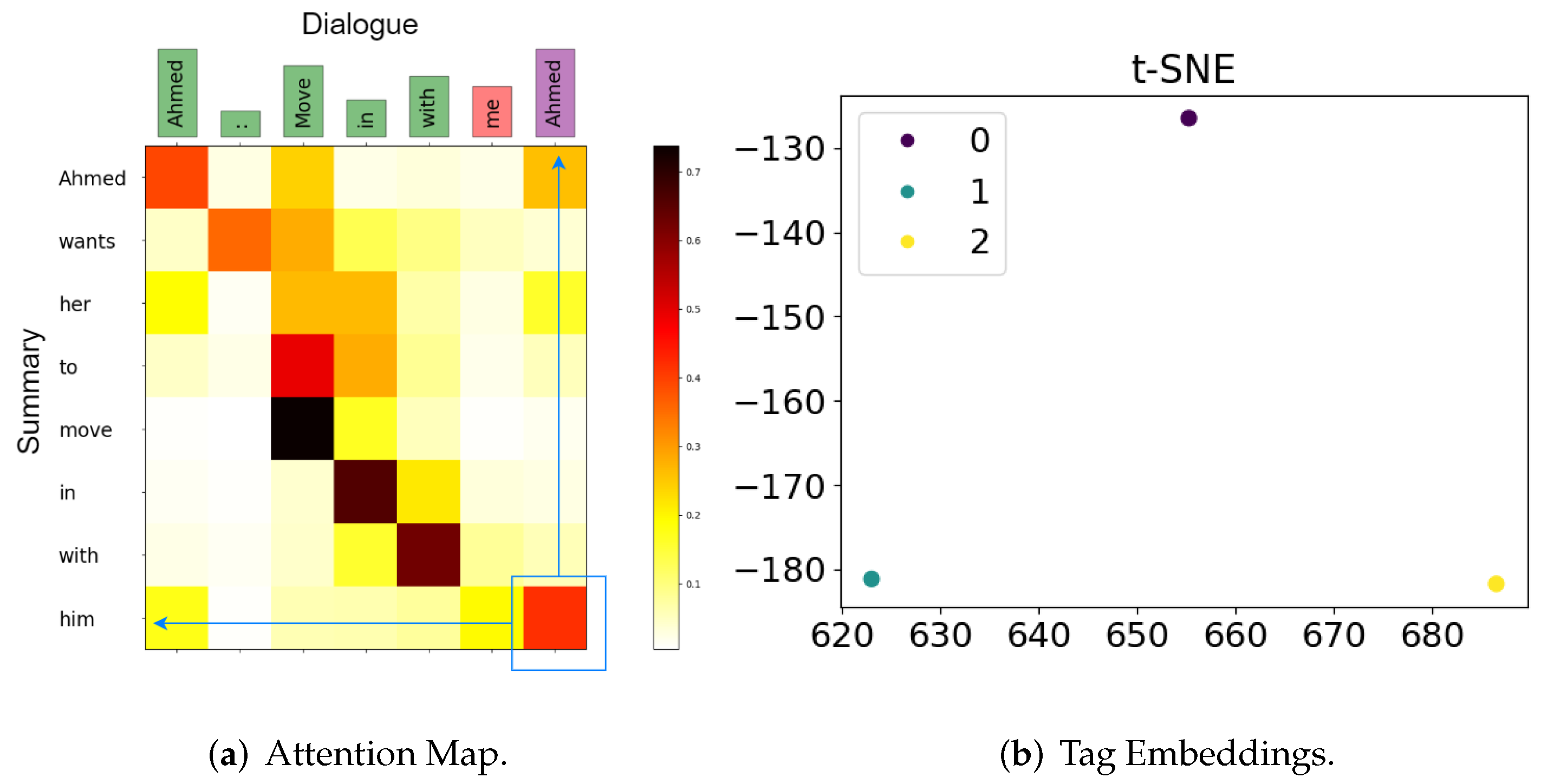

Figure 2 is a good example. By leveraging explicit features in the dialogue and linguistic prior knowledge, DialoguePPR efficiently and accurately performs anaphora resolution in the dialogue and extracts referential information. Subsequently, this information can be injected into the model using the WHORU framework introduced earlier.

Note that the greedy search have a linear time complexity

, where

T is the number of tokens in

X. It is natural to doubt that whether these rules work widely on different dialogue summarization datasets. In

Section 5.4, a comprehensive analysis of the efficiency and generalization of DialoguePPR will be conducted.

| Algorithm 1 DialoguePPR |

| Input: The conversation X, person entity list E. |

| for in X do |

| for in do |

| if is first personal pronoun then |

| Append to P. |

| end if |

| if is second personal pronoun then |

| Greedy search the closest speaker different from . |

| Append to P. |

| end if |

| if is third personal pronoun then |

| Greedy search the closest person in E. |

| Append to P. |

| end if |

| end for |

| end for |

| Output: The resolution result P. |

6. Related Work

Pre-trained Language Models. In the field of Natural Language Understanding (NLU), BERT [

19] is primarily based on the Masked Language Task. By masking a portion of the corpus, the model is trained to predict the masked part based on the context, thus enhancing the model’s ability to encode context. Since the Masked Language Task is an unsupervised process, it is possible to construct large-scale training data from common corpora. BERT trained on large corpora can effectively incorporate contextual information and obtain better contextual representations. Pretraining has greatly benefited the field of extractive summarization, as seen in BERTSUM [

20] and MATCHSUM [

21], which achieved state-of-the-art results using BERT.

On the other hand, there are also numerous pretrained models based on the Seq2Seq framework, such as BART [

10], PEGASUS [

22], and T5 [

23]. The general idea is to construct pretrained corpora in an unsupervised manner by modifying or extracting from the source text, and then train Seq2Seq models based on Transformers. BART initially employs various noise-adding strategies to disrupt the source text and then inputs the noisy text to generate the original text. BART effectively acquires the ability to generate text using context and exhibits good robustness to input text, yielding excellent results in natural language generation tasks such as paraphrasing and summarization. Reinforcement learning struggles without clear rewards. Intrinsic motivation methods reward novel states, but have limited benefits in large environments. ELLM [

24] uses text corpora to shape exploration. It prompts a language model to suggest goals for the agent’s current state. ELLM guides agents towards meaningful behaviors without human involvement. ELLM is evaluated in Crafter and Housekeep, showing improved coverage of common-sense behaviors and performance on downstream tasks.

These pretrained models provide additional domain-specific and grammatical knowledge. They also reduce the dependence on subsequent training data and training time, thereby greatly advancing the development of natural language processing. A significant amount of existing work is conducted based on pretrained models.

Abstractive Document Summarization. Neural models for abstractive document summarization have been widely studied [

25,

26] since Rush et al. [

27] first employed the encoder and decoder framework. A series of improvements have been proposed based on different advanced techniques. Except for the copy mechanism (PGN) and pretrained language model (BART) mentioned above, reinforcement learning [

28] and graph neural networks (GNNs) [

29] have also been extended. Some studies using discourse relation [

29] and coreference resolution [

30] are related to my paper. Differently, my work is the first work utilizing personal pronoun resolution for dialogue summarization. As demonstrated above, the personal pronoun problem in dialogue summarization is significantly different from that in the signal speaker document. While most of these works rely on the complex graph structure, my WHORU is quite simple and easy to implement on existing models.

Abstractive Dialogue Summarization.

Because of the scarcity of dialogue summarization resources, most existing works improve abstractive dialogue summarization with human prior knowledge or external information. For example, topic (TGDGA) [

7] and speaker role (HMNet) [

3] information have been widely used to improve abstractive dialogue summarization. Conversational structure prior knowledge is also be considered in [

3,

5,

7]. In this paper, successful utilization of external personal pronoun resolution information has led to achieving state-of-the-art (SOTA) results. DialoguePPR, which is based on conversational prior knowledge, avoids the requirement for external PPR resources.

Coreference resolution methods. Coreference resolution is a popular direction in natural language processing, and the current mainstream approach is based on end-to-end methods, such as Neural Coreference Resolution. This modeling method considers the input document (consisting of T tokens) as spans and attempts to find the antecedent for each span. This work uses a bidirectional LSTM network to encode information within and outside the spans, while also incorporating an attention mechanism. Neural Coreference Resolution outperforms all previous models without the need for syntactic parsing and named entity recognition.

Subsequently, with the development of pre-training models, SpanBERT [

8] emerged. As mentioned earlier, BERT utilizes the Masked Language Model task to train the model and achieves good results. However, BERT only masks one subword at a time, and the training objective focuses on obtaining token-level semantic representations, whereas end-to-end coreference resolution requires a good span representation. Therefore, researchers proposed SpanBERT, which introduces a better span masking scheme and a Span Boundary Objective (SBO) training objective. It has achieved state-of-the-art results in tasks related to spans, such as extractive question answering and coreference resolution. This paper will also use and compare with SpanBERT.

On the other hand, existing coreference resolution methods (including SpanBERT) are trained on the OntoNotes 5.0 dataset. The OntoNotes 5.0 dataset includes various types of data such as news, telephone conversations, and broadcasts, and it covers multiple languages, including English, Chinese, and Arabic. In terms of data distribution, English dialogue-type data is relatively limited, and there is inconsistency with some dialogue datasets (such as conference dialogue) in terms of content. In terms of data format, SpanBERT accepts a maximum of 512 tokens of text, which limits the direct application of existing coreference resolution work to dialogue summarization in subsequent studies.

Generative Text Summarization. Although extractive methods can ensure a certain level of grammatical and syntactic correctness, as well as the fluency of summaries, they are prone to content selection errors, lack flexibility, and exhibit poor coherence between sentences. Moreover, the limited choice of sentences and words solely from the source text greatly restricts the quality ceiling of summary generation techniques. With the emergence of neural networks and sequence-to-sequence (Seq2Seq) models, it became possible to flexibly select words from a large vocabulary to generate summaries. However, Seq2Seq methods encounter some issues, such as low-quality generated summaries with grammar errors and the tendency to produce redundant words. Additionally, due to the limitation of vocabulary size, out-of-vocabulary (OOV) problems may arise. Consequently, related works have proposed solutions to address these problems. For instance, the work of Paulus et al. introduced the Pointer Generator Network [

28], which incorporates Copy and Coverage mechanisms based on attention mechanisms in Seq2Seq, effectively alleviating the aforementioned issues. In addition to simple Seq2Seq models, reinforcement learning [

28] and Graph Neural Networks (GNNs) [

29] have also been gradually extended to this field and have achieved good results. Some researchers have utilized discrete relations [

29], as well as anaphora resolution methods [

30], which are somewhat related to the approach used in this paper. However, what distinguishes my work is that this work is the first to employ person-referencing resolution in dialogue summarization. Generating text summaries using generative techniques involves an autoregressive sequence generation process. At each time step, the decoding space encompasses the entire vocabulary, resulting in an excessively large search space during longer decoding steps. Thus, a decoding search strategy is needed. The two most popular methods are Greedy Search and Beam Search, which will be discussed further in the following sections.

Greedy Search: In the autoregressive process, when the model needs to generate a sequence of length N, it iterates N times, each time providing a probability distribution for the next token based on the generated portions of the source and target. Greedy Search selects the token with the highest probability from the distribution as the result for the current time step. Greedy Search always greedily chooses the token with the highest probability, leading to many candidate tokens being pruned in subsequent decoding steps and causing the optimal solution to be discarded prematurely.

Beam Search: Beam Search [

31,

32] is an improvement over Greedy Search and can be seen as an enlarged search space. Beam Search is a heuristic graph search algorithm. Unlike the greedy search strategy, Beam Search constructs a search tree for each layer of the tree using a breadth-first strategy. The nodes are sorted according to a certain policy, and only a predetermined number of nodes are kept. Only these selected nodes are expanded in the next level, while other nodes are pruned for optimization. The ’number of nodes’ here refers to the hyperparameter

, which effectively saves space and time, considers more possible optimal solutions, and improves the quality of search results.

7. Conclusions

This paper conducts in-depth research on the issue of referential errors in dialogue summarization. The research can be divided into the following aspects. Initially, problems encountered in current dialogue summarization and existing solutions are analyzed. Following the summary and analysis of existing methods, a significant issue is identified: the challenge of referential resolution in dialogue summarization, particularly the frequent occurrence of personal pronoun references that perplex models and result in erroneous and significantly degraded summaries. Consequently, the WHORU framework is introduced to inject additional referential resolution information into existing models, aiding in comprehending complex referential problems and enhancing the quality of generated summaries. Moreover, given the high cost and computational overhead associated with current general referential resolution strategies, as well as their contradiction with the concept of environmentally friendly AI, their application in lengthy dialogue data becomes challenging. To address this, a heuristic referential resolution strategy specifically tailored for dialogue summarization is proposed, exhibiting excellent adaptability and rapid computation speed. It achieves 11 times the inference speed of SpanBERT while maintaining considerable prediction accuracy. Extensive experiments in this paper demonstrate that WHORU achieves significant improvements over multiple baseline models, reaching a new state-of-the-art (SOTA) level. The experimental analysis also thoroughly validates the effectiveness and generalizability of WHORU and DialoguePPR, improving the quality of generated summaries and reducing referential errors, thus accomplishing my intended research objectives.

In future work, it would be worthwhile to explore the decoupling of referential information from the original input and the design of mechanisms for their interaction. For the encoding of injected referential information, new methods such as graph neural networks can be explored, which may have better performance on such structured data.

Regarding DialoguePPR, although extensive experiments have demonstrated its generalizability on diverse datasets, in more complex scenarios, the third-person pronoun resolution strategy may be overly simplistic, leading to the injection of a large amount of erroneous noise into the model. Therefore, future work can focus on improving the performance of SpanBERT or existing coreference resolution algorithms in long dialogue contexts to compensate for the limitations of the DialoguePPR algorithm.

Moreover, it is worth considering the integration of my work with the latest advancements in the field, such as Efficient Tuning techniques exemplified by PromptTuning. While the WHORU framework proposed in this paper introduces only a minimal number of parameters, rendering it well-suited for data-scarce tasks like dialogue summarization, it still necessitates fine-tuning the entire model during training. By combining it with efficient training strategies like PromptTuning to fully harness the capabilities of pre-trained language models, this work can be applied in broader scenarios.