In this section, we present the architecture of the proposed DEGANet. Additionally, we discuss the settings of the loss function.

3.1. DEGAN Network Architecture

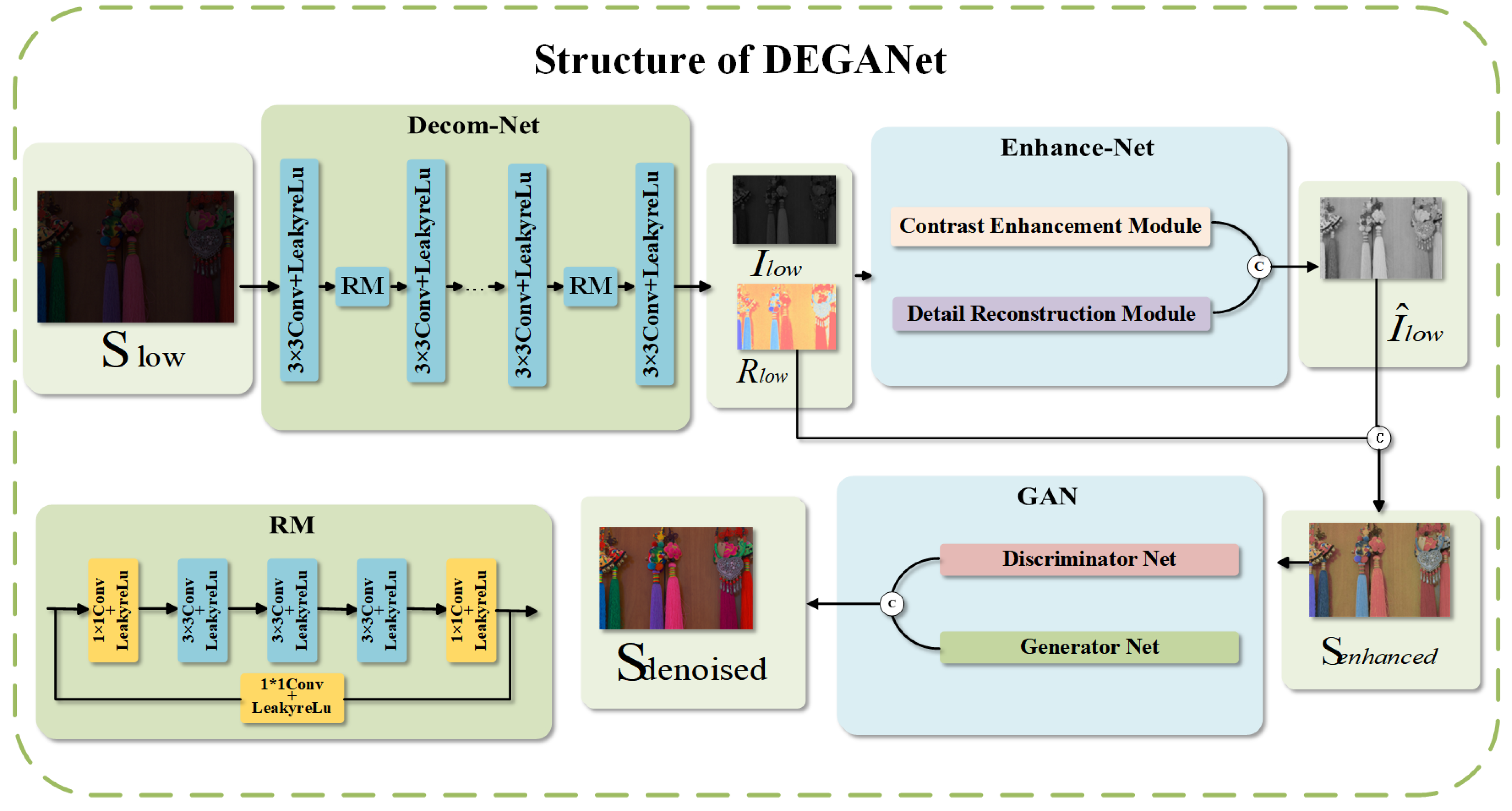

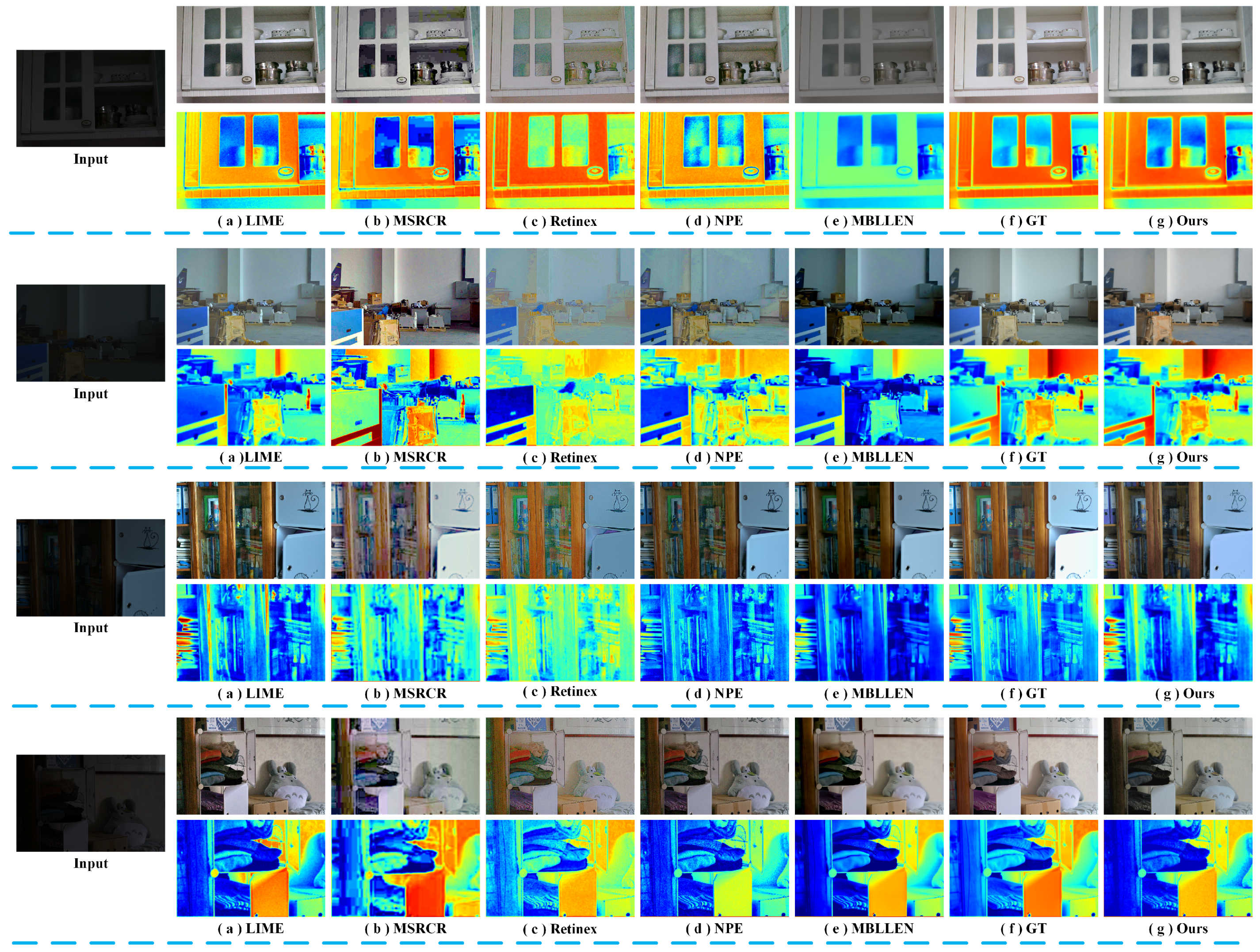

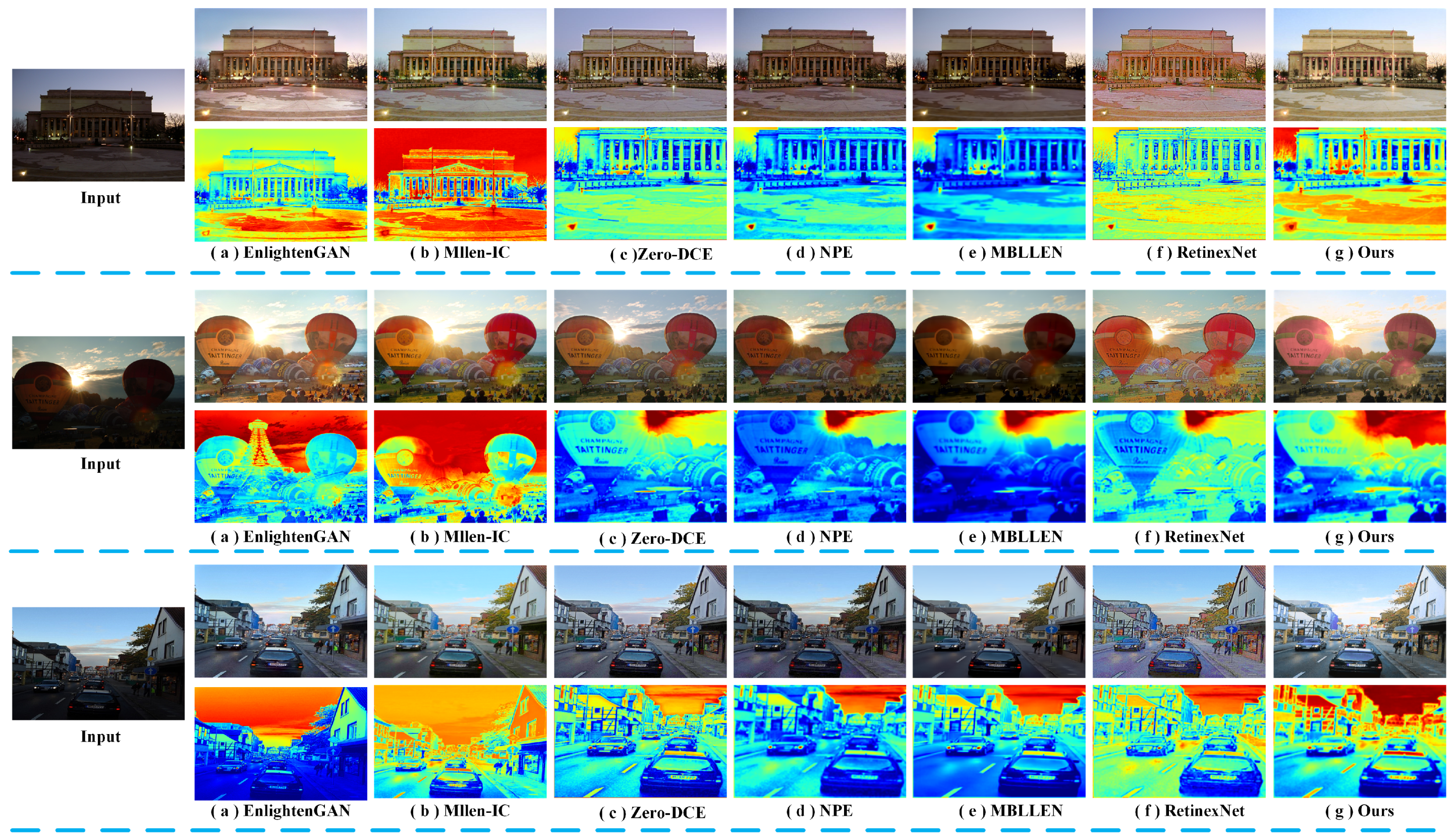

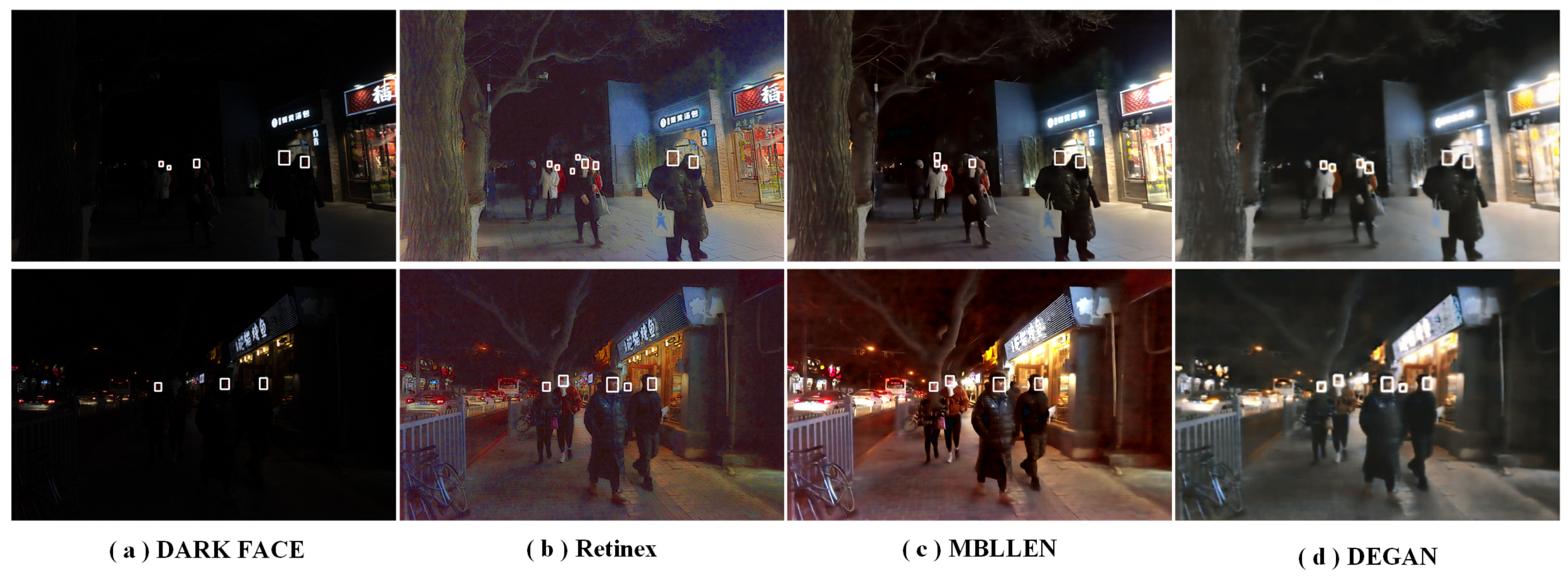

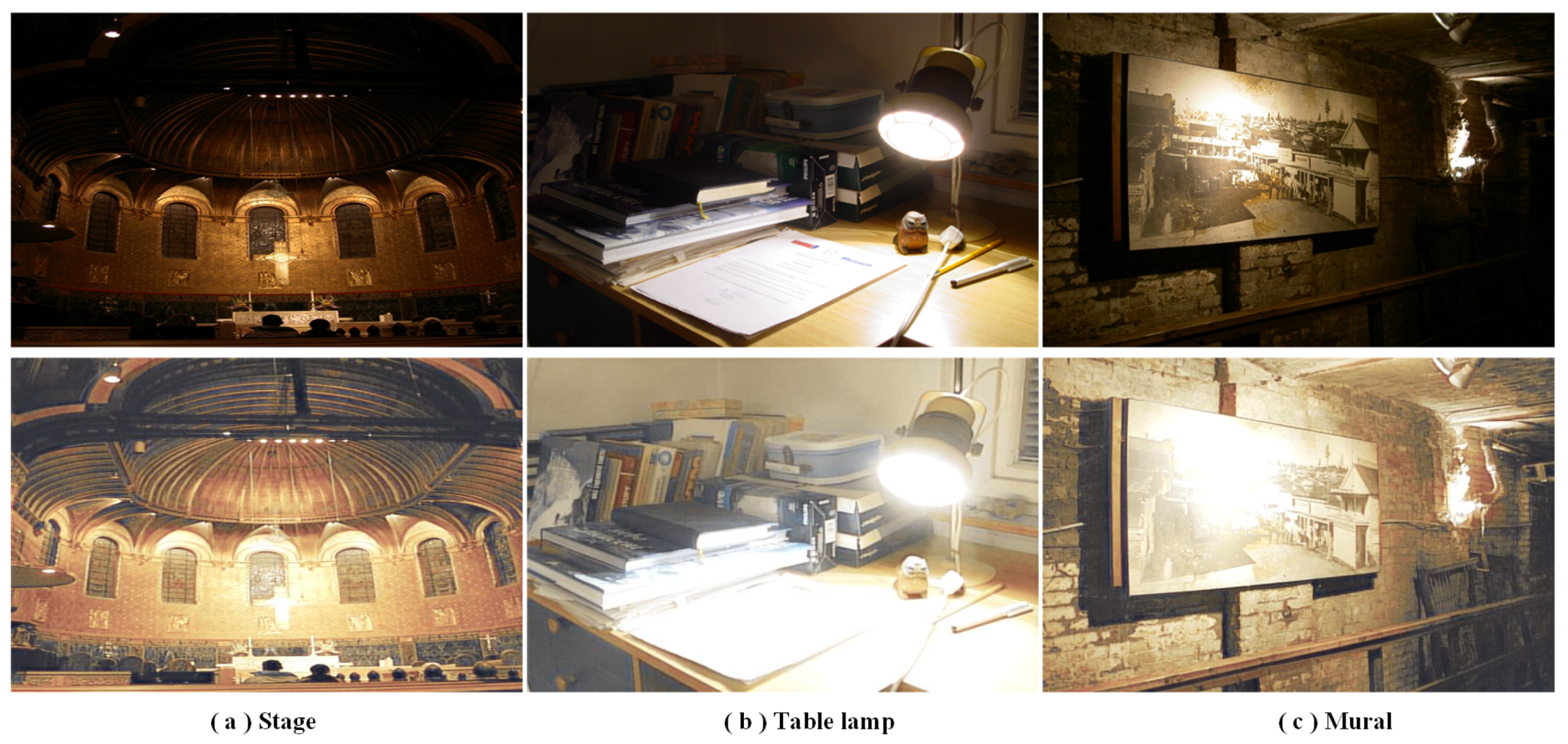

To address these issues, we propose DEGANet, a deep-learning framework that combines Retinex theory with deep neural networks and exploits both spatial and frequency information from low-light images. DEGANet comprises three linked subnetworks: Decom-Net, Enhance-Net, and a Generative Adversarial Network (GAN). Decom-Net decomposes an input low-light image into a reflectance component and an illumination component, and Enhance-Net then brightens the illumination component. The GAN denoises the enhanced image while recovering original information and supplementing missing details. Together, these subnetworks produce high-quality, visually appealing output images. In terms of enhancement and denoising quality, our method outperforms current state-of-the-art methods, making it suitable for a wide range of real-time applications.

As shown in Figure 1, DEGANet produces several intermediate results: the illumination and reflectance components from Decom-Net, the enhanced illumination component from Enhance-Net, and the denoised result produced by the GAN. As shown in Figure 2, a low-light image is first fed into the Decom-Net, which divides it into two components: an illumination map and a reflectance map. The illumination component captures the estimated lighting conditions in the image, while the reflectance component preserves the underlying scene information. Both components are then supplied to the Enhance-Net, which is designed to improve the lighting of low-light images and outputs an enhanced illumination map. Brightening the illumination component contributes substantially to the visibility and perceptual quality of the result. Multiplying the enhanced illumination component and the reflectance component element by element yields the Enhanced Result, an image with higher brightness and improved visual appeal. The GAN Result is the final image, which has been enhanced by the Enhance-Net and then denoised by the GAN.

Decom-Net: Retinex-based techniques depend on accurate illumination and reflectance maps, which strongly influence the subsequent enhancement and denoising steps. Consequently, an effective network for decomposing weakly illuminated images is crucial. Residual networks [24] are widely used in image processing tasks with excellent results. Thanks to their skip connections, residual networks make deep neural networks easier to optimize during training and mitigate vanishing and exploding gradients. Inspired by these advantages, we improve the performance of Decom-Net by incorporating Residual Modules (RMs). Each Residual Module consists of 5 convolutional layers with kernel sizes of

and kernel quantities of

, respectively. Additionally, we introduce a shortcut connection with a

convolutional layer. A

convolutional layer is also added in each RM’s pre- and post-stages.

The Decom-Net operates on paired low-light and normal-light images simultaneously, learning to decompose both images under the principle that they share the same reflectance component. The training phase requires no explicit ground-truth (GT) decomposition. Instead, the network incorporates the necessary constraints, such as reflectance consistency and illumination smoothness, through carefully designed loss functions. Notably, the illumination and reflectance components of the normal-light image do not directly contribute to the network's training process; they merely serve as a reference for decomposition. For a detailed description of the Decom-Net architecture, please refer to Figure 2.

Enhance-Net: Enhance-Net aims to increase the brightness of the illumination component, resulting in visually pleasing outcomes. It comprises two modules: the CEM (Contrast Enhancement Module) and the DRM (Detail Reconstruction Module). Drawing on previous work in image restoration [8, 25], Enhance-Net leverages both spatial features and, in particular, frequency features. The CEM works in the spatial domain: to reduce the loss of feature information, it performs multi-scale fusion and concatenation on the outputs of each deconvolution layer in the expanding path, which preserves spatial information for contrast enhancement. The DRM works in the frequency domain: the Fourier transform treats a digital image as a signal and converts it from the spatial domain to the frequency domain, and the inverse Fourier transform converts it back.

Therefore, spectral information can be extracted from the image using the Fourier transform. High-frequency signals correspond to rapid changes in the image, such as fine details or noise, whereas low-frequency signals correspond to smooth, gradual changes, such as background regions. By amplifying the high-frequency signals, a sharper image with more detail can be reconstructed from the degraded version.
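The idea of amplifying high-frequency signals can be illustrated with a small NumPy sketch. It is not the DRM itself: the function name, the radial `cutoff`, and the `gain` are hypothetical choices made only for this illustration.

```python
import numpy as np

def boost_high_frequencies(image, cutoff=0.1, gain=2.0):
    """Amplify frequency components above `cutoff` (cycles/pixel) by `gain`.

    `cutoff` and `gain` are illustrative values, not taken from the paper.
    """
    spectrum = np.fft.fft2(image)
    # Frequency coordinate (cycles per pixel) of every FFT bin.
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    radius = np.sqrt(fx**2 + fy**2)
    # Leave low frequencies untouched and scale the high ones.
    scale = np.where(radius > cutoff, gain, 1.0)
    return np.real(np.fft.ifft2(spectrum * scale))

# A smooth horizontal ramp (low frequency) plus a fine checkerboard (high frequency).
y, x = np.mgrid[0:32, 0:32]
smooth = x / 31.0
texture = 0.05 * ((x + y) % 2 * 2 - 1)
sharpened = boost_high_frequencies(smooth + texture)
```

Because the zero-frequency bin is left unscaled, the mean brightness is unchanged, while the fine checkerboard texture is amplified by the chosen gain.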

The Fourier transform produces a matrix with the same dimensions as the original image. The points of this matrix represent the frequency-domain data of the image. Each point is a complex number $a + bi$, whose modulus $\sqrt{a^2 + b^2}$ gives the amplitude and whose argument $\arctan(b/a)$ gives the phase angle. We build our DRM using the complex convolution approach proposed in [26], which makes use of the frequency-domain data for detail reconstruction.
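The relationship between the complex spectrum, its amplitude, and its phase can be checked with a few lines of NumPy. The random array below is only a stand-in for a grayscale image.

```python
import numpy as np

rng = np.random.default_rng(0)
image = rng.random((8, 8))          # stand-in for a grayscale image

spectrum = np.fft.fft2(image)       # complex matrix, same shape as the image
amplitude = np.abs(spectrum)        # modulus sqrt(a^2 + b^2)
phase = np.angle(spectrum)          # phase angle, computed as atan2(b, a)

# Amplitude and phase together determine the spectrum, and hence the image.
rebuilt = amplitude * np.exp(1j * phase)
restored = np.real(np.fft.ifft2(rebuilt))
```

Since the amplitude and phase together carry all the information in the spectrum, the inverse transform recovers the original image up to floating-point error.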

As a result, the amplitude and phase data can be processed jointly in the frequency domain. The DRM consists of one FIP (Frequency Information Processing) block and two SFSC (Spatial-Frequency-Spatial Conversion) blocks. The primary goal of the SFSC blocks is to facilitate information flow between the frequency and spatial domains. The first Resblock processes the features in the spatial domain before the fast Fourier transform converts them to the frequency domain. The complex Resblock then handles the data in the frequency domain, enabling smooth information transfer between the two domains. The FIP block acts as a high-pass filter that reconstructs fine detail by boosting the image's edge contours. The fast Fourier transform converts the input image directly into the frequency domain to produce the image-level signal, while the SFSC block produces the feature-level signal. The outputs of the DRM and the CEM are combined to create the enhanced illumination component. Note that both the DRM and the CEM have 64 output channels. To reduce dimensionality, we use a

convolution layer and a

convolution layer. The architecture of Enhance-Net is shown in Figure 3.

In summary, a low-light image is first processed by the Decom-Net, which divides it into an illumination component and a reflectance component. Both components are supplied to the Enhance-Net, which yields an enhanced illumination component. The final enhanced output is the element-by-element product of the enhanced illumination component and the reflectance component, and it exhibits higher brightness and improved visual appeal.
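The recomposition step can be sketched in NumPy as follows. The shapes are assumptions made for illustration: a three-channel reflectance map, a single-channel illumination map that broadcasts across the color channels, and pixel values in [0, 1]. The constant arrays stand in for real network outputs.

```python
import numpy as np

def recompose(reflectance, illumination):
    """Element-wise product of reflectance (H, W, 3) and illumination (H, W, 1)."""
    # Clipping to [0, 1] is an assumption about the value range of the images.
    return np.clip(reflectance * illumination, 0.0, 1.0)

reflectance = np.full((2, 2, 3), 0.8)       # scene content
low_illum = np.full((2, 2, 1), 0.1)         # dark input lighting
enhanced_illum = np.full((2, 2, 1), 0.9)    # stand-in for the Enhance-Net output

dark = recompose(reflectance, low_illum)
bright = recompose(reflectance, enhanced_illum)
```

With the same reflectance, only the illumination map changes, so the brighter illumination yields a brighter image with identical scene content.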

Figure 1e displays the enhanced result. Meanwhile, the Generative Adversarial Network (GAN) generates the denoised result, complementing the enhanced image and ensuring that the final output is visually appealing while preserving important information from the original low-light image.

Generative Adversarial Network: In this GAN-based denoising framework, the goal is to train a generator that produces high-quality denoised images while being adversarially challenged by a discriminator.

The training process iteratively updates both the generator and the discriminator using a combination of losses to ensure realistic image generation and accurate denoising. The generator uses a U-Net architecture, which is well suited to image-to-image translation tasks and preserves spatial information and fine features. During training, the generator is optimized with a mixture of MSE (mean squared error) loss, BCE (Binary Cross-Entropy) loss, and SSIM (Structural Similarity Index Measure [27]) loss. The BCE loss encourages the generator to produce images that the discriminator classifies as genuine, the SSIM loss ensures structural similarity between generated and ground-truth images, and the MSE loss concentrates on pixel-level accuracy. The discriminator is built from CNNs (Convolutional Neural Networks) and is trained with the BCE loss to distinguish genuine denoised images from the generator's outputs.

Training alternates between the discriminator and the generator. In each cycle, the discriminator is updated first to better distinguish genuine images from generated ones. The generator is then trained to produce images that are more likely to mislead the discriminator. This adversarial process continues until the generator produces denoised images that are indistinguishable from the ground truth. Learning rate schedulers on both the generator and discriminator optimizers adjust the learning rates during training, which can lead to more stable and efficient convergence. Overall, this GAN-based denoising framework leverages the strengths of the U-Net architecture and adversarial training to produce denoised images that are both visually appealing and structurally accurate. The architecture of the GAN is illustrated in Figure 4.

In our GAN model, the discriminator is a deep convolutional neural network that distinguishes real images from generated ones. It consists of six convolutional layers, each followed by a leaky ReLU activation function except for the last. The leaky ReLU mitigates the vanishing gradient problem, which frequently affects deep neural networks. Using a stride of 2 and a kernel size of 4, the convolutional layers gradually increase the number of channels while reducing the spatial dimensions. This design lets the network extract hierarchical features from the input image effectively at low computational cost. Finally, a sigmoid activation function outputs the probability that the input image is real rather than generated.
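To see how a stride of 2 and a kernel size of 4 shrink the spatial dimensions, the standard convolution output-size formula can be applied layer by layer. The padding of 1, the 256-pixel input, and the use of the same stride in all six layers are assumptions made for this sketch; the text does not state them.

```python
def conv_output_size(size, kernel=4, stride=2, padding=1):
    """Spatial size after one convolution (standard floor formula)."""
    return (size + 2 * padding - kernel) // stride + 1

def discriminator_sizes(input_size, num_layers=6):
    """Spatial size after each of `num_layers` identical convolutions."""
    sizes = [input_size]
    for _ in range(num_layers):
        sizes.append(conv_output_size(sizes[-1]))
    return sizes

print(discriminator_sizes(256))  # [256, 128, 64, 32, 16, 8, 4]
```

Under these assumptions each layer halves the height and width, so six layers reduce a 256-pixel side to 4.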

The generator uses a U-Net-based architecture, chosen for its proven effectiveness in image-to-image translation tasks and its ability to preserve spatial information throughout the network. The U-Net consists of an encoding path that captures hierarchical features and a decoding path that reconstructs the output image. Skip connections between corresponding encoding and decoding layers help retain fine-grained details in the generated images, which makes the architecture well suited to producing realistic outputs in GAN applications.

3.2. Loss Function

The overall loss function has three components: the decomposition loss, the enhancement loss, and the GAN loss. They are kept separate because the three subnetworks are trained individually during the training phase.

Decomposition loss: The decomposition loss consists of a content loss and a perceptual loss. As the content loss, we use the L1 loss.

In all the formulas, the index $i$ denotes the $i$th sample used to compute the loss, and $N$ denotes the total number of samples. The losses involve the illumination and reflectance components of both the low-light image and the normal-light image. The perceptual loss is computed from the features of a pre-trained VGG16 model. In contrast to other methods, we use features extracted before the activation layer, as shown below.

The index $j$ denotes the $j$th layer of the pre-trained VGG16 model; by default, $j = 4$. In this formula, $C$, $H$, and $W$ denote the number of channels, the height, and the width, respectively (for an RGB input image, $C = 3$, and $H$ and $W$ are measured in pixels). Their product for the $j$th layer gives the total number of elements in that layer's feature map and is used to normalize the perceptual loss. The decomposition loss is expressed as follows:
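The two ingredients described above, an L1 content term and a perceptual term normalized by the feature-map size, can be sketched in NumPy. This is only an illustration under stated assumptions: the feature arrays stand in for pre-activation VGG16 features (which are not computed here), the perceptual distance is assumed to be a squared difference, and the function names are hypothetical.

```python
import numpy as np

def l1_content_loss(pred, target):
    """Mean absolute error over all samples and elements."""
    return np.mean(np.abs(pred - target))

def perceptual_loss(feat_pred, feat_target):
    """Squared feature distance per sample, divided by C*H*W, averaged over N.

    Inputs have shape (N, C, H, W) and stand in for features taken from one
    layer of a pre-trained network. The squared distance is an assumption.
    """
    n, c, h, w = feat_pred.shape
    per_sample = np.sum((feat_pred - feat_target) ** 2, axis=(1, 2, 3)) / (c * h * w)
    return np.mean(per_sample)

rng = np.random.default_rng(0)
features_a = rng.standard_normal((2, 8, 4, 4))
features_b = features_a + 0.5   # constant offset of 0.5 in every element
```

Dividing by the number of feature-map elements makes the perceptual term independent of the layer's size, so a uniform offset of 0.5 gives a loss of 0.25 regardless of the feature-map shape.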

Enhancement loss: The enhancement loss comprises a content loss, a perceptual loss, and a detail preservation loss. We define the content loss and the perceptual loss in the same way as for the decomposition loss, as follows:

Furthermore, to facilitate the recovery of additional details, we incorporate the frequency loss from R2RNet [8] into Enhance-Net, leveraging the frequency information used within the network. The Wasserstein distance is used to reduce the disparities between the real and imaginary parts of enhanced and low-light images. The expression for the frequency loss is as follows:

In this equation, $N$ represents the total number of samples, and $k$ indexes the dimension in the frequency domain, which can be either real or imaginary. The symbol inf denotes the infimum (greatest lower bound), taken over joint distributions composed of frequency components from low-light and normal-light images. The expectation is that of the distance between paired frequency components under such a joint distribution. The enhancement loss is formulated as

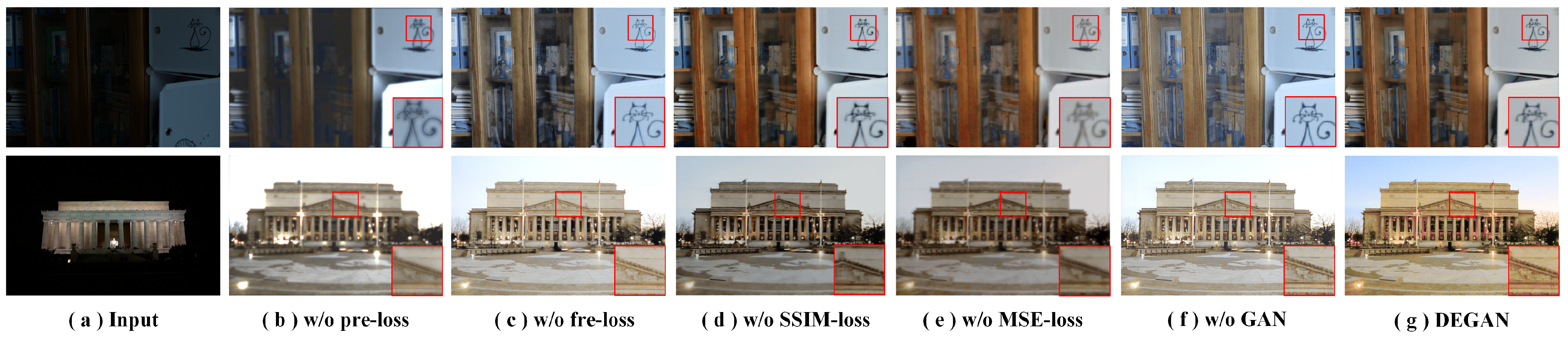
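To make the Wasserstein-based frequency term more concrete, the sketch below uses a known property of one-dimensional empirical distributions with equal sample counts: the infimum over joint distributions is attained by matching sorted samples. Treating the flattened real and imaginary parts of the 2-D FFT as such samples is an assumption made for illustration and is not necessarily how R2RNet or DEGANet computes the loss. The function names are hypothetical.

```python
import numpy as np

def wasserstein_1d(u, v):
    """W1 distance between two equal-sized 1-D samples (sorted matching)."""
    return np.mean(np.abs(np.sort(u) - np.sort(v)))

def frequency_loss(pred, target):
    """Sum of W1 distances over the real and imaginary parts of the 2-D FFT."""
    fp, ft = np.fft.fft2(pred), np.fft.fft2(target)
    return (wasserstein_1d(fp.real.ravel(), ft.real.ravel())
            + wasserstein_1d(fp.imag.ravel(), ft.imag.ravel()))

rng = np.random.default_rng(0)
target = rng.random((16, 16))
pred = 0.5 * target               # a uniformly darker copy of the target
```

The loss is zero when the two images are identical and positive for the darker copy, since scaling the image scales every frequency component.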

GAN loss: The GAN loss has two components that are optimized together. The discriminator loss is responsible for accurately classifying real and fake images, and the generator loss focuses on producing images that closely resemble real ones while maintaining structural and pixel-level accuracy. By balancing these two components, the GAN generates high-quality, realistic denoised images.

The loss for classifying real images and the loss for classifying fake images make up the discriminator loss. For both portions, we use the binary cross-entropy loss (BCELoss), as follows:

The terms of this equation are the label for real images, the generated images, the label for fake images, and the discriminator's classification result for the generated image.
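A two-part binary cross-entropy of this kind can be sketched in NumPy. The labels 1 for real and 0 for fake, the plain sum of the two parts, and the function names are assumptions for illustration; `d_real` and `d_fake` stand in for the discriminator's sigmoid outputs.

```python
import numpy as np

def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between probabilities and 0/1 labels."""
    pred = np.clip(pred, eps, 1.0 - eps)   # avoid log(0)
    return -np.mean(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))

def discriminator_loss(d_real, d_fake):
    """BCE on real images (label 1) plus BCE on generated images (label 0)."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))

# A discriminator that separates the two classes well versus one that cannot.
confident = discriminator_loss(np.array([0.9, 0.95]), np.array([0.1, 0.05]))
fooled = discriminator_loss(np.array([0.5, 0.5]), np.array([0.5, 0.5]))
```

A discriminator that outputs 0.5 for everything incurs a loss of 2 ln 2, which is the level reached when real and generated images cannot be told apart.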

The generator loss has three components: the SSIM loss, the MSE loss, and the BCE loss. The SSIM loss keeps the output image structurally similar to the reference image, whereas the MSE loss maintains pixel-level accuracy. The BCE loss drives the generator to produce images that the discriminator classifies as real. The specific formulas for the three generator loss components are as follows:

SSIM loss (Structural Similarity Index Measure Loss):

Here, x and y denote the generated and reference images, respectively. SSIM measures the structural similarity between the two images.

MSE loss (Mean Squared Error Loss):

N is the total number of pixels, and the loss compares the pixel values of the reference image with those of the generated image. The MSE loss is the mean squared error between the output image and the reference image.

BCE loss (Binary Cross-Entropy Loss):

Here, the target labels (real or fake) are represented by t and the predicted probabilities of the discriminator are represented by y. The BCE loss calculates the binary cross-entropy between the target labels and the predicted probabilities. We formulate the generator loss as follows:

In this equation, the SSIM loss, the MSE loss, and the BCE loss each have their own weight.
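A weighted combination of the three generator terms can be sketched as follows. Several choices here are assumptions rather than the method described above: the SSIM loss is taken as 1 minus SSIM, SSIM is computed once over the whole image instead of with the sliding window of [27], and the weights `w_ssim`, `w_mse`, and `w_bce` are placeholder values.

```python
import numpy as np

def global_ssim(x, y, data_range=1.0):
    """SSIM computed once over the whole image (no sliding window)."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

def generator_loss(fake, real, d_fake, w_ssim=1.0, w_mse=1.0, w_bce=1.0, eps=1e-7):
    """Weighted sum of SSIM, MSE, and BCE terms (weights are placeholders)."""
    ssim_loss = 1.0 - global_ssim(fake, real)
    mse_loss = np.mean((fake - real) ** 2)
    # The generator wants the discriminator to output "real" (label 1).
    bce_loss = -np.mean(np.log(np.clip(d_fake, eps, 1.0)))
    return w_ssim * ssim_loss + w_mse * mse_loss + w_bce * bce_loss

rng = np.random.default_rng(0)
real = rng.random((16, 16))
noisy = np.clip(real + 0.2 * rng.standard_normal((16, 16)), 0.0, 1.0)
```

For the same discriminator output, a noisy image incurs a larger loss than a perfect reconstruction, because both the SSIM and MSE terms grow with the deviation from the reference.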