A Workpiece-Dense Scene Object Detection Method Based on Improved YOLOv5

Abstract

1. Introduction

2. Workpiece Detection Algorithm Based on the Improved YOLOv5

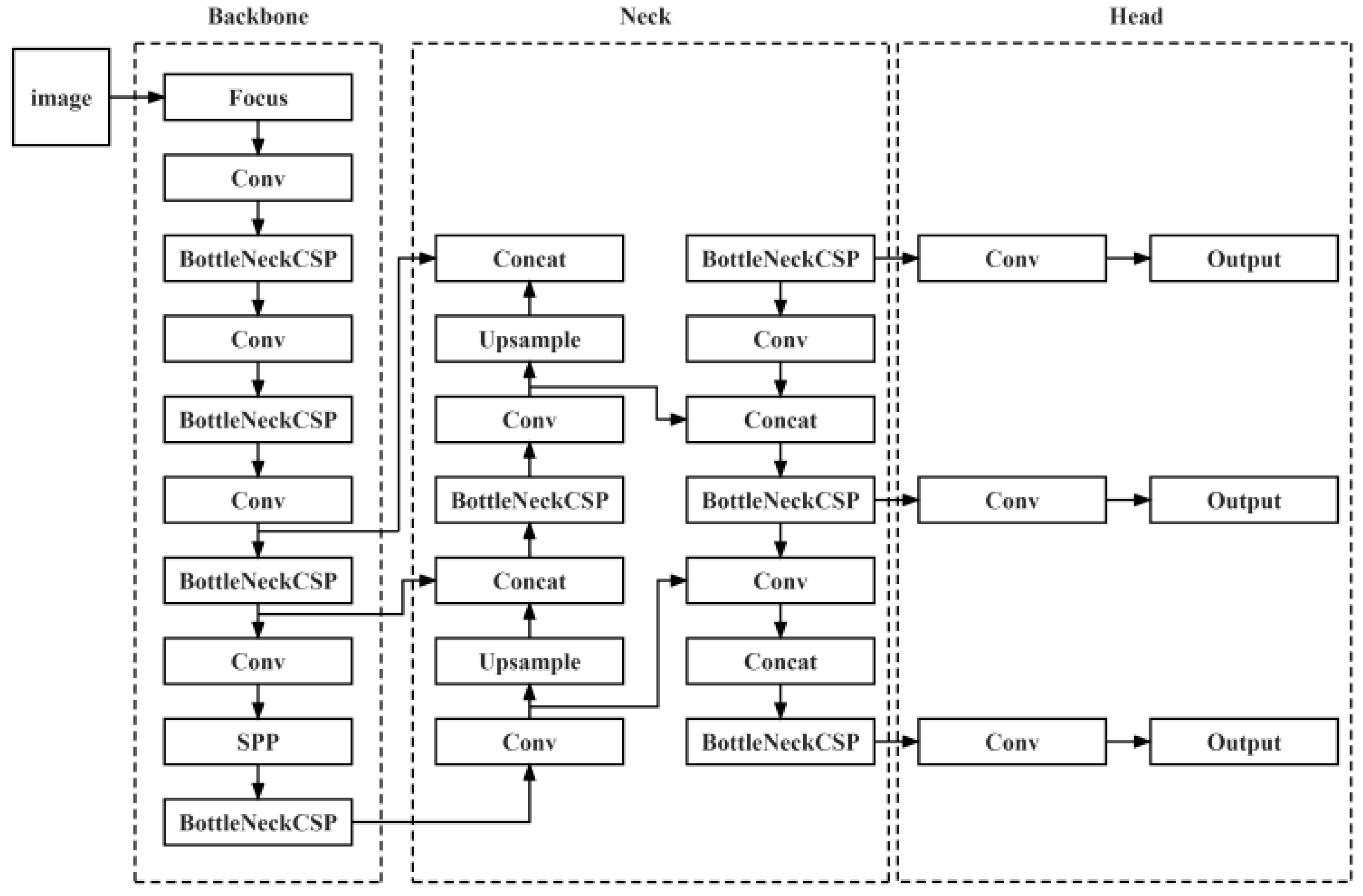

2.1. YOLOv5 Object Detection Algorithm

2.2. Improvements to the YOLOv5 Model

- The coordinate attention (CA) mechanism is integrated into the backbone feature extraction network so that the network focuses on important features, which improves its feature extraction capability.

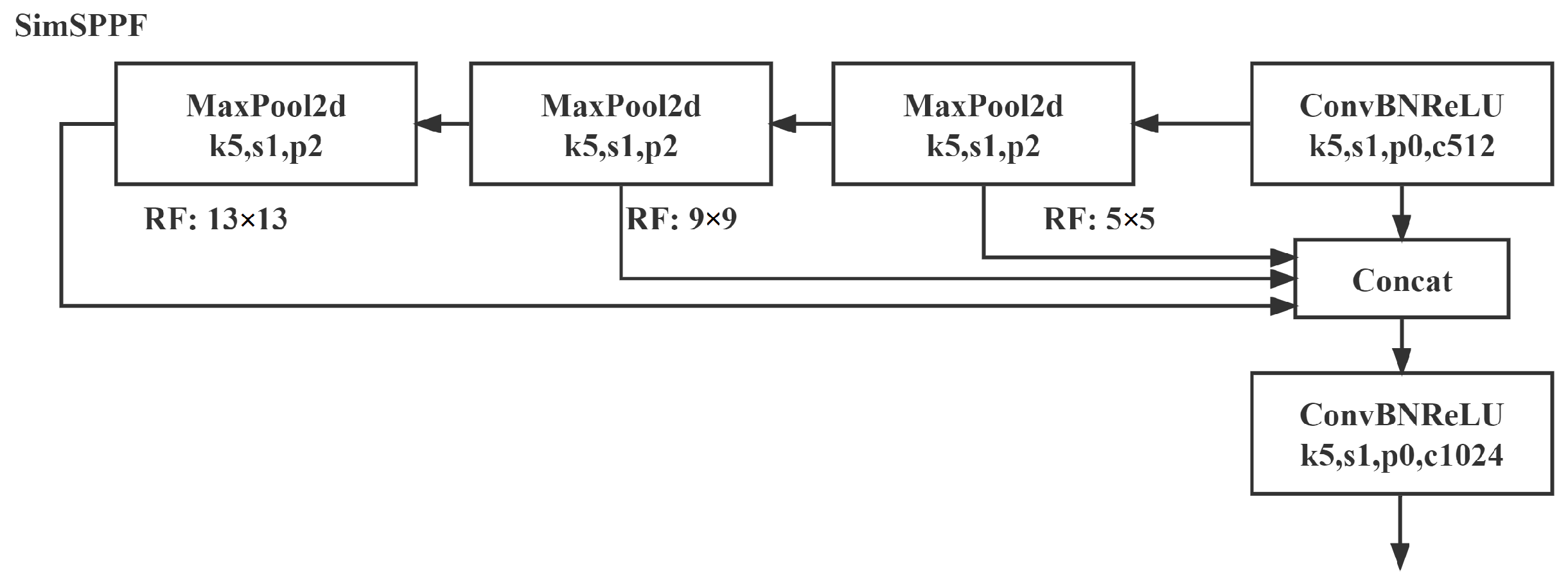

- The SPP module of the original model is replaced by SimSPPF, which reduces computation and increases running speed.

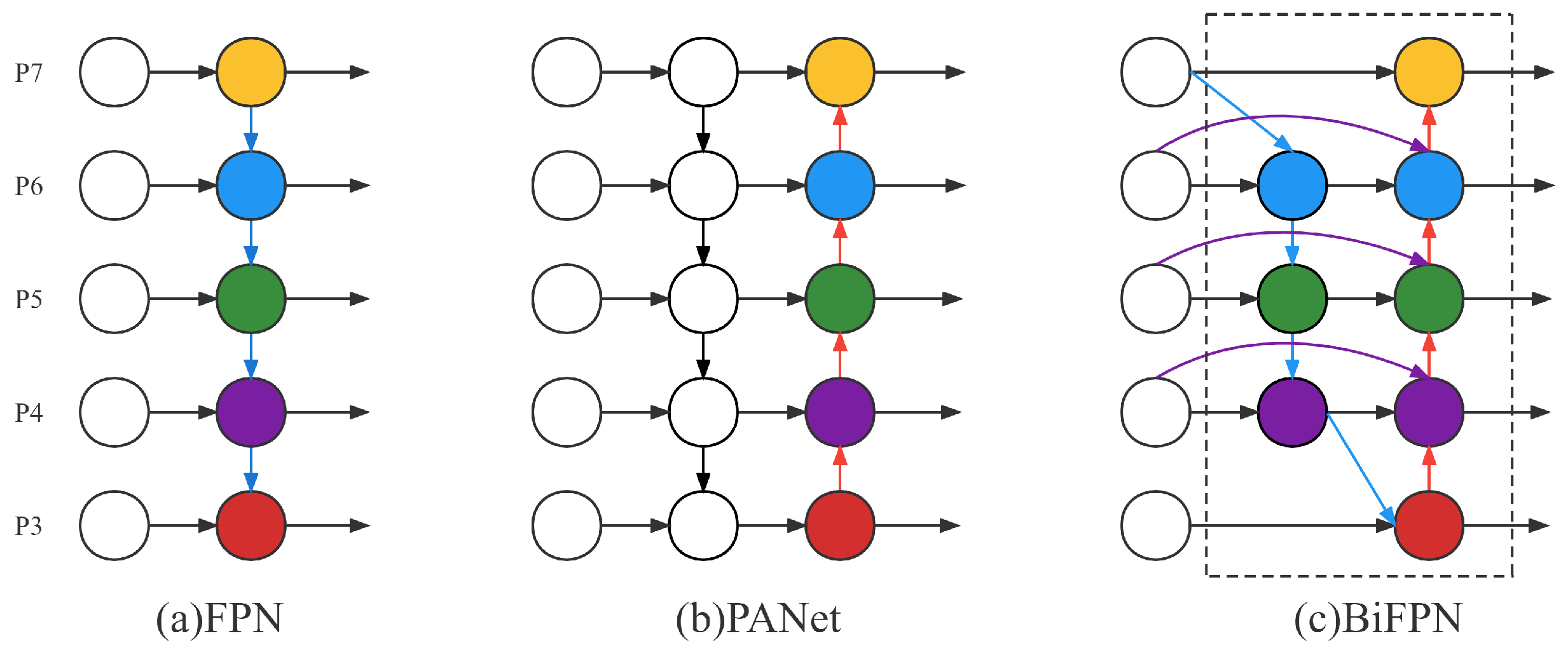

- A BiFPN structure is used for cross-layer feature fusion. It combines semantic information and location information more fully, which enhances the feature fusion capability of the network.

- The CIoU loss function of the original model is replaced by the SIoU loss function, which explicitly accounts for the direction between the ground-truth box and the predicted box and thereby improves the convergence performance of the model.

2.2.1. Coordinate Attention Mechanism
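Coordinate attention (Hou et al.) replaces the single global pooling of a squeeze-and-excitation block with two one-dimensional poolings, one along the height and one along the width, so the resulting attention weights keep positional information along each axis. The NumPy sketch below illustrates the forward pass on one feature map of shape (C, H, W). It is a simplified illustration under stated assumptions: the 1×1 convolutions are written as matrix products with random, untrained weights; batch normalization is omitted; and the function name `coordinate_attention`, the reduction ratio `r=32` and the minimum hidden width of 8 are hypothetical choices, not the configuration used in this paper.

```python
import numpy as np


def h_swish(x: np.ndarray) -> np.ndarray:
    """Hard-swish activation: x * ReLU6(x + 3) / 6."""
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def coordinate_attention(x: np.ndarray, r: int = 32, seed: int = 0) -> np.ndarray:
    """Simplified coordinate-attention forward pass for one (C, H, W) map.

    The 1x1 convolutions are modelled as matrix products with random
    (hypothetical, untrained) weights; batch normalization is omitted.
    """
    c, h, w = x.shape
    mid = max(8, c // r)                              # reduced channel count
    rng = np.random.default_rng(seed)
    w_shared = rng.standard_normal((mid, c)) * 0.1    # shared 1x1 conv F1
    w_h = rng.standard_normal((c, mid)) * 0.1         # 1x1 conv for the H branch
    w_w = rng.standard_normal((c, mid)) * 0.1         # 1x1 conv for the W branch

    # 1) Directional pooling: average over width -> (C, H), over height -> (C, W)
    z_h = x.mean(axis=2)
    z_w = x.mean(axis=1)

    # 2) Concatenate along the spatial axis and apply the shared transform
    f = h_swish(w_shared @ np.concatenate([z_h, z_w], axis=1))  # (mid, H + W)

    # 3) Split back and produce per-axis attention weights in (0, 1)
    f_h, f_w = f[:, :h], f[:, h:]
    g_h = sigmoid(w_h @ f_h)   # (C, H)
    g_w = sigmoid(w_w @ f_w)   # (C, W)

    # 4) Reweight every position by its row weight and its column weight
    return x * g_h[:, :, None] * g_w[:, None, :]


feat = np.random.default_rng(1).standard_normal((32, 20, 20))
out = coordinate_attention(feat)
print(out.shape)  # (32, 20, 20)
```

Because each gate lies in (0, 1), the module only rescales features; the row and column gates together let it emphasize a specific (row, column) location, which is the property the text relies on for small, dense workpieces.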

2.2.2. Simple and Fast Spatial Pyramid Pooling (SimSPPF)
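SPPF-style modules replace the parallel 5×5, 9×9 and 13×13 max-pooling branches of SPP with three cascaded 5×5 max-poolings (SimSPPF, as we understand it from YOLOv6, additionally uses ReLU instead of SiLU in its convolution blocks). With stride 1 and padding that can never win a max, two cascaded 5×5 poolings cover the same window as one 9×9 pooling, and three cover a 13×13 window, so the concatenated outputs are identical while the large kernels are avoided. The NumPy sketch below checks this equivalence on a single 2-D map; the convolutions before and after the pooling are omitted, and the function names are hypothetical. It assumes NumPy 1.20 or later for `sliding_window_view`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def max_pool_same(x: np.ndarray, k: int) -> np.ndarray:
    """k x k max pooling, stride 1, padded with -inf so the output keeps x's shape."""
    p = k // 2
    xp = np.pad(x, p, mode="constant", constant_values=-np.inf)
    return sliding_window_view(xp, (k, k)).max(axis=(-2, -1))


def spp_branches(x: np.ndarray) -> list:
    """SPP: identity plus parallel 5x5, 9x9 and 13x13 poolings."""
    return [x] + [max_pool_same(x, k) for k in (5, 9, 13)]


def sppf_branches(x: np.ndarray) -> list:
    """SPPF/SimSPPF: identity plus three cascaded 5x5 poolings."""
    y1 = max_pool_same(x, 5)
    y2 = max_pool_same(y1, 5)   # same window as one 9x9 pooling
    y3 = max_pool_same(y2, 5)   # same window as one 13x13 pooling
    return [x, y1, y2, y3]


x = np.random.default_rng(0).standard_normal((20, 20))
same = all(np.array_equal(a, b) for a, b in zip(spp_branches(x), sppf_branches(x)))
print(same)  # True
```

The saving comes from reusing each intermediate result: the cascade evaluates three 5×5 windows instead of 5×5, 9×9 and 13×13 windows, while the branches fed to the concatenation are unchanged.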

2.2.3. Bidirectional Feature Pyramid Network (BiFPN)
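A central ingredient of BiFPN (Tan et al., EfficientDet) is weighted feature fusion: each input to a fusion node receives a learnable non-negative scalar weight, and the "fast normalized fusion" computes O = Σ_i w_i·I_i / (ε + Σ_j w_j), with ReLU keeping every w_i ≥ 0. The NumPy sketch below shows only this fusion step for inputs already resized to a common shape; resampling, the convolution that follows each fusion, and the cross-layer topology are omitted, and the weight values are hypothetical rather than learned.

```python
import numpy as np


def fast_normalized_fusion(inputs, weights, eps: float = 1e-4) -> np.ndarray:
    """BiFPN-style fast normalized fusion of same-shaped feature maps.

    `weights` stand in for learnable scalars: ReLU keeps them non-negative,
    and dividing by their sum (plus eps) keeps the result a weighted average.
    """
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)  # ReLU on the weights
    w = w / (w.sum() + eps)                                 # normalize
    return sum(wi * x for wi, x in zip(w, inputs))


# Example: a top-down node fusing a same-level feature with an upsampled
# feature from the level above (both assumed already resized to 4x4).
p4_in = np.ones((4, 4))
p5_up = np.full((4, 4), 3.0)
p4_td = fast_normalized_fusion([p4_in, p5_up], weights=[1.0, 1.0])
print(round(float(p4_td[0, 0]), 3))  # 2.0
```

Compared with a softmax over the weights, this normalization avoids exponentials, which is why EfficientDet describes it as the faster option.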

2.2.4. SIoU Loss Function

- Angle cost: The model first drives the predicted box toward the X or Y axis, whichever is closer, and then lets it approach the ground-truth box along that axis. To achieve this, the convergence process first tries to minimize the angle, so an angle cost is introduced and defined.

- Distance cost: The angle cost is incorporated into the distance cost, which is redefined accordingly.

- Shape cost: A shape cost based on the width and height differences between the two boxes is defined.

- IoU cost: The intersection-over-union (IoU) cost is defined. Finally, the SIoU loss function is defined.
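The four terms listed above can be sketched in plain Python for one pair of boxes in (cx, cy, w, h) format. The formulation follows Gevorgyan's SIoU paper as we understand it and mirrors widely used YOLOv5 community implementations: angle cost Λ = sin(2α) (equivalently 1 − 2·sin²(α − π/4)), with sin α = |Δcy|/σ and σ the distance between box centers; distance cost Δ = Σ over t ∈ {x, y} of (1 − e^(−γ·ρ_t)), with γ = 2 − Λ and ρ_t the squared center offset normalized by the width or height of the smallest enclosing box; shape cost Ω = Σ over t ∈ {w, h} of (1 − e^(−ω_t))^θ; and L = 1 − IoU + (Δ + Ω)/2. The shape exponent θ = 4 and the epsilon are assumptions, not values reported in this paper.

```python
import math


def siou_loss(pred, target, theta: float = 4.0, eps: float = 1e-7) -> float:
    """SIoU loss for two boxes in (cx, cy, w, h) format (sketch)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = target

    # IoU term
    ix1 = max(px - pw / 2, tx - tw / 2)
    iy1 = max(py - ph / 2, ty - th / 2)
    ix2 = min(px + pw / 2, tx + tw / 2)
    iy2 = min(py + ph / 2, ty + th / 2)
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Width and height of the smallest enclosing box
    cw = max(px + pw / 2, tx + tw / 2) - min(px - pw / 2, tx - tw / 2)
    ch = max(py + ph / 2, ty + th / 2) - min(py - ph / 2, ty - th / 2)

    # Angle cost: Lambda = sin(2*alpha); alpha is the angle between the line
    # joining the centers and the x-axis (using the y-axis gives the same value)
    dx, dy = tx - px, ty - py
    sigma = math.hypot(dx, dy) + eps
    alpha = math.asin(min(abs(dy) / sigma, 1.0))
    angle_cost = math.sin(2 * alpha)

    # Distance cost, modulated by the angle cost through gamma = 2 - Lambda
    gamma = 2.0 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1 - math.exp(-gamma * rho_x)) + (1 - math.exp(-gamma * rho_y))

    # Shape cost from relative width and height differences
    omega_w = abs(pw - tw) / max(pw, tw)
    omega_h = abs(ph - th) / max(ph, th)
    shape_cost = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (distance_cost + shape_cost) / 2.0


print(siou_loss((0.0, 0.0, 1.0, 1.0), (5.0, 0.0, 1.0, 1.0)) > 1.0)  # True
```

For non-overlapping boxes the IoU term alone saturates at 1, so the distance and shape terms are what still provide a gradient signal in that case.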

3. Experimental Research and Result Analysis

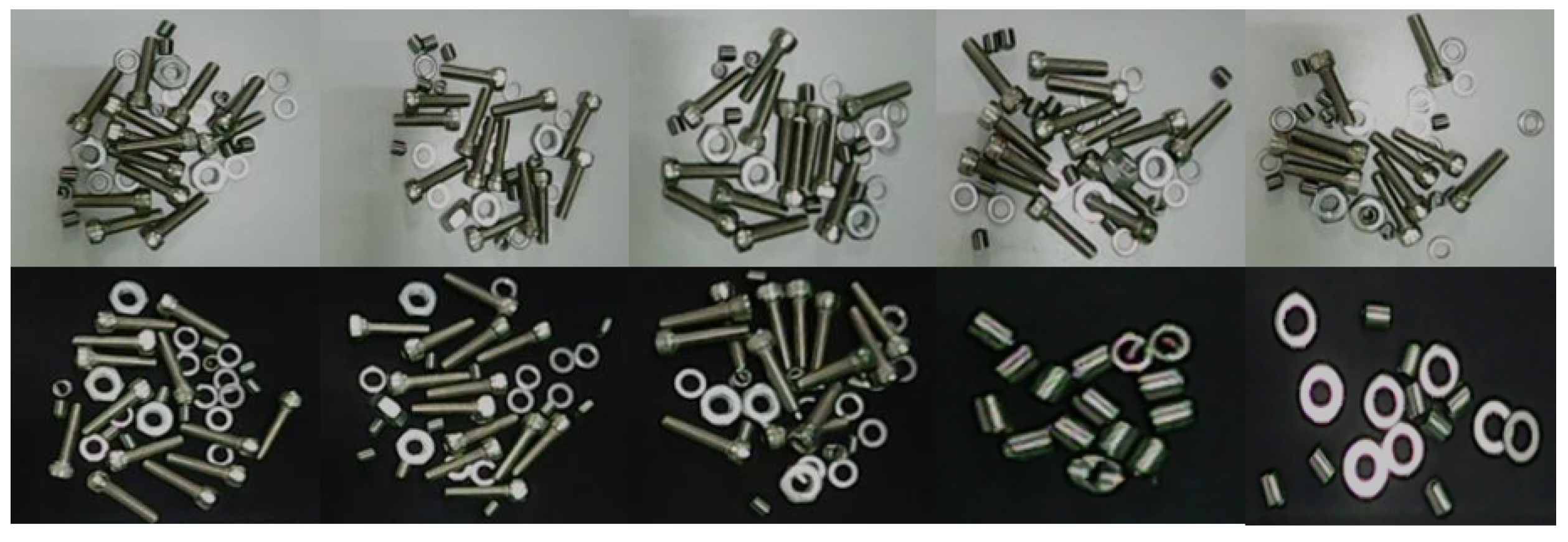

3.1. Workpiece Dataset Establishment

3.1.1. Workpiece Data Acquisition

3.1.2. Data Augmentation

3.2. Experimental Environment and Evaluation Metrics

3.2.1. Setting the Experimental Environment and Parameters

3.2.2. Evaluation Metrics

3.3. Experimental Research

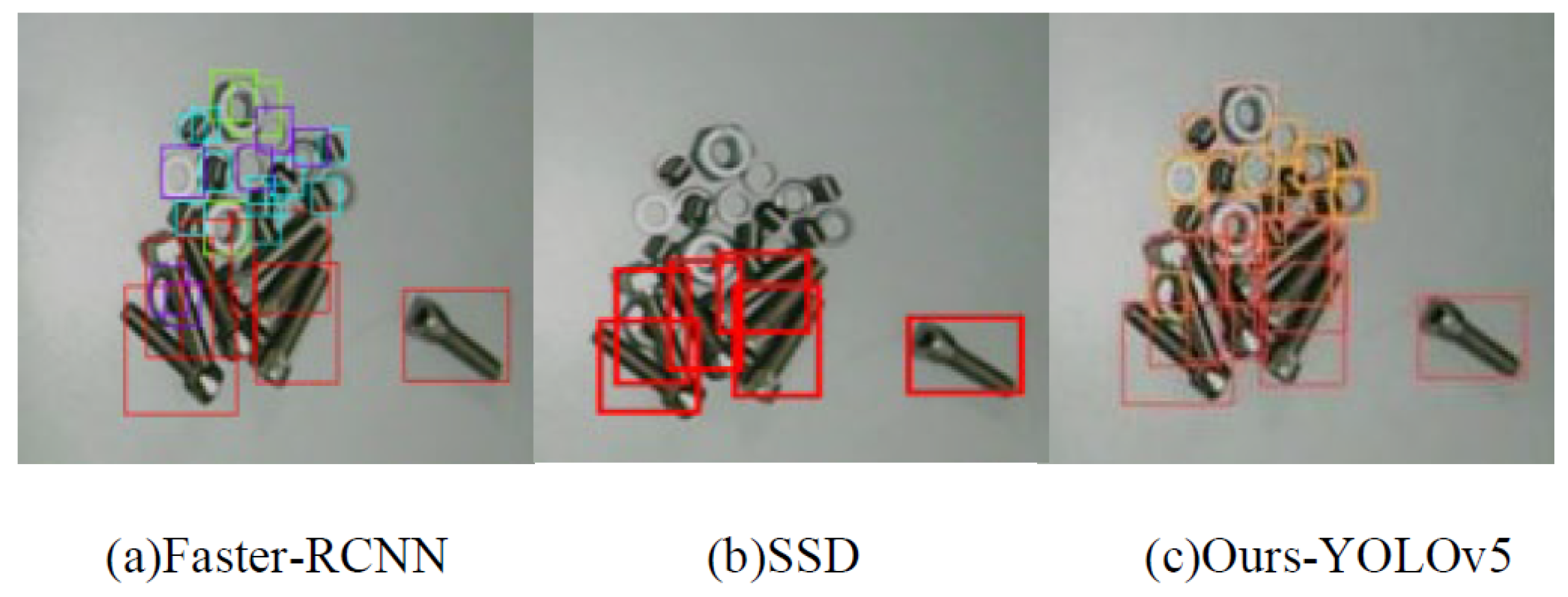

3.3.1. Comparison Experiment

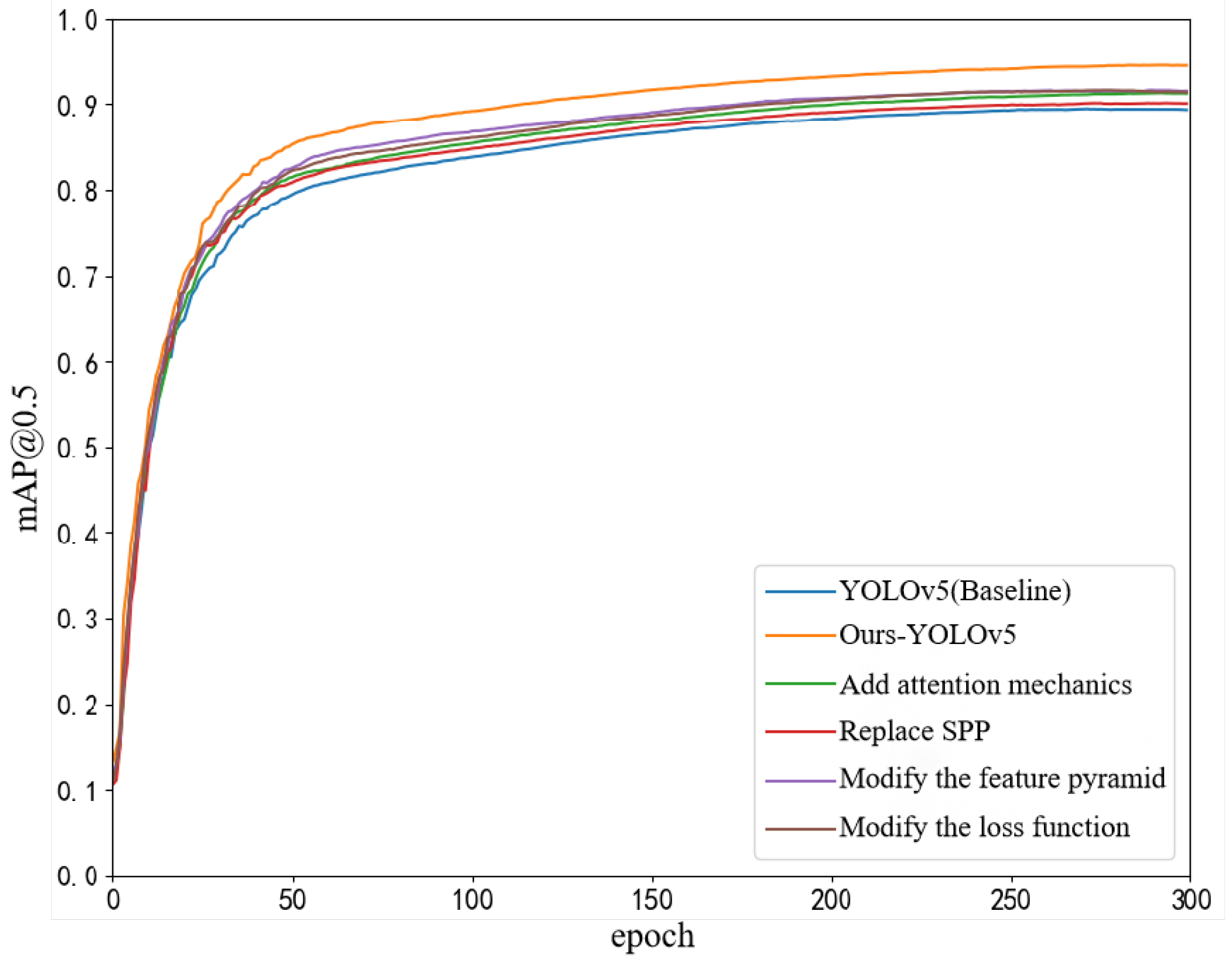

3.3.2. Ablation Experiment

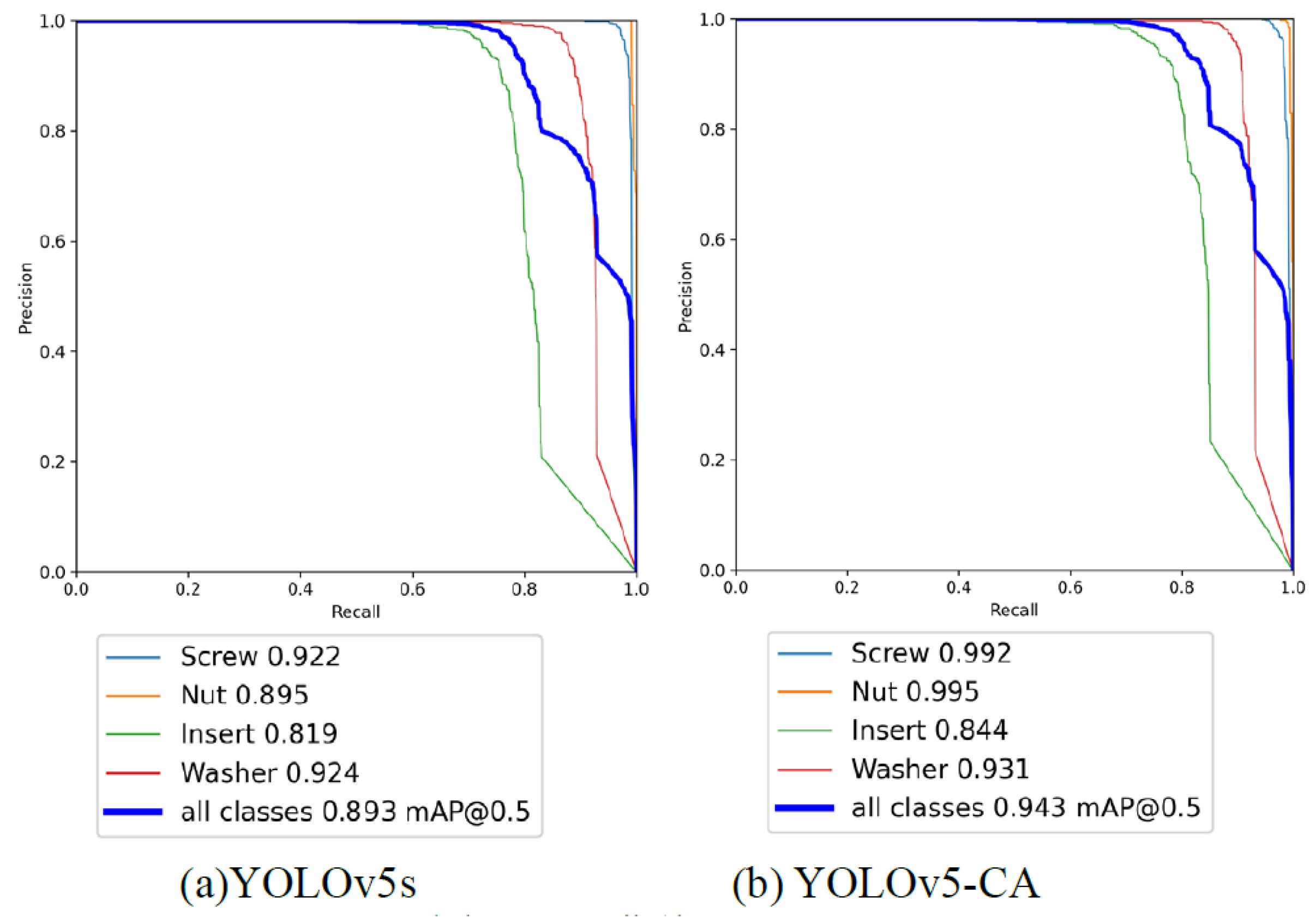

- Test analysis of the model with the added attention mechanism: In this paper, the CA module is added to the backbone network after feature extraction, so that the features retain clear low-level contour and coordinate information while also containing rich high-level semantic information. This preserves the integrity of the feature information and improves the expressive power of the feature map. According to Table 2, the mAP@0.5 of the model with the attention mechanism is higher than that of the original model, which indicates that adding the attention mechanism after the backbone network effectively enhances the feature information. Figure 14 shows the precision–recall curves of the model with the attention mechanism and of the original YOLOv5s model on the self-built dataset. The area enclosed by the blue YOLOv5s curve and the axes in Figure 14a (left) is smaller than the area enclosed by the blue YOLOv5s-CA curve and the axes in Figure 14b (right), indicating that the model with the attention mechanism performs better than YOLOv5s on the self-built dataset. Figure 15 compares the detection results of the proposed model with the attention mechanism and the YOLOv5s model. Some wire sleeves are very small and densely packed; YOLOv5s fails to detect some of these targets and produces some false detections, whereas YOLOv5s-CA detects them successfully, indicating that the attention mechanism makes the detection of small and dense targets more accurate.
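The area under the precision–recall curve compared above is what average precision (AP) summarizes; mAP@0.5 is the mean of the per-class AP values when a detection counts as a true positive at IoU ≥ 0.5. The plain-Python sketch below computes AP for one class from detections that are assumed to be already matched to ground truth, using all-point interpolation in the style of the PASCAL VOC protocol. The exact interpolation behind the numbers in Table 2 is not stated in this section, so this is an illustration rather than a reproduction; the function name is hypothetical.

```python
def average_precision(scores, is_tp, num_gt):
    """All-point interpolated AP for one class.

    scores: confidence of each detection.
    is_tp:  whether each detection matched a ground-truth box at IoU >= 0.5
            (the matching step is assumed to be done already).
    num_gt: number of ground-truth boxes of this class.
    """
    # Walk detections from most to least confident, accumulating TP/FP counts
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))

    # Add sentinels, then make precision monotonically non-increasing
    r = [0.0] + recalls + [1.0]
    p = [0.0] + precisions + [0.0]
    for k in range(len(p) - 2, -1, -1):
        p[k] = max(p[k], p[k + 1])

    # Sum rectangle areas; steps where recall does not change add zero
    return sum((r[k + 1] - r[k]) * p[k + 1] for k in range(len(r) - 1))


ap = average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4)
print(ap)  # 0.625
```

mAP@0.5 would then be the mean of this value over all workpiece classes.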

- Test analysis of the model with the improved spatial pyramid pooling: In this paper, the SPP module in YOLOv5 is replaced by SimSPPF, which, like SPP, enlarges the receptive field but uses a cascade of small pooling kernels instead of large pooling kernels. Table 3 compares SPP and SimSPPF: with SimSPPF, both the number of parameters and the amount of computation decrease. According to Table 2, the model with the improved spatial pyramid pooling achieves a higher mAP@0.5 than the original model. The YOLOv5 and YOLOv5+SimSPPF models were each run for 50 inference passes in a comparison test on 100 images. The comparison metric was inference time, which reflects how fast the image-processing module performs inference. Figure 16 compares the inference-time curves: the model with the improved spatial pyramid pooling runs inference faster than the original model. This shows that SimSPPF retains the function of the original module while reducing computation, which further improves running speed and efficiency.
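An inference-time comparison of this kind can be approximated with a simple timing harness: run a few untimed warm-up passes, then time repeated passes over a fixed image set and report the mean per-image latency. The sketch below uses only the standard library; `mean_latency_ms` and `toy_model` are hypothetical stand-ins for a real model's forward pass, and the defaults (50 repetitions, warm-up of 5 images) follow the protocol above only loosely, since its exact procedure is not fully specified. When timing on a GPU, the device must also be synchronized before reading the clock (for example with `torch.cuda.synchronize()` in PyTorch), which is omitted here.

```python
import statistics
import time
from typing import Callable, Sequence


def mean_latency_ms(infer: Callable, images: Sequence, repeats: int = 50,
                    warmup: int = 5) -> float:
    """Mean per-image inference time in milliseconds.

    `infer` is any callable that processes one image (hypothetical stand-in).
    """
    for img in images[:warmup]:          # warm-up passes are not timed
        infer(img)
    per_image = []
    for _ in range(repeats):
        start = time.perf_counter()
        for img in images:
            infer(img)
        elapsed = time.perf_counter() - start
        per_image.append(elapsed / len(images) * 1000.0)
    return statistics.mean(per_image)


# Toy stand-in for a model; in practice this would wrap a real forward pass.
def toy_model(img):
    return sum(v * v for v in img)


images = [list(range(2000))] * 100
latency = mean_latency_ms(toy_model, images, repeats=5)
print(f"{latency:.3f} ms per image")
```

Averaging over repeated passes, rather than timing a single pass, reduces the influence of one-off effects such as caching or background load on the comparison.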

- Test analysis of the model with the improved feature pyramid: To verify the performance of the BiFPN added in this paper, the number of parameters, weight size, and mAP@0.5 of the mainstream feature pyramid networks FPN, PANet, and BiFPN are compared; the results are shown in Table 4. FPN, which has only a single top-down path, gives relatively low detection accuracy. Adding a bottom-up path to FPN, as PANet does, improves detection performance, and adding cross-layer connections to PANet, as BiFPN does, gives the best detection performance, raising mAP@0.5 from 89.3% for PANet to 94.3% in Table 4. At the same time, the number of parameters and the weight size of BiFPN increase only slightly, which shows that it enhances the information extraction ability of the network, so that low-level location information is better combined with high-level semantic information. Figure 17 compares the detection results of the improved feature pyramid model with those of the original model: the improved model detects small targets better and produces fewer false detections. This indicates that BiFPN extracts position information more fully, reduces the loss of feature information, and improves the ability of the network to detect small targets.

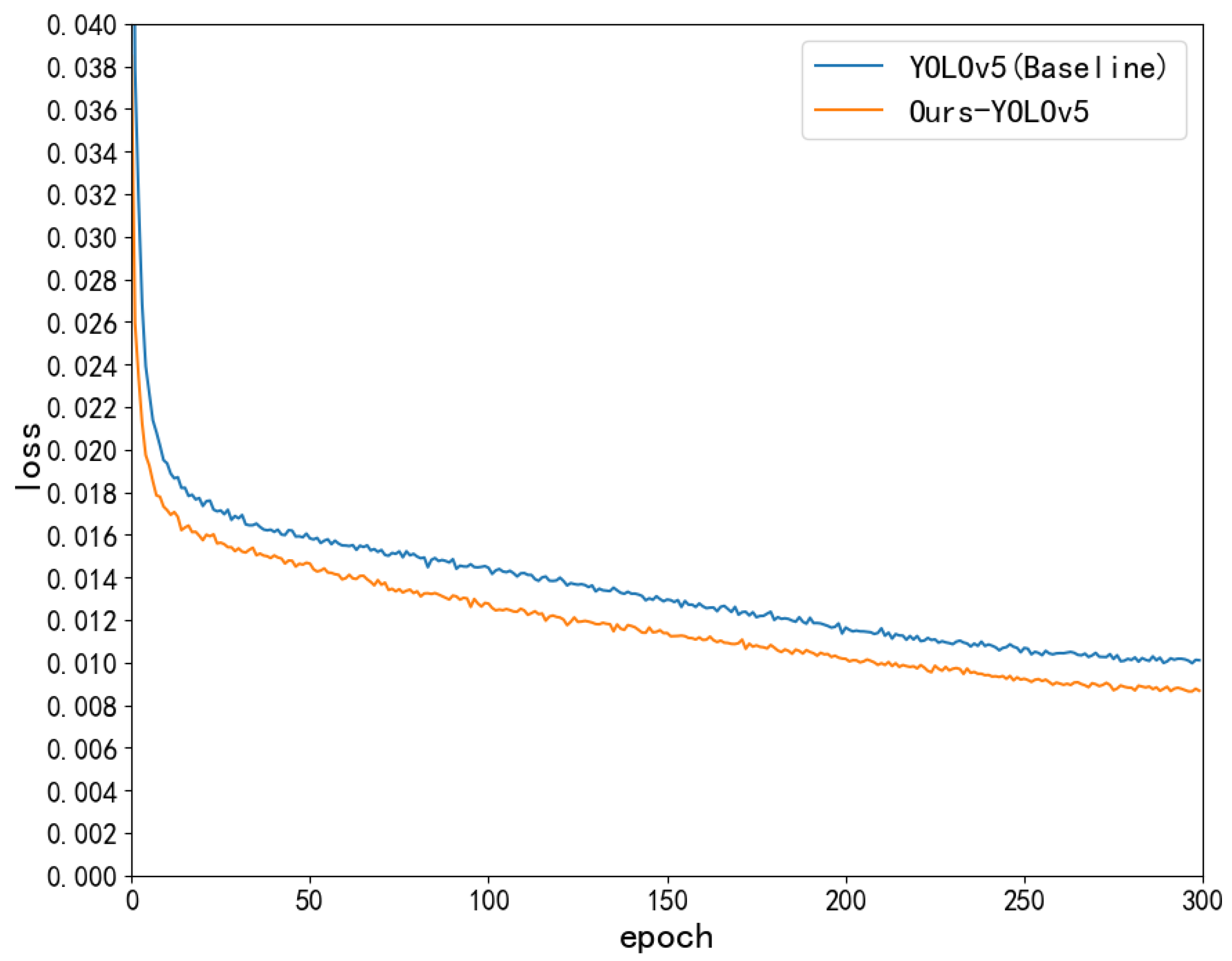

- Test analysis of the model with the improved loss function: In this paper, the SIoU loss function replaces the CIoU loss function of the original model. According to Table 2, using the SIoU loss function improves mAP@0.5 compared with using CIoU. Figure 18 compares the loss curves before and after the loss function is changed: after the change, the model converges faster, the loss value decreases steadily, and the convergence ability is enhanced. This indicates that replacing CIoU with SIoU addresses the direction mismatch between the ground-truth box and the predicted box and improves the convergence performance of the model.
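For comparison with the SIoU terms, the CIoU loss of the original YOLOv5 (Zheng et al.) adds a normalized center-distance penalty and an aspect-ratio consistency term to 1 − IoU: L_CIoU = 1 − IoU + ρ²(b, b^gt)/c² + α·v, with v = (4/π²)·(arctan(w^gt/h^gt) − arctan(w/h))² and α = v/((1 − IoU) + v), where ρ is the distance between box centers and c is the diagonal of the smallest enclosing box. None of these terms explicitly models the direction of the center offset, which is the gap the SIoU angle cost is meant to address. A plain-Python sketch for one pair of (cx, cy, w, h) boxes follows; the epsilon is an assumption.

```python
import math


def ciou_loss(pred, target, eps: float = 1e-7) -> float:
    """CIoU loss for two boxes in (cx, cy, w, h) format (sketch)."""
    px, py, pw, ph = pred
    tx, ty, tw, th = target

    # IoU term
    ix1 = max(px - pw / 2, tx - tw / 2)
    iy1 = max(py - ph / 2, ty - th / 2)
    ix2 = min(px + pw / 2, tx + tw / 2)
    iy2 = min(py + ph / 2, ty + th / 2)
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    iou = inter / (pw * ph + tw * th - inter + eps)

    # Squared diagonal of the smallest enclosing box and squared center distance
    cw = max(px + pw / 2, tx + tw / 2) - min(px - pw / 2, tx - tw / 2)
    ch = max(py + ph / 2, ty + th / 2) - min(py - ph / 2, ty - th / 2)
    c2 = cw ** 2 + ch ** 2 + eps
    rho2 = (tx - px) ** 2 + (ty - py) ** 2

    # Aspect-ratio consistency term and its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan(tw / th) - math.atan(pw / ph)) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1.0 - iou + rho2 / c2 + alpha * v


# Same offset length along x and along y gives the same loss for square boxes
print(ciou_loss((0, 0, 2, 2), (1, 0, 2, 2)) == ciou_loss((0, 0, 2, 2), (0, 1, 2, 2)))  # True
```

The final line illustrates the direction-agnostic behavior: shifting the target one unit horizontally or vertically yields an identical CIoU loss.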

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Zhu, Y. Development of Robotic Arm Sorting System Based on Deep Learning Object Detection. Master’s Thesis, Zhejiang University, Hangzhou, China, 2019.

- Dang, H.; Hou, J.; Qiang, H.; Zhang, C. SCARA robot based on visual guiding automatic assembly system. J. Electron. Technol. Appl. 2017, 43, 21–24.

- Liu, J.; Zhong, P.; Liu, M. Research on Workpiece Recognition and Grasping Method Based on Improved SURF_FREAK Algorithm. Mach. Tool Hydraul. 2019, 47, 52–55+82.

- Jiang, B.; Xu, X.; Wu, G.; Zuo, Y. Contour Hu invariant moments of workpiece, image matching and recognition. J. Comb. Mach. Tools Autom. Process. Technol. 2020, 104–107+111.

- Bibbo’, L.; Cotroneo, F.; Vellasco, M. Emotional Health Detection in HAR: New Approach Using Ensemble SNN. Appl. Sci. 2023, 13, 3259.

- Wang, B. Positioning and Grasping Technology of Small Parts of Automobile Based on Visual Guidance. Master’s Thesis, Yanshan University, Qinhuangdao, China, 2019.

- Gong, W.; Zhang, K.; Yang, C.; Yi, M.; Wu, J. Adaptive visual inspection method for transparent label defect detection of curved glass bottle. In Proceedings of the 2020 International Conference on Computer Vision, Image and Deep Learning (CVIDL), Chongqing, China, 10–12 July 2020; pp. 90–95.

- Chen, Y.; Alifu, K.; Lin, W. CA-YOLOv5 for crowded pedestrian detection. Comput. Eng. Appl. 2022, 1, 1–10.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 6105–6114.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

- Shen, X. Analysis of Automobile Recyclability; Heilongjiang Science and Technology Information: Harbin, China, 2012; Volume 90.

- Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 13713–13722.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

- Gevorgyan, Z. SIoU loss: More powerful learning for bounding box regression. arXiv 2022, arXiv:2205.12740.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016, Proceedings of the 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

Table 1. Comparison experiment results.

| Model | Weight/MB | mAP@0.5/% | Params/M | Inference/ms |
|---|---|---|---|---|
| SSD | 100.3 | 77.8 | 23.7 | 123 |
| Faster R-CNN | 159 | 84.6 | 136.0 | 207 |
| YOLOv5s | 14.1 | 89.3 | 7.0 | 12 |
| Ours-YOLOv5 | 14.6 | 94.3 (↑5.0) | 7.1 | 13 |

Table 2. Ablation experiment results.

| Model | AAM | RSP | MFP | MTF | mAP@0.5/% |
|---|---|---|---|---|---|
| YOLOv5s | × | × | × | × | 89.3 |
| Model 1 | √ | × | × | × | 91.2 (↑1.9) |
| Model 2 | × | √ | × | × | 90.1 (↑0.8) |
| Model 3 | × | × | √ | × | 91.5 (↑2.2) |
| Model 4 | × | × | × | √ | 91.0 (↑1.7) |
| Ours-YOLOv5 | √ | √ | √ | √ | 94.3 (↑5.0) |

Table 3. Parameters and computation of the model with SPP and with SimSPPF.

| Model | Params | GFLOPs |
|---|---|---|
| SPP | 7,225,885 | 16.5 |
| SimSPPF | 7,030,417 | 16.0 |
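The relative savings in Table 3 can be checked with simple arithmetic; this snippet only restates the table's numbers and introduces no new measurements.

```python
# Values copied from Table 3 (whole-model parameter counts and GFLOPs)
spp_params, simsppf_params = 7_225_885, 7_030_417
spp_gflops, simsppf_gflops = 16.5, 16.0

param_drop = spp_params - simsppf_params
param_pct = 100 * param_drop / spp_params
gflops_pct = 100 * (spp_gflops - simsppf_gflops) / spp_gflops

print(param_drop)                              # 195468
print(f"{param_pct:.2f}% fewer parameters")    # 2.71% fewer parameters
print(f"{gflops_pct:.2f}% fewer GFLOPs")       # 3.03% fewer GFLOPs
```

In other words, the switch removes roughly 0.2 million parameters and about 3% of the computation, consistent with the modest speed-up reported for Figure 16.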

Table 4. Comparison of feature pyramid networks.

| Model | Params/M | Weight/MB | mAP@0.5/% |
|---|---|---|---|
| FPN | 6.2 | 13.2 | 87.4 |
| PANet | 7.0 | 14.0 | 89.3 |
| BiFPN | 7.1 | 14.6 | 94.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, J.; Zhang, S.; Ma, Z.; Zeng, Y.; Liu, X. A Workpiece-Dense Scene Object Detection Method Based on Improved YOLOv5. Electronics 2023, 12, 2966. https://doi.org/10.3390/electronics12132966


Liu, Jiajia, Shun Zhang, Zhongli Ma, Yuehan Zeng, and Xueyin Liu. 2023. "A Workpiece-Dense Scene Object Detection Method Based on Improved YOLOv5" Electronics 12, no. 13: 2966. https://doi.org/10.3390/electronics12132966
