Content Caching and Distribution Policies for Vehicular Ad-Hoc Networks (VANETs): Modeling and Simulation

Abstract

1. Introduction

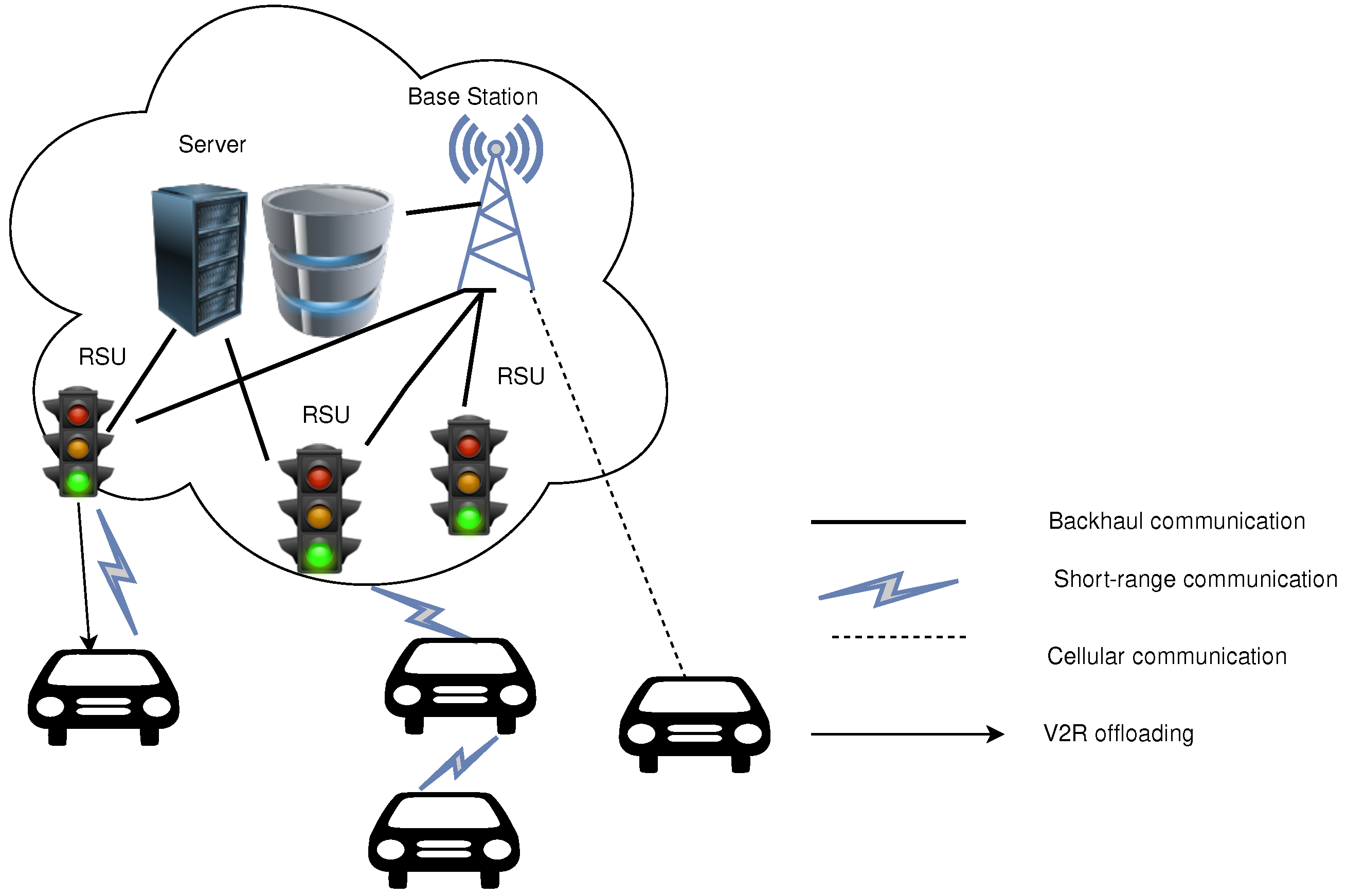

- Vehicles. These can be either private or public transport vehicles.

- Servers/Origin server. Servers act as data repositories for content that exists in the network and they communicate directly and exclusively with Roadside Units (RSUs).

- Roadside Units (RSUs). Static or mobile RSUs function as an intermediary between servers and vehicles and may also contain stored network information that vehicles can access.

2. Related Work

3. Modeling and Algorithms

3.1. Simple Message Handling

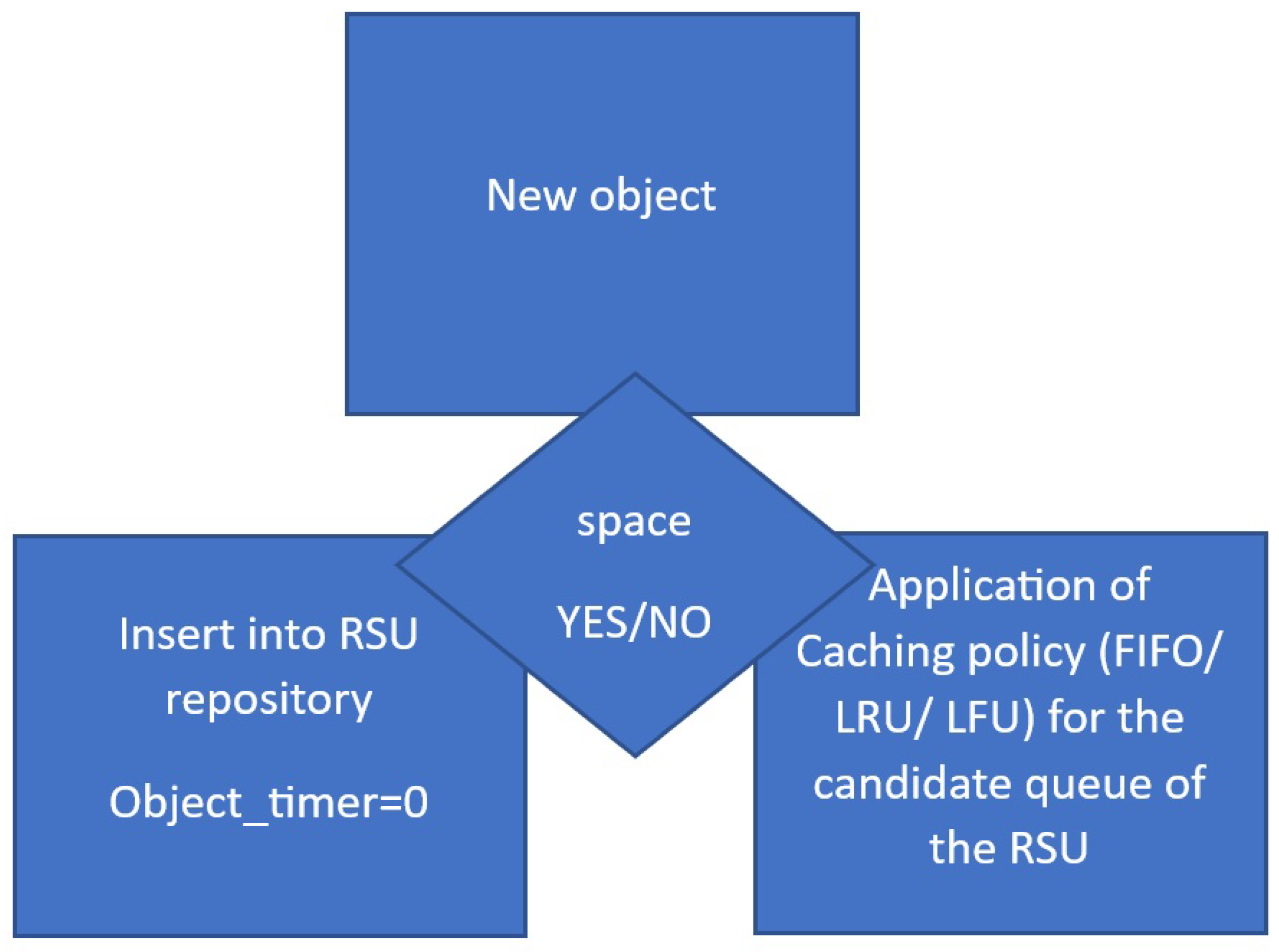

- First In First Out (FIFO) sorts the stored messages by the receivedAt field of MessageData and deletes the oldest entries first.

- Least Recently Used (LRU). When a message is received, its reception time is recorded as its last-used time (the lastUsed field of the MessageData structure); whenever the message is requested again, this time is refreshed. The list is sorted, and the specified number of messages with the smallest lastUsed values are deleted.

- Least Frequently Used (LFU) determines which messages will be deleted based on their usage frequency (usedFrequency field of the MessageData structure).
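The three deletion policies differ only in the field used to rank stored messages, which the following minimal Python sketch illustrates. The field names receivedAt, lastUsed, and usedFrequency come from the text; the `content_id` key, the `touch` helper, the `evict` function, and the assumption that usedFrequency increases by one on every request are hypothetical and are not taken from the simulation code.

```python
from dataclasses import dataclass


@dataclass
class MessageData:
    content_id: str         # hypothetical key, used here only to index the cache
    receivedAt: float       # time the message was first stored
    lastUsed: float         # refreshed whenever the message is requested again
    usedFrequency: int = 0  # assumed to count requests for this message


def touch(entry: MessageData, now: float) -> None:
    """Record a request: refresh lastUsed and (by assumption) bump the counter."""
    entry.lastUsed = now
    entry.usedFrequency += 1


# Each policy ranks entries by one field; the smallest values are deleted first.
SORT_KEYS = {
    "FIFO": lambda m: m.receivedAt,
    "LRU": lambda m: m.lastUsed,
    "LFU": lambda m: m.usedFrequency,
}


def evict(cache: dict, policy: str, count: int) -> list:
    """Delete `count` entries chosen by `policy` and return their ids."""
    victims = sorted(cache.values(), key=SORT_KEYS[policy])[:count]
    for victim in victims:
        del cache[victim.content_id]
    return [victim.content_id for victim in victims]
```

With three entries whose smallest receivedAt, lastUsed, and usedFrequency values belong to different messages, each policy selects a different victim, which is a quick way to check that a policy switch has taken effect.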

3.2. Content Message Handling

3.3. Shortest Paths

3.4. Calculation of Network Metrics

3.4.1. Degree Centrality

Algorithm 1: Degree Centrality Calculation
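For orientation, the standard textbook definition of normalized degree centrality (the number of neighbors of a node divided by n − 1) can be sketched as follows. This is the generic definition, not necessarily the procedure used in the simulation; the adjacency-dictionary representation and the function name are assumptions made for illustration.

```python
def degree_centrality(adjacency: dict) -> dict:
    """Normalized degree centrality for an undirected graph.

    `adjacency` maps each node to the set of its neighbors
    (a hypothetical representation chosen for this sketch).
    """
    n = len(adjacency)
    if n <= 1:
        # A single node has no possible neighbors.
        return {node: 0.0 for node in adjacency}
    return {node: len(neighbors) / (n - 1)
            for node, neighbors in adjacency.items()}
```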

3.4.2. Closeness Centrality

Algorithm 2: Closeness Centrality Calculation
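As a point of reference, the textbook closeness centrality of a node is the number of other reachable nodes divided by the sum of the shortest-path distances to them. The sketch below measures distance in hops using breadth-first search; the hop-count metric, the adjacency-dictionary representation, and the function names are assumptions made for illustration and may differ from the simulation's own calculation.

```python
from collections import deque


def hop_distances(adjacency: dict, source) -> dict:
    """Breadth-first search: hop count from `source` to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def closeness_centrality(adjacency: dict, node) -> float:
    """(number of reachable nodes) / (sum of hop distances to them)."""
    dist = hop_distances(adjacency, node)
    total = sum(dist.values())
    if total == 0:
        # Isolated node: no other node is reachable.
        return 0.0
    return (len(dist) - 1) / total
```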

3.4.3. Betweenness Centrality

Algorithm 3: Betweenness Centrality Calculation

3.5. Machine Learning

Algorithm 4: K-Means for VANETs

Algorithm 5: Agglomerative Clustering for VANETs

4. Case Study–Simulations

4.1. Content Sharing Policies

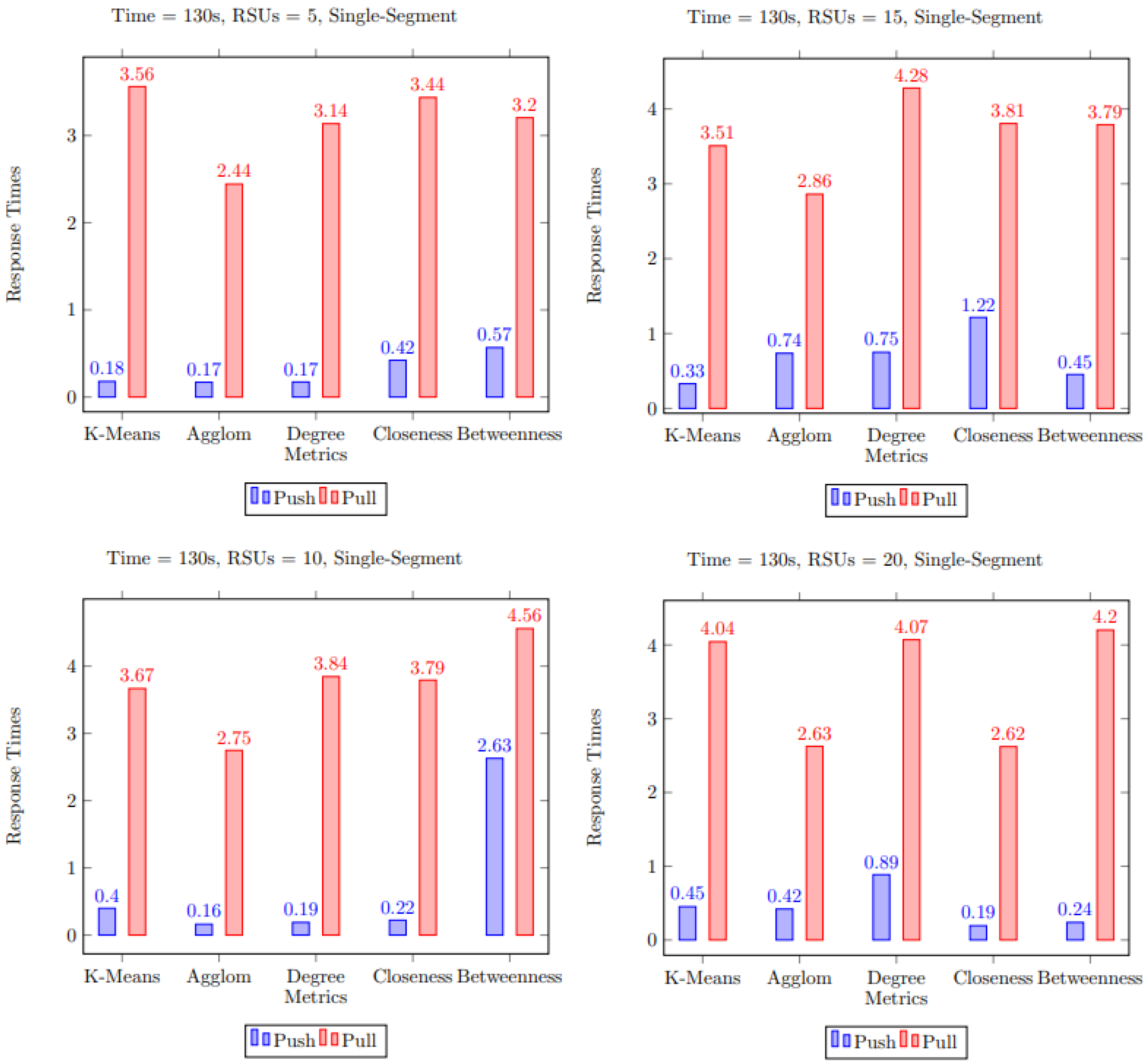

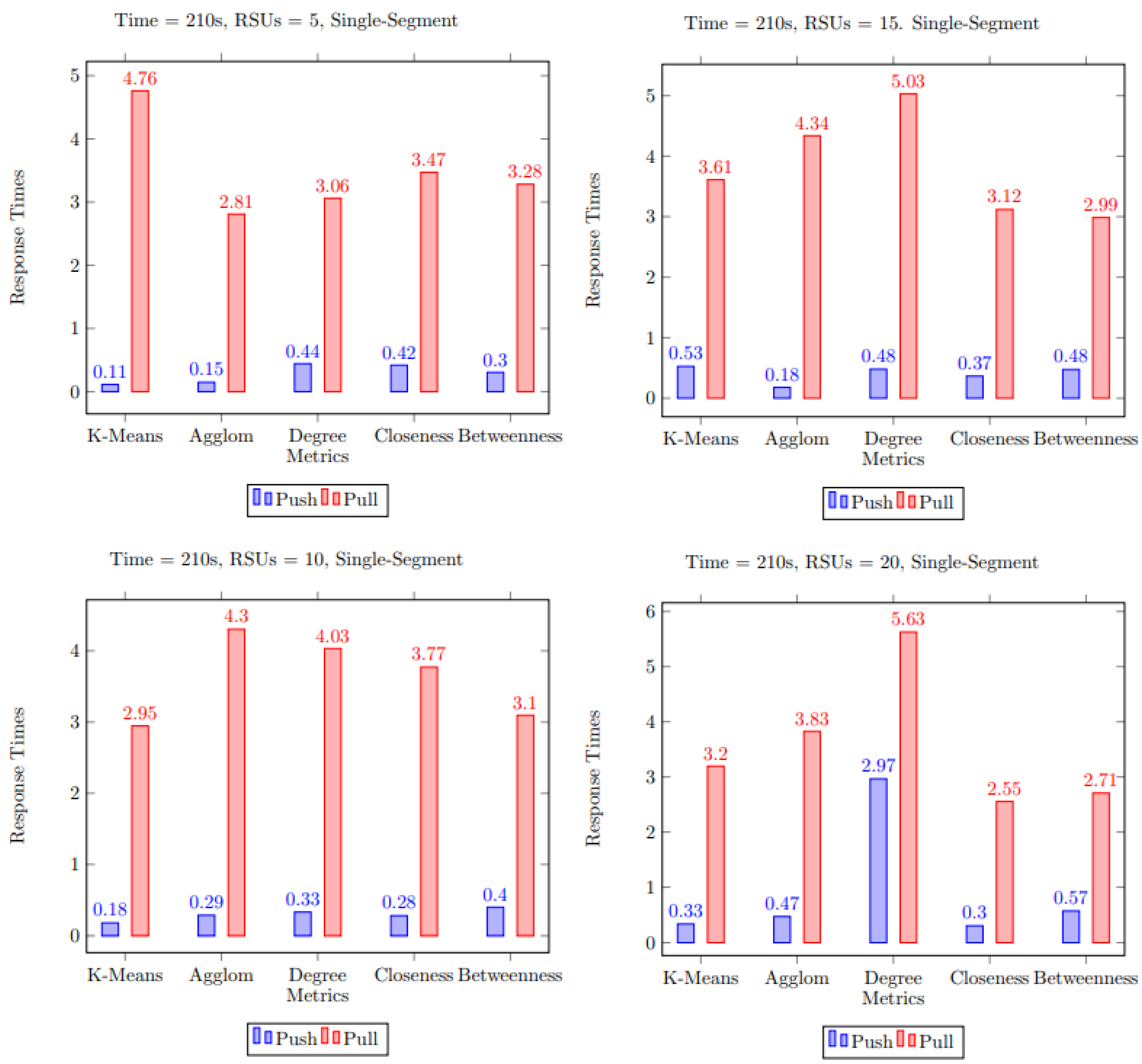

- Pull: When a resource is requested from an RSU, the RSU checks whether it already holds the resource. If it does not, it forwards the request to the Origin and stores a copy of the returned content in its cache. The object is therefore copied to the RSU the first time it is requested.

- Push: The Push policy copies content from the Origin to the RSUs proactively, before the content is even requested.
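The difference between the two policies can be illustrated with a small Python sketch. The class and method names (`Origin`, `Rsu`, `fetch`, `request`, `push`) and the `requests_served` counter are hypothetical and exist only for this example; the real simulation exchanges messages between nodes rather than calling methods directly.

```python
class Origin:
    """Holds the full content catalog (hypothetical stand-in for the Origin server)."""

    def __init__(self, catalog: dict):
        self.catalog = dict(catalog)
        self.requests_served = 0  # counts requests that had to reach the Origin

    def fetch(self, content_id):
        self.requests_served += 1
        return self.catalog[content_id]


class Rsu:
    """RSU with a local cache in front of the Origin."""

    def __init__(self, origin: Origin):
        self.origin = origin
        self.cache = {}

    def request(self, content_id):
        """Pull: serve from cache, else fetch from the Origin and keep a copy.

        Returns (content, cache_hit).
        """
        if content_id in self.cache:
            return self.cache[content_id], True
        content = self.origin.fetch(content_id)
        self.cache[content_id] = content  # copied on first request
        return content, False


def push(origin: Origin, rsus: list, content_ids: list) -> None:
    """Push: copy content to every RSU before any vehicle asks for it."""
    for rsu in rsus:
        for content_id in content_ids:
            rsu.cache[content_id] = origin.catalog[content_id]
```

Under Pull, the first request for an object is a cache miss that reaches the Origin and every later request is a hit; under Push, even the first request is served from the RSU cache.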

4.2. Content Message Storage

4.3. Message Acceptance

4.4. Transmission Confirmation

5. Measurements of Simulations

5.1. Single Segment Multimedia Content

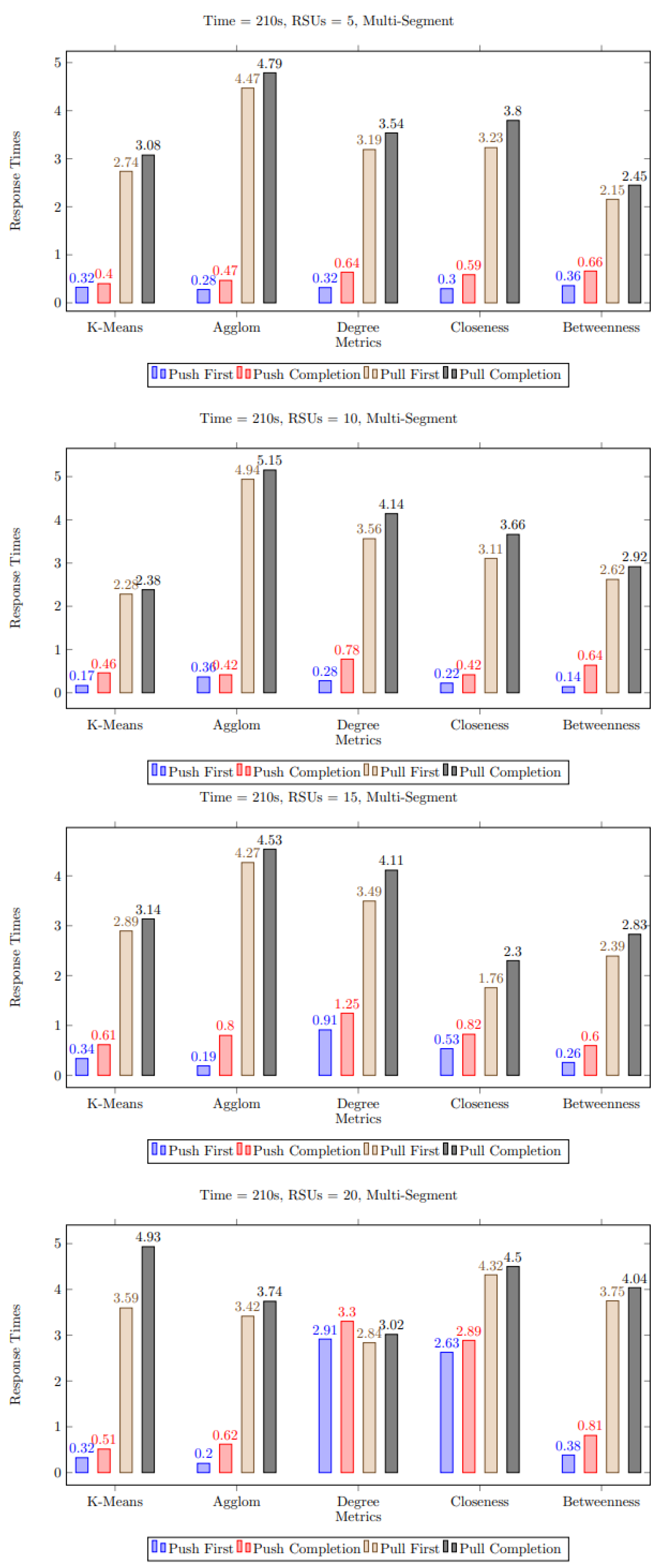

5.2. Multiple Segment Multimedia Content

5.3. Discussion of Simulation Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Veins

Appendix A.1. Simulation Files

- UnitHandler. This file contains the “parent” class used by every node in the network. It mostly contains basic functions used by all child classes, such as the function that handles how messages are sent. The UnitHandler class is a subclass of the DemoBaseApplLayer class, which is part of the example that ships with Veins.

- OriginHandler. OriginHandler is the class that defines the behavior of Origin nodes and adds some functions exclusively for them, e.g., the function that instructs the RSUs to calculate the centrality requested by the Origin.

- RsuHandler. This class, in turn, contains functions that describe how RSUs handle various scenarios. An example of this is handling requests from vehicles for some content stored in an RSU or the Origin of the simulation.

- CarHandler. Finally, CarHandler also has functions that deal with handling and creating requests. The vehicles in the network are constantly moving, so there is also a function that is called at each moment of the simulation for purposes such as accident detection.

Appendix A.2. Message Archive

- SenderAddress. The address of the last node that sent the message. It is used so that we can obtain an indication of the path of the message.

- Recipient. The address of the node that will receive the message. Any node whose address is different from the one listed in this field receives the message and ignores it.

- Source. The address of the node that generated the message. It is used for various purposes, mainly to know which node to respond to when a request comes to us.

- Dest. The address of the node to which we want the message to arrive. If a node with an address other than the one in Dest receives this message, it forwards the message to reach its destination.

- SenderPosition. The coordinates of the sender, i.e., the last node that sent the message.

- MaxHops. The maximum number of hops the message can make before it is deleted.

- Hops. The number of hops the message has made so far. If it exceeds MaxHops, the message is deleted.

- Type. This field specifies the type of message being sent. There are 18 different message types, each for a different occasion and function.

- State. As mentioned at the beginning of this subsection, each message is first sent as a “self-message”. State defines which operating state this message corresponds to. For example, Sending, which is the default state, sends the message to the specified nodes. In contrast, the Caching state does not send a message to other nodes and instead starts the process of managing content storage. There are 7 different message states (intents) in total.

- Centrality. This field is only used for centrality calculation requests and their responses. It specifies the kind of centrality we want to calculate.

- MsgInfo. In this field we store numerical information that we want to send, e.g., centrality results.

- AckInfo. Information of type simtime_t, i.e., simulation time, is stored here. The field is used to send acknowledgments and to ensure that a received acknowledgment corresponds to the intended content.

- Route. A vector storing the path taken so far. Mainly used for best path finding messages. Another use is for messages between Origin and RSU. Since Origin and RSU are static, if the optimal path between them is calculated, we can use that path to send a message faster.

- PreviousNodes. A vector that stores the path already taken by a reply message. As the reply traverses a path in reverse, nodes are removed from Route and added to PreviousNodes.

- OriginMessage. This field, if set to true, indicates that this is a message between RSUs and the Origin. Therefore, all vehicles that receive this message ignore it. Conversely, if it is false, the message is ignored by the Origin.

- UpdatePaths. A message that has Route is subject to a routing check. If this variable is false, we can ignore this check.

- ContentId. It is used to identify the content we request or send.

- Content. In this field we store the content we want to send.

- Segments. Here we define how many segments the content has. The content requester does not know in advance how many segments the content has.

- SegmentNumber. When sending multipart content, this variable is used to define which part we are sending.

- Multimedia. Again for content transmission, this field specifies whether the content we request or send is multimedia content, i.e., a video or an image, or content that contains road information.
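The interplay of the Recipient, Dest, Hops, MaxHops, Route, and PreviousNodes fields described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the simulation's actual logic: field names are converted to snake_case, only a subset of the fields is modeled, the order of the checks and the point at which Hops is incremented are assumed, and the `handle` and `step_back` helpers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    recipient: int  # next node that should process the message
    dest: int       # final destination of the message
    hops: int = 0
    max_hops: int = 5
    route: List[int] = field(default_factory=list)
    previous_nodes: List[int] = field(default_factory=list)


def handle(msg: Message, my_address: int) -> str:
    """Decide what a node does with a received message.

    Returns 'ignore', 'deliver', 'drop', or 'forward'.
    """
    if msg.recipient != my_address:
        return "ignore"   # not addressed to this node
    if msg.dest == my_address:
        return "deliver"  # reached its final destination
    msg.hops += 1         # assumption: counted when the node relays the message
    if msg.hops > msg.max_hops:
        return "drop"     # exceeded MaxHops, so the message is deleted
    return "forward"


def step_back(msg: Message) -> int:
    """One reverse step of a reply: move the next hop from Route to PreviousNodes.

    Assumes Route lists nodes in the order the request visited them,
    so the reply pops from the end.
    """
    next_hop = msg.route.pop()
    msg.previous_nodes.append(next_hop)
    msg.recipient = next_hop
    return next_hop
```

A reply whose Route is [1, 4, 7] would thus visit 7, then 4, then 1, with PreviousNodes growing to [7, 4, 1] as Route empties.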

Appendix A.3. Data Structures

Appendix A.4. Installing and Running a Simulation



© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kilanioti, I.; Astrinakis, N.; Papavassiliou, S. Content Caching and Distribution Policies for Vehicular Ad-Hoc Networks (VANETs): Modeling and Simulation. Electronics 2023, 12, 2901. https://doi.org/10.3390/electronics12132901
