TGA: A Novel Network Intrusion Detection Method Based on TCN, BiGRU and Attention Mechanism

Abstract

1. Introduction

- This paper proposes TGA, a model that combines a TCN, a BiGRU, and an attention mechanism. The TCN and the BiGRU extract temporal features from traffic sequences in parallel, so that sequence modeling can draw on the respective strengths of both. Their outputs are then fused, and a self-attention mechanism captures the correlations between different positions in the sequence, which enhances the model’s expressiveness.

- The proposed method was evaluated on the CSE-CIC-IDS2018 dataset, where it achieved 97.83% accuracy, higher than the existing methods it was compared against.
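
The fusion and self-attention steps in the first contribution can be illustrated with a minimal sketch. It assumes that fusion means concatenating the per-time-step feature vectors and that the attention is scaled dot-product self-attention [30] with queries, keys, and values all equal to the fused features (no learned projections); neither detail is specified in the text above. The function names and the toy numbers standing in for the TCN and BiGRU outputs are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(tcn_out, bigru_out):
    """Assumed fusion: concatenate the two feature vectors at each time step."""
    return [t + g for t, g in zip(tcn_out, bigru_out)]


def self_attention(seq):
    """Scaled dot-product self-attention with Q = K = V = seq (assumption)."""
    d = len(seq[0])
    out = []
    for q in seq:
        # Similarity of this position to every position in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in seq]
        weights = softmax(scores)
        # Each output is a weighted average of all positions.
        out.append([sum(w * v[j] for w, v in zip(weights, seq)) for j in range(d)])
    return out


# Toy stand-ins for the branch outputs: 3 time steps, 2 features per branch.
tcn_out = [[0.1, 0.3], [0.5, 0.2], [0.4, 0.9]]
bigru_out = [[0.7, 0.0], [0.2, 0.6], [0.3, 0.3]]

fused = fuse(tcn_out, bigru_out)      # 3 time steps x 4 features
attended = self_attention(fused)      # same shape as `fused`
```

Because each attended vector is a convex combination of the fused vectors, every position can draw on information from every other position in the sequence.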

2. Related Work

3. Methodology

3.1. Model Architecture

3.2. Datasets

3.3. Data Preprocess

- Data Integration: We selected eight days of traffic samples from the CSE-CIC-IDS2018 dataset and first merged the eight corresponding CSV files. The 14 original labels were then regrouped into seven categories: one benign and six malicious.

- Data Cleaning: Anomalous records in the dataset can adversely affect training, so rows containing NaN, Infinity, or null values were removed. Table 2 shows the distribution of each category before and after cleaning. Before cleaning, there were 5,567,951 benign samples and 2,103,173 malicious samples; after cleaning, 5,538,387 benign samples and 2,102,418 malicious samples remained.

- Feature Processing: The CSE-CIC-IDS2018 dataset has 79 attributes and one label. The Timestamp attribute was removed because it has little impact on network traffic identification.

- Data Digitization: One-hot encoding is used to convert categorical features into numeric variables.

- Data Normalization: If features are not normalized, those that take larger values tend to dominate the results. Normalization balances the scale of the feature values and helps the DL model converge during backpropagation. In our experiment, Min-Max normalization is used, so each numerical feature is scaled to the [0,1] interval, which can be defined as follows:

  $$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

  where $x$ is the original feature value, and $x_{\min}$ and $x_{\max}$ are the minimum and maximum values of that feature.
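
The preprocessing steps listed above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' pipeline: the label grouping follows the class and attack-type names of Table 2, the helper names are hypothetical, the toy records are invented for illustration, and applying one-hot encoding to the class label is an assumption.

```python
import math

# Regrouping of the 14 original labels into 7 categories (names as in Table 2).
LABEL_GROUPS = {
    "Benign": "Benign",
    "DDOS attack-HOIC": "DDOS",
    "DDOS attack-LOIC-UDP": "DDOS",
    "DoS attacks-Hulk": "Dos",
    "DoS attacks-GoldenEye": "Dos",
    "DoS attacks-Slowloris": "Dos",
    "DoS attacks-SlowHTTPTest": "Dos",
    "Bot": "Bot",
    "SSH-Brute force": "Bruteforce",
    "FTP-Brute force": "Bruteforce",
    "Infilteration": "Infilteration",
    "Brute Force-Web": "Web-attack",
    "Brute Force-XSS": "Web-attack",
    "SQL Injection": "Web-attack",
}


def is_clean(row):
    """True when no feature is null, NaN, or infinite."""
    return all(v is not None and math.isfinite(v) for v in row)


def min_max_normalize(rows):
    """Scale every column to [0, 1]; a constant column maps to 0."""
    cols = list(zip(*rows))
    lows = [min(c) for c in cols]
    highs = [max(c) for c in cols]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def one_hot(value, categories):
    """Encode one categorical value as a 0/1 vector."""
    return [1 if value == c else 0 for c in categories]


# Toy records (features, original label); the values are illustrative only.
records = [
    ([80.0, 1200.0], "Benign"),
    ([443.0, float("inf")], "Bot"),
    ([22.0, 300.0], "SSH-Brute force"),
    ([21.0, float("nan")], "FTP-Brute force"),
    ([8080.0, 600.0], "DoS attacks-Hulk"),
]

clean = [(x, LABEL_GROUPS[y]) for x, y in records if is_clean(x)]
features = min_max_normalize([x for x, _ in clean])
classes = sorted(set(LABEL_GROUPS.values()))
targets = [one_hot(y, classes) for _, y in clean]
```

In practice the minimum and maximum would normally be computed on the training split only and reused for the test split, so that no test information leaks into training.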

3.4. Background on Neural Networks

3.4.1. Temporal Convolutional Networks

- Causal Convolution: Causal convolution performs convolution in strict temporal order, so the output at a given time step depends only on data from the current and earlier time steps. However, causal convolution is limited by its receptive field, so it can only use a short span of historical information for prediction.

- Dilated Convolution: TCN introduces dilated convolution so that the model can better capture dependencies in long input sequences. Compared with traditional convolution, dilated convolution adds a dilation rate parameter $d$, which expands the receptive field of the convolution kernel and lets the model capture long-term temporal dependencies without increasing the number of parameters. In time series forecasting, we are given an input sequence [22] $x_0, x_1, x_2, \ldots, x_{T-2}, x_{T-1}, x_T$ and an output sequence $y_0, y_1, y_2, \ldots, y_{T-2}, y_{T-1}, y_T$. The input sequence can be interpreted as a record of past data. The aim is to find a mapping that generates new information, the output sequence, from past data only [15]. This can be represented as follows: $\hat{y}_0, \ldots, \hat{y}_T = f(x_0, \ldots, x_T)$, where $\hat{y}_t$ depends only on $x_0, \ldots, x_t$. Figure 2 illustrates this process for an input sequence. When d = 1, every input point is sampled; when d = 2, every second point is sampled; and when d = 4, every fourth point is sampled.

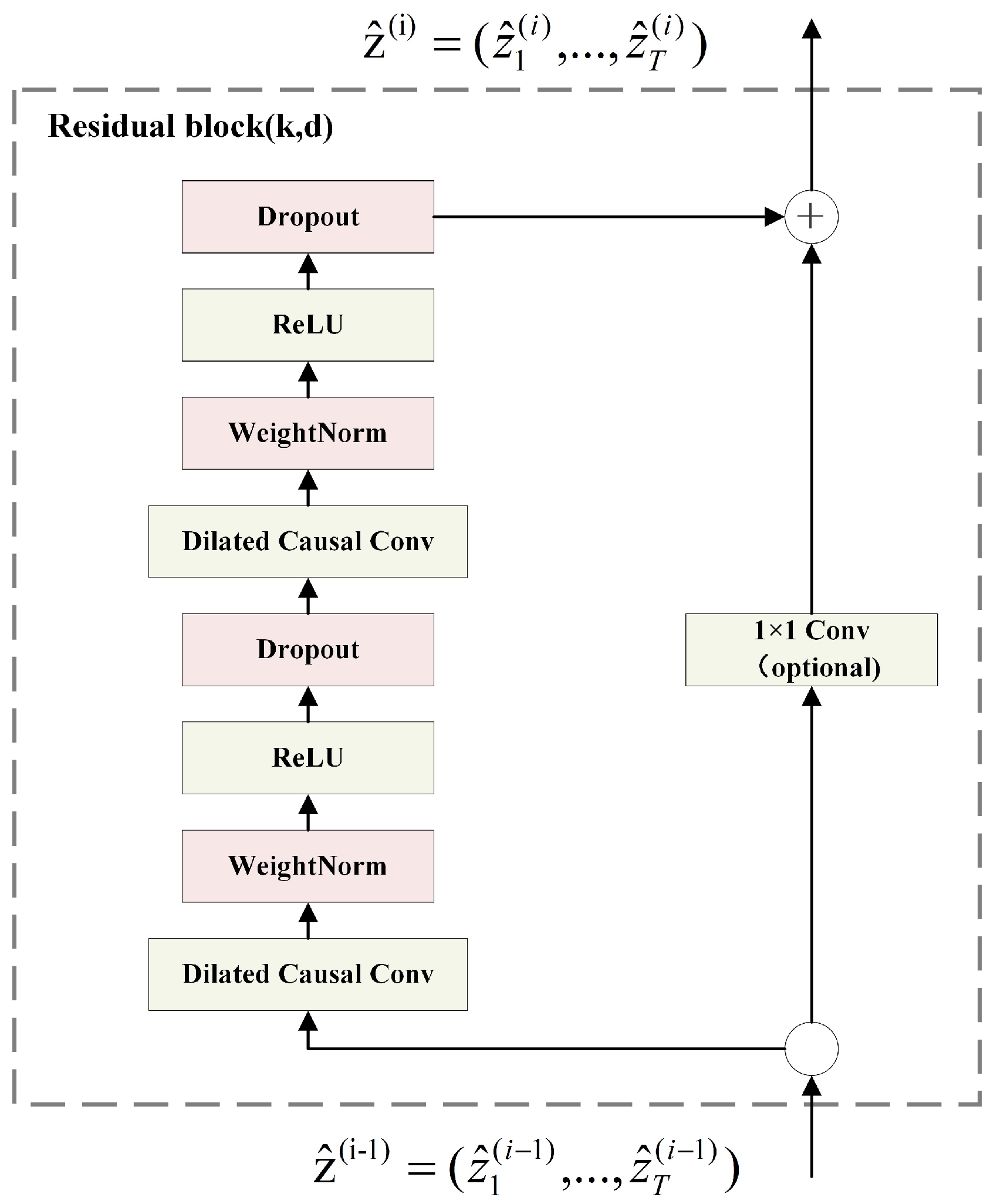
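
The sampling pattern for d = 1, 2, and 4 can be made concrete with a small single-channel sketch of a dilated causal convolution. It assumes the common formulation in which the output at time t sums kernel[i] * x[t - d * i] and positions before the start of the sequence are treated as zero padding; the function name, the kernel, and the input values are illustrative.

```python
def dilated_causal_conv(x, kernel, dilation):
    """y[t] = sum_i kernel[i] * x[t - dilation * i], with zeros before t = 0."""
    y = []
    for t in range(len(x)):
        acc = 0.0
        for i, w in enumerate(kernel):
            idx = t - dilation * i
            if idx >= 0:          # never look at future or out-of-range samples
                acc += w * x[idx]
        y.append(acc)
    return y


x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
kernel = [1.0, 1.0]               # kernel size 2: current sample plus one past sample

for d in (1, 2, 4):
    print(d, dilated_causal_conv(x, kernel, d))
# 1 [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0]
# 2 [1.0, 2.0, 4.0, 6.0, 8.0, 10.0, 12.0, 14.0]
# 4 [1.0, 2.0, 3.0, 4.0, 6.0, 8.0, 10.0, 12.0]
```

With the same two-tap kernel, increasing d lets each output reach further back in time (1, 2, then 4 steps) without adding any parameters.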

- Residual Block: The architecture of the residual block in the TCN model is illustrated in Figure 3, which marks the input and the output of the residual block. Each residual block contains two nonlinear dilated causal convolution layers; WeightNorm is applied to the convolutional filters, and ReLU is used as the activation function. In addition, a Dropout [23] layer is applied after each activation function layer for regularization. Because the number of input and output channels may differ, an additional convolutional layer is used to adjust the number of channels so that the input can be added to the output.

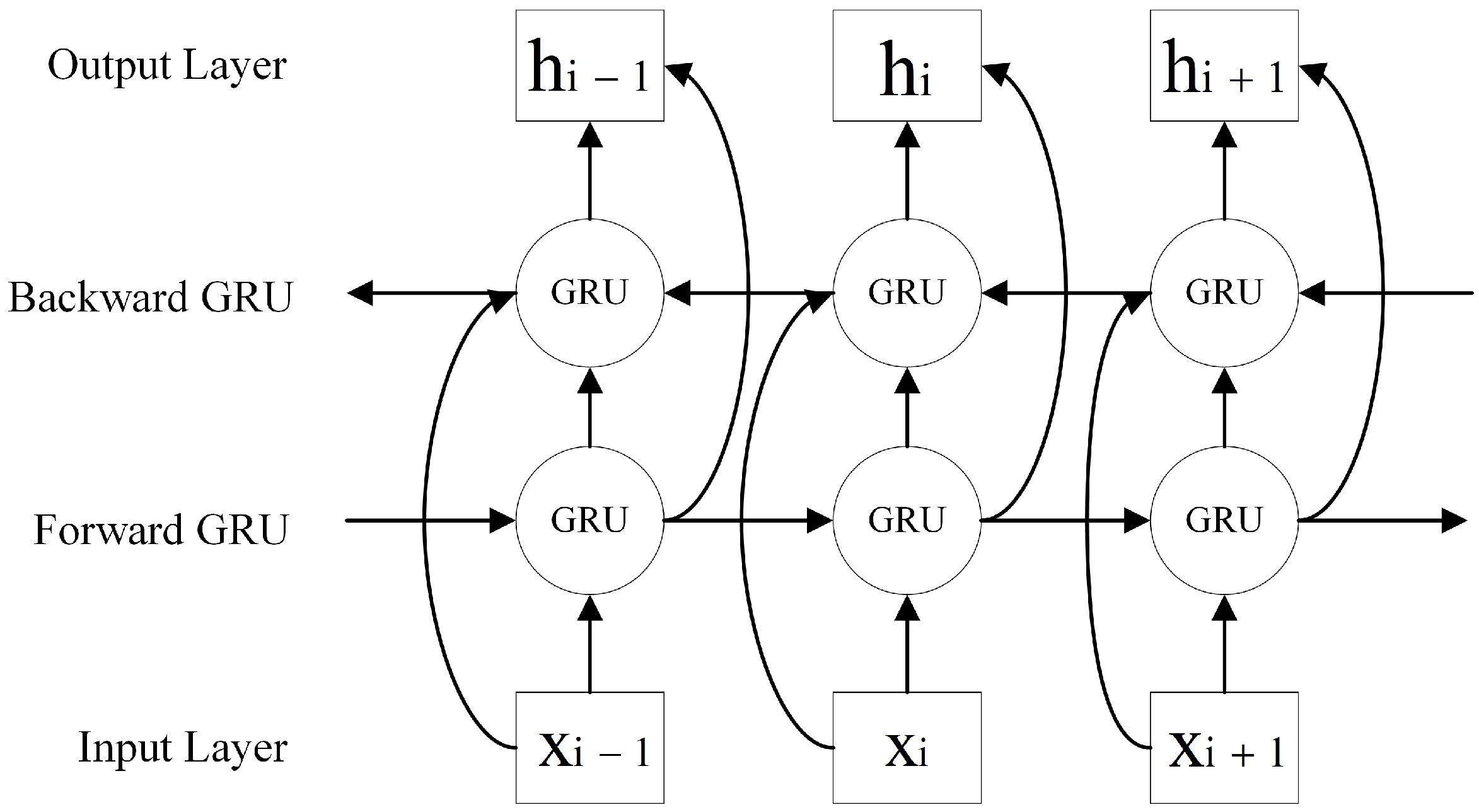
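
The structure of the residual block can be sketched for a single channel as follows. This is a simplified illustration under several assumptions: WeightNorm and Dropout are omitted, a scalar `skip_weight` stands in for the channel-adjusting convolution, and all names and kernel values are hypothetical. The `receptive_field` helper assumes two convolution layers per block with the same dilation and a dilation list that is repeated `nb_stacks` times.

```python
def causal_conv(x, kernel, dilation):
    """Single-channel dilated causal convolution with zero padding on the left."""
    return [
        sum(w * x[t - dilation * i]
            for i, w in enumerate(kernel) if t - dilation * i >= 0)
        for t in range(len(x))
    ]


def relu(x):
    return [max(0.0, v) for v in x]


def residual_block(x, kernel1, kernel2, dilation, skip_weight=1.0):
    """Two dilated causal conv + ReLU layers, added to a (scaled) copy of the input."""
    h = relu(causal_conv(x, kernel1, dilation))
    h = relu(causal_conv(h, kernel2, dilation))
    shortcut = [skip_weight * v for v in x]   # stand-in for the channel-matching conv
    return [s + v for s, v in zip(shortcut, h)]


def receptive_field(kernel_size, dilations, nb_stacks=1):
    """Each conv layer with dilation d adds (kernel_size - 1) * d past steps."""
    return 1 + 2 * (kernel_size - 1) * nb_stacks * sum(dilations)


x = [1.0, -2.0, 3.0, -4.0]
out = residual_block(x, kernel1=[1.0, 1.0], kernel2=[0.5, 0.5], dilation=1)
print(out)                                   # [1.5, -1.5, 3.5, -3.5]

print(receptive_field(3, [1, 2, 4]))         # 29
print(receptive_field(3, [1, 2, 4], 2))      # 57
```

The receptive field grows linearly with the number of stacks and with the sum of the dilation rates, which is why both settings determine how much history the model can see.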

3.4.2. Bidirectional Gated Recurrent Unit

3.4.3. Self-Attention Mechanism

3.4.4. Softmax Layer

4. Experimental Method

4.1. Experimental Environment

4.2. Evaluation Indicator

4.3. Effect of Hyperparameters

4.3.1. Learning Rate

4.3.2. Nb_Stacks

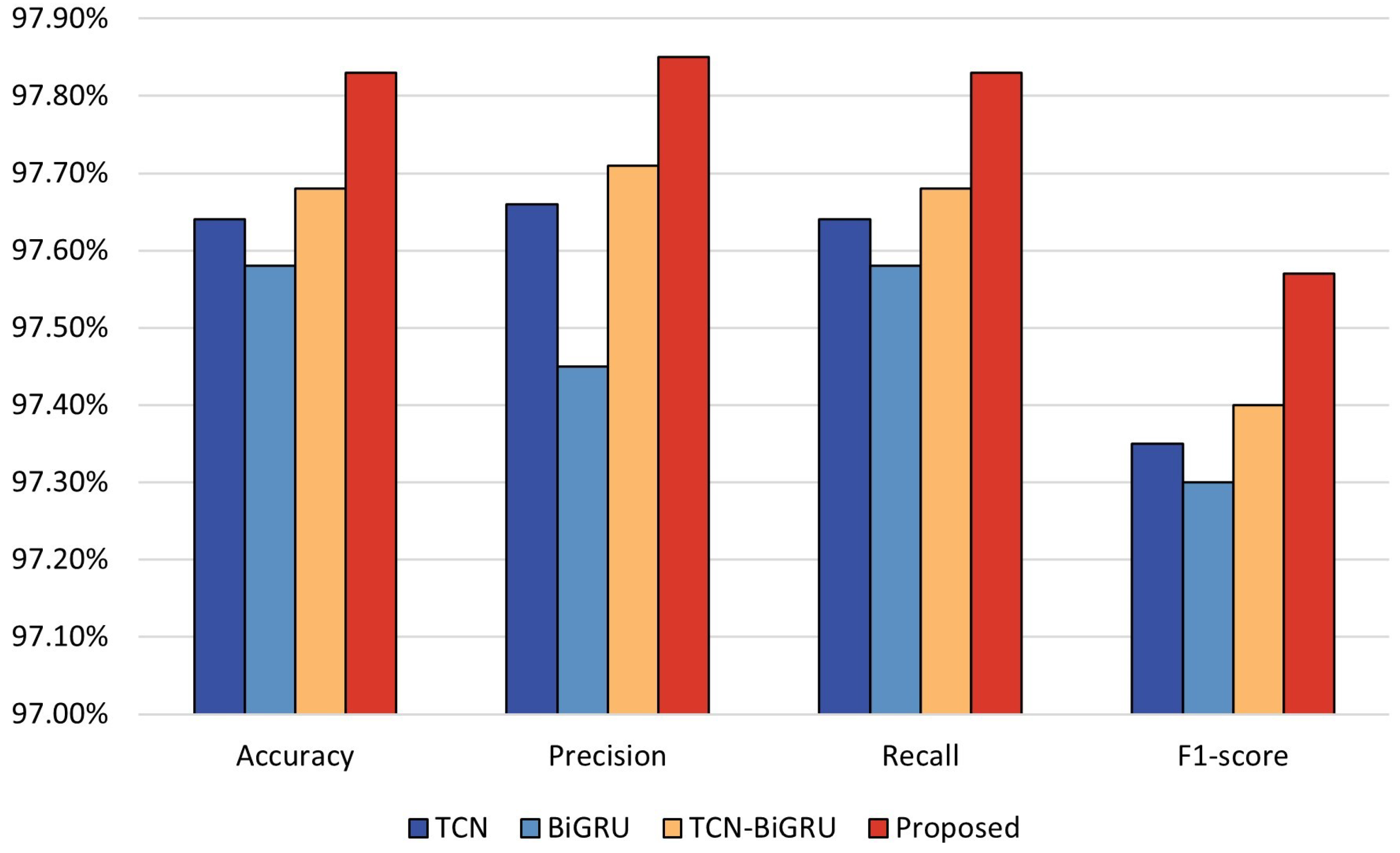

4.4. Result and Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TCN | Temporal Convolutional Network |
| BiGRU | Bidirectional Gated Recurrent Unit |
| GRU | Gated Recurrent Unit |
| LSTM | Long Short-Term Memory Network |
| CBAM | Convolutional Block Attention Module |
| ML | Machine Learning |
| DL | Deep Learning |
| TP | True Positives |
| TN | True Negatives |
| FP | False Positives |
| FN | False Negatives |
| AM | Attention Model |

References

1. Sun, P.; Liu, P.; Li, Q.; Liu, C.; Lu, X.; Hao, R.; Chen, J. DL-IDS: Extracting features using CNN-LSTM hybrid network for intrusion detection system. Secur. Commun. Netw. 2020, 2020, 8890306.
2. Khraisat, A.; Gondal, I.; Vamplew, P.; Kamruzzaman, J. Survey of intrusion detection systems: Techniques, datasets and challenges. Cybersecurity 2019, 2, 20.
3. Kornaropoulos, E.M.; Papamanthou, C.; Tamassia, R. The state of the uniform: Attacks on encrypted databases beyond the uniform query distribution. In Proceedings of the 2020 IEEE Symposium on Security and Privacy (SP), IEEE, San Francisco, CA, USA, 18–21 May 2020; pp. 1223–1240.
4. Liu, Y.; Kang, J.; Li, Y.; Ji, B. A network intrusion detection method based on CNN and CBAM. In Proceedings of the IEEE INFOCOM 2021-IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), IEEE, Vancouver, BC, Canada, 10–13 May 2021; pp. 1–6.
5. Kim, J.; Kim, J.; Thu, H.L.T.; Kim, H. Long short term memory recurrent neural network classifier for intrusion detection. In Proceedings of the 2016 International Conference on Platform Technology and Service (PlatCon), IEEE, Jeju, Republic of Korea, 15–17 February 2016; pp. 1–5.
6. Tavallaee, M. An Adaptive Hybrid Intrusion Detection System; The University of New Brunswick: Fredericton, NB, Canada, 2011.
7. Pajouh, H.H.; Javidan, R.; Khayami, R.; Dehghantanha, A.; Choo, K.K.R. A two-layer dimension reduction and two-tier classification model for anomaly-based intrusion detection in IoT backbone networks. IEEE Trans. Emerg. Top. Comput. 2016, 7, 314–323.
8. Mahfouz, A.M.; Venugopal, D.; Shiva, S.G. Comparative analysis of ML classifiers for network intrusion detection. In Fourth International Congress on Information and Communication Technology: ICICT 2019; Springer: Singapore, 2020; Volume 2, pp. 193–207.
9. Zwane, S.; Tarwireyi, P.; Adigun, M. Performance analysis of machine learning classifiers for intrusion detection. In Proceedings of the 2018 International Conference on Intelligent and Innovative Computing Applications (ICONIC), IEEE, Mon Tresor, Mauritius, 6–7 December 2018; pp. 1–5.
10. Vinayakumar, R.; Soman, K.; Poornachandran, P. Applying convolutional neural network for network intrusion detection. In Proceedings of the 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI), IEEE, Udupi, India, 13–16 September 2017; pp. 1222–1228.
11. Yan, J.; Jin, D.; Lee, C.W.; Liu, P. A comparative study of off-line deep learning based network intrusion detection. In Proceedings of the 2018 Tenth International Conference on Ubiquitous and Future Networks (ICUFN), IEEE, Prague, Czech Republic, 3–6 July 2018; pp. 299–304.
12. Usama, M.; Asim, M.; Latif, S.; Qadir, J.; Al-Fuqaha, A. Generative adversarial networks for launching and thwarting adversarial attacks on network intrusion detection systems. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), IEEE, Tangier, Morocco, 24–28 June 2019; pp. 78–83.
13. Kong, F.; Li, J.; Jiang, B.; Wang, H.; Song, H. Integrated generative model for industrial anomaly detection via Bidirectional LSTM and attention mechanism. IEEE Trans. Ind. Inform. 2021, 19, 541–550.
14. Ansari, M.S.; Bartoš, V.; Lee, B. GRU-based deep learning approach for network intrusion alert prediction. Future Gener. Comput. Syst. 2022, 128, 235–247.
15. Zhang, J.; Chen, G.; Zhao, H.; Ye, Y. Research on Network Traffic Anomaly Detection Method Based on Temporal Convolutional Network. In Proceedings of the 2022 IEEE 8th International Conference on Computer and Communications (ICCC), IEEE, Chengdu, China, 9–12 December 2022; pp. 590–598.
16. Tavallaee, M.; Bagheri, E.; Lu, W.; Ghorbani, A.A. A detailed analysis of the KDD CUP 99 data set. In Proceedings of the 2009 IEEE Symposium on Computational Intelligence for Security and Defense Applications, IEEE, Ottawa, ON, Canada, 8–10 July 2009; pp. 1–6.
17. Dhanabal, L.; Shantharajah, S. A study on NSL-KDD dataset for intrusion detection system based on classification algorithms. Int. J. Adv. Res. Comput. Commun. Eng. 2015, 4, 446–452.
18. Moustafa, N.; Slay, J. The evaluation of Network Anomaly Detection Systems: Statistical analysis of the UNSW-NB15 data set and the comparison with the KDD99 data set. Inf. Secur. J. Glob. Perspect. 2016, 25, 18–31.
19. Sharafaldin, I.; Lashkari, A.H.; Ghorbani, A.A. Toward generating a new intrusion detection dataset and intrusion traffic characterization. ICISSP 2018, 1, 108–116.
20. Ren, K.; Zeng, Y.; Cao, Z.; Zhang, Y. ID-RDRL: A deep reinforcement learning-based feature selection intrusion detection model. Sci. Rep. 2022, 12, 15370.
21. Khan, M.A.; Kim, J. Toward developing efficient Conv-AE-based intrusion detection system using heterogeneous dataset. Electronics 2020, 9, 1771.
22. Bai, S.; Kolter, J.Z.; Koltun, V. An empirical evaluation of generic convolutional and recurrent networks for sequence modeling. arXiv 2018, arXiv:1803.01271.
23. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
24. Zhang, D.; Yang, J.; Li, F.; Han, S.; Qin, L.; Li, Q. Landslide Risk Prediction Model Using an Attention-Based Temporal Convolutional Network Connected to a Recurrent Neural Network. IEEE Access 2022, 10, 37635–37645.
25. Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. arXiv 2014, arXiv:1412.3555.
26. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.
27. Zheng, W.; Cheng, P.; Cai, Z.; Xiao, Y. Research on Network Attack Detection Model Based on BiGRU-Attention. In Proceedings of the 2022 4th International Conference on Frontiers Technology of Information and Computer (ICFTIC), IEEE, Qingdao, China, 2–4 December 2022; pp. 979–982.
28. Li, L.; Hu, M.; Ren, F.; Xu, H. Temporal Attention Based TCN-BIGRU Model for Energy Time Series Forecasting. In Proceedings of the 2021 IEEE International Conference on Computer Science, Artificial Intelligence and Electronic Engineering (CSAIEE), IEEE, Virtual Conference, 20–22 August 2021; pp. 187–193.
29. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.
30. Child, R.; Gray, S.; Radford, A.; Sutskever, I. Generating long sequences with sparse transformers. arXiv 2019, arXiv:1904.10509.
31. Hodo, E.; Bellekens, X.; Hamilton, A.; Tachtatzis, C.; Atkinson, R. Shallow and deep networks intrusion detection system: A taxonomy and survey. arXiv 2017, arXiv:1701.02145.
32. Lin, P.; Ye, K.; Xu, C.Z. Dynamic network anomaly detection system by using deep learning techniques. In Proceedings of the Cloud Computing–CLOUD 2019: 12th International Conference, Held as Part of the Services Conference Federation, SCF 2019, San Diego, CA, USA, 25–30 June 2019; Springer: Cham, Switzerland, 2019; pp. 161–176.
33. Ferrag, M.A.; Maglaras, L.; Moschoyiannis, S.; Janicke, H. Deep learning for cyber security intrusion detection: Approaches, datasets, and comparative study. J. Inf. Secur. Appl. 2020, 50, 102419.
34. Kim, J.; Kim, J.; Kim, H.; Shim, M.; Choi, E. CNN-based network intrusion detection against denial-of-service attacks. Electronics 2020, 9, 916.
35. Kunang, Y.N.; Nurmaini, S.; Stiawan, D.; Suprapto, B.Y. Attack classification of an intrusion detection system using deep learning and hyperparameter optimization. J. Inf. Secur. Appl. 2021, 58, 102804.

| Feature Name | Short Description | Data Type |
|---|---|---|
| Dst Port | The destination port number | Integer |
| Protocol | Protocol used for the connection | Integer |
| Flow Duration | Duration of the connection | String |
| Tot Fwd Pkts | Total number of packets in the forward direction | Integer |
| Tot Bwd Pkts | Total number of packets in the backward direction | Integer |
| TotLen Fwd Pkts | Total length of forward packets | Integer |
| Fwd Pkt Len Max | Maximum size of forward packets | Float |
| Bwd Pkt Len Mean | Mean size of backward packets | Float |
| … | … | … |
| Active mean | Mean time a flow was active before it becomes idle | Float |
| Idle Std | Standard deviation of the time a flow was idle before it becomes active | Float |
| Idle Min | Minimum time a flow was idle before it becomes active | Float |
| Label | Indicates whether the traffic is attack or benign | char |

| Class | Attack Type | Before Cleaning | After Cleaning |
|---|---|---|---|
| Benign | - | 5,567,951 | 5,538,387 |
| DDOS | DDOS attack-HOIC | 686,012 | 685,993 |
| - | DDOS attack-LOIC-UDP | 1730 | 1729 |
| Dos | DoS attacks-Hulk | 461,912 | 461,905 |
| - | DoS attacks-GoldenEye | 41,508 | 41,505 |
| - | DoS attacks-Slowloris | 10,990 | 10,989 |
| - | DoS attacks-SlowHTTPTest | 139,890 | 139,880 |
| Bot | Bot | 286,191 | 286,170 |
| Bruteforce | SSH-Brute force | 187,589 | 187,576 |
| - | FTP-Brute force | 193,360 | 193,342 |
| Infilteration | Infilteration | 93,063 | 92,403 |
| Web-attack | Brute Force-Web | 611 | 609 |
| - | Brute Force-XSS | 230 | 230 |
| - | SQL Injection | 87 | 87 |

| Actual / Predicted | Benign | Malware |
|---|---|---|
| Benign | TP | FN |
| Malware | FP | TN |
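
The four cells of the confusion matrix above are the inputs to the accuracy, precision, recall, and F1-score reported in the comparison tables. The sketch below uses the standard definitions of these metrics, which are assumed here rather than quoted from the text; the function name and the example counts are hypothetical.

```python
def classification_metrics(tp, fn, fp, tn):
    """Standard binary metrics from the four confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp)      # how many predicted positives are correct
    recall = tp / (tp + fn)         # how many actual positives are found
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
m = classification_metrics(tp=90, fn=10, fp=5, tn=95)
for name, value in m.items():
    print(f"{name}: {value:.4f}")
```

A production version would also guard against zero denominators, which occur when a class is never predicted or never present.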

| Method | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| LSTM + AM [32] | 96.2% | 96% | 96% | 93% |
| DNN [33] | 97.28% | N/A | N/A | N/A |
| CNN [34] | 91.5% | N/A | N/A | N/A |
| DAE + DNN [35] | 95.79% | 95.38% | 95.79% | 95.11% |
| CNN + CBAM [4] | 97.29% | 97.26% | 97.29% | 96.88% |
| ID-RDRL [20] | 96.2% | N/A | N/A | 94.9% |
| Proposed | 97.83% | 97.85% | 97.83% | 97.57% |

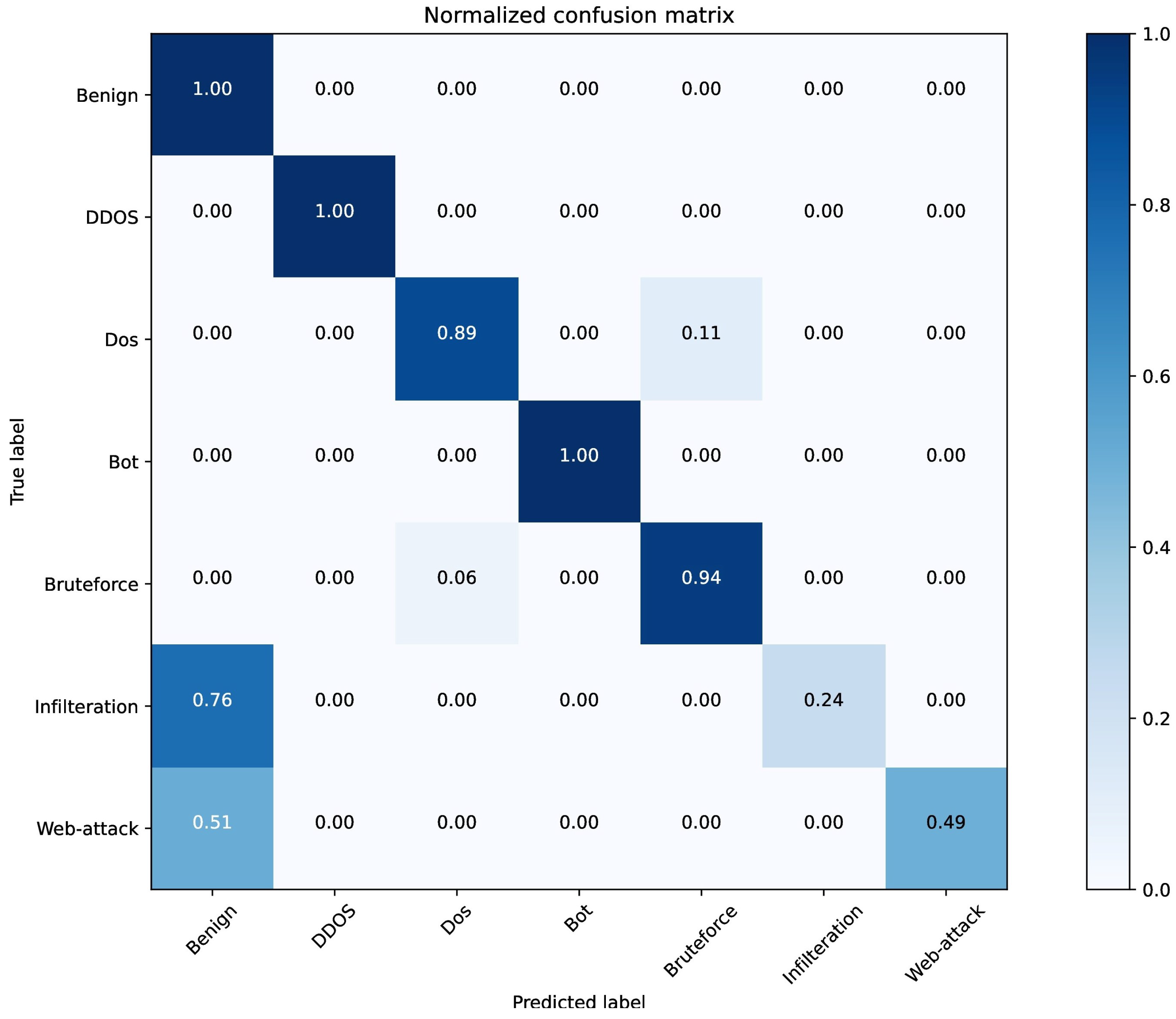

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Benign | 98.73% | 99.95% | 99.34% | 553,839 |
| DDOS | 100% | 100% | 100% | 68,772 |
| Dos | 96.39% | 89.30% | 92.71% | 553,839 |
| Bot | 99.97% | 99.87% | 99.92% | 28,617 |
| Bruteforce | 83.70% | 94.38% | 88.71% | 38,092 |
| Infilteration | 91.92% | 23.63% | 37.59% | 9240 |
| Web-attack | 82.14% | 49.46% | 61.74% | 93 |
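
As a worked check on the per-class results above, the F1-score of each class can be recomputed as the harmonic mean of its precision and recall. The sketch below copies the values from the table; the function name and the 0.05-point tolerance (to absorb rounding in the published figures) are assumptions.

```python
# (precision %, recall %, reported F1 %) per class, copied from the table above.
per_class = {
    "Benign": (98.73, 99.95, 99.34),
    "DDOS": (100.0, 100.0, 100.0),
    "Dos": (96.39, 89.30, 92.71),
    "Bot": (99.97, 99.87, 99.92),
    "Bruteforce": (83.70, 94.38, 88.71),
    "Infilteration": (91.92, 23.63, 37.59),
    "Web-attack": (82.14, 49.46, 61.74),
}


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (works in percent or fractions)."""
    return 2 * precision * recall / (precision + recall)


recomputed = {name: f1_from(p, r) for name, (p, r, _) in per_class.items()}
for name, (p, r, reported) in per_class.items():
    print(f"{name:14s} reported={reported:6.2f} recomputed={recomputed[name]:6.2f}")
```

The harmonic mean is pulled toward the smaller of the two values, which is why Infilteration, with 91.92% precision but only 23.63% recall, ends up with an F1-score below 40%.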

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, Y.; Luktarhan, N.; Shi, Z.; Wu, H. TGA: A Novel Network Intrusion Detection Method Based on TCN, BiGRU and Attention Mechanism. Electronics 2023, 12, 2849. https://doi.org/10.3390/electronics12132849
