A Laser Data Compensation Algorithm Based on Indoor Depth Map Enhancement

Abstract

1. Introduction

- (1) The concept of pseudo-laser data is introduced: the depth map is converted into pseudo-laser data, which are fused with the laser data so that the laser data contain more information and mapping accuracy improves.
- (2) Based on the processed laser data, the data model is enhanced to provide a more accurate initial value for the iterative matching in the SLAM front end.

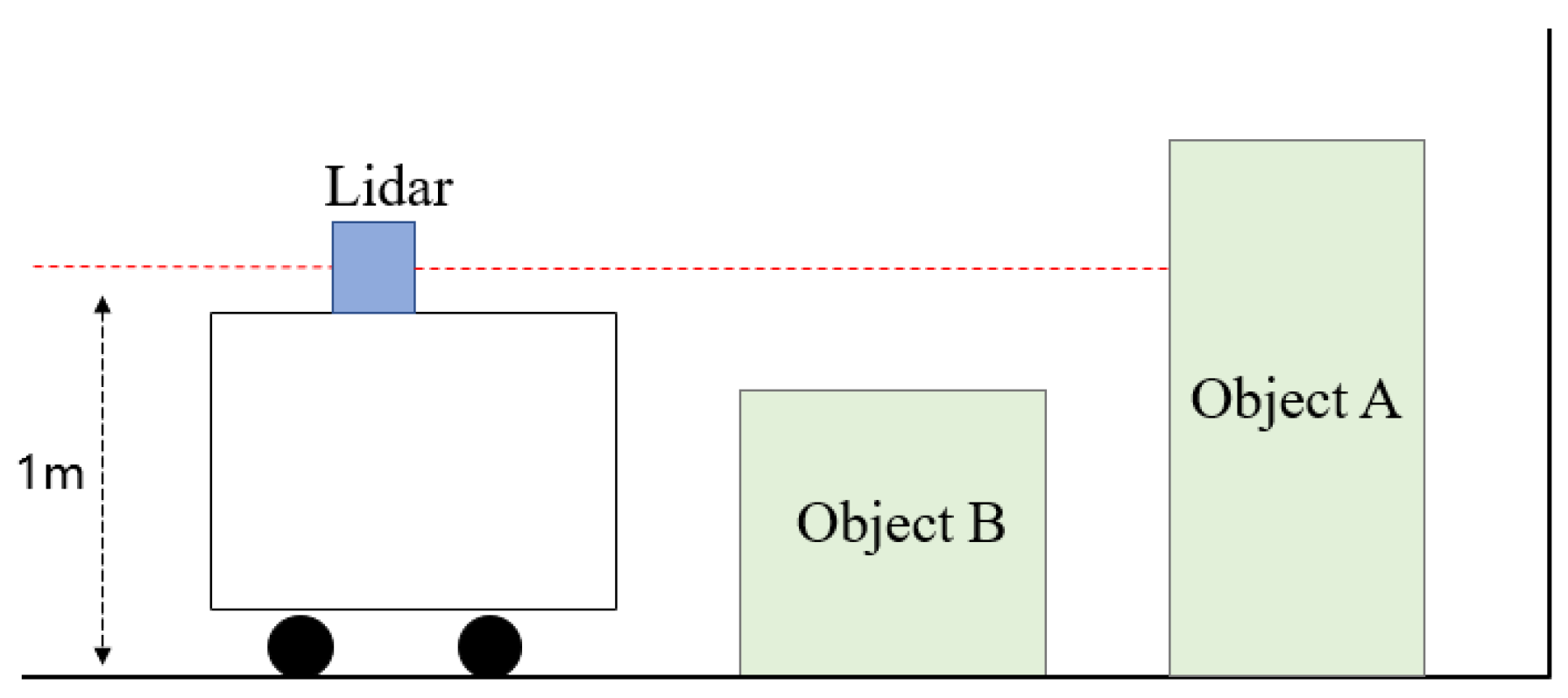

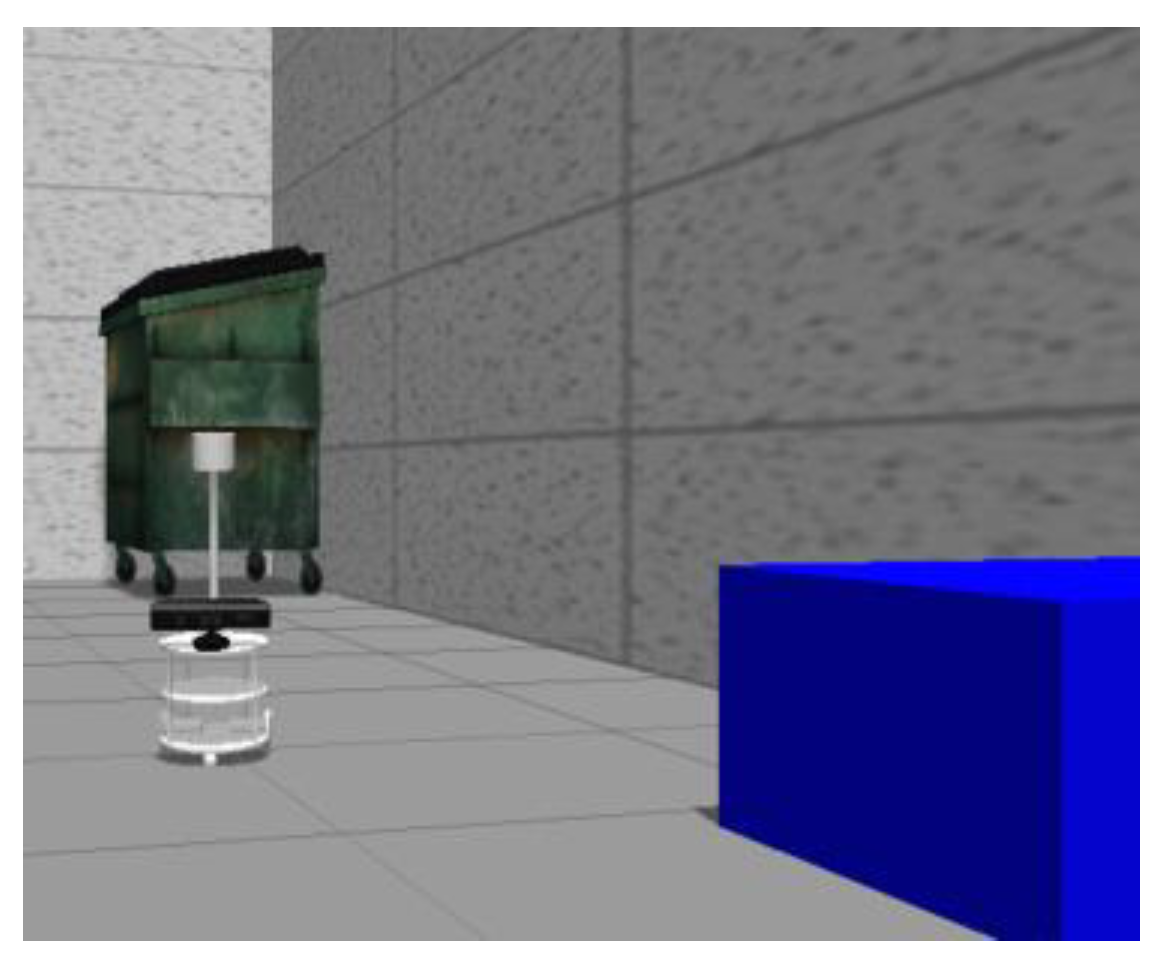

2. Problem Description of Scanning Limitations

3. Overview of the Fused SLAM Algorithm

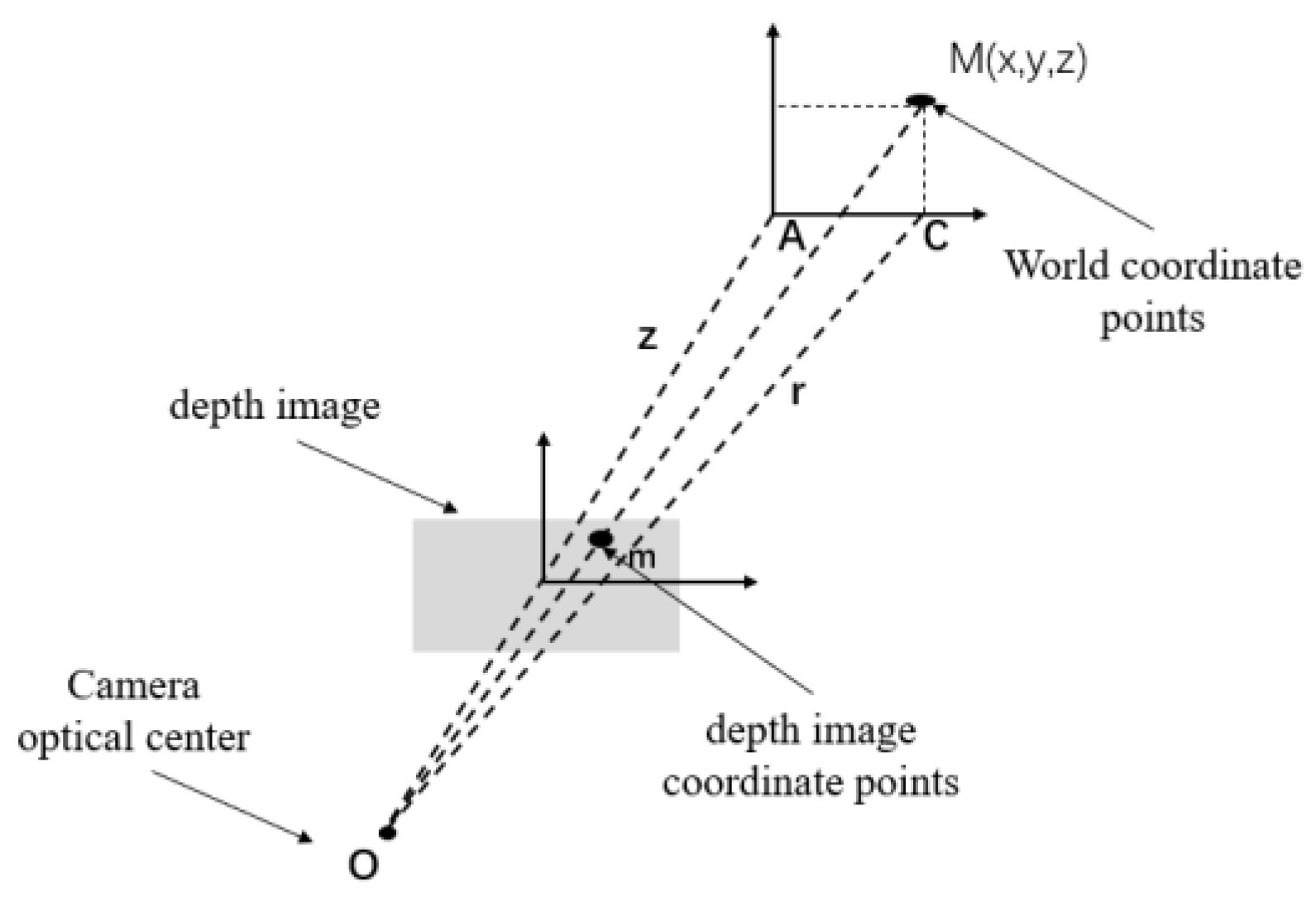

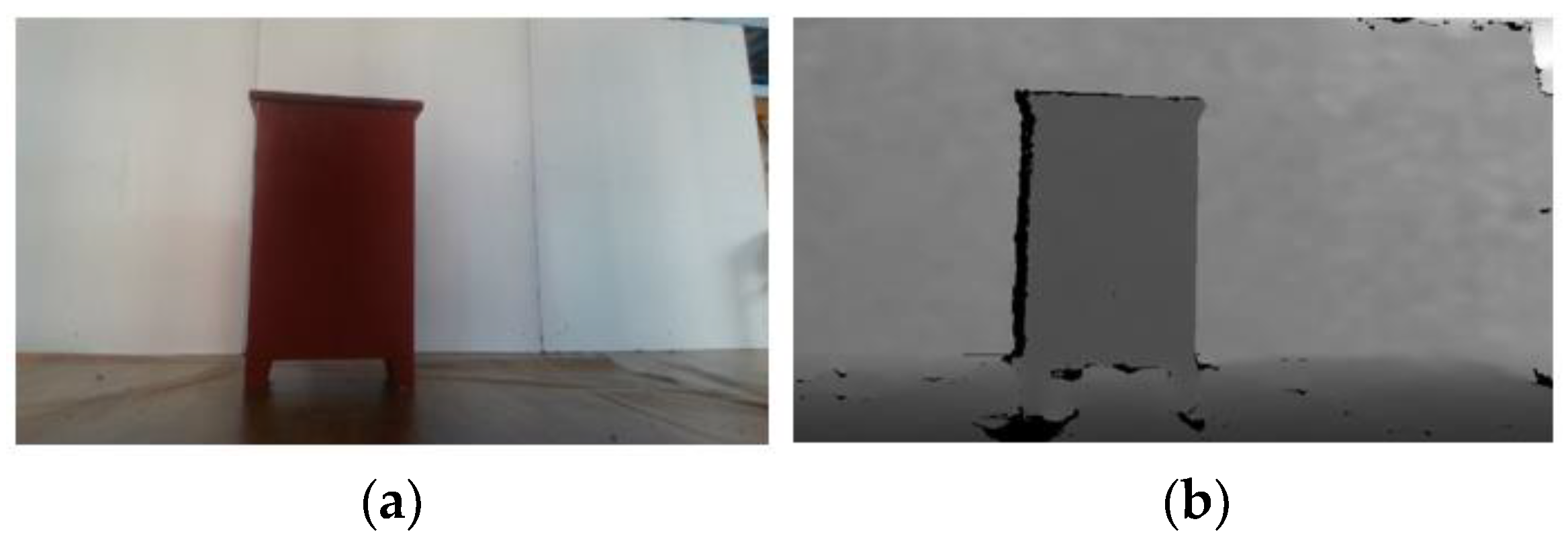

3.1. Pseudo-Laser Data Conversion

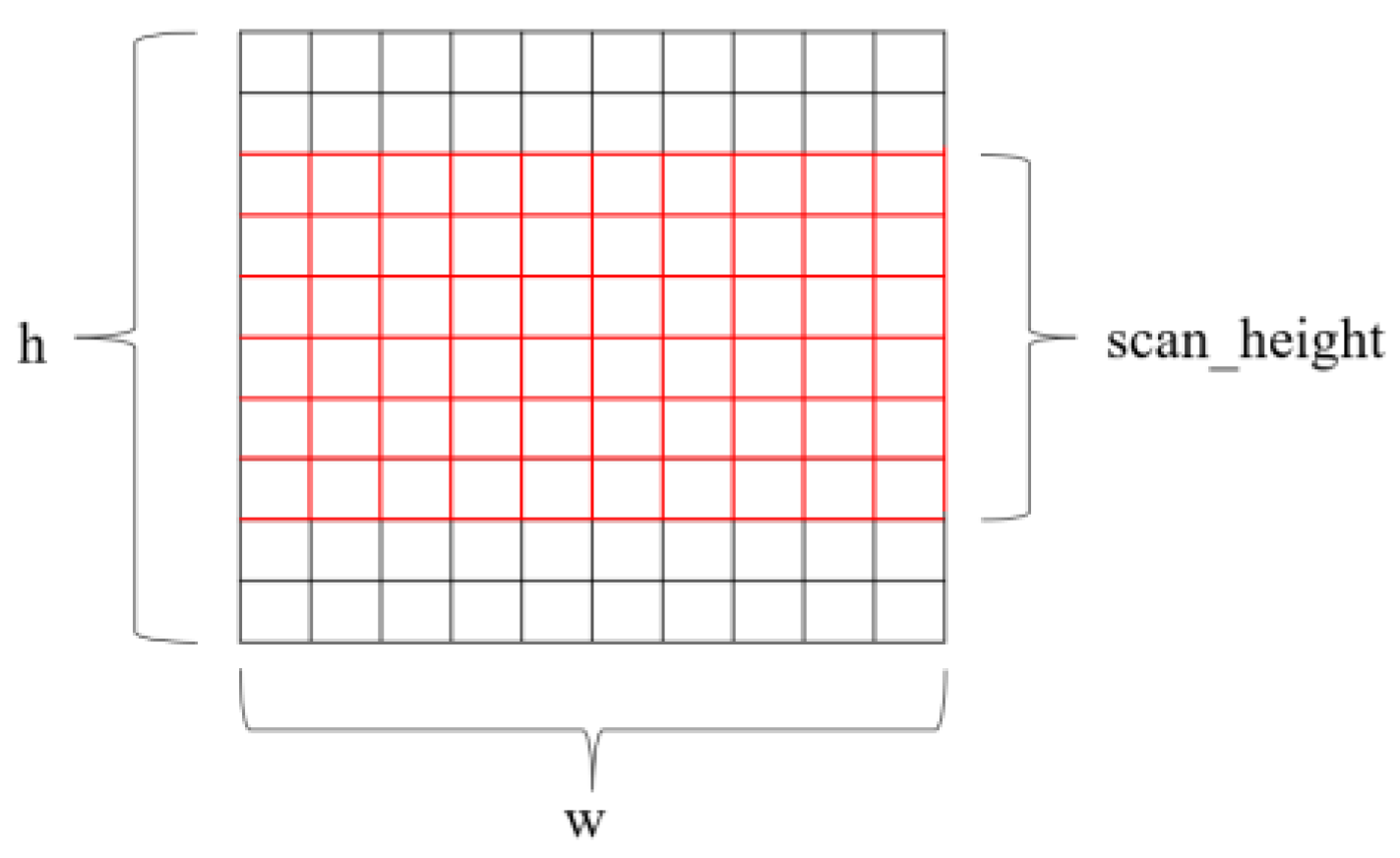

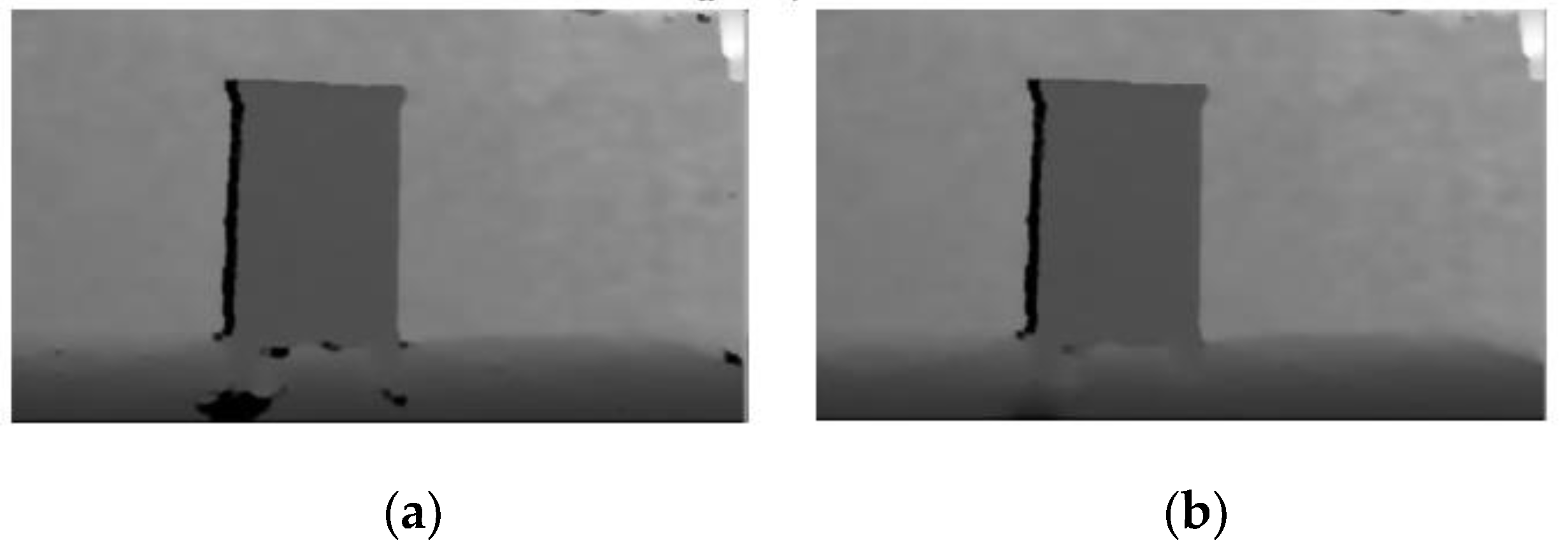
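One common way to turn a depth map into pseudo-laser data is sketched below. It is a hedged illustration, not the authors' procedure. It assumes a pinhole camera with horizontal focal length `fx` and principal point `cx`, depths in metres with 0 meaning "no data", and keeps, for each image column, the nearest valid depth within a band of rows. This is similar in spirit to the ROS `depthimage_to_laserscan` package. The function name, the row band, the 10 m cut-off and the angle sign convention are all assumptions.

```python
import math


def depth_to_pseudo_laser(depth, fx, cx, row_min, row_max, max_range=10.0):
    """Collapse rows [row_min, row_max) of a depth image into a pseudo-laser scan.

    Hypothetical helper (assumed interface, not from the paper).
    depth  -- list of image rows, each a list of depths in metres (0 = no data)
    fx, cx -- horizontal focal length and principal point in pixels
    Returns one (angle_rad, range_m) pair per image column.
    """
    scan = []
    for u in range(len(depth[0])):
        # Valid depths in this column within the chosen row band.
        zs = [depth[v][u] for v in range(row_min, row_max)
              if 0.0 < depth[v][u] <= max_range]
        x = (u - cx) / fx          # normalised horizontal image coordinate
        angle = -math.atan(x)      # assumed convention: left of cx -> positive (CCW)
        if zs:
            z = min(zs)            # nearest obstacle at any height in the band
            rng = z * math.sqrt(1.0 + x * x)  # planar distance from the optical centre
        else:
            rng = math.inf         # no valid depth: report "no return"
        scan.append((angle, rng))
    return scan


if __name__ == "__main__":
    depth = [[2.0, 1.0, 0.0],
             [3.0, 4.0, 0.0]]
    print(depth_to_pseudo_laser(depth, fx=1.0, cx=1.0, row_min=0, row_max=2))
```

Taking the minimum over a band of rows, rather than a single row, is what lets the pseudo-laser scan record obstacles above or below the lidar's scan plane.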

3.2. Depth Map Filtering

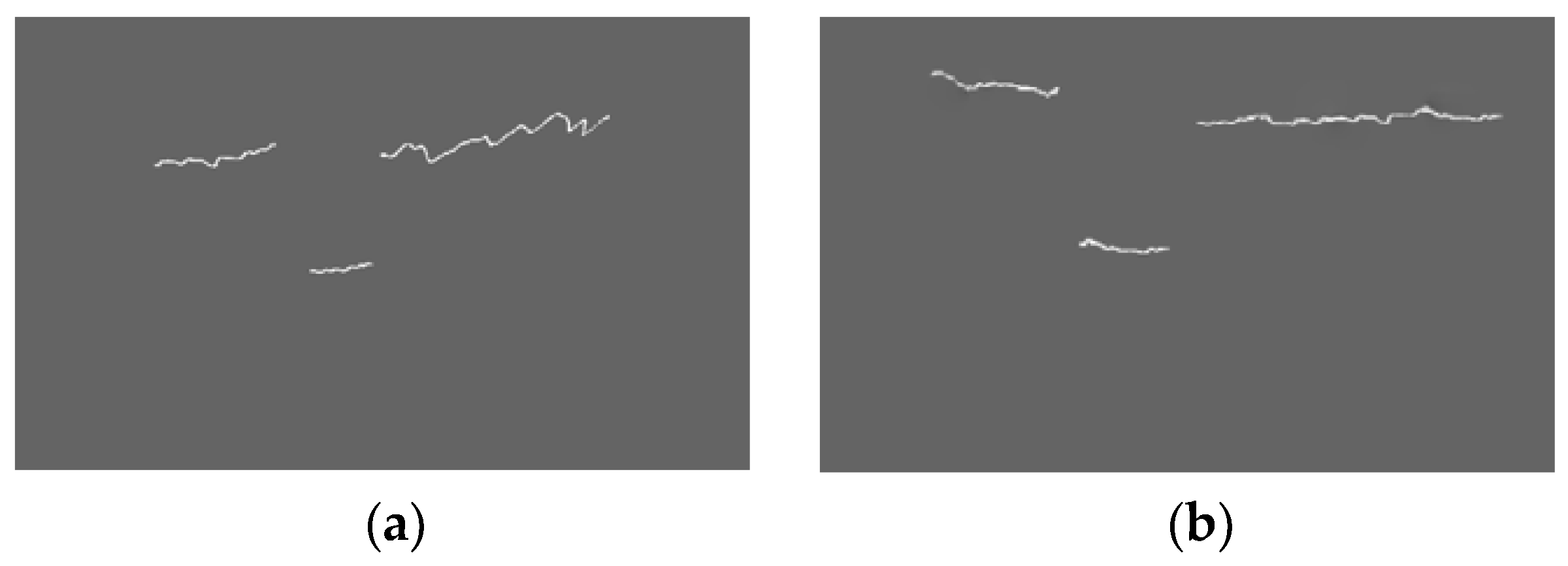

3.3. Correlation of Fused Laser Data

3.4. Laser Data Compensation Processing

| Algorithm 1 Laser Data Compensation Algorithm |
|---|
| Input: pseudo-laser data sense[k]; laser data lidar[k] |
| Output: fused laser data lidar[k] |
| while k < 1280 do |
| if k < 640 then |
| … = 180 …, … = …, … = … |
| if … < lidar[k] then lidar[k] = … |
| else |
| … = 18° − …, … = …, … = … |
| if … < lidar[k] then lidar[k] = … |
| end if |
| end while |
| return lidar |
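The comparison step in Algorithm 1 (`if … < lidar[k] then lidar[k] = …`) suggests a keep-the-closer-return rule. The sketch below illustrates that rule only; it is not the authors' implementation. It assumes a 360° lidar scan with uniform angular spacing, pseudo-laser points already expressed as (angle, range) pairs in the lidar frame, and nearest-beam index mapping in place of the paper's own index formulas. `fuse_scans` and its parameters are hypothetical names.

```python
import math


def fuse_scans(lidar, angle_min, angle_increment, pseudo):
    """Merge pseudo-laser points into a lidar scan (hypothetical helper).

    lidar           -- ranges in metres, one per beam, assumed to span 360 degrees
    angle_min       -- angle of beam 0 in radians
    angle_increment -- angular step between beams in radians
    pseudo          -- iterable of (angle_rad, range_m) pairs in the lidar frame
    """
    fused = list(lidar)  # copy so the caller's scan is left untouched
    n = len(fused)
    for angle, rng in pseudo:
        if not math.isfinite(rng) or rng <= 0.0:
            continue  # skip invalid depth returns
        # Wrap the angle onto the scan and pick the nearest beam index.
        offset = (angle - angle_min) % (2.0 * math.pi)
        k = int(round(offset / angle_increment)) % n
        # Keep the closer return: an obstacle seen by the camera but
        # outside the lidar's scan plane shortens that beam.
        if rng < fused[k]:
            fused[k] = rng
    return fused


if __name__ == "__main__":
    scan = [2.0] * 8
    scan[6] = math.inf  # beam with no lidar return
    pseudo = [(math.pi, 0.9), (math.pi / 2, 3.0), (-math.pi / 2, 1.5)]
    print(fuse_scans(scan, 0.0, math.pi / 4, pseudo))
    # [2.0, 2.0, 2.0, 2.0, 0.9, 2.0, 1.5, 2.0]
```

In the usage example, the 0.9 m camera return replaces the 2.0 m beam behind it, the 3.0 m return is ignored because the lidar already sees something closer, and the 1.5 m return fills a beam that had no lidar echo.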

4. Simulation Analysis

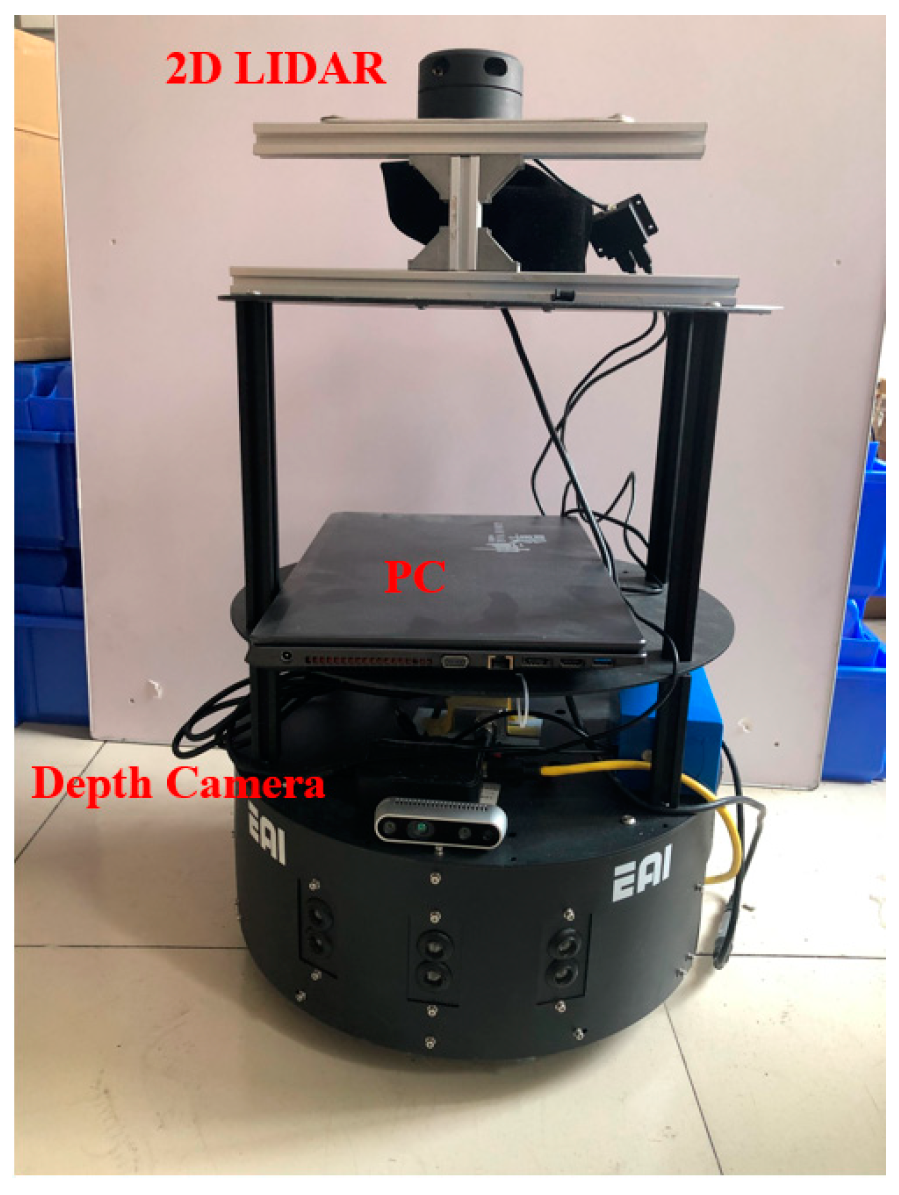

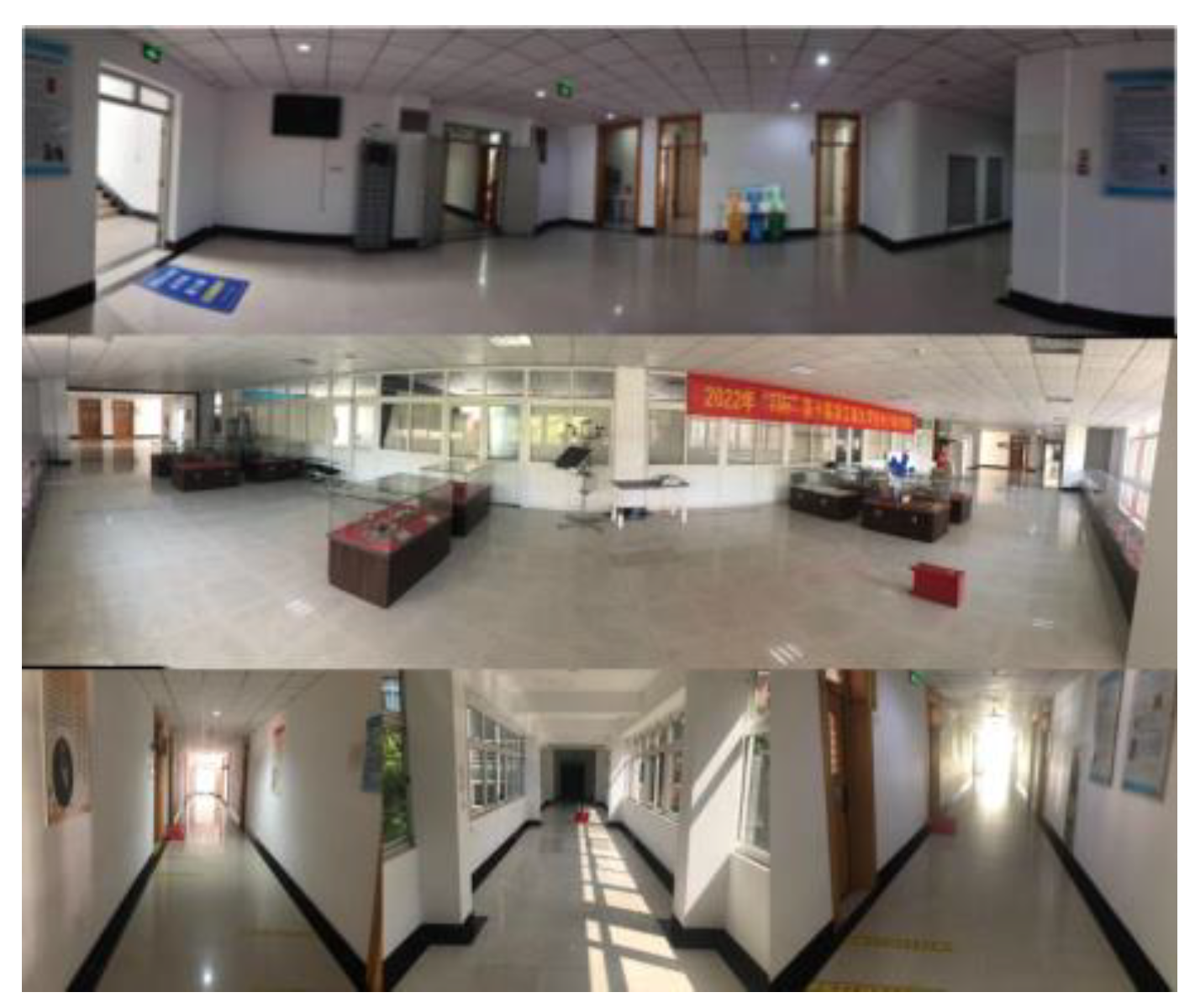

5. Experimental Evaluation

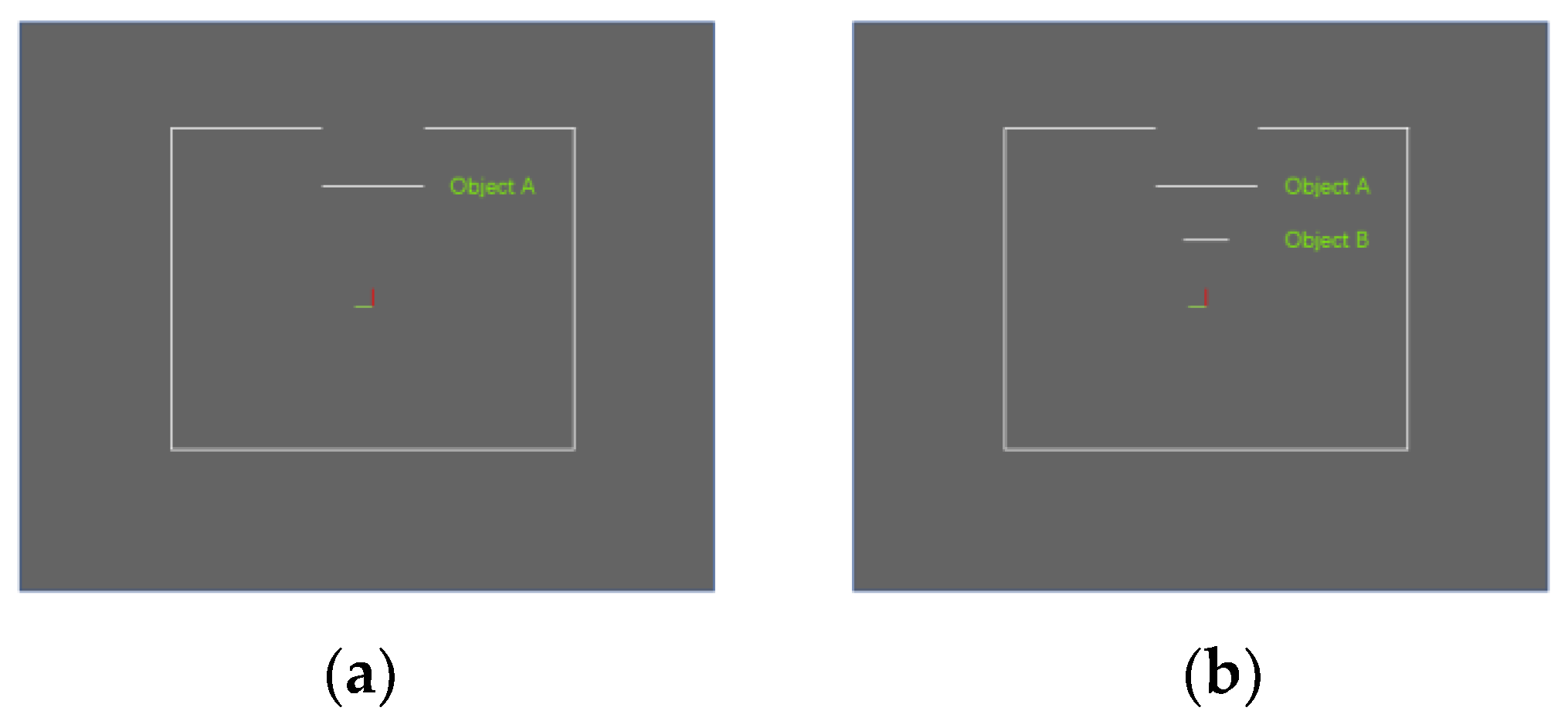

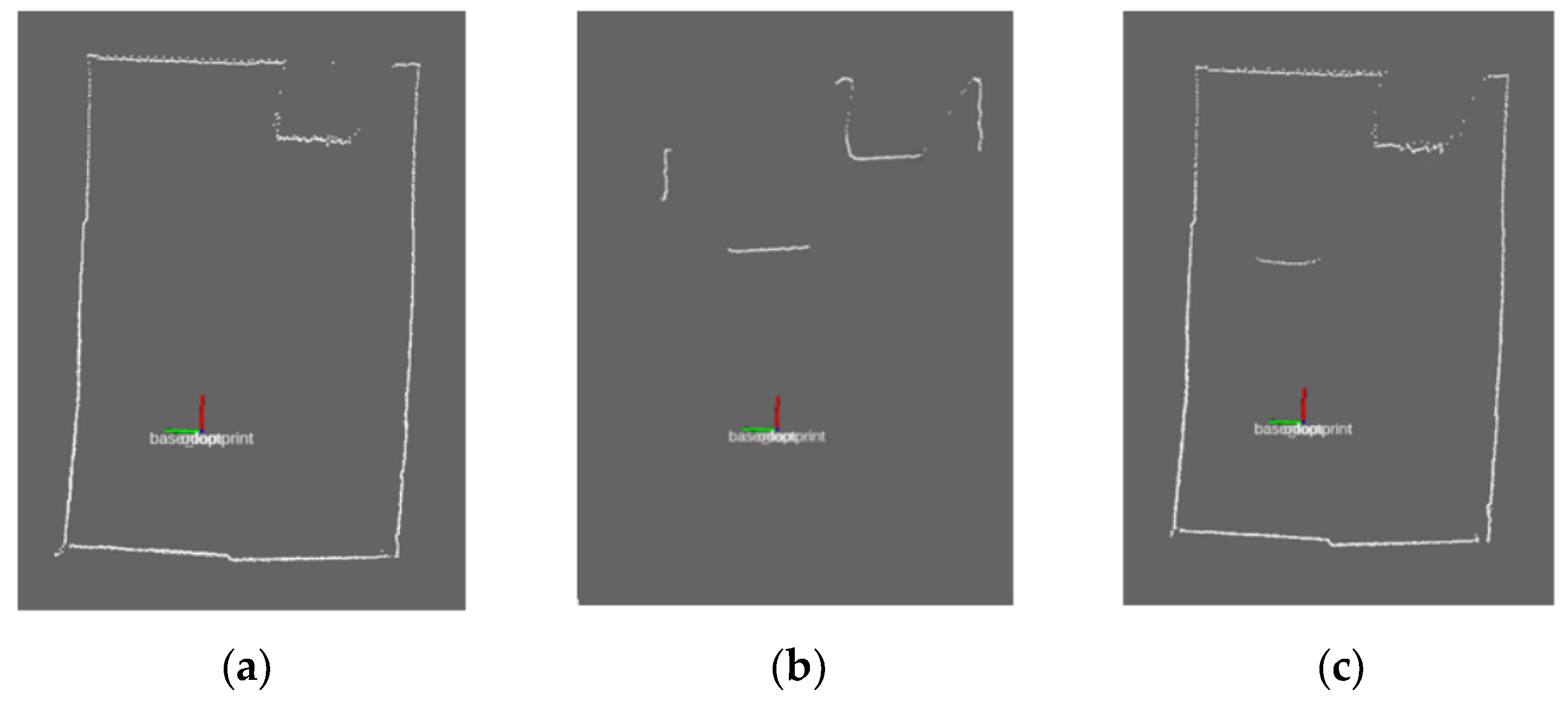

5.1. Experiments on Fused Laser Data

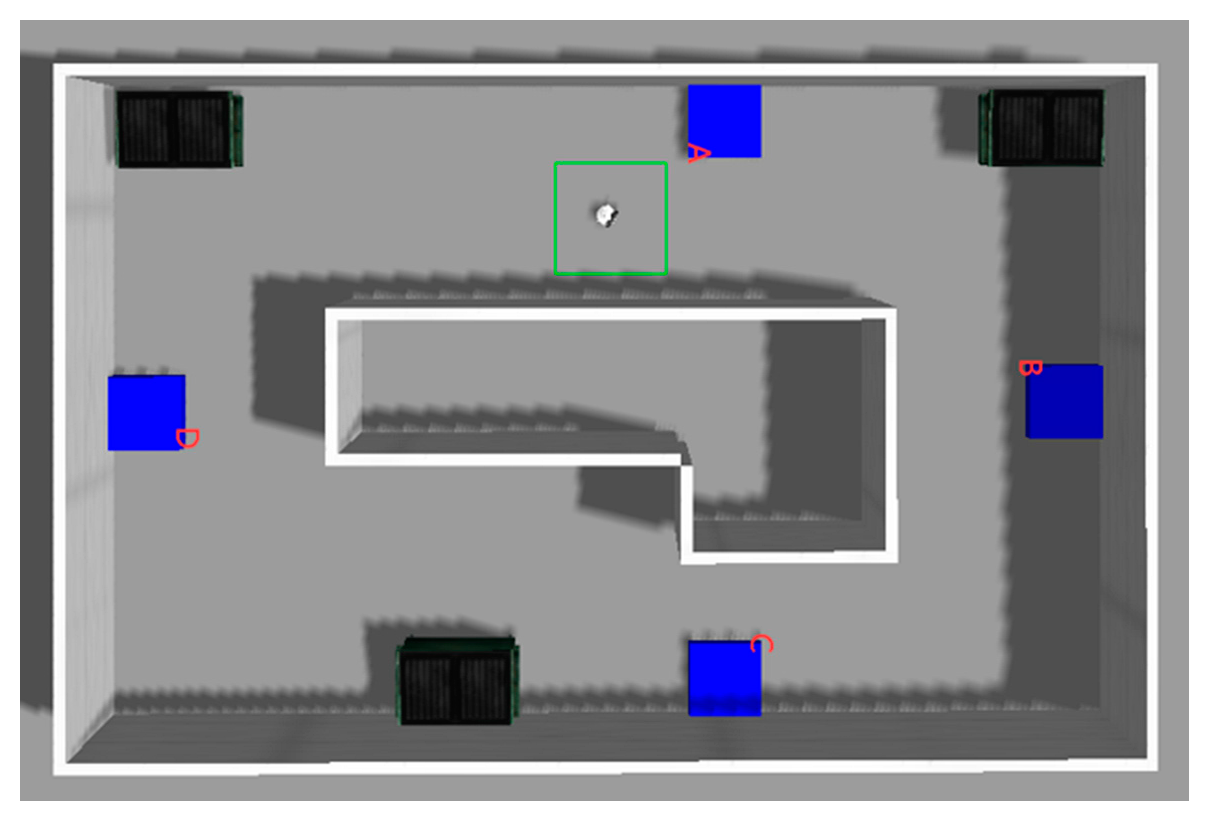

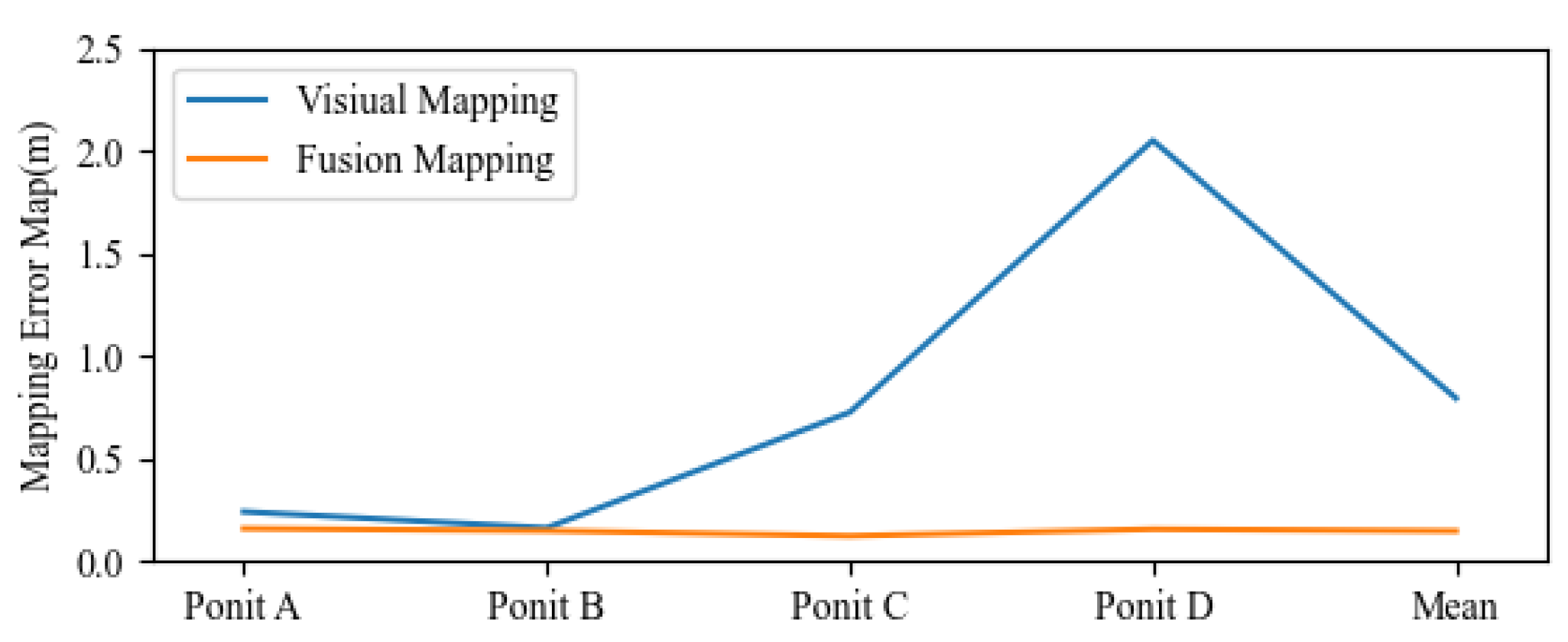

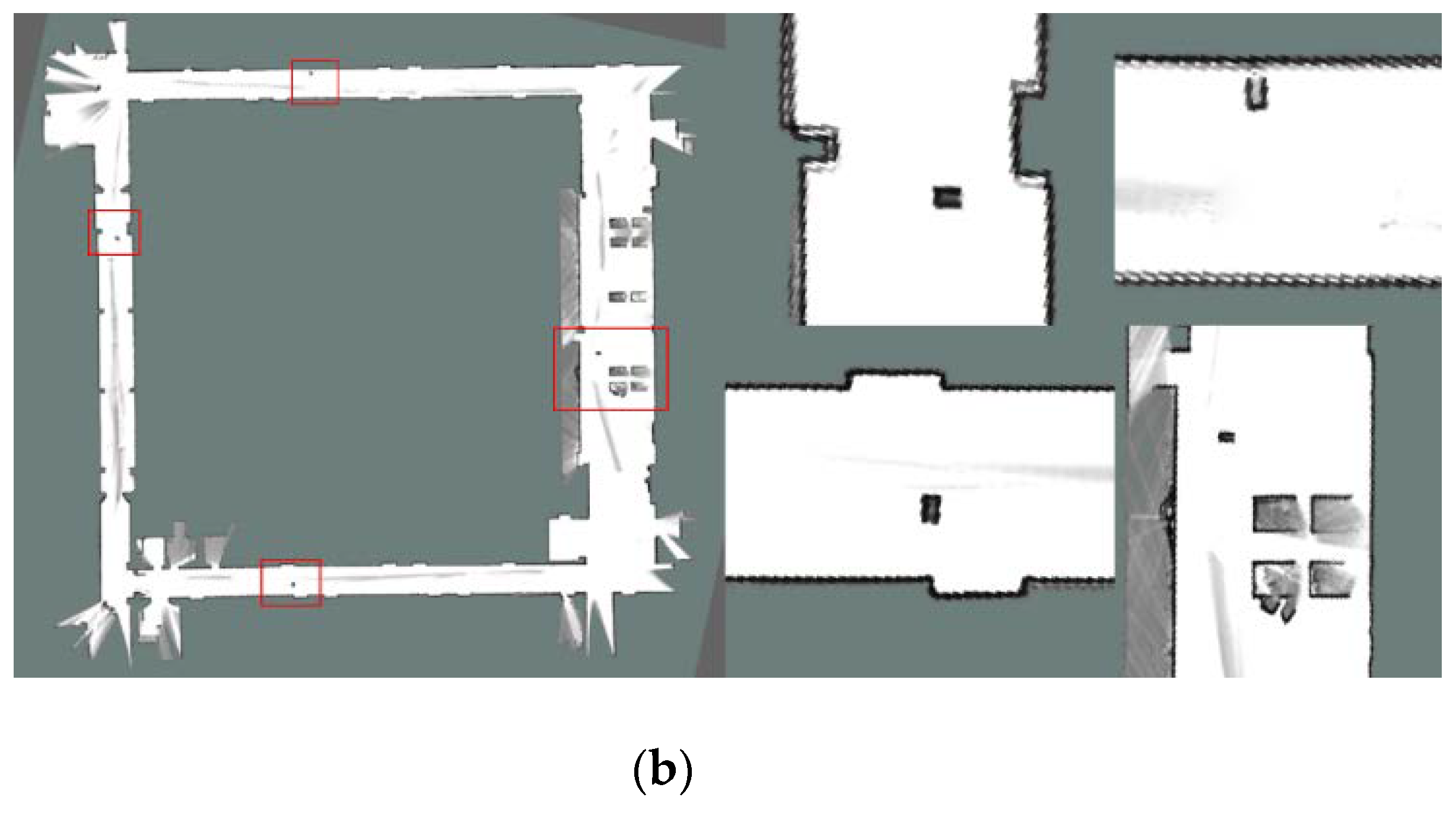

5.2. Experiments on Fused SLAM Mapping

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Point | Real Coordinates | Measured Coordinates | Error/m |
|---|---|---|---|
| A | (1.045, 0.746) | (1.271, 0.830) | 0.241 |
| B | (5.755, −2.133) | (5.891, −2.079) | 0.146 |
| C | (2.048, −5.954) | (2.688, −6.247) | 0.704 |
| D | (−5.914, −3.256) | (−4.153, −3.450) | 1.772 |
| Mean | | | 0.716 |
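The Error/m column of the table above agrees with the planar Euclidean distance between each real and measured coordinate pair, and the Mean row is the arithmetic mean of the four errors. The short check below recomputes both from the listed coordinates; `position_errors` is a hypothetical helper name.

```python
import math


def position_errors(real, measured):
    """Planar Euclidean error for each point, plus the mean error (hypothetical helper)."""
    errors = [math.dist(r, m) for r, m in zip(real, measured)]
    return errors, sum(errors) / len(errors)


real = [(1.045, 0.746), (5.755, -2.133), (2.048, -5.954), (-5.914, -3.256)]
measured = [(1.271, 0.830), (5.891, -2.079), (2.688, -6.247), (-4.153, -3.450)]
errors, mean = position_errors(real, measured)
print([round(e, 3) for e in errors], round(mean, 3))
# [0.241, 0.146, 0.704, 1.772] 0.716
```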

| Point | Real Coordinates | Measured Coordinates | Error/m |
|---|---|---|---|
| A | (1.045, 0.746) | (1.182, 0.765) | 0.138 |
| B | (5.755, −2.133) | (5.899, −2.129) | 0.134 |
| C | (2.048, −5.954) | (2.160, −5.968) | 0.113 |
| D | (−5.914, −3.256) | (−5.774, −3.290) | 0.144 |
| Mean | | | 0.132 |

| Angle | Actual Distance/m | Distance before Fusion/m | Error before Fusion/m | Distance after Fusion/m | Error after Fusion/m |
|---|---|---|---|---|---|
| 0° | 0.720 | 0.684 | −0.036 | 0.682 | −0.028 |
| 36° | 0.781 | 0.804 | 0.023 | 0.805 | 0.024 |
| 72° | 0.838 | 0.789 | −0.049 | 0.791 | −0.047 |
| 108° | 0.776 | 0.715 | −0.061 | 0.718 | −0.058 |
| 144° | 1.135 | 1.086 | −0.049 | 1.081 | −0.054 |
| 180° | 1.013 | 2.062 | 1.049 | 0.932 | −0.081 |
| 216° | 2.041 | 1.997 | −0.044 | 1.979 | −0.062 |
| 252° | 1.303 | 1.256 | −0.047 | 1.252 | −0.051 |
| 288° | 1.234 | 1.188 | −0.046 | 1.183 | −0.051 |
| 324° | 0.862 | 0.910 | 0.048 | 0.907 | 0.045 |
| MAE/m | | | 0.1442 | | 0.0501 |
| RMSE/m | | | 0.3345 | | 0.0524 |
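The MAE and RMSE rows summarise the signed errors over the ten beam angles: MAE is the mean absolute error, and RMSE is the square root of the mean squared error. Because RMSE squares each error, the single 1.049 m error at 180° before fusion dominates it. This is why RMSE before fusion (0.3345 m) is far above MAE (0.1442 m), while after fusion the two nearly coincide. The minimal check below applies both formulas to the after-fusion error column; `mae_rmse` is a hypothetical helper name.

```python
import math


def mae_rmse(errors):
    """Mean absolute error and root-mean-square error of signed errors (hypothetical helper)."""
    mae = sum(abs(e) for e in errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return mae, rmse


after = [-0.028, 0.024, -0.047, -0.058, -0.054, -0.081, -0.062, -0.051, -0.051, 0.045]
mae, rmse = mae_rmse(after)
print(round(mae, 4), round(rmse, 4))  # 0.0501 0.0524
```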

| Cartographer Algorithm | Cartographer Compensation Algorithm |
|---|---|
| 93.57% | 97.68% |
| 95.56% | 96.28% |
| 97.06% | 97.32% |
| 96.78% | 97.29% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chi, X.; Meng, Q.; Wu, Q.; Tian, Y.; Liu, H.; Zeng, P.; Zhang, B.; Zhong, C. A Laser Data Compensation Algorithm Based on Indoor Depth Map Enhancement. Electronics 2023, 12, 2716. https://doi.org/10.3390/electronics12122716

Chi X, Meng Q, Wu Q, Tian Y, Liu H, Zeng P, Zhang B, Zhong C. A Laser Data Compensation Algorithm Based on Indoor Depth Map Enhancement. Electronics. 2023; 12(12):2716. https://doi.org/10.3390/electronics12122716

Chicago/Turabian Style: Chi, Xiaoni, Qinyuan Meng, Qiuxuan Wu, Yangyang Tian, Hao Liu, Pingliang Zeng, Botao Zhang, and Chaoliang Zhong. 2023. "A Laser Data Compensation Algorithm Based on Indoor Depth Map Enhancement" Electronics 12, no. 12: 2716. https://doi.org/10.3390/electronics12122716

APA Style: Chi, X., Meng, Q., Wu, Q., Tian, Y., Liu, H., Zeng, P., Zhang, B., & Zhong, C. (2023). A Laser Data Compensation Algorithm Based on Indoor Depth Map Enhancement. Electronics, 12(12), 2716. https://doi.org/10.3390/electronics12122716