MSGSA: Multi-Scale Guided Self-Attention Network for Crowd Counting

Abstract

1. Introduction

- (1)

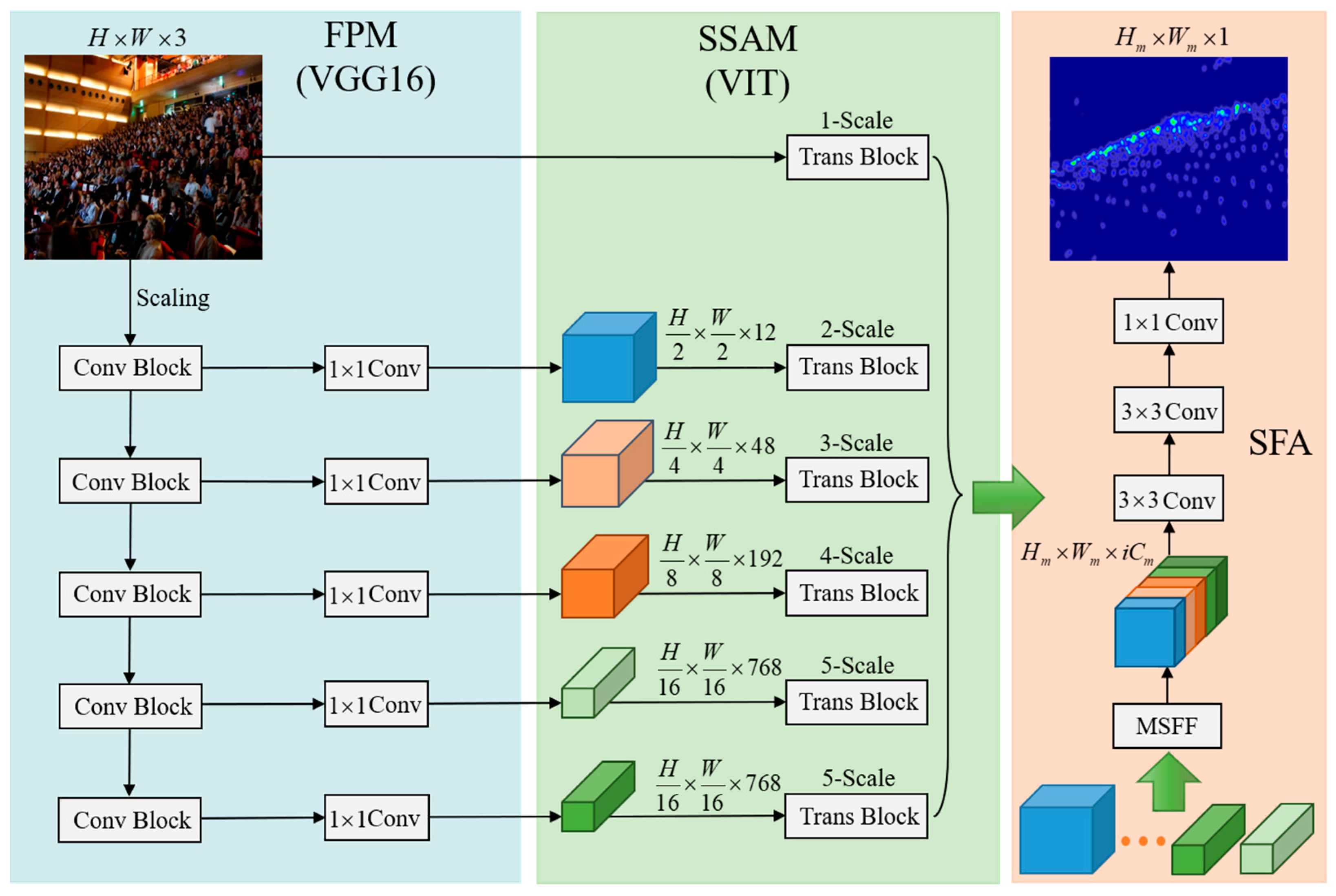

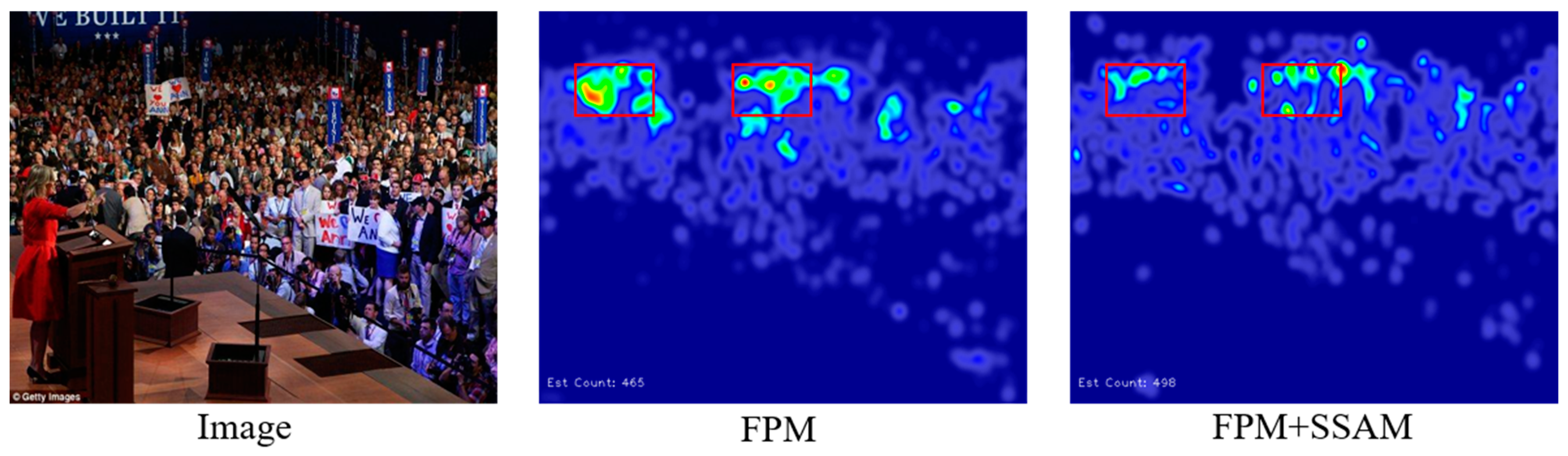

- We design the FPM and the SSAM, which facilitate the interaction between local features and global semantic information. As a result, our approach effectively mitigates the impact of scale variations and complex backgrounds in crowd images.

- (2)

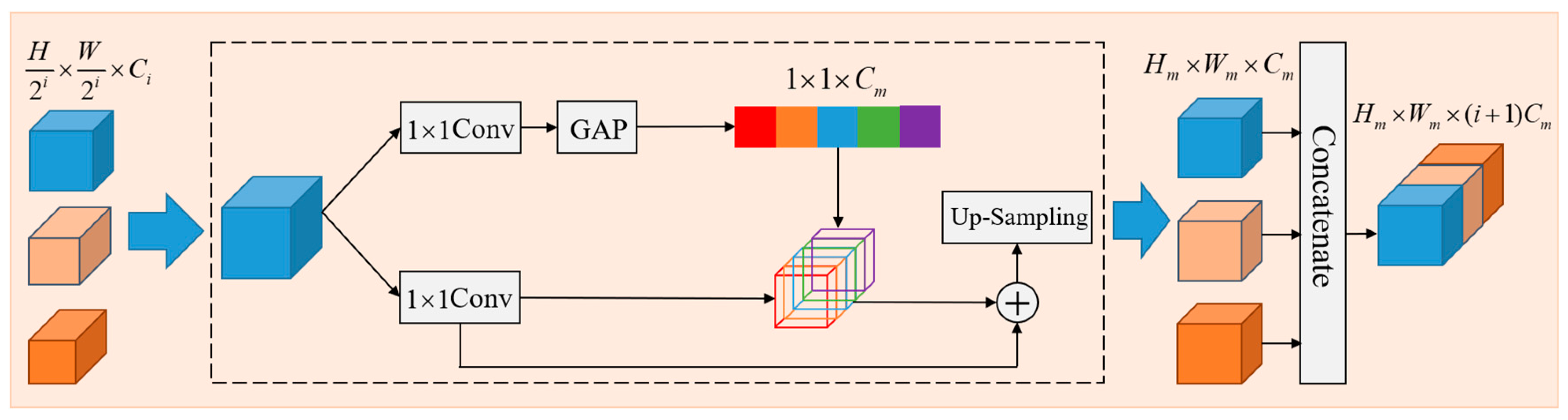

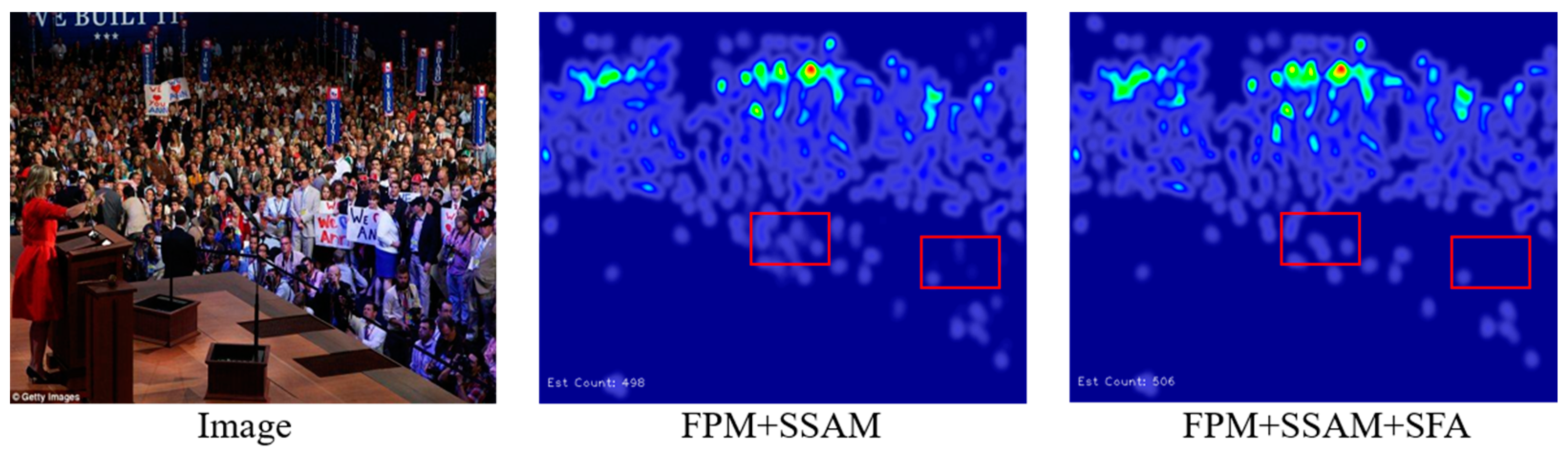

- We introduce the SFA that combines features from different scales to create a scale-aware feature representation. By integrating the global and local information of multi-scale features, the SFA generates accurate density maps that reflect the spatial distribution of crowds.

- (3)

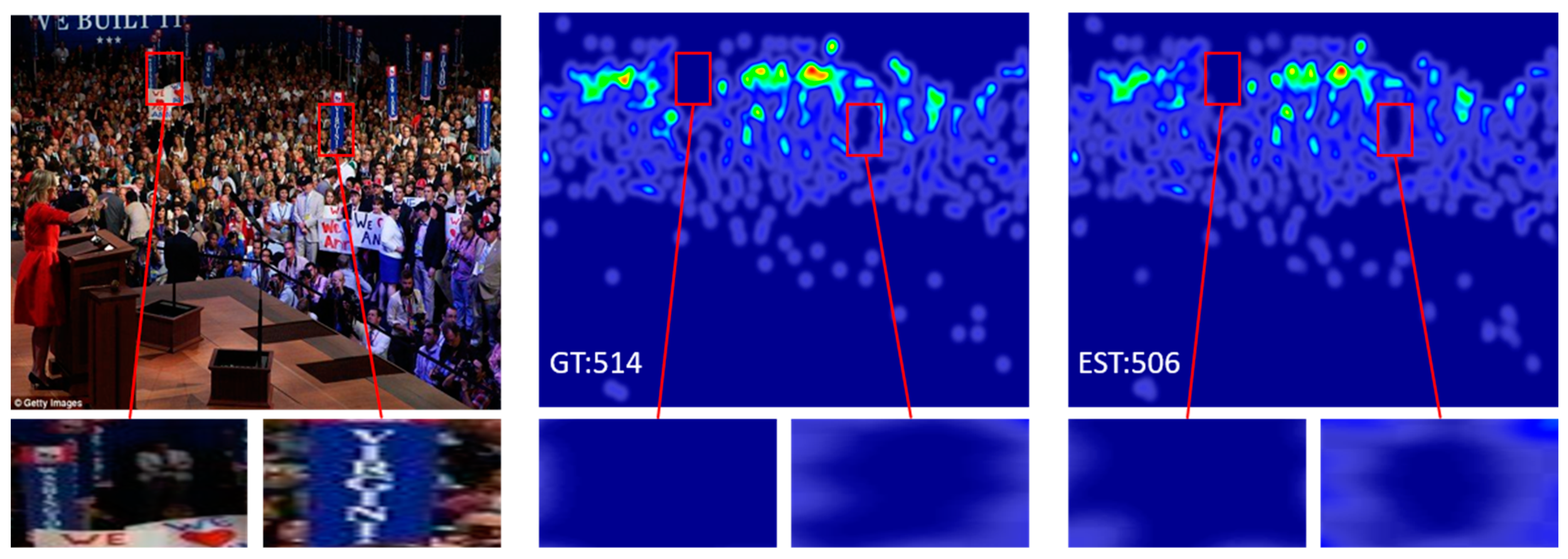

- The proposed method achieved remarkable performance on various benchmark datasets, surpassing most existing methods in terms of accuracy and quality of generated density maps. This state-of-the-art performance demonstrates the effectiveness and potential of our approach in crowd counting.
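
The density maps mentioned in the contributions can be illustrated with a minimal sketch. It assumes the common crowd-counting convention (not confirmed by the text reproduced here) that each annotated head contributes a unit-mass Gaussian, so the map sums to the crowd count. The function names, kernel radius, and sigma are hypothetical choices for illustration and are not the authors' implementation.

```python
import math


def gaussian_kernel(radius, sigma):
    """Return a (2*radius+1) x (2*radius+1) Gaussian kernel that sums to 1."""
    size = 2 * radius + 1
    kernel = [
        [math.exp(-((x - radius) ** 2 + (y - radius) ** 2) / (2 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]
    total = sum(sum(row) for row in kernel)
    return [[value / total for value in row] for row in kernel]


def density_map(points, height, width, radius=3, sigma=1.5):
    """Add one unit-mass Gaussian per annotated head position (row, col).

    Mass falling outside the image is dropped, so heads closer than
    `radius` pixels to the border contribute slightly less than 1.
    """
    kernel = gaussian_kernel(radius, sigma)
    dmap = [[0.0] * width for _ in range(height)]
    for r, c in points:
        for dy in range(-radius, radius + 1):
            for dx in range(-radius, radius + 1):
                y, x = r + dy, c + dx
                if 0 <= y < height and 0 <= x < width:
                    dmap[y][x] += kernel[dy + radius][dx + radius]
    return dmap


def count_from_density(dmap):
    """The estimated count is the integral (sum) of the density map."""
    return sum(sum(row) for row in dmap)


# Three hypothetical head annotations, all far enough from the border.
heads = [(10, 10), (12, 30), (25, 18)]
dmap = density_map(heads, height=40, width=40)
estimated_count = count_from_density(dmap)
print(f"estimated count: {estimated_count:.3f}")
```

Because every kernel is normalized, summing the map recovers the number of annotated heads, which is why a network that regresses such a map can be evaluated by count alone.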

2. Related Work

2.1. Traditional Crowd Counting

2.2. Crowd Counting Based on CNN

2.3. Crowd Counting Based on Transformer

3. Methodology

3.1. Network Architecture

3.2. Feature Pyramid Module

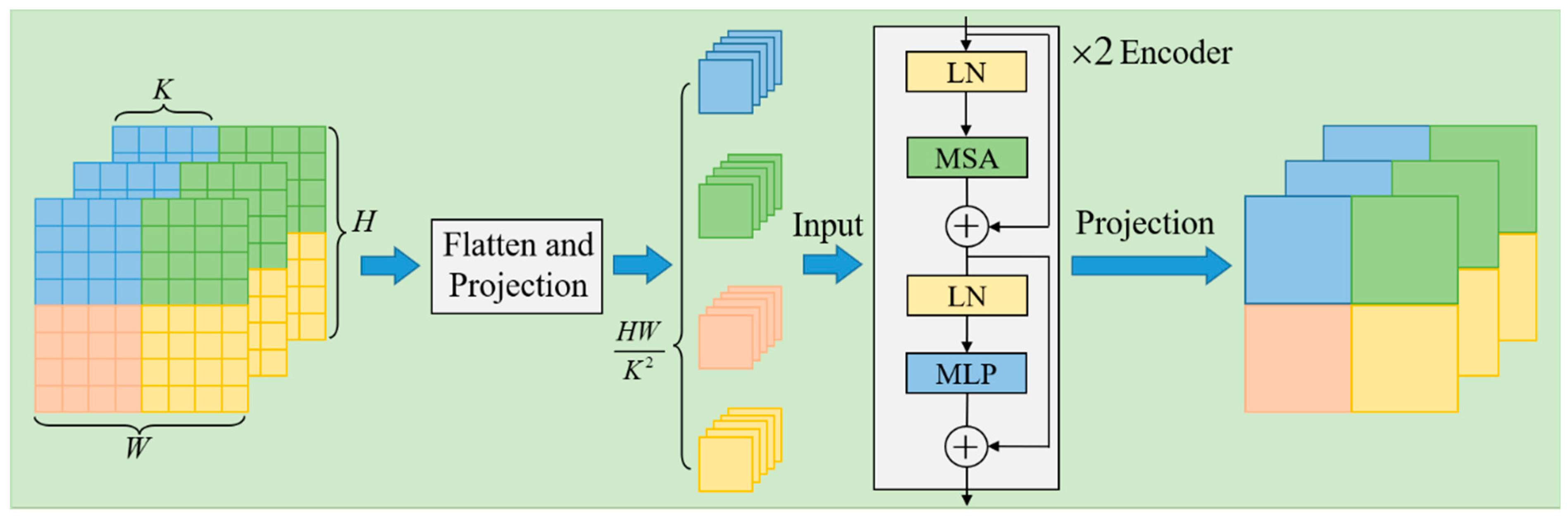

3.3. Scale Self-Attention Module

3.4. Scale-Aware Feature Aggregation Module

3.5. Loss Functions

4. Experiments and Discussion

4.1. Datasets

4.2. Performance Metrics
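
The result tables in this article report MAE and MSE. As a hedged sketch, the snippet below assumes the definitions conventionally used in the crowd-counting literature: MAE is the mean absolute difference between predicted and ground-truth counts, and the value reported as MSE is the square root of the mean squared difference. The function names and the sample counts are hypothetical and are not taken from the paper.

```python
import math


def mae(true_counts, pred_counts):
    """Mean absolute error over per-image counts."""
    n = len(true_counts)
    return sum(abs(t - p) for t, p in zip(true_counts, pred_counts)) / n


def mse(true_counts, pred_counts):
    """Root of the mean squared error (called 'MSE' by convention in this field)."""
    n = len(true_counts)
    squared = sum((t - p) ** 2 for t, p in zip(true_counts, pred_counts))
    return math.sqrt(squared / n)


# Hypothetical ground-truth and predicted counts for three test images.
true_counts = [100, 250, 40]
pred_counts = [110, 240, 46]

print(f"MAE = {mae(true_counts, pred_counts):.3f}")
print(f"MSE = {mse(true_counts, pred_counts):.3f}")
```

Under these definitions MAE reflects average counting accuracy, while MSE penalizes large per-image errors more heavily and is therefore often read as a robustness indicator.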

4.3. Comparison with Different Backbones

4.4. Comparison with the State-of-the-Art

4.5. Ablation Studies

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Hassen, K.B.A.; Machado, J.J.; Tavares, J.M.R. Convolutional neural networks and heuristic methods for crowd counting: A systematic review. Sensors 2022, 22, 5286.

2. Li, B.; Huang, H.; Zhang, A.; Liu, P.; Liu, C. Approaches on crowd counting and density estimation: A review. Pattern Anal. Appl. 2021, 24, 853–874.

3. Ilyas, N.; Shahzad, A.; Kim, K. Convolutional-neural network-based image crowd counting: Review, categorization, analysis, and performance evaluation. Sensors 2019, 20, 43.

4. Shi, Z.; Zhang, L.; Liu, Y.; Cao, X.; Ye, Y.; Cheng, M.-M.; Zheng, G. Crowd counting with deep negative correlation learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5382–5390.

5. Zhang, K.; Wang, H.; Liu, W.; Li, M.; Lu, J.; Liu, Z. An efficient semi-supervised manifold embedding for crowd counting. Appl. Soft Comput. 2020, 96, 106634.

6. Reddy, M.K.K.; Hossain, M.A.; Rochan, M.; Wang, Y. Few-shot scene adaptive crowd counting using meta-learning. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, CO, USA, 1–5 March 2020; pp. 2814–2823.

7. Sindagi, V.A.; Yasarla, R.; Patel, V.M. JHU-CROWD++: Large-scale crowd counting dataset and a benchmark method. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2594–2609.

8. Bai, H.; Mao, J.; Chan, S.H.G. A survey on deep learning-based single image crowd counting: Network design, loss function and supervisory signal. Neurocomputing 2022, 508, 1–18.

9. Fan, Z.; Zhang, H.; Zhang, Z.; Lu, G.; Zhang, Y.; Wang, Y. A survey of crowd counting and density estimation based on convolutional neural network. Neurocomputing 2022, 472, 224–251.

10. Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 743.

11. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

12. Chen, K.; Gong, S.; Xiang, T.; Chen, C. Cumulative attribute space for age and crowd density estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2467–2474.

13. Shi, Y.; Sang, J.; Wu, Z.; Wang, F.; Liu, X.; Xia, X.; Sang, N. MGSNet: A multi-scale and gated spatial attention network for crowd counting. Appl. Intell. 2022, 52, 15436–15446.

14. Wang, F.; Sang, J.; Wu, Z.; Liu, Q.; Sang, N. Hybrid attention network based on progressive embedding scale-context for crowd counting. Inf. Sci. 2022, 591, 306–318.

15. Liu, L.; Chen, J.; Wu, H.; Li, G.; Li, C.; Lin, L. Cross-modal collaborative representation learning and a large-scale RGBT benchmark for crowd counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 4823–4833.

16. Jiang, X.; Zhang, L.; Xu, M.; Zhang, T.; Lv, P.; Zhou, B.; Yang, X.; Pang, Y. Attention scaling for crowd counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4706–4715.

17. Miao, Y.; Lin, Z.; Ding, G.; Han, J. Shallow feature based dense attention network for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11765–11772.

18. Chen, B.; Yan, Z.; Li, K.; Li, P.; Wang, B.; Zuo, W.; Zhang, L. Variational attention: Propagating domain-specific knowledge for multi-domain learning in crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16065–16075.

19. Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 833–841.

20. Sam, D.B.; Surya, S.; Babu, R.V. Switching convolutional neural network for crowd counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5744–5752.

21. Yang, Y.; Li, G.; Wu, Z.; Su, L.; Huang, Q.; Sebe, N. Reverse perspective network for perspective-aware object counting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4374–4383.

22. Song, Q.; Wang, C.; Jiang, Z.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wu, Y. Rethinking counting and localization in crowds: A purely point-based framework. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3365–3374.

23. Cheng, J.; Xiong, H.; Cao, Z.; Lu, H. Decoupled two-stage crowd counting and beyond. IEEE Trans. Image Process. 2021, 30, 2862–2875.

24. Liu, Y.; Cao, G.; Ge, Z.; Hu, Y. Crowd counting method via a dynamic-refined density map network. Neurocomputing 2022, 497, 191–203.

25. Liang, D.; Chen, X.; Xu, W.; Zhou, Y.; Bai, X. TransCrowd: Weakly-supervised crowd counting with transformers. Sci. China Inf. Sci. 2022, 65, 160104.

26. Yang, S.; Guo, W.; Ren, Y. CrowdFormer: An overlap patching vision transformer for top-down crowd counting. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 1545–1551.

27. Deng, X.; Chen, S.; Chen, Y.; Xu, J.-F. Multi-level convolutional transformer with adaptive ranking for semi-supervised crowd counting. In Proceedings of the 4th International Conference on Algorithms, Computing and Artificial Intelligence, Sanya, China, 22–24 December 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 1–7.

28. Lin, H.; Ma, Z.; Ji, R.; Wang, Y.; Hong, X. Boosting crowd counting via multifaceted attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19628–19637.

29. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 213–229.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Houlsby, N.; et al. An image is worth 16×16 words: Transformers for image recognition at scale. In Proceedings of the 9th International Conference on Learning Representations, Virtual, 3–7 May 2021; pp. 1–22.

31. Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H.; et al. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 6881–6890.

32. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. In Proceedings of the 9th International Conference on Learning Representations, Virtual, 3–7 May 2021; pp. 23–39.

33. Zhang, J.; Li, C.; Yin, Y.; Zhang, J.; Grzegorzek, M. Applications of artificial neural networks in microorganism image analysis: A comprehensive review from conventional multilayer perceptron to popular convolutional neural network and potential visual transformer. Artif. Intell. Rev. 2023, 56, 1013–1070.

34. Tay, Y.; Dehghani, M.; Bahri, D.; Metzler, D. Efficient transformers: A survey. ACM Comput. Surv. 2022, 55, 1–28.

35. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A survey on vision transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 87–110.

36. Khan, S.; Naseer, M.; Hayat, M.; Zamir, S.W.; Khan, F.S.; Shah, M. Transformers in vision: A survey. ACM Comput. Surv. (CSUR) 2022, 54, 1–41.

37. Zuo, S.; Xiao, Y.; Chang, X.; Wang, X. Vision transformers for dense prediction: A survey. Knowl. Based Syst. 2022, 253, 109552.

38. Han, X.; Wang, Y.T.; Feng, J.L.; Deng, C.; Chen, Z.H.; Huang, Y.A.; Su, H.; Hu, L.; Hu, P.W. A survey of transformer-based multimodal pre-trained modals. Neurocomputing 2023, 515, 89–106.

39. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

40. Li, H.; Zhang, S.; Kong, W. RGB-D crowd counting with cross-modal cycle-attention fusion and fine-coarse supervision. IEEE Trans. Ind. Inform. 2022, 19, 306–316.

41. Gu, L.; Pang, C.; Zheng, Y.; Lyu, C.; Lyu, L. Context-aware pyramid attention network for crowd counting. Appl. Intell. 2022, 52, 6164–6180.

42. Wan, J.; Chan, A. Modeling noisy annotations for crowd counting. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 3386–3396.

43. Wan, J.; Liu, Z.; Chan, A.B. A generalized loss function for crowd counting and localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 1974–1983.

44. Wan, J.; Wang, Q.; Chan, A.B. Kernel-based density map generation for dense object counting. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1357–1370.

45. Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution matching for crowd counting. Adv. Neural Inf. Process. Syst. 2020, 33, 1595–1607.

46. Liu, H.; Zhao, Q.; Ma, Y.; Dai, F. Bipartite matching for crowd counting with point supervision. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, Montreal, QC, Canada, 19–27 August 2021; pp. 860–866.

47. Ma, Z.; Wei, X.; Hong, X.; Lin, H.; Qiu, Y.; Gong, Y. Learning to count via unbalanced optimal transport. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 2319–2327.

48. Liu, W.; Salzmann, M.; Fua, P. Context-aware crowd counting. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5099–5108.

49. Song, Q.; Wang, C.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Wu, J.; Ma, J. To choose or to fuse? Scale selection for crowd counting. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021; pp. 2576–2583.

| Dataset | Resolution | Images | Annotations | Max count | Mean count | Min count |
|---|---|---|---|---|---|---|
| ShanghaiTech Part_A | varies | 482 | 241,677 | 3139 | 501 | 33 |
| ShanghaiTech Part_B | 1024 × 768 | 716 | 88,488 | 578 | 124 | 9 |
| UCF-QNRF | varies | 1535 | 1,251,642 | 12,865 | 815 | 49 |
| NWPU-Crowd | varies | 5109 | 2,133,375 | 20,033 | 418 | 0 |
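
The mean column of the dataset table can be cross-checked with a short calculation. The sketch below assumes that the third column is the number of images and the fourth the total number of head annotations, so that the mean count per image is their ratio; the variable names are illustrative only.

```python
# (images, total annotations, mean count reported in the table)
datasets = {
    "ShanghaiTech Part_A": (482, 241_677, 501),
    "ShanghaiTech Part_B": (716, 88_488, 124),
    "UCF-QNRF": (1535, 1_251_642, 815),
    "NWPU-Crowd": (5109, 2_133_375, 418),
}

computed_means = {}
for name, (images, annotations, reported_mean) in datasets.items():
    # Average number of annotated people per image.
    computed_means[name] = annotations / images
    print(f"{name}: computed {computed_means[name]:.1f}, reported {reported_mean}")
```

Under this reading, each computed ratio rounds to the reported mean, which supports interpreting the columns as image and annotation totals.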

| Backbone | Part_A MAE | Part_A MSE | Part_B MAE | Part_B MSE | NWPU-Crowd MAE | NWPU-Crowd MSE | UCF-QNRF MAE | UCF-QNRF MSE |
|---|---|---|---|---|---|---|---|---|
| VGG16 | 59.3 | 94.8 | 7.5 | 11.9 | 75.8 | 334.6 | 81.7 | 141.0 |
| ResNet-50 | 58.6 | 92.9 | 7.2 | 11.4 | 74.4 | 327.3 | 80.9 | 139.8 |
| ResNet-101 | 57.8 | 91.3 | 6.9 | 10.8 | 73.9 | 323.5 | 80.8 | 137.5 |

| Method | Year | Part_A MAE | Part_A MSE | Part_B MAE | Part_B MSE | NWPU-Crowd MAE | NWPU-Crowd MSE | UCF-QNRF MAE | UCF-QNRF MSE |
|---|---|---|---|---|---|---|---|---|---|
| TransCrowd [25] | 2022 | 66.1 | 105.1 | 9.3 | 16.1 | 117.7 | 451.0 | 97.2 | 168.5 |
| NoiseCC [42] | 2020 | 61.9 | 99.6 | 7.4 | 11.3 | 96.9 | 534.2 | 85.8 | 150.6 |
| Gloss [43] | 2021 | 61.3 | 95.4 | 7.3 | 11.4 | 79.3 | 346.1 | 84.3 | 147.5 |
| KDMG [44] | 2020 | 63.8 | 99.2 | 7.8 | 12.7 | 100.5 | 415.5 | 99.5 | 173.0 |
| DM-count [45] | 2020 | 59.7 | 95.7 | 7.4 | 11.3 | 88.4 | 357.6 | 85.6 | 148.3 |
| BM-count [46] | 2021 | 57.3 | 90.7 | 7.4 | 11.8 | 83.4 | 358.4 | 81.2 | 138.6 |
| UOT [47] | 2021 | 58.1 | 95.9 | 6.5 | 10.2 | 87.8 | 387.5 | 83.3 | 142.3 |
| CA-Net [48] | 2019 | 62.3 | 100.0 | 7.8 | 12.2 | -- | -- | 107.0 | 183.0 |
| DKPNet [18] | 2021 | 55.6 | 91.0 | 6.6 | 10.9 | 74.5 | 327.4 | 81.4 | 147.2 |
| P2PNet [22] | 2021 | 52.7 | 85.1 | 6.2 | 9.9 | 77.4 | 362.0 | 85.3 | 154.5 |
| SASNet [49] | 2021 | 53.6 | 88.4 | 6.4 | 9.9 | -- | -- | 85.2 | 147.3 |
| Ours | -- | 57.8 | 91.3 | 6.9 | 10.8 | 73.9 | 323.5 | 80.8 | 137.5 |

| Module | ShanghaiTech Part_A MAE | ShanghaiTech Part_A MSE |
|---|---|---|
| FPM | 69.1 | 114.3 |
| FPM + SSAM | 61.5 | 98.4 |
| FPM + SSAM + SFA | 59.3 | 94.8 |
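
To make the ablation figures easier to compare, the relative error reduction of each configuration over the FPM-only baseline can be computed directly from the table. This is a minimal sketch: the helper `relative_reduction` is a hypothetical name, and labelling the third row as the configuration that adds the SFA is an assumption based on the module list in the contributions.

```python
def relative_reduction(baseline, value):
    """Percentage by which `value` is lower than `baseline`."""
    return (baseline - value) / baseline * 100.0


# (configuration, MAE, MSE) on ShanghaiTech Part_A, copied from the table.
ablation = [
    ("FPM", 69.1, 114.3),
    ("FPM + SSAM", 61.5, 98.4),
    ("third row (assumed to add the SFA)", 59.3, 94.8),
]

_, base_mae, base_mse = ablation[0]
reductions = []
for name, mae_value, mse_value in ablation[1:]:
    mae_gain = relative_reduction(base_mae, mae_value)
    mse_gain = relative_reduction(base_mse, mse_value)
    reductions.append((name, mae_gain, mse_gain))
    print(f"{name}: MAE reduced by {mae_gain:.1f}%, MSE reduced by {mse_gain:.1f}%")
```

Read this way, most of the gain over the baseline comes from the second row, with the third row contributing a smaller additional reduction.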

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sun, Y.; Li, M.; Guo, H.; Zhang, L. MSGSA: Multi-Scale Guided Self-Attention Network for Crowd Counting. Electronics 2023, 12, 2631. https://doi.org/10.3390/electronics12122631
