Enhanced Information Graph Recursive Network for Traffic Forecasting

Abstract

1. Introduction

- (1)

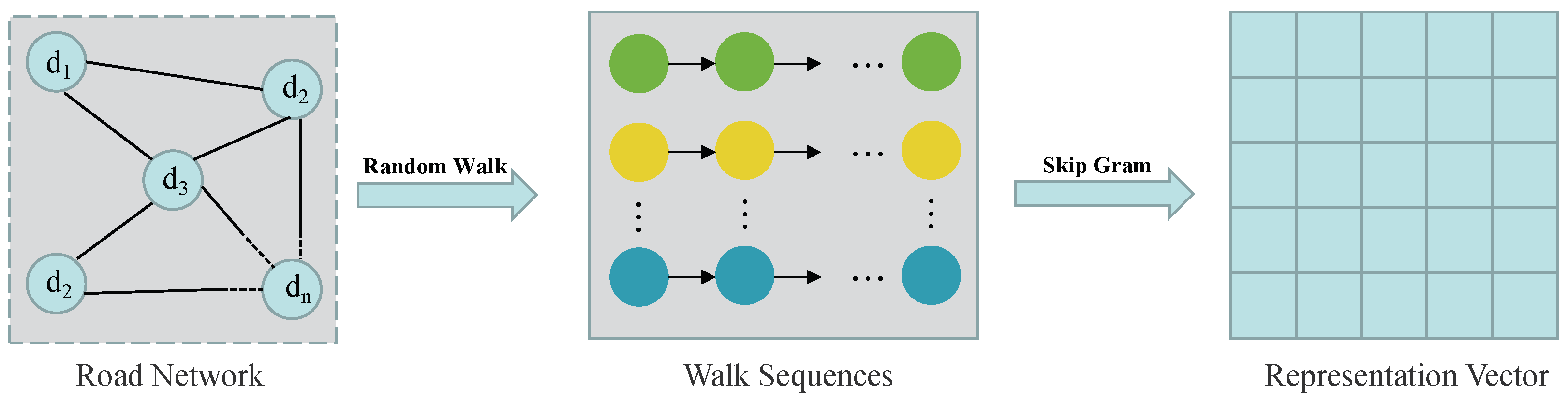

- Given that a traditional GCN only relies on a given topological graph to obtain the spatial correlation of data, a graph embedding-based adaptive matrix is designed to capture the hidden spatial dependence and learn the unique parameters of the GCN in each node.

- (2)

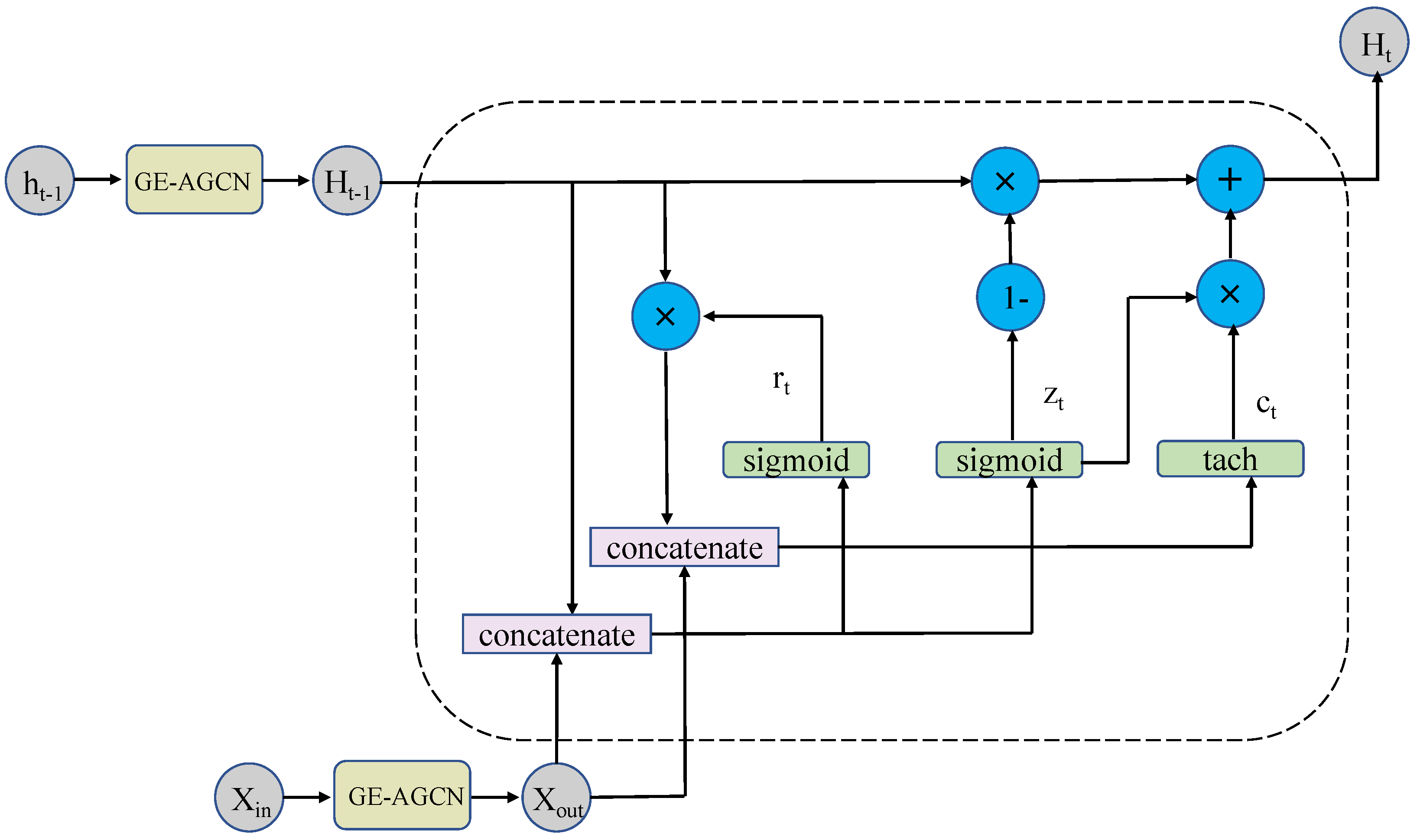

- In order to incorporate spatial relations while processing time sequences, we make in the GRU pass through the spatial model before entering the GRU so that learns the spatial correlation.

- (3)

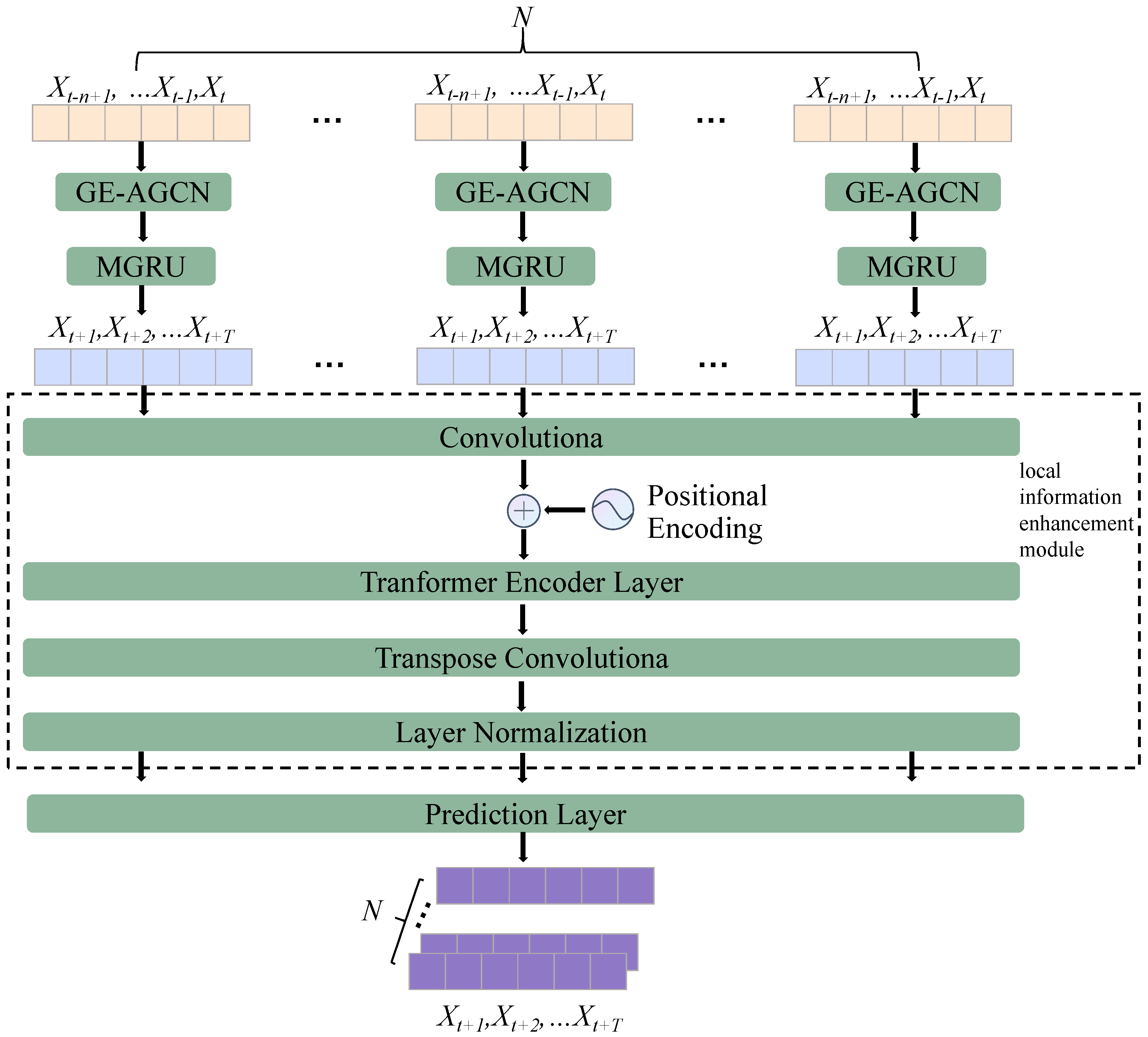

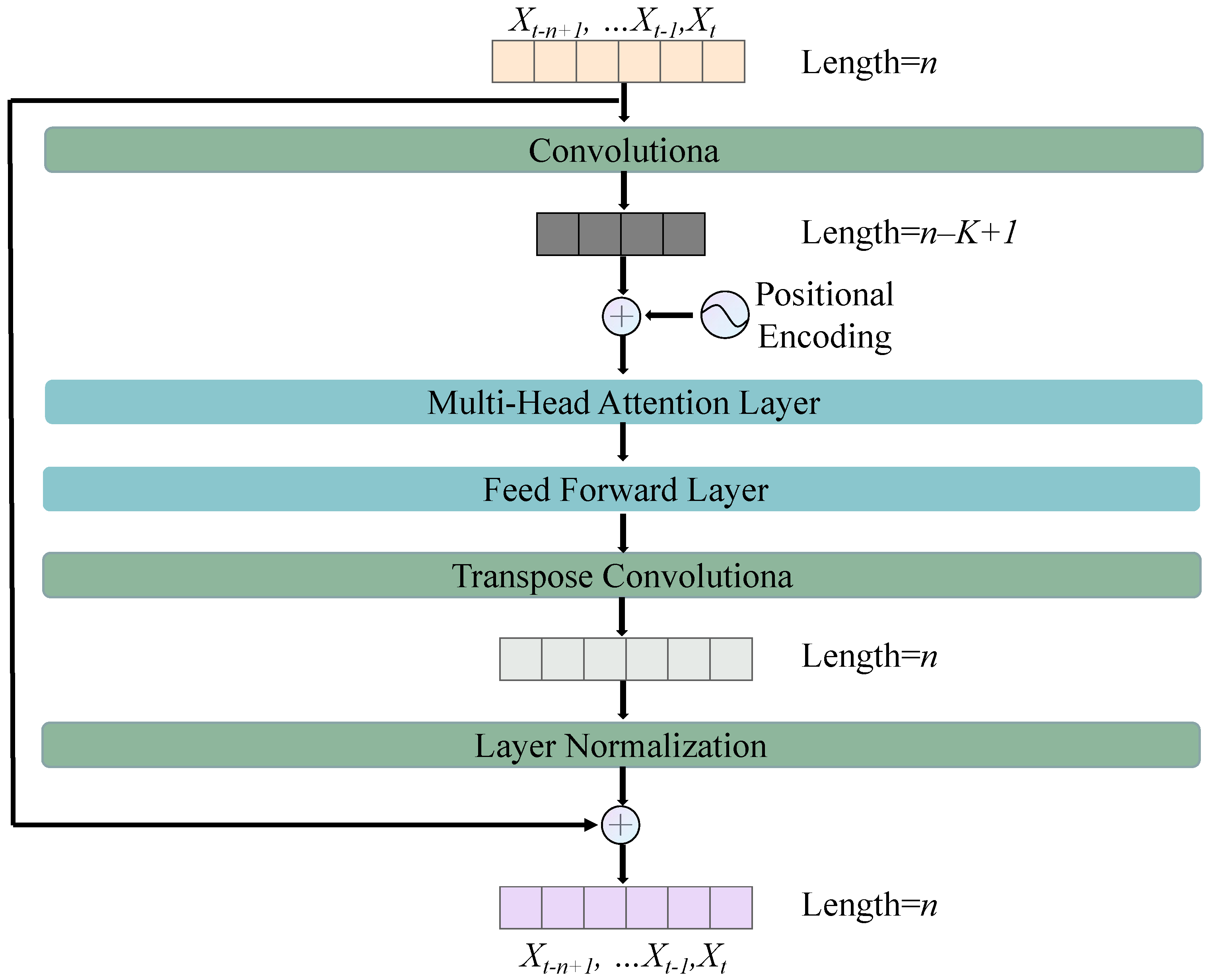

- The local information enhancement module is composed of a CNN and an attention mechanism and is designed to simultaneously capture the global and local correlations of data.
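The section does not spell out how the CNN and the attention mechanism are combined. One way to do it, borrowed here from the convolutional self-attention of Li et al. [24] and assumed rather than taken from this paper, is to compute queries and keys with a causal 1-D convolution so that each time step's attention pattern reflects its local neighbourhood (local correlation), while the attention itself still spans the whole sequence (global correlation). The minimal NumPy sketch below works on one node's sequence; the names `causal_conv1d` and `conv_self_attention` and the kernel width `k` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def causal_conv1d(X, W):
    """Causal 1-D convolution over time (illustrative).

    X: (T, C) sequence for one node; W: (k, C, D) kernel.
    Output row t depends only on X[t-k+1 .. t] (zero left padding).
    """
    k = W.shape[0]
    T, C = X.shape
    Xp = np.vstack([np.zeros((k - 1, C)), X])
    return np.stack([np.einsum("kc,kcd->d", Xp[t:t + k], W) for t in range(T)])

def conv_self_attention(X, Wq, Wk, Wv):
    """Hypothetical local-enhancement block: convolutional Q/K, global attention."""
    Q = causal_conv1d(X, Wq)  # each query summarises its local window
    K = causal_conv1d(X, Wk)  # each key summarises its local window
    V = X @ Wv                # values: plain linear projection
    scores = Q @ K.T / np.sqrt(Q.shape[1])  # (T, T) scaled dot products
    return softmax(scores, axis=1) @ V      # every step attends to every step

rng = np.random.default_rng(0)
T, C, D, k = 12, 4, 4, 3
X = rng.normal(size=(T, C))
Wq, Wk = rng.normal(size=(k, C, D)), rng.normal(size=(k, C, D))
Wv = rng.normal(size=(C, D))
out = conv_self_attention(X, Wq, Wk, Wv)
print(out.shape)  # (12, 4)
```

With `k = 1` the convolution reduces to an ordinary linear projection and the block becomes standard scaled dot-product attention, so the kernel width is what injects local context.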

2. Related Works

3. Methodology

3.1. Problem Definition

3.2. Overview

3.3. Methodology

3.3.1. Modeling the Spatial Correlation
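The first contribution listed in the introduction, an adaptive matrix built from graph embeddings together with node-specific GCN parameters, can be sketched in the style of AGCRN [16]. This minimal NumPy sketch assumes AGCRN's formulation: the adjacency is the row-wise softmax of ReLU(E Eᵀ), and each node's weights are drawn from a shared weight pool through its embedding row. `E` is simply a given embedding matrix here; whether it is learned end to end or initialised from a graph-embedding method such as node2vec [26] is not specified in this section. The names `node_adaptive_gcn`, `W_pool` and `b_pool` are hypothetical, and this is not the authors' implementation.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along `axis`
    z = x - x.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def adaptive_adjacency(E):
    """Adjacency learned from node embeddings E (N, d): softmax(ReLU(E E^T)) per row."""
    return softmax(np.maximum(E @ E.T, 0.0), axis=1)

def node_adaptive_gcn(X, E, W_pool, b_pool):
    """Graph convolution with node-specific parameters (AGCRN-style sketch).

    X:      (N, C_in) node features at one time step
    E:      (N, d) node embeddings
    W_pool: (d, K, C_in, C_out) shared weight pool, K = 2 supports [I, A]
    b_pool: (d, C_out) shared bias pool
    Returns (N, C_out).
    """
    N = X.shape[0]
    A = adaptive_adjacency(E)
    supports = np.stack([np.eye(N), A])                # (K, N, N)
    X_g = np.einsum("knm,mc->nkc", supports, X)        # (N, K, C_in) aggregated features
    W = np.einsum("nd,dkio->nkio", E, W_pool)          # (N, K, C_in, C_out) per-node weights
    b = E @ b_pool                                     # (N, C_out) per-node bias
    return np.einsum("nki,nkio->no", X_g, W) + b

rng = np.random.default_rng(0)
N, d, c_in, c_out = 4, 3, 2, 5
E = rng.normal(size=(N, d))
X = rng.normal(size=(N, c_in))
W_pool = rng.normal(size=(d, 2, c_in, c_out))
b_pool = rng.normal(size=(d, c_out))
Y = node_adaptive_gcn(X, E, W_pool, b_pool)
print(Y.shape)  # (4, 5)
```

Generating per-node weights from a pool keeps the parameter count at d·K·C_in·C_out instead of N·K·C_in·C_out, which is the usual motivation for this factorisation when N is large.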

3.3.2. Modeling the Temporal Correlation
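The second contribution feeds spatial information into the recurrence by passing the GRU's inputs through the spatial model. A common way to realise this, used for example in DCRNN [14] and AGCRN [16], is to replace the GRU's dense transforms with graph convolutions over the concatenated input and hidden state. The minimal NumPy sketch below assumes that design; for brevity it uses a fixed row-normalised adjacency `A` and shared weights rather than the node-adaptive ones, and the names `graph_conv`, `GraphGRUCell` and `run_sequence` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def graph_conv(A, X, W, b):
    # First-order graph convolution over supports [I, A]: [X, A X] W + b
    return np.concatenate([X, A @ X], axis=1) @ W + b

class GraphGRUCell:
    """GRU cell whose gate transforms are graph convolutions (illustrative)."""

    def __init__(self, c_in, c_hid, rng):
        c_cat = c_in + c_hid  # features of concat(input, hidden)
        self.c_hid = c_hid
        # graph_conv doubles the feature width ([X, AX]), hence 2 * c_cat rows
        self.W_zr = rng.normal(0.0, 0.1, (2 * c_cat, 2 * c_hid))
        self.b_zr = np.zeros(2 * c_hid)
        self.W_h = rng.normal(0.0, 0.1, (2 * c_cat, c_hid))
        self.b_h = np.zeros(c_hid)

    def step(self, A, x_t, h_prev):
        xh = np.concatenate([x_t, h_prev], axis=1)
        zr = sigmoid(graph_conv(A, xh, self.W_zr, self.b_zr))
        z, r = zr[:, :self.c_hid], zr[:, self.c_hid:]  # update and reset gates
        cand_in = np.concatenate([x_t, r * h_prev], axis=1)
        cand = np.tanh(graph_conv(A, cand_in, self.W_h, self.b_h))
        return z * h_prev + (1.0 - z) * cand  # z keeps part of the old state

def run_sequence(cell, A, X_seq):
    """X_seq: (T, N, c_in) -> final hidden state (N, c_hid)."""
    h = np.zeros((X_seq.shape[1], cell.c_hid))
    for x_t in X_seq:
        h = cell.step(A, x_t, h)
    return h

rng = np.random.default_rng(0)
N, T, c_in, c_hid = 5, 12, 2, 8
A = rng.random((N, N))
A = A / A.sum(axis=1, keepdims=True)  # row-normalised adjacency
cell = GraphGRUCell(c_in, c_hid, rng)
h = run_sequence(cell, A, rng.normal(size=(T, N, c_in)))
print(h.shape)  # (5, 8)
```

Because every gate sees `A @ [x, h]`, each node's state update mixes in its neighbours' inputs and states at every step, which is how the recurrence "learns the spatial correlation".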

3.3.3. Global and Local Correlations

4. Experiments

4.1. Data Description

4.2. Evaluation Metrics
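The result tables report the mean absolute error, $\mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\lvert y_i-\hat{y}_i\rvert$, and the root mean squared error, $\mathrm{RMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}(y_i-\hat{y}_i)^2}$, where $y_i$ is an observed value and $\hat{y}_i$ the corresponding prediction. A plain-Python sketch of these standard definitions follows; the sample values are made up for illustration, and any masking of missing sensor readings the authors may apply is not shown.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

y_true = [60.0, 55.0, 40.0]  # hypothetical observed speeds
y_pred = [58.0, 57.0, 44.0]  # hypothetical predictions
print(round(mae(y_true, y_pred), 4), round(rmse(y_true, y_pred), 4))  # 2.6667 2.8284
```

RMSE is never smaller than MAE and weights large errors more heavily, so reporting both shows whether a model's gains come from fewer large misses or a uniform shift.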

4.3. Hyperparameters

Baseline Methods

- (1) History Average (HA) [31]: This model uses the average traffic of the historical period as the forecast.

- (2) ARIMA [3]: This model fits a parametric model to the observed time series to predict future traffic data.

- (3) Fully connected LSTM (FC-LSTM) [32]: An RNN with fully connected LSTM hidden units.

- (4) STGCN [33]: The spatio-temporal graph convolutional network combines graph convolution with one-dimensional temporal convolution units.

- (5) DCRNN [14]: This model combines diffusion graph convolution with gated recurrent units in an encoder–decoder architecture.

- (6) Graph WaveNet [15]: This model combines a GCN with an adaptive adjacency matrix and dilated causal convolution.

- (7) STSGCN [34]: The STSGCN captures localized spatio-temporal correlations independently by using localized spatial-temporal synchronous subgraph modules.

- (8) STTN [35]: The STTN dynamically captures spatio-temporal dependencies using a Transformer model.
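The HA baseline is described only as averaging the historical period. One common reading, assumed here rather than taken from the paper, is a seasonal average: each future step is predicted as the mean of all past observations at the same position within a period such as one day (with 5-minute data, a day spans 288 steps). The function name `historical_average` and its parameters are hypothetical.

```python
def historical_average(series, period, horizon):
    """Seasonal historical-average forecast (illustrative).

    series:  past observations for one sensor, oldest first
    period:  steps per season, e.g. 288 five-minute steps per day
    horizon: number of future steps to forecast
    """
    n = len(series)
    if n < period:
        raise ValueError("need at least one full period of history")
    forecasts = []
    for h in range(horizon):
        phase = (n + h) % period      # position of the future step within the period
        past = series[phase::period]  # all past values at that position
        forecasts.append(sum(past) / len(past))
    return forecasts

# Two "days" of four slots each (hypothetical values)
print(historical_average([10, 20, 30, 40, 12, 22, 32, 42], period=4, horizon=2))  # [11.0, 21.0]
```

HA has no trainable parameters and ignores both the spatial graph and the most recent readings, which is why it serves as the floor in the comparison.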

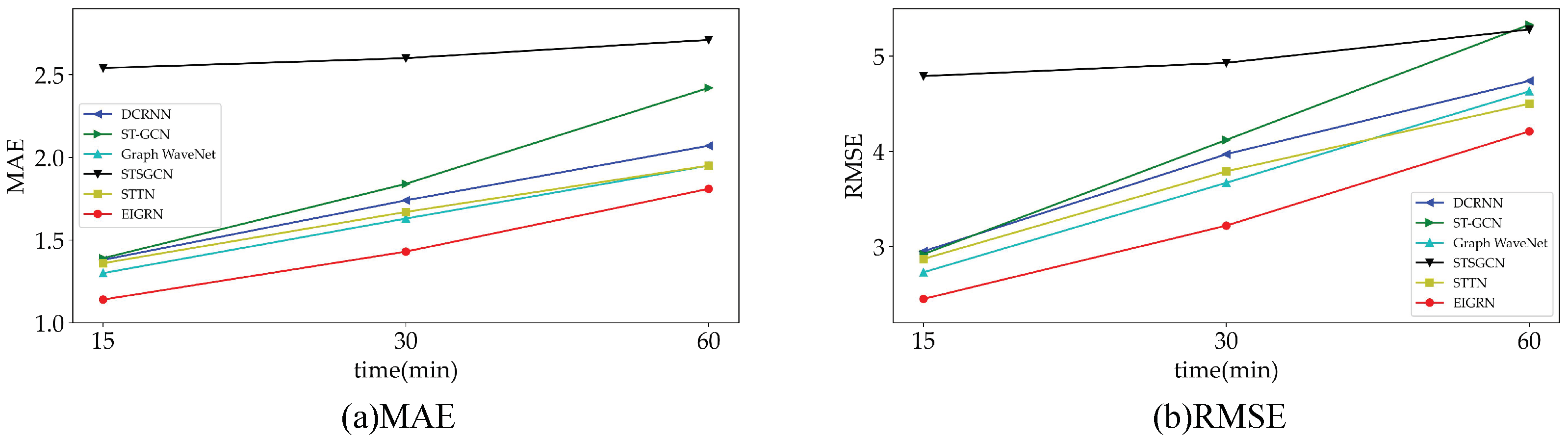

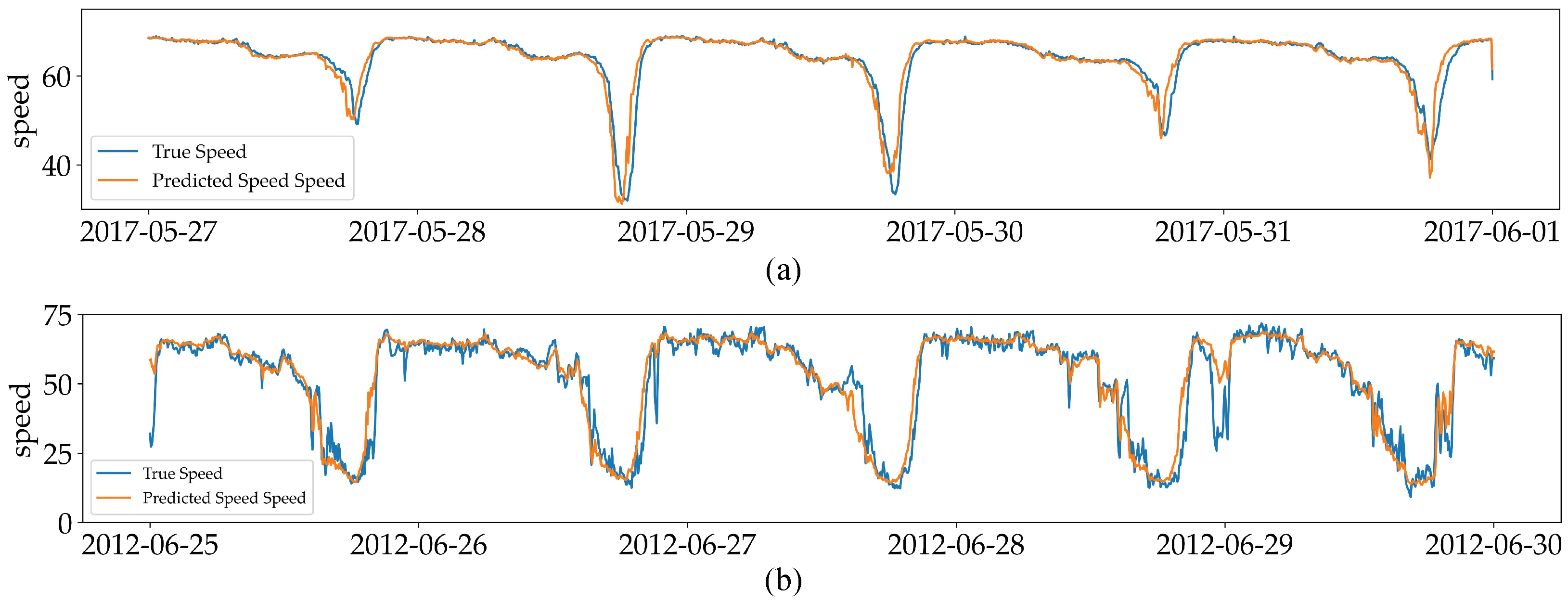

4.4. Experimental Results

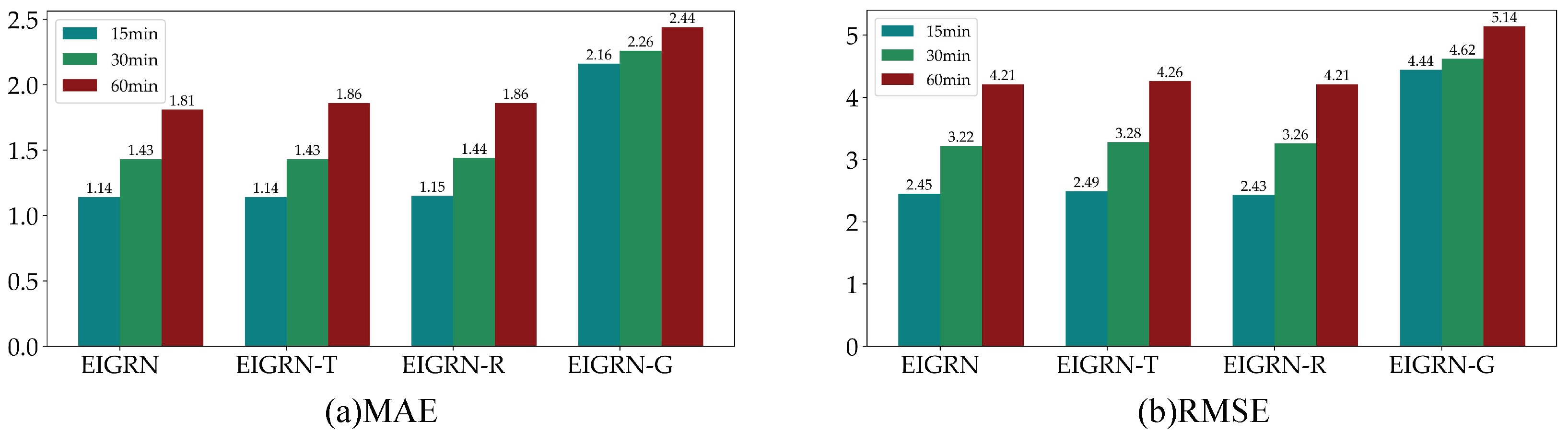

4.5. Ablation Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jian, Y.; Bingquan, F. Synthesis of short-term traffic flow forecasting research progress. Urban Transp. China 2012, 10, 73–79.

2. Ahmed, M.S.; Cook, A.R. Analysis of freeway traffic time-series data by using Box-Jenkins techniques. Transp. Res. Rec. 1979, 722, 1–9.

3. Hamed, M.M.; Al-Masaeid, H.R.; Said, Z.M.B. Short-term prediction of traffic volume in urban arterials. J. Transp. Eng. 1995, 121, 249–254.

4. Okutani, I.; Stephanedes, Y.J. Dynamic prediction of traffic volume through Kalman filtering theory. Transp. Res. Part B Methodol. 1984, 18, 1–11.

5. Wu, C.H.; Ho, J.M.; Lee, D.T. Travel-time prediction with support vector regression. IEEE Trans. Intell. Transp. Syst. 2004, 5, 276–281.

6. Yao, Z.s.; Shao, C.f.; Gao, Y.l. Research on methods of short-term traffic forecasting based on support vector regression. J. Beijing Jiaotong Univ. 2006, 30, 19–22.

7. Sun, S.; Zhang, C.; Yu, G. A Bayesian network approach to traffic flow forecasting. IEEE Trans. Intell. Transp. Syst. 2006, 7, 124–132.

8. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28, 802–810.

9. Zhang, J.; Zheng, Y.; Qi, D. Deep spatio-temporal residual networks for citywide crowd flows prediction. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

10. Zhang, H.; Chen, L.; Cao, J.; Zhang, X.; Kan, S. A combined traffic flow forecasting model based on graph convolutional network and attention mechanism. Int. J. Mod. Phys. C 2021, 32, 2150158.

11. Ye, J.; Zhao, J.; Ye, K.; Xu, C. How to build a graph-based deep learning architecture in traffic domain: A survey. IEEE Trans. Intell. Transp. Syst. 2020, 23, 3904–3924.

12. Yin, X.; Wu, G.; Wei, J.; Shen, Y.; Qi, H.; Yin, B. A comprehensive survey on traffic prediction. arXiv 2020, arXiv:2004.08555.

13. Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A temporal graph convolutional network for traffic prediction. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3848–3858.

14. Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. arXiv 2017, arXiv:1707.01926.

15. Wu, Z.; Pan, S.; Long, G.; Jiang, J.; Zhang, C. Graph WaveNet for deep spatial-temporal graph modeling. arXiv 2019, arXiv:1906.00121.

16. Bai, L.; Yao, L.; Li, C.; Wang, X.; Wang, C. Adaptive graph convolutional recurrent network for traffic forecasting. Adv. Neural Inf. Process. Syst. 2020, 33, 17804–17815.

17. Li, M.; Zhu, Z. Spatial-temporal fusion graph neural networks for traffic flow forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 2–9 February 2021; Volume 35, pp. 4189–4196.

18. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

19. Zhang, S.; Guo, Y.; Zhao, P.; Zheng, C.; Chen, X. A graph-based temporal attention framework for multi-sensor traffic flow forecasting. IEEE Trans. Intell. Transp. Syst. 2021, 23, 7743–7758.

20. An, J.; Guo, L.; Liu, W.; Fu, Z.; Ren, P.; Liu, X.; Li, T. IGAGCN: Information geometry and attention-based spatiotemporal graph convolutional networks for traffic flow prediction. Neural Netw. 2021, 143, 355–367.

21. Wang, X.; Ma, Y.; Wang, Y.; Jin, W.; Wang, X.; Tang, J.; Jia, C.; Yu, J. Traffic flow prediction via spatial temporal graph neural network. In Proceedings of the Web Conference 2020, Taipei, Taiwan, 20–24 April 2020; pp. 1082–1092.

22. Liao, L.; Hu, Z.; Zheng, Y.; Bi, S.; Zou, F.; Qiu, H.; Zhang, M. An improved dynamic Chebyshev graph convolution network for traffic flow prediction with spatial-temporal attention. Appl. Intell. 2022, 54, 16104–16116.

23. Lan, S.; Ma, Y.; Huang, W.; Wang, W.; Yang, H.; Li, P. DSTAGNN: Dynamic spatial-temporal aware graph neural network for traffic flow forecasting. In Proceedings of the International Conference on Machine Learning. PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 11906–11917.

24. Li, S.; Jin, X.; Xuan, Y.; Zhou, X.; Chen, W.; Wang, Y.X.; Yan, X. Enhancing the locality and breaking the memory bottleneck of transformer on time series forecasting. Adv. Neural Inf. Process. Syst. 2019, 32, 5243–5253.

25. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

26. Grover, A.; Leskovec, J. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 855–864.

27. Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inf. Process. Syst. 2013, 26, 3111–3119.

28. Lai, S.; Liu, K.; He, S.; Zhao, J. How to generate a good word embedding. IEEE Intell. Syst. 2016, 31, 5–14.

29. Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

30. Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

31. Liu, J.; Wei, G. A Summary of Traffic Flow Forecasting Methods. J. Highw. Transp. Res. Dev. 2004, 21, 82–85.

32. Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. Adv. Neural Inf. Process. Syst. 2014, 27, 3104–3112.

33. Yu, B.; Yin, H.; Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. arXiv 2017, arXiv:1709.04875.

34. Song, C.; Lin, Y.; Guo, S.; Wan, H. Spatial-temporal synchronous graph convolutional networks: A new framework for spatial-temporal network data forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–8 February 2020; Volume 34, pp. 914–921.

35. Xu, M.; Dai, W.; Liu, C.; Gao, X.; Lin, W.; Qi, G.J.; Xiong, H. Spatial-temporal transformer networks for traffic flow forecasting. arXiv 2020, arXiv:2001.02908.

| Model | Advantage | Disadvantage |
|---|---|---|
| T-GCN | Better spatio-temporal prediction ability. | Spatial prediction using only the original topology is insufficient. |
| DCRNN | Uses diffusion convolution operations to capture spatial dependencies. | The correlation of the data is ignored. |
| Graph WaveNet | Uses an adaptive adjacency matrix to learn hidden spatial correlation. | All nodes share the same parameters. |
| AGCRN | Proposes two adaptive modules that enhance graph convolution to learn the hidden relationships between different traffic sequences. | The correlation of the data is ignored. |
| STGNN | Obtains hidden spatial information through the relative position representation of the road and captures the global correlation of the data with an attention mechanism. | The local information of the data is ignored. |

| Model | PEMS-BAY MAE (15/30/60 min) | PEMS-BAY RMSE (15/30/60 min) | PeMSD7(M) MAE (15/30/60 min) | PeMSD7(M) RMSE (15/30/60 min) |
|---|---|---|---|---|
| HA | 2.88 | 5.59 | 4.01 | 7.20 |
| ARIMA | 1.62/2.33/3.38 | 3.30/4.76/6.50 | 5.55/5.86/6.27 | 9.00/9.13/9.38 |
| FC-LSTM | 2.05/2.20/2.37 | 4.19/4.55/4.96 | 3.57/3.92/4.16 | 6.20/7.03/7.51 |
| DCRNN | 1.38/1.74/2.07 | 2.95/3.97/4.74 | 2.25/2.98/3.83 | 4.04/5.58/7.19 |
| STGCN | 1.39/1.84/2.42 | 2.92/4.12/5.33 | 2.24/3.02/4.01 | 4.07/5.70/7.55 |
| Graph WaveNet | 1.30/1.63/1.95 | 2.73/3.67/4.63 | 2.14/2.80/3.19 | 4.01/5.48/6.25 |
| STSGCN | 2.54/2.60/2.71 | 4.79/4.93/5.28 | 1.99/2.43/3.04 | 3.59/4.63/6.01 |
| STTN | 1.36/1.67/1.95 | 2.87/3.79/4.50 | 2.14/2.70/* | 4.04/5.37/* |
| EIGRN | 1.14/1.43/1.81 | 2.45/3.22/4.21 | 1.75/2.26/2.91 | 3.30/4.38/5.75 |
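To make the margins in the table above concrete, the short script below computes, for each horizon, the relative MAE reduction of EIGRN against the best competing model. The values are transcribed from the table; HA is left out because the table gives it a single value per metric, and the STTN "*" entry is treated as missing. The function name `relative_reduction` is hypothetical.

```python
# MAE at 15/30/60 min transcribed from the results table; None marks "*" (not reported).
MAE = {
    "PEMS-BAY": {
        "ARIMA": [1.62, 2.33, 3.38],
        "FC-LSTM": [2.05, 2.20, 2.37],
        "DCRNN": [1.38, 1.74, 2.07],
        "STGCN": [1.39, 1.84, 2.42],
        "Graph WaveNet": [1.30, 1.63, 1.95],
        "STSGCN": [2.54, 2.60, 2.71],
        "STTN": [1.36, 1.67, 1.95],
        "EIGRN": [1.14, 1.43, 1.81],
    },
    "PeMSD7(M)": {
        "ARIMA": [5.55, 5.86, 6.27],
        "FC-LSTM": [3.57, 3.92, 4.16],
        "DCRNN": [2.25, 2.98, 3.83],
        "STGCN": [2.24, 3.02, 4.01],
        "Graph WaveNet": [2.14, 2.80, 3.19],
        "STSGCN": [1.99, 2.43, 3.04],
        "STTN": [2.14, 2.70, None],
        "EIGRN": [1.75, 2.26, 2.91],
    },
}

def relative_reduction(table, model="EIGRN"):
    """Per horizon: (best other model, % MAE reduction of `model` relative to it)."""
    result = []
    for h, ours in enumerate(table[model]):
        candidates = [(name, vals[h]) for name, vals in table.items()
                      if name != model and vals[h] is not None]
        best_name, best = min(candidates, key=lambda p: p[1])  # first listed wins ties
        result.append((best_name, round(100 * (best - ours) / best, 1)))
    return result

for dataset, table in MAE.items():
    print(dataset, relative_reduction(table))
# PEMS-BAY [('Graph WaveNet', 12.3), ('Graph WaveNet', 12.3), ('Graph WaveNet', 7.2)]
# PeMSD7(M) [('STSGCN', 12.1), ('STSGCN', 7.0), ('STSGCN', 4.3)]
```

At 60 min on PEMS-BAY, Graph WaveNet and STTN tie at 1.95, so the reported 7.2% applies to both.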

| Dataset | Average training time per epoch (15 min) | Average training time per epoch (30 min) | Average training time per epoch (60 min) |
|---|---|---|---|
| PEMS-BAY | 238 | 298 | 121 |
| PeMSD7(M) | 129 | 98 | 54 |

| Model | PEMS-BAY MAE (15/30/60 min) | PEMS-BAY RMSE (15/30/60 min) | PeMSD7(M) MAE (15/30/60 min) | PeMSD7(M) RMSE (15/30/60 min) |
|---|---|---|---|---|
| EIGRN | 1.14/1.43/1.81 | 2.45/3.22/4.21 | 1.75/2.26/2.91 | 3.30/4.38/5.75 |
| EIGRN-T | 1.14/1.43/1.86 | 2.49/3.28/4.26 | 1.76/2.29/2.98 | 3.31/4.45/5.84 |
| EIGRN-R | 1.15/1.44/1.86 | 2.43/3.26/4.21 | 1.76/2.27/2.96 | 3.33/4.44/5.88 |
| EIGRN-G | 2.16/2.26/2.44 | 4.44/4.62/5.14 | 3.81/3.83/4.11 | 7.47/7.50/7.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ma, C.; Sun, K.; Chang, L.; Qu, Z. Enhanced Information Graph Recursive Network for Traffic Forecasting. Electronics 2023, 12, 2519. https://doi.org/10.3390/electronics12112519


Chicago/Turabian Style: Ma, Cheng, Kai Sun, Lei Chang, and Zhijian Qu. 2023. "Enhanced Information Graph Recursive Network for Traffic Forecasting." Electronics 12, no. 11: 2519. https://doi.org/10.3390/electronics12112519
