Performance Improvement of Speech Emotion Recognition Systems by Combining 1D CNN and LSTM with Data Augmentation

Abstract

1. Introduction

2. Related Works

3. Database and Feature Extraction

3.1. Databases

3.2. Data Augmentation

3.3. Feature Value

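- Step 1: Pre-emphasis. Pre-emphasis enhances the high-frequency part of the speech signal, simulating the human ear's automatic gain for high-frequency waves. Equation (1) gives the calculation:

  $$y(n) = x(n) - \alpha\,x(n-1) \tag{1}$$

  in which $x(n)$ is the original signal, $y(n)$ is the resulting signal after pre-emphasis, and $\alpha$ is the pre-emphasis coefficient.

- Step 2: Framing. Framing lets the system analyze how the signal changes over time. In this study, each frame contains 256 points and adjacent frames overlap by 50%.

- Step 3: Windowing. To reduce the interference caused by discontinuities at the frame boundaries when the fast Fourier transform (FFT) is applied, every frame is multiplied by the Hamming window in Equation (2):

  $$w(n) = 0.54 - 0.46\cos\left(\frac{2\pi n}{N-1}\right), \quad 0 \le n \le N-1 \tag{2}$$

  in which $N$ is the frame length.

- Step 4: Triangular filtering. The spectrum obtained from the Fourier transform is passed through the bank of triangular filters in Equation (3), and the logarithmic power at the output of each filter is then computed:

  $$B_m(k) = \begin{cases} 0, & k < f(m-1) \\ \dfrac{k - f(m-1)}{f(m) - f(m-1)}, & f(m-1) \le k \le f(m) \\ \dfrac{f(m+1) - k}{f(m+1) - f(m)}, & f(m) < k \le f(m+1) \\ 0, & k > f(m+1) \end{cases} \qquad 1 \le m \le M \tag{3}$$

  in which $M$ is the number of filters and $f(m)$ is the center frequency of the $m$th filter.

- Step 5: Discrete cosine transform. The discrete cosine transform of the logarithmic power yields the MFCCs of the audio signal, based on Equations (4) and (5):

  $$Y(m) = \log\left(\sum_{k} |X(k)|^{2}\, B_m(k)\right) \tag{4}$$

  $$c(n) = \sum_{m=1}^{M} Y(m)\cos\left(\frac{\pi n\,(m - 1/2)}{M}\right) \tag{5}$$

  in which $X(k)$ is the spectrum of each speech frame and $c(n)$ is the resulting MFCCs.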
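
The five steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' implementation: only the frame length (256 points) and the 50% overlap come from the text, while the pre-emphasis coefficient (0.97), the 16 kHz sample rate, the 26 filters, and the 13 coefficients are assumed values, and all function names are hypothetical. A naive DFT stands in for the FFT so that the sketch needs only the standard library.

```python
import cmath
import math

def pre_emphasis(x, alpha=0.97):
    # Step 1: y[n] = x[n] - alpha * x[n-1]; alpha = 0.97 is an assumed value.
    return [x[0]] + [x[n] - alpha * x[n - 1] for n in range(1, len(x))]

def frame_signal(x, frame_len=256, overlap=0.5):
    # Step 2: 256-point frames with 50% overlap, as stated in the text.
    hop = int(frame_len * (1 - overlap))
    return [x[s:s + frame_len] for s in range(0, len(x) - frame_len + 1, hop)]

def hamming(N):
    # Step 3: Hamming window of length N.
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (N - 1)) for n in range(N)]

def power_spectrum(frame):
    # Naive O(N^2) DFT for bins 0..N/2; an FFT would be used in practice.
    N = len(frame)
    spec = []
    for k in range(N // 2 + 1):
        s = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / N) for n in range(N))
        spec.append(abs(s) ** 2 / N)
    return spec

def hz_to_mel(f):
    return 2595 * math.log10(1 + f / 700)

def mel_to_hz(m):
    return 700 * (10 ** (m / 2595) - 1)

def mel_filterbank(n_filters, n_fft, sample_rate):
    # Step 4: triangular filters with centers equally spaced on the mel scale.
    mel_max = hz_to_mel(sample_rate / 2)
    hz = [mel_to_hz(mel_max * i / (n_filters + 1)) for i in range(n_filters + 2)]
    bins = [int(math.floor((n_fft + 1) * f / sample_rate)) for f in hz]
    bank = []
    for m in range(1, n_filters + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        filt = [0.0] * (n_fft // 2 + 1)
        for k in range(left, center):      # rising edge
            filt[k] = (k - left) / (center - left)
        for k in range(center, right):     # falling edge, peak of 1 at center
            filt[k] = (right - k) / (right - center)
        bank.append(filt)
    return bank

def mfcc(signal, sample_rate=16000, n_filters=26, n_coeffs=13, frame_len=256):
    # sample_rate, n_filters and n_coeffs are assumptions, not values from the paper.
    window = hamming(frame_len)
    bank = mel_filterbank(n_filters, frame_len, sample_rate)
    features = []
    for frame in frame_signal(pre_emphasis(signal), frame_len):
        windowed = [s * w for s, w in zip(frame, window)]
        power = power_spectrum(windowed)
        # Log filter-bank energies, floored to avoid log(0).
        log_energy = [math.log(max(sum(p * h for p, h in zip(power, filt)), 1e-10))
                      for filt in bank]
        # Step 5: DCT-II of the log energies.
        features.append([
            sum(e * math.cos(math.pi * n * (m + 0.5) / n_filters)
                for m, e in enumerate(log_energy))
            for n in range(n_coeffs)
        ])
    return features

# Usage on a synthetic 440 Hz tone (1024 samples at the assumed 16 kHz rate).
tone = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(1024)]
features = mfcc(tone)
print(len(features), len(features[0]))  # 7 frames, 13 coefficients each
```

With 1024 samples, a 256-point frame, and a hop of 128 points, the signal yields 7 frames. In practice a numerical library would replace the hand-written DFT and filter bank.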

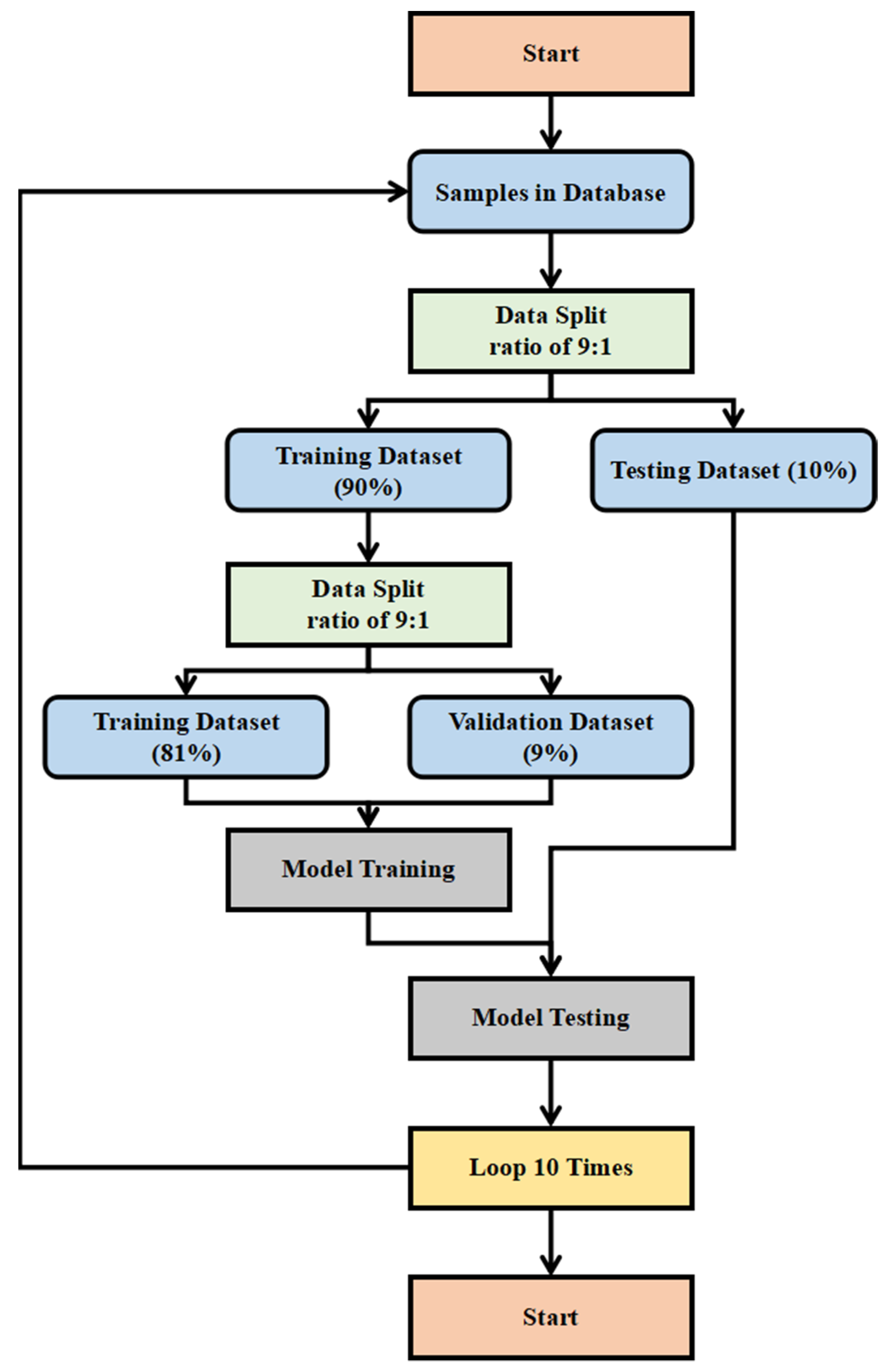

4. Methods and Experiments

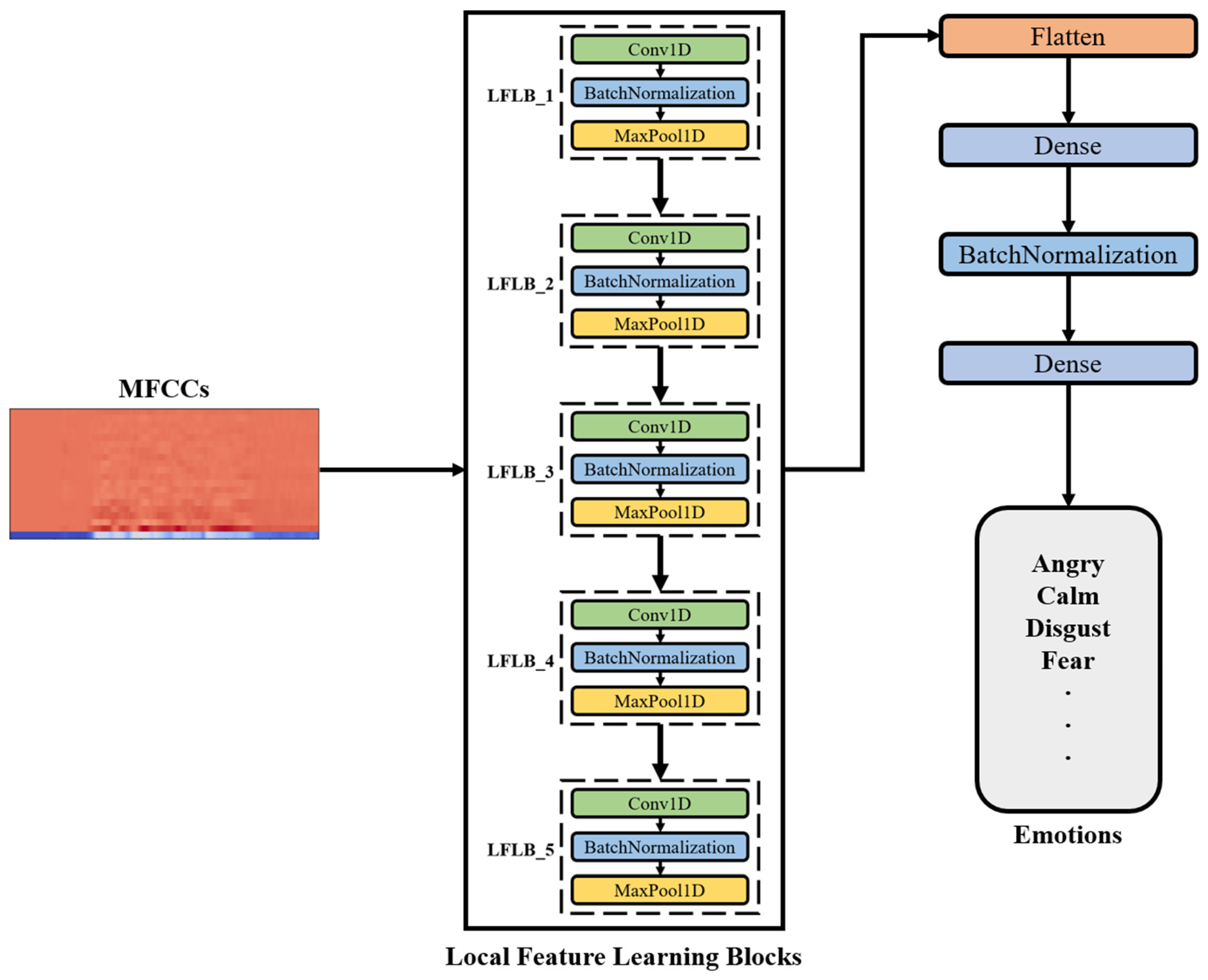

4.1. CNN-DNN Model

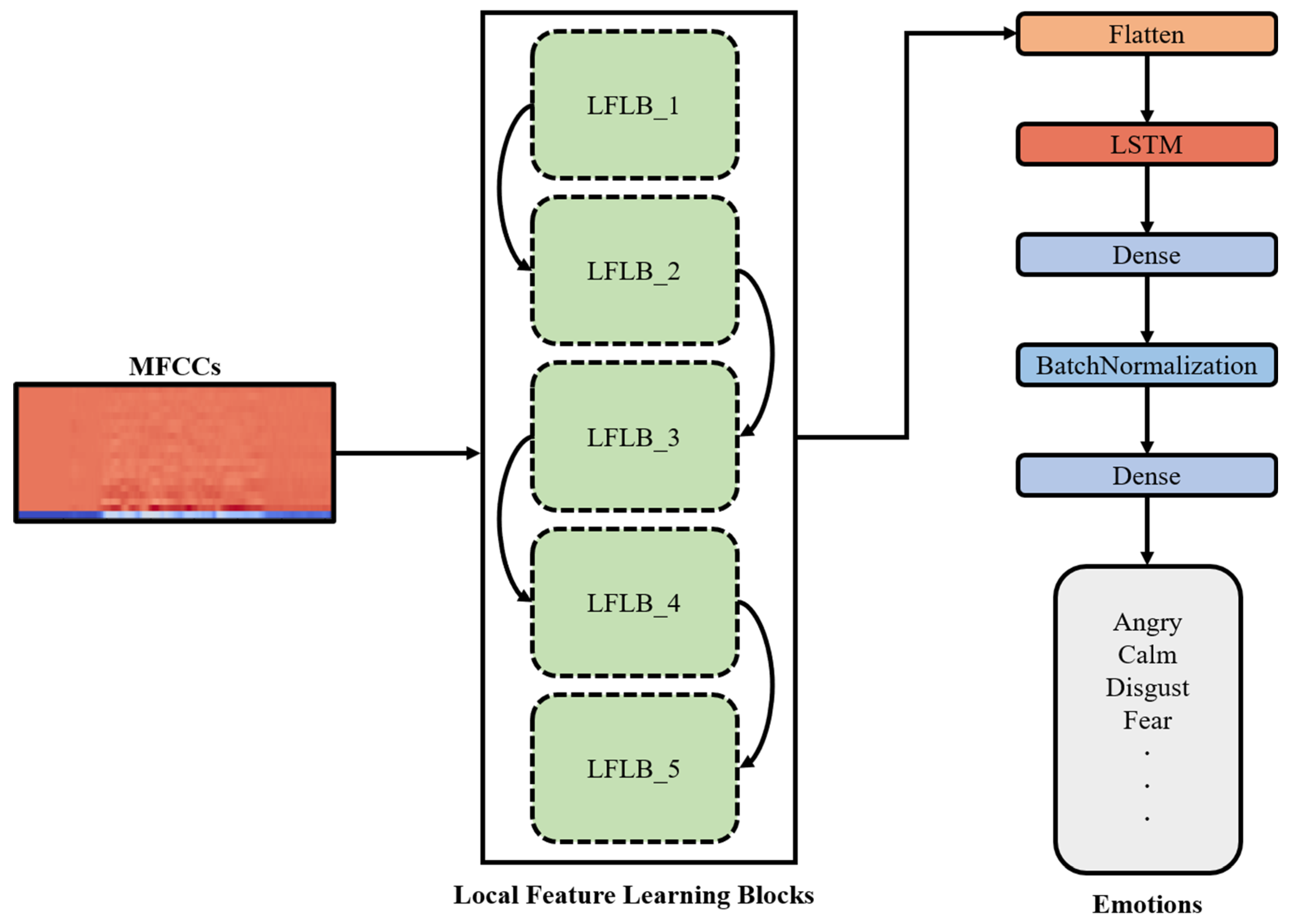

4.2. CLDNN Model

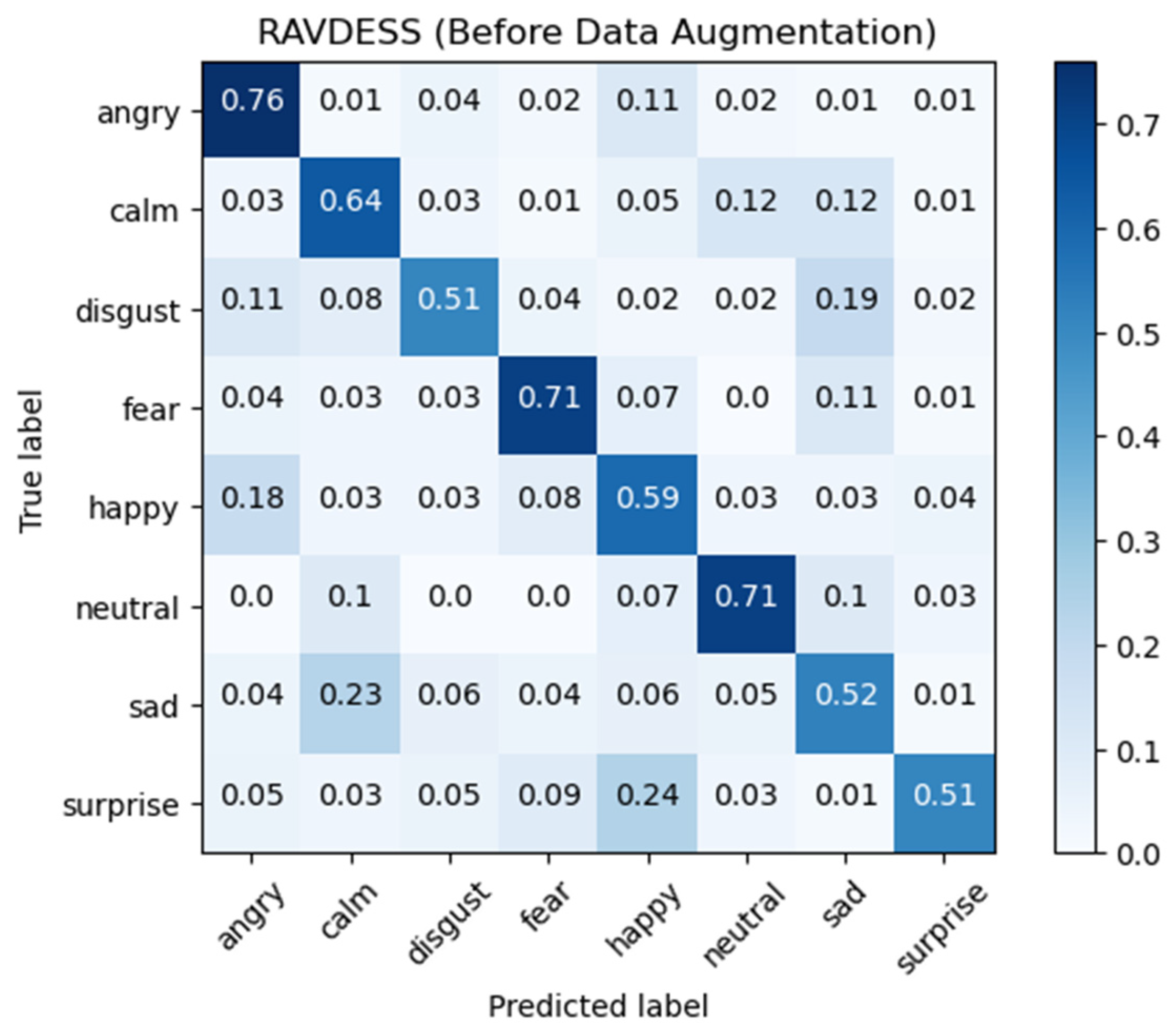

5. Results and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Roccetti, M.; Ghini, V.; Pau, G.; Salomoni, P.; Bonfigli, M.E. Design and experimental evaluation of an adaptive playout delay control mechanism for packetized audio for use over the internet. Multimed. Tools Appl. 2001, 14, 23–53.
2. Weng, Z.; Qin, Z.; Tao, X.; Pan, C.; Liu, G.; Li, G.Y. Deep Learning Enabled Semantic Communications with Speech Recognition and Synthesis. arXiv 2022, arXiv:2205.04603.
3. Chung, J.S.; Nagrani, A.; Zisserman, A. VoxCeleb2: Deep Speaker Recognition. arXiv 2018, arXiv:1806.05622.
4. Valk, J.; Alumae, T. VoxLingua107: A Dataset for Spoken Language Recognition. In Proceedings of the IEEE Spoken Language Technology Workshop, Shenzhen, China, 19–22 January 2021.
5. Sajjad, M.; Kwon, S. Clustering Based Speech Emotion Recognition by Incorporating Learned Features and Deep BiLSTM. IEEE Access 2020, 8, 79861–79875.
6. Kwon, S. A CNN-Assisted Enhanced Audio Signal Processing for Speech Emotion Recognition. Sensors 2020, 20, 183.
7. Bhavan, A.; Chauhan, P.; Shah, R.R. Bagged Support Vector Machines for Emotion Recognition from Speech. Knowl. Based Syst. 2019, 184, 104886.
8. Kakuba, S.; Poulose, A.; Han, D.S. Attention-Based Multi-Learning Approach for Speech Emotion Recognition with Dilated Convolution. IEEE Access 2022, 10, 122302–122313.
9. Amjad, A.; Khan, L.; Chang, H.T. Recognizing Semi-Natural and Spontaneous Speech Emotions Using Deep Neural Networks. IEEE Access 2022, 10, 37149–37163.
10. Zhao, J.; Mao, X.; Chen, L. Speech Emotion Recognition Using Deep 1D & 2D CNN LSTM Networks. Biomed. Signal Process. Control 2019, 47, 312–323.
11. Khan, M.; Kwon, S. CLSTM: Deep Feature-Based Speech Emotion Recognition Using the Hierarchical ConvLSTM Network. Mathematics 2020, 8, 2133.
12. Chen, Z.Q.; Pan, S.T. Integration of Speech and Consecutive Facial Image for Emotion Recognition Based on Deep Learning. Master’s Thesis, National University of Kaohsiung, Kaohsiung, Taiwan, 2021.
13. Amazon Polly. Available online: https://aws.amazon.com/polly/ (accessed on 5 April 2023).
14. Google Speech-to-Text. Available online: https://cloud.google.com/speech-to-text (accessed on 5 April 2023).
15. NVIDIA Emotion Classification. Available online: https://docs.nvidia.com/tao/tao-toolkit/text/emotion_classification/emotion_classification (accessed on 5 April 2023).
16. Abbaschian, B.J.; Sosa, D.S.; Elmaghraby, A. Deep Learning Techniques for Speech Emotion Recognition, from Databases to Models. Sensors 2021, 21, 1249.
17. Han, W.; Jiang, T.; Li, Y.; Schuller, B.; Ruan, H. Ordinal Learning for Emotion Recognition in Customer Service Calls. In Proceedings of the ICASSP, Barcelona, Spain, 4–8 May 2020; pp. 6494–6498.
18. Dhuheir, M.; Albaseer, A.; Baccour, E.; Erbad, A.; Abdallah, M.; Hamdi, M. Emotion Recognition for Healthcare Surveillance Systems using Neural Networks: A Survey. In Proceedings of the International Wireless Communications and Mobile Computing, Harbin City, China, 28 June–2 July 2021; pp. 681–687.
19. Gomez-Canon, J.S.; Cano, E.; Eerola, T.; Herrera, P.; Hu, X.; Yang, Y.H.; Gomez, E. Music Emotion Recognition: Toward New, Robust Standards in Personalized and Context-Sensitive Applications. IEEE Signal Process. Mag. 2021, 38, 106–114.
20. Zhang, R.; Yin, Z.; Wu, Z.; Zhou, S. A novel automatic modulation classification method using attention mechanism and hybrid parallel neural network. Appl. Sci. 2021, 11, 1327.
21. Kulin, M.; Kazaz, T.; Poorter, E.D.; Moerman, I. A survey on machine learning-based performance improvement of wireless networks: PHY, MAC and network layer. Electronics 2021, 10, 318.
22. Mirmozaffari, M.; Yazdani, M.; Boskabadi, A.; Dolatsara, H.A.; Kabirifar, K.; Golilarz, N.A. A novel machine learning approach combined with optimization models for eco-efficiency evaluation. Appl. Sci. 2020, 10, 5210.
23. Noroznia, H.; Gandomkar, M.; Nikoukar, J.; Aranizadeh, A.; Mirmozaffari, M. A Novel Pipeline Age Evaluation: Considering Overall Condition Index and Neural Network Based on Measured Data. Mach. Learn. Knowl. Extr. 2023, 5, 252–268.
24. Han, X.; Chen, S.; Chen, M.; Yang, J. Radar specific emitter identification based on open-selective kernel residual network. Digit. Signal Process. 2023, 134, 103913.
25. Sainath, T.N.; Vinyals, O.; Senior, A.; Sak, H. Convolutional Long Short-Term Memory Fully Connected Deep Neural Networks. In Proceedings of the ICASSP, South Brisbane, QLD, Australia, 19–24 April 2015; pp. 4580–4584.
26. Johnson, J.M.; Khoshgoftaar, T.M. Survey on Deep Learning with Class Imbalance. J. Big Data 2019, 6, 27.
27. Leng, Y.; Zhao, W.; Lin, C.; Sun, C.; Wang, R.; Yuan, Q.; Li, D. LDA-Based Data Augmentation Algorithm for Acoustic Scene Classification. Knowl. Based Syst. 2022, 195, 105600.
28. Jahangir, R.; Teh, Y.W.; Mujtaba, G.; Alroobaea, R.; Shaikh, Z.H.; Ali, I. Convolutional Neural Network-based Cross-corpus Speech Emotion Recognition with Data Augmentation and Features Fusion. Mach. Vis. Appl. 2022, 33, 41.
29. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A Dynamic, Multimodal Set of Facial and Vocal Expressions in North American English. PLoS ONE 2018, 13, e0196391.
30. Burkhardt, F.; Paeschke, A.; Rolfes, M.; Sendlmeier, W.F.; Weiss, B. A Database of German Emotional Speech. In Proceedings of Interspeech 2005 (Eurospeech), 9th European Conference on Speech Communication and Technology, Lisbon, Portugal, 4–8 September 2005.
31. Busso, C.; Bulut, M.; Lee, C.C.; Kazemzadeh, A.; Mower, E.; Samuel, K.; Chang, J.N.; Lee, S.; Narayanan, S.S. IEMOCAP: Interactive Emotional Dyadic Motion Capture Database. Lang. Resour. Eval. 2008, 42, 335–359.
32. Wang, K.; An, N.; Li, B.; Zhang, Y.; Li, L. Speech Emotion Recognition Using Fourier Parameters. IEEE Trans. Affect. Comput. 2015, 6, 69–75.
33. Móstoles, R.; Griol, D.; Callejas, Z.; Fernández-Martínez, F. A Proposal for Emotion Recognition using Speech Features, Transfer Learning and Convolutional Neural Networks. Iberspeech 2021, 12, 55–60.
34. Wu, H.J.; Pan, S.-T. Combination of 1D CNN and LSTM for Realization of Speech Emotion Recognition. In Proceedings of the 27th International Conference on Technologies and Applications of Artificial Intelligence (TAAI 2022), Tainan, Taiwan, 1–3 December 2022.

| Label | Number of Data | Proportion |
|---|---|---|
| Angry | 376 | 15.33% |
| Calm | 376 | 15.33% |
| Disgust | 192 | 7.83% |
| Fear | 376 | 15.33% |
| Happy | 376 | 15.33% |
| Neutral | 188 | 7.67% |
| Sad | 376 | 15.33% |
| Surprised | 192 | 7.83% |
| Total | 2452 | 100% |
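
As a quick arithmetic check on the class distribution, the proportions can be recomputed from the sample counts in the table above. The sketch below uses only those counts; the helper name `class_proportions` is hypothetical.

```python
# Sample counts per emotion label, taken from the table above.
counts = {
    "Angry": 376, "Calm": 376, "Disgust": 192, "Fear": 376,
    "Happy": 376, "Neutral": 188, "Sad": 376, "Surprised": 192,
}

def class_proportions(counts):
    """Return each class's share of the dataset in percent, rounded to 2 decimals."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 2) for label, n in counts.items()}

proportions = class_proportions(counts)
# Ratio between the largest and smallest class, a simple imbalance indicator.
imbalance_ratio = max(counts.values()) / min(counts.values())

for label, pct in proportions.items():
    print(f"{label:10s} {counts[label]:5d} {pct:6.2f}%")
print("largest / smallest class:", imbalance_ratio)
```

The largest classes hold twice as many samples as the smallest one, which is the kind of imbalance that data augmentation is meant to offset.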

| Label | Number of Data | Proportion |
|---|---|---|
| Angry | 127 | 23.74% |
| Boredom | 81 | 15.14% |
| Disgust | 46 | 8.60% |
| Fear | 69 | 12.90% |
| Happy | 71 | 13.27% |
| Neutral | 79 | 14.77% |
| Sad | 62 | 11.59% |
| Total | 535 | 100% |

| Label | Number of Data | Proportion |
|---|---|---|
| Angry | 1103 | 19.94% |
| Happy | 1636 | 29.58% |
| Neutral | 1708 | 30.88% |
| Sad | 1084 | 19.60% |
| Total | 5531 | 100% |

| Experimental Environment | |
|---|---|
| CPU | Intel® Core™ i7-10700 CPU, 2.90 GHz (Intel Corporation, Santa Clara, CA, USA) |
| GPU | NVIDIA GeForce RTX 3090, 32 GB (NVIDIA Corporation, Santa Clara, CA, USA) |
| IDE | Jupyter notebook (Python 3.7.6) |
| Cross-validation | 10-fold |
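
The 10-fold cross-validation listed above can be illustrated with a small index splitter. This is a sketch under assumptions: the source does not say whether the data were shuffled or stratified by emotion label, so the code performs a plain shuffled split, and the function name `k_fold_indices` and the seed are hypothetical.

```python
import random

def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_indices, test_indices) for each of k folds.

    Shuffling with a fixed seed is an assumption; no stratification is applied.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

# Usage with 2452 samples, the size of the 8-class dataset listed earlier.
folds = list(k_fold_indices(2452, k=10))
for i, (train_idx, test_idx) in enumerate(folds, start=1):
    print(f"fold {i}: train={len(train_idx)} test={len(test_idx)}")
```

Each sample appears in exactly one test fold, and the reported accuracy is then the mean over the ten folds.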

| Layer | Operations | Parameters |
|---|---|---|
| LFLB 1 | Conv1D (input), BatchNormalization, MaxPooling1D | Conv1D: filters = 256, kernel_size = 5, strides = 1; MaxPooling1D: pool_size = 5, strides = 2 |
| LFLB 2 | Conv1D, BatchNormalization, MaxPooling1D | Conv1D: filters = 128, kernel_size = 5, strides = 1; MaxPooling1D: pool_size = 5, strides = 2 |
| LFLB 3 | Conv1D, BatchNormalization, MaxPooling1D | Conv1D: filters = 128, kernel_size = 5, strides = 1; MaxPooling1D: pool_size = 5, strides = 2 |
| LFLB 4 | Conv1D, BatchNormalization, MaxPooling1D | Conv1D: filters = 64, kernel_size = 3, strides = 1; MaxPooling1D: pool_size = 5, strides = 2 |
| LFLB 5 | Conv1D, BatchNormalization, MaxPooling1D | Conv1D: filters = 64, kernel_size = 3, strides = 1; MaxPooling1D: pool_size = 3, strides = 2 |
| Flatten | | |
| Dense | | units = 256, activation = “relu” |
| BatchNormalization | | |
| Dense (output) | | activation = “softmax” |
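
To see how the five LFLB blocks in the table above shrink the time axis, the output length of each block can be traced by hand. The sketch below takes the kernel sizes, pool sizes, strides, and filter counts from the table; the padding mode ("valid", with no zero padding) and the input length of 256 are assumptions, since the table specifies neither, and the helper names are hypothetical.

```python
def out_len(length, window, stride):
    """Output length of a 1D convolution or pooling with 'valid' padding (assumed)."""
    return (length - window) // stride + 1

# (filters, conv kernel_size, pool_size) per LFLB, taken from the table above.
# Conv stride is 1 and pooling stride is 2 in every block.
LFLBS = [(256, 5, 5), (128, 5, 5), (128, 5, 5), (64, 3, 5), (64, 3, 3)]

def trace_shapes(input_len):
    """Return the (time_steps, channels) shape after each LFLB."""
    shapes = []
    length = input_len
    for filters, kernel, pool in LFLBS:
        length = out_len(length, kernel, 1)  # Conv1D
        length = out_len(length, pool, 2)    # MaxPooling1D
        shapes.append((length, filters))
    return shapes

# Usage with a hypothetical input of 256 time steps.
shapes = trace_shapes(256)
for i, shape in enumerate(shapes, start=1):
    print(f"LFLB {i}: {shape}")
flatten_units = shapes[-1][0] * shapes[-1][1]
print("Flatten:", flatten_units)
```

Under these assumptions the time axis shrinks from 256 to 3 steps, so the Flatten layer passes 192 values to the first Dense layer. With "same" padding the convolutions would preserve the length and only the pooling layers would reduce it.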

| Layer | Information |
|---|---|
| Conv1D (input) | filters = 256, kernel_size = 5, strides = 1 |
| Conv1D | filters = 128, kernel_size = 5, strides = 1 |
| Conv1D | filters = 128, kernel_size = 5, strides = 1 |
| Conv1D | filters = 64, kernel_size = 3, strides = 1 |
| Conv1D | filters = 64, kernel_size = 3, strides = 1 |
| Flatten | |
| LSTM | units = 50 |
| Dense | units = 256, activation = “relu” |
| Dense (output) | units = 8, activation = “softmax” |
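
A rough sense of the model size follows from the standard parameter-count formulas for Conv1D and LSTM layers. The sketch below applies them to the filter counts and kernel sizes in the table above. It assumes a single input channel for the first convolution, and, because the table does not say how the features are arranged into a sequence for the LSTM, the LSTM input width of 64 is purely an assumption. The function names are hypothetical.

```python
def conv1d_params(in_channels, filters, kernel_size):
    """Weights plus biases of a Conv1D layer."""
    return kernel_size * in_channels * filters + filters

def lstm_params(input_dim, units):
    """Four gates, each with input weights, recurrent weights and a bias."""
    return 4 * (units * (input_dim + units) + units)

# (filters, kernel_size) of the five Conv1D layers, taken from the table above.
CONV_LAYERS = [(256, 5), (128, 5), (128, 5), (64, 3), (64, 3)]

conv_counts = []
in_channels = 1  # assumption: one feature channel at the input
for filters, kernel_size in CONV_LAYERS:
    conv_counts.append(conv1d_params(in_channels, filters, kernel_size))
    in_channels = filters

# LSTM with 50 units (from the table) reading 64-dimensional steps (assumed).
lstm_count = lstm_params(input_dim=64, units=50)

print("Conv1D parameters per layer:", conv_counts)
print("Conv1D total:", sum(conv_counts))
print("LSTM parameters (assumed input width 64):", lstm_count)
```

The second convolution dominates the convolutional part because it maps 256 channels to 128 with a kernel of size 5.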

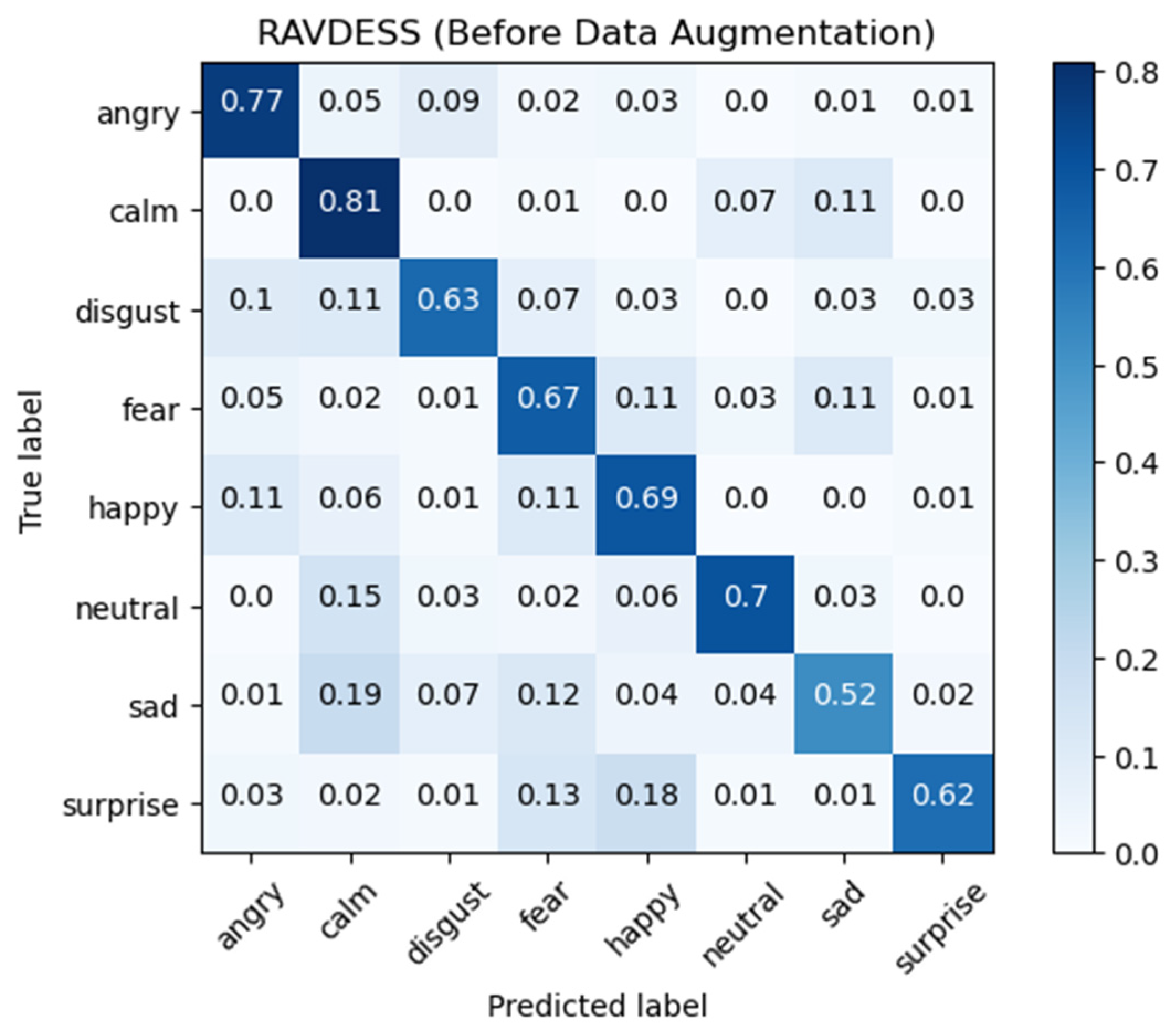

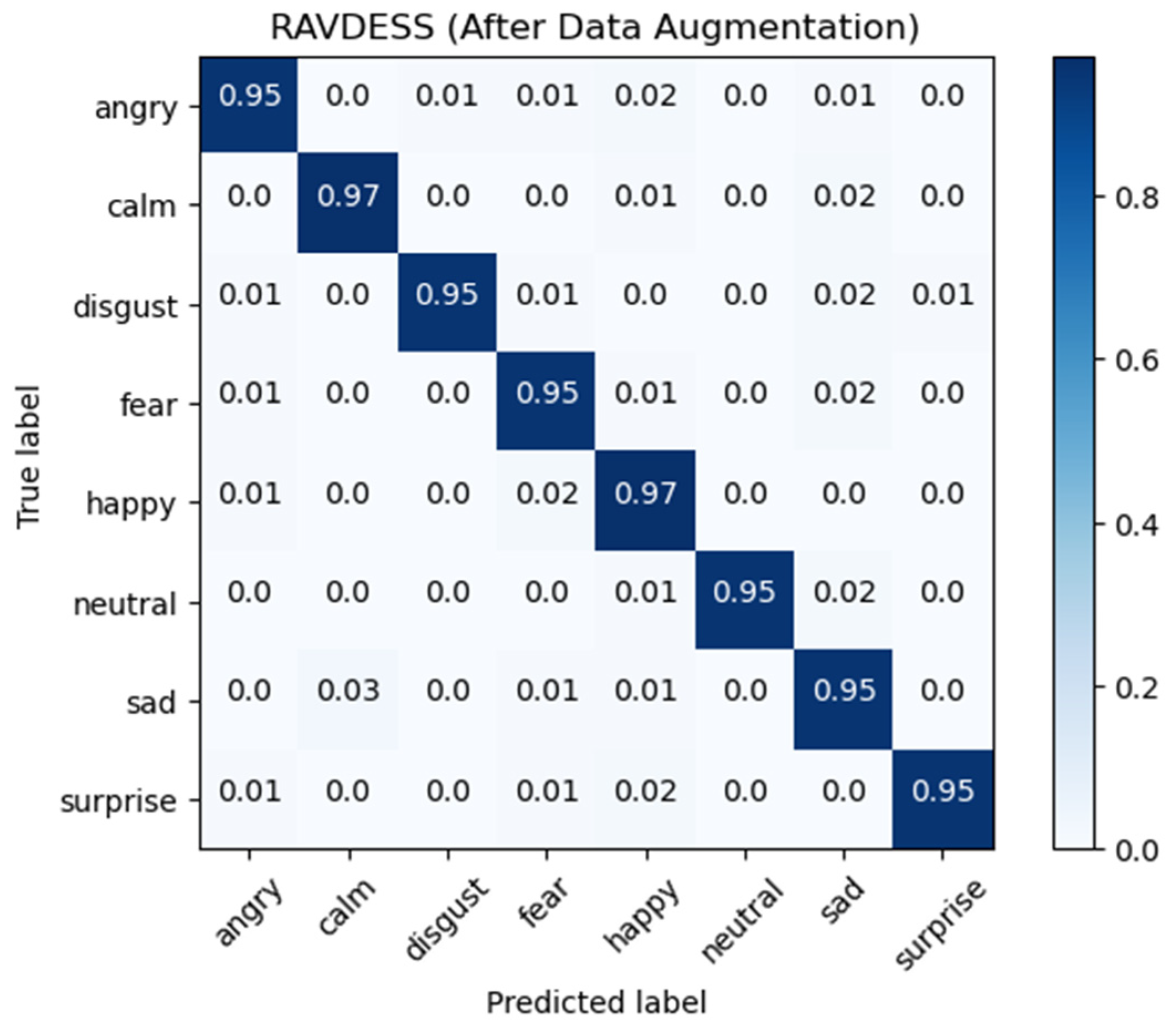

| Model | Raw Audio Data | Data Augmentation |
|---|---|---|
| CNN-DNN | 63.41% | 91.87% |
| CLDNN | 68.70% | 95.52% |

| Label | Precision | Recall | F1-Score |
|---|---|---|---|
| Angry | 93.54% | 93.85% | 93.69% |
| Calm | 93.53% | 95.99% | 94.74% |
| Disgust | 95.02% | 88.86% | 91.84% |
| Fear | 88.91% | 91.56% | 90.22% |
| Happy | 90.52% | 90.96% | 90.74% |
| Neutral | 92.84% | 91.07% | 91.95% |
| Sad | 88.35% | 91.52% | 89.91% |
| Surprised | 97.42% | 88.18% | 92.57% |
| Average | 92.52% | 91.50% | 91.96% |
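
The relationship between the columns of the table above can be sketched as follows: the F1-score is the harmonic mean of precision and recall, and the "Average" row is consistent with an unweighted (macro) mean over the eight classes. The precision and recall values are copied from the table; treating the average as a macro mean is an inference from the numbers, not a statement in the source.

```python
# (precision, recall) in percent per class, copied from the table above.
rows = {
    "Angry": (93.54, 93.85), "Calm": (93.53, 95.99),
    "Disgust": (95.02, 88.86), "Fear": (88.91, 91.56),
    "Happy": (90.52, 90.96), "Neutral": (92.84, 91.07),
    "Sad": (88.35, 91.52), "Surprised": (97.42, 88.18),
}

def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# Unweighted mean over classes (macro average).
macro_precision = sum(p for p, _ in rows.values()) / len(rows)
macro_recall = sum(r for _, r in rows.values()) / len(rows)

for label, (p, r) in rows.items():
    print(f"{label:10s} F1 = {f1_score(p, r):.2f}%")
print(f"macro precision = {macro_precision:.2f}%, macro recall = {macro_recall:.2f}%")
```

For the Angry class, for example, the harmonic mean of 93.54% and 93.85% is 93.69%, matching the tabulated F1-score.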

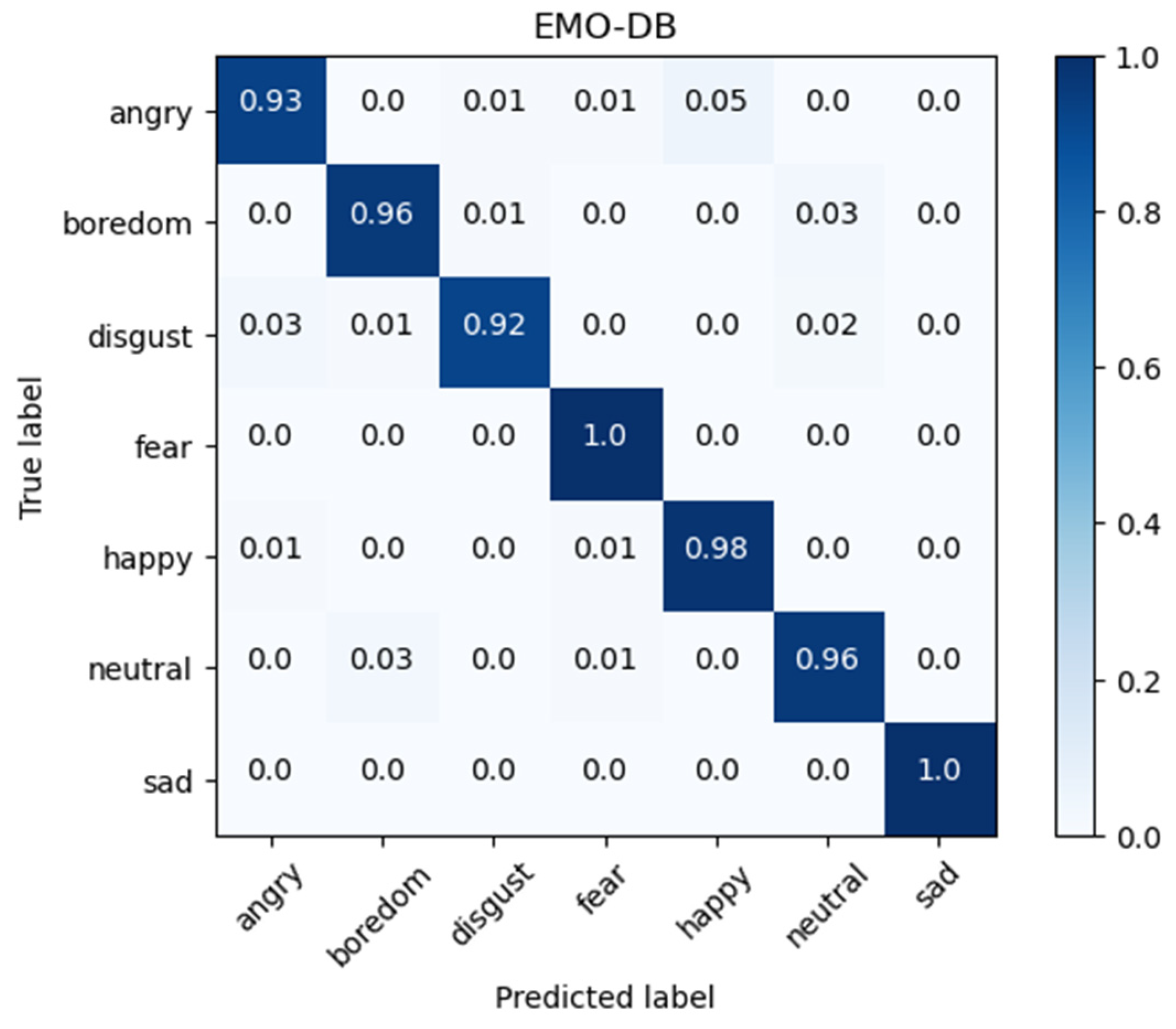

| Label | Precision | Recall | F1-Score |
|---|---|---|---|
| Angry | 98.04% | 92.59% | 95.24% |
| Boredom | 95.53% | 95.86% | 95.70% |
| Disgust | 95.10% | 92.38% | 93.72% |
| Fear | 94.65% | 100% | 97.25% |
| Happy | 90.44% | 98.15% | 94.14% |
| Neutral | 95.71% | 95.71% | 95.71% |
| Sad | 100% | 99.60% | 99.80% |
| Average | 95.64% | 96.33% | 95.94% |

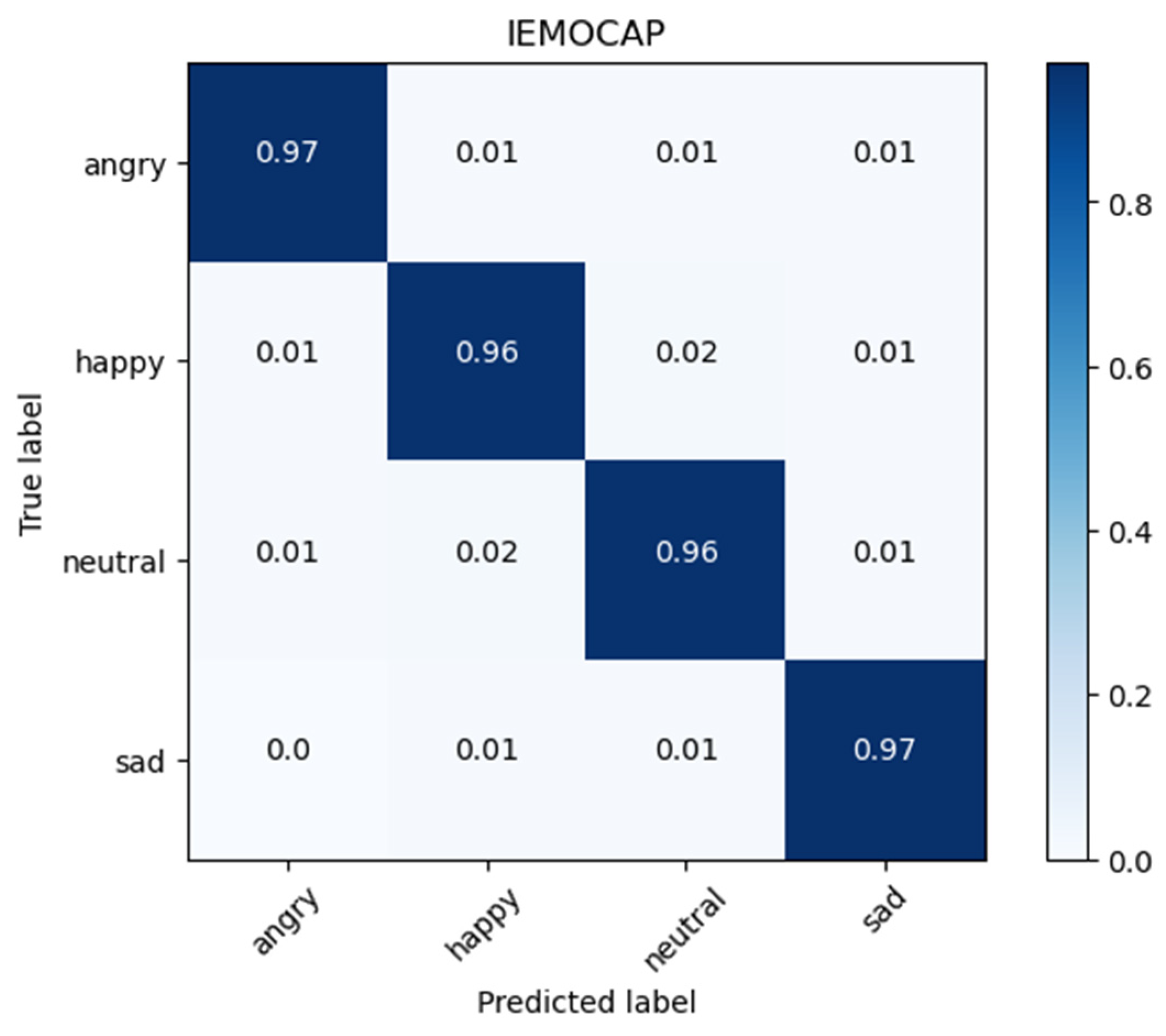

| Label | Precision | Recall | F1-Score |
|---|---|---|---|
| Angry | 95.92% | 96.92% | 96.42% |
| Happy | 96.18% | 95.53% | 95.86% |
| Neutral | 96.38% | 96.02% | 96.20% |
| Sad | 96.26% | 96.63% | 96.60% |
| Average | 96.19% | 96.28% | 96.27% |

| Methods | Cross-Validation | Accuracy |
|---|---|---|
| M. Sajjad et al. [5] | 5-fold | 77.02% |
| S. Kwon [6] | 5-fold | 79.50% |
| A. Bhavan et al. [7] | 10-fold | 75.69% |
| S. Kakuba et al. [8] | 10-fold | 85.89% |
| Proposed model | 10-fold | 95.52% |

| Methods | Cross-Validation | Accuracy |
|---|---|---|
| M. Sajjad et al. [5] | 5-fold | 85.57% |
| A. Bhavan et al. [7] | 10-fold | 92.45% |
| S. Kakuba et al. [8] | 10-fold | 95.93% |
| J. Zhao et al. [10] | 5-fold | 95.89% |
| Proposed model | 10-fold | 95.84% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pan, S.-T.; Wu, H.-J. Performance Improvement of Speech Emotion Recognition Systems by Combining 1D CNN and LSTM with Data Augmentation. Electronics 2023, 12, 2436. https://doi.org/10.3390/electronics12112436
