iDehaze: Supervised Underwater Image Enhancement and Dehazing via Physically Accurate Photorealistic Simulations

Abstract

1. Introduction

- Design and implementation of a unique photorealistic 3D simulation system modelled after real-world conditions for underwater image enhancement and dehazing.

- A deep convolutional neural network (CNN) for underwater image dehazing and colour transfer, trained on purely synthetic data and the UIEB dataset.

- A customizable synthetic dataset and simulation toolkit for training and diagnosing underwater image-enhancement systems, with robust ground truth and evaluation metrics.

2. Related Work

- Underwater image enhancement: Restoring an underwater image is often labelled as “dehazing” or “enhancement” and presented as a cumulative process in which the colours of the image are enhanced through a colour correction pass, and local and global contrast are altered to yield the enhanced final image. Such pipelines are often collections of linear and non-linear operations, built from algorithms that break images down into regions [6] or estimate attenuation and scattering [7] in order to approximate the real scattering and correct for it. However, for reasons explored further in Section 3, colour correction and dehazing are two different problems that require separate solutions.

- Prior GAN-based approaches and synthetic data: The use of synthetic data has been the topic of several recent publications [8,9,10,11,12,13,14,15,16], and its application varies greatly depending on how the data is generated. In this context, synthetic data mostly refers to producing underwater-looking images from on-land images. One might apply a scattering filter effect [8] or use colour transformations to approximate the look of an underwater image. Most notably, Li et al. converted real indoor on-land images to underwater-looking images with a GAN (generative adversarial network) [9,10], which sparked an avalanche of GAN-based underwater image enhancement methods [11,12,13,14,15,16]. GANs remain a subject of interest for underwater image enhancement and restoration (UIER) because labelled, high-quality data is rare in UIER. While such methods can be helpful, there are caveats and challenges to GAN-based synthetic data generation and image-enhancement models. GANs used for image enhancement are typically difficult to train because they are very sensitive to hyper-parameters such as the learning rate and momentum, which makes stable GAN training an open research problem and a very common issue in GAN-based approaches [11,15,17,18]. In comparison, CNNs are feedforward models that are far more controllable in training and testing. Furthermore, features of the real underwater domain might differ from the features learned and generated by the GAN, causing further inaccuracies in the supervised image-enhancement models that learn from this generated data. Therefore, the most accurate method of generating synthetic data for underwater scenarios is to use 3D photorealistic simulations that allow granular control over every variable, can be modelled after many different environments, and allow for diagnostic methods and wider ground truth annotations [19].

- Lack of standardized data: Underwater image enhancement suffers from a lack of high-quality, annotated data. While there have been numerous attempts to gather underwater images from real environments [11,20], the inconsistencies in image resolution, amount of haze, and imaged objects make the training and testing of deep learning models significantly more challenging. For example, the EUVP dataset [11] contains small images, the UFO-120 dataset [12] contains images at two resolutions, and the UIEB dataset [5] contains images of various resolutions. This difference between image samples, especially in image quality, haze, and imaged objects, is an issue for many learning-based systems, both in training and in evaluation.

- Underwater simulations: Currently, there are a handful of open-source underwater simulations available. Prior simulations developed exclusively for underwater use include UWSim (published in 2012) [21] and the UUV (unmanned underwater vehicle) Simulator (published in 2016) [22]. These provide tools for simulating rigid body dynamics or sensors such as sonar or pressure sensors. However, these tools have not been recently updated or developed to support modern hardware. More importantly, these simulations do not focus on real-time, realistic image rendering with ray tracing, nor are they designed for modern diagnostic methods such as data ablation [1,23,24,25]. In contrast, our simulation supports real-time ray tracing and physics-based attenuation and scattering, and allows dynamic modifications to the structure of the scenes and captured images.
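To make the idea of physics-based attenuation and scattering concrete, the following is a minimal sketch of a widely used simplified image formation model: the direct signal decays exponentially with distance (Beer-Lambert-style attenuation) and a veiling-light term grows as the signal fades. It is an illustration only and is not the rendering model of the simulator described here, which uses ray tracing. The function name `add_synthetic_haze` and the coefficient values used in any call are hypothetical.

```python
import numpy as np


def add_synthetic_haze(clean, depth, beta, backscatter):
    """Apply a simplified attenuation + backscatter model to a clean RGB image.

    clean:       float array (H, W, 3) in [0, 1], haze-free scene radiance
    depth:       float array (H, W), camera-to-scene distance in metres
    beta:        per-channel attenuation coefficients (3,), in 1/m (assumed values)
    backscatter: per-channel veiling-light colour (3,), in [0, 1] (assumed values)
    """
    clean = np.asarray(clean, dtype=np.float64)
    depth = np.asarray(depth, dtype=np.float64)[..., None]  # (H, W, 1) for broadcasting
    beta = np.asarray(beta, dtype=np.float64)
    backscatter = np.asarray(backscatter, dtype=np.float64)

    # Fraction of the scene radiance that survives the water column, per channel.
    transmission = np.exp(-beta * depth)

    # Attenuated direct signal plus the veiling light that replaces it.
    hazy = clean * transmission + backscatter * (1.0 - transmission)
    return np.clip(hazy, 0.0, 1.0)
```

With a larger attenuation coefficient on the red channel than on green and blue, distant pixels drift towards the blue-green veiling colour, which is the qualitative behaviour that filter-based synthetic data generators try to imitate. A per-pixel model like this ignores lighting, geometry and multiple scattering, which is part of the motivation for full 3D simulation.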

3. Methods

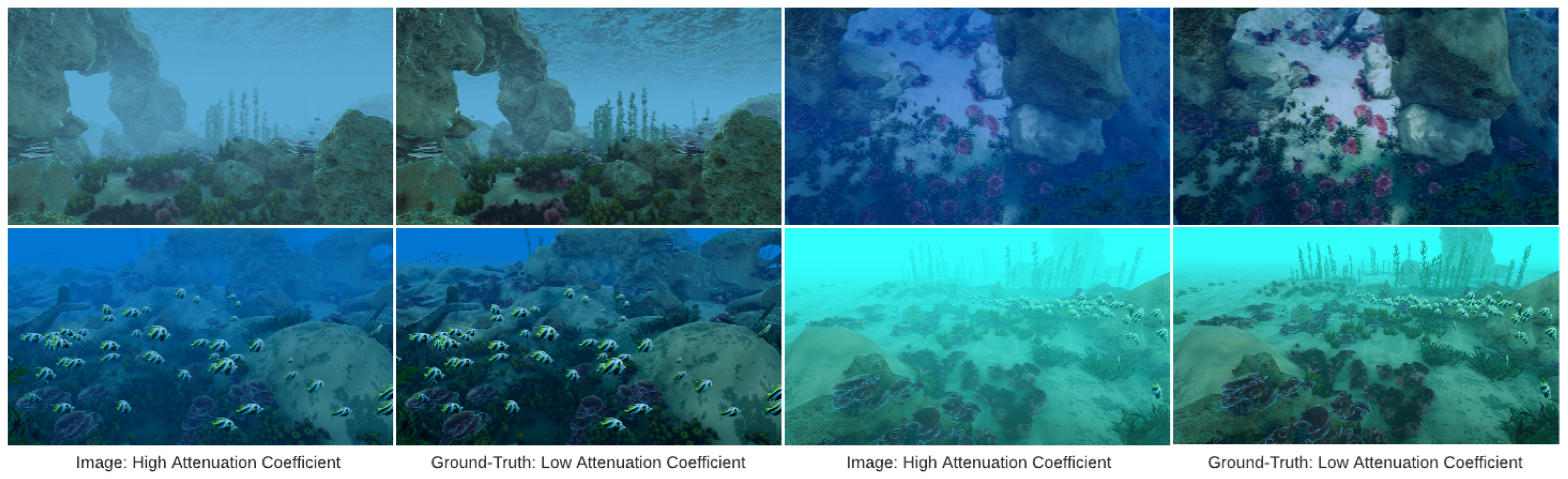

3.1. Simulation of Underwater Environments

3.2. Dehazing vs. Colour Transfer

4. Experiments

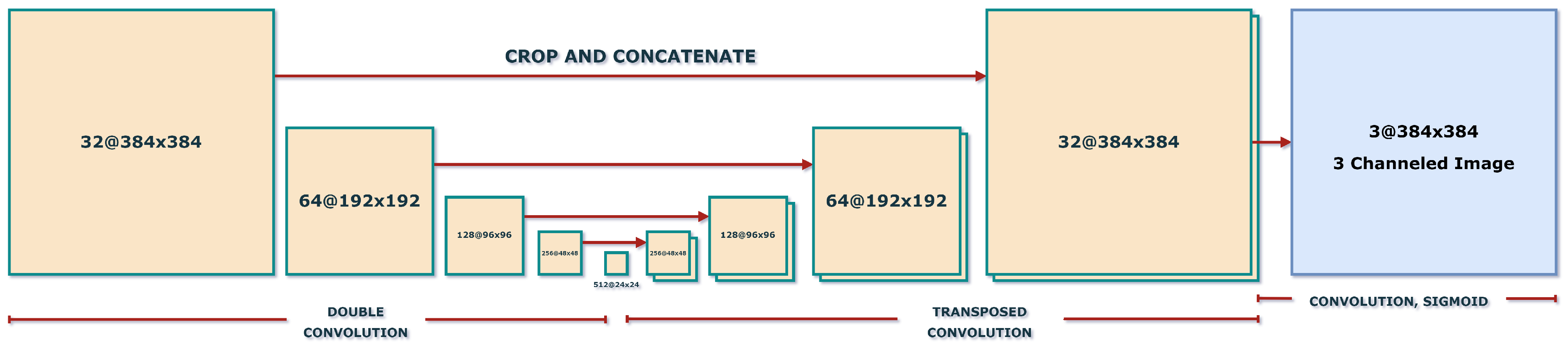

4.1. Neural Networks

4.2. Data Acquisition

4.3. Experimental Setup

4.4. Metrics

4.5. Datasets

5. Results

5.1. Discussion

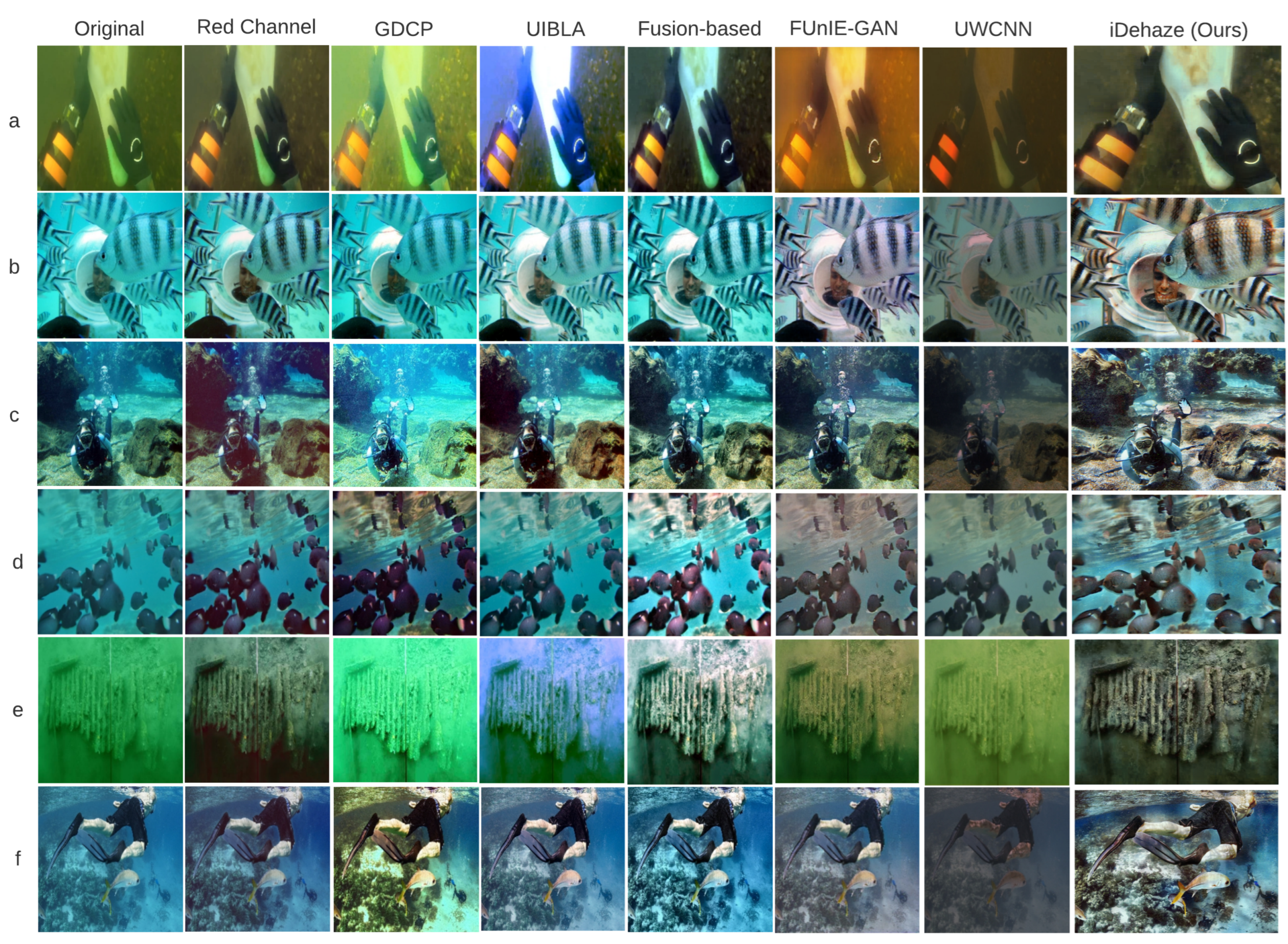

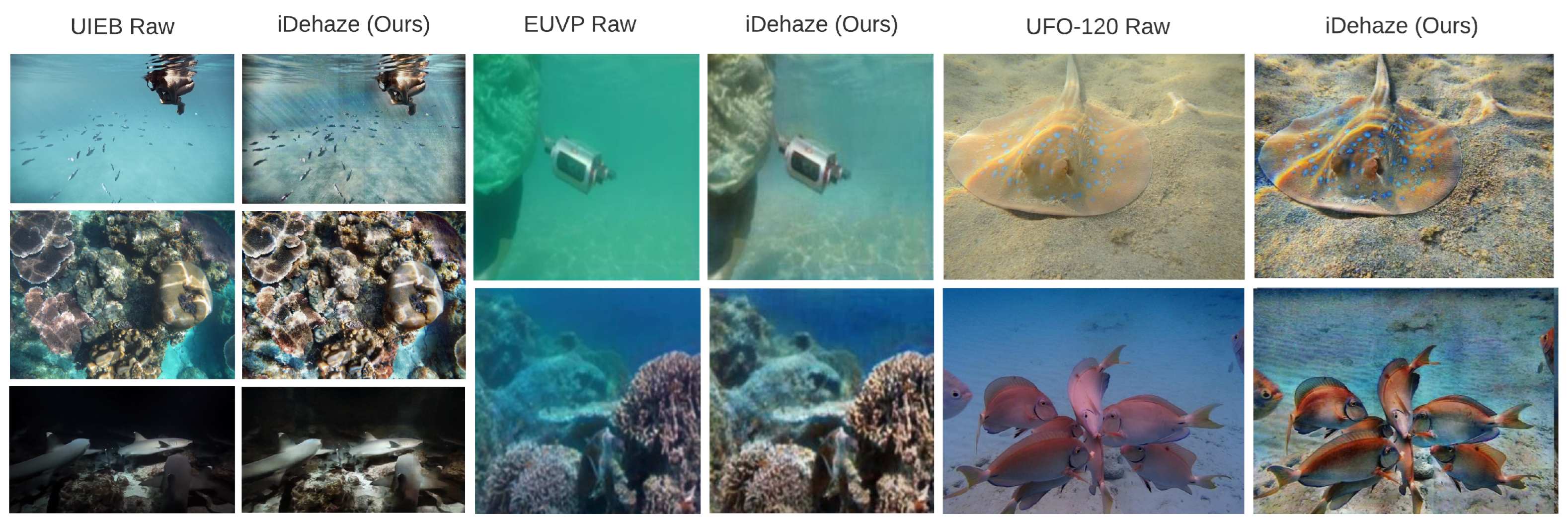

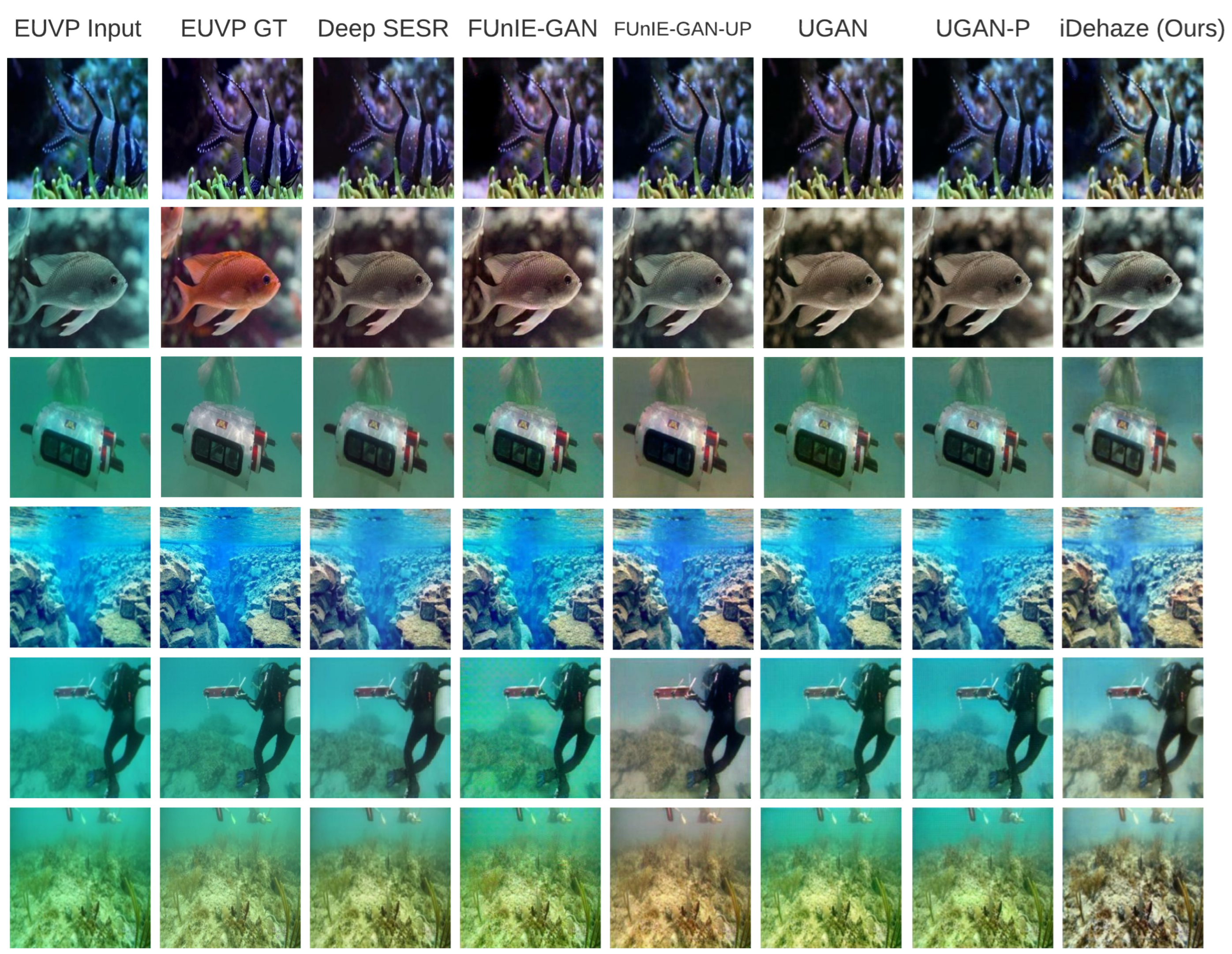

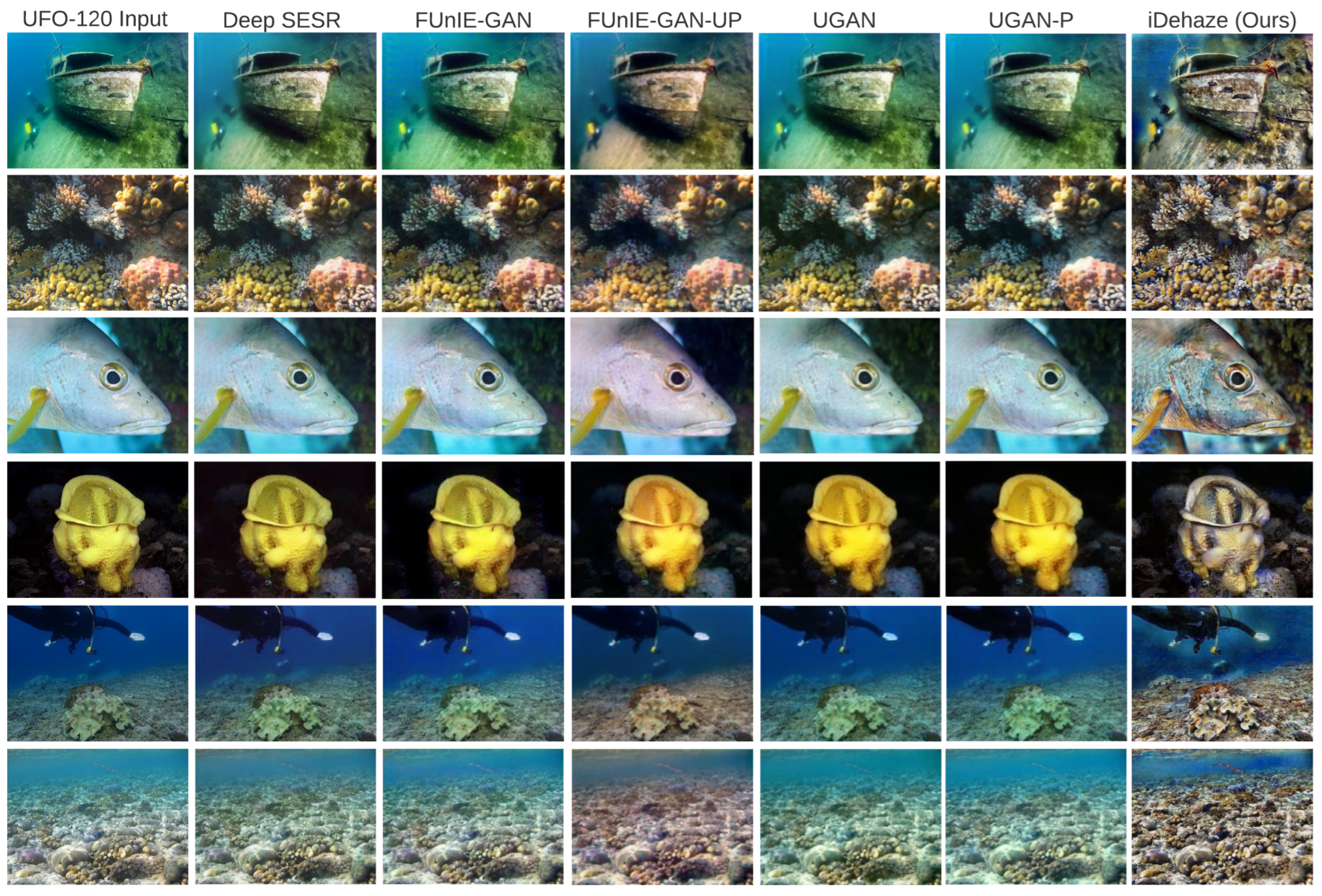

- Qualitative results: Figures 5–8 show qualitative results from the output of several different image-enhancement methods. In Figure 5, the images had to be resized in previous works due to limitations in their implementations. Such limitations do not exist in the iDehaze pipeline, which can dehaze images at their original aspect ratio.

- UIER metrics: The underwater image-enhancement field has a major challenge with metrics. Namely, it can be very difficult to express the relationships between metrics such as UIQM and SSIM when they are looked at in isolation. First, it is important to state that our dehazing model learns to deal specifically with haze, and is trained on specialized data that isolates that feature in its image and ground truth. Therefore, it removes significant amounts of haze in the mid to high range in deep underwater images. This makes the output of the dehazing model noticeably sharper, and its structure noticeably clearer, than the input, and even more different from the ground truth. “Enhancing” an image means changing its structure, and that will inevitably cause the SSIM value to drop. At the same time, a model that enhances an image but yields a very low SSIM is not desirable, because the enhanced image still needs to be substantially similar to the input image. Our pipeline dramatically changes the amount of haze in the input images, which causes the SSIM score to decrease. We argue that, to accurately evaluate the performance of any image-enhancement model, the SSIM/PSNR values should be considered in tandem with the UIQM and qualitative results. Furthermore, the results of such experiments should be calculated with the exact same constants, and ideally the exact same code, to be accurately comparable.

- Strengths of the patch-based approach: In our pipeline, we split the images at the input of the U-Net [30] (the CNN used in the iDehaze pipeline) into patches and stitch them back together at the output. Because of this, iDehaze is not sensitive to the input image size during training and can accept various sizes and image qualities as input, an important feature when the availability of high-resolution, labelled real underwater images is limited. This also multiplies the available training data by a large factor. However, if this approach is used for inference, stitching the image patches together can create a patchwork texture in some images, which appears from time to time in the iDehaze image outputs. It is possible to remedy this by using large patch sizes, large overlaps between patches, and averaging the predictions in the overlapping regions at reconstruction. At inference, we instead exploit the flexible nature of the U-Net: we pad the images to the nearest square resolution divisible by 16 and feed the entire image to the network, resulting in clean output images with no patchwork issues or artefacts.

- The use of compressed images: A frustrating fact about the available image datasets in the UIER field is their use of compressed image formats. More specifically, the JPEG format (.jpg and .jpeg files) uses lossy compression to save disk space. Image compression can introduce artefacts that, while invisible to the human eye, can affect the neural network’s performance. Hopefully, as newer and more sophisticated image datasets are gathered in the UIER field, the presence of compressed images will eventually fade away. To take a step in the correct direction, the iDehaze synthetic dataset uses lossless 32-bit PNG images and will be freely available for public use.
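The two reconstruction strategies mentioned in the patch-based discussion above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the iDehaze implementation: the helper names (`pad_to_square_multiple`, `crop_to_original`, `extract_patches`, `blend_patches`) are hypothetical, padding at the bottom and right with edge replication is an assumed choice, and the network itself is left out.

```python
import numpy as np


def pad_to_square_multiple(image, multiple=16):
    """Pad an (H, W[, C]) image to a square whose side is a multiple of `multiple`."""
    h, w = image.shape[:2]
    side = -(-max(h, w) // multiple) * multiple  # round max(H, W) up to the multiple
    pad_spec = [(0, side - h), (0, side - w)] + [(0, 0)] * (image.ndim - 2)
    # Edge replication is an assumption; the source does not state the padding mode.
    return np.pad(image, pad_spec, mode="edge"), (h, w)


def crop_to_original(image, original_hw):
    """Undo pad_to_square_multiple after the network has processed the image."""
    h, w = original_hw
    return image[:h, :w]


def extract_patches(image, size, stride):
    """Cut overlapping square patches; the last row/column is shifted to hit the border."""
    h, w = image.shape[:2]
    ys = list(range(0, max(h - size, 0) + 1, stride))
    xs = list(range(0, max(w - size, 0) + 1, stride))
    if ys[-1] + size < h:
        ys.append(h - size)
    if xs[-1] + size < w:
        xs.append(w - size)
    corners = [(y, x) for y in ys for x in xs]
    patches = [image[y:y + size, x:x + size] for y, x in corners]
    return patches, corners


def blend_patches(patches, corners, out_shape):
    """Reassemble patches, averaging predictions wherever patches overlap."""
    acc = np.zeros(out_shape, dtype=np.float64)
    count = np.zeros(tuple(out_shape[:2]) + (1,) * (len(out_shape) - 2))
    for patch, (y, x) in zip(patches, corners):
        ph, pw = patch.shape[:2]
        acc[y:y + ph, x:x + pw] += patch
        count[y:y + ph, x:x + pw] += 1.0
    return acc / np.maximum(count, 1.0)
```

In whole-image inference, one would call `pad_to_square_multiple`, run the network once on the padded image, and call `crop_to_original` on the result. The divisibility requirement typically comes from the repeated 2× downsampling in a U-Net encoder, which is why a fixed multiple such as 16 is used.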

| Dataset | Metric | Deep SESR | FUnIE-GAN | FUnIE-GAN-UP | UGAN | UGAN-P | iDehaze (Ours) |
|---|---|---|---|---|---|---|---|
| EUVP | PSNR | 25.30 ± 2.63 | 26.19 ± 2.87 | 25.21 ± 2.75 | 26.53 ± 3.14 | 26.53 ± 2.96 | 23.01 ± 1.97 |
| | SSIM | 0.81 ± 0.07 | 0.82 ± 0.08 | 0.78 ± 0.06 | 0.80 ± 0.05 | 0.80 ± 0.05 | 0.84 ± 0.09 |
| | UIQM | 2.95 ± 0.32 | 2.84 ± 0.46 | 2.93 ± 0.45 | 2.89 ± 0.43 | 2.93 ± 0.41 | 3.11 ± 0.36 |
| UFO-120 | PSNR | 26.46 ± 3.13 | 24.72 ± 2.57 | 23.29 ± 2.53 | 24.23 ± 2.96 | 24.11 ± 2.85 | 17.55 ± 1.86 |
| | SSIM | 0.78 ± 0.07 | 0.74 ± 0.06 | 0.67 ± 0.07 | 0.69 ± 0.07 | 0.69 ± 0.07 | 0.72 ± 0.07 |
| | UIQM | 2.98 ± 0.37 | 2.88 ± 0.41 | 2.60 ± 0.45 | 2.54 ± 0.45 | 2.59 ± 0.43 | 3.29 ± 0.26 |
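Because the discussion above stresses that PSNR and SSIM are only comparable when computed with identical constants and code, the sketch below shows where such constants enter. It is an illustration and not the evaluation code behind the reported numbers. `global_ssim` is a simplified single-window variant of SSIM (the standard metric of Wang et al. averages the same expression over local windows), and the defaults `k1=0.01`, `k2=0.03` and `data_range=1.0` are the commonly used values, assumed here rather than taken from the source.

```python
import numpy as np


def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible pixel value."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    mse = np.mean((reference - test) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


def global_ssim(reference, test, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (simplified; not the windowed metric)."""
    x = np.asarray(reference, dtype=np.float64).ravel()
    y = np.asarray(test, dtype=np.float64).ravel()

    # Stabilising constants: these depend on k1, k2 and the assumed data range.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = np.mean((x - mu_x) * (y - mu_y))

    numerator = (2.0 * mu_x * mu_y + c1) * (2.0 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)
```

Changing `data_range` (for example 1.0 versus 255), `k1` or `k2` changes the score for the same image pair, so two papers that report SSIM or PSNR with different settings are not directly comparable.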

5.2. Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Mousavi, M.; Khanal, A.; Estrada, R. AI Playground: Unreal Engine-based Data Ablation Tool for Deep Learning. In Proceedings of the International Symposium on Visual Computing, San Diego, CA, USA, 5–7 October 2020; pp. 518–532.

2. Sajjan, S.S.; Moore, M.; Pan, M.; Nagaraja, G.; Lee, J.; Zeng, A.; Song, S. ClearGrasp: 3D Shape Estimation of Transparent Objects for Manipulation. arXiv 2019, arXiv:1910.02550.

3. Mahler, J.; Liang, J.; Niyaz, S.; Laskey, M.; Doan, R.; Liu, X.; Ojea, J.A.; Goldberg, K. Dex-Net 2.0: Deep Learning to Plan Robust Grasps with Synthetic Point Clouds and Analytic Grasp Metrics. arXiv 2017, arXiv:1703.09312.

4. Haltakov, V.; Unger, C.; Ilic, S. Framework for Generation of Synthetic Ground Truth Data for Driver Assistance Applications. In Proceedings of the GCPR, Saarbrücken, Germany, 3–6 September 2013.

5. Li, C.; Guo, C.; Ren, W.; Cong, R.; Hou, J.; Kwong, S.; Tao, D. An Underwater Image Enhancement Benchmark Dataset and Beyond. IEEE Trans. Image Process. 2019, 29, 4376–4389.

6. Li, T.; Rong, S.; Zhao, W.; Chen, L.; Liu, Y.; Zhou, H.; He, B. Underwater image enhancement using adaptive color restoration and dehazing. Opt. Express 2022, 30, 6216–6235.

7. Liang, Z.; Wang, Y.; Ding, X.; Mi, Z.; Fu, X. Single underwater image enhancement by attenuation map guided color correction and detail preserved dehazing. Neurocomputing 2021, 425, 160–172.

8. Iqbal, K.; Abdul Salam, R.; Azam, O.; Talib, A. Underwater Image Enhancement Using an Integrated Colour Model. IAENG Int. J. Comput. Sci. 2007, 34, 219–230.

9. Li, N.; Zheng, Z.; Zhang, S.; Yu, Z.; Zheng, H.; Zheng, B. The Synthesis of Unpaired Underwater Images Using a Multistyle Generative Adversarial Network. IEEE Access 2018, 6, 54241–54257.

10. Li, J.; Skinner, K.A.; Eustice, R.M.; Johnson-Roberson, M. WaterGAN: Unsupervised Generative Network to Enable Real-Time Color Correction of Monocular Underwater Images. IEEE Robot. Autom. Lett. 2018, 3, 387–394.

11. Islam, M.J.; Xia, Y.; Sattar, J. Fast Underwater Image Enhancement for Improved Visual Perception. IEEE Robot. Autom. Lett. 2020, 5, 3227–3234.

12. Islam, M.J.; Luo, P.; Sattar, J. Simultaneous Enhancement and Super-Resolution of Underwater Imagery for Improved Visual Perception. arXiv 2020, arXiv:2002.01155.

13. Fabbri, C.; Islam, M.J.; Sattar, J. Enhancing Underwater Imagery using Generative Adversarial Networks. arXiv 2018, arXiv:1801.04011.

14. Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual Generative Adversarial Networks for Small Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

15. Chen, Y.S.; Wang, Y.C.; Kao, M.H.; Chuang, Y.Y. Deep Photo Enhancer: Unpaired Learning for Image Enhancement From Photographs with GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

16. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

17. Ignatov, A.; Kobyshev, N.; Vanhoey, K.; Timofte, R.; Gool, L.V. DSLR-Quality Photos on Mobile Devices with Deep Convolutional Networks. arXiv 2017, arXiv:1704.02470.

18. Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. arXiv 2016, arXiv:1603.08155.

19. Mousavi, M.; Vaidya, S.; Sutradhar, R.; Ashok, A. OpenWaters: Photorealistic Simulations For Underwater Computer Vision. In Proceedings of the 15th International Conference on Underwater Networks and Systems (WUWNet’21), Shenzhen, China, 22–24 November 2021; Association for Computing Machinery: New York, NY, USA, 2021.

20. Berman, D.; Levy, D.; Avidan, S.; Treibitz, T. Underwater Single Image Color Restoration Using Haze-Lines and a New Quantitative Dataset. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2822–2837.

21. Prats, M.; Pérez, J.; Fernández, J.J.; Sanz, P.J. An open source tool for simulation and supervision of underwater intervention missions. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Algarve, Portugal, 7–12 October 2012; pp. 2577–2582.

22. Manhães, M.M.M.; Scherer, S.A.; Voss, M.; Douat, L.R.; Rauschenbach, T. UUV Simulator: A Gazebo-based package for underwater intervention and multi-robot simulation. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–8.

23. Mousavi, M.; Estrada, R. SuperCaustics: Real-time, open-source simulation of transparent objects for deep learning applications. arXiv 2021, arXiv:2107.11008.

24. Álvarez Tuñón, O.; Jardón, A.; Balaguer, C. Generation and Processing of Simulated Underwater Images for Infrastructure Visual Inspection with UUVs. Sensors 2019, 19, 5497.

25. Mousavi, M. Towards Data-Centric Artificial Intelligence with Flexible Photorealistic Simulations. Ph.D. Thesis, Georgia State University, Atlanta, GA, USA, 2022.

26. Epic Games. Unreal Engine 4.26. 2020. Available online: https://www.unrealengine.com (accessed on 1 January 2022).

27. Bouguer, P. Essai d’Optique sur la Gradation de la Lumière; Claude Jombert: Paris, France, 1729.

28. Naik, A.; Swarnakar, A.; Mittal, K. Shallow-UWnet: Compressed Model for Underwater Image Enhancement. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 2–9 February 2021.

29. Yan, X.; Wang, G.; Wang, G.; Wang, Y.; Fu, X. A novel biologically-inspired method for underwater image enhancement. Signal Process. Image Commun. 2022, 104, 116670.

30. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

31. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

32. Panetta, K.; Gao, C.; Agaian, S. Human-Visual-System-Inspired Underwater Image Quality Measures. IEEE J. Ocean. Eng. 2016, 41, 541–551.

33. Galdran, A.; Pardo, D.; Picón, A.; Alvarez-Gila, A. Automatic Red-Channel Underwater Image Restoration. J. Vis. Commun. Image Represent. 2015, 26, 132–145.

34. Peng, Y.T.; Cao, K.; Cosman, P.C. Generalization of the dark channel prior for single image restoration. IEEE Trans. Image Process. 2018, 27, 2856–2868.

35. Peng, Y.T.; Cosman, P.C. Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 2017, 26, 1579–1594.

36. Ancuti, C.; Ancuti, C.O.; Haber, T.; Bekaert, P. Enhancing underwater images and videos by fusion. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 81–88.

37. Li, C.; Anwar, S.; Porikli, F. Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recognit. 2020, 98, 107038.

| Dataset | Metric | WaterNet | FUnIE-GAN | Deep SESR | Shallow-UWnet | iDehaze (Ours) |
|---|---|---|---|---|---|---|
| UIEB | PSNR | 19.11 ± 3.68 | 19.13 ± 3.91 | 19.26 ± 3.56 | 18.99 ± 3.60 | 17.96 ± 2.79 |
| | SSIM | 0.79 ± 0.09 | 0.73 ± 0.11 | 0.73 ± 0.11 | 0.67 ± 0.13 | 0.80 ± 0.07 |
| | UIQM | 3.02 ± 0.34 | 2.99 ± 0.39 | 2.95 ± 0.39 | 2.77 ± 0.43 | 3.28 ± 0.33 |
| EUVP | PSNR | 24.43 ± 4.64 | 26.19 ± 2.87 | 25.30 ± 2.63 | 27.39 ± 2.70 | 23.01 ± 1.97 |
| | SSIM | 0.82 ± 0.08 | 0.82 ± 0.08 | 0.81 ± 0.07 | 0.83 ± 0.07 | 0.84 ± 0.09 |
| | UIQM | 2.97 ± 0.32 | 2.84 ± 0.46 | 2.95 ± 0.32 | 2.98 ± 0.38 | 3.11 ± 0.36 |
| UFO-120 | PSNR | 23.12 ± 3.31 | 24.72 ± 2.57 | 26.46 ± 3.13 | 25.20 ± 2.88 | 17.55 ± 1.86 |
| | SSIM | 0.73 ± 0.07 | 0.74 ± 0.06 | 0.78 ± 0.07 | 0.73 ± 0.07 | 0.72 ± 0.07 |
| | UIQM | 2.94 ± 0.38 | 2.88 ± 0.41 | 2.98 ± 0.37 | 2.85 ± 0.37 | 3.29 ± 0.26 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mousavi, M.; Estrada, R.; Ashok, A. iDehaze: Supervised Underwater Image Enhancement and Dehazing via Physically Accurate Photorealistic Simulations. Electronics 2023, 12, 2352. https://doi.org/10.3390/electronics12112352
