A High-Throughput Processor for GDN-Based Deep Learning Image Compression

Abstract

1. Introduction

- (1) We provide a processor architecture for deep-learning image compression. The processor accelerates the encoding and decoding computations in image compression and produces reconstructed images at six different bit rates.

- (2) Dynamic hierarchical quantization is proposed to quantize the weights to 16-bit fixed point, which saves memory and computation resources. A PE group array with local buffers is provided to evaluate convolutional layers. The array supports various types of data reuse, which increases the utilization of hardware resources.

- (3) The encoding and decoding algorithms are analyzed to design a common hardware architecture. The proposed sampling unit performs both upsampling and downsampling, and the proposed normalization unit performs GDN and IGDN.

- (4) The end-to-end image compression inference process is implemented on the Xilinx Zynq ZCU104. For a 256 × 256 input image, encoding and decoding take 15.87 ms and 14.51 ms, respectively. The processor throughput reaches 283.4 GOPS at 200 MHz.
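Contribution (2) quantizes the weights to 16-bit fixed point. This section does not spell out the hierarchical part of that scheme. The sketch below therefore shows only a common per-layer dynamic fixed-point variant, which is an assumption and not the authors' exact method. Each layer gets its own number of fractional bits, chosen so that the layer's largest weight magnitude still fits in the integer part. The names `choose_frac_bits`, `quantize_layer`, and `dequantize` are hypothetical.

```python
import math
from typing import List, Tuple

WORD_BITS = 16  # total word width, including the sign bit


def choose_frac_bits(weights: List[float], word_bits: int = WORD_BITS) -> int:
    """Per-layer fractional bit count (assumed dynamic fixed-point rule).

    The integer part gets just enough bits that 2**int_bits > max|w|;
    every remaining bit (after the sign bit) becomes fractional.
    """
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return word_bits - 1
    int_bits = max(0, math.floor(math.log2(max_abs)) + 1)
    return max(0, word_bits - 1 - int_bits)


def quantize_layer(weights: List[float], word_bits: int = WORD_BITS) -> Tuple[List[int], int]:
    """Round each weight to a signed word_bits integer code with saturation."""
    frac_bits = choose_frac_bits(weights, word_bits)
    scale = 1 << frac_bits
    q_min, q_max = -(1 << (word_bits - 1)), (1 << (word_bits - 1)) - 1
    codes = [min(q_max, max(q_min, round(w * scale))) for w in weights]
    return codes, frac_bits


def dequantize(codes: List[int], frac_bits: int) -> List[float]:
    """Map integer codes back to real values."""
    return [c / (1 << frac_bits) for c in codes]


if __name__ == "__main__":
    layer = [0.75, -1.5, 0.001, 2.9]
    codes, frac = quantize_layer(layer)
    print(frac, codes)
```

In this example, max|w| = 2.9 needs two integer bits, which leaves 13 fractional bits. A layer whose weights are all below 1 would instead keep 15 fractional bits. This per-layer adaptivity is what distinguishes dynamic fixed point from a single global format.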

2. Image Compression Methods

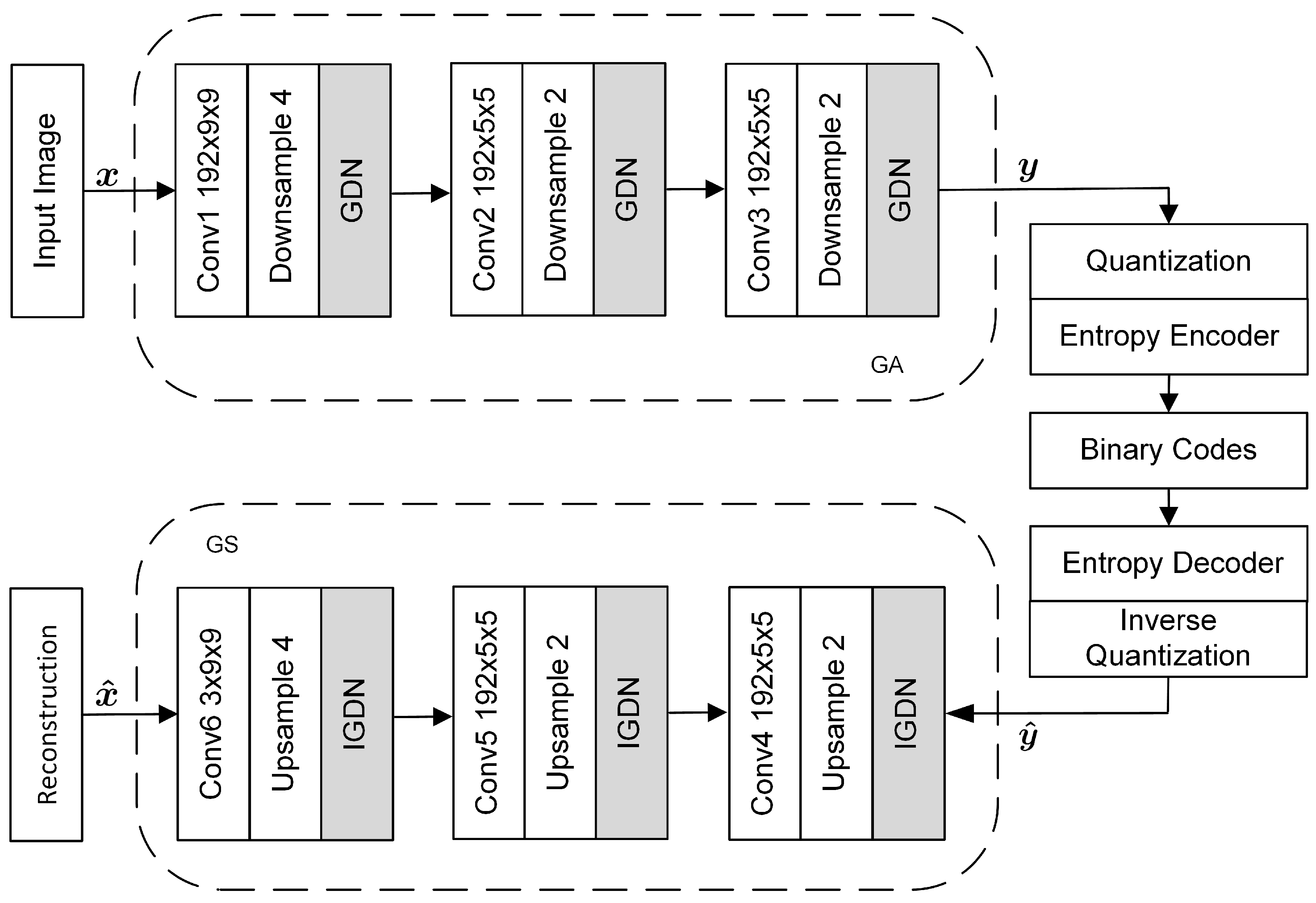

2.1. CNN Image Compression

2.2. GDN and IGDN

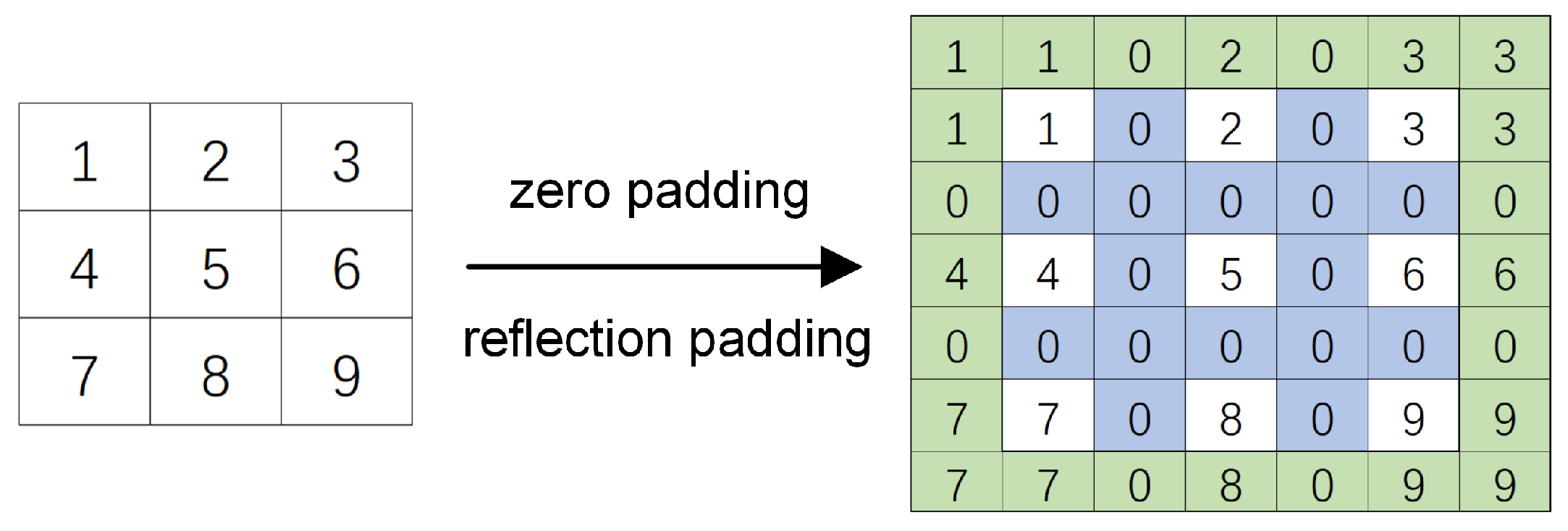

2.3. Downsampling and Upsampling

3. CNN Acceleration Strategy

3.1. Dynamic Hierarchical Quantization

3.2. Convolution Operations in CNN

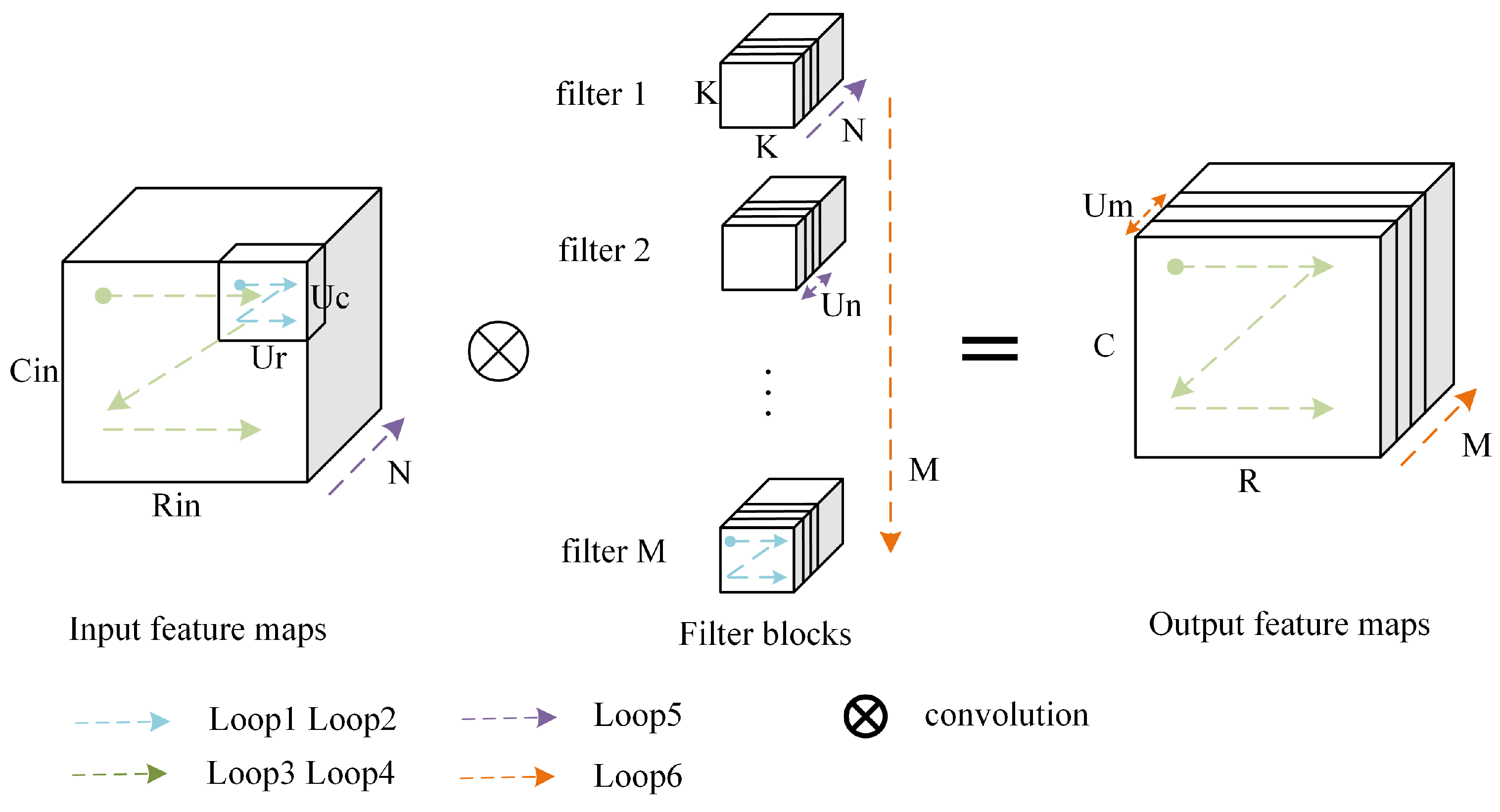

- Loop unrolling. The loop unrolling design variables specify how many computations are performed in parallel for each loop dimension. Loop unrolling parallelizes computation across the six levels of the convolution loop nest shown in Figure 6, which improves the computational speed of CNN processing. However, some loops are too large to unroll under limited hardware resources. Moreover, data dependencies between the loop dimensions make it impossible to unroll all of the loops.

- Loop tiling. When hardware executes a memory-access instruction, it does not read the data required by a loop one element at a time. Instead, a block of data from a contiguous region is loaded into a cache line so that it does not have to be read repeatedly. Because a cache line is limited in size, however, loading new data that was not already in the cache line evicts the data it previously held. Loop tiling therefore partitions the data used in convolution into tiles that are stored in on-chip buffers, which reduces repeated reads. Tile sizes are denoted by variables prefixed with “T”.

- Loop interchange. Coarse-grained loops can be moved outward to increase parallelism and reduce synchronization overhead. Loop vectorization can also be applied to inner loops to reduce dependencies between data. Two loops can be interchanged only if their iterations do not depend on each other.
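The three transformations above can be illustrated on the six-level convolution loop nest. The following NumPy sketch is illustrative rather than a model of the processor's actual dataflow. It tiles the output-map (M), input-map (N), row (R), and column (C) loops with tile sizes `Tm`, `Tn`, `Tr`, and `Tc`. The names follow the "T" prefix convention, but the values are hypothetical. The innermost tile product is vectorized to stand in for an unrolled PE array. The sketch makes two further assumptions. First, the loop order (input-channel tiles innermost, so each output tile stays resident while it accumulates) is one choice among several. Second, it uses no padding.

```python
import numpy as np


def conv_reference(x: np.ndarray, w: np.ndarray, stride: int) -> np.ndarray:
    """Direct six-level loop nest: M, N, R, C and a K x K kernel (no padding)."""
    N, H, W = x.shape
    M, _, K, _ = w.shape
    R, C = (H - K) // stride + 1, (W - K) // stride + 1
    y = np.zeros((M, R, C))
    for m in range(M):
        for n in range(N):
            for r in range(R):
                for c in range(C):
                    for i in range(K):
                        for j in range(K):
                            y[m, r, c] += w[m, n, i, j] * x[n, r * stride + i, c * stride + j]
    return y


def conv_tiled(x: np.ndarray, w: np.ndarray, stride: int,
               Tm: int, Tn: int, Tr: int, Tc: int) -> np.ndarray:
    """Same result, with the M, N, R and C loops tiled.

    Each tile iteration touches only a Tm x Tn weight block, the input window
    feeding a Tr x Tc output patch, and one Tm x Tr x Tc output tile, i.e. the
    data an on-chip buffer would hold.
    """
    N, H, W = x.shape
    M, _, K, _ = w.shape
    R, C = (H - K) // stride + 1, (W - K) // stride + 1
    y = np.zeros((M, R, C))
    for m0 in range(0, M, Tm):
        for r0 in range(0, R, Tr):
            for c0 in range(0, C, Tc):
                m1, r1, c1 = min(m0 + Tm, M), min(r0 + Tr, R), min(c0 + Tc, C)
                for n0 in range(0, N, Tn):  # output tile stays resident across N tiles
                    n1 = min(n0 + Tn, N)
                    # Input window needed by this output tile
                    x_tile = x[n0:n1,
                               r0 * stride:(r1 - 1) * stride + K,
                               c0 * stride:(c1 - 1) * stride + K]
                    w_tile = w[m0:m1, n0:n1]
                    for i in range(K):
                        for j in range(K):
                            # Strided slice selects x[n, r*S+i, c*S+j] for all r, c in the tile
                            patch = x_tile[:, i:i + (r1 - r0 - 1) * stride + 1:stride,
                                           j:j + (c1 - c0 - 1) * stride + 1:stride]
                            # Vectorized tile product stands in for the unrolled PE array
                            y[m0:m1, r0:r1, c0:c1] += np.einsum(
                                "mn,nrc->mrc", w_tile[:, :, i, j], patch)
    return y
```

Changing the order of the four tile loops is a loop interchange. Because the accumulation into `y` is the only cross-iteration dependency, any order gives the same result. The order changes only which data must be re-fetched.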

3.3. Loop Optimization Modeling

3.3.1. Loop Unrolling Factor

3.3.2. Loop Tiling Factors

4. System Architecture

4.1. Overall Architecture

- Storage Buffers include the Input Buffer (IB), Output Buffer (OB), and Intermediate Buffer (TB). The IB stores the uncompressed image data and weights in a ping-pong structure. While one of its two buffers is being read, the other is being written, which improves data-reading performance. The OB stores the encoded or decoded data. The encoded data can either be sent to the operation module under control-signal direction for decoding, or be output for decoding by the host computer.

- DMAs transport data and instructions between the PS and PL, which reduces the PS workload and makes data transfer more efficient. The internal DMA controller loads the original image data and weights into different addresses of the input buffer. Instructions received from the PS are decoded and then passed to the Controller. Once the IB is filled, an enable signal is issued to start the DIC inference process.

- The Controller receives instructions from the PS and controls the compression inference process according to the state of the operation module. Unlike common image classification applications, image compression is divided into an encoding phase and a decoding phase. During encoding, the controller routes the image through convolution, downsampling, and GDN, as shown in Figure 7. The encoded data can be streamed directly to the PS through the OB. Alternatively, it can be passed to the operation module for decoding. There it goes through IGDN, upsampling, and convolution, and is finally transmitted to the PS to display the reconstructed image.

- The Operation Module is the main part of the image compression processor. It performs either encoding or decoding, depending on how the control signals route the dataflow. It contains three pipelined parts: the Conv, Sample, and Norm units. During encoding, image data and weights are first transferred to the Conv unit for convolution. The Conv unit's output then flows directly to downsampling without idle cycles, which increases computing efficiency. The Norm unit's microarchitecture is built from multipliers and adders and supports both IGDN and Batch Normalization (BN).
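To show why a multiplier-and-adder datapath can serve GDN, IGDN, and BN, the sketch below writes all three in NumPy. It uses the GDN/IGDN definitions from Ballé et al.'s end-to-end optimized image compression paper listed in the references. GDN is y_i = x_i / sqrt(β_i + Σ_j γ_ij x_j²). IGDN multiplies by the same kind of term instead of dividing by it. Inference-time BN reduces to one multiply and one add per element. The function names are hypothetical. The sketch also assumes per-pixel normalization across channels and a plain `np.sqrt`. The Norm unit's actual square-root and division circuits are not described in this section.

```python
import numpy as np


def gdn_norm(x: np.ndarray, beta: np.ndarray, gamma: np.ndarray) -> np.ndarray:
    """Per-pixel term sqrt(beta_i + sum_j gamma[i, j] * x_j**2).

    x: (channels, H, W); beta: (channels,); gamma: (channels, channels).
    The sum over j is a 1x1 channel-mixing step, i.e. only multiplies and adds.
    """
    return np.sqrt(beta[:, None, None] + np.einsum("ij,jhw->ihw", gamma, x * x))


def gdn(x: np.ndarray, beta: np.ndarray, gamma: np.ndarray) -> np.ndarray:
    """Encoder-side GDN: divide by the normalization term."""
    return x / gdn_norm(x, beta, gamma)


def igdn(y: np.ndarray, beta: np.ndarray, gamma: np.ndarray) -> np.ndarray:
    """Decoder-side IGDN: multiply by the term computed from y (approximate inverse)."""
    return y * gdn_norm(y, beta, gamma)


def batch_norm_inference(x: np.ndarray, scale: np.ndarray, shift: np.ndarray) -> np.ndarray:
    """BN with statistics folded into a per-channel scale and shift."""
    return scale[:, None, None] * x + shift[:, None, None]
```

IGDN is not an exact inverse of GDN, because its normalization term is computed from y rather than from the original x. Ballé et al. use it as an approximate inverse in the synthesis (decoding) transform.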

4.2. Convolution Unit

4.3. Sampling Unit

4.4. Normalization Unit

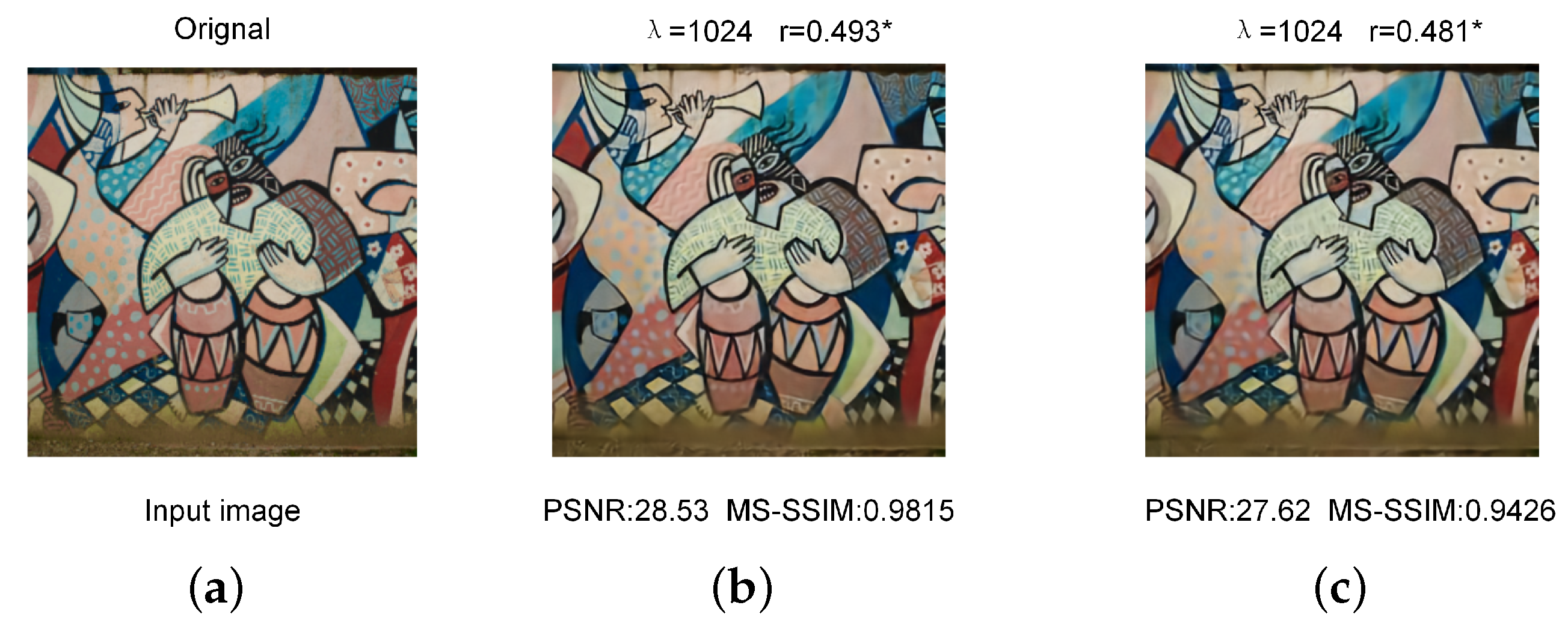

5. Experimental Results

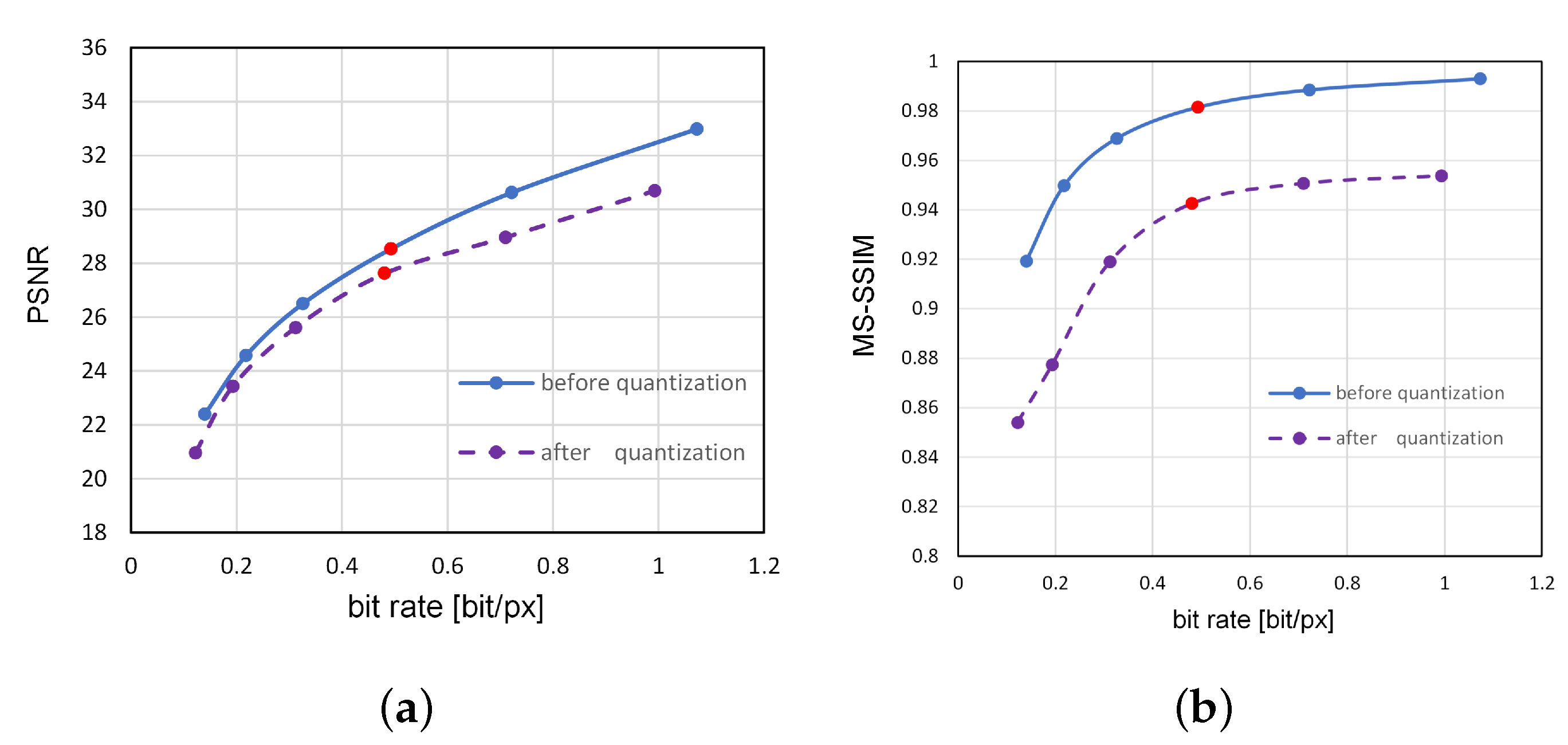

5.1. Evaluation of Quantization

5.2. Hardware Performance

6. Summary

Author Contributions

Funding

Conflicts of Interest

References

- [1] Jiang, F.; Tao, W.; Liu, S.; Ren, J.; Guo, X.; Zhao, D. An end-to-end compression framework based on convolutional neural networks. IEEE Trans. Circuits Syst. Video Technol. 2017, 28, 3007–3018.

- [2] Yasin, H.M.; Abdulazeez, A.M. Image compression based on deep learning: A review. Asian J. Res. Comput. Sci. 2021, 8, 62–76.

- [3] Wallace, G.K. The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 1992, 38, xviii–xxxiv.

- [4] Liu, Z.; Liu, T.; Wen, W.; Jiang, L.; Xu, J.; Wang, Y.; Quan, G. DeepN-JPEG: A deep neural network favorable JPEG-based image compression framework. In Proceedings of the 55th Annual Design Automation Conference, San Francisco, CA, USA, 24–29 June 2018; pp. 1–6.

- [5] Li, M.; Zuo, W.; Gu, S.; Zhao, D.; Zhang, D. Learning convolutional networks for content-weighted image compression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3214–3223.

- [6] Skodras, A.; Christopoulos, C.; Ebrahimi, T. The JPEG 2000 still image compression standard. IEEE Signal Process. Mag. 2001, 18, 36–58.

- [7] Sullivan, G.J.; Ohm, J.R.; Han, W.J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

- [8] Guo, K.; Sui, L.; Qiu, J.; Yu, J.; Wang, J.; Yao, S.; Han, S.; Wang, Y.; Yang, H. Angel-eye: A complete design flow for mapping CNN onto embedded FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2017, 37, 35–47.

- [9] Zhang, C.; Li, P.; Sun, G.; Guan, Y.; Xiao, B.; Cong, J. Optimizing FPGA-based accelerator design for deep convolutional neural networks. In Proceedings of the 2015 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2015; pp. 161–170.

- [10] Qiu, J.; Wang, J.; Yao, S.; Guo, K.; Li, B.; Zhou, E.; Yu, J.; Tang, T.; Xu, N.; Song, S.; et al. Going deeper with embedded FPGA platform for convolutional neural network. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 2016; pp. 26–35.

- [11] Suda, N.; Chandra, V.; Dasika, G.; Mohanty, A.; Ma, Y.; Vrudhula, S.; Seo, J.s.; Cao, Y. Throughput-optimized OpenCL-based FPGA accelerator for large-scale convolutional neural networks. In Proceedings of the 2016 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 21–23 February 2016; pp. 16–25.

- [12] Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J. Solid-State Circuits 2016, 52, 127–138.

- [13] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- [14] Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV 13; Springer: Cham, Switzerland, 2014; pp. 184–199.

- [15] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [16] Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–27 July 2017; pp. 126–135.

- [17] Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–27 July 2017; pp. 136–144.

- [18] Ballé, J.; Laparra, V.; Simoncelli, E.P. Density modeling of images using a generalized normalization transformation. arXiv 2015, arXiv:1511.06281.

- [19] Ballé, J.; Minnen, D.; Singh, S.; Hwang, S.J.; Johnston, N. Variational image compression with a scale hyperprior. arXiv 2018, arXiv:1802.01436.

- [20] Ballé, J.; Laparra, V.; Simoncelli, E.P. End-to-end optimized image compression. arXiv 2016, arXiv:1611.01704.

- [21] Zhang, X.; Wang, J.; Zhu, C.; Lin, Y.; Xiong, J.; Hwu, W.M.; Chen, D. DNNBuilder: An automated tool for building high-performance DNN hardware accelerators for FPGAs. In Proceedings of the 2018 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018; pp. 1–8.

- [22] Han, S.; Liu, X.; Mao, H.; Pu, J.; Pedram, A.; Horowitz, M.A.; Dally, W.J. EIE: Efficient inference engine on compressed deep neural network. ACM SIGARCH Comput. Archit. News 2016, 44, 243–254.

- [23] Gondimalla, A.; Chesnut, N.; Thottethodi, M.; Vijaykumar, T. SparTen: A sparse tensor accelerator for convolutional neural networks. In Proceedings of the 52nd Annual IEEE/ACM International Symposium on Microarchitecture, Columbus, OH, USA, 12–16 October 2019; pp. 151–165.

- [24] Li, H.; Fan, X.; Jiao, L.; Cao, W.; Zhou, X.; Wang, L. A high performance FPGA-based accelerator for large-scale convolutional neural networks. In Proceedings of the 2016 26th International Conference on Field Programmable Logic and Applications (FPL), Lausanne, Switzerland, 29 October–2 November 2016; pp. 1–9.

- [25] Ma, Y.; Cao, Y.; Vrudhula, S.; Seo, J.s. Optimizing the convolution operation to accelerate deep neural networks on FPGA. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2018, 26, 1354–1367.

- [26] Nguyen, D.T.; Nguyen, T.N.; Kim, H.; Lee, H.J. A high-throughput and power-efficient FPGA implementation of YOLO CNN for object detection. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2019, 27, 1861–1873.

- [27] Lu, L.; Xie, J.; Huang, R.; Zhang, J.; Lin, W.; Liang, Y. An efficient hardware accelerator for sparse convolutional neural networks on FPGAs. In Proceedings of the 2019 IEEE 27th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM), San Diego, CA, USA, 28 April–1 May 2019; pp. 17–25.

- [28] Ma, Y.; Cao, Y.; Vrudhula, S.; Seo, J.S. Performance modeling for CNN inference accelerators on FPGA. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2019, 39, 843–856.

- [29] Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- [30] Xiao, Q.; Liang, Y.; Lu, L.; Yan, S.; Tai, Y.W. Exploring heterogeneous algorithms for accelerating deep convolutional neural networks on FPGAs. In Proceedings of the 54th Annual Design Automation Conference 2017, Austin, TX, USA, 18–22 June 2017; pp. 1–6.

- [31] Zhang, S.; Cao, J.; Zhang, Q.; Zhang, Q.; Zhang, Y.; Wang, Y. An FPGA-based reconfigurable CNN accelerator for YOLO. In Proceedings of the 2020 IEEE 3rd International Conference on Electronics Technology (ICET), Chengdu, China, 8–11 May 2020; pp. 74–78.

- [32] Wei, X.; Yu, C.H.; Zhang, P.; Chen, Y.; Wang, Y.; Hu, H.; Liang, Y.; Cong, J. Automated systolic array architecture synthesis for high throughput CNN inference on FPGAs. In Proceedings of the 54th Annual Design Automation Conference 2017, Austin, TX, USA, 18–22 June 2017; pp. 1–6.

- [33] Huang, W.; Wu, H.; Chen, Q.; Luo, C.; Zeng, S.; Li, T.; Huang, Y. FPGA-based high-throughput CNN hardware accelerator with high computing resource utilization ratio. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 4069–4083.

| Layer | Channel | Fm Size (R = C) | Kernel Size (K) | Stride | #Operations |
|---|---|---|---|---|---|
| Original image | 3 | 256 | - | - | - |
| Conv1 | 192 | 77 | 9 | 4 | 553,246,848 |
| Conv2 | 192 | 37 | 5 | 2 | 2,523,340,800 |
| Conv3 | 192 | 17 | 5 | 2 | 532,684,800 |
| Conv4 | 192 | 37 | 5 | 2 | 2,523,340,800 |
| Conv5 | 192 | 77 | 5 | 2 | 553,246,848 |
| Conv6 | 3 | 256 | 9 | 4 | 1,887,436,800 |
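Assume each multiply-accumulate counts as two operations and R = C is the output feature-map size. Then the #Operations entries for Conv1 through Conv4 equal 2·R·C·M·N·K². Here M is the layer's channel count and N is the channel count of the preceding row. The sketch below checks this assumed formula against those four rows. It takes the Conv5 and Conv6 counts as listed and sums the column. The total, about 8.57 GOP, matches the CNN size reported for DIC in the comparison table. The names `conv_ops` and `total_ops` are hypothetical.

```python
from typing import Dict, List, Tuple

# (layer, output channels M, input channels N, output size R = C, kernel K, listed #Operations)
RECOMPUTED: List[Tuple[str, int, int, int, int, int]] = [
    ("Conv1", 192, 3, 77, 9, 553_246_848),
    ("Conv2", 192, 192, 37, 5, 2_523_340_800),
    ("Conv3", 192, 192, 17, 5, 532_684_800),
    ("Conv4", 192, 192, 37, 5, 2_523_340_800),
]
# Decoder rows whose counts are used exactly as listed in the table
TAKEN_AS_LISTED: Dict[str, int] = {"Conv5": 553_246_848, "Conv6": 1_887_436_800}


def conv_ops(m: int, n: int, r: int, k: int) -> int:
    """2 * R * C * M * N * K^2 with R = C (one multiply plus one add per MAC)."""
    return 2 * r * r * m * n * k * k


def total_ops() -> int:
    """Check the recomputable rows, then sum the whole #Operations column."""
    for name, m, n, r, k, listed in RECOMPUTED:
        ops = conv_ops(m, n, r, k)
        if ops != listed:
            raise ValueError(f"{name}: formula gives {ops}, table lists {listed}")
    return sum(row[-1] for row in RECOMPUTED) + sum(TAKEN_AS_LISTED.values())


if __name__ == "__main__":
    ops = total_ops()
    print(f"{ops:,} operations = {ops / 1e9:.2f} GOP")  # 8,573,296,896 operations = 8.57 GOP
```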

| Resource | FF | LUT | DSP48 | BRAM (18 Kb) |
|---|---|---|---|---|
| Utilization | 197,843 | 214,705 | 1042 | 549 |
| Percent of Zynq ZCU102 (%) | 36.02 | 78.33 | 41.27 | 30.1 |
| Percent of Zynq ZCU104 (%) | 42.93 | 93.19 | 60.3 | 87.98 |

| Work | [8] | [10] | [30] | [31] | This Work (ZCU102) | This Work (ZCU104) |
|---|---|---|---|---|---|---|
| Model | VGG16 | VGG16 | VGG16 | YOLOv2 | DIC | DIC |
| Platform | XC7Z020 | XC7Z020 | XC7Z045 | ZCU102 | ZCU102 | ZCU104 |
| Clock (MHz) | 214 | 150 | 100 | 300 | 200 | 200 |
| Precision | 8-bit fixed | 16-bit fixed | 8-bit fixed | 16-bit fixed | 16-bit fixed | 16-bit fixed |
| CNN size (GOP) | 30.76 | 30.76 | 30.76 | 5.4 | 8.57 | 8.57 |
| Power (W) | 3.5 | 9.63 | 9.4 | 11.8 | 13.7 | 13.2 |
| DSP used | 780 | 780 | 824 | 609 | 1042 | 1042 |
| Throughput (GOPS) | 84.3 | 187.8 | 239 | 237.6 | 250.2 | 283.4 |
| Efficiency (GOPS/W) | 24.1 | 19.50 | 24.42 | 20.13 | 18.26 | 21.47 |
| DSP Efficiency (GOPS/DSP) | 0.108 | 0.241 | 0.290 | 0.390 | 0.240 | 0.272 |
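The Efficiency and DSP Efficiency rows correspond to Throughput divided by Power and by DSP used, respectively. For example, the ZCU104 column gives 283.4 / 13.2 ≈ 21.47 GOPS/W and 283.4 / 1042 ≈ 0.272 GOPS/DSP. The short script below recomputes both derived metrics for every column from the table's Throughput, Power, and DSP used rows. The `Row` type and the function names are hypothetical helpers introduced for this check.

```python
from typing import List, NamedTuple


class Row(NamedTuple):
    work: str
    throughput_gops: float
    power_w: float
    dsps: int


# Throughput, Power and DSP used, copied from the comparison table
ROWS: List[Row] = [
    Row("[8]", 84.3, 3.5, 780),
    Row("[10]", 187.8, 9.63, 780),
    Row("[30]", 239.0, 9.4, 824),
    Row("[31]", 237.6, 11.8, 609),
    Row("This work (ZCU102)", 250.2, 13.7, 1042),
    Row("This work (ZCU104)", 283.4, 13.2, 1042),
]


def energy_efficiency(row: Row) -> float:
    """Throughput per watt (GOPS/W)."""
    return row.throughput_gops / row.power_w


def dsp_efficiency(row: Row) -> float:
    """Throughput per DSP48 slice (GOPS/DSP)."""
    return row.throughput_gops / row.dsps


if __name__ == "__main__":
    for row in ROWS:
        print(f"{row.work:20s} {energy_efficiency(row):6.2f} GOPS/W  "
              f"{dsp_efficiency(row):.3f} GOPS/DSP")
```

Normalizing by DSP count compares how well each design uses its arithmetic resources, independent of device size. Normalizing by power compares energy cost.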

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Shao, H.; Liu, B.; Li, Z.; Yan, C.; Sun, Y.; Wang, T. A High-Throughput Processor for GDN-Based Deep Learning Image Compression. Electronics 2023, 12, 2289. https://doi.org/10.3390/electronics12102289
