Automatic Emotion Recognition from EEG Signals Using a Combination of Type-2 Fuzzy and Deep Convolutional Networks

Abstract

1. Introduction

- Compilation of a standard database for automatic emotion recognition from EEG signals using musical stimulation.

- Providing an end-to-end algorithm that automatically detects emotions from EEG signals without separate feature selection/extraction blocks.

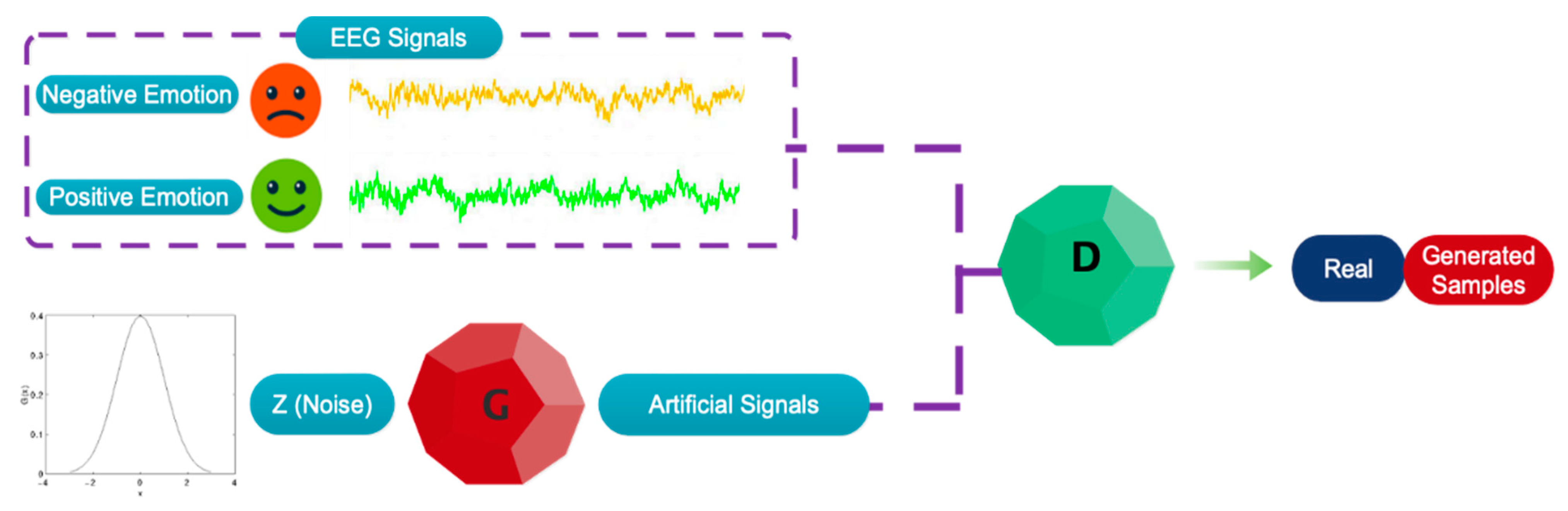

- Providing an automatic method for emotion recognition from EEG signals that combines GAN, CNN, and type-2 fuzzy networks in a customized architecture designed for high speed and accuracy.

- Providing an automatic method for emotion recognition from EEG signals that is robust to a wide range of environmental noise.
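One common way to probe the noise robustness claimed in the last contribution is to corrupt the test signals with noise at a controlled signal-to-noise ratio (SNR) and re-evaluate the classifier. The sketch below is only an illustration under stated assumptions: the noise type (white Gaussian), the toy sinusoid, the sampling rate, and the function names `add_noise_at_snr` and `measured_snr_db` are all hypothetical and are not taken from this article.

```python
import math
import random


def add_noise_at_snr(signal, snr_db, seed=0):
    """Return signal plus white Gaussian noise scaled to the requested SNR (dB)."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    signal_power = sum(s * s for s in signal) / len(signal)
    noise_power = sum(n * n for n in noise) / len(noise)
    # Target noise power follows from SNR_dB = 10 * log10(P_signal / P_noise).
    target_noise_power = signal_power / (10.0 ** (snr_db / 10.0))
    scale = math.sqrt(target_noise_power / noise_power)
    return [s + scale * n for s, n in zip(signal, noise)]


def measured_snr_db(clean, noisy):
    """SNR in dB of a noisy signal relative to its clean reference."""
    signal_power = sum(s * s for s in clean) / len(clean)
    noise_power = sum((y - s) ** 2 for s, y in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)


# Toy 10 Hz sinusoid sampled at 250 Hz (illustrative values, not EEG data).
clean = [math.sin(2 * math.pi * 10 * t / 250) for t in range(1000)]
noisy = add_noise_at_snr(clean, snr_db=5.0)
print(f"achieved SNR: {measured_snr_db(clean, noisy):.2f} dB")
```

Sweeping `snr_db` over a range of values and recording the accuracy at each level would give a robustness curve for whichever classifier is under test.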

2. Materials and Methods

2.1. Brief Description of GAN

2.2. Brief Description of CNN Model

2.3. Type-2 Fuzzy Sets

3. Suggested Method

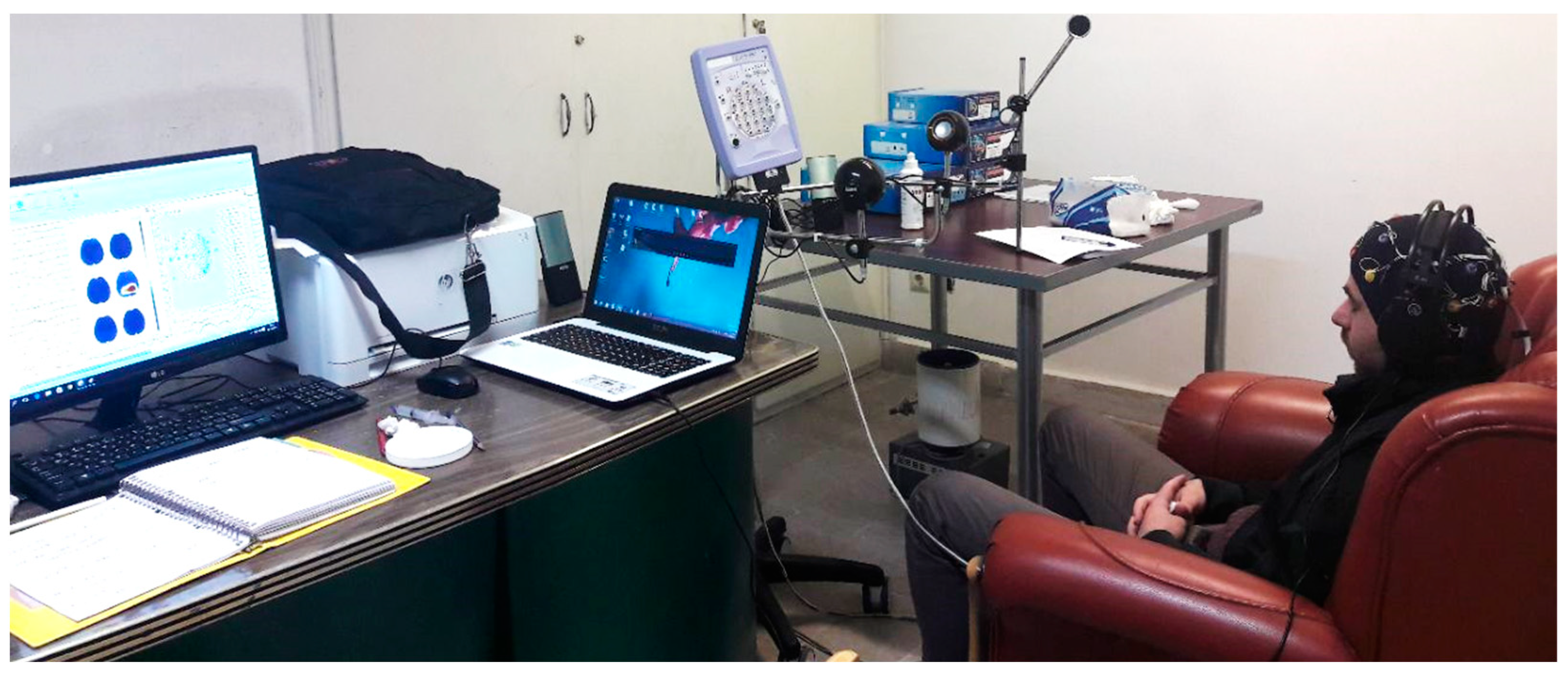

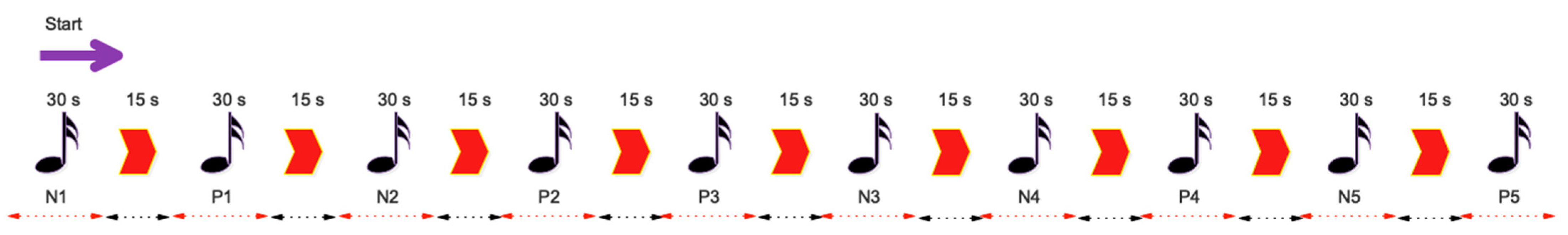

3.1. Data Collection

3.2. Data Pre-Processing

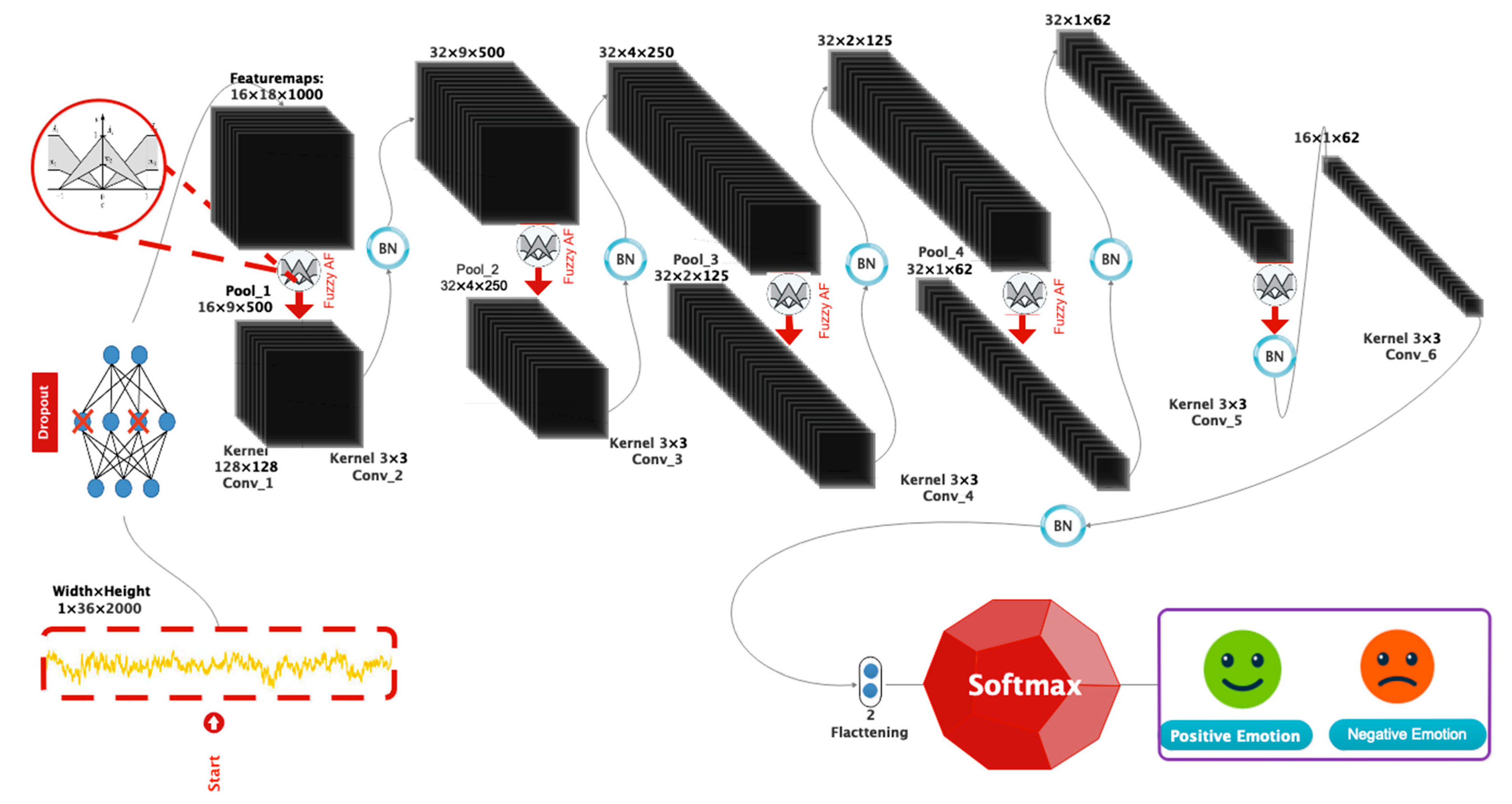

3.3. Proposed Deep Architecture

- A dropout layer with P = 0.3;

- A convolution layer with a type-2 fuzzy activation function, followed by a max-pooling layer and a BN layer;

- The preceding block is repeated three times;

- A convolution layer with a type-2 fuzzy activation function, followed by a BN layer;

- The preceding block is applied once more. Finally, an FC layer with a nonlinear Softmax activation function computes the class scores.
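The final step of the list above, in which an FC layer followed by Softmax turns the extracted features into class scores, can be sketched in plain Python. This is a minimal illustration rather than the authors' implementation: the feature vector, weights, and biases are made-up numbers, and the helper names `fully_connected` and `softmax` are hypothetical.

```python
import math


def fully_connected(features, weights, biases):
    """Affine map: one output per weight row, computed as row . features + bias."""
    return [
        sum(w * x for w, x in zip(row, features)) + b
        for row, b in zip(weights, biases)
    ]


def softmax(scores):
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Illustrative values only: 3 flattened features, 2 output classes.
features = [0.5, -1.0, 2.0]
weights = [[0.2, -0.1, 0.4], [-0.3, 0.5, 0.1]]
biases = [0.0, 0.1]

logits = fully_connected(features, weights, biases)
probabilities = softmax(logits)
predicted_class = probabilities.index(max(probabilities))
print(probabilities, predicted_class)
```

With two output units, as in the two-class (positive/negative) setting of this article, the two probabilities sum to one and the larger one determines the predicted emotion.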

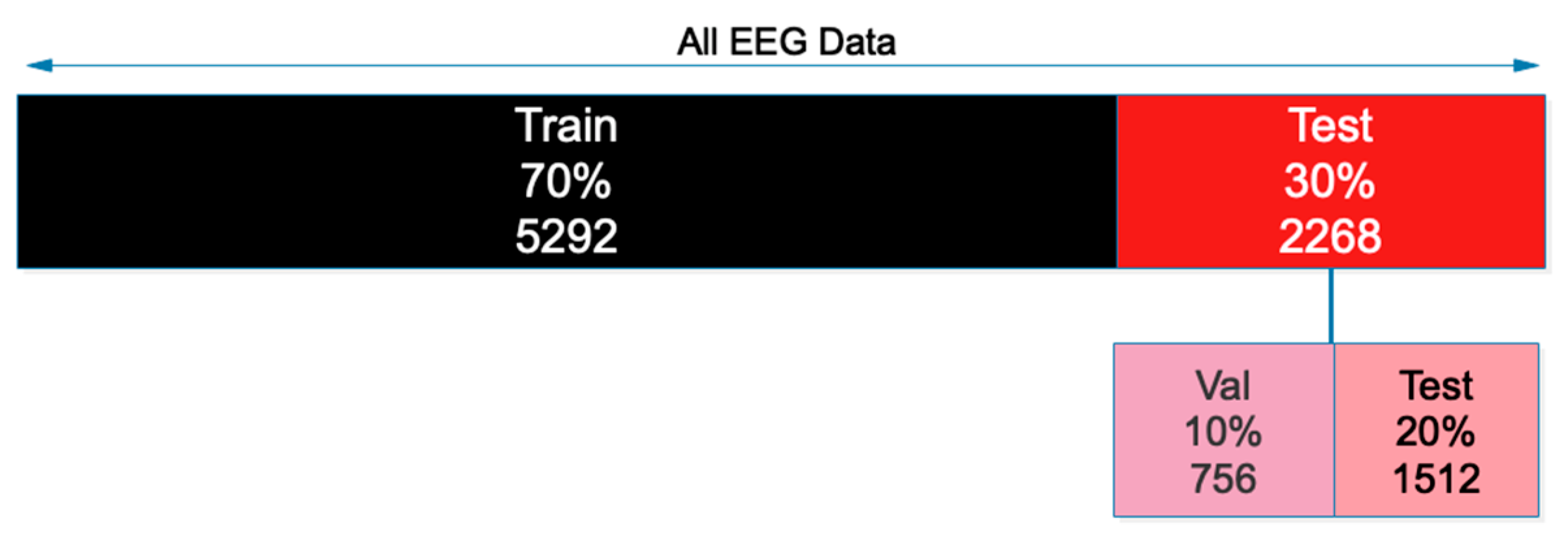

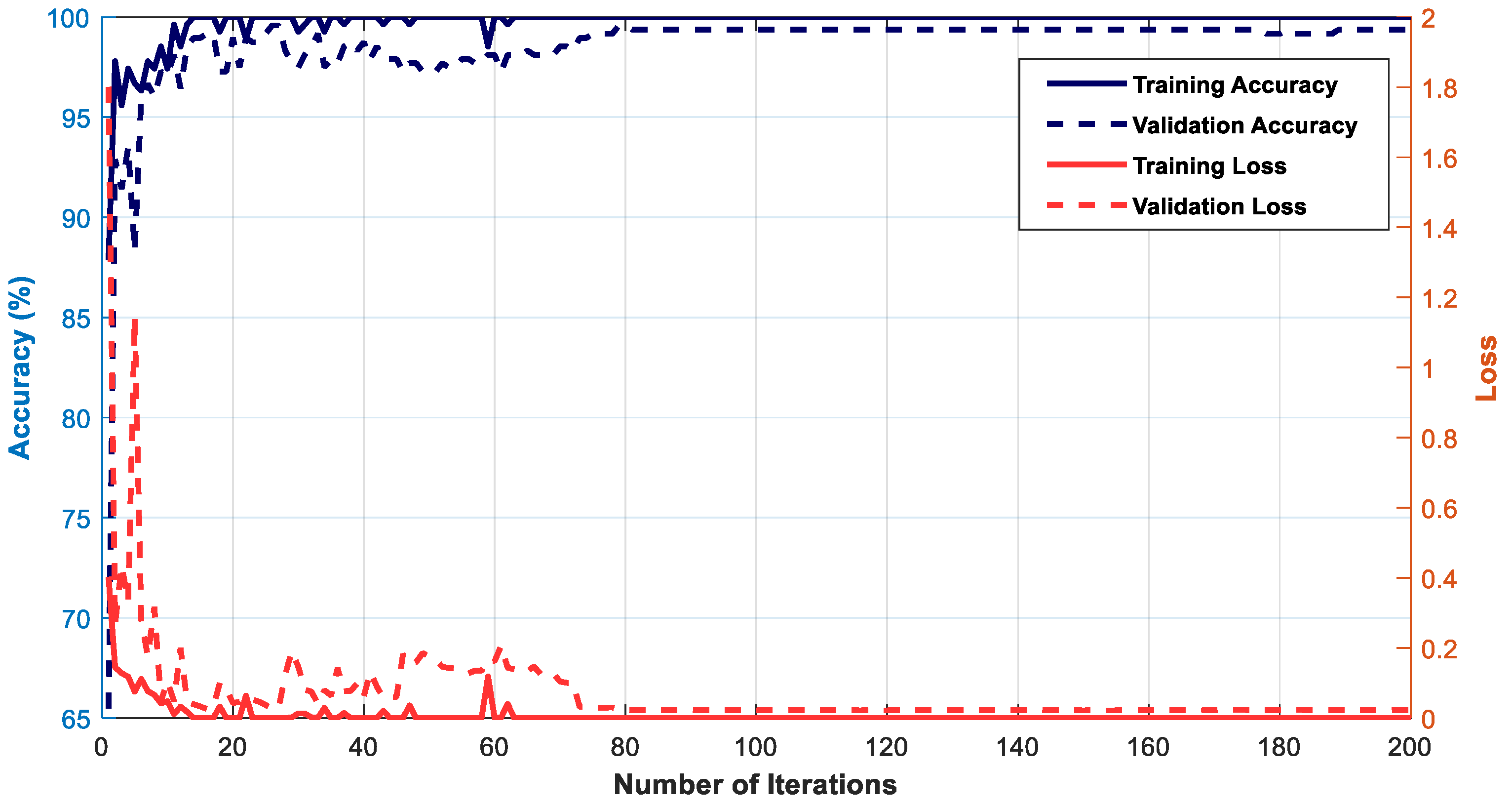

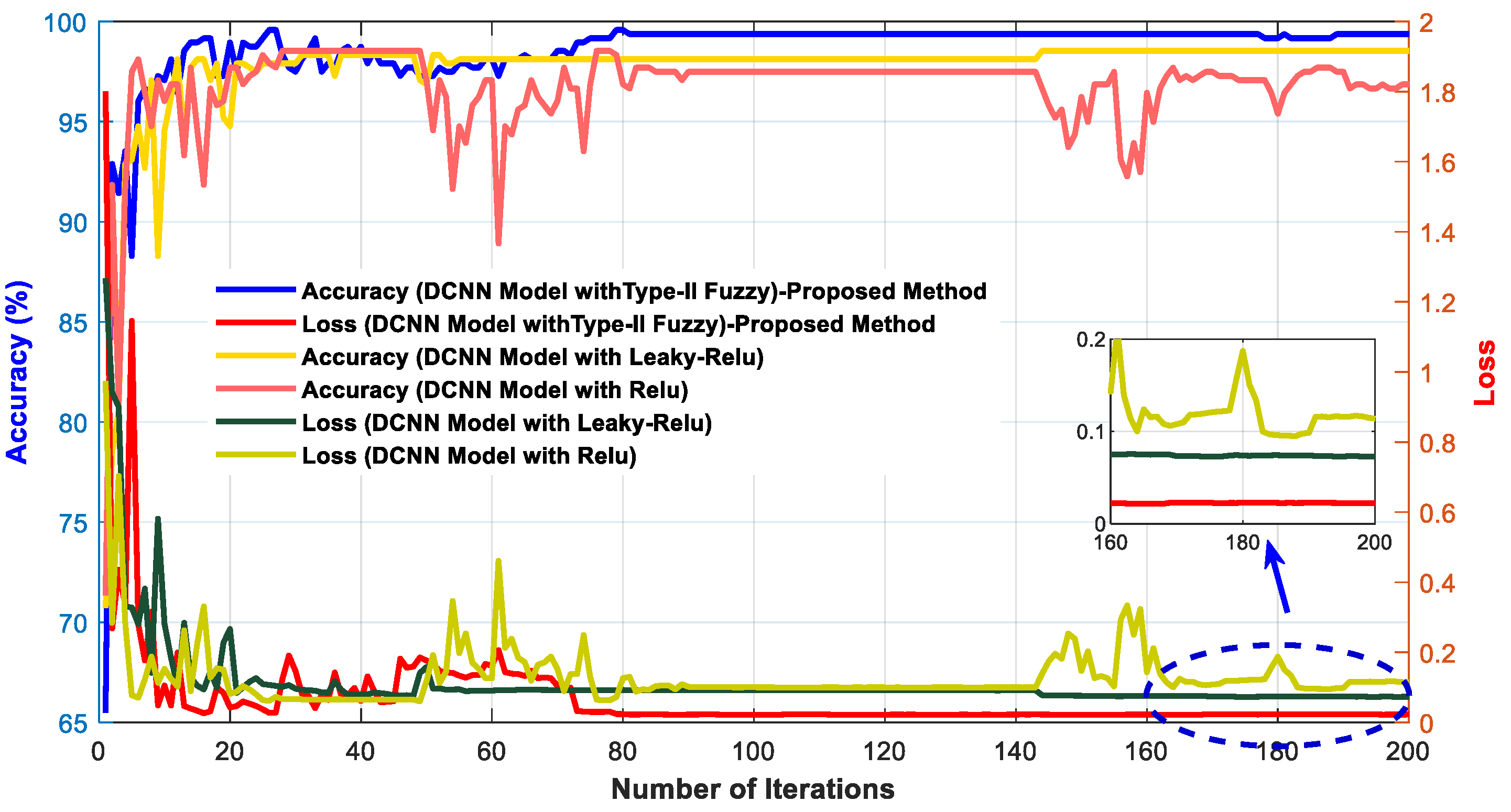

4. Results

- (a) Using large filters in the first layer.

- (b) Using small filters in the middle layer.

- (c) Using type-2 fuzzy functions as the activation function of a convolutional layer.
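To give a sense of what points (a) and (b) mean in terms of model size, the sketch below counts the trainable parameters of a 2-D convolution for the kernel sizes and filter counts listed in the architecture table of this article (128 × 128 with 16 filters in the first layer, 3 × 3 with 32 filters afterwards). It assumes a single-channel input to the first layer and one bias per filter; neither assumption is stated in the source, and `conv2d_params` is a hypothetical helper.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Trainable parameters of a standard 2-D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * out_channels
    return weights + (out_channels if bias else 0)


# First layer: large 128 x 128 kernels, 16 filters, assumed 1 input channel.
first_layer = conv2d_params(128, 128, in_channels=1, out_channels=16)

# Middle layers: small 3 x 3 kernels.
second_layer = conv2d_params(3, 3, in_channels=16, out_channels=32)
later_layer = conv2d_params(3, 3, in_channels=32, out_channels=32)

print(first_layer, second_layer, later_layer)
```

Under these assumptions the single large-kernel layer holds far more weights than any of the 3 × 3 layers, so small filters keep the deeper part of the network comparatively light.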

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abbaschian, B.J.; Sierra-Sosa, D.; Elmaghraby, A. Deep learning techniques for speech emotion recognition, from databases to models. Sensors 2021, 21, 1249.

- Abdullah, S.M.S.A.; Ameen, S.Y.A.; Sadeeq, M.A.; Zeebaree, S. Multimodal emotion recognition using deep learning. J. Appl. Sci. Technol. Trends 2021, 2, 52–58.

- Sabahi, K.; Sheykhivand, S.; Mousavi, Z.; Rajabioun, M. Recognition COVID-19 cases using deep type-2 fuzzy neural networks based on chest X-ray image. Comput. Intell. Electr. Eng. 2023, 14, 75–92.

- Alswaidan, N.; Menai, M.E.B. A survey of state-of-the-art approaches for emotion recognition in text. Knowl. Inf. Syst. 2020, 62, 2937–2987.

- Khaleghi, N.; Rezaii, T.Y.; Beheshti, S.; Meshgini, S.; Sheykhivand, S.; Danishvar, S. Visual Saliency and Image Reconstruction from EEG Signals via an Effective Geometric Deep Network-Based Generative Adversarial Network. Electronics 2022, 11, 3637.

- Dzedzickis, A.; Kaklauskas, A.; Bucinskas, V. Human emotion recognition: Review of sensors and methods. Sensors 2020, 20, 592.

- Issa, D.; Demirci, M.F.; Yazici, A. Speech emotion recognition with deep convolutional neural networks. Biomed. Signal Process. Control. 2020, 59, 101894.

- Liu, Y.; Fu, G. Emotion recognition by deeply learned multi-channel textual and EEG features. Futur. Gener. Comput. Syst. 2021, 119, 1–6.

- Pepino, L.; Riera, P.; Ferrer, L. Emotion recognition from speech using wav2vec 2.0 embeddings. arXiv 2021, arXiv:2104.03502.

- Mustaqeem; Sajjad, M.; Kwon, S. Clustering-Based Speech Emotion Recognition by Incorporating Learned Features and Deep BiLSTM. IEEE Access 2020, 8, 79861–79875.

- Schoneveld, L.; Othmani, A.; Abdelkawy, H. Leveraging recent advances in deep learning for audio-visual emotion recognition. Pattern Recognit. Lett. 2021, 146, 1–7.

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126.

- Xie, T.; Cao, M.; Pan, Z. Applying self-assessment manikin (sam) to evaluate the affective arousal effects of vr games. In Proceedings of the 2020 3rd International Conference on Image and Graphics Processing, Singapore, 8–10 February 2020; pp. 134–138.

- Li, X.; Hu, B.; Sun, S.; Cai, H. EEG-based mild depressive detection using feature selection methods and classifiers. Comput. Methods Programs Biomed. 2016, 136, 151–161.

- Hou, Y.; Chen, S. Distinguishing Different Emotions Evoked by Music via Electroencephalographic Signals. Comput. Intell. Neurosci. 2019, 2019, 3191903-18.

- Hasanzadeh, F.; Annabestani, M.; Moghimi, S. Continuous emotion recognition during music listening using EEG signals: A fuzzy parallel cascades model. Appl. Soft Comput. 2020, 101, 107028.

- Keelawat, P.; Thammasan, N.; Numao, M.; Kijsirikul, B. Spatiotemporal emotion recognition using deep CNN based on EEG during music listening. arXiv 2019, arXiv:1910.09719.

- Chen, J.; Jiang, D.; Zhang, Y.; Zhang, P. Emotion recognition from spatiotemporal EEG representations with hybrid convolutional recurrent neural networks via wearable multi-channel headset. Comput. Commun. 2020, 154, 58–65.

- Wei, C.; Chen, L.-L.; Song, Z.-Z.; Lou, X.-G.; Li, D.-D. EEG-based emotion recognition using simple recurrent units network and ensemble learning. Biomed. Signal Process. Control 2020, 58, 101756.

- Sheykhivand, S.; Mousavi, Z.; Rezaii, T.Y.; Farzamnia, A. Recognizing Emotions Evoked by Music Using CNN-LSTM Networks on EEG Signals. IEEE Access 2020, 8, 139332–139345.

- Er, M.B.; Çiğ, H.; Aydilek, I.B. A new approach to recognition of human emotions using brain signals and music stimuli. Appl. Acoust. 2020, 175, 107840.

- Gao, Q.; Yang, Y.; Kang, Q.; Tian, Z.; Song, Y. EEG-based emotion recognition with feature fusion networks. Int. J. Mach. Learn. Cybern. 2022, 13, 421–429.

- Hou, F.; Gao, Q.; Song, Y.; Wang, Z.; Bai, Z.; Yang, Y.; Tian, Z. Deep feature pyramid network for EEG emotion recognition. Measurement 2022, 201, 111724.

- Din, N.U.; Javed, K.; Bae, S.; Yi, J. A Novel GAN-Based Network for Unmasking of Masked Face. IEEE Access 2020, 8, 44276–44287.

- Iqbal, T.; Ali, H. Generative Adversarial Network for Medical Images (MI-GAN). J. Med. Syst. 2018, 42, 231.

- Kattenborn, T.; Leitloff, J.; Schiefer, F.; Hinz, S. Review on Convolutional Neural Networks (CNN) in vegetation remote sensing. ISPRS J. Photogramm. Remote Sens. 2021, 173, 24–49.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Beke, A.; Kumbasar, T. Learning with Type-2 Fuzzy activation functions to improve the performance of Deep Neural Networks. Eng. Appl. Artif. Intell. 2019, 85, 372–384.

- Hosseini-Pozveh, M.S.; Safayani, M.; Mirzaei, A. Interval Type-2 Fuzzy Restricted Boltzmann Machine. IEEE Trans. Fuzzy Syst. 2020, 29, 1133–1142.

- Mohammadizadeh, A. Applications of Music Therapy in the Fields of Psychiatry, Medicine and Psychology including Music and Mysticism, Classification of Music Themes, Music Therapy and Global Unity; Asrardanesh: Tehran, Iran, 1918.

- Bérubé, A.; Turgeon, J.; Blais, C.; Fiset, D. Emotion Recognition in Adults With a History of Childhood Maltreatment: A Systematic Review. Trauma Violence Abus. 2023, 24, 278–294.

- Sheykhivand, S.; Rezaii, T.Y.; Meshgini, S.; Makoui, S.; Farzamnia, A. Developing a deep neural network for driver fatigue detection using EEG signals based on compressed sensing. Sustainability 2022, 14, 2941.

- Sheykhivand, S.; Meshgini, S.; Mousavi, Z. Automatic detection of various epileptic seizures from EEG signal using deep learning networks. Comput. Intell. Electr. Eng. 2020, 11, 1–12.

- Shahini, N.; Bahrami, Z.; Sheykhivand, S.; Marandi, S.; Danishvar, M.; Danishvar, S.; Roosta, Y. Automatically Identified EEG Signals of Movement Intention Based on CNN Network (End-To-End). Electronics 2022, 11, 3297.

- Bhatti, A.M.; Majid, M.; Anwar, S.; Khan, B. Human emotion recognition and analysis in response to audio music using brain signals. Comput. Hum. Behav. 2016, 65, 267–275.

- Chanel, G.; Rebetez, C.; Bétrancourt, M.; Pun, T. Emotion Assessment From Physiological Signals for Adaptation of Game Difficulty. IEEE Trans. Syst. Man Cybern. Part A Syst. Humans 2011, 41, 1052–1063.

- Jirayucharoensak, S.; Pan-Ngum, S.; Israsena, P. EEG-Based Emotion Recognition Using Deep Learning Network with Principal Component Based Covariate Shift Adaptation. Sci. World J. 2014, 2014, 627892.

- Sengupta, A.; Ye, Y.; Wang, R.; Liu, C.; Roy, K. Going Deeper in Spiking Neural Networks: VGG and Residual Architectures. Front. Neurosci. 2019, 13, 95.

- Targ, S.; Almeida, D.; Lyman, K. Resnet in resnet: Generalizing residual architectures. arXiv 2016, arXiv:1603.08029.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

| Subject | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Sex | M | M | F | M | M | M | M | M | M | F | F | F | F | M | F | M |

| Age | 25 | 24 | 27 | 24 | 32 | 18 | 25 | 29 | 30 | 19 | 18 | 20 | 22 | 24 | 23 | 28 |

| BDI | 16 | 22 | 19 | 4 | 0 | 11 | 13 | 19 | 20 | 14 | 22 | 12 | 0 | 12 | 1 | 9 |

| Valence For P emotion | 9 | 6.8 | 6.2 | 7.4 | 5.8 | 5.6 | 7.2 | 7.8 | 7.4 | 6.8 | 7.8 | 8.6 | 6 | 8 | - | 7.4 |

| Arousal For P emotion | 9 | 6.2 | 7.4 | 7.6 | 5 | 5.4 | 7.4 | 7.4 | 7 | 6.6 | 8 | 8.6 | 6 | 8 | - | 8 |

| Valence For N emotion | 2 | 3.6 | 4.2 | 2.4 | 4.4 | 2 | 3.8 | 2.8 | 3.4 | 3.8 | 4.5 | 2 | 2 | 1.8 | - | 1.8 |

| Arousal For N emotion | 1 | 2 | 4.6 | 2.6 | 5.6 | 1.6 | 3.8 | 3 | 5.4 | 3.2 | 3 | 1.2 | 1.2 | 1.6 | - | 2 |

| Result of Test | ACC | REJ | REJ | ACC | REJ | REJ | REJ | ACC | REJ | REJ | REJ | ACC | ACC | ACC | REJ | ACC |

| Reason for rejection | - | Depressed 21 < 22 | Failure in the SAM test | - | Failure in the SAM test | Failure in the P emotion | Failure in the N emotion | - | Failure in the N emotion | Failure in the N emotion | Depressed 21 < 22 | - | - | - | Motion noise | - |

| Emotion Sign and Music Number | The Type of Emotion Created in the Subject | The Name of the Music |

|---|---|---|

| N1 | Negative | Advance income of Isfahan |

| P1 | Positive | Azari 6/8 |

| N2 | Negative | Advance income of Homayoun |

| P2 | Positive | Azari 6/8 |

| P3 | Positive | Bandari 6/8 |

| N3 | Negative | Afshari piece |

| N4 | Negative | Advance income of Isfahan |

| P4 | Positive | Persian 6/8 |

| N5 | Negative | Advance income of Dashti |

| P5 | Positive | Bandari 6/8 |

| Layer | Layer Type | Activation Function | Output Shape | Size of Kernel and Pooling | Strides | Number of Filters | Padding |

|---|---|---|---|---|---|---|---|

| 0–1 | Convolution 2-D | Fuzzy | (None, 18, 1000, 16) | 128 × 128 | 2 | 16 | yes |

| 1–2 | Max-Pooling 2-D | - | (None, 9, 500, 16) | 2 × 2 | 2 | - | no |

| 2–3 | Convolution 2-D | Fuzzy | (None, 9, 500, 32) | 3 × 3 | 1 | 32 | yes |

| 3–4 | Max-Pooling 2-D | - | (None, 4, 250, 32) | 2 × 2 | 2 | - | no |

| 4–5 | Convolution 2-D | Fuzzy | (None, 4, 250, 32) | 3 × 3 | 1 | 32 | yes |

| 5–6 | Max-Pooling 2-D | - | (None, 2, 125, 32) | 2 × 2 | 2 | - | no |

| 6–7 | Convolution 2-D | Fuzzy | (None, 2, 125, 32) | 3 × 3 | 1 | 32 | yes |

| 7–8 | Max-Pooling 2-D | - | (None, 1, 62, 32) | 2 × 2 | 2 | - | no |

| 8–9 | Convolution 2-D | Fuzzy | (None, 1, 62, 32) | 3 × 3 | 1 | 32 | yes |

| 10–11 | Convolution 2-D | Fuzzy | (None, 1, 62, 16) | 3 × 3 | 1 | 16 | yes |

| 11–12 | Flatten | - | (None, 100) | - | - | - | - |

| 12–13 | Softmax | - | (None, 2) | - | - | - | - |
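The spatial output shapes in the table above can be reproduced with the usual size rules for padded convolutions and unpadded pooling. The sketch below starts from the output of the first convolution, (18, 1000, 16), because the raw input size is not given in this table; it assumes "padding: yes" means 'same' padding (output size is the input size divided by the stride, rounded up) and "padding: no" means no padding with floor rounding. The helper names are hypothetical.

```python
def conv_same(height, width, stride):
    """Spatial size after a convolution with 'same' padding: ceil(size / stride)."""
    return -(-height // stride), -(-width // stride)


def pool_valid(height, width, size=2, stride=2):
    """Spatial size after unpadded pooling: floor((size_in - pool) / stride) + 1."""
    return (height - size) // stride + 1, (width - size) // stride + 1


# Layers after the first convolution, in table order: (kind, number of filters).
layers = [
    ("pool", None), ("conv", 32),
    ("pool", None), ("conv", 32),
    ("pool", None), ("conv", 32),
    ("pool", None), ("conv", 32),
    ("conv", 16),
]

height, width, channels = 18, 1000, 16  # output of the first convolution
shapes = []
for kind, filters in layers:
    if kind == "pool":
        height, width = pool_valid(height, width)
    else:
        height, width = conv_same(height, width, stride=1)
        channels = filters
    shapes.append((height, width, channels))

for shape in shapes:
    print(shape)
```

Under these assumptions each pooling step halves both spatial dimensions with rounding down, which is why the width goes from 125 to 62 in the last pooling layer.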

| Parameters | Search Space | Optimal Value |

|---|---|---|

| Optimizer | RMSProp, Adam, SGD, Adamax, Adadelta | SGD |

| Cost function | MSE, Cross-entropy | Cross-Entropy |

| Number of Convolution layers | 3, 5, 6, 9, 11 | 6 |

| Number of FC layers | 2, 3, 5 | 1 |

| Number of Filters in the first convolution layer | 16, 32, 64, 128 | 16 |

| Number of Filters in the second convolution layer | 16, 32, 64, 128 | 32 |

| Number of Filters in the other convolution layers | 16, 32, 64, 128 | 32 |

| Activation Function | ReLU, Leaky ReLU, Type-2 Fuzzy | Type-2 Fuzzy |

| Size of filter in the first convolution layer | 3, 16, 32, 64, 128 | (128, 128) |

| Size of filter in the other convolution layers | 3, 16, 32, 64, 128 | (3, 3) |

| Dropout rate | 0, 0.2, 0.3, 0.4, 0.5 | 0.3 |

| Batch size | 4, 8, 10, 16, 32, 64 | 64 |

| Learning rate | 0.01, 0.001, 0.0001 | 0.0001 |
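For reuse in training scripts, the optimal values in the table above can be collected into a single immutable configuration object. This is only a convenience sketch: the class name `TrainingConfig` and all field names are hypothetical, while the values are copied from the "Optimal Value" column.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class TrainingConfig:
    """Selected hyperparameters; field names are illustrative, values from the table."""
    optimizer: str = "SGD"
    loss: str = "cross-entropy"
    num_conv_layers: int = 6
    num_fc_layers: int = 1
    filters_first_conv: int = 16
    filters_second_conv: int = 32
    filters_other_conv: int = 32
    activation: str = "type-2 fuzzy"
    kernel_first_conv: Tuple[int, int] = (128, 128)
    kernel_other_conv: Tuple[int, int] = (3, 3)
    dropout_rate: float = 0.3
    batch_size: int = 64
    learning_rate: float = 1e-4


config = TrainingConfig()
print(config)
```

Freezing the dataclass prevents accidental changes during an experiment; a variant run can be created explicitly with `dataclasses.replace(config, batch_size=32)`.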

| Activation Function Used | ReLU | Leaky ReLU | Type-2 Fuzzy |

|---|---|---|---|

| Training Time | 10,800 s | 10,978 s | 13,571 s |
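The extra training cost of the type-2 fuzzy activation can be read directly from the training times in the table above. The short calculation below expresses it as a relative overhead; the function name `relative_overhead` is a hypothetical helper, and the times are the ones reported in the table.

```python
# Training times in seconds, copied from the table above.
training_time_s = {
    "ReLU": 10_800,
    "Leaky ReLU": 10_978,
    "Type-2 fuzzy": 13_571,
}


def relative_overhead(slower, faster):
    """Fractional increase in training time of `slower` over `faster`."""
    return (slower - faster) / faster


fuzzy = training_time_s["Type-2 fuzzy"]
vs_relu = relative_overhead(fuzzy, training_time_s["ReLU"])
vs_leaky = relative_overhead(fuzzy, training_time_s["Leaky ReLU"])

print(f"vs ReLU:       +{vs_relu * 100:.1f}%")
print(f"vs Leaky ReLU: +{vs_leaky * 100:.1f}%")
```

Training with the type-2 fuzzy activation therefore takes roughly a quarter longer than with ReLU (about 25.7%) and about 23.6% longer than with Leaky ReLU.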

| Recent Studies | Stimuli | Methods | Number of Emotions Considered | Accuracy (%) |

|---|---|---|---|---|

| Bhatti et al. [35] | Music | WT and MLP | 4 | 78.11 |

| Chanel et al. [36] | Video Games | Frequency band extraction | 3 | 63 |

| Jirayucharoensak et al. [37] | Video Clip | Principal component analysis | 3 | 49.52 |

| Er et al. [21] | Music | VGG16 | 4 | 74 |

| Sheykhivand et al. [20] | Music | CNN-LSTM | 2 | 96 |

| Hou et al. [23] | Video Clip | FPN + SVM | 3 | 95.50 |

| Proposed model | Music | CNN + Type-2 fuzzy | 2 | 98.2 |

| Model | Hand-Crafted Features | Feature Learning |

|---|---|---|

| MLP | 81.9% | 76.1% |

| CNN | 70% | 93.8% |

| SVM | 87.4% | 78% |

| Proposed Model | 76.6% | 98.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Baradaran, F.; Farzan, A.; Danishvar, S.; Sheykhivand, S. Automatic Emotion Recognition from EEG Signals Using a Combination of Type-2 Fuzzy and Deep Convolutional Networks. Electronics 2023, 12, 2216. https://doi.org/10.3390/electronics12102216
