1. Introduction

Object detection is one of the most fundamental and challenging problems in computer vision, aiming to predict a bounding box with category labels for objects of interest in images. It has a wide range of applications in many fields, such as autonomous driving [

1], face detection [

2], remote sensing [

3], and medical care [

4]. In recent years, significant progress has been made in applying neural networks to image processing, and some researchers have also tried to introduce convolutional neural networks into object detection tasks. The input image of the object detector has to go through several convolutional layers of the backbone to extract features first, in which the scale of the input image is shrinking, the number of channels is increasing, and the semantic information represented becomes more and more abstract. In other words, the feature maps obtained from the shallow layer of the backbone contain more spatial information, which is beneficial for detecting small objects and predicting object locations. In contrast, the deep layer of the backbone contains more abstract semantic information, which is beneficial for detecting large objects and predicting classification, thus causing the problem of semantic imbalance between different feature maps. Some early object detectors use feature maps of different scales obtained from different stages of the backbone for direct prediction to enhance the model’s detection capability. However, this approach does not solve the problem of semantic imbalance between feature maps well. Therefore, the authors of [

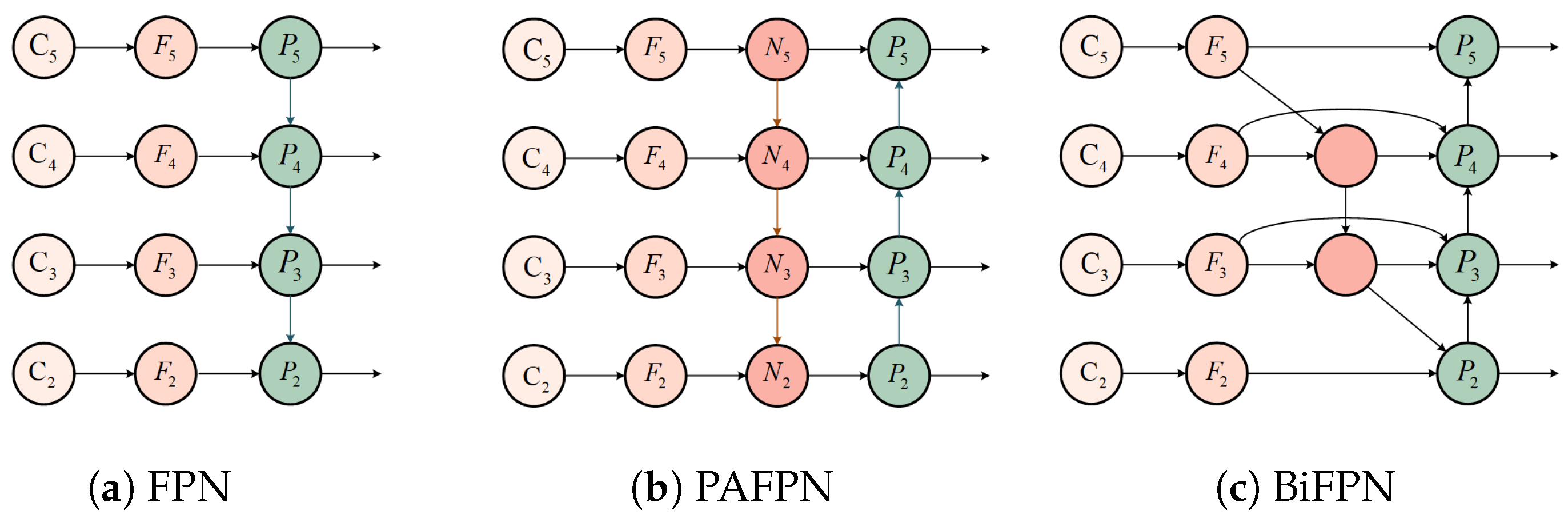

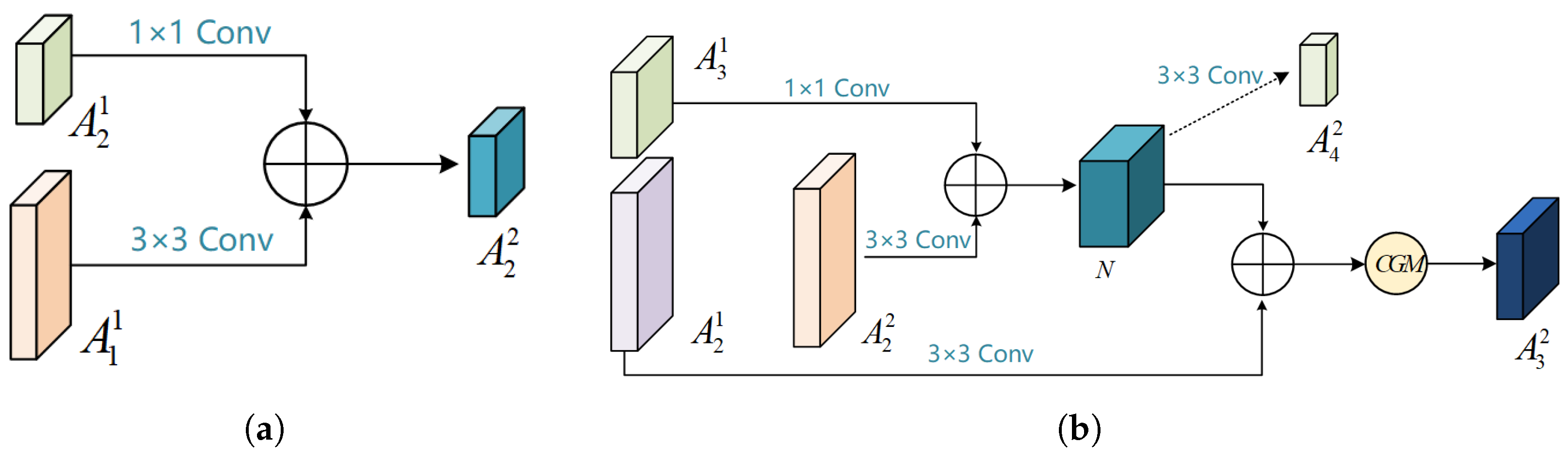

5] proposed a Feature Pyramid Network (FPN), as shown in

Figure 1. As can be seen from the figure, the FPN passes the high-level features of the backbone network to the low-level feature maps through a top-down structure, which complements the low-level semantics, so that a high-resolution, strongly semantic bottom-level feature map can be obtained, which is beneficial for the detection of small objects. The structure achieves the exchange of semantic information between feature maps at different levels, alleviating the imbalance problem between feature maps.

However, the FPN still has some problems. (1) Information decay. When FPN aggregates information from feature maps at different scales, repeated upsampling causes information decay or misalignment, so the exchange of semantic information between feature maps is inadequate.

Some methods counteract information decay by enhancing features or introducing new upsampling modules. AugFPN [6] reduces the information loss in the top-level feature through residual feature augmentation. FaPN [7] enhances upsampling with deformable convolution, which reduces the misalignment produced by feature map fusion. CARAFE [8] proposes a general, lightweight feature upsampling operator to mitigate information loss during upsampling. Other methods mitigate information decay by changing the structure of the FPN. FPN uses a single top-down fusion pathway (Figure 2a), and PANet [9] (Figure 2b) adds a bottom-up pathway to it. This pathway strengthens the entire feature hierarchy with accurate low-level spatial information and shortens the information path between low-level and high-level features. BiFPN [10] (Figure 2c) adds residual connections to PANet, removes nodes that have a single input edge, and stacks multiple BiFPN layers to improve the detector's perception of multi-scale features. FPANet [11] further augments BiFPN with its proposed SeBiFPN. The lateral connections of the FPN are a second source of decay: they directly compress the channels of the backbone feature maps, which also loses a large amount of information. CE-FPN [12] mitigates the information decay in lateral connections by introducing sub-pixel convolution, and reference [13] applies deformable convolution to the lateral connections of FPN to enhance their semantics.

(2) Aliasing effects during fusion. Feature maps from different stages of the backbone carry very different semantic information, so simply adding them together produces aliasing effects. Methods such as CE-FPN and FaPN apply channel-wise guidance to the fused feature maps to mitigate these effects.

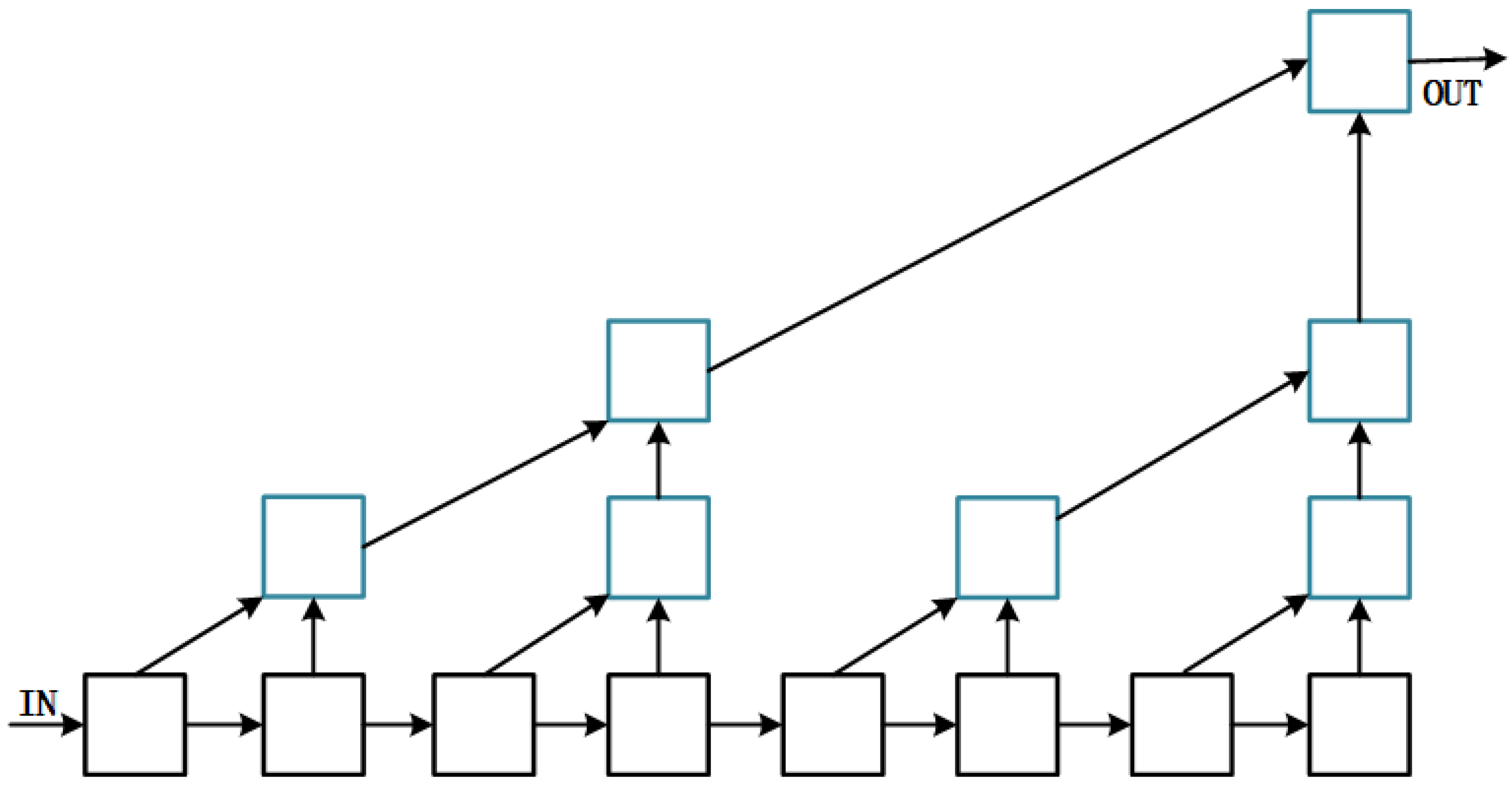

To address these issues, we make three improvements to the FPN.

(1) Unidirectional Cross-Level Residual Aggregation Module (UCLRAM). Models such as PANet and BiFPN use low-level feature maps to counter information decay with good results. However, we believe that a stronger bottom-up structure can further improve the scale awareness of FPN. To this end, we modified the tree-structured aggregation network of DLA [14] (Figure 3), which uses a tree structure to aggregate the features extracted by adjacent backbone blocks and so fuses semantic and spatial information more effectively. We keep only the lateral and bottom-up connections of the tree-structured aggregation network, add bottom-up aggregation connections within the same stage, and adjust the overall structure to suit feature fusion. We name the resulting aggregation structure UCLRAM. UCLRAM aggregates the semantic information of feature maps at different scales in a triangular structure from the bottom layer up to the top layer. By enriching the semantic information of the topmost layer, it counters the information decay of the top-down upsampling path.

(2) Residual connections. During upsampling, we pass information from the high levels of FPN to the bottom layers through residual connections, which also mitigates information decay.

(3) Residual Squeeze and Excitation Module (RSEM). To mitigate aliasing effects, we designed RSEM and placed it at the three aggregation nodes with the highest degree of fusion. There it reweights the channels of the feature map to provide global semantic guidance.

In its overall fusion structure, MSRA-FPN resembles the earlier PANet and BiFPN, since all three enhance the FPN with a bottom-up structure. However, the structural differences of MSRA-FPN bring two advantages. First, we place the bottom-up aggregation structure UCLRAM at the front, so that it directly accesses the raw features from the backbone network, which further strengthens UCLRAM. Second, the triangular structure of UCLRAM aggregates more strongly than the single-layer bottom-up structures of PANet and BiFPN. This gives the high-level features richer semantic information and improves the detector's ability to detect large objects.

Experiments show that on the PASCAL VOC [15] dataset, our method improves mAP by 0.5–1.9% over the baseline models and compares favorably with other methods. On the MS COCO [16] dataset, it improves the AP of the baseline model by 0.8% and the APl by 1.8%. On the Thangka Figure Dataset (TKFD), it improves the performance of the three baseline models by 2.9–4.7%. These results validate the effectiveness of MSRA-FPN for Thangka figure detection and for large-object detection.

3. Method

3.1. Overall

A Feature Pyramid Network [5] is added to an object detector to improve the transfer of information between multi-level feature maps. FPN conveys more abstract semantic information from the top layer to the bottom layer, which alleviates the semantic imbalance between different feature maps.

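This type of information transfer relies heavily on a top-down fusion structure. The structure takes multi-level inputs $\{C_i\}$, where $C_i$ denotes the feature map at $1/2^i$ of the input image resolution. Each upsampling step doubles the size of the feature map, as shown in Equation (1):

$$P_i = \mathrm{Conv}_{1\times1}\left(C_i\right) + \mathrm{Up}_{\times2}\left(P_{i+1}\right) \tag{1}$$

where $P_i$ is the fused feature map at level $i$ and $\mathrm{Up}_{\times2}(\cdot)$ denotes upsampling by a factor of two.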
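The following minimal NumPy sketch illustrates top-down fusion under our own assumptions; it is not the implementation used in this work. Feature maps are `(C, H, W)` arrays whose channels have already been normalized to 256. The input list runs from the finest level to the coarsest. The 3 × 3 output convolutions of the original FPN are omitted. The function names are hypothetical.

```python
import numpy as np


def upsample_nearest_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbor upsampling of a (C, H, W) map by a factor of 2."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fpn_top_down(laterals: list) -> list:
    """Top-down fusion: each level adds the 2x-upsampled result of the level above.

    `laterals` holds channel-normalized maps ordered from finest to coarsest,
    each half the spatial size of the previous one (hypothetical helper).
    """
    outputs = [None] * len(laterals)
    outputs[-1] = laterals[-1]  # the coarsest level has nothing above it
    for i in range(len(laterals) - 2, -1, -1):
        outputs[i] = laterals[i] + upsample_nearest_2x(outputs[i + 1])
    return outputs


# Usage: four 256-channel levels at 1/4 ... 1/32 of a 128 x 128 input.
laterals = [np.ones((256, s, s)) for s in (32, 16, 8, 4)]
fused = fpn_top_down(laterals)
print([f.shape for f in fused])           # [(256, 32, 32), (256, 16, 16), (256, 8, 8), (256, 4, 4)]
print([float(f[0, 0, 0]) for f in fused])  # [4.0, 3.0, 2.0, 1.0]
```

Every input is all ones, so each level accumulates one contribution from every level above it. This makes the additive nature of top-down fusion easy to see.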

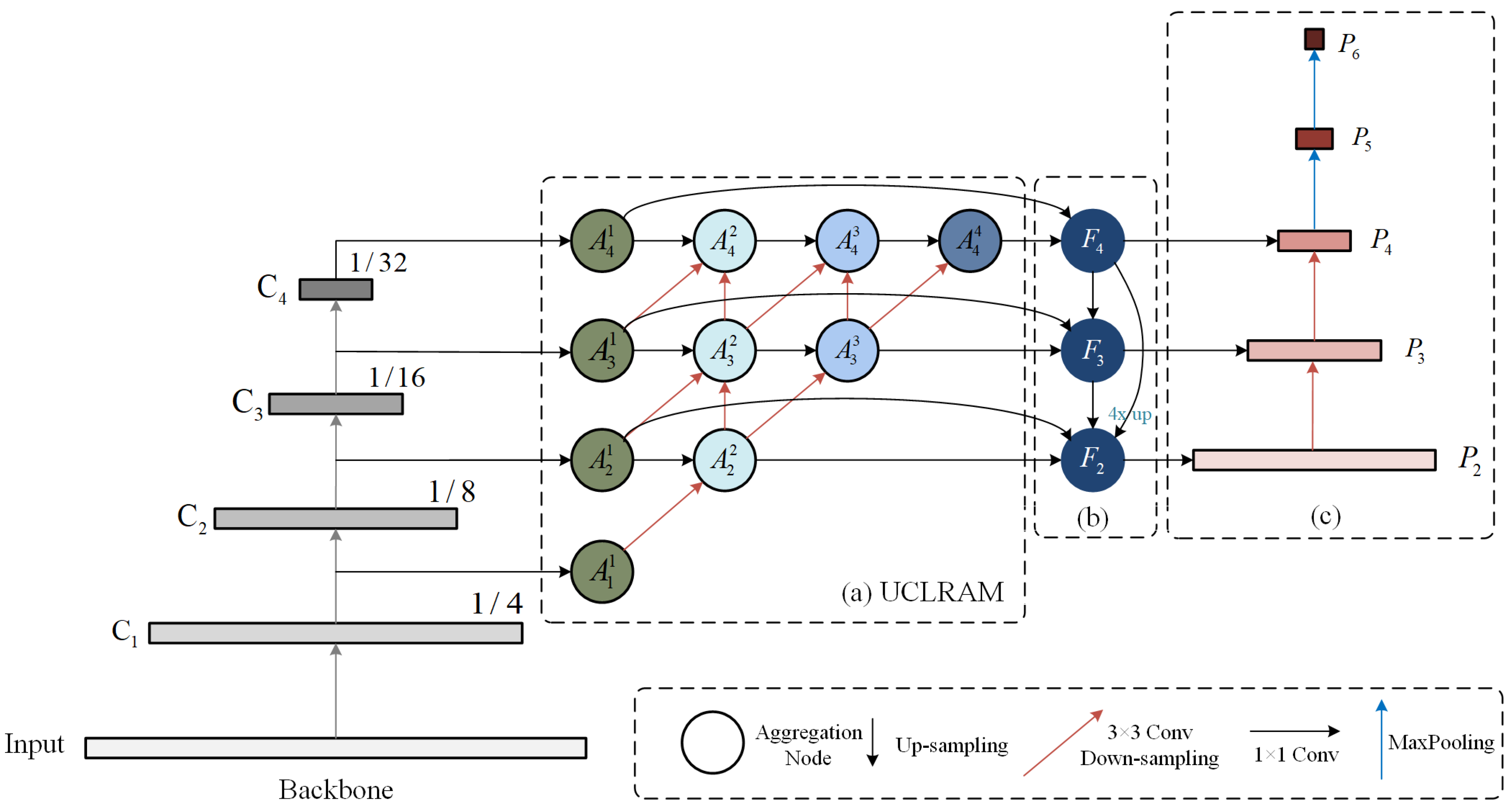

To counteract the semantic loss during upsampling and reduce aliasing during feature map fusion, we built MSRA-FPN on the structure of FPN. As shown in Figure 4, MSRA-FPN consists of (a) a bottom-up unidirectional cross-level residual aggregation module, (b) a top-down fusion module with residual connections, and (c) a bottom-up fusion module. Feature fusion proceeds as follows.

(1) MSRA-FPN takes four feature maps from the backbone as input. Feature maps of the same scale can only be summed if they have the same number of channels, so each input first passes through a 1×1 convolution that normalizes its channel number to 256. The normalized feature maps enter UCLRAM for fusion enhancement, which outputs three new feature maps that still have 256 channels. Within UCLRAM, the Residual Squeeze and Excitation Module mitigates the aliasing effects caused by fusing multiple feature maps of different scales.
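The channel normalization in step (1) is an ordinary 1 × 1 convolution, which acts as a per-pixel linear map over channels. The NumPy sketch below illustrates this. The ResNet-50-style channel counts (256, 512, 1024, 2048), the 128 × 128 input, and the random weights are illustrative assumptions. `conv1x1` is a hypothetical helper.

```python
import numpy as np


def conv1x1(x: np.ndarray, weight: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """1x1 convolution on a (C_in, H, W) map with weight (C_out, C_in).

    Every pixel's channel vector is multiplied by the same matrix, so the whole
    operation is one matrix product over the flattened spatial positions.
    """
    c_in, h, w = x.shape
    out = weight @ x.reshape(c_in, h * w) + bias[:, None]
    return out.reshape(weight.shape[0], h, w)


rng = np.random.default_rng(0)
backbone_channels = [256, 512, 1024, 2048]  # assumed ResNet-50 stage outputs
spatial_sizes = [32, 16, 8, 4]              # strides 4-32 on a 128 x 128 input
normalized = []
for c, s in zip(backbone_channels, spatial_sizes):
    feature = rng.standard_normal((c, s, s))
    weight = rng.standard_normal((256, c)) * 0.01
    bias = np.zeros(256)
    normalized.append(conv1x1(feature, weight, bias))

print([m.shape for m in normalized])
```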

(2) These feature maps enter module (b) for top-down feature fusion. Upsampling in this process uses nearest-neighbor interpolation with a scale factor of two. The upsampled feature map is summed with the feature map from the lateral connection, and the result splits into two branches. One branch continues the top-down aggregation, and the other is summed with the feature map from the first stage of UCLRAM.

(3) The outputs of module (b) enter module (c), which produces three 256-channel feature maps for prediction. To predict better at multiple scales, two further feature maps are obtained by successive max-pooling downsampling, starting from the topmost of these maps. All resulting feature maps then enter the detection head for category and bounding-box prediction.
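The extra coarse levels in step (3) come from repeated max pooling. The minimal NumPy sketch below shows how. Several details are illustrative assumptions, because the text does not state the pooling parameters: the 2 × 2 window with stride 2, starting from the coarsest map, and the spatial sizes in the usage example.

```python
import numpy as np


def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling with stride 2 on a (C, H, W) map; an odd last row/column is dropped."""
    c, h, w = x.shape
    x = x[:, : h - h % 2, : w - w % 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def add_extra_levels(maps: list, num_extra: int = 2) -> list:
    """Append `num_extra` coarser levels by repeatedly pooling the coarsest map."""
    levels = list(maps)
    for _ in range(num_extra):
        levels.append(max_pool_2x2(levels[-1]))
    return levels


# Usage: three 256-channel prediction maps, ordered finest to coarsest.
outputs = [np.zeros((256, s, s)) for s in (20, 10, 5)]
pyramid = add_extra_levels(outputs)
print([p.shape[1:] for p in pyramid])  # [(20, 20), (10, 10), (5, 5), (2, 2), (1, 1)]
```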

3.2. Unidirectional Cross-Level Residual Aggregation Module

To fully fuse the feature information from multiple layers into the top-level feature map, we designed the unidirectional cross-level residual aggregation module UCLRAM, shown in Figure 4a. The module has four stages. Each stage aggregates the feature maps of the previous stage into new feature maps, which we call aggregation nodes. Each stage has one fewer aggregation node than the stage before it, which forms a unidirectional triangular aggregation structure. Aggregation nodes are either two-input or three-input nodes, depending on the number of input feature maps.

Figure 5a shows the detailed structure of an example two-input aggregation node. We abstract the computation of a two-input aggregation node as Equation (2):

$$B_{i,j} = \mathrm{Conv}\left(B_{i-1,j}\right) + \mathrm{Down}_{3\times3}\left(L\right) \tag{2}$$

where $i$ denotes the $i$-th fusion stage and $j$ denotes the $j$-th aggregation node of the current stage. The feature map $B_{i-1,j}$ from the same layer of the previous stage passes through a convolutional layer, which produces a new feature map of size $[256, W, H]$ ($W$ and $H$ denote the width and height of the feature map). The feature map $L$ from the layer below in the previous stage has size $[256, 2W, 2H]$. To match the sizes, a cross-level residual connection $\mathrm{Down}_{3\times3}$ (a 3 × 3 convolutional layer) downsamples $L$ into a feature map of size $[256, W, H]$. Adding the two feature maps gives the fused feature map $B_{i,j}$.
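The sketch below builds a two-input node from a naive NumPy convolution to make Equation (2) concrete. It is an illustration under stated assumptions, not the authors' code. The same-layer convolution is taken to be 3 × 3 with stride 1, because the text does not give its kernel size. The cross-level residual connection is a 3 × 3 convolution with an assumed stride of 2 and padding of 1. Biases are omitted, and a small channel count stands in for 256 to keep the example fast. All function names are hypothetical.

```python
import numpy as np


def conv2d(x: np.ndarray, weight: np.ndarray, stride: int = 1, padding: int = 0) -> np.ndarray:
    """Naive 2-D convolution (cross-correlation) of a (C_in, H, W) map.

    `weight` has shape (C_out, C_in, k, k); no bias term, for brevity.
    """
    _, _, k, _ = weight.shape
    x = np.pad(x, ((0, 0), (padding, padding), (padding, padding)))
    h_out = (x.shape[1] - k) // stride + 1
    w_out = (x.shape[2] - k) // stride + 1
    out = np.zeros((weight.shape[0], h_out, w_out))
    for di in range(k):
        for dj in range(k):
            # Input pixels that kernel tap (di, dj) sees at every output position.
            patch = x[:, di : di + stride * h_out : stride, dj : dj + stride * w_out : stride]
            out += np.einsum("oc,chw->ohw", weight[:, :, di, dj], patch)
    return out


def two_input_node(same: np.ndarray, lower: np.ndarray,
                   w_same: np.ndarray, w_down: np.ndarray) -> np.ndarray:
    """Sketch of Eq. (2): Conv(same-layer map) + stride-2 3x3 Conv(lower-layer map).

    Arrays use (C, H, W) order.
    """
    a = conv2d(same, w_same, stride=1, padding=1)   # (C, H, W) -> (C, H, W)
    b = conv2d(lower, w_down, stride=2, padding=1)  # (C, 2H, 2W) -> (C, H, W)
    return a + b


rng = np.random.default_rng(0)
channels = 8  # stands in for 256
same = rng.standard_normal((channels, 4, 4))
lower = rng.standard_normal((channels, 8, 8))
w_same = rng.standard_normal((channels, channels, 3, 3)) * 0.1
w_down = rng.standard_normal((channels, channels, 3, 3)) * 0.1
node = two_input_node(same, lower, w_same, w_down)
print(node.shape)  # (8, 4, 4)
```

With stride 2 and padding 1, a 3 × 3 kernel maps a 2H × 2W input to exactly H × W. That is why both terms of the sum line up.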

Figure 5b shows the detailed structure of an example three-input aggregation node, which we define in Equation (3):

$$N = \mathrm{Conv}\left(B_{i-1,j}\right) + \mathrm{Down}_{3\times3}\left(L\right), \qquad B_{i,j} = \mathrm{RSEM}\left(N + \mathrm{Down}\left(D\right)\right) \tag{3}$$

To compute a three-input aggregation node, the $j$-th feature map $B_{i-1,j}$ from stage $i-1$ first passes through a convolutional layer, which produces a new feature map of size $[256, W, H]$. The feature map $L$, one layer lower than the current aggregation node, has size $[256, 2W, 2H]$. To fuse the two, a cross-level residual connection (a 3 × 3 convolutional layer) normalizes $L$ to size $[256, W, H]$, and adding the two feature maps gives the feature map $N$. Next, the third input $D$ is downsampled and summed with $N$. The RSEM module then guides the sum to produce the aggregation node $B_{i,j}$.

Note that for one of the three-input nodes, the feature map $N$ feeds two branches that continue the aggregation. One branch follows the procedure above to produce the aggregation node. The other branch is further downsampled and fused with the topmost feature map to complete the aggregation, as shown by the dashed part of Figure 5b. We define this branch in Equation (4).
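Passing the operators in as functions separates the combination pattern of Equation (3) from the concrete layers. In this hypothetical NumPy sketch, the caller supplies four operators: the same-layer convolution, the 3 × 3 cross-level residual connection, the downsampling of the third input, and RSEM. The text says only that the third input is downsampled, so we assume it has twice the node's spatial size. The stand-in operators in the usage (identity and 2 × 2 average pooling) are placeholders, chosen so the arithmetic is easy to check.

```python
from typing import Callable

import numpy as np

Op = Callable[[np.ndarray], np.ndarray]


def three_input_node(same: np.ndarray, lower: np.ndarray, extra: np.ndarray,
                     conv: Op, down3x3: Op, down: Op, rsem: Op):
    """Sketch of Eq. (3); returns the node output and the intermediate map N.

    N is returned too because, for one node, it also feeds a second branch
    that is fused with the topmost feature map (the dashed part of Figure 5b).
    """
    n = conv(same) + down3x3(lower)  # both terms have the node's size
    out = rsem(n + down(extra))      # add the downsampled third input, then apply RSEM
    return out, n


def avg_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Placeholder downsampling: 2x2 average pooling with stride 2 on (C, H, W)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def identity(x: np.ndarray) -> np.ndarray:
    return x


same = np.full((4, 4, 4), 1.0)
lower = np.full((4, 8, 8), 2.0)
extra = np.full((4, 8, 8), 3.0)
out, n = three_input_node(same, lower, extra, conv=identity,
                          down3x3=avg_pool_2x2, down=avg_pool_2x2, rsem=identity)
print(out.shape, float(n.max()), float(out.max()))  # (4, 4, 4) 3.0 6.0
```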

3.3. Residual Squeeze and Excitation Module

Different layers of the Feature Pyramid Network have different scales and channels, so they represent different semantic information. Features from deeper layers of the backbone have passed through more convolution operations, which makes them more abstract and semantically richer. Lower-layer feature maps are less abstract and contain more spatial information. This spatial information helps with object localization and small-object detection, and it gives better access to local image features. High-level feature maps are relatively small in scale. Repeated downsampling in the backbone has transformed most of their spatial information into abstract semantic information, which is more useful for object classification and large-object detection. Moreover, as the feature map shrinks, each convolution kernel covers a larger relative area of the image. The receptive field therefore grows, and the features capture more global information about the image.

MSRA-FPN aggregates a large number of feature maps from different levels, which causes some aliasing. To alleviate this problem, we introduced SENet [36] and used a skip connection to add the input feature map to the channel-weighted feature map. Channel weighting guided by global information then mitigates the aliasing effects. Figure 6 shows the structure. To balance performance against the number of parameters, we did not apply the module to every aggregation node. Instead, we used RSEM only on the three-input aggregation nodes of UCLRAM.

In RSEM, average pooling first reduces the feature map $X$ from the previous stage to a feature map $z$ of size $C \times 1 \times 1$, where $C$ is the number of channels. This step extracts the global information of the input feature map. A weighting function $F_{\mathrm{ex}}$ then computes the channel weight vector $u$ from $z$. Next, $u$ is multiplied channel-wise by the input feature map, which gives the guided feature map. Finally, a residual connection adds the guided feature map to the input feature map. The operation is essentially a channel attention mechanism, abstracted in Equations (5) and (6):

$$z = \mathrm{AvgPool}(X), \qquad u = F_{\mathrm{ex}}(z) \tag{5}$$

$$Y = X + u \odot X \tag{6}$$

where $\odot$ denotes channel-wise multiplication and $Y$ is the output of RSEM.
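A minimal NumPy sketch of RSEM follows. The text does not specify the weighting function, so we assume the SENet-style excitation: two fully connected layers with a ReLU in between, a sigmoid at the end, and a reduction ratio of 16 (the SENet default). The weight names and shapes are also our assumptions.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def rsem(x: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Residual squeeze-and-excitation on a (C, H, W) map.

    w1: (C // r, C) and w2: (C, C // r) are the assumed excitation weights.
    """
    z = x.mean(axis=(1, 2))                    # squeeze: global average pooling, shape (C,)
    u = sigmoid(w2 @ np.maximum(w1 @ z, 0.0))  # excitation: channel weights in (0, 1)
    guided = u[:, None, None] * x              # reweight each channel of the input
    return x + guided                          # residual (skip) connection


rng = np.random.default_rng(0)
c, r = 256, 16
x = rng.random((c, 10, 10))
w1 = rng.standard_normal((c // r, c)) * 0.1
w2 = rng.standard_normal((c, c // r)) * 0.1
y = rsem(x, w1, w2)
print(y.shape)  # (256, 10, 10)
```

Each channel of the input is scaled by 1 + u_c, a factor between 1 and 2. The skip path therefore keeps the original signal from being suppressed while the attention weights emphasize some channels over others.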

4. Experiments

We validated MSRA-FPN on the publicly available PASCAL VOC dataset [15]. On this dataset, we compared MSRA-FPN with other state-of-the-art methods based on FPN [5] and ran ablation experiments on the components of our method. We also ran comparative experiments on MS COCO. Finally, we ran comparative experiments on the Thangka figure dataset to verify the effectiveness of MSRA-FPN in detecting large objects in images.

4.1. Implementation Details

We trained on an NVIDIA RTX 3080 Ti GPU with the following settings:

- Batch size 8, initial learning rate 0.001, momentum 0.9, and weight decay 0.0001.
- Warm-up over the first 500 iterations, with a warm-up ratio of 0.001.
- Data augmentation by simple random flipping with a probability of 0.5.
- Backbone initialized with parameters pre-trained on ImageNet, with the parameters of the first backbone stage frozen.
- PASCAL VOC2007 and TKFD: the same schedule of 100 epochs, with the learning rate decayed by a factor of 0.1 at epochs 60 and 90.
- MS COCO: 36 epochs, an initial learning rate of 0.006 decayed by a factor of 0.1 at epoch 30, and an input size of 320.
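The VOC2007/TKFD schedule above can be written as a small function. This sketch assumes a linear warm-up, because the text gives the 500 warm-up iterations and the 0.001 ratio but not the shape of the warm-up. It also assumes zero-based epoch indices. The function name and signature are hypothetical.

```python
def learning_rate(epoch: int, iteration: int, base_lr: float = 0.001,
                  warmup_iters: int = 500, warmup_ratio: float = 0.001,
                  steps: tuple = (60, 90), gamma: float = 0.1) -> float:
    """Step-decay learning rate with an assumed linear warm-up.

    `epoch` is zero-based; `iteration` counts optimizer steps since the start.
    """
    # Multiply by gamma once for every decay epoch already reached.
    lr = base_lr * gamma ** sum(epoch >= s for s in steps)
    if iteration < warmup_iters:
        # Ramp linearly from warmup_ratio * lr at iteration 0 up to lr.
        k = (1 - iteration / warmup_iters) * (1 - warmup_ratio)
        lr *= 1 - k
    return lr


for epoch, it in [(0, 0), (0, 250), (10, 5000), (60, 60000), (95, 95000)]:
    print(epoch, it, f"{learning_rate(epoch, it):.2e}")
```

For the MS COCO setting, you would call the same function with `base_lr=0.006` and `steps=(30,)`.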

Our experimental platform was the PyTorch framework and the open-source object detection toolbox MMDetection2 [37]. We used three classical object detectors with FPN as baseline models: RetinaNet [20], FoveaBox [28], and Reppoints [23]. All model hyperparameters were identical except for the Feature Pyramid Network, and all models used the same training strategy and experimental settings.

4.2. Dataset

4.2.1. PASCAL VOC

The PASCAL VOC dataset is a standardized object detection benchmark with 20 object classes. The comparative experiments used two training settings. The first trained on the PASCAL VOC2007 trainval set alone (5011 images and 12,608 objects in total). The second trained on the PASCAL VOC2007 and PASCAL VOC2012 trainval sets together (16,551 images and 40,058 objects in total). Both settings were evaluated on the VOC2007 test set.

4.2.2. MS COCO

MS COCO has 80 object classes, with 115k images for training (train2017), 5k images for validation (val2017), and 20k images for testing (test-dev). We trained the model on train2017 and report results on val2017: AP, AP50, and AP75, plus AP on small, medium, and large objects (APs, APm, APl).

4.2.3. Thangka Figure Dataset

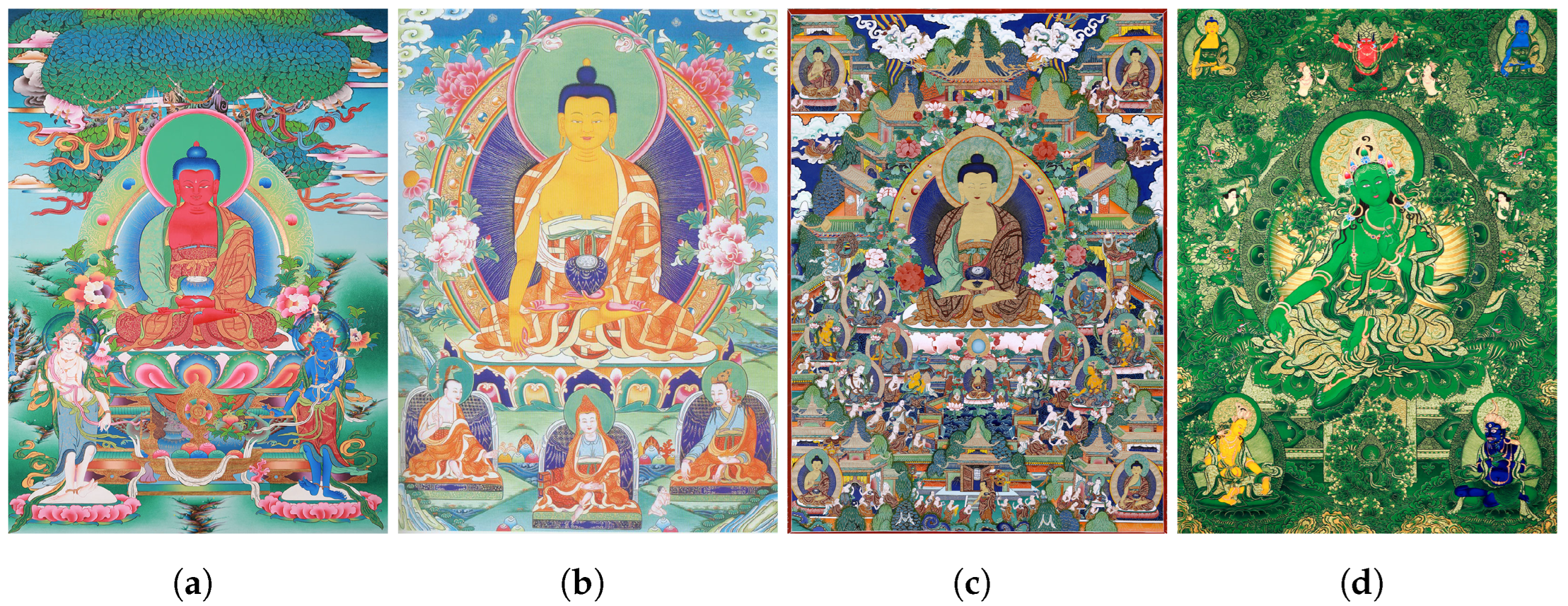

Thangka is a unique form of Chinese painting and part of the world's intangible cultural heritage.

Figure 7 shows various types of Thangka images. Our research group has long studied the digital preservation of Thangka, and object detection in Thangka images is a crucial problem in that work.

Most Thangkas depict Buddhist figures, which tend to occupy a considerable proportion of the canvas. Some figures are highly similar to one another (Figure 7a,b), and some Thangka images contain a large number of figures (Figure 7c). Thangka images also have bright colors, rich content, and complex backgrounds, all of which make the figures difficult to detect.

To study the detection of figures in Thangkas, we built a Thangka figure dataset (TKFD) whose detection targets are 26 types of typical figures, and we validated MSRA-FPN on it.

TKFD contains 1693 Thangka images with 4327 objects. The images are split into 1340 for training, 156 for validation, and 197 for testing, and they cover 26 detection categories, whose distribution is shown in Figure 8. We classify objects into four size categories by area:

- Small: less than 32 × 32.
- Medium: between 32 × 32 and 96 × 96.
- Large: between 96 × 96 and 512 × 512.
- Huge: more than 512 × 512.

As Figure 9 shows, more than half of the objects in the Thangka figure dataset are large objects.
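The size categories above reduce to area thresholds. In this hypothetical helper, a box whose area falls exactly on a threshold goes to the larger category, as in the COCO convention. This is our assumption, because the text does not specify boundary cases.

```python
def tkfd_size_category(width: float, height: float) -> str:
    """Return the TKFD size category of a box from its width and height in pixels."""
    area = width * height
    if area < 32 * 32:
        return "small"
    if area < 96 * 96:
        return "medium"
    if area < 512 * 512:
        return "large"
    return "huge"


boxes = [(20, 30), (64, 64), (300, 400), (600, 700)]
print([tkfd_size_category(w, h) for w, h in boxes])
# ['small', 'medium', 'large', 'huge']
```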

4.3. Main Results

4.3.1. Qualitative Evaluation

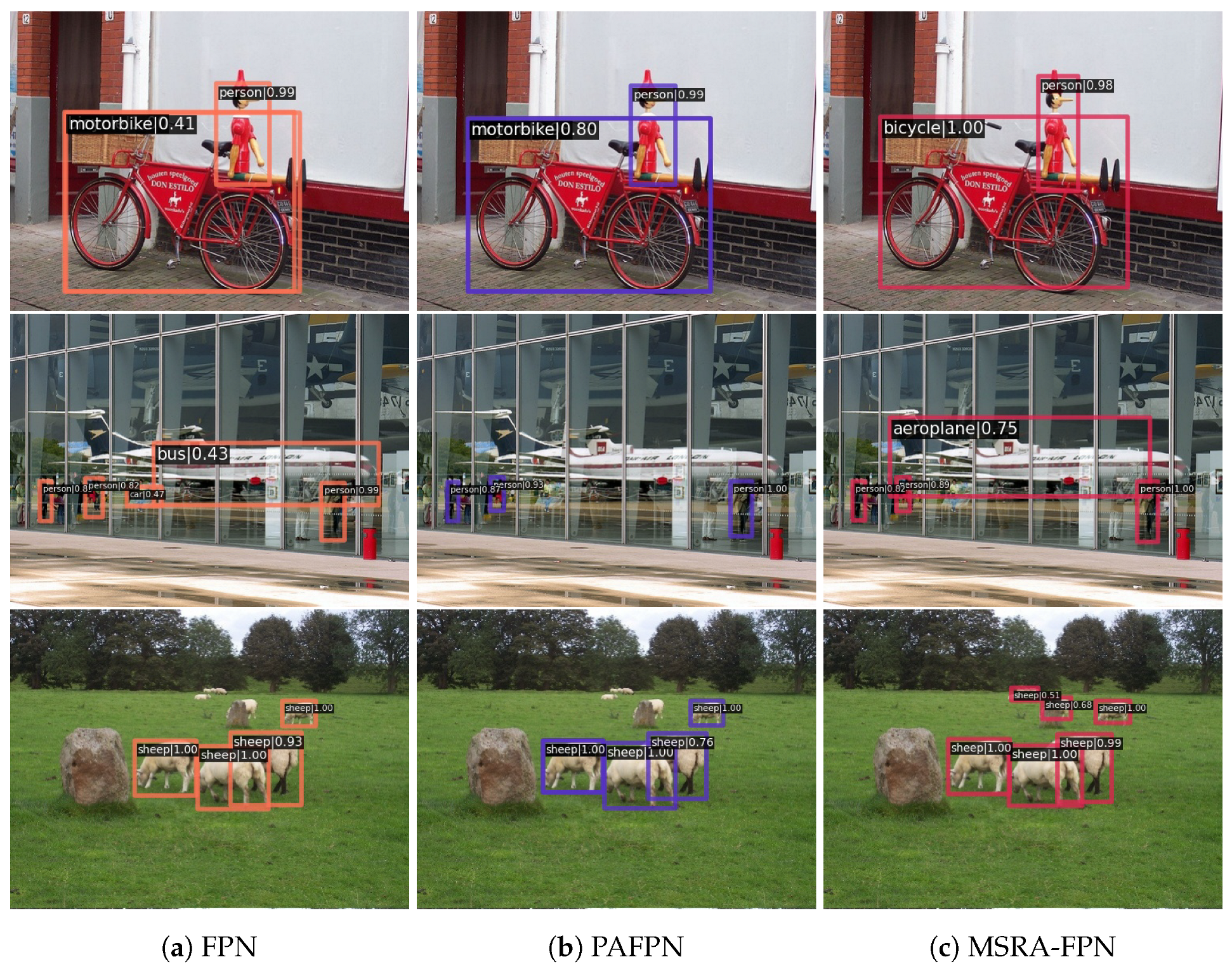

We compared the detection results of MSRA-FPN with those of other state-of-the-art FPN-based methods on PASCAL VOC2007 and TKFD. For fairness, all models use ResNet50 [38] as the backbone, with the same model hyperparameters and experimental settings.

PASCAL VOC: Figure 10 compares the three methods.

- First row: MSRA-FPN correctly predicts the ‘bicycle’, whereas FPN and PANet [9] both detect it as a ‘motorbike’.
- Second row: MSRA-FPN detects the large object ‘airplane’, whereas FPN identifies it as a ‘bus’ and PANet detects no object.
- Third row: MSRA-FPN detects the small, distant ‘sheep’, which neither FPN nor PANet detects.

These examples indicate that MSRA-FPN detects large objects better and fuses information across more scales, which improves the detection capability and accuracy of the model.

TKFD: Figure 11 compares the three methods.

- First row: a Thangka with a predominantly green color. The ‘green-tara’ in the upper left corner is extremely close in color to the background. FPN and PANet fail to detect it, but MSRA-FPN detects it correctly.
- Second row: FPN misses ‘manjusri’ and ‘vajradhara’ at the bottom of the image and ‘bhaishajyaguru’ at the top right, and PANet misses ‘vajradhara’ at the top left. MSRA-FPN detects all of the objects that FPN and PANet miss.
- Third row: FPN misses ‘bhaishajyaguru’ and ‘aksobhya’ at the top left of the image, and PANet misses ‘green-tara’. MSRA-FPN detects all three of these figures.

4.3.2. Quantitative Evaluation

PASCAL VOC: We compared the detection performance of the proposed MSRA-FPN with the baseline model and existing state-of-the-art FPN-based methods. We replaced the FPN part of RetinaNet [20] with each of the other FPN-based methods and kept the same backbone, hyperparameters, and other experimental settings for the comparison.

Table 1 reports the results of training on the PASCAL VOC2007 trainval set alone, and Table 2 reports the results of training on the PASCAL VOC2007 and PASCAL VOC2012 trainval sets together. Compared with the baseline, our method improves the mAP of RetinaNet by 1.6% when trained on VOC2007 alone and by nearly 1% when trained on VOC2007+VOC2012. It is also competitive with other state-of-the-art FPN-based methods.

To further test the generality of MSRA-FPN, we also ran comparative experiments with two anchor-free object detectors, Reppoints and FoveaBox, as baseline models. Table 1 and Table 2 include their results. MSRA-FPN improves the performance of both baseline models by 0.5% to 1.9%. MSRA-FPN can therefore improve the accuracy of both anchor-based and anchor-free detectors.

A stronger backbone extracts image features better. Table 3 reports the performance of MSRA-FPN with different backbones and shows that the overall performance of the model improves as the number of backbone parameters increases.

We also evaluated MSRA-FPN at different input scales. As Table 4 shows, the model's performance improves as the input image size increases.

The above four experimental results show that our proposed MSRA-FPN can effectively fuse feature information at different scales and improve the object detection capability of the detector.

MS COCO: We also evaluated MSRA-FPN on MS COCO (Table 5). MSRA-FPN improves the AP of the baseline RetinaNet by 0.8% and is particularly effective for large objects, raising the baseline APl from 39.8% to 41.6%, an improvement of 1.8%. MSRA-FPN likewise improves the performance of FCOS [27] by 0.6%.

TKFD: To verify the effectiveness of MSRA-FPN in detecting figures in Thangka images, we also compared it with other state-of-the-art FPN-based methods on TKFD. As shown in Table 6, MSRA-FPN significantly improves the mAP of several baseline models on TKFD. With ResNet50 as the backbone, MSRA-FPN raises the mAP on the TKFD test set as follows:

- RetinaNet: from 64.7% to 69.4% (+4.7%).
- Reppoints: from 65.8% to 68.7% (+2.9%).
- FoveaBox: from 65.3% to 69.2% (+3.9%).

After we switched the backbone to ResNeXt50 [39] with DCN and enlarged the input size to 1024 × 640, the model reached an mAP of 71.2%. Our method also shows a significant advantage over two-stage detectors such as Faster R-CNN and single-stage detectors such as YOLOv3 [22].

4.4. Ablation Study

To analyze the performance of each component in our proposed MSRA-FPN, we conducted ablation experiments on PASCAL VOC2007. This experiment was performed on RetinaNet with ResNet-50 as the backbone and an input image size of 640 × 640.

4.4.1. Ablation Studies for UCLRAM

Table 6 reports the number of parameters, FLOPs, and FPS of multiple models on TKFD. With ResNet50 as the backbone and an input size of 640 × 640, MSRA-FPN increases the number of RetinaNet parameters from 36.62M for the baseline model to 45.77M. To find a better balance between accuracy and the number of parameters, we ablated the structure of UCLRAM on PASCAL VOC2007 and compared the variants with the structure shown in Figure 4. Table 7 shows the results.

We tested two lighter variants:

- UCLRAM-lite1 removes the three bottom-up convolutional layers of stage 2 and stage 3 from the UCLRAM structure shown in Figure 4, which turns the three-input nodes into two-input nodes.
- UCLRAM-lite2 replaces all cross-level residual convolutions with MaxPooling.

As Table 7 shows, the accuracy of UCLRAM-lite2 differs from that of the original version by only 0.5%. Its number of parameters is essentially the same as that of FPN, and its FPS is higher than that of the structure shown in Figure 4. UCLRAM-lite2 therefore strikes a better balance between accuracy and the number of parameters.
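The parameter savings of UCLRAM-lite2 follow from simple arithmetic. A k × k convolution has k·k·C_in·C_out weights plus C_out biases, and max pooling has no parameters at all. The sketch below counts the parameters of one cross-level residual connection, assuming 256 input and output channels and a bias term. It does not reproduce the totals in Table 7, which depend on the full model.

```python
def conv_params(c_in: int, c_out: int, k: int, bias: bool = True) -> int:
    """Number of learnable parameters in a k x k convolution layer."""
    return k * k * c_in * c_out + (c_out if bias else 0)


# One cross-level residual connection: 3x3 conv, 256 -> 256 channels (assumed bias).
per_conv = conv_params(256, 256, 3)
maxpool_params = 0  # max pooling has no learnable parameters
print(per_conv, per_conv - maxpool_params)  # 590080 590080
```

So every convolution that UCLRAM-lite2 replaces with MaxPooling saves roughly 0.59M parameters under these assumptions.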

We also explored how RSEM affects the UCLRAM structure. The UCLRAM-RSEM2 variant places RSEM as a guide after every aggregation node. The data in Table 7 show that it is less accurate than the structure shown in Figure 4 and has more parameters.

4.4.2. Ablation Studies for Each Component

We ran an ablation study of two components of MSRA-FPN on the PASCAL VOC2007 dataset, with the results shown in Table 8. Our proposed UCLRAM structure improves performance by 1.2% over FPN, and adding the CGB structure improves MSRA-FPN by a further 0.4%. Each module of our proposed method therefore contributes meaningfully to object detection performance.