A Machine Learning Approach for the Forecasting of Computing Resource Requirements in Integrated Circuit Simulation

Abstract

1. Introduction

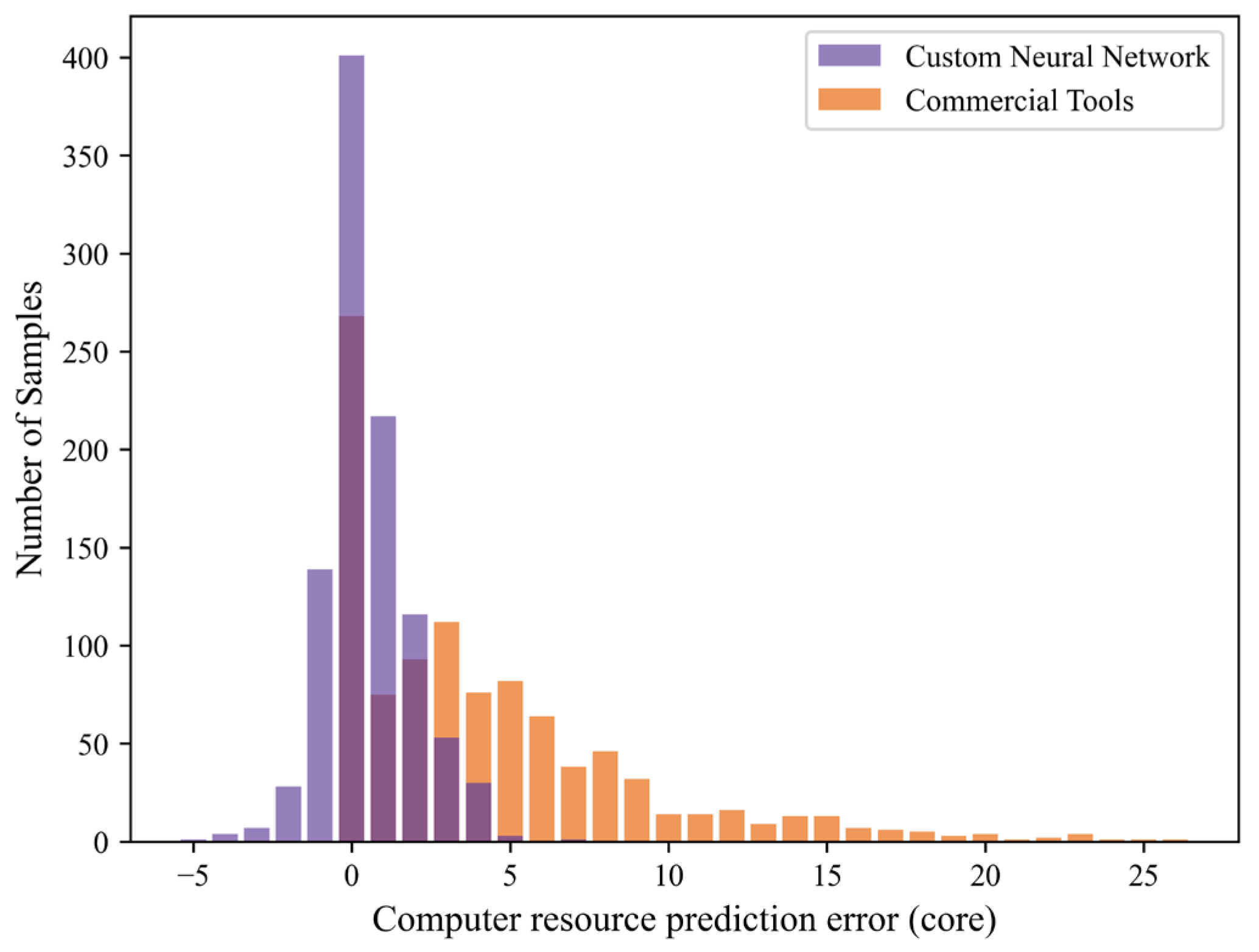

- Our algorithm outperforms commercial tools in terms of precision and consistency.

- To our knowledge, our method is the first to apply machine learning to predict the optimal resources required for integrated circuit simulations.

- Our model uses a custom Mean Squared Error (MSE) loss function to improve overall prediction quality and reduce simulation time.

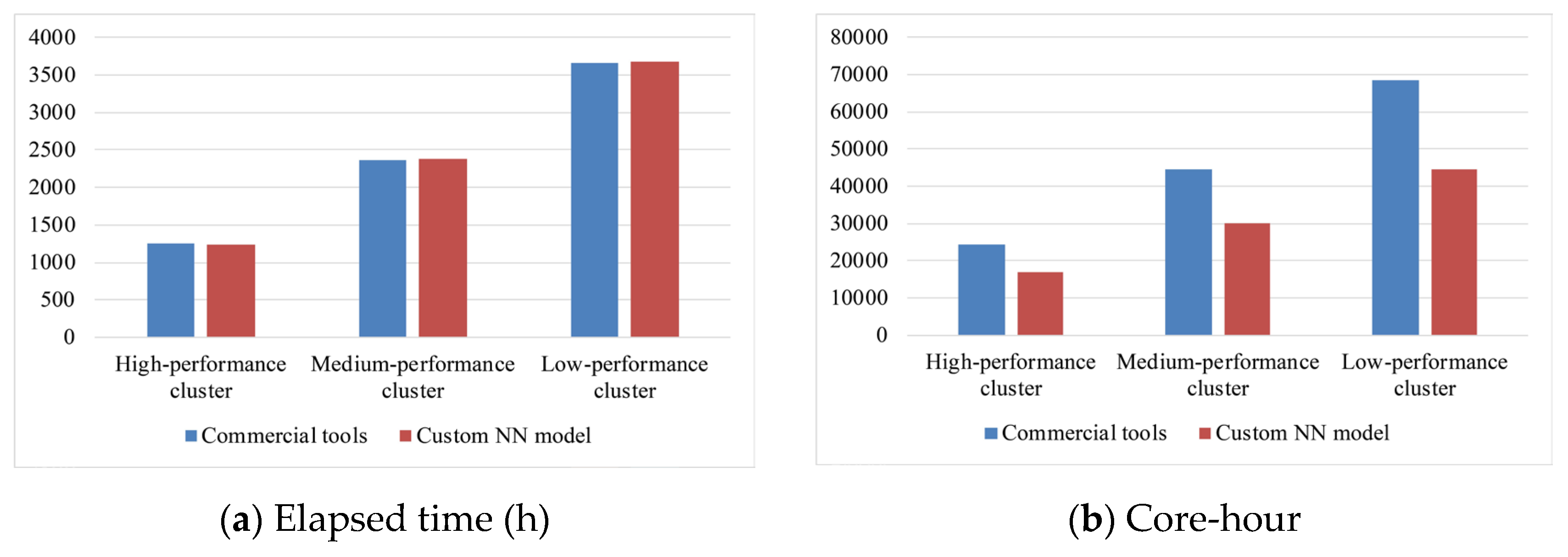

- Our method can reduce core hour consumption by approximately 30% compared to commercial tools while maintaining the same completion time.
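The contributions above mention a custom MSE loss but do not define it in this section. Purely as a hedged illustration, the sketch below assumes one common form of custom MSE: an asymmetric squared error that weights under-predictions more heavily, on the assumption that under-provisioning resources lengthens simulation time. The function name `asymmetric_mse` and the `under_weight` parameter are hypothetical and are not the authors' definition.

```python
def asymmetric_mse(y_true, y_pred, under_weight=2.0):
    """Squared error that penalizes under-prediction more than over-prediction.

    Hypothetical variant (not the paper's formula): when y_pred < y_true the
    squared error is multiplied by under_weight; over-predictions keep
    weight 1.0. With under_weight=1.0 this is the standard MSE.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for actual, predicted in zip(y_true, y_pred):
        err = predicted - actual
        # Negative error means the model asked for fewer resources than needed.
        weight = under_weight if err < 0 else 1.0
        total += weight * err * err
    return total / len(y_true)


print(asymmetric_mse([4, 8], [2, 10]))  # 6.0
```

Here the under-prediction of 2 contributes 2 × 2² = 8 and the over-prediction contributes 2² = 4, giving 12 / 2 = 6.0. Raising `under_weight` pushes a trained model toward slightly generous resource estimates; setting it to 1.0 recovers the ordinary MSE.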

2. Proposed Approach

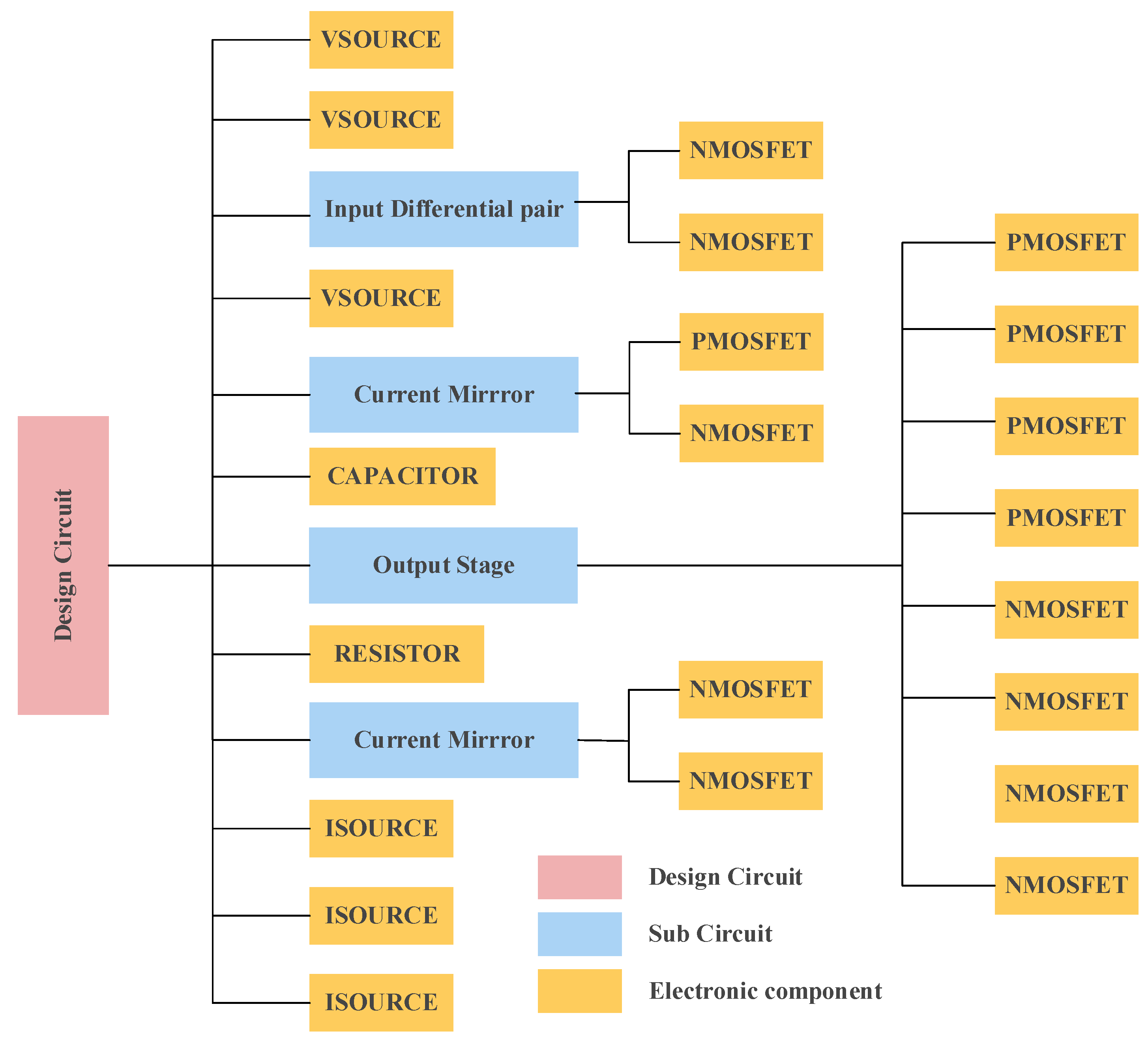

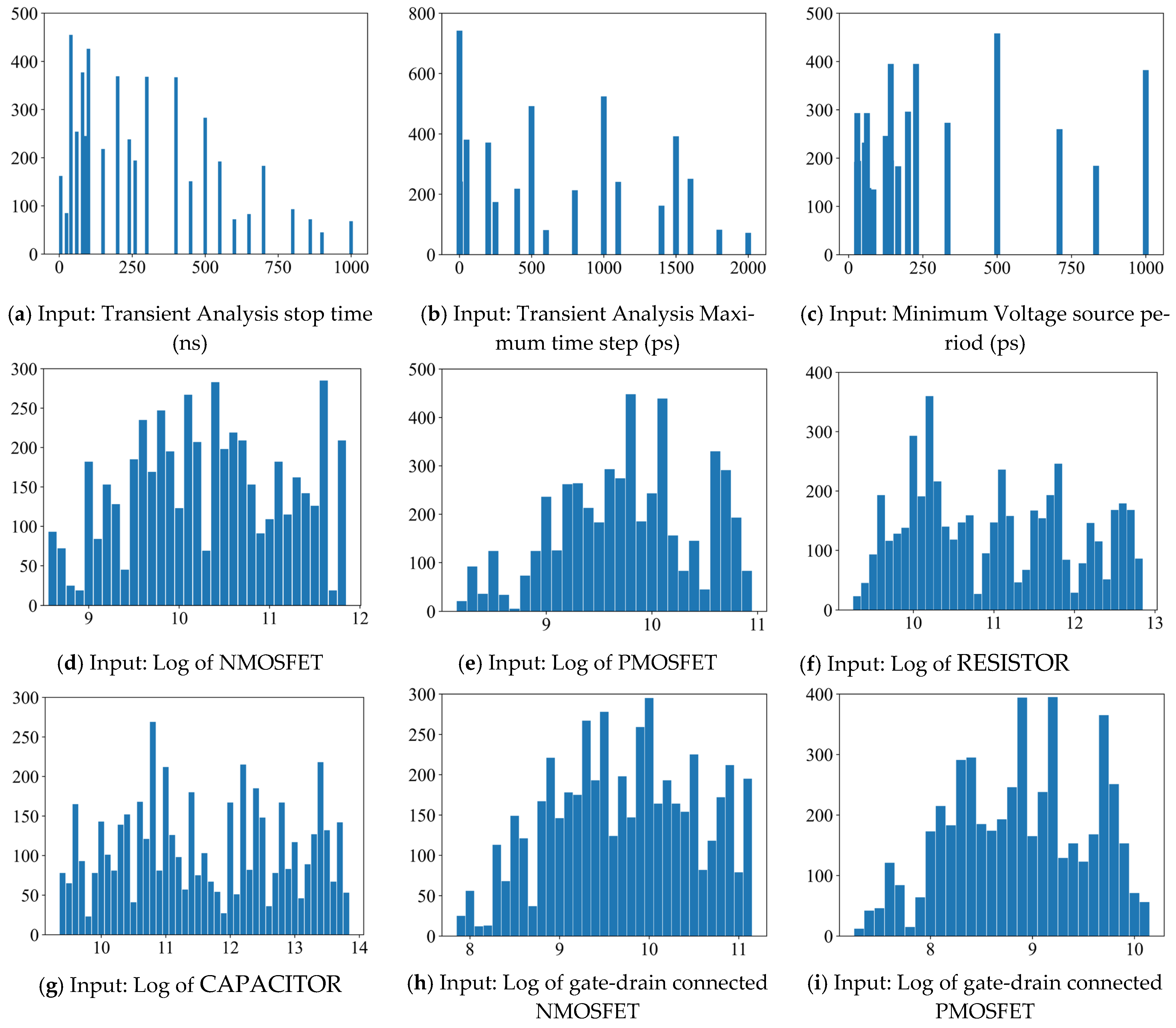

2.1. Model Feature

2.1.1. Component Distribution

2.1.2. Simulation Options

2.2. Model Engine

2.2.1. Support Vector Regression

2.2.2. Neural Network

2.2.3. Random Forest

3. Experiments and Discussion

3.1. Establishing Dataset

3.2. Model Training

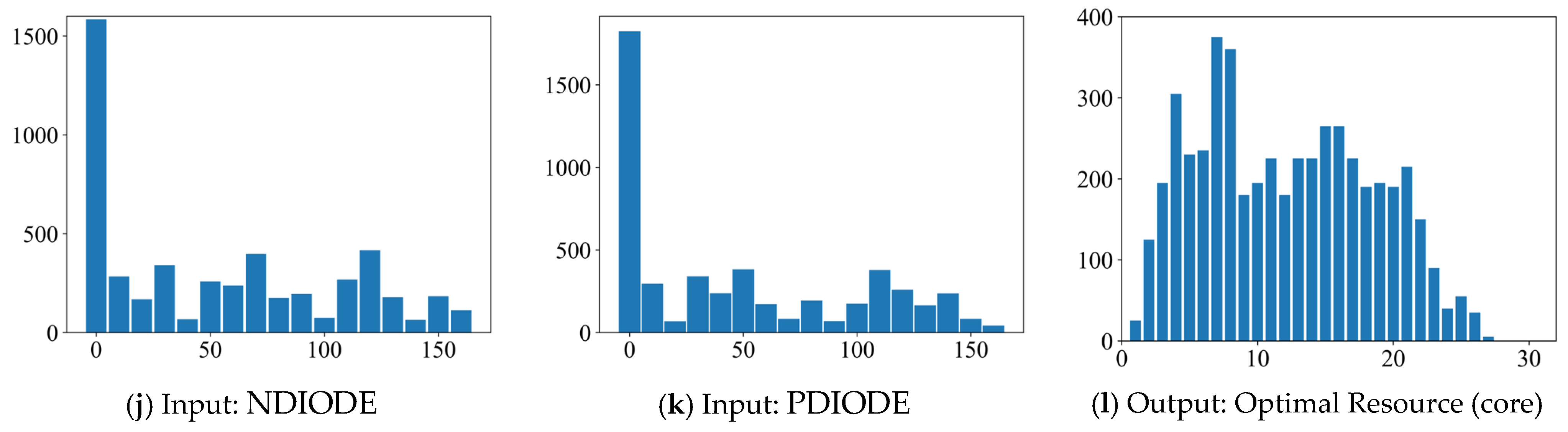

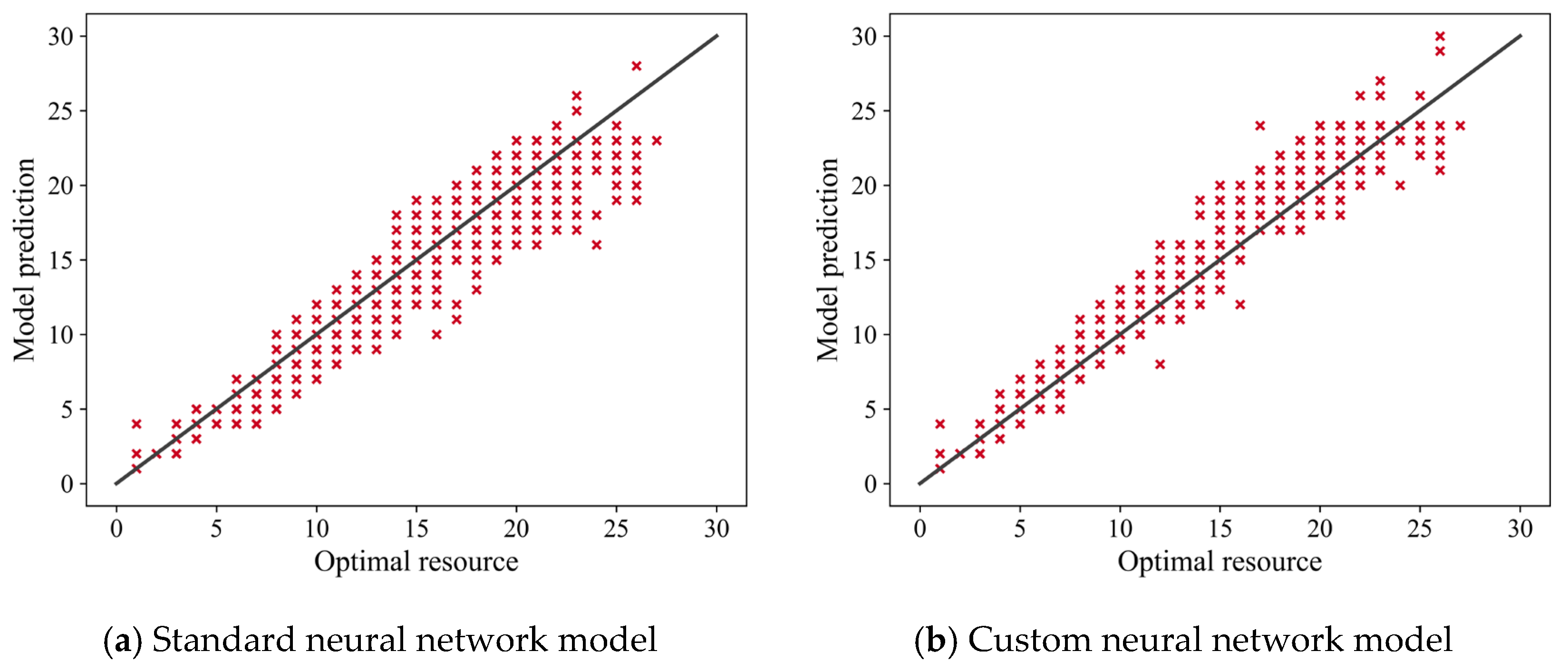

3.3. Prediction Results

3.4. Neural Network with Custom Loss Function

3.5. Error Distribution

3.6. Application Effect

4. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Amdahl, G.M. Validity of the Single Processor Approach to Achieving Large Scale Computing Capabilities. In Proceedings of the Spring Joint Computer Conference (AFIPS ’67 Spring), Atlantic City, NJ, USA, 18–20 April 1967; ACM Press: New York, NY, USA, 1967; p. 483.

2. Albers, R.; Suijs, E.; de With, P.H.N. Triple-C: Resource-Usage Prediction for Semi-Automatic Parallelization of Groups of Dynamic Image-Processing Tasks. In Proceedings of the 2009 IEEE International Symposium on Parallel & Distributed Processing, Rome, Italy, 23–29 May 2009; pp. 1–8.

3. Spectre X Simulator. Available online: https://www.cadence.com/en_US/home/tools/custom-ic-analog-rf-design/circuit-simulation/spectre-x-simulator.html (accessed on 9 December 2022).

4. Spectre Accelerated Parallel Simulator. Available online: https://www.cadence.com/en_US/home/tools/custom-ic-analog-rf-design/library-characterization/spectre-accelerated-parallel-simulator.html (accessed on 9 December 2022).

5. Cadence Virtuoso Platform Provides 10x Improvement in Verification Time for VIS. Available online: https://www.cadence.com/en_US/home/company/newsroom/press-releases/pr/2006/cadencevirtuosoplatformprovides10ximprovementinverificationtimeforvis.html (accessed on 9 December 2022).

6. Matsunaga, A.; Fortes, J.A.B. On the Use of Machine Learning to Predict the Time and Resources Consumed by Applications. In Proceedings of the 2010 10th IEEE/ACM International Conference on Cluster, Cloud and Grid Computing, Melbourne, VIC, Australia, 17–20 May 2010; pp. 495–504.

7. Kholidy, H.A. An Intelligent Swarm Based Prediction Approach for Predicting Cloud Computing User Resource Needs. Comput. Commun. 2020, 151, 133–144.

8. Rezaei, M.; Salnikov, A. Machine Learning Techniques to Perform Predictive Analytics of Task Queues Guided by Slurm. In Proceedings of the 2018 Global Smart Industry Conference (GloSIC), Chelyabinsk, Russia, 13–15 November 2018; pp. 1–6.

9. Balaji, M.; Aswani Kumar, C.; Rao, G.S.V.R.K. Predictive Cloud Resource Management Framework for Enterprise Workloads. J. King Saud Univ.-Comput. Inf. Sci. 2018, 30, 404–415.

10. Nikravesh, A.Y.; Ajila, S.A.; Lung, C.-H. Towards an Autonomic Auto-Scaling Prediction System for Cloud Resource Provisioning. In Proceedings of the 2015 IEEE/ACM 10th International Symposium on Software Engineering for Adaptive and Self-Managing Systems, Florence, Italy, 18–19 May 2015; pp. 35–45.

11. Belgacem, A.; Beghdad Bey, K.; Nacer, H. Dynamic Resource Allocation Method Based on Symbiotic Organism Search Algorithm in Cloud Computing. IEEE Trans. Cloud Comput. 2020, 10, 1714–1725.

12. Ramasamy, V.; Pillai, S. An Effective HPSO-MGA Optimization Algorithm for Dynamic Resource Allocation in Cloud Environment. Cluster Comput. 2020, 23, 1711–1724.

13. Chaitra, T.; Agrawal, S.; Jijo, J.; Arya, A. Multi-Objective Optimization for Dynamic Resource Provisioning in a Multi-Cloud Environment Using Lion Optimization Algorithm. In Proceedings of the 2020 IEEE 20th International Symposium on Computational Intelligence and Informatics (CINTI), Budapest, Hungary, 5–7 November 2020; pp. 83–90.

14. Belgacem, A.; Beghdad-Bey, K.; Nacer, H.; Bouznad, S. Efficient Dynamic Resource Allocation Method for Cloud Computing Environment. Cluster Comput. 2020, 23, 2871–2889.

15. Barboza, E.C.; Shukla, N.; Chen, Y.; Hu, J. Machine Learning-Based Pre-Routing Timing Prediction with Reduced Pessimism. In Proceedings of the 56th Annual Design Automation Conference 2019, Las Vegas, NV, USA, 2–6 June 2019; ACM: New York, NY, USA, 2019; pp. 1–6.

16. Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-Learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

17. Razavi, B. RF Microelectronics, 2nd ed.; Prentice Hall: New York, NY, USA, 2012.

18. Razavi, B. Design of Analog CMOS Integrated Circuits, 2nd ed.; McGraw-Hill Education: New York, NY, USA, 2017; ISBN 978-0-07-252493-2.

19. Wu, J.; Zhang, K.; Chen, H.; Wang, Z.; Yu, F. A Sigma-Delta Modulator with Residual Offset Suppression. IEICE Electron. Express 2021, 18, 20210157.

20. Wang, T.; Zhou, M.; Liu, J.; Wang, Z.; Mo, J.; Chen, H.; Yu, F. A Highly Linear 10 Gb/s MOS Current Mode Logic Driver with Large Output Voltage Swing Based on an Active Inductor. IEICE Electron. Express 2020, 17, 20200160.

21. Wang, G.; Liu, J.; Xu, S.; Mo, J.; Wang, Z.; Yu, F. The Design of Broadband LNA with Active Biasing Based on Negative Technique. Inf. Midem-J. Microelectron. Electron. Compon. Mater. 2018, 48, 115–120.

22. Wang, F.; Wang, Z.; Liu, J.; Yu, F. A 14-Bit 3-GS/s DAC Achieving SFDR >63 dB up to 1.4 GHz with Random Differential-Quad Switching Technique. IEEE Trans. Circuits Syst. II Express Briefs 2022, 69, 879–883.

23. Chen, M.; Wu, K.; Shen, Y.; Wang, Z.; Chen, H.; Liu, J.; Yu, F. A 14 bit 500 MS/s 85.62 dBc SFDR 66.29 dB SNDR SHA-Less Pipelined ADC with a Stable and High-Linearity Input Buffer and Aperture-Error Calibration in 40 nm CMOS. IEICE Electron. Express 2021, 18, 20210171.

24. Chen, J.; Li, H.; Wang, T.; Wang, Z.; Chen, H.; Liu, J.; Yu, F. A 92 fs(rms) Jitter Frequency Synthesizer Based on a Multicore Class-C Voltage-Controlled Oscillator with Digital Automatic Amplitude Control. IEICE Electron. Express 2021, 18, 20210136.

25. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

| Author | Proposed Methodology | Main Finding |
|---|---|---|
| Mahdi Rezaei and Alexey Salnikov (2018) [8] | A machine learning (ML) system based on statistical data collected from reference queue systems. | Prediction accuracy depends strongly on the information available before jobs are submitted. |
| Mahesh Balaji Philips and Aswani Kumar Cherukuri (2016) [9] | A Predictive Resource Management Framework (PRMF) that overcomes the drawbacks of the reactive cloud resource management approach. | Fewer rejected user requests (error count), shorter user wait times, more requests processed, and more efficient use of available cloud resources. |
| Nikravesh et al. (2015) [10] | A predictive auto-scaling system for the Infrastructure as a Service (IaaS) layer of cloud computing. | Dynamically selects the most appropriate prediction algorithm and sliding-window size. |
| Belgacem, Beghdad Bey and Nacer (2020) [11] | A scheduling method based on the Symbiotic Organism Search algorithm (MOSOS) for solving the dynamic resource allocation problem in cloud computing. | Minimized makespan and cost while adapting to dynamic changes in the cloud. |
| Vadivel Ramasamy and SudalaiMuthu Thalavai Pillai (2020) [12] | A dynamic resource allocation approach based on Hybrid Particle Swarm Optimization and a Modified Genetic Algorithm (HPSO-MGA). | Reduced dynamic resource-allocation time and improved average waiting time, response time, load balancing, relative error, and throughput. |
| Chaitra et al. (2022) [13] | An independent task scheduling approach using multi-objective task scheduling optimization based on the Artificial Bee Colony algorithm combined with Q-learning. | Outperformed competing methods by reducing makespan, cost, and degree of imbalance while increasing throughput and average resource utilization. |
| Ali Belgacem et al. (2020) [14] | A multi-objective search algorithm, the Spacing Multi-Objective Antlion algorithm (S-MOAL), that minimizes both the makespan and the cost of using virtual machines. | Outperformed competing methods in makespan, cost, deadline, fault tolerance, and energy consumption. |

| Type | Self-Connection | Number |
|---|---|---|
| ISOURCE | null | 3 |
| VSOURCE | null | 3 |
| NMOSFET | null | 7 |
| NMOSFET | gate, drain | 2 |
| PMOSFET | null | 4 |
| PMOSFET | gate, drain | 1 |
| RESISTOR | null | 1 |
| CAPACITOR | null | 1 |
| INDUCTOR | null | 0 |
| NDIODE | null | 0 |
| PDIODE | null | 0 |
| PNP-BJT | null | 0 |
| PNP-BJT | emitter, base | 0 |
| NPN-BJT | null | 0 |
| NPN-BJT | emitter, base | 0 |
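The component distribution table can be read as a fixed-length count vector. A minimal sketch of how such a vector might be built, assuming each device has already been parsed from the netlist into a type name plus a mapping from terminal names to net names (the parsing step, the input format, and the names `component_distribution`, `to_vector`, `SELF_CONNECTIONS`, and `FEATURE_KEYS` are all hypothetical): a device is counted under its self-connection row when the two listed terminals share a net, and under the `null` row otherwise.

```python
from collections import Counter

# Terminal pairs that define a "self-connection" for each device type,
# mirroring the rows of the component distribution table (assumed mapping).
SELF_CONNECTIONS = {
    "NMOSFET": ("gate", "drain"),
    "PMOSFET": ("gate", "drain"),
    "PNP-BJT": ("emitter", "base"),
    "NPN-BJT": ("emitter", "base"),
}

# Fixed row order of the table, so every circuit maps to a 15-element vector.
FEATURE_KEYS = [
    ("ISOURCE", "null"), ("VSOURCE", "null"),
    ("NMOSFET", "null"), ("NMOSFET", "gate, drain"),
    ("PMOSFET", "null"), ("PMOSFET", "gate, drain"),
    ("RESISTOR", "null"), ("CAPACITOR", "null"), ("INDUCTOR", "null"),
    ("NDIODE", "null"), ("PDIODE", "null"),
    ("PNP-BJT", "null"), ("PNP-BJT", "emitter, base"),
    ("NPN-BJT", "null"), ("NPN-BJT", "emitter, base"),
]


def component_distribution(devices):
    """Count devices by (type, self-connection) key.

    devices: iterable of (device_type, {terminal_name: net_name}) pairs.
    """
    counts = Counter()
    for dev_type, terminals in devices:
        key = (dev_type, "null")
        pair = SELF_CONNECTIONS.get(dev_type)
        if pair is not None:
            net_a = terminals.get(pair[0])
            net_b = terminals.get(pair[1])
            # Both terminals on the same net, e.g. a diode-connected MOSFET.
            if net_a is not None and net_a == net_b:
                key = (dev_type, ", ".join(pair))
        counts[key] += 1
    return counts


def to_vector(counts):
    """Project the counts onto the table's fixed row order."""
    return [counts.get(key, 0) for key in FEATURE_KEYS]
```

A diode-connected NMOSFET (gate and drain on the same net) therefore lands in the `NMOSFET | gate, drain` row, while an ordinary NMOSFET lands in `NMOSFET | null`. Device types outside the table are dropped by `to_vector`, which keeps the vector length fixed regardless of the circuit.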

| Feature | Data Type |
|---|---|
| Simulator type | categorical |
| Simulator accuracy | categorical |
| Error preset accuracy | categorical |
| Minimum port source period | numerical |
| Minimum voltage source period | numerical |
| Minimum current source period | numerical |
| Relative convergence criterion | numerical |
| Absolute voltage tolerance (convergence criterion) | numerical |
| Absolute current tolerance (convergence criterion) | numerical |
| Absolute charge tolerance (convergence criterion) | numerical |
| Transient analysis start time | numerical |
| Transient analysis stop time | numerical |
| Transient analysis minimum time step | numerical |
| Transient analysis maximum time step | numerical |
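The simulation-option features mix categorical and numerical values, so they have to be mapped to numbers before any of the regression models can use them. A minimal sketch, assuming one-hot encoding for the categorical options and a log10 transform for the numerical ones (tolerances and time steps typically span many orders of magnitude); neither encoding is stated in the text, and the snake_case keys and example values below (such as `errpreset` with `liberal`/`moderate`/`conservative`) are hypothetical stand-ins for rows of the table.

```python
import math

# Assumed lower clamp so that a value of 0 (e.g. a transient start time)
# still yields a finite log10 feature.
LOG_FLOOR = 1e-15


def encode_options(options, categorical_vocab, numerical_keys):
    """Flatten one simulation's options into a list of floats.

    options: {feature_name: value} for a single simulation.
    categorical_vocab: {feature_name: [allowed values in a fixed order]}.
    numerical_keys: ordered list of numerical feature names.
    """
    features = []
    # One-hot block per categorical option; an unseen value gives all zeros.
    for name, values in categorical_vocab.items():
        value = options.get(name)
        features.extend(1.0 if value == v else 0.0 for v in values)
    # log10 of each numerical option, clamped at LOG_FLOOR.
    for name in numerical_keys:
        x = float(options[name])
        if x < 0:
            raise ValueError(f"{name} must be non-negative, got {x}")
        features.append(math.log10(max(x, LOG_FLOOR)))
    return features


vocab = {"errpreset": ["liberal", "moderate", "conservative"]}
numeric = ["reltol", "tran_start"]
print(encode_options({"errpreset": "moderate", "reltol": 1e-3, "tran_start": 0.0}, vocab, numeric))
```

Fixing the vocabulary order up front keeps every encoded vector the same length across simulations. The log transform makes a change from `1e-3` to `1e-4` as large a step as a change from `1e-6` to `1e-7`, which is usually closer to how such tolerances affect run time than their raw values are.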

| Model | MSE | Correlation |
|---|---|---|
| Commercial tools | 29.561 | 0.521 |
| Random Forest | 9.887 | 0.827 |
| Support Vector Regression | 13.891 | 0.804 |
| Neural network (Standard MSE) | 2.163 | 0.942 |
| Neural network (Custom MSE) | 0.942 | 0.939 |
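The comparison table reports two metrics. Assuming "Correlation" denotes the Pearson correlation coefficient between predicted and actual values (the table does not say), both can be computed as in the sketch below; the helper names `mse` and `pearson` are illustrative. The example also shows why the two columns need not move together, as in the last two rows: a prediction that is off by a constant has a perfect correlation but a non-zero MSE.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error between actual and predicted values."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson(y_true, y_pred):
    """Pearson correlation coefficient between actual and predicted values."""
    n = len(y_true)
    if n != len(y_pred) or n < 2:
        raise ValueError("need at least two paired values")
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    if var_t == 0 or var_p == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_t * var_p)


# A constant offset of +1: perfect correlation, but the MSE is 1.0.
actual = [1.0, 2.0, 3.0]
predicted = [2.0, 3.0, 4.0]
print(mse(actual, predicted), pearson(actual, predicted))  # 1.0 1.0
```

Because correlation ignores constant offsets and scale, it rewards models that rank jobs correctly, while MSE rewards models whose predicted values are numerically close; reporting both, as the table does, captures each aspect.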

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, Y.; Chen, H.; Zhou, M.; Yu, F. A Machine Learning Approach for the Forecasting of Computing Resource Requirements in Integrated Circuit Simulation. Electronics 2023, 12, 95. https://doi.org/10.3390/electronics12010095

Wu Y, Chen H, Zhou M, Yu F. A Machine Learning Approach for the Forecasting of Computing Resource Requirements in Integrated Circuit Simulation. Electronics. 2023; 12(1):95. https://doi.org/10.3390/electronics12010095

Chicago/Turabian Style: Wu, Yue, Hua Chen, Min Zhou, and Faxin Yu. 2023. "A Machine Learning Approach for the Forecasting of Computing Resource Requirements in Integrated Circuit Simulation" Electronics 12, no. 1: 95. https://doi.org/10.3390/electronics12010095

APA Style: Wu, Y., Chen, H., Zhou, M., & Yu, F. (2023). A Machine Learning Approach for the Forecasting of Computing Resource Requirements in Integrated Circuit Simulation. Electronics, 12(1), 95. https://doi.org/10.3390/electronics12010095