A Novel Approach for Classifying Brain Tumours Combining a SqueezeNet Model with SVM and Fine-Tuning

Abstract

1. Introduction

- It demonstrates the use of a hybrid deep learning model to detect early-stage brain cancers, which can expedite treatment and prevent the spread of malignant tissue.

- It demonstrates the accuracy of supervised and unsupervised classification of MRI brain tumours when features are extracted with a CNN.

- It shows that a hybrid deep learning classification method combining SqueezeNet and SVM produces more accurate results than conventional methods.

- It helps radiologists minimise errors when diagnosing cancers manually from magnetic resonance imaging (MRI) images, without invasive procedures.

2. Related Work

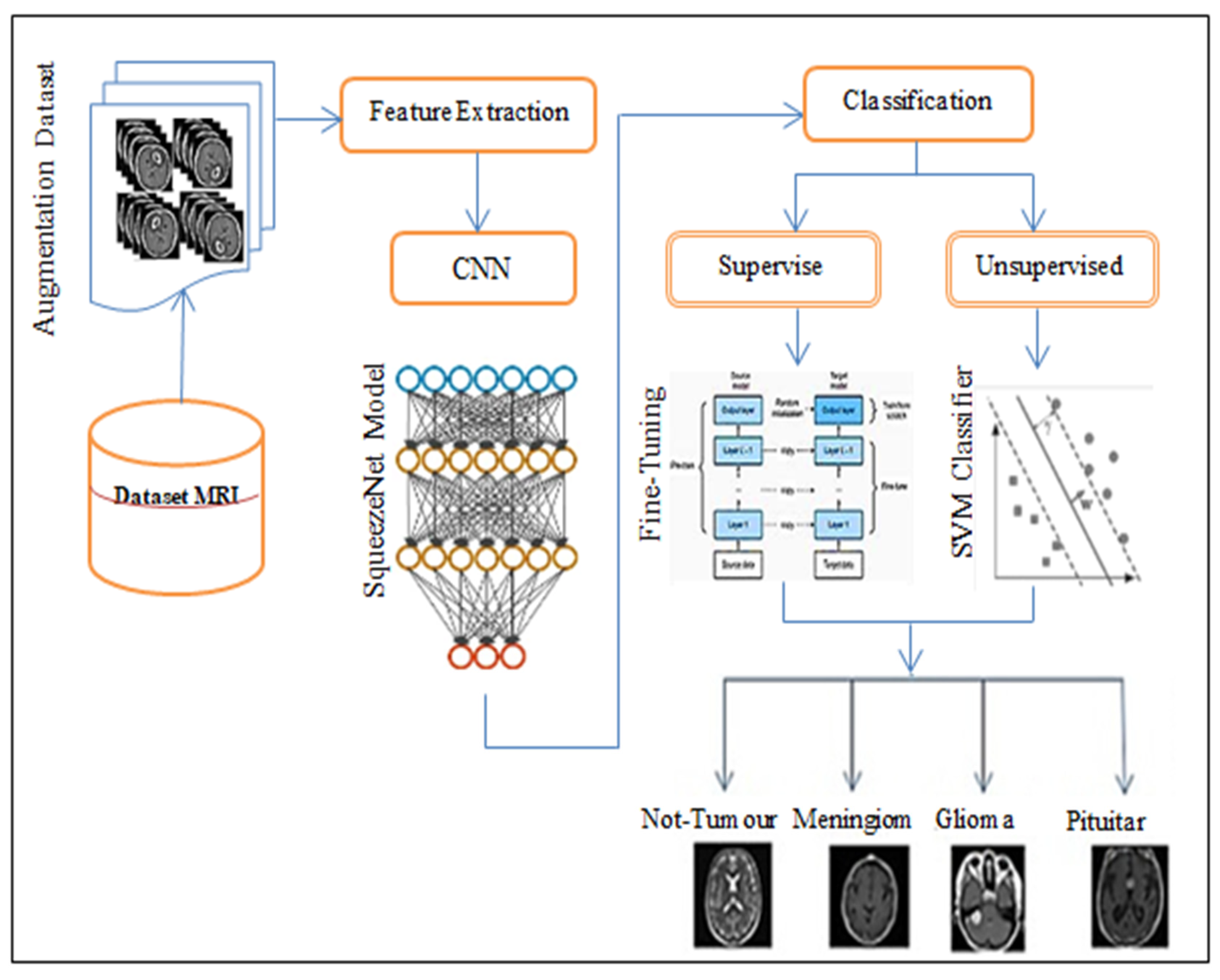

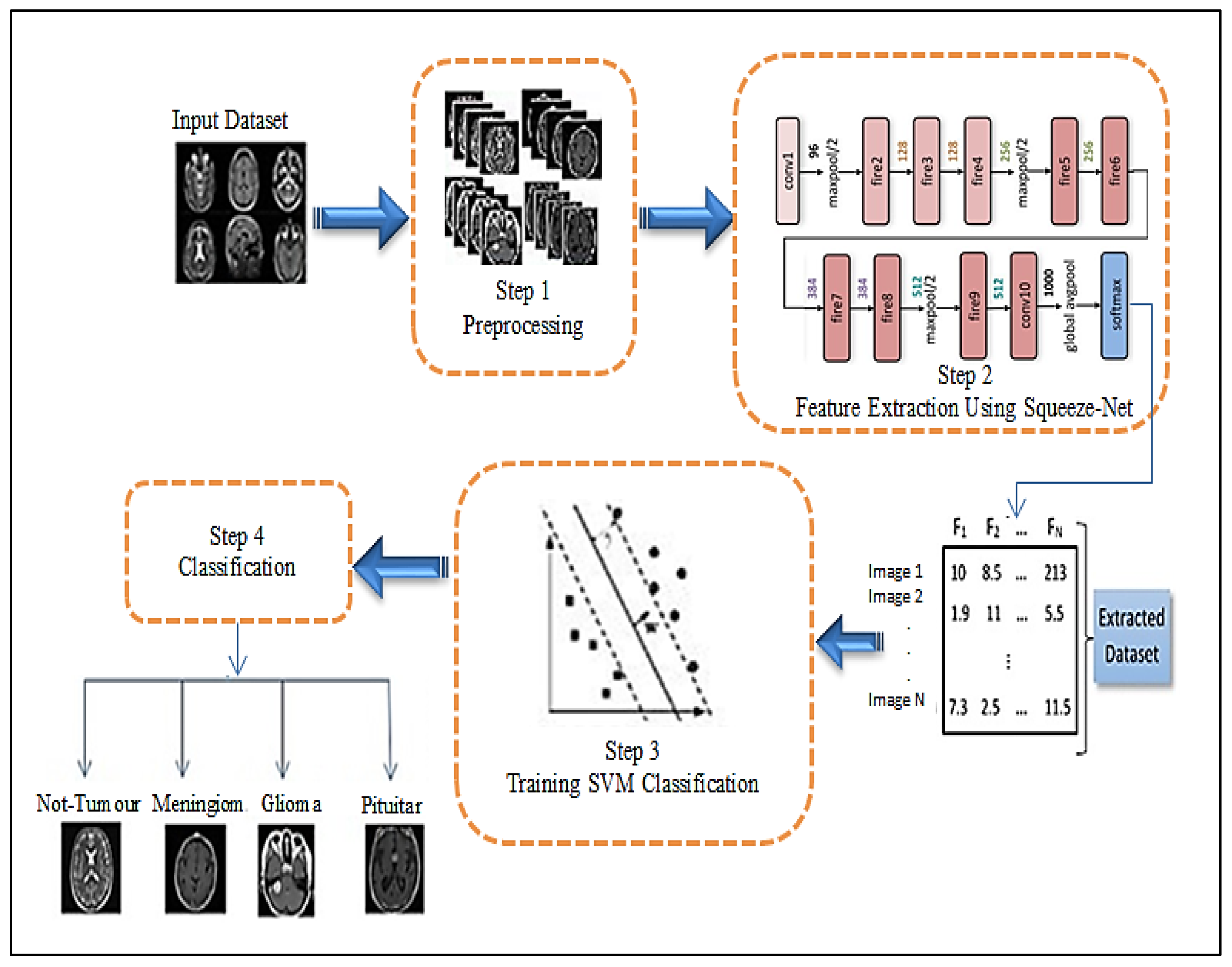

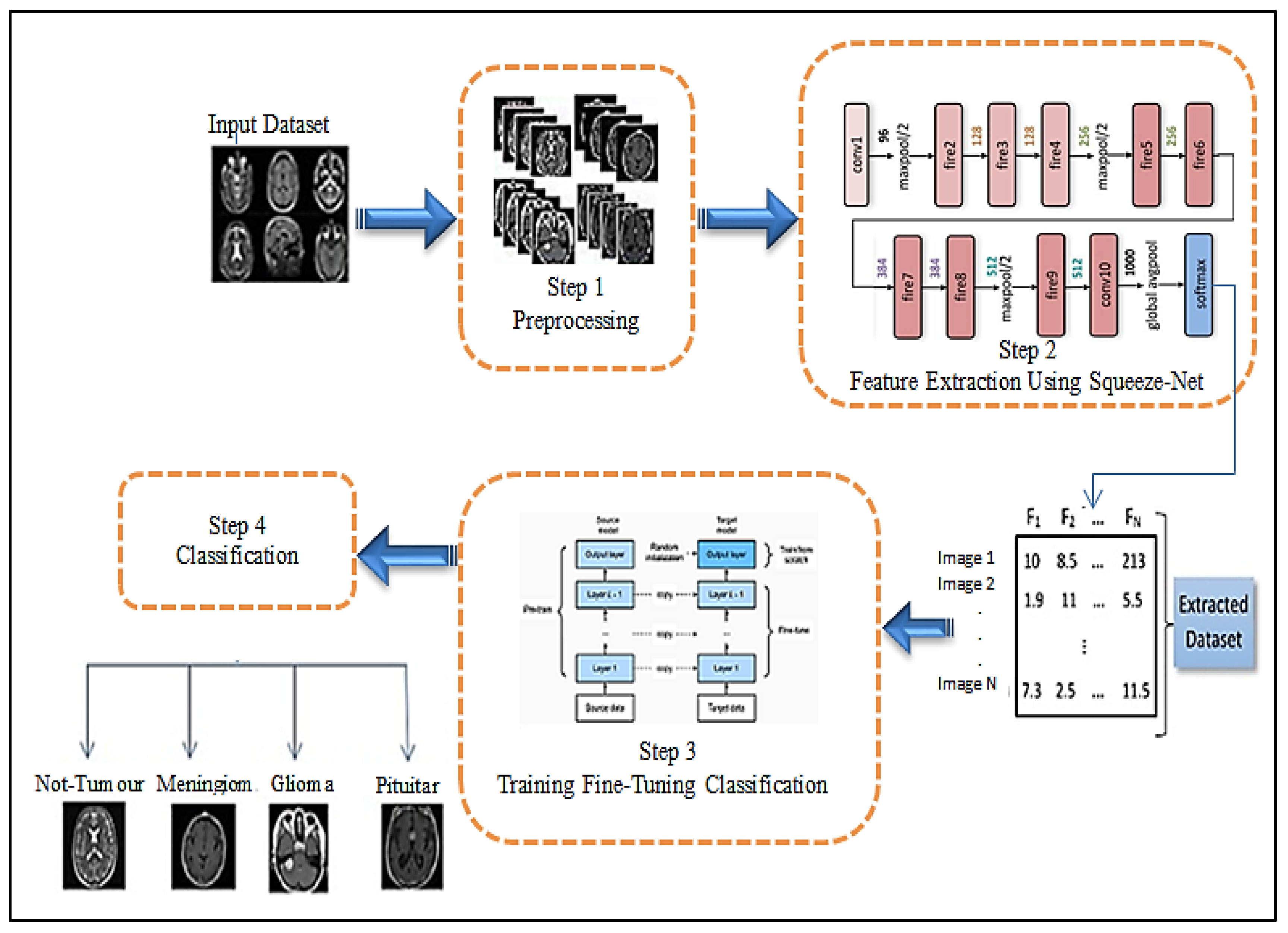

3. The Proposed Approach

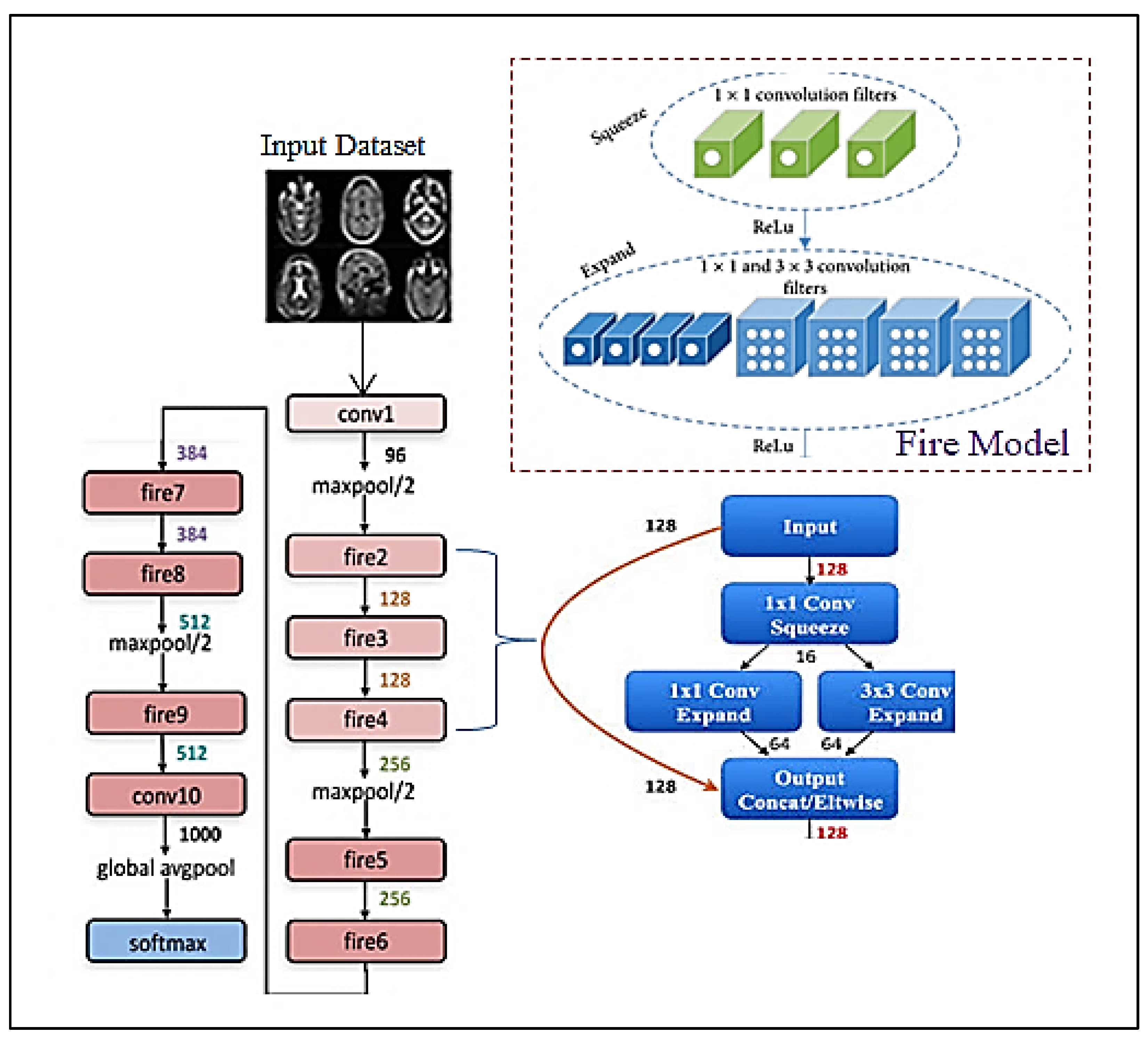

3.1. Features Extraction

3.2. Classification Subsection

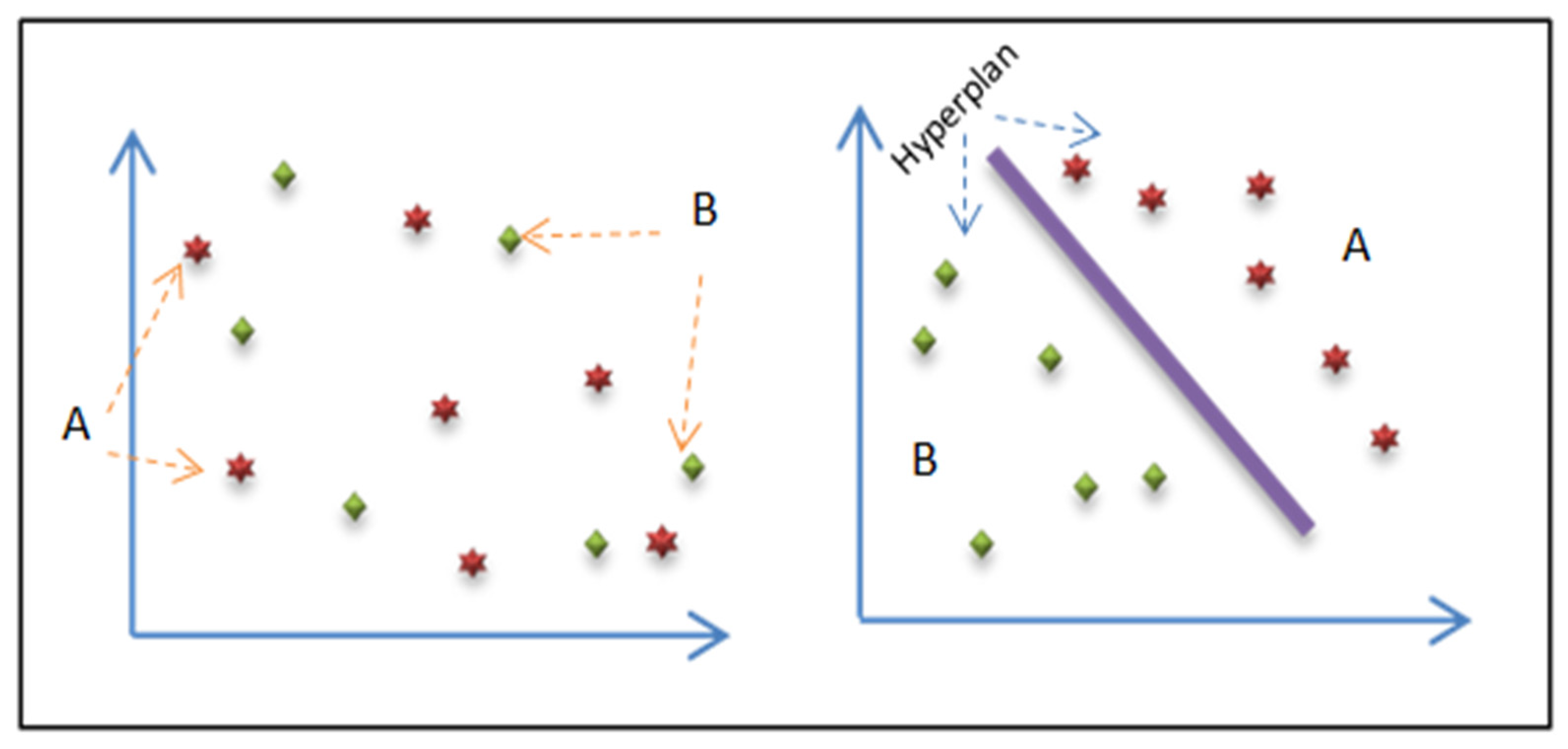

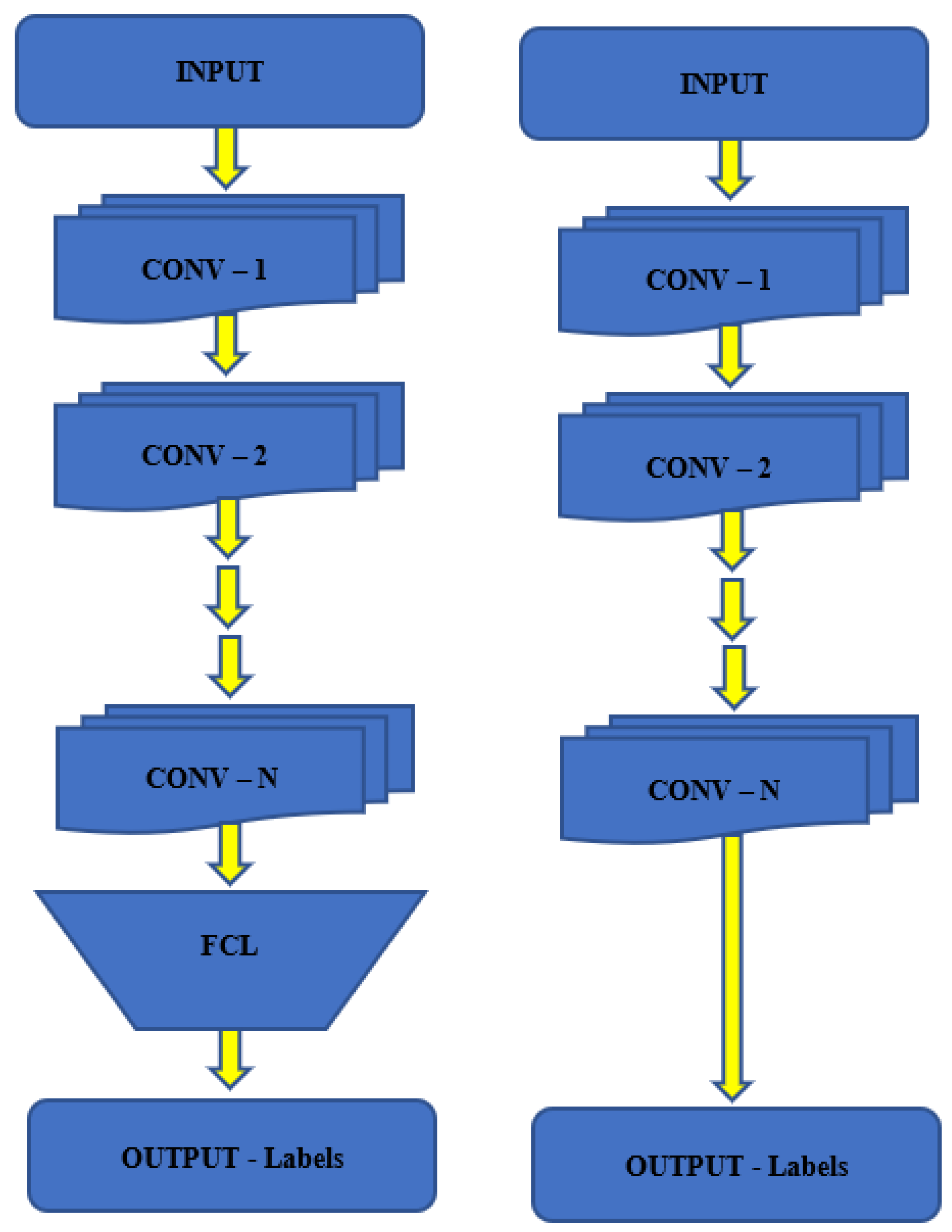

3.2.1. SN-SVM Model

- The basics of linearly separable classes;

- The extension to the non-linearly separable case using kernel functions;

- The application to non-linearly separable classes.

- Feature extraction step.

  - In the hybrid CNN-SVM model, the SqueezeNet model automatically generates features from the MRI brain image dataset.

  - The extracted features are passed to the fully connected layer (FCL).

- Classification step.

  - The SVM algorithm is applied to the fully connected layer (FCL), also called the soft-max layer.

  - The features are passed to the SVM module for training and testing on the MRI brain image dataset.

  - An RBF function is used as the kernel in the hybrid model.

  - Define the SVM kernel in the standard RBF form, $K(x_i, x_j) = \exp\left(-\gamma \lVert x_i - x_j \rVert^2\right)$, where $\gamma > 0$ controls the kernel width.

  - The objective has three main tumour classes:

    - Meningioma;

    - Pituitary;

    - Glioma.
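
The RBF kernel named in the classification step can be illustrated with a minimal, framework-free sketch. The feature vectors below are hypothetical stand-ins for SqueezeNet features, and `gamma=0.5` is an assumed value rather than a setting reported in this paper.

```python
import math


def rbf_kernel(x, y, gamma=0.5):
    """Standard RBF kernel: exp(-gamma * ||x - y||^2)."""
    if len(x) != len(y):
        raise ValueError("feature vectors must have the same length")
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * squared_distance)


def gram_matrix(features, gamma=0.5):
    """Pairwise kernel values, the matrix a kernel SVM solver works with."""
    return [[rbf_kernel(u, v, gamma) for v in features] for u in features]


# Hypothetical 3-dimensional feature vectors (not taken from the paper).
features = [
    [0.1, 0.9, 0.3],
    [0.2, 0.8, 0.4],
    [0.9, 0.1, 0.7],
]
K = gram_matrix(features)
```

Nearby feature vectors give kernel values close to 1 and distant ones give values close to 0, which is what lets the SVM separate classes that are not linearly separable in the original feature space.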

3.2.2. SN-FT Model

- Feature extraction step.

  - The SqueezeNet model automatically generates features from the human brain MRI image dataset, as in the hybrid CNN-SVM model.

  - The extracted features are passed to the fully connected layer (FCL).

- Classification step.

  - Load the pre-trained SqueezeNet CNN model (the model architecture and associated parameters are copied from the SqueezeNet model, except for the output layer).

  - Replace the pre-trained network’s last layer (soft-max) with a new output layer.

  - Add an output layer to the target model with as many outputs as there are categories.

  - Freeze the weights of some of the initial pre-trained layers, because these layers capture generic features such as curves and edges that are also relevant to the new task.

  - Train the new model structure while keeping the frozen weights fixed, so that the network learns dataset-specific features.

  - The output layer has four target classes:

    - Not-Tumour;

    - Meningioma Tumour;

    - Glioma Tumour;

    - Pituitary Tumour.
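
The freeze-then-train pattern in the steps above can be sketched with a framework-free toy. This is not SqueezeNet: `FROZEN_WEIGHTS`, the two-dimensional inputs, the learning rate, and the epoch count are all hypothetical, chosen only to show a frozen feature layer feeding a new four-output soft-max layer that is the only part being trained.

```python
import math
import random

CLASSES = ["not_tumour", "meningioma", "glioma", "pituitary"]

# Hypothetical "pre-trained" first layer: 3 hidden units over 2 inputs.
# Stored as tuples so training cannot modify it (the frozen part).
FROZEN_WEIGHTS = ((0.5, -0.2), (0.1, 0.8), (-0.7, 0.3))


def frozen_features(x):
    """ReLU features from the frozen layer, plus a constant bias input."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, x)))
              for row in FROZEN_WEIGHTS]
    return hidden + [1.0]


def softmax(scores):
    """Numerically stable soft-max over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(head, x):
    """Class probabilities from the frozen features and the new head."""
    feats = frozen_features(x)
    return softmax([sum(w * f for w, f in zip(row, feats)) for row in head])


def train_new_head(samples, labels, n_classes, lr=0.1, epochs=100, seed=0):
    """Train only the new output layer with SGD on cross-entropy loss."""
    rng = random.Random(seed)
    n_inputs = len(FROZEN_WEIGHTS) + 1  # hidden units + bias
    head = [[rng.uniform(-0.01, 0.01) for _ in range(n_inputs)]
            for _ in range(n_classes)]
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            feats = frozen_features(x)
            probs = predict_proba(head, x)
            for k in range(n_classes):
                # Gradient of cross-entropy with respect to score k.
                grad = probs[k] - (1.0 if k == y else 0.0)
                for j in range(n_inputs):
                    head[k][j] -= lr * grad * feats[j]
    return head


# Hypothetical toy inputs, one per class (not MRI data).
samples = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [1.0, 1.0]]
labels = [0, 1, 2, 3]
head = train_new_head(samples, labels, n_classes=len(CLASSES))
probs = predict_proba(head, samples[0])
```

In a deep learning framework the same idea is usually expressed by disabling gradient updates for the early layers and swapping the final layer for one sized to the four target classes.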

4. Results and Discussion

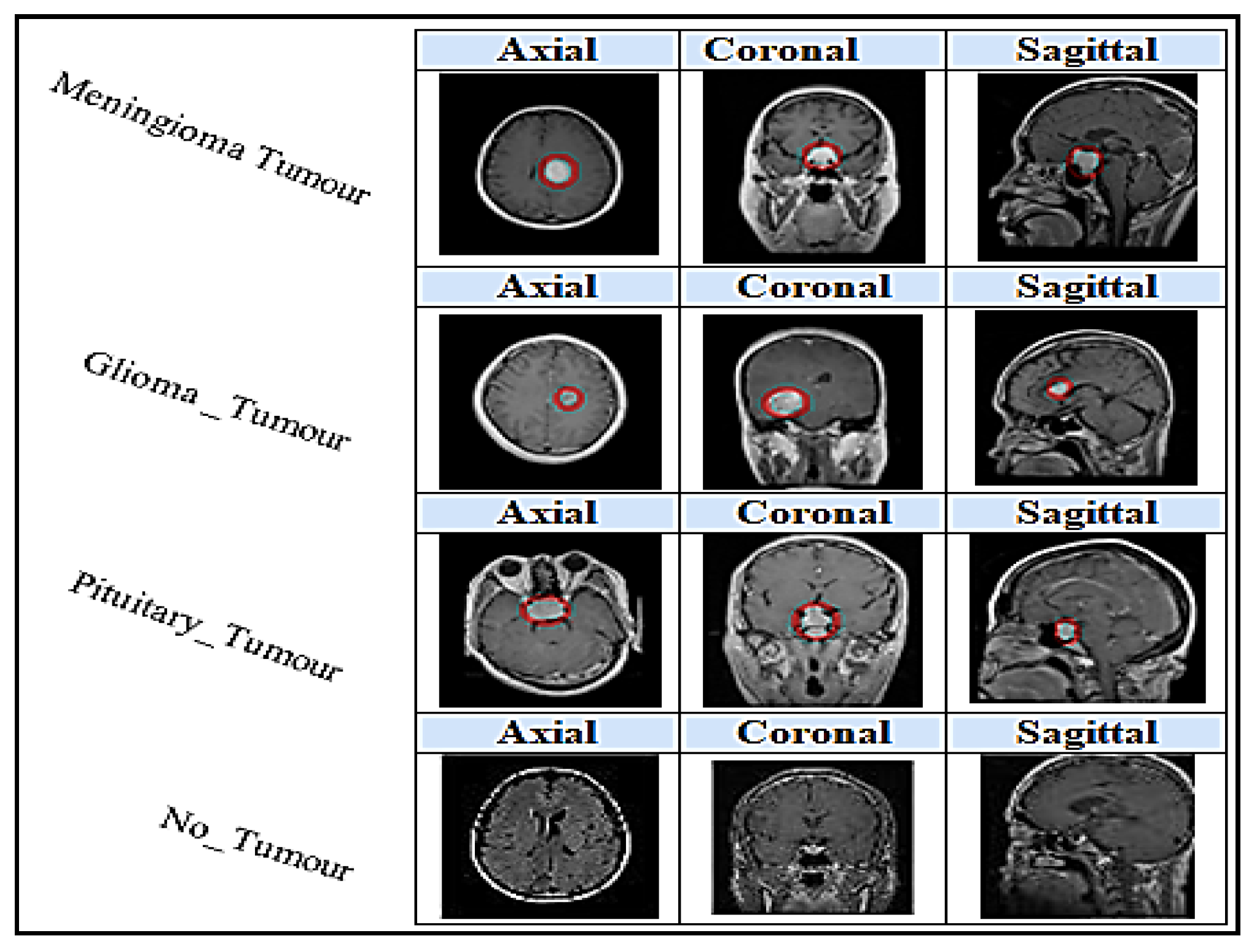

4.1. Dataset

4.2. Evaluation Measures

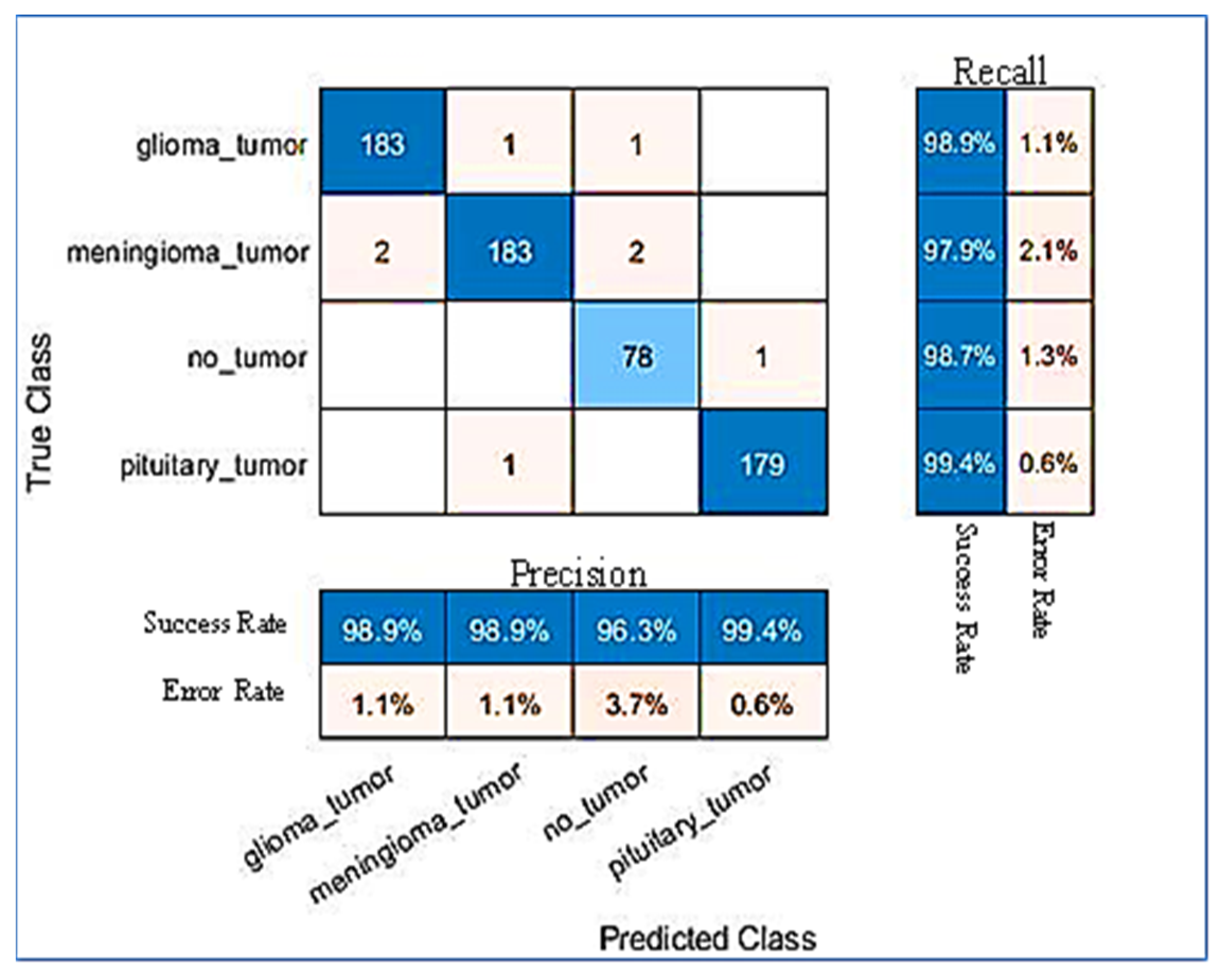

4.3. Performance Analysis

4.4. Model Evaluation Using Public Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Rasool, M.; Ismail, N.A.; Boulila, W.; Ammar, A.; Samma, H.; Yafooz, W.M.; Emara, A.H.M. A Hybrid Deep Learning Model for Brain Tumour Classification. Entropy 2022, 24, 799.

2. Nayak, D.R.; Padhy, N.; Mallick, P.K.; Zymbler, M.; Kumar, S. Brain Tumor Classification Using Dense Efficient-Net. Axioms 2022, 11, 34.

3. Pradhan, A.; Mishra, D.; Das, K.; Panda, G.; Kumar, S.; Zymbler, M. On the Classification of MR Images Using “ELM-SSA” Coated Hybrid Model. Mathematics 2021, 9, 2095.

4. Wild, C.P.; Stewart, B.W.; Wild, C. World Cancer Report 2014; World Health Organization: Geneva, Switzerland, 2014.

5. Reddy, A.V.; Krishna, C.; Mallick, P.K.; Satapathy, S.K.; Tiwari, P.; Zymbler, M.; Kumar, S. Analyzing MRI scans to detect glioblastoma tumor using hybrid deep belief networks. J. Big Data 2020, 7, 1–17.

6. Nayak, D.R.; Padhy, N.; Mallick, P.K.; Bagal, D.K.; Kumar, S. Brain Tumour Classification Using Noble Deep Learning Approach with Parametric Optimization through Metaheuristics Approaches. Computers 2022, 11, 10.

7. Mansour, R.F.; Escorcia-Gutierrez, J.; Gamarra, M.; Díaz, V.G.; Gupta, D.; Kumar, S. Artificial intelligence with big data analytics-based brain intracranial hemorrhage e-diagnosis using CT images. In Neural Computing and Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 1–13.

8. Rehman, A.; Naz, S.; Razzak, M.I.; Akram, F.; Imran, M. A deep learning-based framework for automatic brain tumors classification using transfer learning. Circuits Syst. Signal Process. 2020, 39, 757–775.

9. Kaus, M.R.; Warfield, S.K.; Nabavi, A.; Black, P.M.; Jolesz, F.A.; Kikinis, R. Automated segmentation of MR images of brain tumors. Radiology 2001, 218, 586–591.

10. Jayadevappa, D.; Srinivas Kumar, S.; Murty, D. Medical image segmentation algorithms using deformable models: A review. IETE Tech. Rev. 2011, 28, 248–255.

11. Tiwari, A.; Srivastava, S.; Pant, M. Brain tumor segmentation and classification from magnetic resonance images: Review of selected methods from 2014 to 2019. In Pattern Recognition Letters; Elsevier: Amsterdam, The Netherlands, 2020; Volume 131, pp. 244–260.

12. Gosavi, D.; Dere, S.; Bhoir, D.; Rathod, M. Brain Tumor Classification Using GLCM Features and Neural Network. In Proceedings of the 2nd International Conference on Advances in Science & Technology (ICAST), Mumbai, India, 8–9 April 2019.

13. Giraddi, S.; Vaishnavi, S. Detection of Brain Tumor using Image Classification. In Proceedings of the 2017 International Conference on Current Trends in Computer, Electrical, Electronics and Communication (CTCEEC), Mysore, India, 8–9 September 2017; pp. 640–644.

14. Soofi, A.A.; Awan, A. Classification techniques in machine learning: Applications and issues. J. Basic Appl. Sci. 2017, 13, 459–465.

15. Sultana, J. Predicting Indian Sentiments of COVID-19 Using MLP and Adaboost. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 706–714.

16. Işın, A.; Direkoğlu, C.; Şah, M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016, 102, 317–324.

17. Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Z. Für Med. Phys. 2019, 29, 102–127.

18. Dheir, I.M.; Mettleq, A.S.A.; Elsharif, A.A.; Abu-Naser, S.S. Classifying nuts types using convolutional neural network. Int. J. Acad. Inf. Syst. Res. (IJAISR) 2019, 3, 12–18.

19. Abhinav, G. Deep Learning Reading Group: SqueezeNet. Available online: https://www.kdnuggets.com/2016/09/deep-learning-reading-group-squeezenet.html (accessed on 5 May 2021).

20. Gholami, A.; Kwon, K.; Wu, B.; Tai, Z.; Yue, X.; Jin, P.; Zhao, S.; Keutzer, K. SqueezeNext: Hardware-aware neural network design. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1638–1647.

21. Chappa, R.T.N.; El-Sharkawy, M. Squeeze-and-Excitation SqueezeNext: An Efficient DNN for Hardware Deployment. In Proceedings of the 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 6–8 January 2020; pp. 0691–0697.

22. Beheshti, N.; Johnsson, L. Squeeze U-Net: A memory and energy efficient image segmentation network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Virtual, 14–19 June 2020; pp. 364–365.

23. Latif, J.; Xiao, C.; Imran, A.; Tu, S. Medical imaging using machine learning and deep learning algorithms: A review. In Proceedings of the 2019 2nd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Sindh, Pakistan, 30–31 January 2019; pp. 1–5.

24. Nguyen, L. Tutorial on support vector machine. Appl. Comput. Math. 2017, 6, 1–15.

25. Sharma, R.; Sungheetha, A. An efficient dimension reduction based fusion of CNN and SVM model for detection of abnormal incident in video surveillance. J. Soft Comput. Paradig. (JSCP) 2021, 3, 55–69.

26. Bhavsar, H.; Panchal, M.H. A review on support vector machine for data classification. Int. J. Adv. Res. Comput. Eng. Technol. (IJARCET) 2012, 1, 185–189.

27. Renda, A.; Frankle, J.; Carbin, M. Comparing rewinding and fine-tuning in neural network pruning. arXiv 2020, arXiv:2003.02389.

28. Nagabandi, A.; Kahn, G.; Fearing, R.S.; Levine, S. Neural network dynamics for model-based deep reinforcement learning with model-free fine-tuning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 7559–7566.

29. Gong, K.; Guan, J.; Liu, C.-C.; Qi, J. PET image denoising using a deep neural network through fine tuning. IEEE Trans. Radiat. Plasma Med. Sci. 2018, 3, 153–161.

30. Dash, A.K.; Mohapatra, P. A Fine-tuned deep convolutional neural network for chest radiography image classification on COVID-19 cases. Multimed. Tools Appl. 2022, 81, 1055–1075.

31. Cheng, J. Brain Tumor Dataset. Available online: https://figshare.com/articles/dataset/brain_tumor_dataset/1512427/5 (accessed on 2 April 2017).

32. Kadam, A.; Bhuvaji, S.; Bhumkar, P.; Dedge, S.; Kanchan, S. Brain Tumor Classification (MRI). Available online: https://www.kaggle.com/sartajbhuvaji/brain-tumor-classification-mri (accessed on 5 May 2021).

33. Alqudah, A.M.; Albadarneh, A.; Abu-Qasmieh, I.; Alquran, H. Developing of robust and high accurate ECG beat classification by combining Gaussian mixtures and wavelets features. Australas. Phys. Eng. Sci. Med. 2019, 42, 149–157.

34. Alqudah, A.M.; Alquraan, H.; Qasmieh, I.A.; Alqudah, A.; Al-Sharu, W. Brain Tumor Classification Using Deep Learning Technique - A Comparison between Cropped, Uncropped, and Segmented Lesion Images with Different Sizes. arXiv 2020, arXiv:2001.08844.

35. Díaz-Pernas, F.J.; Martínez-Zarzuela, M.; Antón-Rodríguez, M.; González-Ortega, D. A deep learning approach for brain tumor classification and segmentation using a multiscale convolutional neural network. Healthcare 2021, 9, 153.

36. Badža, M.M.; Barjaktarović, M.Č. Classification of brain tumors from MRI images using a convolutional neural network. Appl. Sci. 2020, 10, 1999.

37. Hashemzehi, R.; Mahdavi, S.J.S.; Kheirabadi, M.; Kamel, S.R. Detection of brain tumors from MRI images base on deep learning using hybrid model CNN and NADE. Biocybern. Biomed. Eng. 2020, 40, 1225–1232.

38. Sajja, V.R. Classification of Brain Tumors using Fuzzy C-means and VGG16. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 2103–2113.

39. Tazin, T.; Sarker, S.; Gupta, P.; Ayaz, F.I.; Islam, S.; Monirujjaman Khan, M.; Bourouis, S.; Idris, S.A.; Alshazly, H. A Robust and Novel Approach for Brain Tumor Classification Using Convolutional Neural Network. Comput. Intell. Neurosci. 2021, 2021, 2392395.

40. Khalil, M.; Ayad, H.; Adib, A. Performance evaluation of feature extraction techniques in MR-Brain image classification system. Procedia Comput. Sci. 2018, 127, 218–225.

41. Leo, M.J. MRI Brain Image Segmentation and Detection Using K-NN Classification. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2019; Volume 1362, p. 012073.

42. Khawaldeh, S.; Pervaiz, U.; Rafiq, A.; Alkhawaldeh, R.S. Noninvasive grading of glioma tumor using magnetic resonance imaging with convolutional neural networks. Appl. Sci. 2018, 8, 27.

| Layer No | Layer | Layer Name | Layer Properties |
|---|---|---|---|
| 1 | Conv1 | Image Input | 224 × 224 × 1 images |
| | | Convolutional | (1 × 1) filters instead of (3 × 3), with stride [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 2 | Max Pooling | Max Pooling | (3 × 3) max pooling with stride [2, 2] and padding [0 0 0 0]. |
| 3 | Fire2 | Convolutional | 96 (1 × 1) filters instead of (3 × 3), with stride [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 4 | Fire3 | Convolutional | 128 (1 × 1) filters instead of (3 × 3), with stride [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 5 | Fire4 | Convolutional | 128 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 6 | Max Pooling | Max Pooling | (3 × 3) max pooling with stride [2, 2] and padding [0 0 0 0]. |
| 7 | Fire5 | Convolutional | 256 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 8 | Fire6 | Convolutional | 256 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 9 | Fire7 | Convolutional | 384 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 10 | Fire8 | Convolutional | 384 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 11 | Max Pooling | Max Pooling | (3 × 3) max pooling with stride [2, 2] and padding [0 0 0 0]. |
| 12 | Fire9 | Convolutional | 512 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 13 | Dropout | Dropout | 50% dropout |
| 14 | Conv10 | Convolutional | 512 (3 × 3) conv with stride [2, 2] and padding [2, 2]. |
| | | Non-Linear Layer | ReLU activation |
| 15 | Max Pooling | Max Pooling | (3 × 3) max pooling with stride [2, 2] and padding [0 0 0 0]. |
| 16 | Fully Connected | Fully Connected | 1000 hidden neurons in a fully connected (FC) layer. |
| 17 | Soft-max | Soft-max | Soft-max |
| 18 | Output | Classification Output (4 classes) | Output classes: “1” for meningioma tumour, “2” for glioma tumour, “3” for a pituitary tumour, “4” for not-tumour. |
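
The effect of the pooling settings listed in the table on feature-map size can be worked out with the usual output-size formula. The sketch below is illustrative only: it assumes floor rounding and symmetric padding, and it applies the (3 × 3), stride-2, zero-padding pooling directly to a 224-pixel-wide map rather than reproducing the exact sizes inside the network.

```python
def output_size(input_size, kernel, stride, padding=0):
    """Spatial output size of a conv/pool layer (floor rounding assumed)."""
    if input_size + 2 * padding < kernel:
        raise ValueError("kernel does not fit inside the padded input")
    return (input_size + 2 * padding - kernel) // stride + 1


# (3 x 3) max pooling, stride 2, no padding, on a 224-pixel-wide map.
pooled = output_size(224, kernel=3, stride=2, padding=0)

# Repeating the same pooling roughly halves the width each time.
widths = [224]
for _ in range(3):
    widths.append(output_size(widths[-1], kernel=3, stride=2))
```

Each stride-2 stage roughly halves the spatial resolution, which is why only a few pooling layers are needed before the fully connected layer.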

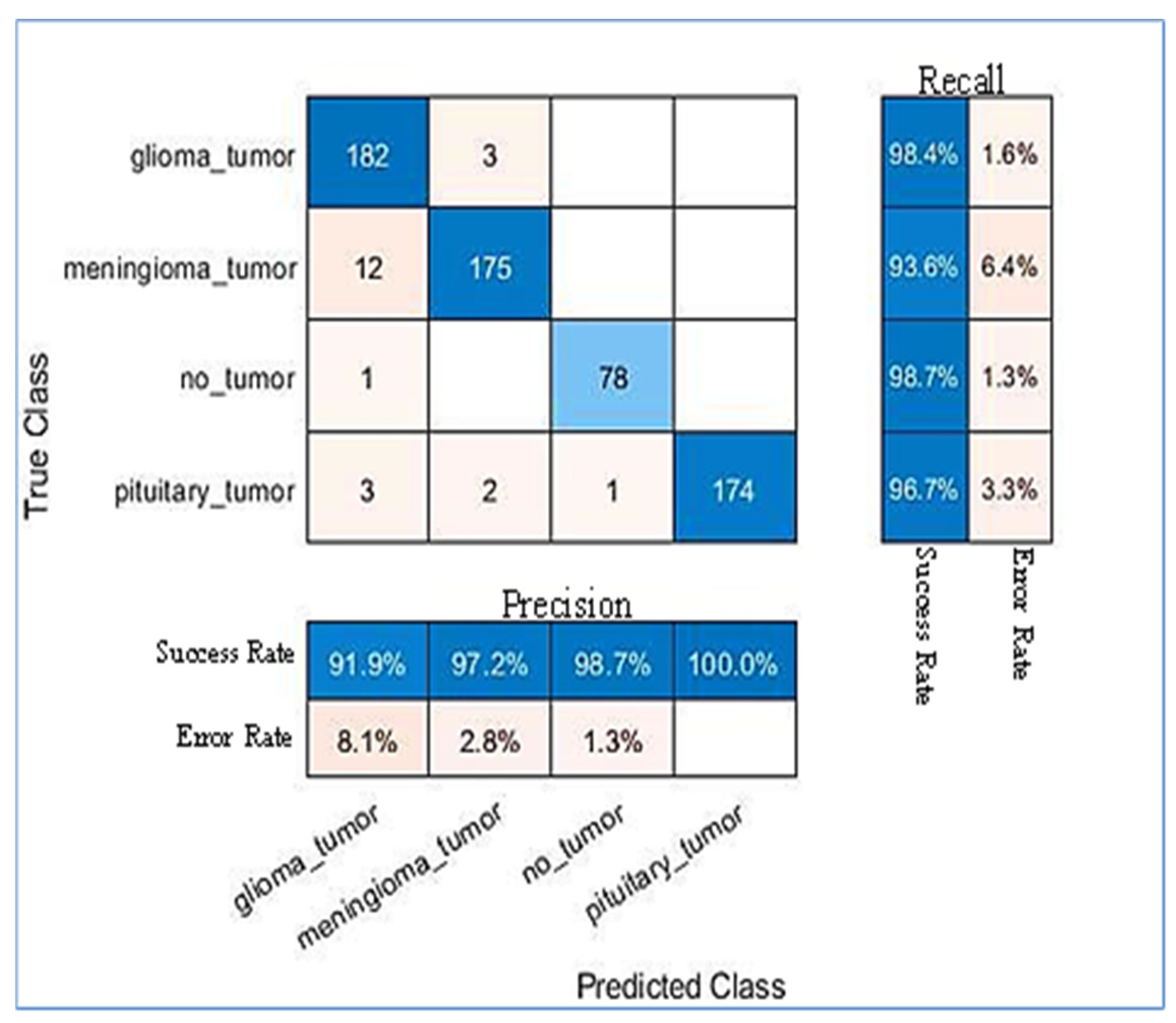

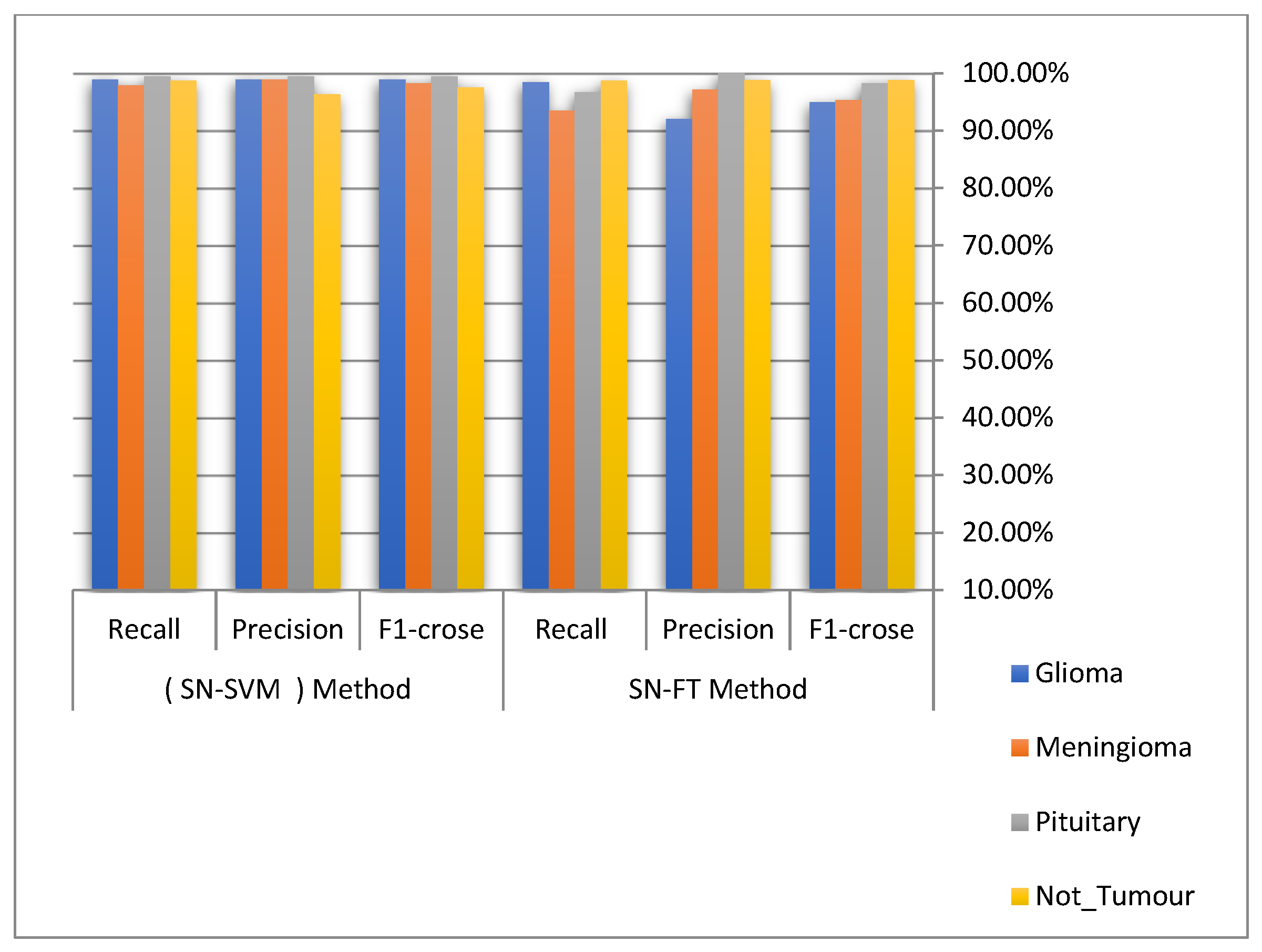

| Tumour Types | Recall (SN-SVM) | Precision (SN-SVM) | F1-Score (SN-SVM) |
|---|---|---|---|
| Glioma | 98.9% | 98.9% | 98.9% |
| Meningioma | 97.9% | 98.9% | 98.3% |
| Pituitary | 99.4% | 99.4% | 99.4% |
| Not_Tumour | 98.7% | 96.3% | 97.5% |

| Tumour Types | Recall (SN-FT) | Precision (SN-FT) | F1-Score (SN-FT) |
|---|---|---|---|
| Glioma | 98.4% | 92% | 95% |
| Meningioma | 93.5% | 97.2% | 95.3% |
| Pituitary | 96.7% | 100% | 98.3% |
| Not_Tumour | 98.7% | 98.8% | 98.8% |
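
The F1-score column can be cross-checked from the recall and precision columns, since F1 is the harmonic mean of the two. The short sketch below uses the SN-FT values from the table above; small differences from the reported figures are expected because the inputs are already rounded.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (recall %, precision %, reported F1 %) copied from the SN-FT table.
sn_ft = {
    "Glioma": (98.4, 92.0, 95.0),
    "Meningioma": (93.5, 97.2, 95.3),
    "Pituitary": (96.7, 100.0, 98.3),
    "Not_Tumour": (98.7, 98.8, 98.8),
}

for tumour, (recall, precision, reported) in sn_ft.items():
    computed = f1_score(precision, recall)
    print(f"{tumour}: computed {computed:.2f}%, reported {reported}%")
```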

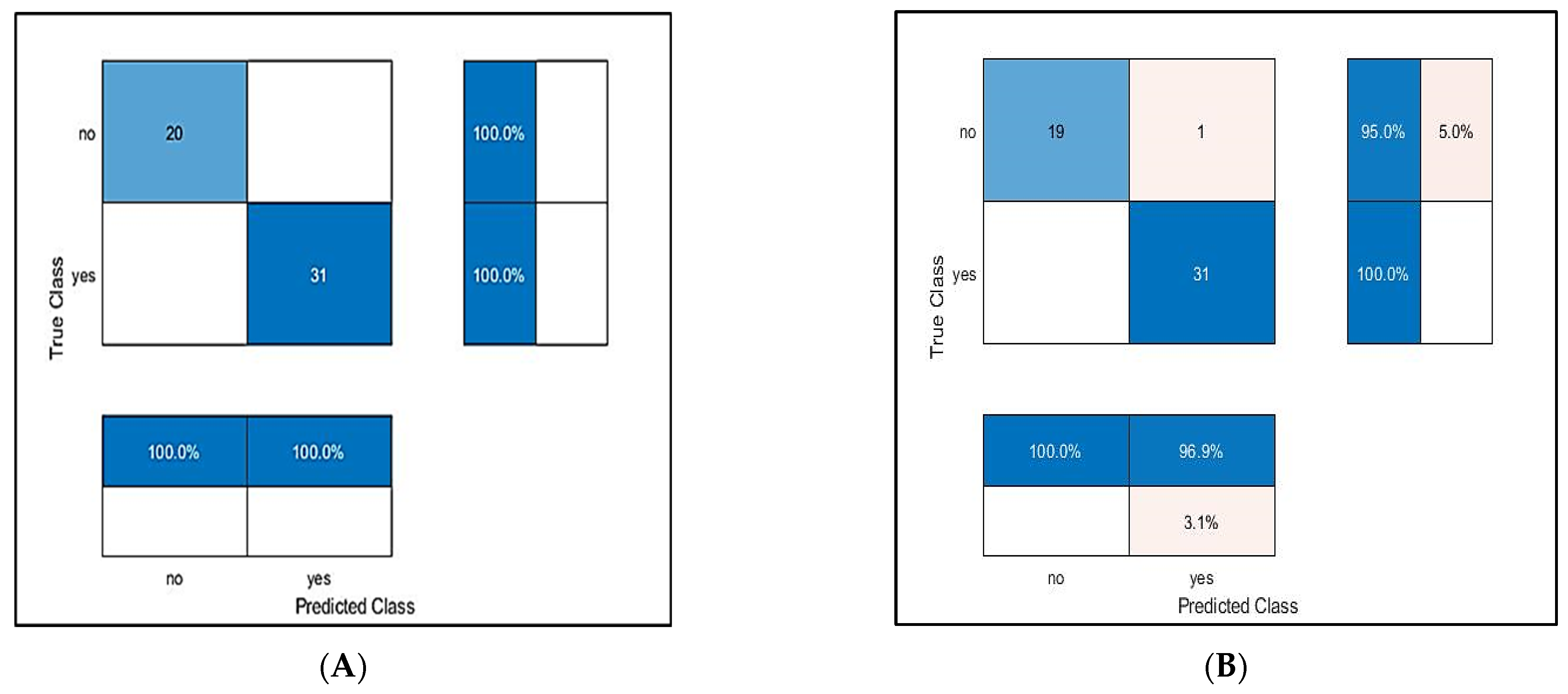

| Method | Recall | Precision | F1-Score | Error Rate | Accuracy |
|---|---|---|---|---|---|
| SqueezeNet with SVM (SN-SVM) | 98.7% | 98.3% | 98.5% | 1.3% | 98.7% |
| SqueezeNet with fine-tuning (SN-FT) | 96.8% | 97% | 96.8% | 3.5% | 96.5% |
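
The summary measures above can all be derived from a confusion matrix. The sketch below uses a hypothetical 4-class matrix, not the paper's results, and assumes unweighted (macro) averaging over classes, which appears consistent with the per-class and overall figures reported here.

```python
def summarise(confusion):
    """Accuracy, error rate, and macro-averaged recall and precision.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(n))
    recalls, precisions = [], []
    for i in range(n):
        actual = sum(confusion[i])                       # row total
        predicted = sum(confusion[r][i] for r in range(n))  # column total
        recalls.append(confusion[i][i] / actual if actual else 0.0)
        precisions.append(confusion[i][i] / predicted if predicted else 0.0)
    accuracy = correct / total
    return {
        "accuracy": accuracy,
        "error_rate": 1.0 - accuracy,
        "recall": sum(recalls) / n,
        "precision": sum(precisions) / n,
    }


# Hypothetical 4-class confusion matrix (not the paper's results).
confusion = [
    [50, 0, 0, 0],
    [1, 48, 1, 0],
    [0, 2, 47, 1],
    [0, 0, 0, 50],
]
summary = summarise(confusion)
```

The error rate is simply the complement of accuracy, which matches the table: 1.3% against 98.7% for SN-SVM and 3.5% against 96.5% for SN-FT.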

| Ref. | Method | Accuracy | Precision | F1-Score |
|---|---|---|---|---|
| Badža and Barjaktarović [36] | CNN | 96.56% | 94.81% | 94.94% |
| Hashemzehi et al. [37] | CNN and NADE | 96.00% | 94.49% | 94.56% |
| Díaz-Pernas et al. [35] | Multi-scale CNN | 97.00% | 95.80% | 96.07% |
| Sajja [38] | Deep-CNN (VGG16) | 96.70% | 97.05% | 97.05% |
| Tazin et al. [39] | MobileNetV2 | 92.00% | 92.50% | 92.00% |
| Proposed method | SN-SVM | 98.73% | 98.39% | 98.56% |
| Proposed method | SN-FT | 96.5% | 96.8% | 96.8% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rasool, M.; Ismail, N.A.; Al-Dhaqm, A.; Yafooz, W.M.S.; Alsaeedi, A. A Novel Approach for Classifying Brain Tumours Combining a SqueezeNet Model with SVM and Fine-Tuning. Electronics 2023, 12, 149. https://doi.org/10.3390/electronics12010149
