Abstract

Accelerated advances in science and technology drive the need for professionals with flexible problem-solving abilities who can work in collaborative environments. These advances challenge educational institutions to develop learning environments that meet this need. Additionally, the fast pace of technology and innovative knowledge is encouraging universities to employ challenge-based learning (CBL) approaches in engineering education supported by modern technology such as unmanned aerial vehicles (UAVs) and other advanced electronic devices. Within the framework of competency-based education (CBE) and CBL, this work presents the design, implementation, and evaluation of an intensive 40 h elective course that includes a 5-day challenge to promote the development of disciplinary and transversal competencies in undergraduate engineering students, relying on UAVs as the medium in which the teaching–learning process takes place. Within this credit course, a case study was carried out under an exploratory mixed-methods educational research approach that sought a broad understanding of the studied phenomena using various data collection instruments with quantitative and qualitative characteristics. An innovative academic tool was introduced, namely a thematic UAV platform that systematically exposed students to the principles underlying robotic systems and the scientific method, thereby stimulating their intellectual curiosity as a trigger to solve the posed challenge. Moreover, students came up with innovative teamwork-based solutions to a designed challenge while having an enjoyable and motivating time flying drones on an indoor obstacle course that they arranged themselves. The preliminary findings may contribute to the design of other CBL experiences, supported by technology applied for educational purposes, which could promote the development of more disciplinary and transversal competencies in future engineers.

1. Introduction

Society needs professionals with problem-solving competencies to change and improve ongoing paradigms. Recent debates about this suggest that it is not enough to have the knowledge; the knowledge must be applied through skills in order to solve significant real-world problems within communities. As a result, teaching–learning approaches have been studied, examined, and researched to respond to new social demands; in universities, competency-based education (CBE) is one course of action that is being applied.

CBE concentrates on developing the disciplinary and transversal competencies that students will need in their professional lives. Some competencies are specific to a study program, whereas others are transversal, i.e., common to all educational programs. Therefore, the main objective is to provide integral competencies across all educational programs. As key players in educating professionals under CBE, universities around the world have aligned their missions and visions with the demands and needs of society. Moreover, CBE focuses on developing competencies through a well-defined, planned, interdisciplinary process [1]. In this context, each educational program has a set of graduate competencies that range from a basic to an advanced level. In addition, CBE benefits the graduates’ profiles: it promotes self-managed learning, integral and transversal preparation, the ability to solve real-life problems outside the academic context, and more clarity about what the graduate can do [2,3].

The CBE paradigm implies major adjustments to the teaching–learning process, affecting the teaching methodology, the profiles of the students and teachers, how the course content is delivered, when and where the teaching–learning process is carried out, the evaluation instruments (rubrics), and the evidence that supports the mastery level for each defined competency [4]. For these reasons, the design and execution of a CBE program require a comprehensive effort that must involve both experts in the discipline and people with formal training in educational innovation. Furthermore, Johnstone and Soares [5] pointed out that CBE functions with teachers who act as mediators guiding and orienting the students in their learning rather than as lecturers; moreover, students must be proactive and responsible for the development of their competencies throughout their academic program.

The designed challenges are the means to develop the specified competencies, and they include activities, tasks, and situations that specify deliberate effort to achieve mastery [6]. Moreover, the challenge becomes a stimulus that requires a high-order mental endeavor. Hence, the set of well-planned challenges within the context of CBE comprises what is called challenge-based learning (CBL) [7,8]. According to Johnson et al. [9], CBL is a teaching–learning approach adjusted to develop competencies through real-world challenges where problem-solving is more enriching than traditional classroom assignments. In addition to challenges, CBL is formed with well-defined learning modules (theory and/or practice) [10,11,12].

Efforts have been reported to improve the teaching–learning process using drones. Such endeavors include the use of simulators, whilst in other cases, they are a mixture of simulators with development platforms, or only the hardware part. They have been applied within K-12 education [13] and at universities as well. They have been single activities or complete courses, where manual control of the drone and autonomous sequences have been included.

Drone simulators are an option to improve programming skills and can be applied as an activity or throughout a complete course. Different software platforms that emulate the dynamics of drone flight have been developed [14]. In some cases, these simulators have been applied in isolated activities, but they are also part of courses with credits [15]. Along this line, one of the most outstanding products is a simulation platform that improves programming skills in K-12 education. The solution includes the modeling and control of the drone’s dynamics. In addition, activity feedback was gathered through a survey [16]. The reported results also include the use of hardware platforms, sometimes complemented with simulation software. Whether used in a single activity, a set of activities, or a complete course, drones are a stimulus that motivates students to develop science–technology–engineering–mathematics (STEM) competencies prior to college. These efforts have been at the level of proposals [17,18] or implementations [19,20,21,22]. In these deployments, the focus is mainly on the activities to be carried out rather than on a research methodology serving as the theoretical frame from which the activities arise. However, there is a reported 6-week course during which high school students carry out the graphical programming of drones within a research methodology formulation with declared competencies. This course did not cover the dynamics of drones or the simulation of the automatic control system because it was aimed at K-12 education [23].

At the college and undergraduate levels, the focus has been the design and construction of drones in credit courses. For example, the process of building a drone has been reported as an educational proposal [24]. There is also the record of an implemented course where the students designed and built a test-rig, although the activities did not include the system’s dynamics modeling [25]. Another contribution is a 10-week engineering capstone course, where students implemented a drone from scratch [26].

Furthermore, for university engineering programs, drone platforms have been used to encourage the development of intellectual curiosity, teamwork and disciplinary skills related to automation, programming, sensors, actuators, among others. Laboratory proposals to familiarize oneself with the drone’s dynamics and its automatic control [27] have been described; in addition, the design of a toolkit to be applied to project-oriented-learning activities [28], as well as complete courses (from design to flight tests) [29] have been reported. Other results are implementations of activities and complete courses with simulated scenarios or real prototypes [30,31,32,33,34,35]. These activities have included components such as: calibration; computer-aided design (CAD); sensors; actuators; automatic control; and trajectory tracking.

An educational proposal to develop competencies as part of an intensive 40 h course with curricular credits is the so-called I-week (Innovation Week). Each elective course involves 8 h daily work in a 5-day time-frame. For students, an I-week represents 5% of the total grade of all enrolled courses in the academic period (semester) in which the I-week is carried out. I-week activities are designed by one or more academics before undergoing institutional review stages until they are approved by an independent expert committee. The design starts with a workshop, then an interactive design-evaluation process is executed until the challenge is approved or discarded. Finally, the activity is announced to the students, and if they meet the course’s prerequisites, they voluntarily enroll according to their preferences and availability on each campus. All I-week challenges have objectives, competencies to develop, a description of activities, calendar, products to deliver, and an evaluation rubric. It is important to mention that the average preparation time for an I-week activity is six months [36,37].

All reported outcomes are relevant because they contribute to improving the teaching–learning process and the skills developed by students. As part of a continuous improvement process, opportunity areas were detected in these contributions. Some reported efforts did not develop a research methodology, whereas others did not have a set of competencies to develop. Furthermore, most of them did not consider an introduction to the modeling and control of drones, included only a single activity, or were limited to graphical programming with a reduced list of commands. These gaps define the contribution of this work and justify the research effort.

The contribution of this investigation is the design and implementation of an evidence-based, educational, pilot elective credit course for undergraduate engineering programs. The 40 h intensive course is a motivating learning experience within the CBL frame. The addressed challenge in this case study attempts to develop intellectual curiosity while fostering defined disciplinary competencies. The activities to be carried out by the students are part of a broader study that seeks to improve the teaching–learning process, and the study has an exploratory approach with mixed-methods educational research. Although the deployments did not include a control group, the information generated from the data collection instruments supports the guiding hypothesis. The applied research methodology goes beyond a purely quantitative instrument and its sample size because it includes a qualitative component based on collecting field notes and generating examination records. Within the framework of an I-week focused on developing intellectual curiosity skills, and to the best of the authors’ knowledge, there is only one reported work with some comparable characteristics, but it involved a different activity and a different research methodology and was carried out over one academic semester [38].

This paper is organized into six sections. Section 1 puts into context the relevance of this innovative educational research, the literature review, and the contribution. Section 2 describes the research methodology. Section 3 discusses the design and deployment of the course’s activities. Section 4 presents the experimentation results and findings, whereas Section 5 presents the discussions and validity. Section 6 summarizes the conclusions and future research actions.

2. Definitions and Research Methodology

This section presents the guidelines that provide a structure and formality to the exploratory research effort and the focal point of the project. First, the research problem, the scope, and the empirical hypothesis are given; subsequently, the research plan defines the participants, instruments, workflow diagram, and other aspects relevant to the studied phenomenon for this hands-on pedagogical methodology.

2.1. Research Topic and Scope

This investigation was classified as applied research [39] with an experimental and descriptive scope [40]. The aim was to obtain preliminary results that could contribute to a hypothesis generated from the domain of CBL within CBE. The problem is defined in the context of a society that needs professionals who master pertinent competencies required to solve real complex challenges. Because some problems are not precisely defined until the engineer examines them, it is valuable to have competent professionals able to build upon their previous learning with a research approach to arrive at innovative solutions. In addition, during this project, the descriptive feedback of the participants was an important consideration of the experiment.

Descriptive and experimental scopes have a very important value in innovative educational research. From the professors’ and students’ viewpoints, the independent variable, the intensive CBL immersion supported by technology, is established to represent the stimulus for dependent variables, i.e., the development of competencies [41].

The empirical guiding hypothesis is that the implementation of a CBL immersion (cause) in which undergraduate engineering students solve an unmanned aerial vehicle (UAV) challenge (effect) contributes to the development of disciplinary and transversal competencies (outcome). This hypothesis guided this I-week instance, which sought to yield evidence supporting it.

2.2. Methodology

This inquiry, which includes design, implementation, and result analysis of an I-week, was substantiated using the scientific method in accordance with the educationally oriented mixed-methods approach [39]. The research technique was mixed, wherein the quantitative and qualitative methods carry the same importance. On the one hand, the quantitative aspect analyzes a possible cause–effect relationship among variables and the findings come from statistical analysis; on the other hand, the qualitative elements focus on describing and understanding possible relationships among the variables. Additionally, the participants’ viewpoints and the investigators’ observations are key aspects to consider. It is beneficial to combine quantitative and qualitative approaches to generate more fruitful research, rather than being limited to a single method [41].

From a qualitative perspective, the experiment complied with internal validity: variation in the dependent variables can be attributed only to the independent variable, because measures were taken to counteract the main sources of internal invalidation [40]. Specifically, all the participants experienced the same events in compliance with the Helsinki Declaration [42], and the survey was designed by experts from the Academic Vice-rectory of the University without any participation from the teachers.

2.2.1. Participants

The universe of potential participants was composed of all students enrolled between the 6th and 9th semesters in bachelor’s degree programs in mechatronics, mechanics, electronics, computer systems, and information technologies at Tecnológico de Monterrey. From this set, the sample consisted of 16 students, as shown in Table 1. The largest subset of the sample came from the mechatronics program, and there was no explicit recruitment effort from the authors. The sample size did not alter the CBL design because the university provided the necessary facilities; the only exception was that students brought their own laptop computers. Moreover, for the experimental protocol, the volunteers complied with the inclusion and exclusion criteria.

Table 1. Sample characteristics.

In a qualitative approach, the researchers have a more active role during the process, and so it happened in this inquiry where the professor–researchers guided the students throughout the entire activity, continuously listening and ensuring that their advances were aligned with the ongoing experiment [39].

2.2.2. Instruments

The professors took field notes (individual and group) during the execution of the experiment; anonymous individual surveys were answered by the students, and their records were examined to assess the development of competencies throughout the execution of the challenge. Together, these instruments provided evidence to identify the students’ features such as creativity, innovation, and motivation.

At the end of the week, the students answered (individually and anonymously) the institutional I-week activity survey, a 13-item questionnaire that included a subjective rating of the teachers’ performance and of the activity itself. Each closed-ended item was rated on a scale from 0 (worst) to 10 (best). All the questions included the option "not enough information to evaluate" in case the student felt that there were not enough elements to rate a specific item. The 13 items of the survey comprised twelve closed-ended questions and one open-ended question. The closed-ended questions constituted the quantitative part of the data collection, although some of them also related to the qualitative instrument because they invoked self-analysis about the students’ commitment (attitude). The closed-ended items are listed as follows:

- The teacher/mentor clearly established what was expected of me during the development of the activity.

- The teacher/mentor clearly explained the way to evaluate the activity.

- As for the guidance and advice I received from the teacher/mentor during the learning process, it was:

- The activities carried out allowed me to learn new concepts or apply already known theory.

- I believe that my attitude played a very important role in the development of the activity.

- Regarding the methodology, I had access to clear and concise explanations, innovative media and techniques or technological tools.

- There was time for meditation of acquired learning.

- I believe that the acquired learning can be applied in other situations.

- I realized how I can add value to the community, organizations, and society in general through the activity.

- Regarding the level of challenge, the activity was:

- For the next I-week edition, I would recommend this activity to my colleagues.

- How satisfied do you feel to have participated in this I-week.

The qualitative part of the survey consisted of one open-ended question, which was, “Why would I recommend/not recommend the I-week?” There was space allowed for the participant to write their point of view about how the I-week implementation affected their student experience.
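As an illustration of how the closed-ended ratings could be summarized, the following minimal Python sketch averages the 0 to 10 ratings of one item while leaving out "not enough information to evaluate" answers. The encoding of that option as `None`, the function name `item_mean`, and the sample ratings are assumptions made for this example; they are not taken from the institutional survey or from the study data.

```python
from statistics import mean
from typing import Optional, Sequence

# Assumption: the "not enough information to evaluate" option is stored as None.
NOT_ENOUGH_INFO = None


def item_mean(responses: Sequence[Optional[int]]) -> Optional[float]:
    """Average the 0-10 ratings of one survey item.

    Answers marked as "not enough information" are excluded.
    Returns None when no student rated the item.
    """
    valid = [r for r in responses if r is not NOT_ENOUGH_INFO]
    for rating in valid:
        if not 0 <= rating <= 10:
            raise ValueError(f"rating out of the 0-10 range: {rating}")
    return mean(valid) if valid else None


# Made-up ratings for illustration only (not data from the study).
example_item = [10, 9, NOT_ENOUGH_INFO, 8, 10]
print(item_mean(example_item))
```

Excluding the unrated answers, rather than counting them as zeros, keeps a student's lack of information from lowering the item average.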

Another qualitative assessment instrument was the researchers’ field notes coming from observations that highlighted the insights they gained into the studied phenomenon. At the end of each daily session, the researchers shared and discussed their observations about the progress of the experiment, and in some cases, their observations were sufficiently significant to make adjustments to the ongoing activity; these adjustments are further discussed in Section 4.2.

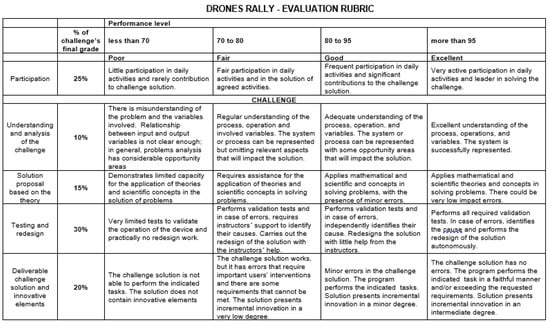

A third instrument consisted of the examination records (the deliverables—computer program and video evidence about the challenge solution) and the assessment rubric. In this rubric, the following aspects were evaluated:

- Attendance and participation;

- Understanding and analysis of the problem;

- Solution proposal based on the theory;

- Problem solution; and

- Testing and results analysis.

The evaluation rubric measures the degree of development attained by each student individually for each declared competency. This level of progress was assigned via collegial work by the challenge instructors. The first item evaluates the individual participation of the student throughout the I-week, i.e., the extent to which the student was active and made relevant contributions to the activities carried out during the week; this item counted for 25% of the final grade of the course. The other four components of the rubric refer to the activities directly related to the challenge, from the understanding and analysis of the problem to the challenge’s solution and testing, and they supported the evaluation of the competencies listed in Section 3.1. Challenge activities constituted 75% of the course grade.
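The 25%/75% weighting described above can be sketched in a few lines of Python. The text states only the two overall weights; the equal split of the 75% among the four challenge components, the 0 to 100 score scale, and the function name `course_grade` are assumptions made for this illustration and may differ from the rubric in Appendix A.

```python
from typing import Sequence

PARTICIPATION_WEIGHT = 0.25  # stated in the text
CHALLENGE_WEIGHT = 0.75      # stated in the text


def course_grade(participation: float, challenge_scores: Sequence[float]) -> float:
    """Combine rubric scores (assumed 0-100) into a course grade.

    Assumption: the four challenge components (analysis, proposal,
    solution, testing) share the 75% equally.
    """
    if len(challenge_scores) != 4:
        raise ValueError("expected exactly four challenge component scores")
    challenge_average = sum(challenge_scores) / len(challenge_scores)
    return PARTICIPATION_WEIGHT * participation + CHALLENGE_WEIGHT * challenge_average


# Hypothetical scores for one student (not data from the study).
print(course_grade(100, [80, 90, 70, 100]))
```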

From the rubric’s dimensions, the professor–researchers measured the degree of development of the declared competencies and gathered information about the degree of motivation displayed by the students, as well as their creativity and the degree of innovation in the implemented solutions. This assessment instrument was composed of the researchers’ records about the students’ performance and the final deliverable. Appendix A provides information about the challenge evaluation rubric. In summary, all the instruments were designed to collect evidence supporting the hypothesis, as described in Table 2.

Table 2. Relation between instruments and hypothesis.

Concerning the quality of the measurements, their reliability and validity were reviewed. The content and construct approaches underwent validation [40] and reliability [39] review. The criterion-related aspect was not revised because the results of the instrument were not compared with any external criteria. The summary of this qualitative analysis is presented in Table 3.

Table 3. Instruments—qualitative reliability and validity.

2.2.3. Workflow Diagram and Calendar

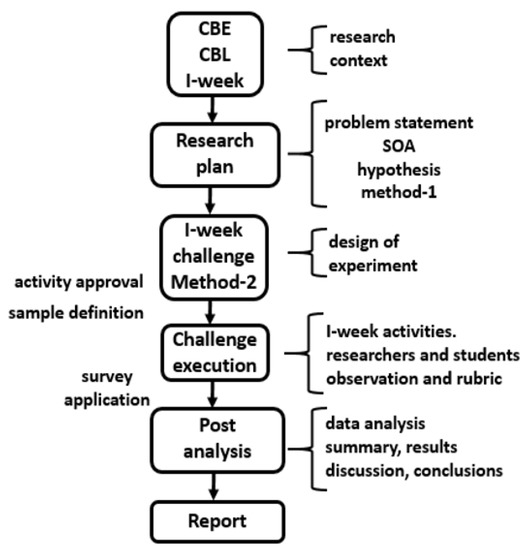

The experiment was defined from a CBE and CBL perspective and executed in September 2017. During the challenge, the researchers collected data using the previously specified instruments. At the end of the week, the student participants answered a survey, and afterwards, the data were analyzed to generate results and to arrive at conclusions. Figure 1 depicts the research process and Table 4 shows the project time schedule.

Figure 1. General research process flowchart.

Table 4. Research time schedule.

2.2.4. Budget

The activity was carried out in the Campus Sonora Norte facilities of Tecnológico de Monterrey, in Hermosillo, México. Some parts of the challenge were developed in a classroom with a capacity of 24 people, and some activities took place in a computer room; the flying tests were performed in open-roofed spaces. The challenge required no additional economic outlays; it relied on personal computers and a network hub that provided network access for the laptops.

UAVs were the most specialized equipment employed in the challenge. The campus provided 10 drones (five Parrot MAMBO mini-drones and five Parrot ARDRONE 2.0) to carry out the activity. There were, then, eight UAVs for students and two for testing by the teachers.

The software was either open source or freeware and was available at no cost. The UAV-related computer programs for all I-week activities were freeware downloaded from the official Parrot developer’s website, and the template testing programs came from GitHub [43], a platform on which software developers continuously publish and update both open-source and licensed programs [44]. Research centers and universities are part of the community that shares and downloads code there. In addition, the Linux and Windows operating systems were used on the computers of the teachers and students.

2.2.5. Assumptions and Limitations

This inquiry assumed that the institutional survey applied to the participants was reliable, valid, and relevant. Although no instrument validation was carried out during this inquiry, the survey was designed by academic experts independently of the professor–researchers. All students individually and anonymously answered the survey under ethical oath, i.e., they responded to each question alone in accordance with their honest perception without the influence of third parties; the participants’ anonymity was guaranteed under the Code of Ethics of the University.

Considering only the quantitative part of the research, the examination had limitations with respect to the participants. Even though a sample of 16 students may be considered small, the inclusion of instruments such as the field notes and the examination records provided a broad perspective by taking advantage of mixed-methods educational research rather than a purely quantitative approach [39,45]. In general terms, the sample was randomly selected; however, it was evident that the students who resided in the city where the experiment was conducted were more likely to participate in this “Drone Rally” activity. Another limitation was that this exploratory research work did not include a control group.

To the best of the authors’ knowledge, this is the first effort that includes multiple distinguishing features such as CBL in an I-week, specifically declared competencies, full immersion in a time frame of 40 h, knowledge not learned in any formal bachelor course, and drones. Because this was a pilot activity, the analysis did not include a control group or comparative statistics. The hypothesis guided the research, and although the exploratory nature of the research is evident, the preliminary results are relevant to the teaching–learning process in a technological CBL environment.

3. The Drone Rally I-Week Activity

The challenge design was based on the ideas of active learning education and the promotion of creativity. People learn when they experience, interpret, reason, and reflect on their experiences using prior knowledge [46]. The technological context that surrounds the activity is an environment wherein students experience, test, make mistakes, and learn, i.e., teachers and technology are partners in the learning process [47]. For instance, in this project, students learned within an experimental platform to program UAVs and solved the challenge provided to them. They generated and tested creative solutions through teamwork and under the instructors’ guidance.

This approach is divergent because it may result in several plausible solutions from one common challenge [48]. From the description of a couple of challenges, students developed their own versions of the solutions to the challenges. For 5 days, the students applied algorithms from the UAV research-oriented institutions to generate their own programs. With this experience, they developed their creativity through experimentation and the generation of innovative solutions using the scientific method to resolve a challenge involving the programming of aerial vehicles.

3.1. Designing the Challenge

The objective of the challenge was presented as follows: the student becomes sufficiently acquainted and competent with the programming of quadrotors to learn how to design an autonomous flight testbed. The declared competencies to be promoted during this activity were:

- Identification and resolution of engineering problems by proposing and validating models constructed using a research process, innovation, and the improvement of technological projects (disciplinary);

- Intellectual curiosity (transversal); and

- Collaborative work (transversal).

It is noteworthy that approximately 90% of the participants were not familiar with the foundations of this challenge, or even with the Linux Ubuntu operating system [49]. Moreover, the AR-drone 2.0 was programmed in JavaScript (through Node.js), whereas the Mambo unit was programmed using Python; both programming languages were previously unknown to almost all students who enrolled in this challenge.

3.2. I-Week Implementation

The students worked with two quadrotor drone platforms, namely the AR Drone 2.0 and Mambo platforms. They programmed routines, which included the autonomous tasks of taking-off, landing, and avoiding obstacles. The final products included: two videos (one for each drone model) with evidence of the autonomous trajectory and the source codes developed for each trajectory. The implementation of the I-week was divided into eight blocks executed over the span of 5 days. The description of each block is presented in the following subsections.

3.2.1. Opening and Introductory Activities

As a first step, the students were divided into teams of four people. Each team was responsible for taking care of its equipment, which consisted of a Parrot AR-drone 2.0, two batteries, the charger, and one Parrot Mambo. The students began with a demo using the Mambo and the Parrot smartphone app FreeFlight Mini [50]. This first interaction offered an intuitive way to pilot the quadrotor indoors while the researchers gave a brief explanation of how to fly it. After the first Mambo flight, the students used the Parrot app AR.FreeFlight version 2.4.15 (downloaded to their cellphones from the Android or Apple store) to pilot the AR-drone 2.0 [51]. The professors observed that, as digital natives, the students very easily became familiar with this technology even though it was new to them. Initially, it had been expected that more time would be required for the drone flying demonstration, but the agenda was modified to move faster, and the activity finished ahead of schedule.

In this first block, the students used the Mambo drone and learned the aerodynamic principles that explain how the rotors of the quadrotor provide the thrust that keeps it airborne and how differences in the angular speed of the rotors tilt the device, which consequently produces a translation of the quadrotor. With the AR-drone, they had their first experience with wind gusts and communication delays in outdoor flights. By this point, the students understood the physics, capabilities, and limitations of the experimental platform. In the outdoor flight tests, the students had some typical stability problems due to the strong wind gusts in the test arena and their novice-level piloting skills. All flight tests for the challenge were initially going to be performed outdoors, but because the wind negatively impacted the drones’ flight stability, the professor–researchers finally decided to use only indoor testing scenarios.
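The relation between rotor speed, thrust, tilt, and translation described above can be summarized with a common simplified planar model. The symbols below ($k_f$, $\omega_i$, $T$, $\theta$, $m$, $g$) and the sign convention are assumptions introduced for illustration; they are not taken from the course material.

```latex
\begin{align}
  f_i &= k_f\,\omega_i^{2}, \qquad T = \sum_{i=1}^{4} f_i, \\
  m\,\ddot{z} &= T\cos\theta - m g, \\
  m\,\ddot{x} &= T\sin\theta .
\end{align}
```

Here $f_i$ is the thrust of rotor $i$ spinning at angular speed $\omega_i$, $T$ is the total thrust, $m$ is the vehicle mass, and $\theta$ is the tilt angle. In hover, $\theta = 0$ and $T = mg$. Speeding up the rotors on one side relative to the other produces a nonzero $\theta$, and the horizontal component $T\sin\theta$ then accelerates the vehicle horizontally.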

3.2.2. Software Installation

The first activity of this block was to install the Oracle VM VirtualBox software [52] to virtualize the Ubuntu 14.04 operating system in a Windows environment. This Ubuntu Linux version was used because, after some tests, the virtual machine (VM) ran successfully with it, and the GitHub files used for the AR-drone 2.0 ran properly on that version of the operating system. After the students received a brief explanation of software and firmware engineering, they learned the correct setup for VirtualBox and installed all the required updates. They then carried out the steps to install and test the AR-drone 2.0 software development kit (SDK) [53]. With the SDK, the students gained access to the quadrotor sensor data and cameras; moreover, they were able to pilot the quadrotor using a joystick. Some basic commands of the SDK were taught, and by this point, the students started to understand the complexity of autonomously controlling the experimental platform.

During the pre-deployment tests, this approach worked properly, but during the challenge, the SDK had problems recognizing the USB ports of the computers in the cluttered environment of many active links in Windows. After some queries in software forums, the professors and students decided to use laptops with Ubuntu Linux as the sole operating system, which solved the port recognition problem. However, the delays caused by the port communication problems demanded changes in the challenge schedule. In the end, the students used the SDK to test the different ways in which the flight of the quadrotor can be controlled. This activity was important because it showed an advantage of the SDK: the cellphone application could not be used with a joystick. At this point, the students started to notice the advantages of deepening their own understanding of the experimental platforms.

3.2.3. Modeling and Control of a Quadrotor Using MATLAB-Simulink

The students received a formal session about quadrotor modeling, actuation, and how to solve the under-actuation problem for controlling the position of the quadrotor [54,55]. After the explanation, teachers taught the students how to program a simulator of the attitude and position of a quadrotor using Simulink. They applied several controllers, including a proportional–integral–derivative (PID) strategy, and some robust control strategies.
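As an illustration of the kind of controller mentioned above, the following minimal sketch simulates a PID altitude controller for a point-mass model of a quadrotor. The course used Simulink; this is a Python stand-in, and the gains, mass, time step, and function name are assumptions chosen only to make the example run, not values from the course material.

```python
def simulate_pid_altitude(kp=4.0, ki=2.0, kd=3.0, target=1.0,
                          mass=0.5, g=9.81, dt=0.01, steps=2000):
    """Simulate altitude tracking with a PID controller (semi-implicit Euler).

    The vehicle is reduced to a point mass moving along the vertical axis.
    All parameter values are illustrative assumptions.
    """
    z, vz, integral = 0.0, 0.0, 0.0
    prev_error = target - z          # avoids a derivative spike on the first step
    history = []
    for _ in range(steps):
        error = target - z
        integral += error * dt
        derivative = (error - prev_error) / dt
        prev_error = error
        # PID terms plus a gravity feed-forward give the commanded thrust (N).
        thrust = mass * (g + kp * error + ki * integral + kd * derivative)
        thrust = max(0.0, thrust)    # rotors cannot push the vehicle downward
        az = thrust / mass - g       # net vertical acceleration
        vz += az * dt
        z += vz * dt
        history.append(z)
    return history


altitudes = simulate_pid_altitude()
```

With these assumed gains, the simulated altitude overshoots the 1 m target and then settles on it within the 20 s horizon. Changing `kp`, `ki`, and `kd` shows how the tuning affects overshoot and settling time.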

In this block, the professors taught the students some basic robotic and control concepts such as degrees of freedom, under-actuation, and sliding mode control, all of which were previously unknown to the students. In addition, some remarks and explanations were given regarding MATLAB-Simulink to properly run the simulator. The final product they produced was a complex simulator that could even be used to perform research in robotics or control. All these content materials are graduate-level, and the studied articles and the results are not usually covered in engineering bachelor’s degree curricula, wherein case studies are generally restricted to systems with an input variable and an output variable; this activity demanded a high level of abstraction and study. Emphasis was placed on the scientific method to provide students with the knowledge they needed for this. They had to internalize it to move onto the next block.
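To make the idea of sliding mode control more concrete, the sketch below applies it to a one-dimensional double integrator (position driven by a commanded acceleration) subject to a bounded disturbance. This is a simplified textbook setting, not the simulator built in the course; the gains, the disturbance, and the `tanh` boundary layer used to soften the switching term are assumptions.

```python
import math


def simulate_smc(x_ref=1.0, lam=2.0, gain=2.0, phi=0.05, dt=0.001, t_end=10.0):
    """Drive a double integrator to x_ref with a sliding mode controller.

    Model: x'' = u + d, where d is a disturbance unknown to the controller
    and bounded by 0.5, which is smaller than the switching gain.
    """
    x, v, t = 0.0, 0.0, 0.0
    while t < t_end:
        e, e_dot = x - x_ref, v
        s = e_dot + lam * e                      # sliding surface s = e' + lam * e
        # Equivalent control (-lam * e') plus a smoothed switching term.
        u = -lam * e_dot - gain * math.tanh(s / phi)
        d = 0.5 * math.sin(t)                    # bounded disturbance
        v += (u + d) * dt
        x += v * dt
        t += dt
    return x, v


final_position, final_velocity = simulate_smc()
```

Because the switching gain exceeds the disturbance bound, the state is pushed onto the surface `s = 0` and then slides toward the reference despite the disturbance, which is the robustness property that distinguishes this strategy from a plain PID loop.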

3.2.4. Software Setup

After familiarizing themselves with the drones during manual flight tests performed through commercial smartphone-oriented human–machine interfaces (HMIs), the students downloaded and installed the libraries for the drones.

The node-ar-drone library is a module programmed in JavaScript (Node.js) which makes use of the AR-drone 2.0 SDK and simplifies its use thanks to an API client running in Ubuntu [56]. The use of the node-ar-drone library allowed the students to execute scripts on the laptop and thereby control the quadrotor automatically. Moreover, the students installed the AR-drone Autonomy library [57] and implemented some advanced scripts for autonomous navigation.

The flight was very motivating for the students because both libraries were experimental and needed testing for certain commands to work properly. They felt inspired knowing that they were using programming tools developed by other universities and research centers. In addition, the professor–researchers assisted the students in relating the course content material to the experimental data. At this point, the abstraction level required to associate the tuning procedure with the flight regime and data was at its core, and the professors took notes on the students’ development for the planning and design of future I-week activities.

For the Parrot-MAMBO UAV, the setup was performed in the Ubuntu Linux operating system. Students installed the Python-bluez and Python-pip packages to work with the Python language. Afterwards, the Bluepy library by Harvey [58] was set up to activate the Bluetooth communication module between the computer and the drone. Moreover, with the pymambo library provided by McGovern [59], the students developed the UAV programming interface to generate an autonomous flying sequence. With the setup ready, the students focused on testing the development libraries for later use in the code that they designed and implemented.
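An autonomous flying sequence of the kind mentioned above can be thought of as an ordered list of timed motion commands. The following hardware-free sketch plans such a sequence and estimates where the drone would end up by dead reckoning. The `FlightStep` class, the speeds, and the step names are hypothetical and do not reproduce the pymambo API; on a real drone, each step would instead be sent through the library's own commands.

```python
from dataclasses import dataclass


@dataclass
class FlightStep:
    """One timed motion command (hypothetical structure for illustration)."""
    name: str
    forward: float    # assumed forward speed, m/s
    lateral: float    # assumed sideways speed, m/s
    climb: float      # assumed vertical speed, m/s
    duration: float   # how long the command is held, s


def estimate_endpoint(steps):
    """Dead-reckon the final (x, y, z) position, ignoring wind, drift, and yaw."""
    x = y = z = 0.0
    for step in steps:
        x += step.forward * step.duration
        y += step.lateral * step.duration
        z += step.climb * step.duration
    return x, y, z


# A hypothetical course: take off, fly forward, sidestep an obstacle, land.
course = [
    FlightStep("takeoff", 0.0, 0.0, 0.5, 2.0),
    FlightStep("forward", 0.4, 0.0, 0.0, 5.0),
    FlightStep("sidestep", 0.0, 0.3, 0.0, 2.0),
    FlightStep("land", 0.0, 0.0, -0.5, 2.0),
]
end_x, end_y, end_z = estimate_endpoint(course)
```

Keeping the sequence as data separate from the code that executes it makes it easy to adjust durations between test flights, which matches the test-and-correct workflow described in this section.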

3.2.5. The Challenges

At this point, the students had obtained the necessary basics of quadrotor aerodynamic modeling, control, manual operation, and the required tools for autonomous flight and sensor monitoring. The teachers presented the final challenge for each UAV platform: the Parrot Mambo navigating through an obstacle course which was designed using classroom furniture and some extra tools; whilst for the AR-drone 2.0, the students relied on additional programming resources to meet the challenge of autonomous navigation in the cluttered environment. Furthermore, the students proposed adaptations to the challenge and to the obstacle course. For the rest of this activity, the professors assisted the students in designing the routine and encouraged them to explore and implement tools complementary to those studied and used in previous activities.

The students focused on experimenting, analyzing the results, and introducing appropriate changes to what they had accomplished. The educators’ main task in this phase was to advise the students on how to debug scripts, how to make the best use of their tools, and to give them insight into what they could accomplish with their projects.

3.2.6. Final Tests and Deliverables

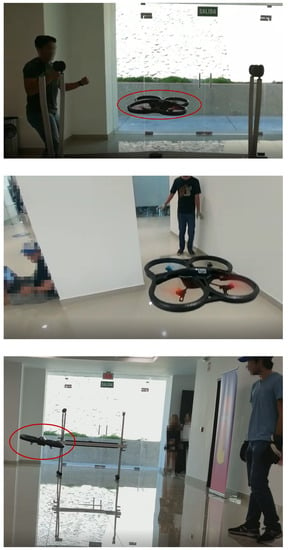

The last day was used to accomplish the final experiments for both drones. The participants performed autonomous routines, and the professors observed the progress of each team. After that, each team uploaded the videos with the result and the programming code for the quadrotors, and finally, the closing session of the I-week was held between the instructors and participants. Figure 2 shows some scenes of the intermediate and final tests with the ARDRONE 2.0 drone (in red ovals where required). Additionally, it can be seen that some tables were used to create obstacles during the tests. The images were edited to avoid revealing the identities of the students.

Figure 2.

Some scenes in which the students are testing the ARDRONE 2.0.

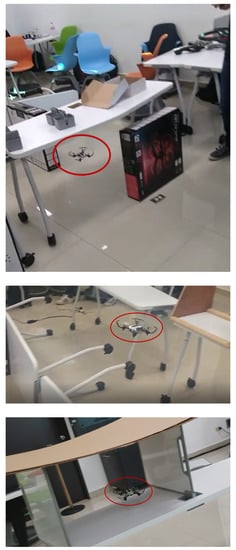

The other challenge demanded the use of the Parrot MAMBO drones. The students took boxes and classroom furniture to create the test tracks. Figure 3 shows some images of the final tests with the MAMBO drone. The test tracks were proposed and built by the students. They created two twin tracks, and thus doubled the time each team had to test their algorithms.

Figure 3.

MAMBO drone attempts on the test track (final day).

3.3. Data Analysis

This subsection explains how the analysis of data collected with each instrument was performed.

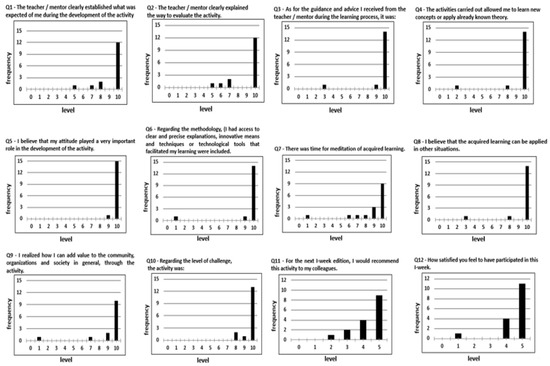

- Survey. The students’ answers to the survey yielded quantitative results, which were analyzed with descriptive statistics. For each of the first ten questions, the mode, mean, standard deviation, and percentage of “good” opinions (scores of 8.0 or greater) were obtained. Each of these questions was evaluated on a scale from 1 to 10. For example, for Q2, an answer of 1 implied that the instructor was not clear at all when explaining how the activity would be evaluated, and an answer of 10 indicated that the explanation of the evaluation was very clear. For questions 11 and 12, the same indices were calculated; however, the threshold for the percentage was 4.0 or greater because these items were defined on a scale from 0 to 5. Bar graphs with the frequency distribution of the scores were produced for all closed-ended items in the questionnaire and are shown in Appendix B. The frequencies for each question must add up to 16, the number of people who answered the questionnaire. Moreover, the comments in the open-ended question were classified as positive, negative, or neutral, and the field notes and examination records were analyzed based on their impact on the hypothesis.

- Field notes. They were taken during the development of the activities without interrupting their flow and independently of the participants. The researchers, who also acted as the instructors, collected direct observations from the participants such as: reactions, way of working, interactions, and emotions. Additionally, the researchers recorded field notes on how the activities were carried out. At the end of the day, these observations were discussed among the researchers to eliminate possible duplicate notes and to objectively document them. Field notes also helped make possible adjustments to the implementation of the activities to be carried out in the following days. After the closure of the I-week, the field notes that had a relevant impact on the investigation were filtered and organized to be included in the manuscript.

- Examination records. Constant and effective communication between the researchers was required to evaluate each student, as well as to assess their competencies by consensus. The activities were evaluated according to the rubric in Appendix A. To evaluate the individual and collaborative work, the researchers recorded each day the level of participation of each student and how they were contributing to the solution of the activities and the challenge. For the final deliverable, the teachers evaluated the code that each team programmed. The implemented solutions were reviewed and the contributions were highlighted. In addition, the review of resources, the contribution, and the developed intellectual curiosity were graded according to their degree of depth. The videos of the final deliverable were reviewed to complete the evaluation of the challenge.
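The descriptive statistics listed for the survey can be computed as in the sketch below. The response values are invented for illustration and are not the study's data. The use of the sample standard deviation is also an assumption, since the text does not state which variant was applied.

```python
from statistics import mean, multimode, stdev


def summarize(scores, good_threshold):
    """Return the mode(s), mean, sample standard deviation, and percentage
    of responses at or above the "good" threshold for one survey question."""
    n_good = sum(score >= good_threshold for score in scores)
    return {
        "mode": multimode(scores),
        "mean": mean(scores),
        "std": stdev(scores),
        "pct_good": 100.0 * n_good / len(scores),
    }


# Hypothetical answers from 16 students on the 1-10 scale (not real data).
example_scores = [10, 10, 10, 9, 10, 8, 10, 9, 10, 10, 7, 10, 9, 10, 10, 8]
summary = summarize(example_scores, good_threshold=8)
# summary["mode"] is [10], summary["mean"] is 9.375, summary["pct_good"] is 93.75
```

For questions 11 and 12, the same function would be called with `good_threshold=4` to reflect their shorter scale.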

4. Results and Findings

As listed in Table 2, the applied instruments were the survey, field notes, and examination records. The following subsections present the results obtained through the measurement instruments and state some findings and implications regarding the variables in the hypothesis.

4.1. Student Survey

The students answered a survey, and the mean, mode, and standard deviation were obtained for each of the instrument’s twelve questions. In addition, the results included the percentage of students who provided a favorable opinion, defined as a score greater than or equal to 8.0 in each question. Table 5 shows the results.

Table 5.

Mode (M), mean, standard deviation, and percentage of “good” opinions (≥8.0) for the first ten closed-ended questions of the students’ survey.

Table 5 highlights that the great majority of the students rated the activity with the maximum score (10.0 points), which caused practically all averages to be above 9.0. Moreover, in most cases, the standard deviation indicated a small data dispersion with few negative opinions. Hence, most of the participants stated a positive opinion about the implementation of the challenge and the instructors’ performance.

For the questions shown in Table 5, the results in Appendix B include the participants’ opinions that contributed to the hypothesis. In question 4, perceived that they learned entirely new concepts through the activity. In question 6, agreed that the challenge included innovative techniques and technological forms in the teaching–learning process. Other important aspects of this CBL included the special moments during which the students had to reflect upon (meditate on) the acquired learning; based on question 7, considered that they had those moments. Additionally, responded that they could extrapolate their learning to the solution of other problems (a CBL characteristic). Moreover, another observed CBL element was the level of challenge demanded to students; of the students perceived the activity to be challenging.

The last two questions of the student survey are shown in Table 6 and describe the overall students’ opinions. The results are presented in a separate table because they employ a different measurement scale. These queries were answered with the selection of responses ranging from 1 to 5 in a Likert-type scale format [40] that has a midpoint (neutral) and two opposite positions (extreme and moderate). Results included the mode, mean, standard deviation (), and percentage of “good” opinions. The results in Table 6 substantiate that the I-week challenge received a very positive acceptance among the students— would recommend signing up for this challenge. Moreover, felt satisfied (with a level of 4 or 5, in a range of 0–5) for having participated.

Table 6.

Mode (M), mean, standard deviation, and percentage of “good” opinions (≥4.0) for the overall performance questions (11 and 12) in the survey.

For the qualitative section of the survey, the following questions were asked: “Would I recommend this activity to my colleagues in the next I-Week?” and “Why would I recommend it or not recommend it?”. Although all 16 students answered the question, not all of them included comments. All their judgments are in Table 7, which classifies them (as either positive, negative, or neutral) according to the criteria of the researchers.

Table 7.

Comments associated with the questions Q11 and Q12 in the survey.

Table 7 shows eight positive comments, one negative, and one neutral, out of a total of ten. Positive comments focused on the qualifiers fun, interesting, challenging, learning something new, and multidisciplinary, whereas the areas of opportunity were the limitations on the drones’ flexibility and activity during the experimental phase.

4.2. Field Notes

The records made during the execution of the challenge were divided into two categories: (a) the direct observations of students’ reactions, interactions, and emotions, listed in Table 8; and (b) the field notes about the events that impacted the experiment as it was carried out, listed in Table 9.

Table 8.

Direct observations.

Table 9.

Field notes.

Most of the field notes shown in Table 8 contribute to supporting the hypothesis. The records included behaviors and reactions associated with the development of the two declared transversal competencies (collaborative work and intellectual curiosity), and the students’ motivation to solve the challenge. Motivation (individual and collective) was also perceived and recorded in notes. The notations made evident that this behavior was observed at different stages of the challenge. The comments and reactions of the students towards the tasks assigned and their dedication to reach the proposed goals provided evidence of the degree of motivation. Student motivation was especially evident during the last two days because they did not require external pressure to move forward to attain their goals.

The behaviors that demonstrated the cause–effect relationship between the implementation of the challenge and the development of collaborative work (teamwork) were observed throughout the week. In the teams, the students had to coordinate communication and tasks to reach the goal. Moreover, when a team member had questions, collaboration was encouraged so that comprehension could be attained and the course could be set; the professor–researchers observed the support from within the teams.

Observations also highlighted the intellectual curiosity of the participants. The instructors presented experimental platforms with limited capabilities and basic code modules that were tested in a shallow way, therefore:

- The students became familiar with these codes, applying them in the challenge and correlating them to formal concepts to which they were introduced;

- The participants also looked on their own for other experimental libraries; and

- The students were testing and modifying as they tried to solve their challenges. The researchers believed that these behaviors led to the intellectual curiosity in the students.

The following paragraphs expand and put into perspective the most important field notes related to the challenge progress shown in Table 9. They are presented in chronological order.

For the deliverable of the proposed solution, originally, the idea was to work with one drone per team at each step of the challenge, i.e., four persons working with a UAV. However, during the preliminary tests, some team members were distracted from the challenge objective because there were several students working simultaneously with a single drone. For this reason, the teachers set two drones per team during the challenge. With this modification, the final deliverable included two different drone models, each of which had its own required task, and two people were working per drone.

The previous field observations were very important in the daily closing sessions. At the end of the partial sessions (at noon) and after a daily wrap up, the teachers analyzed the progress of the challenge and the students’ performance, highlighting positive and negative aspects that might lead to possible adjustments. All the issues noted in the records were considered in consensual reviews by the professor–researchers.

To make the environment more pleasant, the students could listen to music, provided that they agreed on it so as not to annoy their classmates; no disturbance was observed. In addition, during their breaks, some of them played video games on their laptops. Apparently, this activity relaxed them a bit so that they could then continue with the challenge.

During the most intensive part of the challenge, the students performed multiple tests. They programmed and tested the flight sequences. They detected errors based on cause–effect analyses and then suggested a modified code. During this time, a lot of teamwork was observed in terms of error identification and correction under their own initiative; this was a clear indication of a typical behavior of motivation and collaboration to resolve the challenge.

The challenge related to the UAV MAMBO was the same for all teams. The drone needed to fly through a circuit from the starting point and reach the goal without collisions and without human intervention. As soon as this statement was communicated to the students, all the teams worked together and created the testing scenario without complaint. The agreement within and among the teams made the efforts very effective. Moreover, it was motivating that the students took the initiative and built a challenging scenario, i.e., they defined the degree of difficulty. The teachers judged that this behavior demonstrated a certain level of motivation and collaborative effort.

For the MAMBO drone challenge, one team had an almost complete result close to the deadline of the final solution. The researchers proposed to the students that their grades would depend upon the performance they had achieved. However, the students on this team preferred to continue the endeavor and complete the track as the other teams had done. Some minutes before the deadline, they did obtain the complete solution.

It is worth mentioning that one student exhibited a change in behavior as the challenge progressed. During the first two days, this person was barely engaged with the activities; however, when the VirtualBox problem occurred, this person volunteered to format their laptop and install Ubuntu Linux. After this event, the student increased their participation and contribution to the challenge solution, which was clear evidence of an improved attitude toward the implementation of the challenge.

4.3. Examination Records

The instrument includes the evidence of the challenge solution implemented by the students, and the videos demonstrate the solution provided by each team. It also includes a rubric and records about the performance of the students. For more details, refer to Appendix A.

The implemented programming codes display the work conducted to resolve the challenge. The coding shows how students employed the experimental libraries, how they modified them, and how they applied them to their situations. The videos are evidence of the challenge solutions provided by all the teams. With Parrot ARdrone 2.0, the students proposed their flight sequences, whereas with Parrot Mambo, they had to make their drones fly autonomously through a circuit, common to all.

Regarding the first dimension of the rubric (attendance and participation), the record showed that only two participants missed some sessions, whereas the rest of the students attended all of them. In general, all students contributed to the activities within their teams. This record provides evidence of an acceptable degree of student motivation. The connection between the rubric and the videos supports the proposed hypothesis: it demonstrates that the students were able to identify and resolve an engineering challenge, as stated by the disciplinary competency described in Section 3.1. All the teams achieved good or excellent levels of performance in the rubric’s challenge dimensions. The teams relied on theory to resolve the challenge, took time to test and correct their proposals, and obtained the final solution. This was an incremental type of innovation because the students took an experimental code, modified it, and applied it to obtain a tangible solution in steps.

5. Discussions and Validity

The findings provide evidence that supports the guiding hypothesis. The instruments collected evidence about the development of disciplinary and transversal competencies, including students’ perceptions. The observations are a source of qualitative data that supports various results and reflections of the research.

This relatively new teaching–learning experience pushed students beyond their comfort zones and encouraged them to work collaboratively to solve a challenge. From the beginning, they noted different known elements and had to work with the experimental code in a programming language that was new to almost all of them. In five days, the students implemented solutions, employing two new programming languages using an operating system they had not previously explored.

The series of practical activities, an alternative approach to learning from traditional sessions, encouraged the students to understand the problem and to develop creative solutions with a certain degree of innovation. Moreover, the students were motivated to develop their intellectual curiosity in an undergraduate program, something which aligned with the CBL paradigm.

The students’ ability to work with experimental code led them to understand the state of the art of certain software advances in autonomous UAV flight. Afterward, they became familiar with those new elements, and from that point, they adapted their solutions to the challenge.

The findings coming from the data highlight the relevance and value of these types of disruptive activities. It is expected that the preliminary results of this research effort and the experience of employing UAVs to develop disciplinary and transversal competencies will contribute to future educational research.

One aspect that increases the relevance of the results is how general they could be, i.e., the research has some degree of external validity. Even though this study does not include an extended analysis to determine the generality of outcomes, it takes into consideration the validity guidelines provided by experts in the field [39,40]. The following elements contribute to the external validity of this research effort:

- There was a random sample of participants;

- The challenge was carried out during the normal period of the semester; however, student engagement occurred during one full-time work week free from any assignments from other courses;

- All the activities were carried out on one campus with proper facilities;

- No contradictory results were observed;

- The scientific method was used in problem-solving.

6. Conclusions and Future Work

The findings reveal that the implemented CBL immersion activity, supported by UAVs, contributed to the competencies developed among undergraduate engineering students. The complete immersion activity was planned with a strong hands-on approach underpinning the activity, which was the experimental commanding and programming of UAVs using the scientific method. The outcomes may contribute to the improvement of the teaching–learning process in this CBE-CBL framework.

As a case study, an unmanned aerial vehicle in manual and autopilot modes was chosen because it requires interdisciplinary advanced knowledge and skills in modeling, control, programming, operating systems, robotics, and knowledge of sensors and actuators, among others. Synthesis in a one-week CBL was studied and reported under the paradigm of an exploratory, mixed-methods educational research project that employed commercial drones as technological tools.

The experiment generated preliminary results that reinforce the learning experience in a one-week CBL operating under a guiding hypothesis, which is something unusual in undergraduate education. The instruments revealed the students’ perception that the underlying scientific method guided this immersive activity to resolve a challenge, which led to the development of disciplinary and transversal competencies. The observations made by the professor–researchers became a source of qualitative data that supported the various results of the research problem and the reflections upon it.

This relatively new teaching–learning experience pushed students outside of their comfort zones and encouraged collaborative work and a thinking-outside-the-box approach to resolve the challenge, without forgetting that they did it in a fun and relaxed atmosphere. From the beginning, the students acknowledged the different basic and advanced elements put before them, known and unknown, and they researched and gathered others to have the skills required for the project, which even included an experimental code in an unfamiliar programming language.

The autonomous routines developed during the I-week are evidence that the students programmed and implemented solutions in two new programming languages running on an operating system previously unexplored by them. The performed activities encouraged the students to approach and understand the problem and to develop creative, innovative, and custom-made solutions.

The dimension of the research topic is another outcome to analyze; undergraduate students’ capability to explore new approaches and to solve challenges has typically been underestimated in undergraduate programs. In this study, it was acknowledged that technology and advanced software are within the grasp of the new generation, including the advances needed to learn to program UAVs for autonomous flight. After a short introduction in a proper pedagogical environment, the students became familiar with these new elements of knowledge more quickly than expected. From this point, they worked collaboratively in teams to resolve the challenge of their activity.

The preliminary findings reinforce the idea that the implementation of an innovative teaching activity along with proper guidance allows a competency-oriented one-week project to be carried out. It is expected that the research methodology used in this project provides some pedagogical elements for developing learning competencies and contributes to educational research on CBL. The results of this study suggest that to improve the “Drone Rally” challenge in future I-weeks, more advanced knowledge and skills may be required, such as vision-based navigation and programming classes that add capabilities for interaction with the environment. There could be experiences designed for a commercial venue, having drone applications in a novel design process of challenges.

The exploratory mixed-methods educational approach that was conducted is broader than a purely quantitative study because it also includes two qualitative instruments to gather data. In addition to the statistical information from the students’ survey (limited by the well-known statistical guidelines on sample sizes), this inquiry applied the qualitative method through two more instruments: field notes and examination records. These two tools complemented the research with another perspective on the investigated phenomenon.

Currently, this research team is planning a new comparative study, i.e., one with control and experimental groups and a larger sample size. New evidence could help to accept, reject, or modify this first guiding hypothesis. Building on the experience of this I-week experiment, the researchers in this work are trying to further develop the concept: first by defining competencies and sub-competencies related to the areas of scientific research; and then by designing a complete block of courses for a specified semester in engineering, with the aim of providing more information about the student learning process.

As a part of this future work, this research team is considering the inclusion of challenges revolving around object detection and tracking through vision systems. This topic is of great interest and represents a relevant family of applications for UAVs that could be addressed at an introductory level in an I-week challenge. As part of the course’s activities, students could apply data analysis and machine learning techniques for image recognition to implement detection and monitoring systems with vision [63,64]. Real-world applications that use vision systems in UAVs include monitoring and surveillance; detecting crop conditions; inspection of building structures; search and rescue in situations of natural disaster or potentially dangerous environments; and collecting topological data [65].

Additionally, although not yet reported as direct applications with drones, UAVs could be used in search and rescue or in other hazardous environments to reach people and, through vision, detect diseases and other health risk situations, helping the teams that will be arriving to prepare better and act faster. Vision-based facial diagnosis techniques, such as deep transfer learning from face recognition to facial diagnosis, have recently been reported [66]. These results could be improved by applying vision techniques supported by big data, such as the full-stage data augmentation approach to improve the classification of images [67]. Whether for classification, identification, or tracking, the duration of the course is crucial, so this research team would consider starting with a ready-to-use platform for vision and thus focus on the algorithms. The skills that the students could develop working with vision systems would be very useful in the commercial applications of UAVs.

Author Contributions

Conceptualization, L.C.F.-H. and C.I.-E.; methodology, L.C.F.-H., C.I.-E. and V.P.-V.; software, L.C.F.-H. and C.I.-E.; validation, A.S.-O., V.H.B. and J.d.-J.L.-S.; formal analysis, L.C.F.-H., C.I.-E., V.P.-V. and A.S.-O.; investigation, L.C.F.-H., C.I.-E., V.P.-V., A.S.-O., V.H.B. and J.d.-J.L.-S.; resources, L.C.F.-H. and C.I.-E.; data curation, L.C.F.-H. and C.I.-E.; writing—original draft preparation, L.C.F.-H. and C.I.-E.; writing—review and editing, V.P.-V., A.S.-O., V.H.B. and J.d.-J.L.-S.; visualization, V.P.-V., A.S.-O., V.H.B. and J.d.-J.L.-S.; supervision, L.C.F.-H., C.I.-E. and V.P.-V.; project administration, L.C.F.-H.; funding acquisition, J.d.-J.L.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to acknowledge the financial and the technical support of the Writing Lab, TecLabs, Tecnologico de Monterrey, Mexico, in the production of this work. Furthermore, the authors are grateful to the Tecnologico de Monterrey IT department in Hermosillo, Sonora; and especially for all the support received by Eng. Francisco Vázquez Cinco during the I-week challenge implementation.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Figure A1.

Evaluation rubric for the drones rally challenge activity.

Appendix B

Figure A2.

Frequency distribution for surveys Q1–Q12.

References

- Lassnigg, L. Competence-based Education and Educational Effectiveness. In Competence-Based Vocational and Professional Education; Mulder, M., Ed.; Technical and Vocational Education and Training: Issues, Concerns and Prospects; Springer: Cham, Switzerland, 2017; Volume 23, pp. 667–693. [Google Scholar]

- Beneitone, P.; Esquetini, C.; González, J.; Marty, M.M.; Siufi, G.; Wagenaar, R. Reflections on and Outlook for Higher Education in Latin America; Final Report—Tuning Latin America Project 2004–2007; University of Deusto: Bizkaia, Spain; University of Groningen: Groningen, The Netherlands, 2007.

- Sturgis, C.; Casey, K. Quality Principles for Competency-Based Education; iNACOL, Competency Works: Vienna, VA, USA, 2018.

- Rodríguez, I.; Gallardo, K. Redesigning an Educational Technology Course under a Competency-Based Performance Assessment Model. Pedagogika 2017, 127, 186–204.

- Johnstone, S.; Soares, L. Principles for Developing Competency-Based Education Programs. Chang. Mag. High. Learn. 2014, 46, 12–19.

- Nichols, M.; Cator, K.; Torres, M. Challenge Based Learner User Guide; Digital Promise and The Challenge Institute: Redwood City, CA, USA, 2016.

- Jou, M.; Chen-Kang, H.; Shih-Hung, L. Application of Challenge Based Learning Approaches in Robotics Education. Int. J. Technol. Eng. Educ. 2010, 7, 17–20.

- Gaskins, W.; Johnson, J.; Maltbie, C.; Kukreti, A. Changing the Learning Environment in the College of Engineering and Applied Science Using Challenge Based Learning. Int. J. Eng. Pedagog. 2015, 5, 33–41.

- Johnson, L.F.; Smith, R.S.; Smythe, J.T.; Varon, R.K. Challenge-Based Learning: An Approach for Our Time; The New Media Consortium: Austin, TX, USA, 2009.

- Maya, M.; García, M.; Britton, E.; Acuña, A. Play lab: Creating social value through competency and challenge-based learning. In Proceedings of the 19th International Conference on Engineering and Product Design Education (E&PDE17), Building Community: Design Education for a Sustainable Future, Oslo, Norway, 7–8 September 2017; pp. 710–715.

- O’Mahony, T.K.; Vye, N.J.; Bransford, J.D.; Sanders, E.A.; Stevens, R.; Stephens, R.D.; Richey, M.C.; Lin, K.Y.; Soleiman, M.K. A Comparison of Lecture-Based and Challenge-Based Learning in a Workplace Setting: Course Designs, Patterns of Interactivity, and Learning Outcomes. J. Learn. Sci. 2012, 21, 182–206.

- Ramirez-Mendoza, R.A.; Cruz-Matus, L.A.; Vazquez-Lepe, E.; Rios, H.; Cabeza-Azpiazu, L.; Siller, H.; Ahuett-Garza, H.; Orta-Castañon, P. Towards a Disruptive Active Learning Engineering Education. In Proceedings of the IEEE Global Engineering Education Conference (EDUCON), Santa Cruz de Tenerife, Canary Islands, Spain, 17–20 April 2018; pp. 1251–1258.

- Tezza, D.; Garcia, S.; Andujar, M. Let us Learn! An Initial Guide on Using Drones to Teach STEM for Children. In Learning and Collaboration Technologies. Human and Technology Ecosystems. HCII 2020; Zaphiris, P., Ioannou, A., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12206.

- Tselegkaridis, S.; Sapounidis, T. Simulators in Educational Robotics: A Review. Educ. Sci. 2021, 11, 11.

- Cañas, J.M.; Martín-Martín, D.; Arias, P.; Vega, J.; Roldán-Álvarez, D.; García-Pérez, L.; Fernández-Conde, J. Open-Source Drone Programming Course for Distance Engineering Education. Electronics 2020, 9, 2163.

- Bermúdez, A.; Casado, R.; Fernández, G.; Guijarro, M.; Olivas, P. Drone challenge: A platform for promoting programming and robotics skills in K-12 education. Int. J. Adv. Robot. Syst. 2019, 16, 1–19.

- Voštinár, P.; Horváthová, D.; Klimová, N. The Programmable Drone for STEM Education. In Entertainment Computing–ICEC 2018; Clua, E., Roque, L., Lugmayr, A., Tuomi, P., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11112, pp. 205–210.

- Bong-Hyun, K. Development of Young Children Coding Drone using Block Game. Indian J. Sci. Technol. 2016, 9, 1–5.

- Yousuf, A.; Mustafa, M.A.; Hayder, M.M.; Cunningham, K.R.; Thomas, N. Drone Labs to Promote Interest in Science, Technology, Engineering, and Mathematics (STEM). In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Rawat, K.S.; Asthana, C.B. Students in Engineering Design Process and Applied Research. In Proceedings of the 2020 ASEE Virtual Annual Conference Content Access, Virtual Online, 22–26 June 2020.

- Jovanović, V.M.; McLeod, G.; Alberts, T.E.; Tomovic, C.; Popescu, O.; Batts, T.; Sandy, M.M.L. Exposing students to STEM careers through hands-on activities with drones and robots. In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Yousuf, A.; Lehman, C.C. Research & Engineering Apprenticeship Program (REAP). In Proceedings of the 2019 ASEE Annual Conference & Exposition, Tampa, FL, USA, 15–19 June 2019.

- Chou, P.-N. Smart Technology for Sustainable Curriculum: Using Drone to Support Young Students’ Learning. Sustainability 2018, 10, 3819.

- Šustek, M.; Úředníček, Z. The basics of quadcopter anatomy. In MATEC Web of Conferences 210; EDP Sciences: Majorca, Spain, 2018; p. 01001.

- Samuelsen, D.A.H.; Graven, O.H. A Holistic View on Engineering Education: How to Educate Control Engineers. In Proceedings of the 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, Australia, 4–7 December 2018; pp. 736–740.

- Swanson, S. Trial by Flyer: Building Quadcopters From Scratch in a Ten-Week Capstone Course. In Proceedings of the 50th ACM Technical Symposium on Computer Science Education, Minneapolis, MN, USA, 27 February–2 March 2019; pp. 146–152.

- Števek, J.; Fikar, M. Teaching Aids for Laboratory Experiments with AR.Drone2 Quadrotor. IFAC-PapersOnLine 2016, 49, 236–241.

- Zapata, D.F.; Garzón, M.A.; Pereira, J.R.; Barrientos, A. QuadLab: A Project-Based Learning Toolkit for Automation and Robotics Engineering Education. J. Intell. Robot. Syst. 2016, 81, 97–116.

- Bolam, R.C.; Vagapov, Y.; Anuchin, A. Curriculum development of undergraduate and post graduate courses on small unmanned aircraft. In Proceedings of the 52nd International Universities Power Engineering Conference (UPEC), Heraklion, Greece, 28–31 August 2017; pp. 1–5.

- Cañas, J.M.; Perdices, E.; García-Pérez, L.; Fernández-Conde, J. A ROS-Based Open Tool for Intelligent Robotics Education. Appl. Sci. 2020, 10, 7419.

- Eydgahi, A. An interdisciplinary platform for undergraduate research and design projects. In Proceedings of the 2019 IEEE World Conference on Engineering Education (EDUNINE), Lima, Peru, 17–20 March 2019; pp. 1–6.

- Wilkerson, S.A.; Forsyth, J.; Corpela, C.M. Project based learning using the robotic Operating System (ROS) for undergraduate research applications. In Proceedings of the 2017 ASEE Annual Conference & Exposition. American Society for Engineering Education, Columbus, OH, USA, 25–28 June 2017.

- Nitschkel, C.; Minami, Y.; Hiromoto, M.; Ohshima, H.; Sato, T. A quadrocopter automatic control contest as an example of interdisciplinary design education. In Proceedings of the 14th International Conference on Control, Automation and Systems (ICCAS 2014), Gyeonggi-do, Korea, 22–25 October 2014; pp. 678–685.

- Molina, C.; Belfort, R.; Pol, R.; Chacón, O.; Rivera, L.; Ramos, D.; Ortiz Rivera, E.I. The use of unmanned aerial vehicles for an interdisciplinary undergraduate education: Solving quadrotors limitations. In Proceedings of the 2014 IEEE Frontiers in Education Conference (FIE), Madrid, Spain, 22–25 October 2014; pp. 1–6.

- Abarca, M.; Saito, C.; Cerna, J.; Paredes, R.; Cuéllar, F. An interdisciplinary unmanned aerial vehicles course with practical applications. In Proceedings of the 2017 IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017; pp. 255–261.

- Dieck-Assad, G.; García-De-La-Paz, B.L.; Dieck-Assad, M.E.; Mejorado-Cavazos, A.; Marcos-Abed, J.; Avila-Ortega, A.; Martínez-Chapa, S.O. Innovation Week (i-Week), A Way to Link Students, Industry, Government and Universities; the case of Emergency First Response at the Tecnológico de Monterrey. In Proceedings of the 3rd International Congress on Educational Innovation CIIE-2016, México City, Mexico, 12–14 December 2016; pp. 14–16.

- Membrillo-Hernández, J.; Ramírez-Cadena, M.d.J.; Caballero-Valdés, C.; Ganem-Corvera, R.; Bustamante-Bello, R.; Benjamín-Ordoñez, J.A.; Elizalde-Siller, H. Challenge Based Learning: The Case of Sustainable Development Engineering at the Tecnológico de Monterrey, Mexico City Campus. Int. J. Eng. Pedagog. 2018, 8, 137–144.

- Charles, D.; Martínez, S.; Soto, R.; Romero, M. Documentation of the design of a Semester-i based on competencies for the Research and Innovation Modality. In Proceedings of the 3rd International Congress on Educational Innovation CIIE-2016, México City, Mexico, 12–14 December 2016; pp. 1486–1498.

- Gay, L.R.; Mills, G.E.; Airasian, P. Educational Research: Competencies for Analysis and Applications; Merrill Prentice Hall: Columbus, OH, USA, 2006.

- Trochim, W.; Donnelly, J. The Research Methods Knowledge Base; Thomson: Mason, OH, USA, 2007.

- Krathwohl, D.R. Methods of Educational and Social Science Research: An Integrated Approach; Addison-Wesley: Reading, MA, USA, 1993.

- World Medical Association. World Medical Association Declaration of Helsinki. Ethical principles for medical research involving human subjects. Bull. World Health Organ. 2001, 79, 373–374.

- Open Source Software Used in Parrot Mambo. 2019. Available online: https://github.com/parrot-opensource/mambo-opensource (accessed on 10 April 2022).

- Dabbish, L.; Stuart, C.; Tsay, J.; Herbsleb, J. Social coding in GitHub: Transparency and collaboration in an open software repository. In Proceedings of the ACM 2012 Conference on Computer Supported Cooperative Work, Seattle, WA, USA, 11–15 February 2012; pp. 1277–1286.

- Krejcie, R.V.; Morgan, D.W. Determining Sample Size for Research Activities. Educ. Psychol. Meas. 1970, 30, 607–610.

- Bruner, J. Acts of Meaning; Harvard University Press: Cambridge, MA, USA, 1990.

- Jonassen, D.H.; Peck, K.L.; Wilson, B.G. Learning with Technology, A Constructivist Perspective; Prentice Hall, Inc.: Upper Saddle River, NJ, USA, 1999.

- Carlile, O.; Jordan, A. Approaches to Creativity, a Guide for Teachers; McGraw-Hill: Maidenhead, UK, 2013.

- Operating System Ubuntu Linux. 2021. Available online: https://wiki.ubuntu.com/ (accessed on 10 April 2022).

- Google Play. FreeFlight Mini. Software Application. 2017. Available online: https://play.google.com/store/apps/details?id=com.parrot.freeflight4mini&hl=es (accessed on 18 June 2017).

- Google Play. AR.FreeFlight 2.4.15. Software Application. 2017. Available online: https://play.google.com/store/apps/details?id=com.parrot.freeflight&hl=es (accessed on 17 June 2017).

- Oracle. VirtualBox. 2017. Available online: https://www.virtualbox.org (accessed on 10 June 2017).

- Parrot. Parrot for Developers. 2016. Available online: http://developer.parrot.com/products.html (accessed on 8 June 2017).

- Izaguirre-Espinosa, C.; Muñoz-Vázquez, A.J.; Sánchez-Orta, A.; Parra-Vega, V.; Castillo, P. Attitude control of quadrotors based on fractional sliding modes: Theory and experiments. IET Control Theory Appl. 2016, 10, 825–832.

- Sanchez-Orta, A.; Parra-Vega, V.; Izaguirre-Espinosa, C.; Garcia, O. Position—Yaw tracking of quadrotors. J. Dyn. Syst. Meas. Control 2015, 137, 061011.

- Geisendörfer, F. A Node.js Client for Controlling Parrot AR Drone 2.0 Quadcopters. GitHub Repository. 2017. Available online: https://github.com/felixge/node-ar-drone (accessed on 5 July 2017).

- Eschenauer, L. Provides Key Building Blocks to Create Autonomous Flight Applications with the #Nodecopter (AR.Drone). GitHub Repository. 2017. Available online: https://github.com/eschnou/ardrone-autonomy (accessed on 5 July 2017).

- Harvey, I. Python interface to Bluetooth LE on Linux. GitHub Repository. 2017. Available online: https://github.com/IanHarvey/bluepy (accessed on 7 July 2017).

- McGovern, A. Python Interface to Parrot Mambo. GitHub Repository. 2017. Available online: https://github.com/amymcgovern/pymambo (accessed on 7 July 2017).

- Video1 Parrot ARDrone Iweek. YouTube. 2022. Available online: https://youtube.com/shorts/bl7mVELwxoU (accessed on 13 April 2022).

- Video1 Parrot MAMBO Iweek. YouTube. 2022. Available online: https://youtube.com/shorts/3bvOPEFt7qA (accessed on 13 April 2022).

- Video2 Parrot MAMBO Iweek. YouTube. 2022. Available online: https://youtu.be/n_ipdzDchrI (accessed on 19 April 2022).

- Zhang, H.; Sun, M.; Li, Q.; Liu, L.; Liu, M.; Ji, Y. An empirical study of multi-scale object detection in high resolution UAV images. Neurocomputing 2021, 421, 173–182.

- Isaac-Medina, B.K.S.; Poyser, M.; Organisciak, D.; Willcocks, C.G.; Breckon, T.P.; Shum, H.P.H. Unmanned Aerial Vehicle Visual Detection and Tracking using Deep Neural Networks: A Performance Benchmark. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, Montreal, QC, Canada, 11–17 October 2021; pp. 1223–1232.

- Al-Kaff, A.; Martín, D.; García, F.; de la Escalera, A.; Armingol, J.M. Survey of computer vision algorithms and applications for unmanned aerial vehicles. Expert Syst. Appl. 2018, 92, 447–463.