Abstract

In this work, aiming at the problem of cooperative task assignment for multiple unmanned aerial vehicles (UAVs) in actual combat, battlefield tasks are divided into reconnaissance tasks, strike tasks and evaluation tasks, and a cooperative task-assignment model for multiple UAVs is built. Meanwhile, heterogeneous UAV-load constraints and mission-cost constraints are introduced, the UAVs and their constraints are analyzed and the mathematical model is established. The exploration performance and convergence performance of the harmony search algorithm are analyzed theoretically, and the more general formulas of exploration performance and convergence performance are proved. Based on theoretical analysis, an algorithm called opposition-based learning parameter-adjusting harmony search is proposed. Using the algorithm to test the functions of different properties, the value range of key control parameters of the algorithm is given. Finally, four algorithms are used to simulate and solve the assignment problem, which verifies the effectiveness of the task-assignment model and the excellence of the designed algorithm. Simulation results show that while ensuring proper assignment, the proposed algorithm is very effective for the multi-objective optimization of heterogeneous UAV-cooperation mission planning with multiple constraints.

1. Introduction

It is very common to use multiple unmanned aerial vehicles to perform various tasks [1,2], especially military tasks [3]. UAVs cooperatively patrolling perimeters, monitoring areas of interest or escorting convoys have been key research topics [4,5,6]. Power inspection, air rescue, large parties and so on are the most common application examples of Multi-UAV cooperation [7,8,9]. Therefore, it is an important research aspect to seek an efficient task-assignment mechanism to solve the task assignment problem of multiple UAVs [10]. In this context, the problem of multi-UAV cooperative task allocation arises at the historic moment.

Collaborative task assignment of multiple UAVs is to assign one or more tasks to UAVs under various constraints in the battlefield environment and determine the sequence of tasks to be executed by UAVs. Task assignment is an important part of collaborative task planning of multiple UAVs, and is also the prerequisite for realizing collaborative operation of UAV groups [11]. In the allocation process, factors such as the type of tasks, timing constraints among tasks, types of tasks that can be performed by UAVs, combat capabilities and payloads should be fully considered, and combat tasks should be reasonably assigned to the UAV fleet with the goal of achieving optimal efficiency of all tasks [12,13]. Therefore, the collaborative task-assignment problem of multiple UAVs is a non-deterministic polynomial (NP) problem of combinatorial optimization under multiple constraints [14,15]. The key to solving this problem is to establish the task assignment model and use the assignment algorithm.

At present, many general mathematical models have been proposed for multi-UAV task allocation at home and abroad. The commonly used task-allocation models include the multiple traveling salesman problem (MTSP) [16] model, mixed-integer linear programming (MILP) model [17], the vehicle routing problem (VRP) [18] model, the network flow optimization (DNFO) [19] model, the cooperative multiple task assignment problem (CMTAP) [20] model, and the capacitated vehicle routing problem (CVRP) [21] model. Based on these general multi-UAV task-allocation models, many scholars have established targeted task-planning models.

However, the current research still has the following problems: (a) In the establishment of qualified task-allocation models, such as the MTSP model and the CVRP model, the requirements of the actual battlefield are ignored in order to meet the model standards. The established model simplifies the objectives of task allocation and battlefield constraints, which reduces the application effect of research results in actual combat. (b) UAV and task types in existing task-allocation models are mostly single, lacking consideration of heterogeneous UAV attributes and multi-task types. Existing UAVs in the formation are different from each other in terms of purpose, load, combat capability, etc. In actual combat, multiple types of UAVs are often needed to cooperate to complete a certain task. Therefore, the task-allocation model based on a single UAV does not support complex tasks.

In solving problem models, there are two main research results: optimization method and heuristic method. The optimization methods include linear programming, width/depth preference search, branch and bound, and so on. The optimization method has a high time and space complexity in solving problems. As the scale of a task-assignment problem increases, computing power is required and the time consumed is also increased. It is thus necessary to simplify the model to a large extent, which makes it difficult to apply to complex problems. The heuristic method of solving a model mainly focuses on the model’s objective function, which requires less complexity. This can result in a better feasible solution in a short time, and has practical feasibility for large-scale complex problems.

The harmony search (HS) algorithm not only has the advantages of a meta-heuristic algorithm, but also own its characteristics [22,23]: (a) HS integrates all the existing harmony vectors to create a new harmony vector, in contrast to GA, which uses two elderly vectors, while particle swarm optimization (PSO) only considers the individual optimal position and the global optimal position of the whole population; (b) HS independently adjusts each variable via improvisation, unlike PSO, which deals with a solution vector by single rule. Due to these characteristics, HS has been successfully applied to a wide variety of optimization problems, such as river ecosystems [24], selective assembly problems [25], mental health analysis [26], reservoir engineering [27], speech emotion [28] and wireless network positioning [29].

However, HS suffers from trapping in performing local searches, and a crucial factor of its performance is the key control parameters, such as harmony memory (HM), harmony memory size (HMS), harmony memory considering rate (HMCR), pitch adjusting rate (PAR), bandwidth (bw) and the number of improvisations (G). It is unfortunate that there are few studies on the mathematical theory of the underlying search mechanisms of HS in the open literature. S. Das et al. proposed a new algorithm called EHS, after discussing the relationship between the explorative power of HS and the control parameters through analyzing the evolution of the population variance of HS [30]. This algorithm has good exploration, but poor exploitation. Thus, this paper devotes significantly more attention to the search mechanism of HS and its parameters.

Therefore, the contribution of this paper is the proposal of a new model and a new method, which aim to: (a) considering the complexity of the actual missions, divide the missions into reconnaissance, strike and evaluation tasks, and considering the benefits, costs and constraints of the missions, establish the target allocation model of heterogeneous multi-UAV missions with different loads; (b) analyze the mathematical theory of the underlying search mechanisms of HS to balance exploration and exploitation, establish the iteration equation of HS and propose an improved HS; (c) use the improved HS algorithm to simulate and verify a multi-UAV task-allocation combat example.

The remainder of this paper is organized as follows. In Section 2, the modeling of heterogeneous UAV task-assignment planning is presented in detail. Section 3 is the theoretical analysis of harmony search. The proposed algorithm is described in Section 4. The experiments and their results of the proposed algorithm are presented in Section 5. The task-allocation simulation experiment is designed and discussed in Section 6. Section 7 discusses related work. Section 8 then makes concluding remarks and indicates future works.

2. Modeling Heterogeneous UAV Task Assignment Planning

Multi-UAV cooperative task assignment is a complex multi-objective optimization and decision problem. Most current research models assume that the mission attributes of UAVs are consistent. However, multi-UAV formation is not a linear addition of a certain number of individual UAVs. Heterogeneous formation disperses the functions of reconnaissance and surveillance, electronic interference, strike and evaluation into a large number of single UAVs with low cost and single function. Through the high level of cooperation and self-organization among a large number of heterogeneous individuals, the cluster function far exceeds the individual function that can be realized. In addition, as the multi-UAV formation combat involves various aspects of the combat target and the task complexity is different, some large tasks need a certain combat sequence, and it is necessary to deploy and combine UAVs with different functions and characteristics to complete the corresponding combat task. For the above reasons, this section proposes a heterogeneous multi-UAV task-allocation model to enhance the antagonism of combat subjects and achieve higher-formation combat effectiveness in view of future diversified combat requirements.

2.1. Objective Function of Multi-UAV Cooperative Target Assignment Model

If the UAV formation of our side performs the task , the target list of the task is , represents the task type, and are the number of UAVs in the formation and the number of targets in the task list respectively, represents reconnaissance, represents attack and represents evaluation. The essence of multi-UAV formation task assignment is to solve a target-assignment scheme to maximize target fitness (). Based on this, the objective function of a multi-UAV cooperative target-allocation model is given as follows

Definition 1.

whereisthe fitnessof the UAV numberedperforming the task numbered,isthe rewardof the UAV numberedperforming the task numberedandisthe costof the UAV numberedperforming the task numbered. It can be seen that task fitnessis directly proportional to the reward of performing tasks and inversely proportional to the cost of performing tasks.

2.2. Task Rewards Modeling

In this paper, the rewards of UAV numbered performing a certain task numbered are mainly determined by three factors:

(1) UAV’s capability to perform specific tasks. The capability of UAV to perform tasks is quantified by its payload, such as detection device and weapon system, combined with its own performance, and described in probability based on historical experience (whether it has performed relevant target tasks in the past).

(2) the worth of enemy targets. The worth of enemy targets is determined in advance based on the information obtained beforehand, combined with the tactical plan available for reference. For example, destroying the enemy’s command center can paralyze the enemy’s operation, so the worth of the command center is relatively high, while striking the enemy’s small warehouse has relatively low worth compared to the whole operation.

(3) the defense capability of enemy targets. Combined with the detected battlefield environment information and advance intelligence information, the enemy targets, such as surface-to-air missile positions, anti-aircraft gun positions, flight bases and ground radar are analyzed and quantified.

From the above analysis, the mission rewards of UAV can be defined as follows:

Definition 2.

whereis the rewards of a certain task numberedperformed by a UAV numberedwith a specific load,is the completion capability of the UAV numberedto perform tasks the task numberedandis the defense capability of the target.

The reward of each UAV mission meets the additivity, and the decision variable of the mission is introduced as follows:

Then, the total rewards of multiple UAV formation’s task execution on the target list are:

2.3. Task Cost Modeling

In the course of reconnaissance, attack or evaluation, UAV formation will encounter confrontational threats and terrain threats. Confrontational threats are mainly radar detection and fire attack by enemy targets during the execution of missions, so the defense capability of our UAV and the attack capability of enemy targets should be considered in task allocation. Terrain threats mainly involve the air-range cost of a UAV during its mission, terrain height and altitude change rate. On some terrain that is difficult to leap over, the UAV flying too high will consume too much energy, and the UAV climbing too-steep terrain is likely to impact the terrain and encounter other threats, so it should try to avoid the distribution results of too-long air-range or steep terrain. Based on the above analysis, the task-cost modeling in this section includes terrain-cost modeling and loss-cost modeling. At the same time, terrain cost and loss cost of different dimensions are normalized.

(1) Terrain Cost

The air-range cost of a UAV reflects the duration of the mission, the length of the UAV and the energy consumption. The Euclidean distance between the UAV and the enemy target is used in this paper to estimate the air-range cost. This is because the formation cannot accurately obtain the relevant data before the actual execution of the mission.

where is the initial position coordinate of the UAV numbered , is the position coordinate of the enemy target numbered , is the position coordinate of the enemy target numbered . Equation (5) gives the Euclidean distance between the UAV numbered and the task target numbered , as well as the Euclidean distance between the task numbered and the task numbered . To facilitate calculation, the matrix is established as the distance matrix.

According to the above equation, is a matrix of , which contains the Euclidean distance between UAV and enemy targets and the Euclidean distance between enemy targets. Thus, the total air-range of the UAV numbered returning to its initial position after completing the corresponding task target list can be given as follows:

where represents the total number of tasks performed by UAV numbered . Further analysis of Equation (7): when , represents the distance between the initial position of the UAV numbered and the mission target numbered 1. When , represents the Euclidean distance between the No. mission target, performed by the UAV numbered , and the No. mission target. When , represents the distance of UAV numbered returning to its starting point after completing the last mission target.

Considering the impact of terrain height and altitude-change rate on the UAV’s loss, terrain-steepness factor λ is introduced to the weighted UAV’s track. Assuming that the battlefield environment can be known in advance before the execution of the mission, the value of the terrain-steepness factor λ is determined by combining the result of task assignment with the terrain information known in advance. In this paper, λ ranges from [1.2, 2.5] according to the complexity of the terrain.

(2) Loss Cost

The loss cost of a UAV is mainly caused by the confrontational threats encountered by UAV in the execution of tasks. Therefore, the loss cost mainly considers the following factors: the worth of UAV with specific load, the defensive capability of UAV itself, and the attack capability of enemy targets. Combined with the significance of loss cost, it can be concluded from the above analysis:

Definition 3.

whereis the loss cost of the UAV numberedwhen executing the mission targetnumbered,is the attack capability of the mission targetnumbered,is the worth of UAV numbered,is the defense capability of UAV numbered.

Thus, it can be concluded that the task cost of multi-UAV formation in task allocation is as followed:

where is the maximal element with the total loss cost of performing all tasks, is the terrain steepness factor reflecting the complexity of different UAV flight paths, and respectively represent the UAV’s track weight and loss weight and is the task decision variables introduced in Section 2.2.

2.4. Modeling Constraints

(1) Constraints on Task Completion

During the execution of the mission, UAVs involved in such tasks as strike and reconnaissance must perform all the missions, and there cannot be a situation where some tasks are not assigned, namely,

where, and represent the number of UAVs and the number of assigned tasks respectively.

(2) Constraints on Task Non-Redundancy

During task execution, it must be ensured that each task can only be performed by a certain UAV, namely,

(3) Constraints on UAV’s Capability

The number of task targets numbered performed by the UAV numbered should not exceed the maximum of its own task capability:

where represents the maximum number of tasks that UAV numbered can execute.

(4) Constraints on UAV’s Attack Range

When task target assignment is completed, the air-range of the total mission performed by the UAV numbered cannot exceed its maximal flight distance, namely:

where is the terrain steepness factor, and is the maximal air-range of UAV numbered .

In order to transform constrained optimization problems into unconstrained problems, this section introduces the penalty function and designs an outpoint method to punish the consumption cost of infeasible solutions when infeasible solutions appear in the population. The constrained optimization method improves the efficiency of the task-assignment model. Therefore, Equation (1) is further updated, and the final total task fitness is as follows:

where is a positive number known as the penalty factor, and is the penalty function.

2.5. Task-Assignment Coding

Constructing the mapping relationship between particle and feasible solution of cooperative-task assignment for multiple UAVs is the key step for the swarm intelligence algorithm to solve the optimization problem. In the collaborative-task assignment process for multiple UAVs, the response of the UAVs to enemy target reconnaissance, attack and evaluation tasks should be ensured, and the timing of tasks should also be ensured. That is, an enemy target must be reconnoitered before the attack task, and finally the effect evaluation, which embodies the double-layer coding meaning of particles on the task-assignment problem. Referring to reference [31], this paper adopts real vector coding. Suppose that the dimension of the particle is the number of the task target list, the subscript of the particle corresponds to the number of tasks, the integer part of the particle position is rounded up to indicate the number of UAVs and the fractional part of the particle position corresponds to the task order of UAVs from small to large.

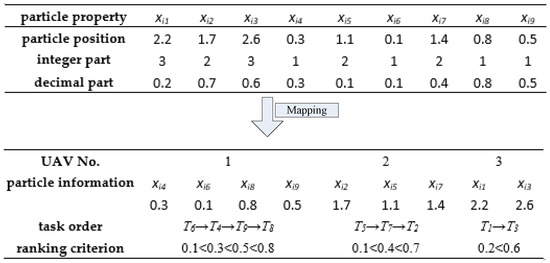

The mapping relationship between particle coding and task target assignment can be expressed by the following example. Suppose that three UAVs must perform nine tasks, and the task-assignment coding is shown in Figure 1.

Figure 1.

Mapping relationship between particle coding and UAV task assignment problem.

3. The Theoretical Analysis of Harmony Search

This section analyzes the performance of the HS algorithm from two aspects: exploration performance and iterative convergence performance.

3.1. Exploration Performance

The HS algorithm explores the solution space by improvisation, and its development is accomplished by updating the operation steps. In reference [30], the exploration ability of HS is measured by the evolution of the expected population variance with the number of iterations. However, only symmetric intervals , are considered for analyzing the evolution of the group variables of HS and its exploration performance. Here, this paper gives results for asymmetric intervals, as shown in Theorem 1.

Theorem 1.

Letrepresent the initial population of HS algorithm,refer to the population obtained after the improvisation session. The range of the decision variableis, then

Proof.

when , the following result as Equation (17) is obtained, which had been done by S. Das et al. in literature [30].

Let , then

where is the probability density function of . Then Equation (23) is obtained.

According to Equations (20) and (23), and can be obtained

From Equations (18), (19), (24) and (25), it can be concluded that

Let and , Equations (26) and (27) become

and because the following equations are true

Equation (31) can be deduced from Equation (30)

where and are independent random variables. Therefore, and are true, and then

According to Equations (30)–(32), it can be obtained

This proof is complete. □

3.2. Convergence Performance

If , then Equation (34) can be obtained from Equation (16).

According to literature [30], is obtained, so

Then, the expected variance of the population variable in the -th generation is:

With increasing evolution generations, the increase in expected population variance gives the algorithm strong exploration ability. However, if only the exploration ability is considered, the HS algorithm will not converge in the last generation, that is, the HS algorithm keeps searching for new information, but does not get effective information at the end of the algorithm. Therefore, the convergence of the algorithm is a very critical problem. In the following, the convergence of the HS algorithm is analyzed from the expectation of population variance and expectation of population mean.

Lemma 1.

For any initial vector, the iterative equationconverges if and only if, whererepresents the iteration matrix andis the spectral radius of the iteration matrixin literature [32].

Theorem 2.

Under the premise of Theorem 1 and, the sufficient condition for the convergence of the iterative equationis that, where

Proof.

If and , then the following equation is true.

It can be obtained from Equations (26) and (30):

Due to and , therefore, Equation (38) becomes Equation (39).

According to Equations (37) and (39), is obtained. After the evolution of g-th generation, can be obtained. Thus, the characteristic roots of the iterative equation can be obtained as and . It is clear that . Thus, is true. Therefore, the iterative equation converges.

This proof is complete. □

From the proof of Theorem 2, the following conclusions are obtained.

(1) The parameter is actually the spectral radius of the iteration matrix, which directly determines the convergence of the iteration equation.

(2) When is close to 0 and parameter is close to 1, the algorithm has a fast convergence rate, which verifies the rationality of .

(3) In order to find a balance between higher convergence accuracy and better exploration ability, the parameter can be adjusted to prevent the algorithm from falling into local optimal.

4. The Opposition-Based Learning Parameter-Adjusting Harmony Search (OLPDHS) Algorithm

Through the above analysis and discussion, on the one hand, , which is related to the original HM initialization, is the part of , so it is necessary to find a better initialization method to explore an effective offspring population for improving the algorithm’s exploration. On the other hand, adjusting key control parameters’ values is a good choice to obtain better fine-tuning properties and convergence speed.

The OLPDHS algorithm is proposed. On the one hand, the algorithm improves the initialization method. On the other hand, the fine-tuning properties and convergence speed of HS can be improved by adjusting parameter value. What the OLPDHS algorithm has in common with the classical HS algorithm is that both of them go through four processes: initializing the parameters involved in the algorithm, initializing the harmony memory, improvising a new harmony and updating the harmony memory. The difference between the two is the way of population initialization and the way of control parameter change.

4.1. The Opposite-Based Learning Strategy for Population Initialization

Opposite-based learning (OBL) was first proposed by Tizhooshin in the literature [33]. The main idea of OBL is to obtain a better approximated solution than the current candidate solution, by considering both an estimate and another estimate that is opposite to this estimate, so OBL expands the solution space and reduces the search time [34,35].

Definition 4.

Letbe a point in the dimensionalcoordinate system, where, then, the opposite pointofis completely determined according to.

In the original HS algorithm, the initial population is generated in a random way. In order to improve the quality of the initial harmonic memory warehouse, this paper proposes a variety of alternative opposite learning strategies, which include five methods based on opposite learning strategies [36]. Method 1: , Method 2: , Method 3: , Method 4: , Method 5: .

4.2. Parameter Adjustment Policy

In order to balance the exploration ability and development ability of the HS algorithm, it is necessary to adjust the key control parameter dynamically during the search process.

According to Theorem 2, the parameter () is dynamically adjusted as follows. To better detail , the more general expression for the average of the group is given as Equation (40):

where is the th dimension of the problem, is the maximum dimension of the problem and represents the average value of the th dimension variable. Then, get , here are randomly selected from HM and , thus can be obtained as Equation (41).

Thus the result of the parameter is obtained.

It can be seen from Equation (42) that the dynamic adjustment of the parameter mainly depends on the square root of the mean value of the harmony vectors of the population after each update. In this way, it is easier to realize the actual operation of dynamic adjustment of the parameter . When the new solution is fine-tuned according to Equation (40) or Equation (41), the information of the current population is carried out by the parameter . The fine-tuning of the new solution has global guiding significance. However, the fine-tuning solution with the fixed value of the parameter does not consider the current population search situation. Additional calculation after each evolution is required, which increases the calculation amount of the algorithm.

5. Simulation Experiments and Analysis of OLPDHS

In order to verify the rationality and effectiveness of the OLPDHS algorithm in this paper, MATLAB is used to test algorithm performance from different perspectives.

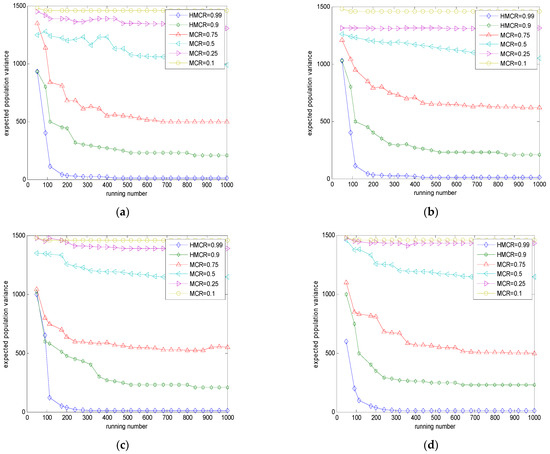

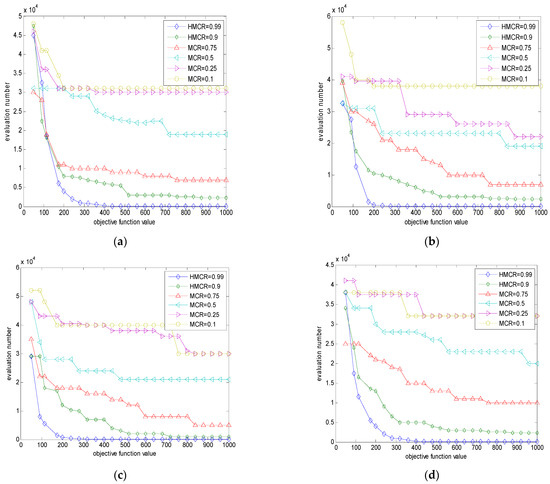

5.1. Influence of the Parameters HMCR, HMS and PAR on OLPDHS

Firstly, the influence of the parameter HMCR on the performance of the OLPDHS algorithm is analyzed by simulation. The unimodal function Sphere and the multimodal function Rastrigin are used as the test functions, and the parameters are set as HMS = 5, PAR = 0.5, , , the maximum number of evolutions , the dimension of the function is 30, and the HMCR is changed dynamically from 0.1 to 0.99, proceeding through 0.1, 0.25, 0.5, 0.75, 0.9 and 0.99. MATLAB is used for simulation, and the relationship between the population variance expectation and the iterations number is obtained, as shown in Figure 2. It reflects that for the function Sphere and the function Rastrigin, when the values of parameter HMCR are different, the search capability of the OLPDHS algorithm changes over iterations. Figure 3 shows the relation between the Sphere function value and the Rastrigin function value obtained by the OLPDHS algorithm under different parameter HMCR values with the change of evaluation numbers, reflecting the influence of different parameter HMCR values on the convergence performance of the OLPDHS algorithm.

Figure 2.

The expected population variance of OLPDHS with HMCR value for Sphere and Rastrigin: (a) Sphere: [−50, 100]; (b) Sphere: [−100, 50]; (c) Rastrigin: [−50, 100]; (d) Rastrigin: [−100, 50].

Figure 3.

Convergence of OLPDHS with different HMCR values for Sphere and Rastrigin: (a) Sphere: [−50, 100]; (b) Sphere: [−100, 50]; (c) Rastrigin: [−50, 100]; (d) Rastrigin: [−100, 50].

As can be seen from Figure 2 and Figure 3, the value of HMCR has a great impact on the expected population variance and the convergence of the OLPDHS algorithm. With the increase in the value of HMCR, the smaller the expected population variance and convergence accuracy, the larger the value of HMCR and the better the convergence rate of the OLPDHS algorithm.

In addition, when HMCR values are 0.01, 0.25, 0.5, 0.75, 0.9, 0.95, and 0.99, the mean value and variance results of the two test functions are shown in Table 1 and Table 2, respectively. For the Sphere function, Table 1 shows that the smaller the value of HMCR, the worse the performance of the OLPDHS algorithm. When HMCR < 0.9, the results obtained by the OLPDHS algorithm have deviated significantly from the global optimal solution. When HMCR > 0.9, the OLPDHS algorithm quickly converges to or near the global optimal solution. For the multimodal function Rastrigin, Table 2 shows that the value of HMCR has a similar impact on the performance of the OLPDHS algorithm to that in the Sphere function. According to Table 1 and Table 2, the convergence accuracy is better with the increase in HMCR value.

Table 1.

Numerical results with different HMCR values for Sphere.

Table 2.

Numerical results with different HMCR values for Rastrigin.

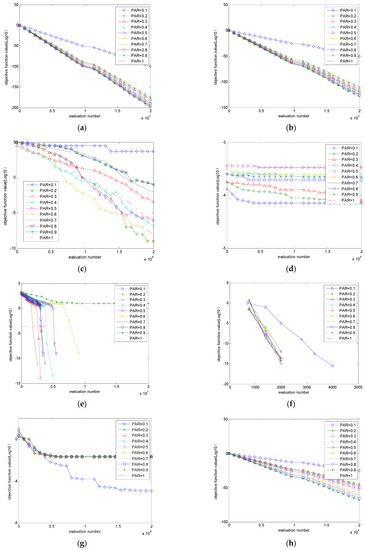

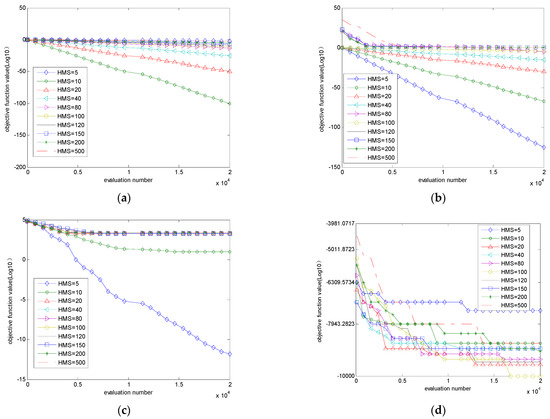

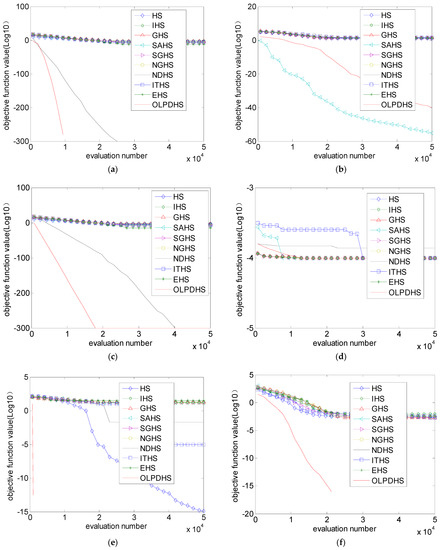

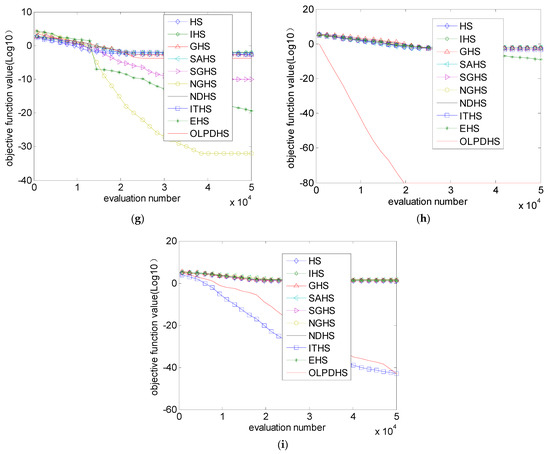

Then, the influence of parameter HMS and parameter PAR on the OLPDHS algorithm is analyzed through simulation experiments. Nine functions () with different characteristics are selected to study the impact of parameters HMS and PAR on the performance of the OLPDHS.

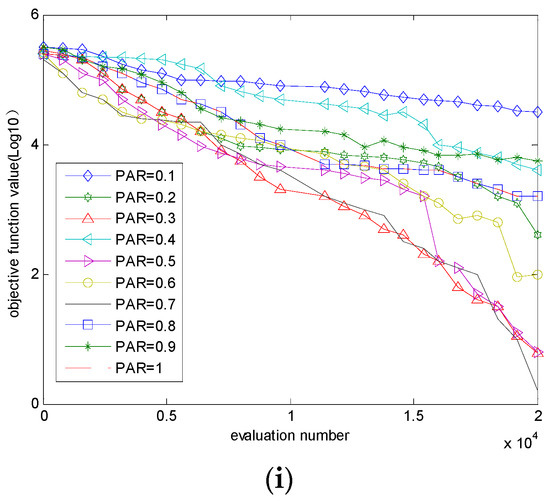

The dimension of all functions is set to 30, the maximum number of iterations to 20,000, and each function is set to run 30 times independently. Table 3 lists the mean value and standard deviation (SD) value of each function while running 30 times independently under different values of parameter PAR. Table 4 shows the mean value and SD value of each function while running 30 times independently under different values of parameter HMS. Figure 4 and Figure 5 visually show the optimization curves of nine functions under different values of parameters HMS and PAR.

Table 3.

Effects of PAR for nine functions.

Table 4.

Effects of HMS for nine functions.

Figure 4.

The optimization curve for different values of the parameter PAR: (a) ; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) .

Figure 5.

The optimization curve for different values of the parameter HMS: (a) ; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) .

As observed in Table 3, none of the PAR values performs well for all of the test functions. When the value of PAR is in the interval [0.3, 0.7], the mean and SD of all the functions except function and function are relatively good. For function , the larger value of parameter PAR results in poor performance of the OLPDHS algorithm. For function , the values of PAR have little effect on the performance of the OLPDHS algorithm. By observing Figure 4 and comparing the optimization curves, it can be found that when PAR is in the interval [0.3, 0.7], the optimization curves of the OLPDHS algorithm are better. Thus, the value of PAR has an impact on the performance of the OLPDHS algorithm, and the value is determined based on the analysis of the specific optimization problem.

As can be seen from Table 4, except for the function (when the HMS value is large, the optimization result is better), the convergence accuracy of other functions deteriorates as the HMS value increases. As shown in Figure 5, except for function , the optimization curve obtained by other functions is better with the decrease in HMS value. Through analysis, it can be concluded that the value of HMS is appropriate in the interval [5, 40].

Finally, the parameter value range of the OLPDHS algorithm to obtain better performance can be obtained through the above simulation analysis. The specific values of parameters should be determined by the optimization problem itself and the optimization model, which must be tested repeatedly in practice.

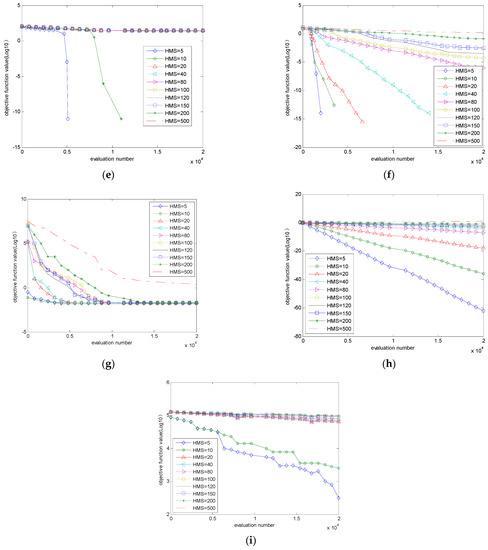

5.2. Performance Comparison of the OLPDHS Algorithm and Other HS Algorithms

In order to prove the efficiency of the OLPDHS algorithm, the OLPDHS algorithm is compared with the recently developed HS algorithm and its improved version (IHS [37], GHS [38], SAHS [39], SGHS [40], NGHS [41] NDHS [42], EHS [30] and ITHS [43]) by testing the unconstrained optimization problem. Related parameters are set as follows: (1) : SGHS is 0.98, NDHS, EHS, and ITHS are 0.99, OLPDHS is 0.9999, and other algorithms are 0.9; (2) : the value is 50 for EHS, 10 for ITHS and 5 for other algorithms; (3) : HS and EHS are set to 0.33, GHS, IHS and OLPDHS are set to 0.1 and 0.99, SAHS and ITHS are set to 0 and 1, NDHS is set to 0.01, 0.99 and SGHS is set to 0.9; (4) : HS is 0.01, SGHS is 0.0005 and , IHS is 0.0001 and , and EHS is ; In addition, in NGHS. Among the nine functions, ,, are single peak functions; ,, are multi-peak functions, and with the increase in the dimension of the problem, the number of locally optimal solutions increases. The functions and are low-dimensional functions, which have more local optimal solutions.

Figure 6 shows the convergence properties of 10 algorithms tested by 9 functions with 30 dimensions. In most cases, the OLPDHS algorithm has better convergence than the other nine HS algorithms. Except for functions and , the convergence of the OLPDHS algorithm is not as good as expected, while SGHS, NGHS and EHS algorithms have a better convergence trend. For functions and , the OLPDHS algorithm has a convergence no better than the ITHS algorithm, but the OLPDHS algorithm has better performance than other algorithms. On the whole, the OLPDHS algorithm has faster convergence than other HS algorithms.

Figure 6.

The evolution of optimum objective function value with 10 algorithms for 9 functions: (a) ; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) .

6. Task-Allocation Simulation Experiment Based on OLPDHS

In order to fully verify the effectiveness of the task-allocation algorithm and model designed in this paper, three unmanned aerial vehicles with different loads were used to perform eight different mission objectives as a combat example for simulation verification. In the simulation, four algorithms are used to independently solve the task assignment model 10 times, respectively. The simulation environment and algorithm parameters are consistent with the Section 5.

6.1. Initial Information of Simulation

Battlefield space is a 100 km × 100 km square map. According to the description of the modeling process in Section 2, the terrain-steepness factor is , track weight and loss weight are and the penalty factor of the constraint term is δ = 5. At the same time, the maximum number of tasks that each UAV can perform is set to , where represents the number of UAVs. The maximum range of the UAVs is set to . According to the task-allocation code in Section 2.5, the dimension of the allocation model is set to the task target number , the scope of individual search is (0,3), the number of individuals in each algorithm is set to 10, and the iteration number is 1000. The heterogeneity of multiple UAV formations with different loads is directly reflected by the capability value of performing a specific task. The specific parameters of UAVs and mission targets are shown in Table 5 and Table 6.

Table 5.

Performance parameters of three UAVs.

Table 6.

Parameters of eight mission objectives.

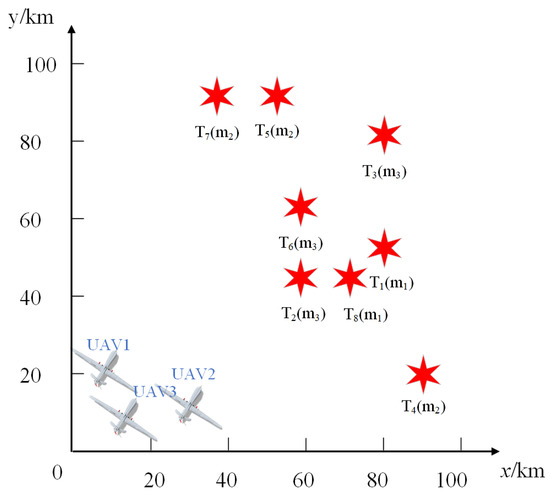

In order to visually display the battlefield environment and task-assignment process, this paper draws the battlefield environment through Visio 2017 software, as shown in Figure 7 below.

Figure 7.

The battlefield environment diagram.

6.2. Simulation and Analysis

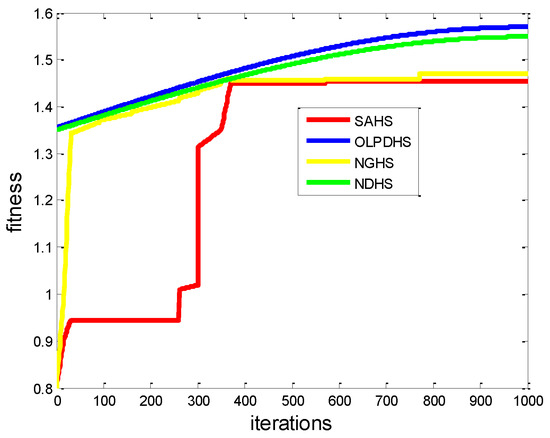

Four intelligent algorithms are used to independently solve the task-allocation model 10 times. The solution results are shown in Table 7, where represents the final task fitness; the higher the fitness, the better the feasible solution iterated by the algorithm. represents the success rate of the algorithm solution, and is the average of the solution results obtained 10 times for each algorithm.

Table 7.

The results of 10 times obtained independently by two algorithms.

Further analysis of Table 7 shows that the solving success rate of each algorithm is 100%, which verifies the effectiveness of the multi-UAV task-allocation model constructed in this paper. Meanwhile, the average fitness of 10 independent solutions of the OLPDHS algorithm is 1.5388, which is higher than the average fitness of the other three algorithms. Therefore, in solving task assignment problems, the optimization algorithm based on improved chaotic adaptive strategies designed in this paper is superior to the other three comparison algorithms. Figure 8 shows the fitness convergence process of four algorithms for solving the task assignment model. It can be seen from the figure that the OLPDHS algorithm can quickly converge to a relatively excellent fitness value in the early stage, and the fitness is higher than the other three algorithms in the late iteration, which further reflects the effectiveness of OLPDHS in solving the task assignment.

Figure 8.

The convergence curves of fitness of two algorithms.

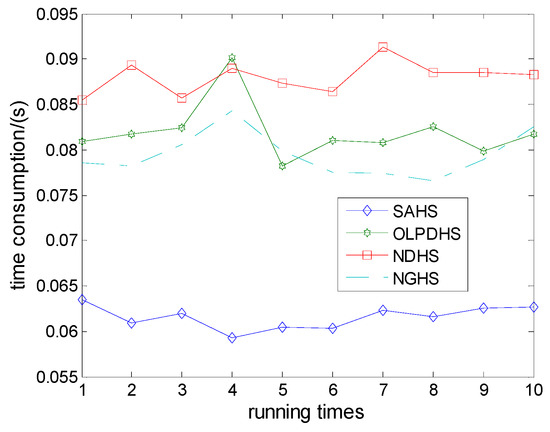

In order to directly reflect the time consumption of the two algorithms, the tic and toc functions in MATLAB are used to obtain the time-consumption data in Table 8. Based on the data in the table, the time-consumption graph of the four algorithms after 10 iterations of independent operation is drawn in Figure 9. It can be seen from the figure that the SAHS algorithm has the lowest time consumption, while the NDHS algorithm has the highest time consumption. Although OLPDHS is more time-consuming, OLPDHS improves the fitness of feasible solutions of the task-allocation model, so the cost of sacrificing some time is acceptable.

Table 8.

Assignment results of task objectives corresponding to optimal fitness.

Figure 9.

The average times of the four algorithms running independently 10 times.

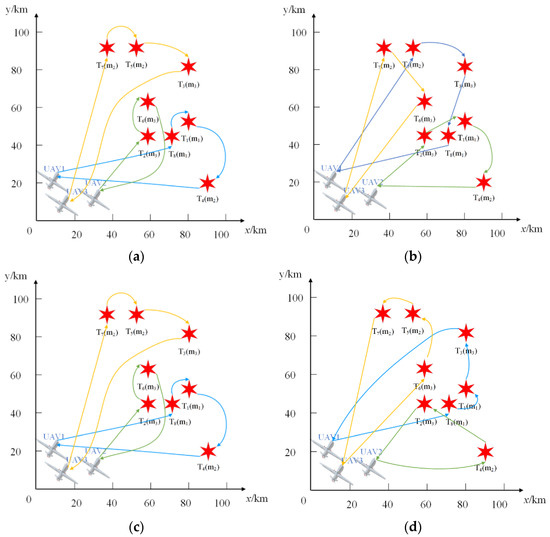

Table 8 shows the target allocation results corresponding to the optimal fitness obtained by running four algorithms for 10 times. Meanwhile, the allocation results are drawn on the basis of Figure 7 as shown in Figure 10.

Figure 10.

The schematic diagram of task assignment results of two algorithms: (a) OLPDHS; (b) SAHS; (c) NDHS; (d) NGHS.

7. Related Work

There are many researchers dedicated to the study of task allocation, and many methods have been put forward. This section mainly summarizes the methods of task allocation in various fields and the achievements achieved in UAV task allocation.

The threshold-based method is used to solve task-allocation problems. In 2005, extreme teams, large-scale agent teams operating in dynamic environments, were on the horizon. Low-communication approximate distributed constraint optimization (LADCOP), a distributed threshold-based algorithm, was proposed to solve task-allocation problems in the domain of simulated disaster rescue. The tasks are perceived by the agents in the environment [44]. In 2007, swarm generalized assignment problem (Swarm-GAP) was used to experiment in the RoboCup rescue simulator [45]. In 2010, considering the various actors in the RoboCup rescue, Swarm-GAP, LADCOP and a greedy method were used to solve distributed task allocation among teams of agents in a RoboCup rescue scenario [46]. The results showed that the performance of Swarm-GAP and LADCOP are similar and that they outperform a greedy strategy. The threshold-based approach is applied to division of labor control for a robot group [47], but the task ordering is performed by a central command unit, which generates a significant number of messages. Swarm-GAP was adopted to deal with this problem and a new method with three algorithm variants was proposed in 2017 [48]. This method effectively prevented some agents from being overloaded with tasks while others remained idle. The aforementioned researchers aimed at the optimization of their resource usage applied in the context of static environments. In 2020, Amorim J C evaluated the performance of three swarm-GAP variants in dynamic contexts, and extended these algorithms to properly address more realistic dynamic scenarios [49].

The market-based method is applied to task-allocation problems. Task specification trees (TSTs) as a highly expressive specification language for complex multiagent tasks were used. Meanwhile, a sound and complete distributed heuristic search algorithm for allocating the individual tasks in a TST to platforms was proposed in 2010 [50]. A decentralized distributed solution approach based on multi-agent systems (MAS) to manage emergency vehicles was developed, and a multi-agent architecture to fit real emergency systems was proposed. A more refined and efficient auction mechanism based on implicit agents’ coordination was examined to coordinate agents to reach good quality solutions in a distributed manner [51]. For the multi-robot dynamic task-allocation problem, multi-objective optimization (MOO) was used to estimate and subsequently make an offer for its assignment. That is, after task detection, an auction took place amongst robots capable of executing it. Robots calculated their bid using MOO [52].

There are also other works that propose methods for allocating tasks. ZORLU [22] considered the UAV load constraints and modeled the problem as a CVRP model with load constraints. Shima T [53] proposed the CMTAP model for UAVs, which divided the task types into identification, attack and damage assessment. The time sequence between tasks, execution time of tasks and multi-UAVs cooperative constraints were added into the model. Min Yao [54] designed a collaborative multi-task assignment model for UAV groups that is suitable for multiple UAVs, multi-task targets and multi-task types. Wen Gu [55] proposed a solution to UAV target allocation problem based on the Hungarian algorithm, and Jin Zhang et al. [56] extended the Hungarian algorithm to multi-target allocation. In addition, [57] used the MILP method to solve multi-UAV target allocation, and other optimization methods include dynamic programming and graph theory.

This current paper considers the type of task, the UAV load constraints, multiple UAVs, multi-task targets and multi-task types, features that are similar to previous studies in the literature [21,53,54]. Unlike these studies, this paper builds a model from two aspects of the rewards and costs of performing tasks.

8. Conclusions

In this paper, the complex task set was decomposed into sub-tasks suitable for a single UAV, and then the task-allocation problem was described and defined from the aspects of task rewards and task cost. The UAV’s own constraints and mission constraints were defined. The mathematical model of task assignment was established, being more suitable for actual battlefield environment. The OLPDHS algorithm was proposed through discussion of exploration performance and convergence performance and its parameter values (HMCR > 0.9, PAR is in the interval [0.3, 0.7], HMS is appropriate in [5, 40]) were given via a number of simulation experiments. In comparison with IHS, GHS, SAHS, SGHS, NGHS, NDHS, EHS and ITHS, the superior performance of OLPDHS was proven by a number of simulation experiments. A multi-UAV cooperative task-allocation example was designed to verify the superior performance of OLPDHS (Fitness is 1.5491, time cost is 0.0819). This is the first application of HS to the multi-UAV cooperative task-allocation problem. These performance qualities are ideal for helping decision-makers devise allocation schemes.

In the environment or in the system itself at runtime, real-world scenarios abound with the aforementioned dynamism. It is necessary for cooperative systems to deal with this dynamism to keep the execution and results level. Future work must study the dynamic redistribution problem, which is closer to real-world scenarios, focusing on how to establish the redistribution problem model in a dynamic environment, so that decision makers can make effective decisions in the battlefield’s changeable environment.

Author Contributions

Study design Y.C., W.D., H.L. and D.H.; conduct of the study Y.C., W.D. and D.H.; data collection Y.C., W.D. and D.H.; management Y.C., W.D. and H.L.; analysis W.D. and H.L.; data interpretation W.D. and H.L.; manuscript preparation Y.C., W.D., H.L. and D.H.; critical revision of the manuscript Y.C., W.D. and D.H., and final manuscript approval Y.C., W.D., H.L. and D.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shirzadeh, M.; Asl, H.J.; Amirkhani, A.; Jalali, A.A. Vision-based control of a quadrotor utilizing artificial neural networks for tracking of moving targets. Eng. Appl. Artif. Intell. 2017, 58, 34–48. [Google Scholar] [CrossRef]

- Sun, X.; Cai, C.; Yang, J.; Shen, X. Route evaluation for unmanned aerial vehicle based on type-2 fuzzy sets. Eng. Appl. Artif. Intell. 2015, 39, 132–145. [Google Scholar] [CrossRef]

- Nonami, K.; Kendoul, F.; Suzuki, S.; Nonami, K.; Wang, W. Autonomous Flying Robots: Unmanned Aerial Vehicles and Micro Aerial Vehicles; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010. [Google Scholar]

- Smith, K.; Stengel, R.F. Autonomous control of uninhabited combat air vehicles in heavily-trafficked military airspace. In Proceedings of the 14th AIAA Aviation Technology, Integration, and Operations Conference, Atlanta, GA, USA, 16–20 June 2014; p. 2287. [Google Scholar]

- Qu, X.; Zhang, W.; Wang, X. Research of UAVs’ attack strategy under uncertain condition. Flight Dyn. 2015, 4, 021. [Google Scholar]

- Song, B.D.; Kim, J.; Kim, J.; Park, H.; Morrison, J.R.; Shim, D.H. Persistent UAV service: An improved scheduling formulation and prototypes of system components. J. Intell. Robot. Syst. 2014, 74, 221–232. [Google Scholar] [CrossRef]

- Dias, M.B.; Zlot, R.; Kalra, N.; Stentz, A. Market-based multirobot coordination: A survey and analysis. Proc. IEEE 2006, 94, 1257–1270. [Google Scholar] [CrossRef] [Green Version]

- Johnson, L.B.; Choi, H.L.; How, J.P. The role of information assumptions in decentralized task allocation: A tutorial. IEEE Control Syst. Mag. 2016, 36, 45–58. [Google Scholar]

- Kim, M.H.; Kim, S.P.; Lee, S. Social-welfare based task allocation for multi-robot systems with resource constraints. Comput. Ind. Eng. 2016, 63, 994–1002. [Google Scholar] [CrossRef]

- Trigui, S.; Koubaa, A.; Cheikhrouhou, O.; Youssef, H.; Bennaceur, H.; Sriti, M.F.; Javed, Y. A distributed market-based algorithm for the multi-robot assignment problem. Procedia Comput. Sci. 2017, 32, 1108–1114. [Google Scholar] [CrossRef] [Green Version]

- Yin, G.Y.; Zhou, S.L.; He, P.C.; Lei, X.J.; Kang, Y.H. Research status and development trend of cooperative task assignment of multiple UAVs abroad. Maneuverable Missile 2016, 5, 54–58. [Google Scholar] [CrossRef]

- Zhu, Y.; Zhang, T.; Cheng, N. Research on cooperative mission planning of multiple UAVs. J. Syst. Simul. 2009, 20, 194–199. [Google Scholar]

- Han, Q. Research on cooperate task allocation of multiple UAVs based on PSO algorithm. J. Ordnance Equip. Eng. 2019, 40, 74–78. [Google Scholar] [CrossRef]

- Liu, Y. Research on Tasks Assignment and Tracks Planning of Multi-UAVs Cooperative Operation; Shenyang Aerospace University: Shenyang, China, 2019. [Google Scholar]

- Korsah, G.A.; Stentz, A.; Dias, M.B. A comprehensive taxonomy for multi-robot task allocation. Int. J. Robot. Res. 2013, 32, 1495–1512. [Google Scholar] [CrossRef]

- Rostami, A.S.; Mohanna, F.; Keshavarz, H.; Hosseinabadi, A.R. Solving multiple traveling salesman problem using the gravitational emulation local search algorithm. Appl. Math. Inf. Sci. 2015, 9, 1–11. [Google Scholar]

- Omer, J.; Farges, J.L. Hybridization of nonlinear and mixed-integer linear programming for aircraft separation with trajectory recovery. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1218–1230. [Google Scholar] [CrossRef]

- Lincheng, S. Theory and Method of Autonomous Cooperative Control of Multiple UAVs; National Defense Industry Press: Beijing, China, 2013. [Google Scholar]

- Nygard, K.E.; Chandler, P.R.; Pachter, M. Dynamic network flow optimization models for air vehicle resource allocation. In Proceedings of the 2001 American Control Conference, Arlington, VA, USA, 25–27 June 2001; IEEE: Piscataway, NJ, USA, 1963; Volume 3, pp. 1853–1858. [Google Scholar]

- Edison, E.; Shima, T. Integrated task assignment and path optimization for cooperating uninhabited aerial vehicles using genetic algorithms. Comput. Oper. Res. 2011, 38, 340–356. [Google Scholar] [CrossRef]

- Zorlu, O. Routing unmanned aerial vehicles as adapting to capacitated vehicle routing problem with genetic algorithms. In Proceedings of the International Conference on Recent Advances in Space Technologies, Istanbul, Turkey, 16–19 June 2015; IEEE: Piscataway, NJ, USA, 1963; pp. 675–679. [Google Scholar]

- Mun, S.; Cho, Y.H. Modified harmony search optimization for constrained design problems. Expert Syst. Appl. 2012, 39, 419–423. [Google Scholar] [CrossRef]

- Alatas, B. Chaotic harmony search algorithms. Appl. Math. Comput. 2010, 216, 2687–2699. [Google Scholar] [CrossRef]

- Niu, W.J.; Feng, Z.K.; Jiang, Z.Q. Enhanced harmony search algorithm for sustainable ecological operation of cascade hydropower reservoirs in river ecosystem. Environ. Res. Lett. 2021, 16, 110–120. [Google Scholar] [CrossRef]

- Mahalingam, S.K.; Nagarajan, L.; Salunkhe, S. Harmony search algorithm for minimizing assembly variation in non-linear Assembly. Appl. Sci. 2021, 11, 9213. [Google Scholar] [CrossRef]

- Min, J.C. An analysis of children’s mental health based on HIS-FCM. Microcomput. Appl. 2020, 36, 62–64. [Google Scholar]

- Shams, M.; El-Banbi, A.; Sayyouh, H. Harmony search optimization applied to reservoir engineering assisted history matching. Pet. Explor. Dev. 2020, 47, 148–154. [Google Scholar] [CrossRef]

- Tao, Y.S.; Wang, K.X.; Yang, J. Hybridizing information gain and harmony search for feature selection on speech emotion. J. Chin. Comput. Syst. 2017, 38, 1164–1168. [Google Scholar]

- Fu, S.; Wang, H.D. Indoor positioning of wireless network based on harmony search algorithm optimizing neural network. J. Nanjing Univ. Sci. Technol. 2017, 41, 428–433. [Google Scholar]

- Das, S.; Mukhopadhyay, A.; Roy, A. Exploratory power of the harmony search algorithm: Analysis and improvements for global numerical optimization. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2011, 41, 89–106. [Google Scholar] [CrossRef]

- Huange, X.; Quli, M.; Tao, R. Mission assignment planning method for heterogeneous UAV cooperation based on sales contract strategy and PSO algorithm. J. Nav. Univ. Eng. 2018, 30, 1–5. [Google Scholar]

- Zhang, T.; Yan, J.B. Numeric Analysis. Metalurgical; Industry Press: Beijing, China, 2007. [Google Scholar]

- Tizhoosh, H.R. Opposition-based learning: A new scheme for machine intelligence. In Proceedings of the CIMCA/IAWTIC, Vienna, Austria, 28–30 November 2005; IEEE Computers Society: Piscataway, NJ, USA, 1963. [Google Scholar]

- Wu, W.H.; Guo, X.F.; Zhou, S.Y. Self-adaptive differential algorithm with random neighborhood-based strategy and generalized opposition-based learning. Syst. Eng. Electron. 2021, 43, 1928–1942. [Google Scholar]

- Rahnamayan, S.; Tizhoosh, H.R.; Salama, M. Opposition-based differential evolution. IEEE Trans. Evolut. Comput. 2018, 12, 6479. [Google Scholar]

- Zhang, Y.; Yuan, S.J.; Da, L.X. Adaptive opposition-based learning cuckoo algorithm based on local search enhancement strategy. Math. Pract. Theory 2020, 50, 191–200. [Google Scholar]

- Mahdavi, M.; Fesanghary, M.; Damangir, E. An improved harmony search algorithm for solving optimization problems. Appl. Math. Comput. 2007, 188, 1567–1579. [Google Scholar] [CrossRef]

- Omran, M.G.H.; Mahdavi, M. Global-best harmony search. Appl. Math. Comput. 2008, 198, 643–656. [Google Scholar] [CrossRef]

- Wang, C.M.; Huang, Y.F. Self-adaptive harmony search algorithm for optimization. Expert Syst. Appl. 2010, 37, 2826–2837. [Google Scholar] [CrossRef]

- Pan, Q.K.; Suganthan, P.N.; Tasgetiren, M.F.; Liang, J.J. A self-adaptive global best harmony search algorithm for continuous optimization problems. Appl. Math. Comput. 2010, 216, 830–848. [Google Scholar] [CrossRef]

- Zou, D.; Gao, L.; Wu, J.; Li, S. Novel global harmony search algorithm for unconstrained problems. Neurocomputing 2010, 73, 3308–3318. [Google Scholar] [CrossRef]

- Chen, J.; Pan, Q.; Li, J. Harmony search algorithm with dynamic control parameters. Appl. Math. Comput. 2012, 219, 592–604. [Google Scholar] [CrossRef]

- Yadav, P.; Kumar, R.; Panda, S.K.; Chang, C.S. An intelligent tuned harmony search algorithm for optimization. Inf. Sci. 2012, 196, 47–72. [Google Scholar] [CrossRef]

- Scerri, P.; Farinelli, A.; Okamoto, S.; Tambe, M. Allocating tasks in extreme teams. In Proceedings of the 4th International Joint Conference on Autonomous Agents and Multiagent Systems, Utrecht, The Netherlands, 25–29 July 2005; ACM: New York, NY, USA, 1947; pp. 727–734. [Google Scholar]

- Ferreira, P.R., Jr.; Boffo, F.S.; Bazzan, A.L. A swarm based approximated algorithm to the extended generalized assignment problem (e-gap). In Proceedings of the 6th International Joint Conference on Autonomous Agents And Multiagent Systems, AAMAS, Honolulu HI, USA, 14–18 May 2007; ACM: New York, NY, USA, 1947; pp. 1231–1233. [Google Scholar]

- Ferreira, P.R., Jr.; Dos Santos, F.; Bazzan, A.L.; Epstein, D.; Waskow, S.J. RoboCup Rescue as multiagent task allocation among teams: Experiments with task interdependencies. Auton. Agents Multi-Agent Syst. 2010, 20, 421–443. [Google Scholar] [CrossRef]

- Ikemoto, Y.; Miura, T.; Asama, H. Adaptive division of labor control for robot group. In Proceedings of the International Conference on Intelligent Robot and Systems, St. Louis, MO, USA, 11–15 October 2009; pp. 2409–2414. [Google Scholar]

- Schwarzrock, J.; Zacarias, I.; Bazzan, A.L.C.; de Araujo Fernandes, R.Q.; Moreira, L.H.; de Freitas, E.P. Solving task allocation problem in multi unmanned aerial vehicles systems using swarm intelligence. Eng. Appl. Artif. Intell. 2018, 72, 10–20. [Google Scholar] [CrossRef]

- Amorim, J.C.; Alves, V.; de Freitas, E.P. Assessing a swarm-GAP based solution for the task allocation problem in dynamic scenarios. Expert Syst. Appl. 2020, 152, 113437. [Google Scholar] [CrossRef]

- Landén, D.; Heintz, F.; Doherty, P. Complex task allocation in mixed-initiative delegation: A UAV case study. In Proceedings of the International Conference on Principles and Practice of Multi-Agent Systems, Kolkata, India, 12–15 November 2010; Springer: Berlin/Heidelberg, Germany, 2012; pp. 288–303. [Google Scholar]

- Ibri, S.; Nourelfath, M.; Drias, H. A multi-agent approach for integrated emergency vehicle dispatching and covering problem. Eng. Appl. Artif. Intell. 2012, 5, 554–565. [Google Scholar] [CrossRef]

- Tolmidis, A.T.; Petrou, L. Multi-objective optimization for dynamic task allocation in a multi-robot system. Eng. Appl. Artif. Intell. 2013, 26, 1458–1468. [Google Scholar] [CrossRef]

- Shima, T.; Rasmussen, S.J.; Sparks, A.G.; Passino, K.M. Multiple task assignments for cooperating uninhabited aerial vehicles using genetic algorithms. Comput. Oper. Res. 2006, 33, 3252–3269. [Google Scholar] [CrossRef]

- Fei, S.; Yan, C.; Lin, S. Collaborative multi-task assignment of unmanned aerial vehicles based on ant colony algorithm. Chin. J. Aeronaut. 2008, 1, 189–196. [Google Scholar]

- Wen, G. Research and Application of Target Assignment Problem Based on Evolutionary Hungarian Algorithm; Xidian University: Xi’an, China, 2013. [Google Scholar]

- Jin, Z.; Hao, G.; Tong, C. Weapon-target assignment based on adaptable hungarian algorithm. Acta Armamentarh 2021, 42, 1339–1344. [Google Scholar]

- Bellingham, J.; Tillerson, M.; Richards, A.; How, J.P. Multi-task allocation and path planning for cooperating UAVs. In Cooperative Control: Models, Applications and Algorithms; Springer: Boston, MA, USA, 2003; pp. 23–41. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).