Abstract

Solid-state drives (SSDs) are rapidly replacing hard disk drives (HDDs) in many applications owing to their advantages, such as higher speed, lower power consumption, and smaller size. NAND flash memories, the storage devices used in SSDs, require garbage collection (GC) to reclaim storage space wasted by obsolete data. GC is a major source of performance degradation because it greatly increases SSD latency: read or write requests issued by users while GC is in progress can take significantly longer to complete. Reducing the frequency of GC while meeting the storage space requirement would be an ideal remedy, but a certain minimum number of GC operations is unavoidable to reclaim storage space. The alternative is to reduce the performance overhead of GC rather than its frequency. Following the latter approach, this paper proposes a new GC scheme that reduces GC overhead by intelligently controlling the priorities among read/write and GC operations. Experimental results show that the proposed scheme consistently improves overall latency across various workloads.

1. Introduction

Solid-state drives (SSDs) offer advantages in speed, power consumption, and size over hard disk drives (HDDs), and are therefore gaining wide acceptance in many applications [1]. However, a critical weakness of SSDs is that in-place overwrites are not feasible: obsolete data must be erased before new data can be written to the same space. This restriction stems from the inherent characteristics of the NAND flash memory devices that make up SSDs [2,3]. Another critical limitation of NAND flash memory is that erase operations can be performed only in units of several hundred kilobytes, called blocks.

As data in a block are repeatedly deleted and rewritten, obsolete data occupy storage until the block is initialized by a block-erase operation [4], which wastes significant storage space. To reclaim this space, valid data from multiple blocks are copied to a new block, and the old blocks are then initialized by erasing their contents entirely. This process is called garbage collection (GC). Consequently, the number of write operations actually applied to an SSD exceeds the number issued by the host. Although GC improves storage utilization [5,6], it adversely affects the lifetime and performance of an SSD.

A simple yet effective way to extend SSD lifetime is to accurately predict the lifetime of data, that is, the interval between the creation of the data and its invalidation by deletion or modification. To minimize GC frequency, pages with similar lifetimes can be grouped in the same block. This is the basic concept of a multi-stream drive, where a stream refers to a block that contains pages with similar lifetimes. However, this simple idea is difficult to implement because the lifetime of the data on each page is hard to predict. Therefore, much recent research has aimed to improve the accuracy of lifetime prediction for multi-stream drives [6,7].

Garbage collection is a source of occasional long latencies in accessing data in SSDs. Its inherent limitation is that some parts of the SSD must stop regular read or write operations while GC is being applied, so reducing the performance degradation due to GC has been actively investigated. Depending on the implementation, GC can block read/write operations to the whole SSD, to a specific channel, or to a specific plane; these are called controller blocking, channel blocking, and plane blocking, respectively, in [8]. The overhead of managing the blocking operation is one of the key issues. The finer the level of blocking, the lower the penalty due to GC, because when GC occurs in a block, a finer blocking level leaves more blocks unaffected by GC and thus accessible for regular read/write operations. Channel blocking is a simple and safe option, but at the expense of a high performance penalty.

An alternative way to reduce GC overhead is to interrupt GC when a new RW operation needs to access the SSD unit to which GC is being applied [9]. This approach is ideal for performance because RW operations are not delayed by GC. Unfortunately, such pre-emption can seriously compromise data integrity unless the pre-emption schedule is carefully controlled.

In this paper, we propose an algorithm that reduces the overhead caused by GC by allowing host-generated R/W operations and GC to coexist. To this end, we model SSD operation with per-channel queues that separately manage host requests and GC-generated operations. Host-request queues are given the highest priority, which reduces the latency seen by the host. In addition, by lowering the blocking level to a single block, the algorithm keeps the blocks of the SSD that are not under GC accessible. The algorithm is described in detail in Section 4.

2. Background

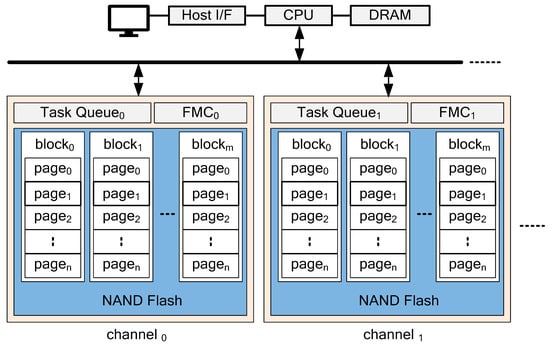

The basic operation unit of a NAND flash memory device is a page: read and write operations are performed only in units of pages. The page size ranges from 4 KB to 16 KB in most NAND flash memories [10,11]. An erase operation is performed in units of a block, which consists of multiple pages. A group of blocks is called a plane, and a die contains one or more planes. The die is the physical unit of NAND flash memory. To increase overall performance, most SSDs connect multiple NAND flash dies to the controller through independent channels, as shown in Figure 1 [12]. Various techniques, such as channel striping, flash-chip pipelining, die interleaving, and plane sharing, have been proposed to exploit the parallelism of multiple channels. All of these techniques require a dedicated flash memory controller for each channel to amortize the latency of flash memory [13].

Figure 1.

Multi-channel SSD configuration (FMC: Flash Memory Controller).

A conventional file-write procedure in a multi-channel SSD is as follows. When the host issues a write request for a file, the controller receives the file data from the host and stores them in a temporary buffer inside the SSD controller. The flash translation layer (FTL) sits between the host interface and the flash memory controllers and handles various tasks, including address mapping. Distributing a single file over multiple channels requires intelligent algorithms to optimize system-level metrics such as speed and lifetime, so most modern SSDs include multiple CPUs to handle such tasks. Once pages are assigned to their flash memory channels, the page-write tasks are queued in the task queue associated with each channel. Hardware logic then performs the read or write operations page by page [14].
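The text does not say how the FTL assigns the pages of a file to channels. Purely as an illustration, the following Python sketch assumes a simple round-robin (striping) policy; `stripe_file_write` and the `("W", file_id, page_index)` task format are hypothetical names, not part of the described controller.

```python
from collections import deque

NUM_CHANNELS = 4  # channel count of the modeled SSD (Table 1)

def stripe_file_write(file_id, num_pages, task_queues):
    """Split a file write into page-level write tasks and append them to the
    per-channel task queues in round-robin order (an assumed policy)."""
    for page_index in range(num_pages):
        channel = page_index % len(task_queues)
        task_queues[channel].append(("W", file_id, page_index))

# Usage: a 10-page file spread over 4 channel task queues.
queues = [deque() for _ in range(NUM_CHANNELS)]
stripe_file_write(file_id=7, num_pages=10, task_queues=queues)
print([len(q) for q in queues])  # [3, 3, 2, 2]
```

Round-robin keeps every channel busy for large files; a real FTL may instead weigh channel load or block availability.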

For a file-read request from the host, the logical addresses of the file are translated into physical addresses in the SSD, each corresponding to a page in flash memory. Page-level read operations are stacked in the task queue associated with each channel. The flash memory controller reads out each page and stores the data in the temporary buffer inside the SSD controller. When all pages have arrived in the buffer, the entire file is sent to the host [15].

Erase operations in flash memory differ from those in other storage media, such as hard disks. A flash memory cell must be erased before it can be written again, and because of the physical structure of flash memory, an erase operation can be performed only on a whole block. If the data in one page of a block become obsolete, the page is marked invalid until the entire block is erased.

Because of the physical characteristics of the device, an overwrite in flash memory must be preceded by an erase. Therefore, when a page in a block is updated, the page holding the existing data is invalidated and the new data are written to another free page.

Under this mechanism, an SSD can accumulate many blocks containing many invalid pages, which wastes significant storage. To reclaim the space, blocks with many invalid pages are initialized by garbage collection: the valid pages of each GC target block are copied to another block, a step called copy-back, and the whole block is then erased [3,16]. This reclaims storage space at the expense of SSD execution time and lifetime.
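The GC steps just described (choose a target block, copy back its valid pages, erase it) can be sketched in Python as below. The victim-selection rule is an assumption: the text only says blocks "with many invalid pages" are chosen, so the sketch uses the common greedy policy (most invalid pages) restricted to blocks above the 70% invalid threshold cited from [2]. `Block`, `pick_victim`, and `garbage_collect` are hypothetical names.

```python
from dataclasses import dataclass, field

PAGES_PER_BLOCK = 128      # pages per block in the modeled SSD (Table 1)
INVALID_THRESHOLD = 0.70   # GC-eligibility ratio, following [2]

@dataclass
class Block:
    # Each page is "free", "valid", or "invalid".
    pages: list = field(default_factory=lambda: ["free"] * PAGES_PER_BLOCK)

    def invalid_ratio(self):
        return self.pages.count("invalid") / len(self.pages)

def pick_victim(blocks):
    """Greedy selection (an assumption): among blocks whose invalid ratio
    exceeds the threshold, return the one with the most invalid pages."""
    candidates = [b for b in blocks if b.invalid_ratio() > INVALID_THRESHOLD]
    return max(candidates, key=Block.invalid_ratio, default=None)

def garbage_collect(victim, free_block):
    """Copy back every valid page of `victim` into free pages of
    `free_block`, then erase `victim`. Returns the number of pages copied."""
    copied = 0
    for state in victim.pages:
        if state == "valid":
            # index() raises ValueError if free_block has no free page left.
            free_block.pages[free_block.pages.index("free")] = "valid"
            copied += 1
    victim.pages = ["free"] * len(victim.pages)  # block erase
    return copied

# Usage: block a (78% invalid) is chosen over block b (39% invalid).
a = Block(["invalid"] * 100 + ["valid"] * 28)
b = Block(["invalid"] * 50 + ["valid"] * 78)
spare = Block()
victim = pick_victim([a, b])
copied = garbage_collect(victim, spare)
print(victim is a, copied)  # True 28
```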

Based on parameters from previous studies [17,18], an HDD takes 14.2 ms to read and 15.1 ms to write 4 KB, whereas an SSD takes 25 μs to read and 230 μs to write 4 KB [19].

Consider the time required to perform GC on one block in a channel. GC can be applied to a block when more than 70% of its pages are invalid [2]. Copying back the remaining 30% of the pages and erasing the block take 9.8 ms and 0.7 ms, respectively [19], so GC of one block takes 10.5 ms in total.
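The 9.8 ms and 10.5 ms figures can be reproduced with a short calculation. The Python sketch below assumes 128 pages per block (the Table 1 value) and that copying back one page costs one page read plus one page write; both are assumptions about how the estimate was derived.

```python
PAGES_PER_BLOCK = 128   # Table 1
T_READ_US = 25          # page read time
T_WRITE_US = 230        # page write time
T_ERASE_US = 700        # block erase time (0.7 ms)
VALID_FRACTION = 0.30   # GC starts once more than 70% of pages are invalid

def gc_time_us(pages_per_block=PAGES_PER_BLOCK, valid_fraction=VALID_FRACTION):
    """Return (copy-back time, total GC time) for one block in microseconds.
    Each valid page is assumed to cost one page read plus one page write."""
    valid_pages = valid_fraction * pages_per_block          # 38.4 pages
    copy_back = valid_pages * (T_READ_US + T_WRITE_US)
    return copy_back, copy_back + T_ERASE_US

copy_back, total = gc_time_us()
print(f"copy-back {copy_back / 1000:.1f} ms, total {total / 1000:.1f} ms")
# prints: copy-back 9.8 ms, total 10.5 ms
```

That is, 38.4 pages × 255 μs ≈ 9.79 ms of copy-back, plus 0.7 ms for the erase.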

SSDs are well known to be much faster than HDDs for basic read and write operations. However, the overhead of erase operations, overwrites, and garbage collection sometimes makes SSDs slower than HDDs [12]. If a read or write operation must wait behind multiple GCs, its SSD access time can be significantly longer than that of an HDD. An intelligent algorithm that reduces or hides GC overhead is therefore essential for SSDs.
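A back-of-the-envelope check of this claim, using the figures above (14.2 ms HDD read, 25 μs SSD read, 10.5 ms per block GC), is sketched in Python below. It assumes a simplified worst case in which a read must wait for whole block GCs on its channel, with no pre-emption and no other traffic; the function names are hypothetical.

```python
HDD_READ_US = 14_200   # 4 KB HDD read
SSD_READ_US = 25       # 4 KB SSD page read
GC_BLOCK_US = 10_500   # GC of one block (copy-back + erase)

def ssd_read_latency_us(gcs_ahead):
    """Read latency when the read must wait for `gcs_ahead` complete block
    GCs on its channel (no pre-emption, no other traffic assumed)."""
    return gcs_ahead * GC_BLOCK_US + SSD_READ_US

def min_gcs_to_exceed_hdd():
    """Smallest number of queued GCs that makes the SSD read slower than HDD."""
    k = 0
    while ssd_read_latency_us(k) <= HDD_READ_US:
        k += 1
    return k

print(ssd_read_latency_us(1), min_gcs_to_exceed_hdd())  # 10525 2
```

Under these assumptions, a single pending GC already makes an SSD read roughly 400 times slower than an idle one, and two pending GCs make it slower than an HDD read.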

3. Related Work

3.1. RW Blocking Level

GC can block regular read/write operations at the level of the entire SSD (controller), a channel, a plane, or a block; this is called blocking [8]. If the blocking unit could be reduced to a block, which is the unit of GC, performance would improve greatly. However, because of the physical structure of the SSD, additional mechanisms are needed to lower the blocking level to a single block: a plane has only one register, which all blocks in the plane share when a page is written or read. Therefore, pre-emption or some other way of pausing GC midway is required to lower the blocking level to the block unit.
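To make the blocking levels concrete, the following Python sketch decides whether a regular R/W request must stall while a given block is under GC. It models only the logical rule; it ignores the shared plane register discussed above (which is why block-level blocking needs extra mechanisms), and it omits dies. `Level`, `Addr`, and `is_blocked` are hypothetical names.

```python
from enum import Enum
from typing import NamedTuple

class Level(Enum):
    CONTROLLER = 0   # whole SSD stalls during any GC
    CHANNEL = 1
    PLANE = 2
    BLOCK = 3

class Addr(NamedTuple):
    channel: int
    plane: int   # plane index within the channel
    block: int   # block index within the plane

def is_blocked(req: Addr, gc_target: Addr, level: Level) -> bool:
    """True if a regular R/W to `req` must wait while `gc_target` is under GC."""
    if level is Level.CONTROLLER:
        return True
    if req.channel != gc_target.channel:
        return False
    if level is Level.CHANNEL:
        return True
    if req.plane != gc_target.plane:
        return False
    if level is Level.PLANE:
        return True
    return req.block == gc_target.block   # Level.BLOCK

gc = Addr(channel=0, plane=3, block=100)
neighbor = Addr(channel=0, plane=3, block=101)   # same plane, other block
print([is_blocked(neighbor, gc, lv) for lv in Level])  # [True, True, True, False]
```

Only block-level blocking lets a neighboring block in the same plane proceed.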

3.2. Pre-Emption

GC on a block takes a long time, and in conventional SSDs, regular read or write operations to the same block must wait until the GC is complete to ensure data consistency. A scheme that lets regular read or write operations interrupt an ongoing GC without causing data inconsistency could significantly improve overall SSD performance. Unfortunately, pre-emption may cause data inconsistency unless it is designed very carefully. The authors of [9] claim that GC can be interrupted after the read or write operations of a GC copy-back. This is based on the idea that valid data can be accessed at their new location as long as the metadata, such as the address mapping information, have been updated. When the host generates a new read or write request, the host checks whether the request involves any block to which GC is being applied. If it does, the host also checks the precise GC status to determine whether the metadata update is finished. This scheme may reduce GC overhead because regular read or write operations do not wait until the GC target block is erased. However, frequent status checks on GC may incur non-trivial overhead, especially when the scheme is implemented in software.

4. Proposed Garbage Collection Algorithm

Garbage collection is essential to reclaim wasted storage in SSDs, but it may increase SSD’s response time to regular read or write requests from users. In this section, we propose a strategy to reduce the delay penalty due to garbage collection.

4.1. Motivation

Within a single die, only one access can be in progress at a time; multiple parts of a die cannot be accessed concurrently. Therefore, the plane is conventionally the finest level of blocking.

We argue that plane blocking and block blocking affect performance differently: under block blocking, multiple blocks in a single plane can be accessed in flexible order, whereas under plane blocking, only the GC target block can be accessed once GC is applied to the plane.

Suppose block B in plane P is the GC target. Under plane blocking, the SSD controller blocks R/W operations to all of P until the ongoing GC is complete. Under block blocking, by contrast, only B is blocked, and the other blocks in P remain accessible. As mentioned earlier, however, only a single block can physically be accessed at a time, so block blocking is no different from plane blocking unless pre-emption is allowed. We implement a limited form of pre-emption using priority queues, described in Section 4.3. This allows GC on plane P to pause while another block in P is accessed for an R/W operation.

The major source of performance degradation is that all RW operations to and from a channel stall whenever at least one block in the channel is under GC. That is, if a single block is undergoing GC, normal RW for the whole channel is stalled until the GC operation is finished.

One way to avoid this penalty is to pre-empt GC, that is, to interrupt the ongoing GC until the normal RW operation is complete. However, this approach is practically infeasible because of data hazards. In particular, most modern SSD controllers are built around a network-on-chip (NoC), so every transaction is distributed over multiple masters and slaves on the chip. Ensuring data integrity is very challenging if multiple operations, such as GC and normal RW, are interleaved.

We solve this problem with two strategies. First, GC is never interrupted at the block level: the channel may be shared between GC and normal operations, but a block is not. Once one type of operation begins on a block, the other type must wait until the ongoing operation is complete. Second, when both normal RW operations and GC-driven RW operations for copy-back are pending on the same channel, normal RW operations are given priority. As long as these operations do not conflict on the same block, they can be executed in any order, so serving normal RW operations first reduces SSD latency.

4.2. Block-Level Blocking

In a blocking scheme, R/W and GC operations are mutually exclusive: a new GC cannot be initiated while a normal R/W operation is being executed, and R/W operations cannot be initiated while GC is being applied. This simple rule avoids data hazards. Previous GC algorithms mostly used channels as the blocking unit, presumably because channel blocking is simple to implement. However, the performance penalty of blocking is severe because a user's R/W request must wait whenever a GC, which takes a relatively long time, is underway. The penalty is higher in multi-channel SSDs, where pages are distributed over multiple channels, because an R/W operation cannot complete if any of its channels is undergoing GC.

We propose block-level blocking, which lowers the blocking granularity to a single block: within a block, RW and GC operations cannot be in progress at the same time. This keeps all other blocks in the same channel available for RW operations as long as they are not under GC. Realizing this seemingly simple idea requires an effective mechanism to manage the availability of each block for R/W and GC.

Before presenting the details of our block-blocking GC, we briefly describe the address mapping scheme of the SSD controller. Conventional address mapping algorithms are described in previous studies [20,21]; we instead use the hardware-based address mapping table introduced in our previous work [22].

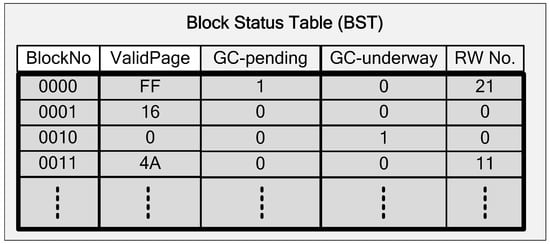

Each block has an entry in the Block Status Table (BST), which records the number of valid pages, the GC status, and the number of pending RW operations (RW No.).

The GC status of a block is either GC-underway or GC-pending. GC-underway means that GC is being executed on the block, and new regular R/W operations must wait to enter the task queue until the GC is complete. GC-pending means that regular read or write tasks were already queued when a new GC was initiated on the same block, so GC-related read or write requests must wait until the ongoing regular operations are complete.

The GC management logic monitors the number of valid pages and sets the GC-pending bit. When the GC-pending bit is one and RW No. is zero, GC is initiated and the status of the block changes to GC-underway.

The W-management logic checks whether the target block is in the GC-underway state. If it is, the logic searches for a new target block and issues the W request there. If not, the logic increments RW No. and enqueues the W request in the corresponding task queue.

Care must be taken to prevent a new GC and a new RW from being registered in the BST simultaneously. The GC-pending flag prevents this, because a new RW is not registered while GC-pending is set. Figure 2 shows the structure of the BST.
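The BST bookkeeping described above can be sketched in Python as follows. The fields mirror the text (valid pages, GC-pending, GC-underway, RW No.), but the Python names, the split of the GC management logic into `request_gc` and `try_start_gc`, and the convention that `w_management` returns `False` so the caller picks another block are hypothetical. GC eligibility is left to the caller, and reads are not modeled here.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class BstEntry:
    valid_pages: int = 0       # monitored by the GC management logic
    gc_pending: bool = False
    gc_underway: bool = False
    rw_no: int = 0             # pending regular R/W operations (RW No.)

def request_gc(entry):
    """GC management, step 1: flag a GC-eligible block as GC-pending."""
    if not entry.gc_underway:
        entry.gc_pending = True

def try_start_gc(entry):
    """GC management, step 2: start GC once RW No. has drained to zero."""
    if entry.gc_pending and entry.rw_no == 0:
        entry.gc_pending = False
        entry.gc_underway = True
        return True
    return False

def w_management(bst, block_id, request, task_queue):
    """Admit a write to `block_id`, or return False so the caller can pick
    another target block. New writes are refused while GC is pending or
    underway, so a new GC and a new RW are never registered together."""
    entry = bst[block_id]
    if entry.gc_pending or entry.gc_underway:
        return False
    entry.rw_no += 1
    task_queue.append((block_id, request))
    return True

def rw_done(entry):
    entry.rw_no -= 1

def gc_done(entry):
    entry.gc_underway = False
    entry.valid_pages = 0      # block erased

# Usage: GC on block 0 waits for its outstanding write, then starts.
bst = {0: BstEntry(valid_pages=30), 1: BstEntry(valid_pages=100)}
queue = deque()
w_management(bst, 0, "W1", queue)        # accepted, RW No. = 1
request_gc(bst[0])                        # block 0 becomes GC-pending
print(try_start_gc(bst[0]))               # False: a write is outstanding
print(w_management(bst, 0, "W2", queue))  # False: redirect W2 elsewhere
w_management(bst, 1, "W2", queue)         # accepted on block 1
rw_done(bst[0])
print(try_start_gc(bst[0]))               # True: block 0 is now GC-underway
```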

Figure 2.

Block status table configuration.

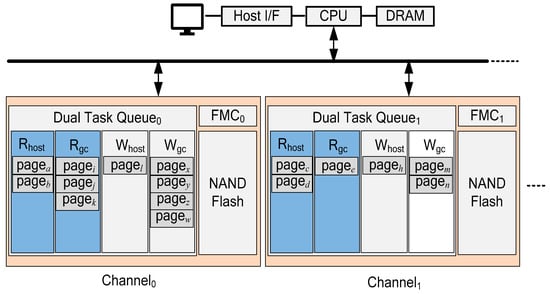

4.3. Dual-Task Queues (DTQ) for Priority Control

In many commercial SSD controller SoCs, read, write, and GC requests are stored in their respective task queues, and dedicated hardware executes the individual operations in sequence. GC is applied in units of a block because NAND flash erase operates on blocks, but most of the GC time is spent on copy-back operations. In our modeled SSD, a page write takes 230 μs, a page read 25 μs, and a block erase 0.7 ms [15]. Note that the page-write and page-read operations in the task queues can be executed in any order, regardless of whether they serve normal RW or GC: because block-level blocking is enforced, the presence of read and write operations in the task queue implies that they do not conflict.

To expedite host-generated regular read operations, we propose separate queues for regular reads and GC-oriented reads, with priority given to regular reads. That is, if regular reads and reads for GC copy-back are both waiting to be executed on the same block, the regular reads are executed first to reduce their latency.

Figure 3 shows the configuration of our proposed multi-channel SSD. Each channel contains a Dual Task Queue (DTQ). For each read or write request, the DTQ determines whether the operation was generated by the host or by GC and places the page operation in the corresponding queue.
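The priority rule of the DTQ can be illustrated with a small Python simulation of one channel. For brevity it collapses the per-operation queues of Figure 3 into one host FIFO and one GC FIFO, assumes all tasks are queued at time zero, and assumes the BST has already ruled out conflicting tasks; `DualTaskQueue`, `finish_times`, and the task tuples are hypothetical names.

```python
from collections import deque

SERVICE_US = {"R": 25, "W": 230}   # page read / page write times

class DualTaskQueue:
    """One channel's task queues: host-generated tasks are always dequeued
    before GC-generated (copy-back) tasks; each queue is FIFO."""
    def __init__(self):
        self.host = deque()
        self.gc = deque()

    def push(self, task, from_host):
        (self.host if from_host else self.gc).append(task)

    def pop(self):
        if self.host:
            return self.host.popleft()
        if self.gc:
            return self.gc.popleft()
        return None

def finish_times(dtq):
    """Serve all queued tasks back to back on one channel, starting at t=0,
    and return {task: completion time in microseconds}."""
    t, done = 0, {}
    while (task := dtq.pop()) is not None:
        t += SERVICE_US[task[0]]
        done[task] = t
    return done

gc_tasks = [("R", "gc", 0), ("W", "gc", 0), ("R", "gc", 1), ("W", "gc", 1)]
host_read = ("R", "host", 0)

dtq, fifo = DualTaskQueue(), DualTaskQueue()
for task in gc_tasks:
    dtq.push(task, from_host=False)
    fifo.push(task, from_host=False)
dtq.push(host_read, from_host=True)
fifo.push(host_read, from_host=False)  # baseline: one shared FIFO

dtq_done, fifo_done = finish_times(dtq), finish_times(fifo)
print(dtq_done[host_read], fifo_done[host_read])  # 25 535
```

In this toy case, the host read finishes after 25 μs instead of 535 μs, while the GC copy-back as a whole still completes at 535 μs; only the order changes.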

Figure 3.

Configuration of the proposed multi-channel SSD algorithm.

Note that write operations are not allowed to a block while GC is being applied to it, for the following reasons.

Suppose a copy-back of a page is executed, so that the corresponding page in the GC target block becomes invalid. The valid page data have then moved to a different block, and the metadata, including the page list of the file to which the page belongs, must be updated before a rewrite of the file is initiated.

Conversely, if a rewrite is executed on a valid page before a pending copy-back is applied to it, the page becomes invalid and the pending copy-back becomes obsolete. Unless a sophisticated scheme cleans up such obsolete tasks, this can lead to data inconsistency.

For these reasons, the DTQ accepts only regular read requests for a block while GC is being applied to that block. Because read operations are much more frequent than rewrite operations, we expect a significant performance gain even though the benefit of the DTQ is limited to reads.

Note that Whost remains part of the DTQ, as shown in Figure 3, because it stores regular write requests while GC is not being applied. That is, Whost stops accepting requests only during the GC phase.

5. Evaluation

5.1. Experimental Setup

We modeled the SSD in C to evaluate the efficiency of the Dual-Queue Garbage Collection (DQGC) algorithm, referring to existing studies [23,24,25,26] in designing the simulator. The simulator replays trace files consisting of host commands, each with its LBA, operation type, size, and arrival time. The trace files were created by storing and reading the dummy data of various benchmark workloads on an SSD and extracting the required information using ftrace [27], blktrace, and blkparse [28]. The SSD used in the experiment was a Samsung T5 Portable SSD (1 TB). The host ran Red Hat Enterprise Linux Workstation release 7.9 with Linux kernel 3.10.0-1160.36.2.el7.x86_64 on an Intel(R) Core™ i7-9700K CPU with 32 GB of RAM.

The key feature of the simulator is the prioritization between tasks requested by the host and by GC: when host-generated and GC-generated R/W operations coexist on the same channel, the host-generated operations are performed first, followed by the GC-generated ones. GC requests were modeled using the GC policies described in [1,2,4,20] and Algorithm 1, and host requests were modeled using Algorithms 2 and 3.

Algorithm 1: Enqueue CopyBack request to DualTaskQueue GC

Algorithm 2: Enqueue file-write request to DualTaskQueue

Algorithm 3: Enqueue file-read request to DualTaskQueue

Table 1 lists the parameters of the flash memory in the modeled SSD, which has 4 channels, 8 planes per channel, 2048 blocks per plane, and 128 pages per block. Read, write, and erase times are 25 μs, 230 μs, and 0.7 ms, respectively [15].
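With the Table 1 geometry, a flat physical page number can be split into (channel, plane, block, page) fields. The Python sketch below is illustrative only: the text does not specify the physical numbering, so the channel-fastest layout is an assumption, dies are not modeled, and `PhysAddr`, `decompose`, and `compose` are hypothetical names.

```python
from typing import NamedTuple

CHANNELS, PLANES_PER_CH, BLOCKS_PER_PLANE, PAGES_PER_BLOCK = 4, 8, 2048, 128
TOTAL_PAGES = CHANNELS * PLANES_PER_CH * BLOCKS_PER_PLANE * PAGES_PER_BLOCK

class PhysAddr(NamedTuple):
    channel: int
    plane: int
    block: int
    page: int

def decompose(ppn: int) -> PhysAddr:
    """Split a flat physical page number into (channel, plane, block, page).
    The channel index varies fastest, so consecutive page numbers land on
    different channels (an assumed layout)."""
    if not 0 <= ppn < TOTAL_PAGES:
        raise ValueError("physical page number out of range")
    rest, channel = divmod(ppn, CHANNELS)
    rest, page = divmod(rest, PAGES_PER_BLOCK)
    plane, block = divmod(rest, BLOCKS_PER_PLANE)
    return PhysAddr(channel, plane, block, page)

def compose(addr: PhysAddr) -> int:
    """Inverse of decompose()."""
    return ((addr.plane * BLOCKS_PER_PLANE + addr.block) * PAGES_PER_BLOCK
            + addr.page) * CHANNELS + addr.channel

print(TOTAL_PAGES)   # 8388608
print(decompose(5))  # PhysAddr(channel=1, plane=0, block=0, page=1)
```

The modeled SSD therefore holds 8,388,608 pages; its capacity depends on the page size listed in Table 1.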

Table 1.

Parameters of SSD configuration.

5.2. Trace File Analysis

Table 2 shows the characteristics of the trace files extracted from the six database workloads used in our experiment. Each trace file was extracted after installing the database and setting it up according to the manual of each benchmark program: MySQL with sysbench [29,30]; Cassandra [32], MongoDB [33], and RocksDB [34] with the Yahoo Cloud Serving Benchmark (YCSB) [31]; and SQLite and Dbench from the Phoronix Test Suite [35]. We analyzed the trace files because the different access patterns of each benchmark could affect the simulation results. To determine whether reads or writes suffer more from GC, we examined the ratio of read to write operations in each trace file. The YCSB traces (Cassandra, MongoDB, and RocksDB) had a higher read ratio than write ratio, whereas the traces of the remaining three benchmarks were overwhelmingly write-dominated. In YCSB, after a table holding the data is created on the server, data can be loaded and run with a user-specified ratio of new (new write), update (re-write), and read operations. In the load phase, we tracked the read commands and extracted them to the trace file; in the run phase, we tracked the write commands and extracted them. Thus, YCSB lets the user set the operation ratio to emphasize the desired operation when generating a trace file. We used the default setting of 10 tables holding 30,000,000 dummy data entries so that they could be loaded and run on the SSD, and extracted the trace with a new:update:read ratio of 0.25:0.25:0.50. For MySQL, sysbench creates tables of dummy data and then runs the benchmark, but without YCSB's separate load and run phases. SQLite and Dbench in the Phoronix Test Suite take the number of threads and copies, rather than tables, as input and perform insertions first.
Consequently, for the benchmarks other than YCSB, commands storing table data to the SSD dominated and the read ratio could not be set, so the extracted trace files had a high write ratio. Sequential and random requests were classified according to the storage workload identification criteria described in [36,37].

Table 2.

Characteristics of trace files.

5.3. Results

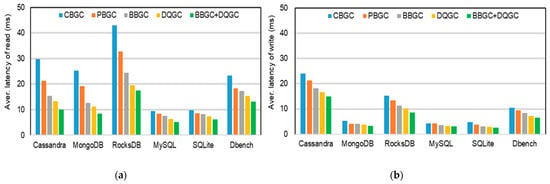

We compared Channel Blocking GC (CBGC) and Plane Blocking GC (PBGC), presented in [8], with our Block Blocking GC (BBGC), Dual-Queue GC (DQGC), and the combination of BBGC and DQGC. We first measured the average file latency, where the latency of a file is the interval between the host request and the time all of its pages arrive at their destination: the flash memories for a file write, or the host-side buffer for a file read. The proposed algorithms reduced latency significantly.
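The per-file latency defined above, and the summary statistics reported in Figures 4 and 5 (average and standard deviation), can be computed as in the following Python sketch. The text does not say whether the population or sample standard deviation was used; the sketch assumes the population form (`statistics.pstdev`). `file_latency` and `summarize` are hypothetical names.

```python
from statistics import mean, pstdev

def file_latency(request_time, page_done_times):
    """Interval from the host request until the file's last page arrives
    (in flash for a write, in the host-side buffer for a read)."""
    return max(page_done_times) - request_time

def summarize(files):
    """files: list of (request_time, [page completion times]).
    Returns (average latency, population standard deviation)."""
    latencies = [file_latency(t0, done) for t0, done in files]
    return mean(latencies), pstdev(latencies)

# Two files with latencies of 10 and 30 time units.
files = [(0, [4, 10]), (5, [20, 35])]
print(summarize(files))  # (20, 10.0)
```

Because a file completes only when its slowest page arrives, a single page stuck behind GC determines the latency of the whole file.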

Figure 4 compares the average read and write latencies of our methods with those of the existing GC methods. With our algorithms, both read and write performance improved for most trace files.

Figure 4.

Average latency comparison. (a) Read, (b) Write (CBGC = Channel Blocking GC; PBGC = Plane Blocking GC [8]; BBGC = Block Blocking GC; DQGC = Dual-Queue GC).

In more detail, for reads, our block-blocking method described in Section 4.2 lowers the GC blocking level to a single block. Because fewer host requests were blocked by GC, performance improved over the existing CBGC (Channel-Blocking GC) and PBGC (Plane-Blocking GC). DQGC (Dual-Queue GC), which adds the dual queue to BBGC (Block-Blocking GC), further accelerated host requests that overlap with GC through the task management method described in Section 4.3, so DQGC improved performance further.

For writes, the modeled SSD accepts pages from the host regardless of the GC method; the pages wait in the write buffer, receive their address mapping, and are then transferred to flash. Writes therefore overlap with GC less than reads do, so the average write latency is smaller than the average read latency, and because GC hurts writes less, the improvement is also smaller. Still, lowering the blocking unit to the block with our block blocking and applying the dual queue improved overall performance. However, the improvement in average write latency for MongoDB, MySQL, and SQLite was small compared to the other trace files, for the following reasons.

First, MongoDB and MySQL have a high ratio of sequential I/O. In a sequential write, the pages of a file have contiguous LBAs, so their addresses are mapped at once in the write buffer before the pages are transferred to the flash memory channels [21,37]. All pages of a file in MongoDB and MySQL are therefore mapped at the same time, and blocks under GC can be avoided as much as possible when placing them. Because these writes were barely affected by GC during the experiment, the average write latency of the two trace files was small and the improvement was insignificant.

Second, SQLite also had a short average write time, but its ratio of random I/O requests was high compared to the above two trace files, so a different explanation applies: the average R/W size of SQLite, shown in Table 2, is very small compared to the other trace files, nearly one page. Since an average file request is about one page and R/W operations proceed page by page, the file requests overlapped with GC much less than in the other trace files, and the average latency was also small.

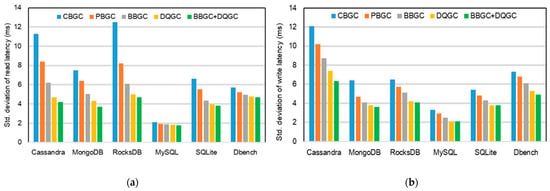

Figure 5 shows the standard deviation of latency for each trace file. With our algorithms, the standard deviation decreased progressively compared to the existing methods; in other words, the latency of each file in the workload moved closer to the average.

Figure 5.

Standard deviation of latency. (a) Read, (b) Write (CBGC = Channel Blocking GC; PBGC = Plane Blocking GC [8]; BBGC = Block Blocking GC; DQGC = Dual-Queue GC).

For the YCSB traces (Cassandra, MongoDB, and RocksDB), the standard deviation decreased along with the average read latency, indicating that our algorithms improved performance and brought per-file read latencies closer to the improved average. Write latencies in the YCSB traces likewise moved closer to the average, indicating improved performance.

For MySQL, the standard deviation of read latency was almost constant. Because the average read latency improved only slightly, the standard deviation changed little, and it stayed small because the read latency of each file was close to the average.

For SQLite and Dbench, the standard deviation was similar to or larger than the average. Both trace files have a high ratio of random I/O requests. Random I/O inherently has longer latency than sequential I/O, and the pages of a file are stored at randomly assigned locations, so they may overlap with GC and are strongly affected by the runtime conditions of the experiment. As a result, the standard deviation was similar to or larger than the mean.

RocksDB, in contrast, had a standard deviation smaller than its average even though its ratio of random I/O requests was also high (Table 2). This is because the average R/W size of RocksDB is much larger than that of the other two trace files, so its latency was less affected by the runtime conditions of the experiment than theirs. This is why the average latency of RocksDB exceeded its standard deviation, unlike the other two trace files.

For reads, our algorithms BBGC and DQGC performed significantly better than the existing GC methods. DQGC performed best of all, even compared with BBGC, because it serves host requests as quickly as possible even when GC occurs repeatedly.

For writes, as with the average latency, the values were smaller than for reads and the improvement was smaller, because GC could largely be avoided even in the worst case of writes. However, unlike the averages, the worst-case values were very large for every method, and our methods improved the worst case over the existing GC methods for both reads and writes.

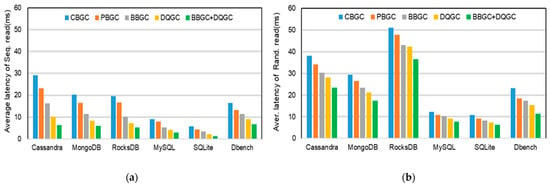

Figure 6 shows the average latency of sequential and random reads. For sequential reads, applying our BBGC or DQGC improved performance substantially, and applying both improved it greatly compared to the existing GC methods.

Figure 6.

Average latency of sequential and random reads: (a) sequential read; (b) random read. (CBGC = Channel Blocking GC, PBGC = Plane Blocking GC [8], BBGC = Block Blocking GC, DQGC = Dual-Queue GC).

In contrast, the latency of random reads was larger than that of sequential reads. This is because the LBAs of the pages belonging to a file are not sequential. When pages are stored, addresses are mapped page by page and pages are transferred page by page, so the pages of a file are often scattered randomly over several blocks or channels instead of being stored in blocks with the same address in each flash memory channel. Therefore, sequential reads are inherently faster than random reads. Moreover, when GC occurs in the SSD, random reads often overlap with the block being collected. Thus, the average latency of random reads is higher than that of sequential reads, and the improvement is smaller than for sequential reads. Nevertheless, applying our algorithms still improved performance. Unlike for sequential reads, the improvement was small when the two methods were applied separately; however, when they were applied together, performance improved greatly. Accordingly, an SSD to which our methods are applied shows the smallest increase in latency, and hence the smallest performance degradation, due to GC.
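The argument that pages of a randomly written file are scattered over many blocks, and are therefore more likely to meet the block under GC, can be made concrete with a toy model. The sketch below assumes a hypothetical geometry (4 channels, 8 blocks per channel, 4 pages per block), that sequentially written pages fill one block at a time, and that the GC victim is equally likely to be any block. A fixed stride coprime to the block count stands in for random page placement so that the example is reproducible. None of these parameters describe the simulated SSD used in the experiments.

```python
from __future__ import annotations

# Hypothetical SSD geometry, for illustration only.
CHANNELS = 4
BLOCKS_PER_CHANNEL = 8
PAGES_PER_BLOCK = 4
TOTAL_BLOCKS = CHANNELS * BLOCKS_PER_CHANNEL  # 32


def sequential_blocks(num_pages: int) -> set[int]:
    """Pages written in order fill one block before moving to the next."""
    return {page // PAGES_PER_BLOCK for page in range(num_pages)}


def scattered_blocks(num_pages: int, stride: int = 7) -> set[int]:
    """Stand-in for random page-level placement: a stride coprime to
    TOTAL_BLOCKS sends consecutive pages to different blocks."""
    return {(page * stride) % TOTAL_BLOCKS for page in range(num_pages)}


def gc_overlap_probability(blocks: set[int]) -> float:
    """Chance that reading the file touches the GC victim block, assuming the
    victim is equally likely to be any of TOTAL_BLOCKS blocks."""
    return len(blocks) / TOTAL_BLOCKS


file_pages = 16
seq = sequential_blocks(file_pages)
rnd = scattered_blocks(file_pages)
print(len(seq), gc_overlap_probability(seq))  # 4 0.125
print(len(rnd), gc_overlap_probability(rnd))  # 16 0.5
```

In this toy model, the same 16-page file touches four times as many blocks when its pages are scattered, so a read of it is four times as likely to encounter the block being collected.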

6. Conclusions

In this paper, we proposed a scheme to improve the latency of SSDs. The scheme allows read and write requests from the host to coexist with garbage collection, giving priority to host-generated requests. It also reduces the granularity at which garbage collection blocks data access to a single block, so all blocks not involved in the on-going garbage collection are free from stalls caused by garbage collection.
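The effect of the blocking granularity compared in the experiments (channel, plane, and block) can be sketched as a predicate over per-block GC status. This is a hypothetical model: the geometry constants, `BlockAddr`, and `is_stalled` are illustrative names, and a real controller keeps its own per-block bookkeeping and may stall requests for other reasons (for example, a busy die). The example counts how many blocks of one channel become unavailable while a single block is being collected.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical geometry, for illustration only.
PLANES_PER_CHANNEL = 2
BLOCKS_PER_PLANE = 4
GRANULARITIES = ("channel", "plane", "block")


@dataclass(frozen=True)
class BlockAddr:
    channel: int
    plane: int
    block: int


def is_stalled(target: BlockAddr, gc_victims: set[BlockAddr], granularity: str) -> bool:
    """Return True if a host request to `target` must wait for on-going GC.

    "channel": any victim in the same channel blocks the request (CBGC-like).
    "plane":   any victim in the same plane blocks the request (PBGC-like).
    "block":   only a request to the victim block itself waits (BBGC-like).
    """
    if granularity not in GRANULARITIES:
        raise ValueError(f"unknown granularity: {granularity}")
    for victim in gc_victims:
        if granularity == "channel" and victim.channel == target.channel:
            return True
        if granularity == "plane" and (victim.channel, victim.plane) == (target.channel, target.plane):
            return True
        if granularity == "block" and victim == target:
            return True
    return False


# One block of plane 0 in channel 0 is being garbage-collected.
victims = {BlockAddr(channel=0, plane=0, block=1)}
channel0 = [
    BlockAddr(0, plane, block)
    for plane in range(PLANES_PER_CHANNEL)
    for block in range(BLOCKS_PER_PLANE)
]
for granularity in GRANULARITIES:
    stalled = sum(is_stalled(addr, victims, granularity) for addr in channel0)
    print(granularity, stalled)
# Prints "channel 8", "plane 4", and "block 1" on separate lines.
```

Under this model, block-level blocking leaves seven of the eight blocks in the channel available to host requests, whereas channel-level blocking makes all eight wait.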

Experimental results over various workloads demonstrate the effectiveness of the proposed scheme in reducing latency. Implementing the scheme requires only slight modifications to the conventional task queues of flash memory controllers and per-block status bookkeeping. Therefore, the proposed scheme can be applied to most conventional SSD controllers without adding significant hardware or software complexity.

Author Contributions

Conceptualization, J.A. and Y.H.; methodology, J.A. and Y.H.; software, J.A.; validation, J.A. and Y.H.; formal analysis, J.A. and Y.H.; investigation, J.A.; resources, J.A.; writing – original draft preparation, J.A.; writing – review and editing, J.A. and Y.H.; visualization, J.A. and Y.H.; supervision, Y.H.; project administration, J.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

The EDA tool was supported by the IC Design Education Center (IDEC), Korea.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Chen, F.; Koufaty, D.A.; Zhang, X. Understanding Intrinsic Characteristics and System Implications of Flash Memory Based Solid State Drives. In Proceedings of the 11th International Joint Conference on Measurement and Modeling of Computer Systems (SIGMETRICS 09), Seattle, WA, USA, 15–19 June 2009.

- Bux, W.; Iliadis, I. Performance of greedy garbage collection in flash-based solid-state drives. Perform. Eval. 2010, 67, 1172–1186.

- Jang, K.H.; Han, T.H. Efficient garbage collection policy and block management method for NAND flash memory. In Proceedings of the 2010 2nd International Conference on Mechanical and Electronics Engineering (ICMEE), Kyoto, Japan, 1–3 August 2010.

- Zhang, Q.; Li, X.; Wang, L.; Zhang, T.; Wang, Y.; Shao, Z. Optimizing deterministic garbage collection in NAND flash storage systems. In Proceedings of the 21st IEEE Real-Time and Embedded Technology and Applications Symposium (RTAS), Seattle, WA, USA, 13–16 April 2015.

- Seppanen, E.; O’Keefe, M.T.; Lilja, D.J. High-performance solid-state storage under Linux. In Proceedings of the 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Nevada, NV, USA, 3–7 May 2010.

- Guo, J.; Hu, Y.; Mao, B.; Wu, S. Parallelism and Garbage Collection Aware I/O Scheduler with Improved SSD Performance. In Proceedings of the 2017 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Orlando, FL, USA, 29 May–2 June 2017.

- Rho, E.; Joshi, K.; Shin, S.U.; Shetty, N.J.; Hwang, J.; Cho, S.; Lee, D.D.; Jeong, J. Fstream: Managing flash streams in the file system. In Proceedings of the 16th USENIX Conference on File and Storage Technologies (FAST 18), Oakland, CA, USA, 12–15 February 2018.

- Yan, S.; Li, H.; Hao, M.; Tong, M.H.; Sundararaman, S.; Chien, A.A.; Gunawi, H.S. Tiny-tail flash: Near-perfect elimination of garbage collection tail latencies in NAND SSDs. In Proceedings of the 15th USENIX Conference on File and Storage Technologies (FAST’17), Santa Clara, CA, USA, 27 February–2 March 2017.

- Lee, J.; Kim, Y.; Shipman, G.M.; Oral, S.; Kim, J. Preemptible I/O Scheduling of Garbage Collection for Solid State Drives. IEEE Trans. Comput.-Aided Des. Integr. Circ. Syst. 2013, 32, 247–260.

- Yu, S.; Chen, P.Y. Emerging Memory Technologies: Recent Trends and Prospects. IEEE Solid-State Circ. Mag. 2016, 8, 43–56.

- Spinelli, A.S.; Compagnoni, C.M.; Lacaita, A.L. Reliability of NAND Flash Memories: Planar Cells and Emerging Issues in 3D Devices. Computers 2017, 6, 16.

- Jung, M.; Choi, W.; Srikantaiah, S.; Yoo, J.; Kandemir, M.T. HIOS: A host interface I/O scheduler for solid state disks. ACM SIGARCH Comput. Archit. News 2014, 42, 289–300.

- Jin, Y.; Lee, B. Chapter One—A comprehensive survey of issues in solid state drives. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2019; Volume 114, pp. 1–69.

- Wang, M.; Hu, Y. Exploit real-time fine-grained access patterns to partition write buffer to improve SSD performance and life-span. In Proceedings of the 32nd IEEE International Performance Computing and Communications Conference (IPCCC), San Diego, CA, USA, 6–8 December 2013.

- Li, J.; Xu, X.; Peng, X.; Liao, J. Pattern-based Write Scheduling and Read Balance-oriented Wear-Leveling for Solid State Drives. In Proceedings of the 35th Symposium on Mass Storage Systems and Technologies (MSST), Santa Clara, CA, USA, 20–24 May 2019.

- Park, J.K.; Kim, J. An MTS-CFQ I/O scheduler considering SSD garbage collection on virtual machine. In Proceedings of the 14th International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology (ECTI-CON), Phuket, Thailand, 27–30 June 2017.

- Shi, X.; Liu, W.; He, L.; Jin, H.; Li, M.; Chen, Y. Optimizing the SSD Burst Buffer by Traffic Detection. ACM Trans. Archit. Code Optim. 2020, 17, 1–26.

- Hitachi Global Storage Technologies Hard Disk Drive Specification Deskstar P7K500, CinemaStar P7K500 3.5 Inch Hard Disk Drive. Available online: https://www.manualslib.com/manual/280071/Hitachi-Cinemastar-P7k500.html?page=33#manual (accessed on 13 December 2021).

- Micron NAND Flash Memory MT29F16G08ABABA 16Gb Asynchronous/Synchronous NAND Features datasheet. Available online: https://www.micron.com/products/nand-flash/slc-nand/part-catalog/mt29f16g08ababawp-ait (accessed on 15 July 2020).

- Chung, T.S.; Park, D.J.; Park, S.; Lee, D.H.; Lee, S.W.; Song, J.H. A survey of Flash Translation Layer. J. Syst. Archit. 2009, 55, 332–343.

- Park, C.; Cheon, W.; Kang, J.; Roh, K.; Cho, W.; Kim, J.S. A reconfigurable FTL (flash translation layer) architecture for NAND flash-based applications. ACM Trans. Embed. Comput. Syst. 2008, 7, 1–23.

- Song, Y.; So, H.; Chun, Y.; Kim, H.S.; Hong, Y. An implementation of low latency address-mapping logic for SSD controllers. IEICE Electron. Express 2019, 16, 20190521.

- Chamazcoti, S.A.; Miremadi, S.G. On designing endurance-aware erasure code for SSD-based storage systems. Microprocess. Microsyst. 2016, 45, 283–296.

- Gal, E.; Toledo, S. Algorithms and data structures for flash memories. ACM Comput. Surv. 2005, 37, 138–163.

- Wu, F.; Zhou, J.; Wang, S.; Du, Y.; Yang, C.; Xie, C. FastGC: Accelerate Garbage Collection via an Efficient Copyback-based Data Migration in SSDs. In Proceedings of the 55th ACM/ESDA/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 24–28 June 2018.

- Agrawal, N.; Prabhakaran, V.; Wobber, T.; Davis, D.J.; Manasse, M.; Panigrahy, R. Design Tradeoffs for SSD Performance. In Proceedings of the 2008 USENIX Annual Technical Conference, Boston, MA, USA, 22–27 June 2008.

- Rostedt, S. Ftrace Linux Kernel tracing. In Proceedings of the Linux Conference Japan, Tokyo, Japan, 27–29 September 2010.

- Brunelle, A.D. Block i/o layer tracing: Blktrace. In Proceedings of the Gelato-Itanium Conference and Expo (Gelato ICE), San Jose, CA, USA, 23–26 April 2006.

- Sysbench Manual. Available online: http://imysql.com/wpcontent/uploads/2014/10/sysbench-manual.pdf (accessed on 22 December 2020).

- MySQL 8.0 Reference Manual. Available online: https://dev.mysql.com/doc/refman/8.0/en/ (accessed on 22 December 2020).

- Cooper, B.F.; Silberstein, A.; Tam, E.; Ramakrishnan, R.; Sears, R. Benchmarking cloud serving systems with YCSB. In Proceedings of the 1st ACM Symposium on Cloud Computing (SOCC 10), Indianapolis, IN, USA, 10–11 June 2010.

- Apache Cassandra Documentation v4.0-beta4. Available online: https://cassandra.apache.org/doc/latest/ (accessed on 22 December 2020).

- MongoDB Documentation v5.0. Available online: https://docs.mongodb.com/manual/ (accessed on 13 July 2021).

- RocksDB Documentation. Available online: http://rocksdb.org/docs/getting-started.html (accessed on 13 July 2021).

- Phoronix Test Suite v10.0.0 User Manual. Available online: https://www.phoronix-test-suite.com/documentation/phoronix-test-suite.pdf (accessed on 22 December 2020).

- Basak, J.; Wadhwani, K.; Voruganti, K. Storage Workload Identification. ACM Trans. Storage 2016, 12, 1–30.

- Kim, J.; Seo, S.; Jung, D.; Kim, J.S.; Huh, J. Parameter-Aware I/O Management for Solid State Disks (SSDs). IEEE Trans. Comput. 2011, 61, 636–649.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).