Abstract

Face comparison, also called face mapping, is a promising method in face biometrics that requires relatively little effort compared with face identification. Various factors may be used to verify whether two faces belong to the same person; among them, facial landmarks are one of the most objective indicators because they have the same anatomical definition for every face. This study identified major landmarks in 2D and 3D facial images of the same Korean individuals and calculated the distance between the reciprocal landmarks of two images. The aim was to examine the acceptable range of these distances for identifying an individual and to obtain standard values across diverse facial angles and image resolutions. Given that reference images obtained in the real world may vary in angle and resolution, this study created a 3D face model from multiple 2D images taken at different angles and oriented the 3D model to the angle of the reference image before calculating the distance between reciprocal landmarks. In addition, we used an AI-based super-resolution method to address the inaccurate assessments that low-quality videos can yield. A portion of the process was automated for speed and convenience of face analysis. We conclude that the results of this study could provide a standard for future studies on face-to-face analysis to determine whether different images are of the same person.

1. Introduction

The face is one of the most important physical features of an individual, externally expressing identity through its distinctive characteristics. Therefore, the face and face images are critical data in the identity verification process. Currently, with the increasing utilization of security and surveillance systems, acquired images are commonly used in public safety and image forgery detection [1,2,3,4]. Accordingly, numerous studies have attempted to develop methods to improve the accuracy of identity verification using facial images.

The methods for identity verification using facial images can be categorized into two major approaches. The first, automated facial recognition (AFR), uses computer algorithms to compare a target facial image with a database of facial images and identify the most similar database images. The second, facial comparison (or facial mapping), compares and analyzes facial images of the person side by side. Facial comparison can be relatively easy for people to use compared with AFR, which requires a large image database and considerable development cost [5].

In a forensic context, landmark-based face comparison methods can be useful. A method that uses likelihood ratios (LRs) to assess the grouping of facial images based on morphometric indices was presented in [6]. In that method, landmark statistics such as the averages and SDs for the reference image were estimated, and the probability of these values under the probability distributions for the suspect and for the population was calculated to obtain the likelihood ratio. In [7], facial ratios derived from inter-landmark distances were used to perform intra- and inter-sample comparisons based on the mean absolute value, Euclidean distance, and cosine distance between ratios. The statistics of two frontal faces were examined to determine which calculation best identifies a detectable correlation difference between faces belonging to the same person. In addition, in [8], a series of area triplets was constructed from the detected landmarks for geometric invariance, including the area ratio and angle, and then used as feature vectors in face identification.

Some more specific ways of verifying identity are photoanthropometry, morphological analysis, and facial superimposition [9,10,11,12]. When using these methods, many standards can be used in the analysis, such as facial landmarks, the distance between landmarks, the ratio and angle of the landmarks, and distinctive facial structures [13,14,15]. Facial landmarks have the same anatomical definition for every face. Therefore, such landmarks could be used as an objective standard if one could place reciprocal landmarks on test and comparison faces.

The human face has about 80 to 90 nodal points. Facial verification systems measure important aspects, such as the distance between the eyes, the width of the nose, and the length of the chin [16,17]. In addition, the reciprocal landmarks in the frontal or profile face are used directly, or new landmarks are defined by extending these points [7,18,19,20]. As many studies have demonstrated, deep facial features perform much better for face verification than methods that use facial landmarks [18,21,22]. However, facial landmarks are not only visually easier to explain than deep facial features but are also objective indicators that define the appearance of a face. Therefore, facial landmarks can be useful when forensic cases need to be discussed in court [23,24,25]. Face mapping/comparison is the task of determining whether two face images show the same person, which is similar to inferring a lookalike. Applications such as Google’s ‘Arts & Culture’ app and Microsoft’s ‘CelebLike’ are famous for their ability to show the faces of celebrities who resemble the user. Finding a similar face is related to face verification, which selects the one ID most likely to match the input, in that it infers the face image that is most perceptually similar to the input [21,26,27]. Humans can identify two people as different identities even if their faces are very similar [27]. To estimate the similarity between two face images, many studies have utilized the distance between low-level feature descriptors, such as SIFT (Scale-Invariant Feature Transform), facial shape, facial features, or facial texture [8,28,29,30,31]. Recent studies have explored improving facial verification performance by combining these traditional features with deep-learning technologies [32,33,34].

However, identifiable features can differ depending on the image resolution or the angle of the face. To handle the pose problem, a promising approach is to use 3D facial information, because a reconstructed 3D face model can be rendered in the target facial pose. Generating 3D information from a single image is an ill-posed problem [35]; therefore, prior-model-based methods have been proposed, which require 3D scan data of a large number of people to reproduce the various face shapes of the input [36,37,38]. In addition, 3D geometry can be estimated directly from images using SfM (Structure from Motion), a family of methods that estimate camera movement from image sequences with various viewpoints [39,40]. Furthermore, if several photos of the object illuminated from different directions are available, depth can be estimated more accurately by combining this information. These photometric stereo techniques have traditionally been applied to 3D reconstruction [41,42]. Regarding image resolution, deep-learning-based super-resolution studies have recently brought great progress in image quality improvement. Many deep-learning-based super-resolution methods exist, such as super resolution using a deep convolutional network (SRCNN), the coupled deep convolutional auto-encoder (CDCA), and very deep convolutional network-based super resolution (VDSR) [43,44,45].

Theoretically, the distance between reciprocal landmarks would be close to zero if the facial pose of two images obtained from an individual were aligned perfectly. However, it is impossible for the calculated distance to be zero due to the bias that occurs when a professional analyst identifies landmarks or due to inaccuracies that occur when images of different resolutions are compared. Nevertheless, if one could minimize such potential errors and accurately locate landmarks on both images, then the distance between reciprocal landmarks would approach zero if the images being compared were of the same individual. Therefore, it might be possible to use the distance between reciprocal landmarks of images of the same individual as a standard for identity verification.

In general, face identification requires large-scale datasets, such as MS-Celeb-1M [46] with 10 million face images, as well as complex algorithms and deep-learning architectures designed to process them. The novelty of our study is that it identifies effective landmarks for determining whether two input images show the same person by analyzing traditional facial landmarks that represent the structure of a face. This approach can provide visual clues for identification because it is simpler and relies on more interpretable morphometric features than deep-learning features. In addition, images of suspects acquired from security cameras contain variations in facial pose and expression, low image resolution, and occlusion of the face by hair or accessories. These factors can degrade the comparison performance of experts such as investigators [47,48].

This study aimed to resolve issues caused by different poses and image resolutions. First, to solve the problems caused by pose variation between reference and comparison images, we created a 3D face model of a subject via multiple images acquired from multiple cameras with various angles, then rotated the model pose to match that of the comparison image. Second, the comparison image obtained from CCTV (Closed-Circuit Television) generally has low resolution, which can degrade the performance of the face analyst who must manually locate facial landmarks. Thus, image enhancement could improve the accuracy of the location of landmarks. In this paper, a super-resolution method via deep learning is applied to solve this problem. In addition, to improve speed, convenience, and accuracy of face analysis, we utilized a machine learning-based facial landmark detector, which is widely used in computer vision. Further, we provide an index for identity verification based on facial landmarks and an associated threshold from which to determine whether different images have the same identity.

The contributions of this study can be summarized as follows:

- We present a landmark-based face mapping method that represents the morphology of the face and provides visual and interpretable cues more easily than deep features.

- We provide the landmark indices and associated thresholds by which to determine whether input images have the same identity.

- To cope with low-resolution images and various poses, this study extracts more accurate facial landmarks from the input faces by reconstructing a 3D model to correct the pose and by improving the image quality.

2. Materials and Methods

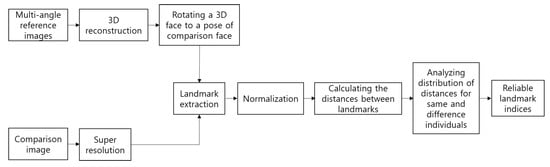

The overall flow of the identity verification method described in this paper, which uses the distance between reciprocal landmarks of a reference image and comparison image, is as follows.

First, to estimate the 3D geometry of the comparison face, we used a photogrammetric range imaging technique on a sequence of facial images. The reconstructed 3D face model is rotated into the same pose as the comparison image obtained by security cameras such as CCTV. In addition, super-resolution technology is applied to low-resolution comparison images so that facial landmarks can be extracted accurately. Next, the facial landmarks within each image are detected by an elastic model-based facial landmark detector; a professional facial analyst may adjust the detected landmarks for increased accuracy. Based on these facial landmarks, size normalization is performed to generate two images of the same size and enable comparison of the landmark locations. The size of the facial image is adjusted by setting the interpupillary distance (IPD) to 100 pixels for a frontal presentation of the image. For other views, the distance between the nasion (midline depth of the nasal root) and the gnathion (lowest median landmark on the lower border of the chin) is set to 100 pixels. Finally, we analyze the distribution of distances for the same individual and for different individuals by calculating the distance between reciprocal landmarks of the normalized facial images. The whole process is described in Figure 1.

Figure 1.

Overall framework of the proposed method.
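
The pose-matching step of this framework can be illustrated with a minimal sketch. It assumes that the 3D model landmarks are available as (x, y, z) tuples with the y-axis pointing up, that the target pose differs from the model pose only by a yaw rotation, and that an orthographic projection is adequate. The function names, the sample coordinates, and these simplifications are assumptions made for illustration; they are not the implementation used in this study.

```python
import math

def rotate_yaw(points, yaw_deg):
    """Rotate 3D points (x, y, z) about the vertical y-axis by yaw_deg degrees."""
    theta = math.radians(yaw_deg)
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]

def project_orthographic(points):
    """Drop the depth coordinate to obtain 2D image-plane landmark positions."""
    return [(x, y) for x, y, _z in points]

# Hypothetical 3D landmarks of a frontal model (arbitrary units).
model_landmarks = [(-30.0, 0.0, 0.0), (30.0, 0.0, 0.0), (0.0, -40.0, 20.0)]

# Turn the model by 45 degrees and project it to 2D for comparison.
side_view = project_orthographic(rotate_yaw(model_landmarks, 45.0))
```

In practice the rotation would cover all three pose angles and the projection would follow the camera model of the comparison image; the sketch only shows the order of operations (rotate the model, then project it).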

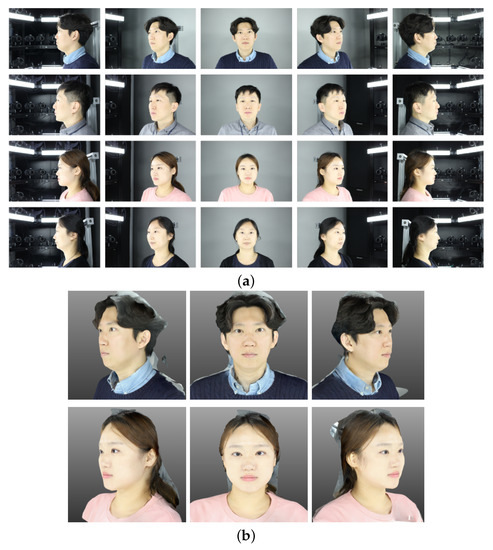

To perform an identity verification experiment, we first constructed a Korean face dataset that consisted of over 2500 images (the K-Face DB [49]; https://github.com/k-face/k-face_2019, accessed on 21 December 2019). The dataset was collected from 50 Koreans (25 males and 25 females) who ranged in age from 20 to 50 years. Using a multiple-camera system with various angles and a light controller, over 50 images of each person were captured. Specifically, 40 images were collected as comparison images, covering 5 facial angles, 2 lighting conditions (400 lux and 200 lux), and 4 resolutions: 2592 × 1728, 864 × 576, 346 × 230, and 173 × 115. Here, we regard the image with a resolution higher than WQXGA (Wide Quad Extended Graphics Array) as high resolution, the image with a resolution higher than WVGA (Wide Video Graphics Array) as mid resolution, and the two images with resolutions lower than WVGA as low resolution. Additionally, 12–14 images from each camera angle under uniform lighting were acquired to generate the 3D face models. The 3D face reconstruction process extracted 2D feature points from the images, calculated their correspondences, extracted a mesh, and restored the facial textures based on the SfM technique [39]. We applied GPU (Graphics Processing Unit) acceleration such that the total execution time was less than one minute. When 3D data are restored from photos, the scale and the rotation coordinate system of the model generally remain undetermined. To address this issue, we post-processed all models so that they have physical dimensions and a common coordinate system, based on the measured distances and locations of some of the cameras of the multi-camera system. With these steps, we created a dataset that consisted of a 3D face model and 40 2D facial images per person, which we used to conduct the distance-calculation experiment for identity verification.
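
The count of 40 comparison images per person follows from combining the capture conditions, which can be checked with a short sketch. The pose labels are taken from the panel names in the figure captions, and the WQXGA (2560 × 1600) and WVGA (800 × 480) boundaries are the nominal sizes of those formats; both the labels and the rule of comparing width and height separately are assumptions made for illustration.

```python
from itertools import product

# Pose labels follow the panel names used in the figure captions (assumed).
ANGLES = ["left 90", "left 45", "front", "right 45", "right 90"]
LIGHTING_LUX = [400, 200]
RESOLUTIONS = [(2592, 1728), (864, 576), (346, 230), (173, 115)]

# Nominal display sizes used as class boundaries (assumed values).
WQXGA = (2560, 1600)
WVGA = (800, 480)

def resolution_class(size):
    """Classify an image size as 'high', 'mid', or 'low' resolution."""
    width, height = size
    if width > WQXGA[0] and height > WQXGA[1]:
        return "high"
    if width > WVGA[0] and height > WVGA[1]:
        return "mid"
    return "low"

# Every combination of angle, lighting, and resolution is one comparison image.
conditions = list(product(ANGLES, LIGHTING_LUX, RESOLUTIONS))
print(len(conditions))                             # 5 * 2 * 4 combinations
print([resolution_class(r) for r in RESOLUTIONS])  # class of each resolution
```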

Before collecting data, we informed the research participants about the general purpose of the research, and those who wished to participate signed a consent form. An example of the constructed dataset, which includes the obtained images and reconstructed 3D face models, is shown in Figure 2.

The resolutions of images collected by real-world security cameras vary. If an image is low-definition, facial landmarks cannot be located accurately. To address this issue, we utilized a VDSR network [45], which is simple yet performs well; it learns the mapping between low-resolution and high-resolution images based on residual learning. The network passes an input image through several convolutional layers and outputs an enhanced image. To train the architecture, the CelebA and BERC databases were used [50,51]. Figure 3 shows an example of the result of super resolution based on deep learning.

Figure 2.

Example of dataset construction: (a) obtained images; (b) 3D face model based on 3D image reconstruction.

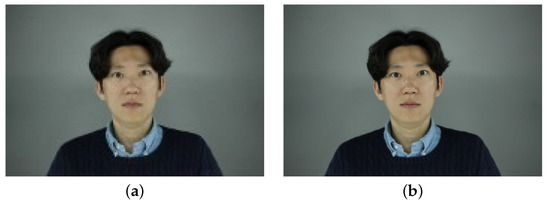

Figure 3.

Example of the result of super resolution: (a) low-resolution input images; (b) result of super resolution.

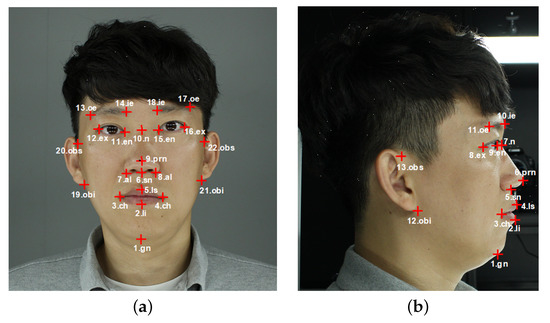

Next, the facial landmarks are detected in the reference image and comparison image via the above steps. For the automatic landmark detection process, we utilized the wild-feature detector [52] for feature detection tasks. This assumes that the face shape is a tree structure, and uses a part-based model for face detection, pose estimation, and facial feature detection. In the current approach, the landmark detector extracts the facial region, facial pose angle, and facial landmarks concurrently, using 3D face structure and an elastic model. In particular, the facial landmarks considered in this paper are shown in Table 1 and Table 2. These landmarks were chosen with reference to previous studies of identity verification, and include 4 positions on eyebrows to utilize this part of the face in identity verification [2,7,20,53] as described in Figure 4.

Table 1.

Frontal pose facial landmark index numbers and definitions [2,7,20,53].

Table 2.

Side pose facial landmark index numbers and definition [2,7,20,53].

Figure 4.

The location of facial landmarks: (a) frontal pose; (b) side pose.

To train the facial landmark detector, the Multi-PIE database was used [54]. The Multi-PIE database is a large-scale facial database that contains images of 337 subjects of a variety of races, with a male-to-female ratio of 7 to 3, captured under different poses, illumination conditions, and facial expressions. Figure 5 shows a sample from the Multi-PIE database.

Figure 5.

Example face images from the Multi-PIE database [54].

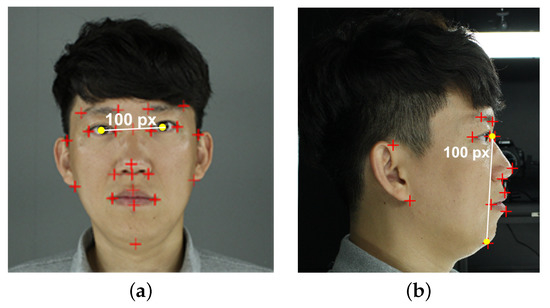

After detecting the 22 facial landmarks for the frontal pose and the 13 facial landmarks for the side pose, professional facial analysts manually adjusted the detected facial landmarks. Before calculating the distance between reciprocal landmarks, image size normalization was performed. This procedure normalized the face sizes within a comparison pair of images with different face sizes. Different images of the same individual could differ in size because of the characteristics of the respective cameras, the distance between camera and subject, and so on. Here, we set the IPD to 100 pixels for frontal poses and the distance between the nasion and gnathion to 100 pixels for other poses. Figure 6 shows an example of facial region size differences for two images of the same person, and Figure 7 illustrates an example of size normalization.
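
The size normalization described above can be sketched as a uniform scaling of the landmark coordinates. The sketch assumes that landmarks are stored as 2D pixel coordinates keyed by name, and it additionally moves the midpoint of the two reference landmarks to the origin so that two normalized images share a common frame. That translation, the function name, and the sample coordinates are assumptions for illustration and are not stated in this paper.

```python
import math

def normalize_size(landmarks, ref_a, ref_b, target_px=100.0):
    """Scale 2D landmarks so that the ref_a-ref_b distance equals target_px.

    The midpoint of the two reference landmarks is moved to the origin
    (an assumed alignment convention, not stated in the text).
    """
    (ax, ay), (bx, by) = landmarks[ref_a], landmarks[ref_b]
    ref_dist = math.dist((ax, ay), (bx, by))
    if ref_dist == 0:
        raise ValueError("reference landmarks coincide")
    scale = target_px / ref_dist
    cx, cy = (ax + bx) / 2, (ay + by) / 2
    return {name: ((x - cx) * scale, (y - cy) * scale)
            for name, (x, y) in landmarks.items()}

# Hypothetical frontal landmarks in pixels; the keys are illustrative names.
frontal = {
    "pupil_r": (100.0, 200.0),
    "pupil_l": (150.0, 200.0),
    "subnasale": (125.0, 230.0),
}
normalized = normalize_size(frontal, "pupil_r", "pupil_l")
```

For side poses, the same function would be called with the nasion and gnathion as the two reference landmarks.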

Figure 6.

Example of facial region size differences for two images of the same person obtained using different cameras.

Figure 7.

Example of size normalization: (a) frontal pose; (b) side pose.

3. Results

Using the materials and data described above, we experimentally assessed identity verification in 3 ways. First, we calculated the distance between each pair of reciprocal landmarks. The nasion and gnathion were excluded when calculating the distances because they were used to normalize image size. Thus, 22 distances for frontal poses and 11 distances for side poses were calculated. The Euclidean distance (ED) was used as the distance measure, according to Equation (1).

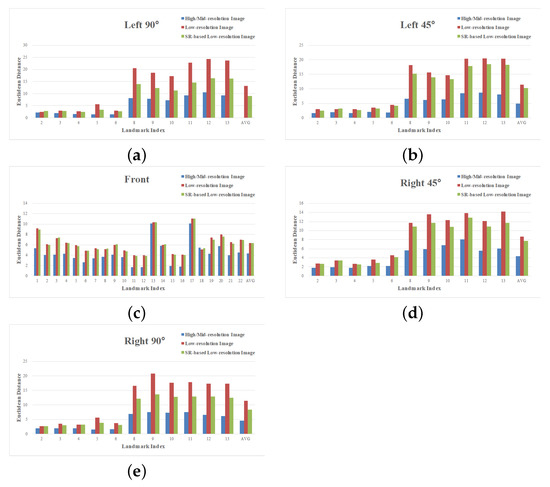
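
As a minimal sketch of this step, the ED between two reciprocal landmarks is the square root of the sum of the squared differences of their x and y coordinates. The code below assumes that both landmark sets are already size-normalized and keyed by landmark index; the function name and the sample coordinates are hypothetical.

```python
import math

def reciprocal_distances(reference, comparison, exclude=()):
    """Return the Euclidean distance for each landmark index present in both images."""
    return {
        idx: math.dist(reference[idx], comparison[idx])
        for idx in reference
        if idx in comparison and idx not in exclude
    }

# Hypothetical normalized landmark coordinates keyed by landmark index.
ref = {1: (0.0, 0.0), 2: (10.0, 5.0), 3: (50.0, 50.0)}
cmp_ = {1: (3.0, 4.0), 2: (10.0, 5.0), 3: (56.0, 58.0)}

# Landmark 3 stands in for a landmark used for size normalization.
distances = reciprocal_distances(ref, cmp_, exclude={3})
```

The `exclude` argument mirrors the exclusion of the landmarks used for size normalization.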

The dissimilarity of each landmark position was measured as a pixel error. The IPD is generally 63.36 mm [55]. Thus, a 1-pixel error was equivalent to about 0.63 mm because we set the IPD to 100 pixels. Accordingly, we analyzed the pixel error distribution for the same individual and for two different individuals to obtain standard values for identity verification. Before the experiment, we compared the average distance between reciprocal landmarks for each image resolution. Figure 8 shows the result of this comparison among high/mid-resolution images, low-resolution images, and low-resolution images enhanced by the super-resolution method. As mentioned in Section 1, low image resolution can degrade the comparison performance of investigators; low-resolution images make the EDs larger even if the two images show the same person. As shown in Figure 8, the EDs of low-resolution images (red bars) are more than double the EDs of high/mid-resolution images (blue bars). By applying super resolution to the low-resolution images (green bars), we could reduce the EDs for the same identity. Based on this result, we constructed a comparison image dataset consisting of high/mid-resolution images and enhanced low-resolution images obtained via super resolution. Using this comparison dataset, we measured the EDs between each pair of reciprocal landmarks for the 5 facial pose angles. The results are shown in Figure 9 and Table 3. In Figure 9, the X-axis shows the landmark indexes defined in Table 1, the Y-axis shows the calculated ED, the blue bars show distances for different images of the same individual, and the red bars show distances for images of different individuals.

Figure 8.

The comparison of average ED of same identity between high/mid-resolution, low-resolution, and enhanced low-resolution based on super-resolution method: (a) Left 90°; (b) Left 45°; (c) Front; (d) Right 45°; (e) Right 90°.

Table 3.

Mean and Standard deviation of ED between each reciprocal landmark.

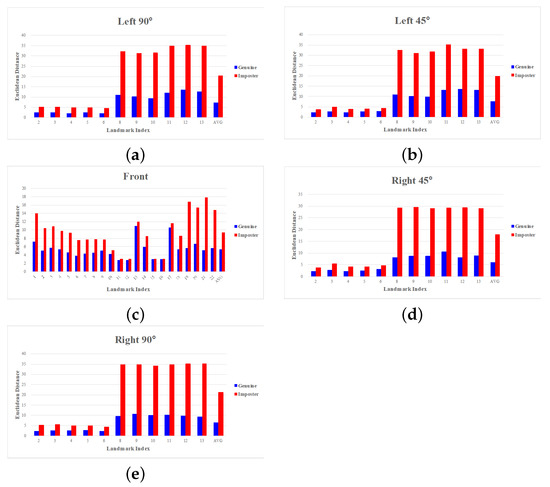

As shown in Figure 9 and Table 3, the distance between reciprocal landmarks tended to decrease as the pose angle approached that of a frontal pose. In particular, the landmarks near the eyes for the frontal pose had small values because the size normalization was based on the centers of the two eyes; this also decreased the distance between landmarks for different individuals. Thus, it was difficult to distinguish individuals using the landmarks near the eyes for frontal poses. For example, the right exocanthion (index number 16) had an average ED of 2.96 pixels for the same identity and 3.05 pixels for different identities. Converted into mm, these values are 1.86 mm and 1.92 mm, respectively. Thus, if the facial analyst mis-positioned the landmark even slightly, two images obtained from different persons might be judged to be the same identity. For landmarks outside the eye area, the distance between landmarks for the same identity was about half of that for different persons, and the average ED for different identities near the ear area was almost three times larger than that for the same identity. Among the landmarks in the ear area, both otobasion inferius landmarks (index numbers 19 and 21) were the most discriminative for the frontal facial pose, based on the average distances for the same and different individuals. For side poses, the EDs of landmarks near the eye and ear differed significantly between the same identity and different identities, which validates the importance of landmarks near the eye and ear for identity verification.
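
The pixel-to-millimetre conversion behind these values can be reproduced with a few lines. The sketch assumes that the conversion factor is rounded to two decimals (0.63 mm per pixel) before it is applied, as the quoted figures suggest; the constant and function names are hypothetical.

```python
IPD_MM = 63.36   # typical interpupillary distance cited in the text
IPD_PX = 100     # IPD after size normalization

# Assumption: the factor is rounded to 0.63 mm per pixel before use.
MM_PER_PX = round(IPD_MM / IPD_PX, 2)

def px_to_mm(distance_px):
    """Convert a pixel distance on a size-normalized frontal face to millimetres."""
    return distance_px * MM_PER_PX

same_mm = round(px_to_mm(2.96), 2)       # same identity, right exocanthion
different_mm = round(px_to_mm(3.05), 2)  # different identities
```

The gap between the two cases is only a fraction of a millimetre, which is why a small landmark placement error can blur the distinction for this landmark.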

Figure 9.

Average ED between each reciprocal landmark with different facial poses: (a) Left 90°; (b) Left 45°; (c) Front; (d) Right 45°; (e) Right 90°.

Based on the mean and standard deviation of the ED of each landmark, we measured the d′ value to assess the reliability of the facial landmark [56]. The d′ measurement is used statistically to quantify the distance between two distributions based on both their means and standard deviations. The equation for d′ is as follows.

$$d' = \frac{\left|\mu_{\text{genuine}} - \mu_{\text{imposter}}\right|}{\sqrt{\left(\sigma_{\text{genuine}}^{2} + \sigma_{\text{imposter}}^{2}\right)/2}} \qquad (2)$$

In Equation (2), $\mu_{\text{genuine}}$ and $\mu_{\text{imposter}}$ denote the mean ED for the same identity and for different identities, respectively, and $\sigma_{\text{genuine}}$ and $\sigma_{\text{imposter}}$ are the corresponding standard deviations. As the distance between the two distributions increases, the value of d′ also becomes greater. Based on this, it is possible to select the reliable landmarks that can distinguish the same individual from different individuals. As shown in Table 3, we can confirm that the landmarks in the eye and ear regions are more reliable for verifying the same identity than the other landmarks.
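
As a hedged numerical illustration, the sketch below assumes that Equation (2) is the d′ (decidability) index in its common form, that is, the absolute difference of the two means divided by the square root of the average of the two variances. The function name and the sample values are hypothetical.

```python
import math

def d_prime(mean_genuine, sd_genuine, mean_imposter, sd_imposter):
    """Separation between the genuine and imposter ED distributions (assumed d' form)."""
    pooled_sd = math.sqrt((sd_genuine ** 2 + sd_imposter ** 2) / 2)
    if pooled_sd == 0:
        raise ValueError("both standard deviations are zero")
    return abs(mean_genuine - mean_imposter) / pooled_sd

# Hypothetical landmark statistics in pixels (not values from Table 3).
well_separated = d_prime(2.0, 1.0, 5.0, 1.0)   # means far apart
overlapping = d_prime(2.96, 1.5, 3.05, 1.5)    # means almost equal
```

A landmark whose same-identity and different-identity means nearly coincide yields a value close to zero, which matches the observation that such landmarks are poor discriminators.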

In addition, we calculated the 95% confidence interval of the population mean. In this experiment, the number of samples for the same identity was 400, and the confidence interval of the population mean for the right otobasion inferius (index number 19) ranged from 5.261 to 5.938. Based on this result, we set the upper bound of the confidence interval as the standard value for identity verification. Thus, the probability that two images have the same identity is high if the ED at the otobasion inferius is smaller than 5.938. The thresholds for identity verification for all the landmarks are shown in the rightmost column of Table 3.
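
The threshold derivation can be sketched as follows, assuming the usual normal approximation for the confidence interval of a mean (mean ± 1.96 × SD / √n for 95% confidence). The paper does not state which interval formula was used, so the formula, the function name, and the sample statistics are assumptions for illustration.

```python
import math

Z_95 = 1.96  # two-sided 95% quantile of the standard normal distribution

def mean_confidence_interval(sample_mean, sample_sd, n, z=Z_95):
    """Normal-approximation confidence interval for a population mean."""
    if n <= 0:
        raise ValueError("n must be positive")
    half_width = z * sample_sd / math.sqrt(n)
    return sample_mean - half_width, sample_mean + half_width

# Hypothetical same-identity ED statistics for one landmark, with n = 400.
lower, upper = mean_confidence_interval(10.0, 2.0, 400)

# The upper bound plays the role of the identity verification threshold.
threshold = upper
```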

In the second experiment, we divided the face into 4 regions (eye, nose, lip, and ear) and calculated EDs using the landmarks in each region. This experiment enabled us to determine which facial regions were more effective for identity verification. The facial landmarks and index numbers in each region are shown in Table 4.

Table 4.

Facial landmarks and index numbers of each facial region.
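
A region-level comparison of this kind can be sketched by grouping per-landmark EDs and averaging them within each region. Averaging is an assumed aggregation rule, and the index lists and distances below are placeholders; the actual grouping is the one given in Table 4.

```python
from statistics import mean

def region_mean_ed(per_landmark_ed, regions):
    """Average the per-landmark EDs within each facial region."""
    result = {}
    for region, indices in regions.items():
        values = [per_landmark_ed[i] for i in indices if i in per_landmark_ed]
        if values:  # skip regions with no measured landmark
            result[region] = mean(values)
    return result

# Placeholder grouping and distances (not the contents of Table 4 or Table 5).
regions = {"eye": [15, 16], "ear": [19, 21]}
per_landmark_ed = {15: 3.0, 16: 2.0, 19: 5.0, 21: 7.0}
region_ed = region_mean_ed(per_landmark_ed, regions)
```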

In this experiment, the landmarks used for size normalization were excluded, as in the previous experiment. The results are shown in Table 5. As in the previous experiment, the d′ value of Equation (2) for the eye region of the frontal face is very low, which means that this region could not be utilized for same-identity verification. Based on this value, the eye region (except for the frontal pose) and the ear region are the important facial parts for verifying the same identity and are more reliable than the other regions.

Table 5.

Mean and Std. of EDs between reciprocal landmarks in each region.

In the last experiment, we calculated the ED based on all the facial landmarks, as shown in Table 6. The d′ values of Equation (2) between the two distributions for all pose angles were similar to or higher than those of the previous experiment. In other words, using all the landmarks increases the probability of distinguishing the same person from a different person, which means that the overall landmarks can be used more reliably in identity verification. The standard values for identity verification for the five facial poses were 2.879, 3.002, 1.406, 2.307, and 2.507, respectively. The proposed method is summarized in Algorithm 1.
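
The use of these standard values as decision thresholds can be sketched as below. The text lists the five values without naming the poses, so the mapping to poses (in the panel order of the figure captions) is an assumption, as are the function name and the choice of the mean ED over all landmarks as the compared quantity.

```python
# Assumed pose order; the text lists the five values without naming the poses.
POSES = ["left 90", "left 45", "front", "right 45", "right 90"]
STANDARD_VALUES = [2.879, 3.002, 1.406, 2.307, 2.507]
THRESHOLD_BY_POSE = dict(zip(POSES, STANDARD_VALUES))

def likely_same_identity(mean_ed, pose):
    """Return True if the mean ED over all landmarks is below the pose threshold."""
    return mean_ed < THRESHOLD_BY_POSE[pose]

# Hypothetical mean EDs for two comparison pairs.
frontal_match = likely_same_identity(1.0, "front")
frontal_mismatch = likely_same_identity(2.0, "front")
```

The same mean ED can lead to different decisions for different poses, because each pose has its own standard value.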

Algorithm 1. Proposed Method for Selecting Reliable Landmark Indices.

Input: Multi-view reference images, comparison image

Output: ED and landmark indices

Table 6.

Mean and Std. of EDs between reciprocal landmarks based on all facial landmarks.

4. Discussion and Conclusions

In this paper, by creating a 3D face model from multiple images taken from multiple angles and reorienting it to match the angle of a comparison image, we addressed the issues associated with comparing faces at different angles and poses. Low-resolution images, which are typical of security cameras, frequently cause inaccuracies. To resolve this problem, we applied a deep-learning-based super-resolution method that converts the input into a high-definition image, and we demonstrated its effect by comparing the resulting average distance with that obtained from low-resolution images. In addition, we applied an automatic facial landmark detector to improve speed, convenience, and accuracy for face analysts, who typically must manually indicate facial landmarks on each image.

Finally, we provided standard ED values and identified the more reliable facial landmarks for identity verification. By measuring the separation between the two distributions, from the same identity and from different identities, we could confirm the reliable landmarks for each facial pose. As mentioned in the experimental results, the landmarks near the eyes and ears had higher discriminative ability than the others. The most reliable landmarks across all facial poses were the otobasion inferius and otobasion superius, and the exocanthion, endocanthion, inner eyebrow, and outer eyebrow were also reliable for side poses. In addition, we showed that the eyes and ears are significant facial components for identity verification by analyzing the distributions from the landmark grouping comparison experiment. The standard threshold for identity verification was determined with 95% confidence by statistical analysis based on the mean and standard deviation.

In future work, we plan to study standard measures by applying the proposed methods to various face datasets and, further, to study identity verification based on image comparisons that are robust to different facial expressions and to occlusion of facial areas by hair or accessories. We also aim to improve the reliability of our identity verification method by analyzing the relationships between lines and angles that define landmarks.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The author declares no conflict of interest.

References

- Bashbaghi, S.; Granger, E.; Sabourin, R.; Parchami, M. Deep learning architectures for face recognition in video surveillance. arXiv 2019, arXiv:1802.09990. [Google Scholar]

- Lee, W.J.; Kim, D.M.; Lee, U.Y.; Cho, J.H.; Kim, M.S.; Hong, J.H.; Hwang, Y.I. A preliminary study of the reliability of anatomical facial landmarks used in facial comparison. J. Forensic Sci. 2018, 64, 519–527. [Google Scholar] [CrossRef] [PubMed]

- Johnston, R.A.; Bindemann, M. Introduction to forensic face matching. Appl. Cogn. Psychol. 2013, 27, 697–699. [Google Scholar] [CrossRef]

- Li, Y.; Meng, J.; Luo, Y.; Huang, X.; Qi, G.; Zhu, Z. Deep convolutional neural network for real and fake face discrimination. In Proceedings of the Chinese Intelligent Systems Conference, Shenzhen, China, 24–25 October 2020; pp. 590–598. [Google Scholar] [CrossRef]

- FISWG. Guidelines for fa7ial Comparison Methods. Available online: www.fiswg.org (accessed on 8 August 2018).

- Rajesh, V.; Navdha, B.; Arnav, B.; Kewal, K. Towards facial recognition using likelihood ratio approach to facial landmark indices from images. Forensic Sci. Int. Rep. 2022, 5, 100254. [Google Scholar]

- Kleinberg, K.F.; Siebert, J.P. A study of quantitative comparisons of photographs and video images based on landmark derived feature vectors. Forensic Sci. Int. 2012, 219, 248–258. [Google Scholar] [CrossRef] [PubMed]

- Juhong, A.; Pintavirooj, C. Face recognition based on facial landmark detection. In Proceedings of the 10th Biomedical Engineering International Conference, Hokkaido, Japan, 31 August–2 September 2017; pp. 1–4. [Google Scholar] [CrossRef]

- Houlton, T.M.R.; Steyn, M. Finding Makhubu: A morphological forensic facial comparison. Forensic Sci. Int. 2018, 285, 77–82. [Google Scholar] [CrossRef] [PubMed]

- Stavrianos, C.; Zouloumis, L.; Papadopoulos, C.; Emmanouil, J.; Petalotis, N.; Tsakmalis, P. Facial mapping: Review of current methods. Res. J. Med. Sci. 2012, 6, 13–20. [Google Scholar] [CrossRef]

- Moreton, R.; Morley, J. Investigation into the use of photoanthropometry in facial image comparison. Forensic Sci. Int. 2011, 212, 231–237. [Google Scholar] [CrossRef]

- Kleinberg, K.F.; Vanezis, P.; Burighton, A.M. Failure of anthropometry as a facial identification technique using high-quality photographs. J. Forensic Sci. 2007, 52, 779–783. [Google Scholar] [CrossRef]

- Atsuchi, M.; Tsuji, A.; Usumoto, Y.; Yoshino, M.; Ikeda, N. Assessment of some problematic factors in facial image identification using a 2D/3D superimposition technique. Leg. Med. 2013, 15, 244–248. [Google Scholar] [CrossRef]

- Porighter, G.; Doran, G. An anatomical and photographic technique for forensic facial identification. Forensic Sci. Int. 2000, 114, 97–105. [Google Scholar] [CrossRef]

- Catterick, T. Facial measurements as an aid to recognition. Forensic Sci. Int. 1992, 56, 23–27. [Google Scholar] [CrossRef]

- NEC Corporation FR White Paper. Available online: https://www.nec.com/en/global/solutions/safety/pdf/NEC-FR_white-paper.pdf (accessed on 20 August 2021).

- Thorat, S.B.; Nayak, S.K.; Dandale, J.P. Facial recognition technology: An analysis with scope in India. Int. J. Comput. Netw. Inf. Secur. 2010, 8, 325–330. [Google Scholar]

- Giuseppe, A.; Fabrizio, F.; Claudio, G.; Claudio, V. A Comparison of face verification with facial landmarks and deep features. In Proceedings of the 2018 the Tenth International Conference on Advances in Multimedia, Athens, Greece, 22–26 April 2018; pp. 1–6. [Google Scholar]

- Bakshi, S.; Kumari, S.; Raman, R.; Sa, P.K. Evaluation of periocular over face biometric: A case study. Procedia Eng. 2012, 38, 1628–1633.

- Bottino, A.; Cumani, S. A fast and robust method for the identification of face landmarks in profile images. WSEAS Trans. Comput. 2008, 7, 1250–1259.

- Parkhi, O.M.; Vedaldi, A.; Zisserman, A. Deep face recognition. In Proceedings of the 2015 British Machine Vision Conference, Swansea, UK, 7–10 September 2015; pp. 41.1–41.12.

- Taigman, Y.; Yang, M.; Ranzato, M.A.; Wolf, L. DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Arbab-Zavar, B.; Wei, X.; Bustard, J.D.; Nixon, M.S.; Li, C.-T. On forensic use of biometrics. In Handbook of Digital Forensics of Multimedia Data and Devices Publishing House; Wiley-IEEE Press: Hoboken, NJ, USA, 2007; pp. 207–304.

- Khan, M.A.; Anand, S.J. Suspect identification using local facial attributed by fusing facial landmarks on the forensic sketch. In Proceedings of the 2020 International Conference on Contemporary Computing and Applications (IC3A), Lucknow, India, 5–7 February 2020; pp. 18–186.

- Vezzetti, E.; Marcolin, F.; Tornincasa, S.; Moos, S.; Violante, M.G.; Dagnes, N.; Monno, G.; Uva, A.E.; Fiorentino, M. Facial landmarks for forensic skull-based 3D face reconstruction: A literature review. In Proceedings of the 2016 Augmented Reality, Virtual Reality, and Computer Graphics, Otranto, Italy, 24–27 June 2016; pp. 172–180.

- Zhu, Z.; Luo, Y.; Chen, S.; Qi, G.; Mazur, N.; Zhong, C.; Li, Q. Camera style transformation with preserved self-similarity and domain-dissimilarity in unsupervised person re-identification. J. Vis. Commun. Image Represent. 2021, 80, 103303.

- Sadovnik, A.; Gharbi, W.; Vu, T.; Gallagher, A. Finding your lookalike: Measuring face similarity rather than face identity. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2345–2353.

- Cao, Q.; Ying, Y.; Li, P. Similarity metric learning for face recognition. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2408–2415.

- Tredoux, C. A direct measure of facial similarity and its relation to human similarity perceptions. J. Exp. Psychol. Appl. 2002, 8, 180–193.

- Abudarham, N.; Yovel, G. Same critical features are used for identification of familiarized and unfamiliar faces. Vis. Res. 2019, 157, 105–111.

- Pierrard, J.-S.; Vetter, T. Skin detail analysis for face recognition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

- Yang, J.; Bulat, A.; Tzimiropoulos, G. FAN-Face: A simple orthogonal improvement to deep face recognition. In Proceedings of the 2020 AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12621–12628.

- Macarulla Rodriguez, A.; Geradts, Z.; Worring, M. Likelihood ratios for deep neural networks in face comparison. J. Forensic Sci. 2020, 65, 1169–1183.

- Songsri, K.; Zafeiriou, S. Complement face forensic detection and localization with facial landmarks. arXiv 2019, arXiv:1910.05455.

- Kemelmacher-Shlizerman, I.; Seitz, S.M. Face reconstruction in the wild. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 1746–1753.

- Blanz, V.; Vetter, T. A morphable model for the synthesis of 3D faces. In Proceedings of the SIGGRAPH ’99: 26th Annual Conference on Computer Graphics and Interactive Techniques, Los Angeles, CA, USA, 8–13 August 1999; pp. 187–194.

- Paysan, P.; Knothe, R.; Amberg, B.; Romdhani, S.; Vetter, T. A 3D face model for pose and illumination invariant face recognition. In Proceedings of the IEEE Conference on Advanced Video and Signal Based Surveillance, Genova, Italy, 2–4 September 2009; pp. 296–301.

- Vlasic, D.; Brand, M.; Pfister, H.; Popović, J. Face transfer with multilinear models. ACM Trans. Graph. 2005, 24, 426–433.

- Snavely, N.; Seitz, S.M.; Szeliski, R. Photo tourism: Exploring photo collections in 3D. ACM Trans. Graph. 2006, 25, 835–848.

- Debevec, P.E.; Taylor, C.J.; Malik, J. Modeling and rendering architecture from photographs: A hybrid geometry- and image-based approach. In Proceedings of the 1996 SIGGRAPH, New Orleans, LA, USA, 4–9 August 1996; pp. 11–20.

- Kemelmacher-Shlizerman, I.; Basri, R. 3D face reconstruction from a single image using a single reference face shape. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 394–405.

- Roth, J.; Tong, Y.; Liu, X. Unconstrained 3D face reconstruction. In Proceedings of the Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 2606–2615.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.

- Sharma, M.; Chaudhury, S.; Lall, B. Deep learning based frameworks for image super-resolution and noise-resilient super-resolution. In Proceedings of the 2017 IEEE International Joint Conference on Neural Networks, Anchorage, AK, USA, 14–19 May 2017; pp. 744–751.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Guo, Y.; Zhang, L.; Hu, Y.; He, X.; Gao, J. MS-Celeb-1M: A dataset and benchmark for large-scale face recognition. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 87–102.

- Ogawa, Y.; Wada, B.; Taniguchi, K.; Miyasaka, S.; Imaizumi, K. Photo anthropometric variations in Japanese facial features: Establishment of large-sample standard reference data for personal identification using a three-dimensional capture system. Forensic Sci. Int. 2015, 257, e1–e9.

- Yoshino, M.; Noguchi, K.; Atsuchi, M.; Kubota, S.; Imaizumi, K.; Thomas, C.D.; Clement, J.G. Individual identification of disguised faces by morphometrical matching. Forensic Sci. Int. 2002, 127, 97–103.

- Choi, Y.; Park, H.; Nam, G.P.; Kim, H.; Choi, H.; Cho, J.; Kim, I.-J. K-FACE: A large-scale KIST face database in consideration with unconstrained environments. arXiv 2021, arXiv:2103.02211.

- Liu, Z.; Luo, P.; Wang, X.; Tang, X. Deep learning face attributes in the wild. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3730–3738.

- Choi, S.H.; Lee, Y.J.; Lee, S.J.; Park, K.R.; Kim, J. Age estimation using a hierarchical classifier based on global and local facial features. Pattern Recogn. 2011, 44, 1262–1281.

- Zhu, X.; Ramanan, D. Face detection, pose estimation, and landmark localization in the wild. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 2879–2886.

- Wu, J.; Tse, R.; Heike, C.L.; Shapiro, L.G. Learning to compute the plane of symmetry for human faces. In Proceedings of the 2nd ACM Conference on Bioinformatics, Computational Biology and Biomedicine, Chicago, IL, USA, 1–3 August 2011; pp. 471–474.

- Gross, R.; Matthews, I.; Cohn, J.F.; Kanade, T.; Baker, S. Multi-PIE. In Proceedings of the 2008 8th IEEE International Conference on Automatic Face Gesture Recognition, Amsterdam, The Netherlands, 17–19 September 2008; pp. 1–8.

- Gordon, C.C.; Blackwell, C.L.; Bradtmiller, B.; Parham, J.L.; Barrientos, P.; Paquette, S.P.; Corner, B.D.; Carson, J.M.; Venezia, J.C.; Rockwell, B.M.; et al. 2012 Anthropometric Survey of U.S. Army Personnel: Methods and Summary Statistics; Technical Report NATICK/15-007; U.S. Army Natick Soldier Research, Development and Engineering Center: Natick, MA, USA, 2014.

- Wickens, T.D. Elementary Signal Detection Theory; Oxford University Press: New York, NY, USA, 2001.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).