Abstract

In this study, we propose a simple and effective preprocessing method for subword segmentation based on a data compression algorithm. Compression-based subword segmentation has recently attracted significant attention as a preprocessing method for training data in neural machine translation. Among such methods, BPE/BPE-dropout is one of the fastest and most effective compared to conventional approaches; however, compression-based approaches have the drawback that generating multiple segmentations is difficult because they are deterministic. To overcome this difficulty, we focus on a stochastic string algorithm, called locally consistent parsing (LCP), that has been applied to achieve optimum compression. Employing the stochastic parsing mechanism of LCP, we propose LCP-dropout for multiple subword segmentation that improves BPE/BPE-dropout, and we show that it outperforms various baselines, especially when learning from small training data.

1. Introduction

1.1. Motivation

Subword segmentation has been established as a standard preprocessing method in neural machine translation (NMT) [1,2]. In particular, byte-pair encoding (BPE)/BPE-dropout [3,4] is the most successful compression-based subword segmentation. We propose another compression-based algorithm, denoted by LCP-dropout, that generates multiple subword segmentations for the same input, thus enabling data augmentation, especially for small training data.

In NMT, a set of training data is given to the learning algorithm, where training data are pairs of sentences from the source and target languages. The learning algorithm first transforms each given sentence into a sequence of tokens. In many cases, the tokens correspond to words in the unigram language model.

The extracted words are projected from a high-dimensional space consisting of all words to a low-dimensional vector space by word embedding [5], which enables us to easily handle distances and relationships between words and phrases. The word embedding has been shown to boost the performance of various tasks [6,7] in natural language processing. The space of word embedding is defined by a dictionary constructed from the training data, where each component of the dictionary is called vocabulary. Embedding a word means representing it by a set of related vocabularies.

Constructing an appropriate dictionary is one of the most important tasks in this study. Here, consider the simplest strategy that uses the words themselves in the training data as the vocabularies. If a word does not exist in the current dictionary, it is called an unknown word, and the algorithm decides whether or not to register it in the dictionary. Using a sufficiently large dictionary can reduce the number of unknown words as much as desired; however, as a trade-off, overtraining is likely to occur, so the number of vocabularies is usually limited. As a result, subword segmentation has been widely used to construct a small dictionary with high generalization performance [8,9,10,11,12].

1.2. Related Works

Subword segmentation is a recursive decomposition of a word into substrings. For example, let the word ‘study’ be registered as a current vocabulary. By embedding other words ‘studied’ and ‘studying’, we can learn that these three words are similar; however, each time a new word appears, the number of vocabularies grows monotonically.

On the other hand, when we focus on the common substrings of these words, we can obtain a decomposition, such as ‘stud_y’, ‘stud_ied’, and ‘stud_ying’ with the explicit blank symbol ‘_’; therefore, the idea of subword segmentation is not to register the word itself as a vocabulary but to register its subwords. In this case, ‘study’ and ‘studied’ are regarded as known words because they can be represented by combining subwords already registered. These subwords can also be reused as parts of other words (e.g., student and studied), which can suppress the growth of vocabulary size.

In the last decade, various approaches have been proposed along this line. SentencePiece [13] is a pioneering study based on likelihood estimation over the unigram language model, which has high performance. However, maximum likelihood estimation requires quadratic time in the size of the training data and the length of the longest subword. A simpler subword segmentation [3] was therefore proposed based on BPE [14,15], which is known as one of the fastest data compression algorithms and has many applications, especially in information retrieval [16,17].

BPE-based segmentation starts from a state where a sentence is regarded as a sequence of vocabularies, and the set of vocabularies is initially identical to the set of alphabet symbols (e.g., ASCII characters). BPE calculates the frequency of every bigram, merges all occurrences of the most frequent bigram, and registers the bigram as a new vocabulary. This process is repeated until the number of vocabularies reaches the limit. Thanks to the simplicity of the frequency-based subword segmentation, BPE runs in linear time in the size of the input string.
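As an illustration of the merge loop described above, the following is a minimal sketch in Python. The function name `learn_bpe`, the tie-breaking rule, and the stopping test on a bigram count below two are assumptions made for this example; the sketch recounts bigrams from scratch in every round, so it does not attain the linear running time mentioned in the text.

```python
from collections import Counter


def learn_bpe(symbols, vocab_limit):
    """Naive BPE: repeatedly merge the most frequent bigram.

    `symbols` is a string or a list of symbols; `vocab_limit` is the
    maximum number of vocabularies (hypothetical parameter name).
    """
    tokens = list(symbols)
    vocab = set(tokens)          # initially the set of alphabet symbols
    merges = []                  # merged bigrams in priority order
    while len(vocab) < vocab_limit:
        pairs = Counter(zip(tokens, tokens[1:]))
        if not pairs:
            break
        # Most frequent bigram; ties are broken lexicographically (assumption).
        (a, b), count = max(pairs.items(), key=lambda kv: (kv[1], kv[0]))
        if count < 2:
            break
        merged, i = [], 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == a and tokens[i + 1] == b:
                merged.append(a + b)   # merge this occurrence
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
        vocab.add(a + b)               # register the bigram as a new vocabulary
        merges.append((a, b))
    return tokens, vocab, merges
```

For instance, `learn_bpe("abababab", 3)` performs a single merge of the bigram `ab` and then stops because the vocabulary limit is reached.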

However, frequency-based approaches may generate inconsistent subwords for the same substring occurrences. For example, ‘impossible’ and its substring ‘possible’ are possibly decomposed into undesirable subwords, such as ‘po_ss_ib_le’ and ‘i_mp_os_si_bl_e’, depending on the frequency of bigrams. Such merging disagreements can also be caused by misspellings of words or grammatical errors. BPE-dropout [4] proposed a robust subword segmentation for this problem by ignoring each merge with a certain probability. It has been confirmed that BPE-dropout can be trained with higher accuracy than the original BPE and SentencePiece on various languages.

1.3. Our Contribution

We propose LCP-dropout: a novel compression-based subword segmentation employing the stochastic compression algorithm, called locally consistent parsing (LCP) [18,19], to improve the shortcomings of BPE. Here, we describe an outline of the original LCP. Suppose we are given an input string and a set of vocabularies, where, similarly to BPE, the set of vocabularies is initially identical to the set of symbols appearing in the string. LCP randomly assigns a binary label to each vocabulary. Then, we obtain a binary string corresponding to the input string, where the bigram ‘10’ works as a landmark. LCP merges any bigram in the input string corresponding to a landmark in the binary string, and adds the bigram to the set of vocabularies. The above process is repeated until the number of vocabularies reaches the limit.

By this random assignment, it is expected that any sufficiently long substring contains a landmark. Furthermore, we note that two different landmarks never overlap each other; therefore, LCP can merge bigrams appropriately, avoiding the undesirable subword segmentation that occurs in BPE. Using these characteristics, LCP has been theoretically shown to achieve almost optimal compression [19]. The mechanism of LCP has also been mainly applied to information retrieval [18,20,21].
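The claim that a sufficiently long substring is expected to contain a landmark can be checked numerically in a simplified setting. The sketch below assumes that the m symbols of the substring are pairwise distinct, so that their labels are independent and uniform; this assumption is ours and does not hold for substrings with repeated symbols. Under it, the only label strings without ‘10’ have the form 0…01…1, so the probability of finding no landmark is (m + 1)/2^m. The function name is hypothetical.

```python
from itertools import product


def no_landmark_probability(m):
    """Probability that m independent uniform binary labels contain no '10'.

    Computed by brute-force enumeration of all 2**m label strings.
    """
    total = 0
    without_landmark = 0
    for bits in product("01", repeat=m):
        total += 1
        if "10" not in "".join(bits):
            without_landmark += 1
    return without_landmark / total
```

Under this independence assumption, the probability of missing a landmark decays roughly as m/2^m, which is consistent with the expectation stated above.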

A notable feature of the stochastic algorithm is that LCP assigns a new label to each vocabulary for each execution. Owing to this randomness, the LCP-based subword segmentation is expected to generate different subword sequences representing the same input; thus, it is more robust than BPE/BPE-dropout. Moreover, these multiple subword sequences can be considered as data augmentation for small training data in NMT.

LCP-dropout consists of two strategies: landmarks obtained by random labeling of all vocabularies, and dropout of merging bigrams depending on the rank in the frequency table. Our algorithm requires no segmentation training in addition to counting by BPE and labeling by LCP, and it uses standard BPE/LCP at test time; therefore, our algorithm is simple. With various language corpora including small datasets, we show that LCP-dropout outperforms the baseline algorithms: BPE/BPE-dropout/SentencePiece.

2. Background

We use the following notations throughout this paper. Let $\Sigma$ be the set of alphabet symbols, including the blank symbol. A sequence S formed by symbols is called a string. $S[i]$ and $S[i,j]$ are the i-th symbol and the substring from $S[i]$ to $S[j]$ of S, respectively. We assume the meta symbol ‘−’ not in $\Sigma$ to explicitly represent each subword in S. For a string S from $\Sigma \cup \{-\}$, a maximal substring of S including no − is called a subword. For example, contains the subwords in , respectively.

In subword segmentation, the algorithm decomposes all the symbols in S by the meta symbol. When a trigram $a{-}b$ is merged, the meta symbol is erased and the new subword $ab$ is added to the vocabulary, i.e., $ab$ is treated as a single vocabulary.

In the following, we describe previously proposed subword segmentation algorithms, called SentencePiece (Kudo [13]), BPE (Sennrich et al. [3]), and BPE-dropout (Provilkov et al. [4]). We assume that our task in NMT is to predict a target sentence T given a source sentence S, where these methods including our approach are not task-specific.

2.1. SentencePiece

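SentencePiece [13] can generate different segmentations for each execution. Here, we outline SentencePiece in the unigram language model. Given a set of vocabularies, V, a sentence T, and the probability $p(x)$ of occurrence of $x \in V$, the probability of the partition $\mathbf{x} = (x_1, \ldots, x_n)$ for $T = x_1 \cdots x_n$ is represented as $P(\mathbf{x}) = \prod_{i=1}^{n} p(x_i)$, where $\sum_{x \in V} p(x) = 1$. The optimum partition for T is obtained by searching for the $\mathbf{x}$ that maximizes $P(\mathbf{x})$ from all candidate partitions $\mathbf{x} \in S(T)$.

Given a set of sentences, D, as training data for a language, the subword segmentation for D can be obtained through the maximum likelihood estimation of the following $\mathcal{L}$ with $p(x_i)$ as a hidden variable by using the EM algorithm, where $X^{(s)}$ is the s-th sentence in D.

$$\mathcal{L} = \sum_{s=1}^{|D|} \log P(X^{(s)}) = \sum_{s=1}^{|D|} \log \sum_{\mathbf{x} \in S(X^{(s)})} P(\mathbf{x})$$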

SentencePiece was shown to achieve significant improvements over the method based on subword sequences; however, this method is rather complicated because it requires a unigram language model to predict the probability of subword occurrence, EM algorithm to optimize the lexicon, and Viterbi algorithm to create segmentation samples.
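To make the role of the Viterbi algorithm concrete, the following sketch searches for the partition that maximizes the product of unigram probabilities for a fixed vocabulary. It is a minimal illustration, not the SentencePiece implementation: the function name `best_segmentation` and the toy probabilities in the usage note are assumptions, and the EM estimation of the probabilities and the sampling of alternative segmentations are omitted.

```python
import math


def best_segmentation(text, probs):
    """Return the most probable partition of `text` and its log-probability.

    `probs` maps each vocabulary (subword) to its occurrence probability.
    """
    n = len(text)
    best = [-math.inf] * (n + 1)   # best[j]: best log-probability of text[:j]
    back = [0] * (n + 1)           # back[j]: start index of the last subword
    best[0] = 0.0
    max_len = max(len(w) for w in probs)
    for j in range(1, n + 1):
        for i in range(max(0, j - max_len), j):
            piece = text[i:j]
            if piece in probs and best[i] > -math.inf:
                score = best[i] + math.log(probs[piece])
                if score > best[j]:
                    best[j] = score
                    back[j] = i
    if best[n] == -math.inf:
        raise ValueError("text cannot be segmented with the given vocabulary")
    pieces, j = [], n
    while j > 0:                   # follow back-pointers from the end
        pieces.append(text[back[j]:j])
        j = back[j]
    return pieces[::-1], best[n]
```

With the toy vocabulary `{"stud": 0.3, "y": 0.2, "ied": 0.2}` plus the single characters of ‘studied’ at probability 0.05 each, the call `best_segmentation("studied", probs)` selects the partition into `stud` and `ied`.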

2.2. BPE and BPE-Dropout

BPE [14] is one of the practical implementations of Re-pair [15], which is known as the algorithm with the highest compression ratio. Re-pair counts the frequency of occurrence of all bigrams in the input string T. For the most frequent bigram $ab$, it replaces all occurrences of $ab$ in T, i.e., all positions i such that $T[i, i+1] = ab$, with some unused character z. This process is repeated until there are no more frequent bigrams in T. The compressed T can be recursively decoded by the stored substitution rules $z \to ab$.

Since the naive implementation of Re-pair requires $O(|T|^2)$ time, we use a complex data structure to achieve linear time; however, it is not practical for large-scale data because it consumes $\Omega(|T|)$ of space. As a result, we usually split T into substrings of a constant length and process each by the naive Re-pair without special data structure, called BPE. Naturally, there is a trade-off between the size of the split and the compression ratio. BPE-based subword segmentation [3] (called BPE simply) determines the priority of bigrams according to their frequency and adds the merged bigrams as the vocabularies.
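The naive Re-pair procedure and its recursive decoding can be sketched as follows. This is an illustrative example under our own assumptions: fresh symbols are written as `<0>`, `<1>`, and so on, a bigram is considered frequent if it is counted at least twice, and ties are broken lexicographically. It recounts all bigrams in every round and therefore corresponds to the quadratic-time variant.

```python
from collections import Counter


def repair_compress(text):
    """Naive Re-pair: replace the most frequent bigram by a fresh symbol."""
    seq = list(text)
    rules = {}                      # fresh symbol z -> (a, b)
    next_id = 0
    while True:
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = max(pairs.items(), key=lambda kv: (kv[1], kv[0]))
        if count < 2:               # no more frequent bigrams
            break
        z = f"<{next_id}>"          # unused symbol (multi-character, so it
        next_id += 1                # cannot collide with input characters)
        rules[z] = pair
        out, i = [], 0
        while i < len(seq):
            if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
                out.append(z)
                i += 2
            else:
                out.append(seq[i])
                i += 1
        seq = out
    return seq, rules


def repair_decode(seq, rules):
    """Expand the stored substitution rules until only input symbols remain."""
    out = []
    stack = list(reversed(seq))
    while stack:
        s = stack.pop()
        if s in rules:
            a, b = rules[s]
            stack.append(b)         # push b first so that a is expanded first
            stack.append(a)
        else:
            out.append(s)
    return "".join(out)
```

Decoding the compressed sequence with the stored rules restores the original string, e.g., `repair_decode(*repair_compress("abracadabra abracadabra"))` returns the input.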

Since BPE is a deterministic algorithm, it splits a given T in one way. Thus, unlike a stochastic approach (e.g., [13]), it cannot easily generate multiple partitions. As a result, BPE-dropout [4], which ignores the merging process with a certain probability, was proposed. In BPE-dropout, for the current T and the most frequent bigram $ab$, for each occurrence i satisfying $T[i, i+1] = ab$, merging is dropped with a certain small probability p (e.g., $p = 0.1$). This mechanism makes BPE-dropout probabilistic and generates a variety of splits. BPE-dropout has been reported to outperform SentencePiece in various languages. Additionally, BPE-based methods are faster and easier to implement than likelihood-based approaches.
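The per-occurrence dropout described above can be sketched as follows. The function name and the representation of the merge table as an ordered list of bigrams are assumptions for this example; the reference implementation of BPE-dropout [4] may organize the loop differently.

```python
import random


def apply_merges_with_dropout(symbols, merges, p, rng):
    """Apply BPE merges in priority order, dropping each occurrence with probability p.

    `merges` is an ordered list of bigrams (a, b); `rng` is a random.Random
    instance so that runs can be reproduced.
    """
    tokens = list(symbols)
    for a, b in merges:
        out, i = [], 0
        while i < len(tokens):
            is_match = (i + 1 < len(tokens)
                        and tokens[i] == a and tokens[i + 1] == b)
            # rng.random() lies in [0, 1): p = 0 always merges, p = 1 never does.
            if is_match and rng.random() >= p:
                out.append(a + b)
                i += 2
            else:
                out.append(tokens[i])
                i += 1
        tokens = out
    return tokens
```

With p = 0 the function reproduces the deterministic BPE segmentation, and with p = 1 it returns the input symbols unmerged; intermediate values yield different splits of the same string across calls.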

2.3. LCP

Frequency-based compression algorithms (e.g., [14,15]) are known to be not optimum from a theoretical point of view. Optimum compression here means a polynomial-time algorithm that satisfies with the output of the algorithm for the input T and an optimum solution . Note that computing is NP-hard [22].

For example, consider a string . Assuming the rank of these frequencies: , merging for T is possibly ; however, the desirable merging would be considering the similarity of these substrings.

Since such pathological merging cannot be prevented by frequency information alone, frequency-based algorithms cannot obtain asymptotically optimum compression [23]. Various linear time and optimal compressions have been proposed to improve this drawback. LCP is one of the simplest optimum compression algorithms. The original LCP, similar to Re-pair, is a deterministic algorithm. Recently, the introduction of probability into LCP [19] has been proposed, and in this study, we focus on the probabilistic variant. The following is a brief description of the probabilistic LCP.

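We are given an input string $T = a_1 a_2 \cdots a_n$ of length n and a set of vocabularies, V. Here, V is initialized as the set of all characters appearing in T.

- Randomly assign a label $L(a) \in \{0, 1\}$ to each $a \in V$.

- According to $L(a)$, compute the sequence $L(T) = L(a_1) L(a_2) \cdots L(a_n)$.

- Merge all bigrams $a_i a_{i+1}$ provided $L(a_i a_{i+1}) = 10$.

- Set $V = V \cup \{a_i a_{i+1}\}$ and repeat the above process.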
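The four steps above can be sketched in Python as follows. This is a minimal illustration under our own assumptions: the function names, the `max_rounds` guard against inputs that never produce a landmark (e.g., a run of a single repeated symbol), and the use of a seeded generator are not part of the original description. Because a round may merge several distinct bigrams at once, the vocabulary may slightly exceed the limit when the loop stops.

```python
import random


def merge_landmarks(tokens, labels):
    """Merge every bigram whose labels form the landmark '10'."""
    out, i = [], 0
    while i < len(tokens):
        if (i + 1 < len(tokens)
                and labels[tokens[i]] == 1 and labels[tokens[i + 1]] == 0):
            out.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def lcp(text, vocab_limit, seed=0, max_rounds=1000):
    """Probabilistic LCP: relabel and merge until the vocabulary limit is reached."""
    rng = random.Random(seed)
    tokens = list(text)
    vocab = set(tokens)             # initially all characters appearing in text
    for _ in range(max_rounds):
        if len(vocab) >= vocab_limit or len(tokens) < 2:
            break
        # Sorting makes the labeling reproducible for a fixed seed.
        labels = {s: rng.randint(0, 1) for s in sorted(vocab)}
        tokens = merge_landmarks(tokens, labels)
        vocab |= set(tokens)        # add the merged bigrams to the vocabulary
    return tokens, vocab
```

A left-to-right scan suffices in `merge_landmarks` because the second symbol of a landmark has label 0 and therefore cannot start another landmark.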

The difference between LCP and BPE is that BPE merges bigrams with respect to frequencies, whereas LCP pays no attention to them. Instead, LCP merges based on the binary labels assigned randomly. The most important point is that any two occurrences of ‘10’ never overlap. For example, when T contains a trigram $abc$, there is no possible assignment allowing $L(ab) = 10$ and $L(bc) = 10$ simultaneously. By this property, LCP can avoid the problem that frequently occurs in BPE. Although LCP theoretically guarantees almost optimum compression, as far as the authors know, this study is the first result of applying LCP to machine translation.

3. Our Approach: LCP-Dropout

BPE-dropout allows diverse subword segmentation for BPE by ignoring bigram merging with a certain probability; however, since BPE is a deterministic algorithm, it is not trivial to generate various candidates of bigram. In this study, we propose an algorithm that enables multiple subword segmentation for the same input by combining the theory of LCP with the original strategy of BPE.

3.1. Algorithm Description

We define the notations used in our LCP-dropout (Algorithm 1) and its subroutine (Algorithm 2). Let $\Sigma$ be an alphabet and ‘_’ be the explicit blank symbol not in $\Sigma$. A string w formed from $\Sigma$ is called a word, denoted by $w \in \Sigma^*$, and a string $x \in (\Sigma \cup \{\_\})^*$ is called a sentence.

We also assume the meta symbol ‘−’ not in . By this, a sentence x is extended to have all possible merges: Let be the string of all symbols in x separated by −, e.g., for . For strings x and y, if y is obtained by removing some occurrences of − in x, then we express the relation and y is said to be a subword segmentation of x.

After merging (i.e., is replaced by ), the substring is treated as a single symbol. Thus, we extend the notion of bigram to vocabularies of length more than two. For a string of the form such that each contains no −, each is defined to be a bigram consisting of the vocabularies and .

Algorithm 1. LCP-dropout.

Algorithm 2. Subroutine of LCP-dropout.

3.2. Example Run

Table 1 presents an example of subword segmentation using LCP-dropout. Here, the input X consists of a single sentence . The hyperparameters are . First, the set of vocabularies is initialized to ; for each , a label is randomly assigned (depth 0). Next, find all occurrences of 10 in L, and the corresponding bigrams are merged depending on their frequencies. Here, but only is top-k bigram assigned 10, and then is merged to . The resulting string is shown in the depth 1 over the new vocabularies . This process is repeated while for the next m. The condition terminates the inner-loop of LCP-dropout, and then the subword is generated. Since , the algorithm generates the next subword segmentations for the same input. Finally, we obtain the multiple subword segmentation and for the same input string.
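The run described above can be approximated by the following sketch. It is one plausible reading of the description, not the authors' Algorithm 1, and it rests on several assumptions that should be checked against the original pseudocode: `v` bounds the total number of vocabularies, `ell` is treated as a fraction of `v` that bounds the vocabularies merged in one pass, `k` is treated as the fraction of distinct bigrams (ranked by frequency) that are eligible for merging, and the guards `max_passes` and `max_depth` are added only to ensure termination.

```python
import math
import random
from collections import Counter


def lcp_dropout(sentence, v, ell, k, seed=0, max_passes=50, max_depth=200):
    """Generate multiple subword segmentations of one sentence (hedged sketch)."""
    rng = random.Random(seed)
    alphabet = set(sentence)
    merged_union = set()            # merged vocabularies over all passes
    results = []
    for _ in range(max_passes):     # outer loop: one segmentation per pass
        tokens, new_vocab = list(sentence), set()
        for _depth in range(max_depth):   # inner loop: repeated label assignment
            if len(new_vocab) >= math.ceil(ell * v) or len(tokens) < 2:
                break
            labels = {s: rng.randint(0, 1) for s in sorted(alphabet | new_vocab)}
            freq = Counter(zip(tokens, tokens[1:]))
            ranked = sorted(freq, key=lambda pr: (-freq[pr], pr))
            top = set(ranked[:max(1, math.ceil(k * len(ranked)))])
            out, i = [], 0
            while i < len(tokens):
                pair = tuple(tokens[i:i + 2])
                # Merge only top-ranked bigrams labeled with the landmark '10'.
                if (len(pair) == 2 and pair in top
                        and labels[pair[0]] == 1 and labels[pair[1]] == 0):
                    out.append(pair[0] + pair[1])
                    new_vocab.add(pair[0] + pair[1])
                    i += 2
                else:
                    out.append(tokens[i])
                    i += 1
            tokens = out
        results.append(tokens)
        merged_union |= new_vocab
        if len(alphabet) + len(merged_union) >= v:
            break
    return results
```

Each returned list is a segmentation of the same input, so concatenating its subwords restores the sentence; different passes generally yield different segmentations because the labels are redrawn.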

Table 1.

Example of multiple subword segmentation using LCP-dropout for the single sentence ‘’ with the hyperparameters , where the meta symbol − is omitted, and L is the label of each vocabulary assigned by LCP. The resulting subword segmentation is .

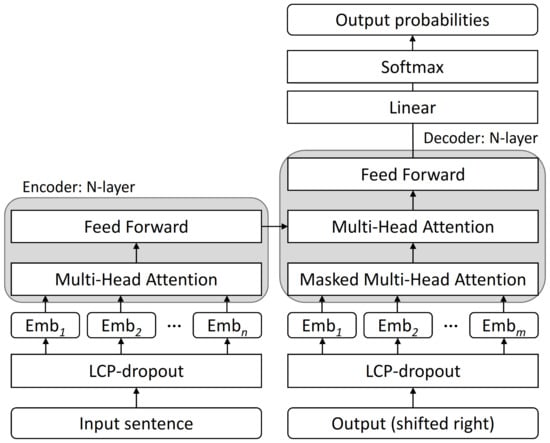

3.3. Framework of Neural Machine Translation

Figure 1 shows the framework of our transformer-based machine translation model with LCP-dropout. Transformer is the most successful NMT model [24]. The model mainly consists of an encoder and a decoder. The encoder converts the input sentence in the source language into a word embedding (Emb in Figure 1), taking into account the positional information of the characters. Here, the notion of word is extended to that of subword in this study. The subwords are obtained by our proposed method, LCP-dropout. Next, the correspondences in the input sentence are acquired as attention (Multi-Head Attention). Then, the normalization is performed through a forward propagation network formed by linear transformation, activation by ReLU function, and linear transformation. These processes are performed in N = 6 layers in the encoder.

Figure 1.

Framework of our neural machine translation model with LCP-dropout.

The decoder receives the candidate sentence generated by the encoder and the input sentence for the decoder. Then, it acquires the correspondence between those sentences as attention (Multi-Head Attention). This process is also performed in N = 6 layers. Finally, the predicted probability of each label is calculated by linear transformation and softmax function.

4. Experimental Setup

4.1. Baseline Algorithms

Baseline algorithms are SentencePiece [13] with the unigram language model and BPE/BPE-dropout [3,4]. SentencePiece takes the hyperparameters l and $\alpha$, where l specifies how many best segmentations for each word are produced before sampling and $\alpha$ controls the smoothness of the sampling distribution. In our experiment, we used the values that performed best on different data in the previous studies.

BPE-dropout takes the hyperparameter p, where during segmentation, at each step, some merges are randomly dropped with the probability p. If $p = 0$, the segmentation is equal to the original BPE, and if $p = 1$, the algorithm outputs the input string itself. Thus, the value of p can be used to control the granularity of segmentation. In our experiment, we used $p = 0$ for the original BPE and $p = 0.1$ for BPE-dropout, which gave the best performance.

4.2. Data Sets, Preprocessing, and Vocabulary Size

We verified the performance of the proposed algorithm for a wide range of datasets with different sizes and languages. Table 2 summarizes the details of the datasets and hyperparameters. These data are used to compare the performance of LCP-dropout and baselines (SentencePiece/BPE/BPE-dropout) with appropriate hyperparameters and vocabulary sizes shown in [4].

Table 2.

Overview of the datasets and hyperparameters. The hyperparameter v (vocabulary size) is common to all algorithms (baselines and ours) and others (ℓ and k) are specific to LCP-dropout only.

Before subword segmentation, we preprocess all datasets with the standard Moses toolkit (https://github.com/moses-smt/mosesdecoder, accessed on 5 January 2022). For Japanese and Chinese, subword segmentations are trained almost from raw sentences because these languages have no explicit word boundaries, and thus the Moses tokenizer does not work correctly.

Based on recent research on the effect of vocabulary size on translation quality, the vocabulary size is adjusted according to the dataset size in our experiments (Table 2).

To verify the performance of the proposed algorithm for small training data, we use News Commentary v16 (https://data.statmt.org/news-commentary/v16, accessed on 5 January 2022), a subset of WMT14 (https://www.statmt.org/wmt14/translation-task.html, accessed on 1 February 2022), as well as KFTT (http://www.phontron.com/kftt, accessed on 5 January 2022). In addition, we use a large training dataset from WMT14. The number of training steps is set to 200,000 for all data. In training, pairs of sentences of source and target languages were batched together by approximate length. As shown in Table 2, the batch size was standardized across all datasets.

4.3. Model, Optimizer, and Evaluation

NMT was realized by the seq2seq model, which takes a sentence in the source language as input and outputs a corresponding sentence in the target language [25]. The transformer, an improvement of the seq2seq model, is the most successful NMT model [24].

In our experiments, we used OpenNMT-tf [26], a transformer-based NMT, to compare LCP-dropout and the baseline algorithms. The parameters of OpenNMT-tf were set as in the experiment of BPE-dropout [4]. The batch size was set to 3072 for training and 32 for testing. We also used the regularization and optimization procedure as described in BPE-dropout [4].

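The quality of machine translation is quantitatively evaluated by the BLEU score, i.e., the similarity between the result and the reference of translation. It is calculated using the following formula based on the number of matches in their n-grams. Let $t_i$ and $r_i$ be the i-th translation and reference sentences, respectively.

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} \frac{1}{N} \log p_n\right), \qquad p_n = \frac{\sum_i \left(\text{number of } n\text{-grams matched between } t_i \text{ and } r_i\right)}{\sum_i \left(\text{number of } n\text{-grams in } t_i\right)}$$

where N is a small constant (e.g., $N = 4$), and $BP = \exp(1 - len_r / len_t)$ is the brevity penalty when $len_t < len_r$, where $BP = 1$ otherwise; here, $len_t$ and $len_r$ denote the total lengths of the translations and the references, respectively. In this study, we use SacreBLEU [27]. For Chinese, we add the option --tok zh to SacreBLEU. Meanwhile, we use character-based BLEU for Japanese.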
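For reference, a corpus-level BLEU of the standard form (geometric mean of clipped n-gram precisions multiplied by a brevity penalty) can be sketched as below. This is an illustrative implementation with hypothetical function names; it takes pre-tokenized sentences and one reference per translation, applies no smoothing, and will not reproduce SacreBLEU scores exactly because SacreBLEU performs its own tokenization.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams occurring in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(translations, references, max_n=4):
    """Corpus-level BLEU over paired token lists, without smoothing."""
    matches = [0] * max_n
    totals = [0] * max_n
    len_t = len_r = 0
    for t, r in zip(translations, references):
        len_t += len(t)
        len_r += len(r)
        for n in range(1, max_n + 1):
            t_counts, r_counts = ngrams(t, n), ngrams(r, n)
            # Counter intersection clips each n-gram count by the reference count.
            matches[n - 1] += sum((t_counts & r_counts).values())
            totals[n - 1] += sum(t_counts.values())
    if min(matches) == 0:
        return 0.0                  # some precision is zero
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    bp = 1.0 if len_t >= len_r else math.exp(1 - len_r / len_t)
    return bp * math.exp(log_precision)
```

A character-based variant, as used for Japanese, is obtained by passing lists of characters instead of lists of words.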

5. Experiments and Analysis

All experiments were conducted using the following: OS: Ubuntu 20.04.2 LTS, CPU: Intel(R) Xeon(R) W-2135 CPU @ 3.70 GHz, GPU: GeForce RTX 2080 Ti Rev. A, Memory: 64 GB RAM, Storage: 2TB SSD, Python-3.8.6, SentencePiece-0.1.96 (https://pypi.org/project/sentencepiece/, accessed on 5 January 2022) (Python wrapper for SentencePiece including BPE/BPE-dropout runtime). The numerical results are averages of three independent trials.

5.1. Estimation of Hyperparameters for LCP-Dropout

First, we estimate suitable hyperparameters for LCP-dropout. Table 3 summarizes the effect of hyperparameters on the proposed LCP-dropout. This table shows the details of multiple subword segmentation using LCP-dropout and BLEU scores for the language pair of English (En) and German (De) from News Commentary v16 (Table 2). For each threshold , En and De indicate the number of multiple subword sequences generated for the corresponding language, respectively. The last two values are the BLEU scores for De → En with and for , respectively.

Table 3.

Experimental results of LCP-dropout for News Commentary v16 (Table 2) with respect to the specified hyperparameters, where the translation task is De → En. Bold indicates the best score.

The threshold k controls the dropout rate, and ℓ contributes to the multiplicity of the subword segmentation. The results show that k and ℓ affect the learning accuracy (BLEU). The best result is obtained when . This can be explained by the results in Table 4, which show the depth of the executed inner loop of the LCP-dropout for randomly assigning to vocabularies, where, when , means the average before the outer-loop terminates. As a result, the larger this value is, the more likely it is that longer subwords will be generated; however, unlike BPE-dropout, the value of k alone is not enough to generate multiple subwords. The proposed LCP-dropout guarantees the diversity by initializing the subword segmentation by ℓ . Using this result, we fix as the hyperparameter of LCP-dropout.

Table 4.

Depth of label assignment in LCP-dropout.

5.2. Comparison with Baselines

Table 5 summarizes the main results. We show BLEU scores for News Commentary v16 and KFTT: En and De are the same as in Table 3. In addition to these languages, we set French (Fr), Japanese (Ja), and Chinese (Zh). For each language, we show the average of the number of multiple subword sequences generated by LCP-dropout. For almost all datasets, LCP-dropout outperforms the baseline algorithms. Meanwhile, we use the best ones reported in the previous study for the hyperparameters of BPE-dropout and SentencePiece.

Table 5.

Experimental results of LCP-dropout (denoted by LCP), BPE-dropout (denoted by BPE), and SentencePiece (denoted by SP) on various languages in Table 2 (small corpus: News Commentary v16 and KFTT, and large corpus: WMT14), where ‘multiplicity’ denotes the average number of sequences generated per input string. Bold indicates the best score.

Table 5 also shows the effect of alphabet size on subword segmentation. In general, Japanese (Ja) and Chinese (Zh) alphabets are very large, even if we limit them to the symbols in common use; therefore, the average length of words is small and capturing subword semantics is difficult. We confirmed that LCP-dropout has higher BLEU scores than other methods for these languages.

Table 5 also presents the BLEU scores for a large corpus (WMT14) for the translation De → En. This experiment shows that LCP-dropout cannot outperform baselines with the hyperparameter we set. This is because the ratio of the vocabulary size to dropout rate k is not appropriate. As data to support this conjecture, it can be confirmed that the multiplicity in the large datasets is much smaller than that of small corpus (Table 5). This is caused by the reduced repetitions of label assignments, as shown in Table 6 compared to Table 4. The results show that the depth of the inner loop is significantly reduced, which is why enough subword sequences cannot be generated.

Table 6.

Depth of label assignment for large corpus.

Table 7 presents several translation results. The ‘Reference’ represents the correct translation for each case, and the BLEU score is obtained from the pair of the reference and each translation result. We also show the average length for each reference sentence indicated by the ‘ave./word’. These results show the characteristics of successful and unsuccessful translations by the two algorithms related to the length of words.

Table 7.

Examples of translated sentences by LCP-dropout and BPE-dropout with the reference translation for News Commentary v16. We show the average word length (ave./word) for each reference sentence as well as the average subword length (ave./subword) generated by the respective algorithms for the entire corpus. We also show the BLEU scores between the references and translated sentences as well as their standard deviations (SD).

Considering subword segmentation as a parsing tree, LCP produces a balanced parsing tree, whereas the tree produced by BPE tends to be longer for a certain path. For example, for a substring , LCP tends to generate subwords such as , while BPE generates them such as . In this example, the average length of the former is shorter than that of the latter. This trend is supported by the experimental results in Table 7 showing the average length of all subwords generated by LCP/BPE-dropout for real datasets. Due to this property, when the vocabulary size is fixed, LCP tends not to generate subwords of approximate length because it decomposes a longer word into excessively short subwords.

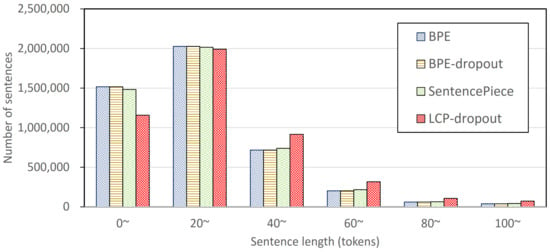

Figure 2 shows the distributions of sentence length of English. The sentence length denotes the number of tokens in a sentence. BPE-dropout is a well-known fine-grained segmentation approach. The figure shows that LCP-dropout produces more fine-grained segmentation than the other three segmentation approaches; therefore, LCP-dropout is considered to be superior in subword segmentation for languages consisting of short words. Table 5 including the translation results for Japanese and Chinese also supports these characteristics.

Figure 2.

Distribution of sentence length. The number of tokens in each sentence by LCP-dropout tends to be larger than the others: BPE, BPE-dropout, and SentencePiece.

6. Conclusions, Limitations, and Future Research

6.1. Conclusions and Limitations

In this study, we proposed the LCP-dropout as an extension of BPE-dropout [4] for multiple subword segmentation by applying a near-optimum compression algorithm. The proposed LCP-dropout can properly decompose strings without background knowledge of the source/target language by randomly assigning binary labels to vocabularies. This mechanism allows generating consistent multiple segmentations for the same string. As shown in the experimental results, LCP-dropout enables data augmentation for small datasets, where sufficient training data are unavailable on minor languages or limited fields.

Multiple segmentation can also be achieved by likelihood-based methods. After SentencePiece [13], various extensions have been proposed [28,29]. In contrast to these studies, our approach focuses on a simple linear-time compression algorithm. Our algorithm does not require any background knowledge of the language, compared to word replacement-based data augmentation [30,31], where some words in the source/target sentence are swapped with other words preserving grammatical/semantic correctness.

6.2. Future Research

The effectiveness of LCP-dropout was confirmed for almost all small corpora. Unfortunately, the optimal hyperparameter obtained in this study did not work well for a large corpus. Further, the learning accuracy was found to be affected by the alphabet size of the language. Future research directions include an adaptive mechanism for determining the hyperparameters depending on training data and alphabet size.

In the experiments in this paper, we considered word-by-word subword decomposition. On the other hand, multi-words are known to violate the compositionality of language; therefore, by considering multi-words as longer words and performing subword decomposition, LCP-dropout can be applied to language processing related to multi-words. In this study, subword segmentation was applied to machine translation. To improve the BLEU score, there are other approaches such as data augmentation [32]. Combining LCP-dropout with them is one interesting approach. In this paper, we handled several benchmark datasets with major languages. Recently, machine translation of low-resource languages has become an important task [33]. Applying LCP-dropout to this task is also important future work.

Although the proposed LCP-dropout is currently applied only to machine translation, we plan to apply our method to other linguistic tasks including sentiment analysis, parsing, and question answering in future studies.

Author Contributions

Conceptualization, H.S.; methodology, T.I.; software, K.N.; validation, T.O. and H.S.; formal analysis, H.S.; investigation, H.S.; resources, K.N.; data curation, K.S. and K.Y.; writing—original draft preparation, H.S.; writing—review and editing, T.O., K.S. and H.S.; visualization, K.S. and H.S.; supervision, H.S.; project administration, H.S.; funding acquisition, H.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded in part by JSPS KAKENHI (Grant Number 21H05052, 17H01791).

Acknowledgments

The authors thank all the reviewers who contributed to the reviewing process.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Barrault, L.; Bojar, O.; Costa-jussà, M.R.; Federmann, C.; Fishel, M.; Graham, Y.; Haddow, B.; Huck, M.; Koehn, P.; Malmasi, S.; et al. Findings of the 2019 Conference on Machine Translation (WMT19). In Proceedings of the Fourth Conference on Machine Translation, Florence, Italy, 1–2 August 2019; pp. 1–61. [Google Scholar]

- Bojar, O.; Federmann, C.; Fishel, M.; Graham, Y.; Haddow, B.; Koehn, P.; Monz, C. Findings of the 2018 Conference on Machine Translation (WMT18). In Proceedings of the Third Conference on Machine Translation: Shared Task Papers, Belgium, Brussels, 31 October–1 November 2018; pp. 272–303. [Google Scholar]

- Sennrich, R.; Haddow, B.; Birch, A. Neural machine translation of rare words with subword units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Berlin, Germany, 7–12 August 2016; pp. 1715–1725. [Google Scholar]

- Provilkov, I.; Emelianenko, D.; Voita, E. BPE-Dropout: Simple and Effective Subword Regularization. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 1882–1892.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed Representations of Words and Phrases and their Compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; pp. 3111–3119.

- Socher, R.; Bauer, J.; Manning, C.; Ng, A. Parsing with compositional vector grammars. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; pp. 455–465.

- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642.

- Creutz, M.; Lagus, K. Unsupervised models for morpheme segmentation and morphology learning. ACM Trans. Speech Lang. Process. 2007, 4, 1–34.

- Schuster, M.; Nakajima, K. Japanese and Korean Voice Search. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing, Kyoto, Japan, 25–30 March 2012; pp. 5149–5152.

- Chitnis, R.; DeNero, J. Variable-length word encodings for neural translation models. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2088–2093.

- Kunchukuttan, A.; Bhattacharyya, P. Orthographic syllable as basic unit for SMT between related languages. In Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 1912–1917.

- Banerjee, T.; Bhattacharyya, P. Meaningless yet meaningful: Morphology grounded subword-level NMT. In Proceedings of the Second Workshop on Subword/Character Level Models, New Orleans, LA, USA, 5 June 2018; pp. 55–60.

- Kudo, T. Subword regularization: Improving neural network translation models with multiple subword candidates. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 66–75.

- Gage, P. A new algorithm for data compression. C Users J. 1994, 12, 23–38.

- Larsson, N.J.; Moffat, A. Off-line dictionary-based compression. Proc. IEEE 2000, 88, 1722–1732.

- Karpinski, M.; Rytter, W.; Shinohara, A. An Efficient Pattern-Matching Algorithm for Strings with Short Descriptions. Nord. J. Comput. 1997, 4, 172–186.

- Kida, T.; Matsumoto, T.; Shibata, Y.; Takeda, M.; Shinohara, A.; Arikawa, S. Collage system: A unifying framework for compressed pattern matching. Theor. Comput. Sci. 2003, 298, 253–272.

- Cormode, G.; Muthukrishnan, S. The string edit distance matching problem with moves. ACM Trans. Algorithms 2007, 3, 1–19.

- Jeż, A. A really simple approximation of smallest grammar. Theor. Comput. Sci. 2016, 616, 141–150.

- Takabatake, Y.; I, T.; Sakamoto, H. A Space-Optimal Grammar Compression. In Proceedings of the 25th Annual European Symposium on Algorithms, Vienna, Austria, 6–9 September 2017; pp. 1–15.

- Gańczorz, M.; Gawrychowski, P.; Jeż, A.; Kociumaka, T. Edit Distance with Block Operations. In Proceedings of the 26th Annual European Symposium on Algorithms, Helsinki, Finland, 20–22 August 2018; pp. 33:1–33:14.

- Lehman, E.; Shelat, A. Approximation algorithms for grammar-based compression. In Proceedings of the Thirteenth Annual ACM-SIAM Symposium on Discrete Algorithms, San Francisco, CA, USA, 6–8 January 2002; pp. 205–212.

- Lehman, E. Approximation Algorithms for Grammar-Based Data Compression. Ph.D. Thesis, MIT, Cambridge, MA, USA, 1 February 2002.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 2 December 2014; pp. 3104–3112.

- Klein, G.; Kim, Y.; Deng, Y.; Senellart, J.; Rush, A. OpenNMT: Open-source toolkit for neural machine translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 67–72.

- Post, M. A call for clarity in reporting BLEU scores. In Proceedings of the Third Conference on Machine Translation, Brussels, Belgium, 31 October–1 November 2018; pp. 186–191.

- He, X.; Haffari, G.; Norouzi, N. Dynamic Programming Encoding for Subword Segmentation in Neural Machine Translation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 3042–3051.

- Deguchi, H.; Utiyama, M.; Tamura, A.; Ninomiya, T.; Sumita, E. Bilingual Subword Segmentation for Neural Machine Translation. In Proceedings of the 28th International Conference on Computational Linguistics, Online, 8–13 December 2020; pp. 4287–4297.

- Fadaee, M.; Bisazza, A.; Monz, C. Data augmentation for low-resource neural machine translation. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; pp. 567–573.

- Wang, X.; Pham, H.; Dai, Z.; Neubig, G. SwitchOut: An efficient data augmentation algorithm for neural machine translation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 856–861.

- Sawai, R.; Paik, I.; Kuwana, A. Sentence Augmentation for Language Translation Using GPT-2. Electronics 2021, 10, 3082.

- Park, C.; Yang, Y.; Park, K.; Lim, H. Decoding Strategies for Improving Low-Resource Machine Translation. Electronics 2020, 9, 1562.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).