Sound Localization Based on Acoustic Source Using Multiple Microphone Array in an Indoor Environment

Abstract

1. Introduction

2. Materials and Methods

2.1. Acoustic Signal Model

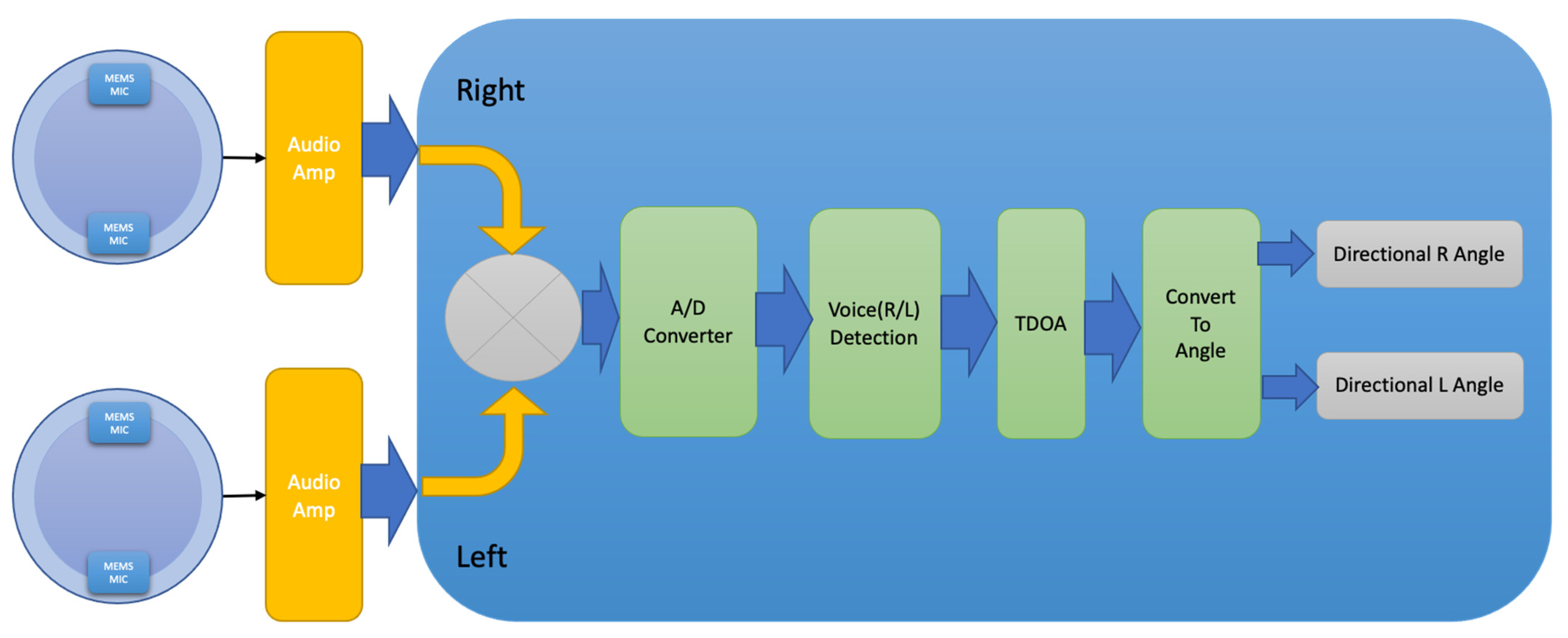
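A common way to write the signal received by microphone i of an array is a delayed, attenuated copy of the source plus independent noise, x_i[n] = a_i s[n − D_i] + v_i[n]. The Python sketch below simulates that free-field model. It ignores reverberation and assumes integer-sample delays. The sampling rate, tone frequency, gains and delays in the example are illustrative values, not the paper's.

```python
import math
import random

def simulate_array(source, delays, gains, noise_std=0.0, seed=0):
    """Simulate one microphone signal per (delay, gain) pair.

    Assumed free-field model: x_i[n] = gains[i] * source[n - delays[i]] + v_i[n],
    where delays are integer sample counts and v_i is white Gaussian noise with
    standard deviation noise_std. Samples before the delayed onset are silence.
    """
    rng = random.Random(seed)  # seeded so the noise is reproducible
    length = len(source)
    signals = []
    for delay, gain in zip(delays, gains):
        x = []
        for n in range(length):
            k = n - delay
            s = source[k] if 0 <= k < length else 0.0
            x.append(gain * s + rng.gauss(0.0, noise_std))
        signals.append(x)
    return signals

# Hypothetical example: a 1 kHz tone sampled at 16 kHz; the second microphone
# receives it 3 samples later and 20 % weaker.
fs = 16000
tone = [math.sin(2 * math.pi * 1000 * n / fs) for n in range(64)]
mics = simulate_array(tone, delays=[0, 3], gains=[1.0, 0.8])
```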

2.2. Signal Processing Method

2.2.1. Time Difference of Arrival

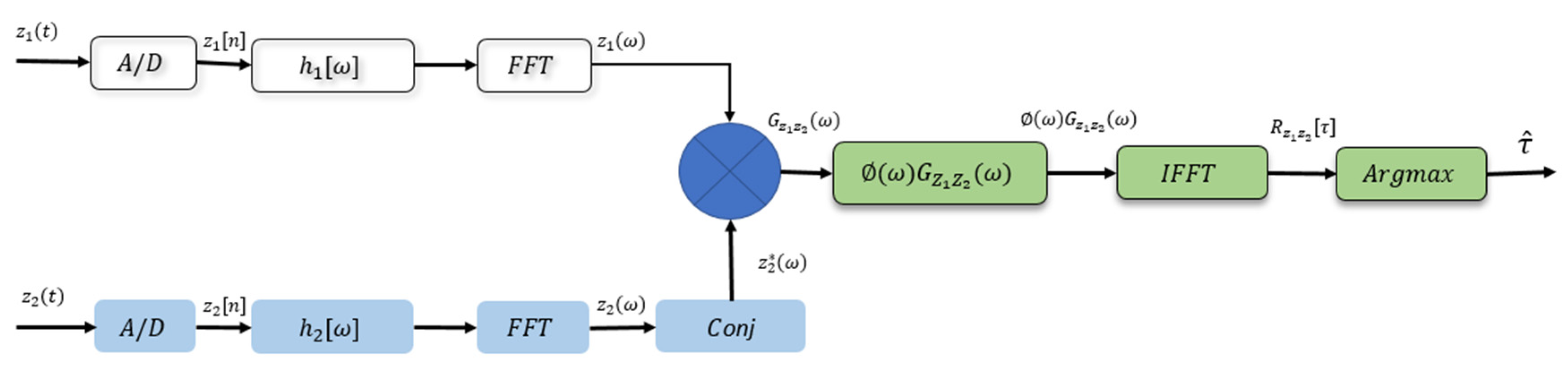
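For a source at position r and microphones at m_i and m_j, the TDOA is τ_ij = (‖r − m_i‖ − ‖r − m_j‖)/c. When the source is far away relative to the pair spacing d, this reduces to τ_ij ≈ d cos θ / c, where θ is the angle between the source direction and the axis from m_i to m_j. The angle then follows from θ = arccos(cτ_ij/d). The sketch below assumes c = 343 m/s (air at roughly 20 °C) and a hypothetical two-microphone layout.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at about 20 °C

def tdoa(source, mic_i, mic_j, c=SPEED_OF_SOUND):
    """Exact TDOA tau_ij in seconds; positive when the sound reaches mic_j before mic_i."""
    return (math.dist(source, mic_i) - math.dist(source, mic_j)) / c

def far_field_angle(tau, spacing, c=SPEED_OF_SOUND):
    """Angle (degrees) between the source direction and the axis from mic_i to mic_j,
    using the far-field approximation tau ~= spacing * cos(theta) / c."""
    ratio = max(-1.0, min(1.0, c * tau / spacing))  # clip so noisy estimates stay valid
    return math.degrees(math.acos(ratio))

# Hypothetical layout: two microphones 0.2 m apart on the x axis,
# source 100 m from the pair centre at 60 degrees from the axis.
mic_a, mic_b = (0.0, 0.0), (0.2, 0.0)
far_source = (0.1 + 100 * math.cos(math.radians(60)), 100 * math.sin(math.radians(60)))
tau = tdoa(far_source, mic_a, mic_b)
angle = far_field_angle(tau, spacing=0.2)
```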

2.2.2. Generalized Cross Correlation

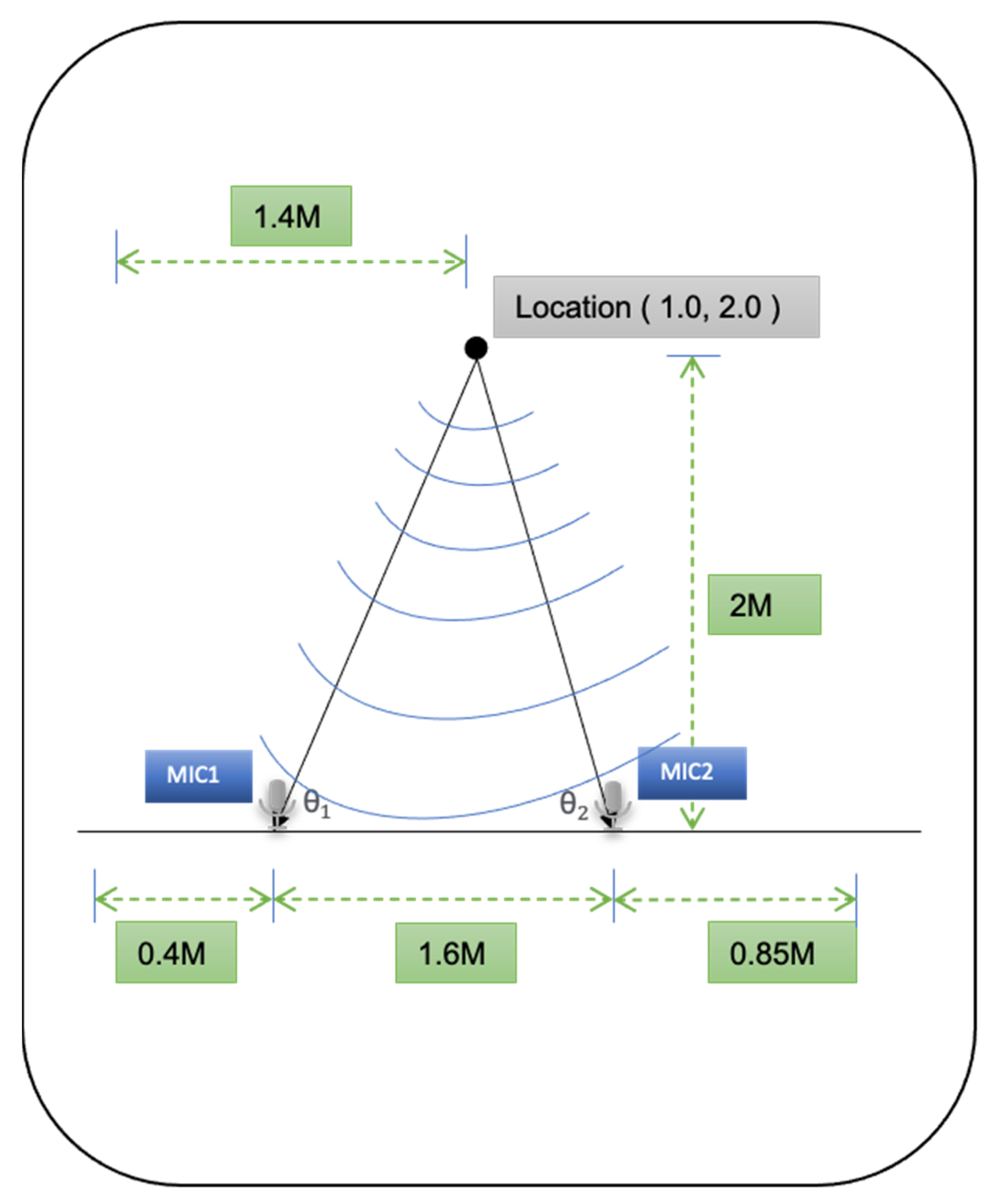
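Knapp and Carter's generalized cross-correlation estimates the delay as the lag that maximizes the inverse Fourier transform of a weighted cross-spectrum. The paper's weighting function is not recoverable from this text, so the sketch below assumes the widely used PHAT weighting, which divides the cross-spectrum by its magnitude so that only phase information remains. It uses a small hand-written radix-2 FFT to stay dependency-free; a library FFT would normally replace it.

```python
import cmath
import math
import random

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even, odd = fft(x[0::2]), fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def ifft(spectrum):
    """Inverse FFT via the conjugation identity ifft(X) = conj(fft(conj(X))) / n."""
    n = len(spectrum)
    return [v.conjugate() / n for v in fft([v.conjugate() for v in spectrum])]

def gcc_phat(x, y, max_lag=None, eps=1e-12):
    """Integer-sample delay of y relative to x (positive when y lags x), GCC-PHAT."""
    n = 1
    while n < len(x) + len(y):  # zero-pad so the circular correlation does not wrap
        n *= 2
    X = fft(list(x) + [0.0] * (n - len(x)))
    Y = fft(list(y) + [0.0] * (n - len(y)))
    cross = [yk * xk.conjugate() for xk, yk in zip(X, Y)]
    weighted = [c / (abs(c) + eps) for c in cross]  # PHAT: keep phase only
    r = [v.real for v in ifft(weighted)]
    max_lag = n // 2 if max_lag is None else max_lag
    # Negative lags sit at the end of the circular correlation, hence lag % n.
    return max(range(-max_lag, max_lag + 1), key=lambda lag: r[lag % n])

# Hypothetical test signal: white noise, and a copy delayed by 5 samples.
rng = random.Random(1)
x = [rng.gauss(0.0, 1.0) for _ in range(256)]
y = [0.0] * 5 + x[:-5]
delay = gcc_phat(x, y)
```

Multiplying the returned delay by the sample period gives the TDOA in seconds; sub-sample accuracy would need interpolation around the peak, which this sketch omits.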

2.2.3. Sound Source Localization
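The solver used in this section is not recoverable from the extracted text. As one illustration, TDOAs measured against a reference microphone can be converted into a 2-D position by picking the point whose predicted TDOAs best match the measurements in the least-squares sense. The exhaustive grid search below is chosen for clarity rather than speed. The microphone layout, grid step and speed of sound are assumptions, and closed-form or iterative (for example Gauss–Newton) solvers are common alternatives.

```python
import itertools
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed

def locate_grid(mics, tdoas, x_range, y_range, step=0.01, c=SPEED_OF_SOUND):
    """Least-squares TDOA localization by exhaustive 2-D grid search (illustrative).

    mics:  list of (x, y) microphone positions in metres; mics[0] is the reference.
    tdoas: measured tau_i0 = (dist_i - dist_0) / c for mics[1:], in seconds.
    Returns the grid point whose predicted TDOAs best match the measurements.
    """
    def cost(p):
        d0 = math.dist(p, mics[0])
        return sum(((math.dist(p, m) - d0) / c - t) ** 2 for m, t in zip(mics[1:], tdoas))

    nx = int(round((x_range[1] - x_range[0]) / step)) + 1
    ny = int(round((y_range[1] - y_range[0]) / step)) + 1
    grid = ((x_range[0] + i * step, y_range[0] + j * step)
            for i, j in itertools.product(range(nx), range(ny)))
    return min(grid, key=cost)

# Hypothetical layout: four microphones at the corners of a 2 m x 2 m area.
mics = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
true_src = (0.7, 1.3)
measured = [(math.dist(true_src, m) - math.dist(true_src, mics[0])) / SPEED_OF_SOUND
            for m in mics[1:]]
est = locate_grid(mics, measured, (0.0, 2.0), (0.0, 2.0))
```

The grid step (1 cm here) bounds the resolution of the estimate, and the cost grows with the number of grid points, which is why iterative solvers are usually preferred for finer grids or 3-D searches.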

3. Results

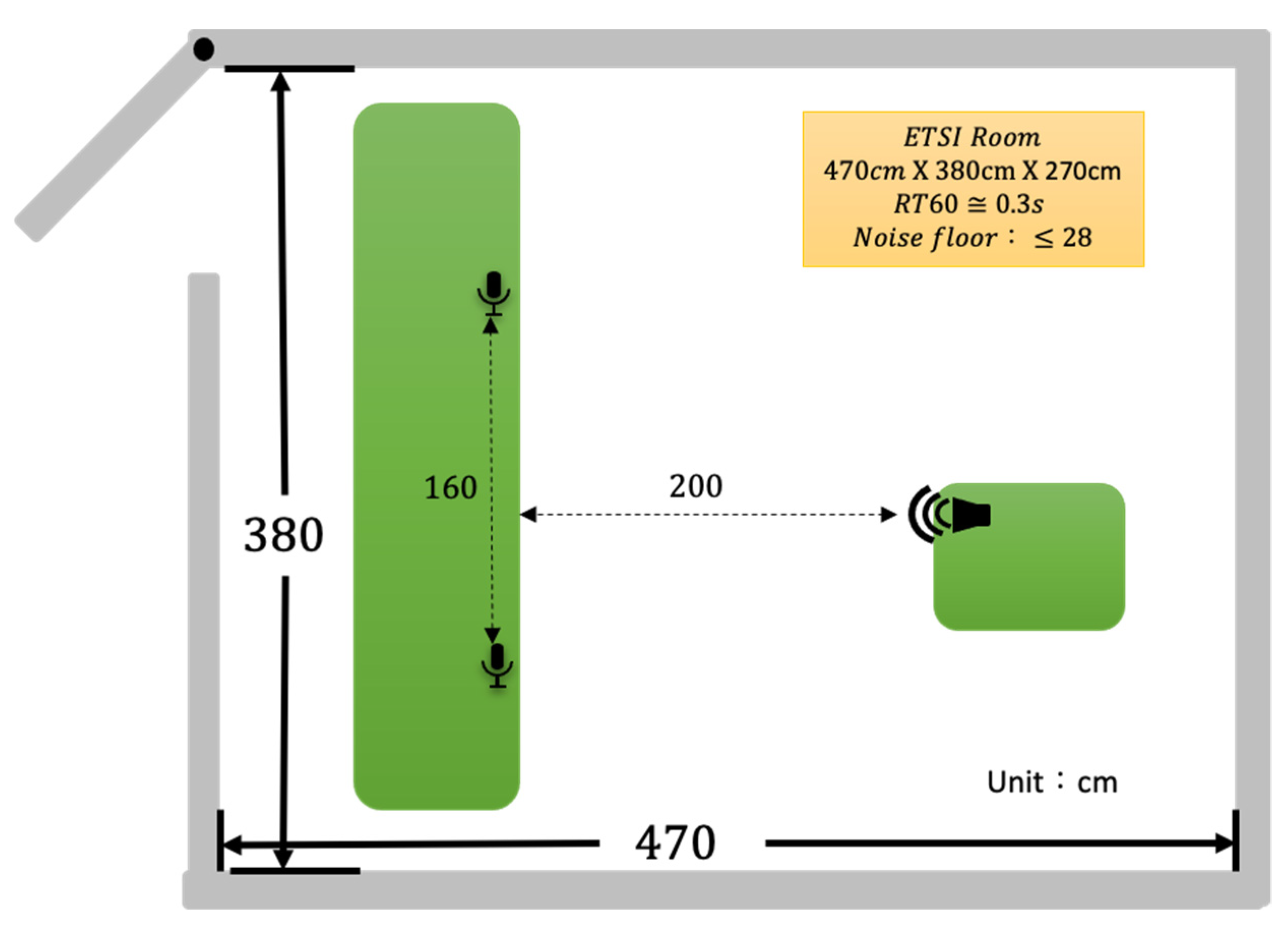

3.1. Testing Environment

- ISO 1996-1:2003, Acoustics – Description, measurement and assessment of environmental noise – Part 1: Basic quantities and assessment procedures;

- ASTM E336-97, Standard Test Method for Measurement of Airborne Sound Insulation in Buildings;

- ASTM E413-16, Classification for Rating Sound Insulation;

- ISO 3382-2:2008(E), Acoustics – Measurement of room acoustic parameters – Part 2: Reverberation time in ordinary rooms.

3.2. Experimental Methodology and Analysis of Results

3.2.1. Experiment 1: Measurement of Different Frequencies

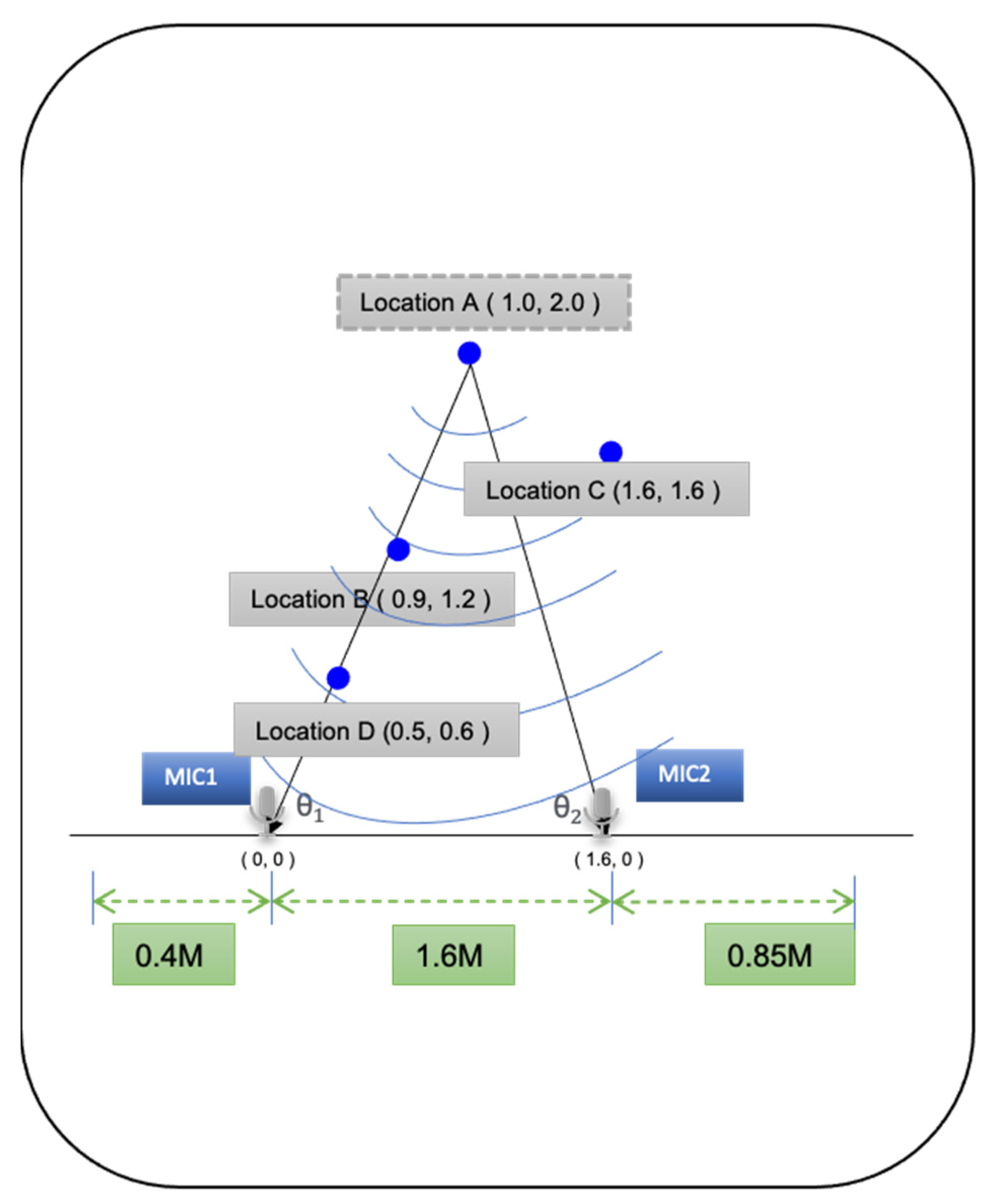

3.2.2. Experiment 2: Sound Source Estimation Position Accuracy Test

3.3. Discussion

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Item |
|---|
| 6 × I2S interfaces: 1 × 8-bit and 5 × 4-bit |
| 8 × DMIC |
| SoundWire for speaker amplifier |
| SLIMbus for optional codec |
| SLIMbus for Bluetooth audio interface |

| Frequency (Hz) | Angular error 1 | Angular error 2 | Average |
|---|---|---|---|
| 1 k | 10.641 | 7.861 | 9.25 |
| 2 k | 2.0878 | 9.777 | 5.93 |
| 3 k | 0.3012 | 3.3485 | 1.82 |
| 4 k | 3.1258 | 3.0462 | 3.09 |
| 5 k | 2.5395 | 1.1583 | 1.85 |
| 6 k | 0.2682 | 2.0954 | 1.18 |
| 7 k | 1.1033 | 0.2188 | 0.66 |
| 8 k | 1.3596 | 0.3753 | 0.87 |
| 9 k | 1.153 | 0.5023 | 0.83 |
| 10 k | 0.988 | 0.4831 | 0.74 |
| 11 k | 0.7901 | 0.8688 | 0.83 |
| 12 k | 0.2022 | 1.2288 | 0.72 |
| 13 k | 1.1424 | 0.0251 | 0.58 |
| 14 k | 0.5973 | 0.0048 | 0.30 |
| 15 k | 0.009 | 1.1542 | 0.58 |
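In the angular-error table, the Average column is the mean of the two error columns rounded to two decimals. The short check below, with values transcribed from the table and illustrative helper names, confirms that reading and finds the frequency with the smallest average error.

```python
# Values transcribed from the angular-error table above:
# frequency (Hz) -> (angular error 1, angular error 2, reported average).
ANGULAR_ERROR = {
    1000: (10.641, 7.861, 9.25),
    2000: (2.0878, 9.777, 5.93),
    3000: (0.3012, 3.3485, 1.82),
    4000: (3.1258, 3.0462, 3.09),
    5000: (2.5395, 1.1583, 1.85),
    6000: (0.2682, 2.0954, 1.18),
    7000: (1.1033, 0.2188, 0.66),
    8000: (1.3596, 0.3753, 0.87),
    9000: (1.153, 0.5023, 0.83),
    10000: (0.988, 0.4831, 0.74),
    11000: (0.7901, 0.8688, 0.83),
    12000: (0.2022, 1.2288, 0.72),
    13000: (1.1424, 0.0251, 0.58),
    14000: (0.5973, 0.0048, 0.30),
    15000: (0.009, 1.1542, 0.58),
}

def inconsistent_averages(table, tol=0.005):
    """Frequencies whose reported average is not the two-decimal rounding of the mean."""
    return [f for f, (e1, e2, avg) in table.items()
            if abs((e1 + e2) / 2 - avg) > tol + 1e-9]  # small slack for float rounding

best_freq = min(ANGULAR_ERROR, key=lambda f: ANGULAR_ERROR[f][2])
high_band = [v[2] for f, v in ANGULAR_ERROR.items() if f >= 6000]
```

On these values no row is inconsistent, the smallest average (0.30) occurs at 14 kHz, and every average from 6 kHz upward is 1.18 or less, compared with 9.25 at 1 kHz.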

| Frequency (Hz) | X offset (cm) | Y offset (cm) | Max (cm) |
|---|---|---|---|
| 1 k | −41.01 | −2.43 | 41.01 |
| 2 k | 28.55 | −16.26 | 28.55 |
| 3 k | −8.10 | 15.36 | 15.36 |
| 4 k | −5.65 | −37.81 | 37.81 |
| 5 k | 1.63 | 16.22 | 16.22 |
| 6 k | 1.00 | 2.1449 | 2.14 |
| 7 k | 1.00 | 1.9464 | 1.95 |
| 8 k | 1.45 | 3.42 | 3.42 |
| 9 k | −2.26 | −5.11 | 5.11 |
| 10 k | 0.99 | 1.9098 | 1.91 |
| 11 k | 0.93 | −1.94 | 1.94 |
| 12 k | −3.09 | 1.72 | 3.09 |
| 13 k | 0.98 | 2.0033 | 2.00 |
| 14 k | 1.01 | 1.9815 | 1.98 |
| 15 k | −0.48 | −7.44 | 7.44 |
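In each row of the distance-offset table, Max equals the larger of |X| and |Y| (to two decimals), that is, the worst single-axis offset rather than the straight-line distance. The sketch checks that reading. As an additional metric that the table does not report, it also computes the Euclidean offset √(X² + Y²), which can never be smaller than the per-axis maximum.

```python
import math

# frequency (Hz) -> (X offset, Y offset, reported Max), in cm, transcribed from the table above.
OFFSETS = {
    1000: (-41.01, -2.43, 41.01),
    2000: (28.55, -16.26, 28.55),
    3000: (-8.10, 15.36, 15.36),
    4000: (-5.65, -37.81, 37.81),
    5000: (1.63, 16.22, 16.22),
    6000: (1.00, 2.1449, 2.14),
    7000: (1.00, 1.9464, 1.95),
    8000: (1.45, 3.42, 3.42),
    9000: (-2.26, -5.11, 5.11),
    10000: (0.99, 1.9098, 1.91),
    11000: (0.93, -1.94, 1.94),
    12000: (-3.09, 1.72, 3.09),
    13000: (0.98, 2.0033, 2.00),
    14000: (1.01, 1.9815, 1.98),
    15000: (-0.48, -7.44, 7.44),
}

def max_axis_offset(dx, dy):
    """Worst single-axis offset, which is how the Max column reads."""
    return max(abs(dx), abs(dy))

def euclidean_offset(dx, dy):
    """Straight-line offset; an alternative metric not reported in the table."""
    return math.hypot(dx, dy)

mismatches = [f for f, (dx, dy, mx) in OFFSETS.items()
              if abs(max_axis_offset(dx, dy) - mx) > 0.005 + 1e-9]
radial = {f: round(euclidean_offset(dx, dy), 2) for f, (dx, dy, _) in OFFSETS.items()}
```

For example, at 1 kHz the Euclidean offset is about 41.08 cm against a Max of 41.01 cm.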

| Location | Target (X, Y) (m) | Measured (X, Y) (m) | Accuracy (X, Y) (cm) |
|---|---|---|---|
| Location A | (1.0, 2.0) | (0.98, 2.02) | (2.32, 2.02) |
| Location B | (0.9, 1.2) | (0.91, 1.22) | (1.30, 1.55) |
| Location C | (1.6, 1.6) | (1.59, 1.58) | (−0.40, −1.9) |
| Location D | (0.5, 0.6) | (0.49, 0.58) | (−0.41, −1.55) |
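The Accuracy column gives per-axis offsets in centimetres. If a single error figure per location is wanted, the radial error √(Δx² + Δy²) can be derived from those two values. The table does not report this combined figure, so the calculation below is illustrative only.

```python
import math

# Per-axis accuracy (cm) transcribed from the position-accuracy table above.
ACCURACY_CM = {
    "A": (2.32, 2.02),
    "B": (1.30, 1.55),
    "C": (-0.40, -1.9),
    "D": (-0.41, -1.55),
}

# Radial (Euclidean) error per location, derived here for illustration only.
radial_error = {loc: math.hypot(dx, dy) for loc, (dx, dy) in ACCURACY_CM.items()}
worst = max(radial_error, key=radial_error.get)
```

On these values every location stays within about 3.1 cm, with Location A the largest at roughly 3.08 cm.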

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chung, M.-A.; Chou, H.-C.; Lin, C.-W. Sound Localization Based on Acoustic Source Using Multiple Microphone Array in an Indoor Environment. Electronics 2022, 11, 890. https://doi.org/10.3390/electronics11060890
