The Use of Convolutional Neural Networks and Digital Camera Images in Cataract Detection

Abstract

1. Introduction

2. Related Work

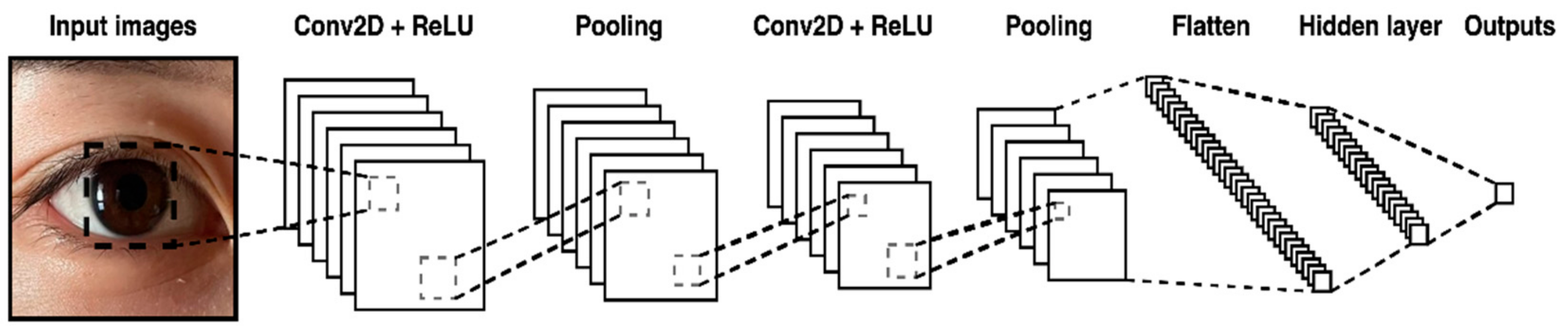

3. Convolutional Neural Networks

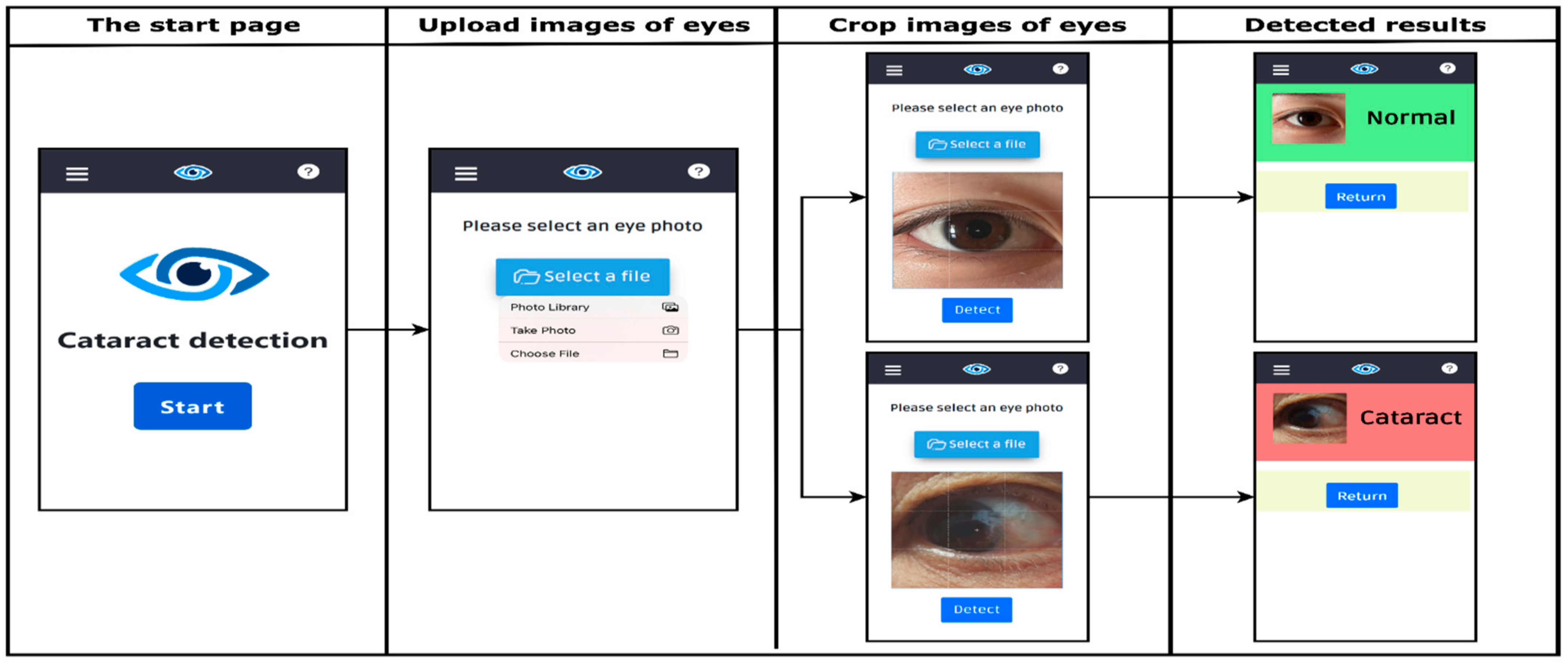

4. The Proposed CNNDCI System for Cataracts Detection and Numerical Results

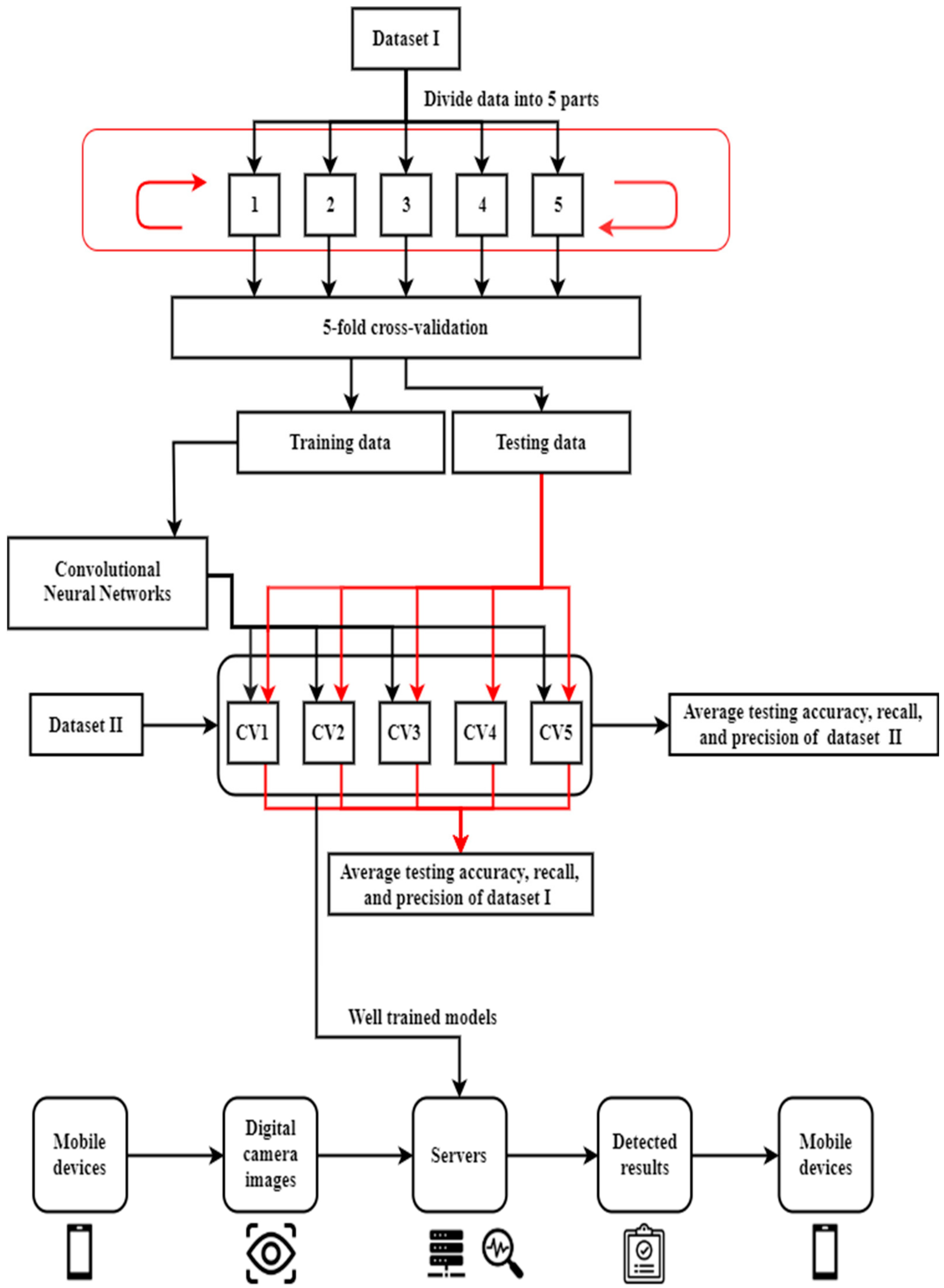

4.1. The Proposed CNNDCI System

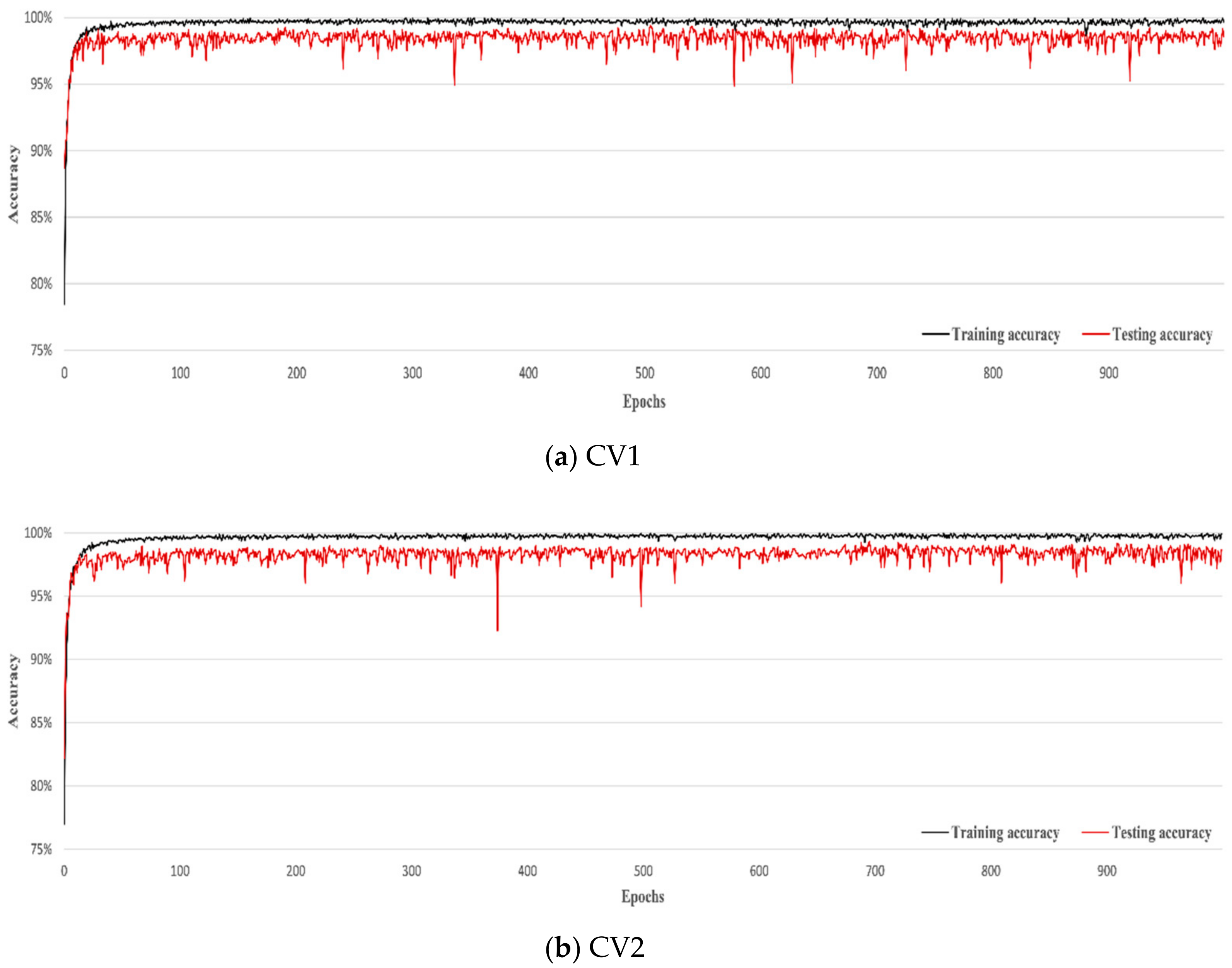

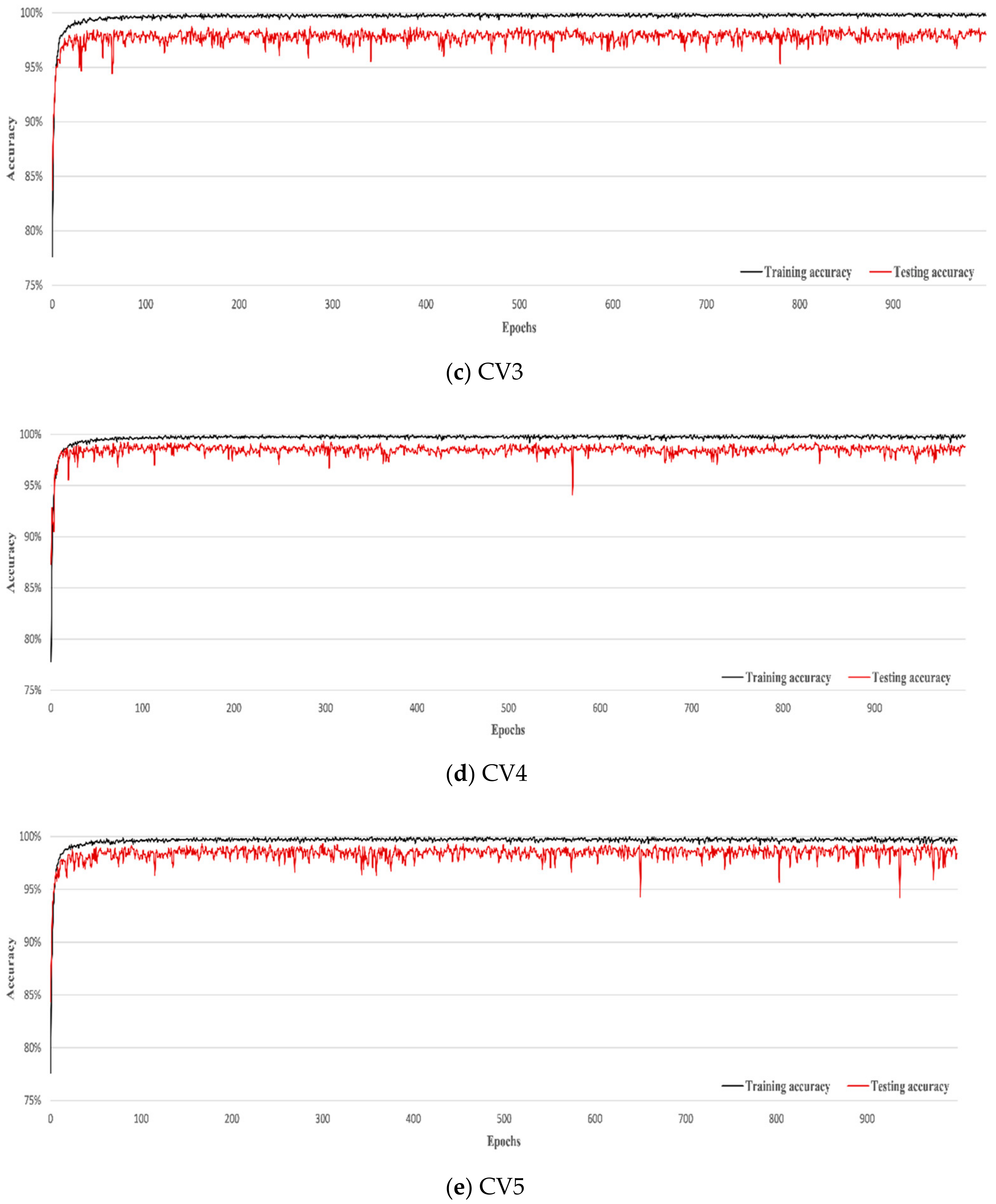

4.2. Numerical Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. World Health Organization. Blindness and Vision Impairment. Available online: https://www.who.int/en/news-room/fact-sheets/detail/blindness-and-visual-impairment (accessed on 14 October 2021).

2. Luo, X.; Li, J.; Chen, M.; Yang, X.; Li, X. Ophthalmic Disease Detection via Deep Learning With A Novel Mixture Loss Function. IEEE J. Biomed. Health Inform. 2021, 25, 3332–3339.

3. Patil, D.; Nair, A.; Bhat, N.; Chavan, R.; Jadhav, D. Analysis and study of cataract detection techniques. In Proceedings of the 2016 International Conference on Global Trends in Signal Processing, Information Computing and Communication (ICGTSPICC), Jalgaon, India, 22–24 December 2016.

4. Hu, S.; Wang, X.; Wu, H.; Luan, X.; Qi, P.; Lin, Y.; He, X.; He, W. Unified Diagnosis Framework for Automated Nuclear Cataract Grading Based on Smartphone Slit-Lamp Images. IEEE Access 2020, 8, 174169–174178.

5. Pathak, S.; Kumar, B. A robust automated cataract detection algorithm using diagnostic opinion based parameter thresholding for telemedicine application. Electronics 2016, 5, 57.

6. Chylack, L.T.; Wolfe, J.K.; Singer, D.M.; Leske, M.C.; Bullimore, M.A.; Bailey, I.L.; Friend, J.; McCarthy, D.; Wu, S.Y. The lens opacities classification system III. Arch. Ophthalmol. 1993, 111, 831–836.

7. Li, H.; Lim, J.H.; Liu, J.; Wing, D.; Wong, K.; Wong, T.Y. Feature analysis in slit-lamp image for nuclear cataract diagnosis. In Proceedings of the 2010 3rd International Conference on Biomedical Engineering and Informatics, Yantai, China, 16–18 October 2010.

8. Sigit, R.; Triyana, E.; Rochmad, M. Cataract Detection Using Single Layer Perceptron Based on Smartphone. In Proceedings of the 2019 3rd International Conference on Informatics and Computational Sciences (ICICoS), Semarang, Indonesia, 29–30 October 2019.

9. Xu, X.; Zhang, L.; Li, J.; Guan, Y.; Zhang, L. A hybrid global-local representation CNN model for automatic cataract grading. IEEE J. Biomed. Health Inform. 2019, 24, 556–567.

10. Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88.

11. Qian, X.; Patton, E.W.; Swaney, J.; Xing, Q.; Zeng, T. Machine learning on cataracts classification using SqueezeNet. In Proceedings of the 2018 4th International Conference on Universal Village (UV), Boston, MA, USA, 21–24 October 2018.

12. Liu, X.; Jiang, J.; Zhang, K.; Long, E.; Cui, J.; Zhu, M.; An, Y.; Zhang, J.; Liu, Z.; Lin, Z.; et al. Localization and diagnosis framework for pediatric cataracts based on slit-lamp images using deep features of a convolutional neural network. PLoS ONE 2017, 12, e0168606.

13. Xu, Y.; Gao, X.; Lin, S.; Wong, D.W.K.; Liu, J.; Xu, D.; Cheng, C.Y.; Cheung, C.Y.; Wong, T.Y. Automatic grading of nuclear cataracts from slit-lamp lens images using group sparsity regression. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Nagoya, Japan, 22–26 September 2013.

14. Zhang, W.; Li, H. Lens opacity detection for serious posterior subcapsular cataract. Med. Biol. Eng. Comput. 2017, 55, 769–779.

15. Caixinha, M.; Amaro, J.; Santos, M.; Perdigão, F.; Gomes, M.; Santos, J. In-vivo automatic nuclear cataract detection and classification in an animal model by ultrasounds. IEEE Trans. Biomed. Eng. 2016, 63, 2326–2335.

16. Caixinha, M.; Velte, E.; Santos, M.; Perdigão, F.; Amaro, J.; Gomes, M.; Santos, J. Automatic cataract classification based on ultrasound technique using machine learning: A comparative study. Phys. Procedia 2015, 70, 1221–1224.

17. Zhang, X.; Xiao, Z.; Higashita, R.; Chen, W.; Yuan, J.; Fang, J.; Hu, Y.; Liu, J. A Novel Deep Learning Method for Nuclear Cataract Classification Based on Anterior Segment Optical Coherence Tomography Images. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020.

18. Khan, M.S.M.; Ahmed, M.; Rasel, R.Z.; Khan, M.M. Cataract Detection Using Convolutional Neural Network with VGG-19 Model. In Proceedings of the 2021 IEEE World AI IoT Congress (AIIoT), Seattle, WA, USA, 10–13 May 2021.

19. Yang, J.J.; Li, J.; Shen, R.; Zeng, Y.; He, J.; Bi, J.; Li, Y.; Zhang, Q.; Peng, L.; Wang, Q. Exploiting ensemble learning for automatic cataract detection and grading. Comput. Methods Programs Biomed. 2016, 124, 45–57.

20. Guo, L.; Yang, J.J.; Peng, L.; Li, J.; Liang, Q. A computer-aided healthcare system for cataract classification and grading based on fundus image analysis. Comput. Ind. 2015, 69, 72–80.

21. Zheng, J.; Guo, L.; Peng, L.; Li, J.; Yang, J.; Liang, Q. Fundus image based cataract classification. In Proceedings of the 2014 IEEE International Conference on Imaging Systems and Techniques (IST) Proceedings, Santorini, Greece, 14–17 October 2014.

22. Yang, M.; Yang, J.J.; Zhang, Q.; Niu, Y.; Li, J. Classification of retinal image for automatic cataract detection. In Proceedings of the 2013 IEEE 15th International Conference on e-Health Networking, Applications and Services (Healthcom 2013), Lisbon, Portugal, 9–12 October 2013.

23. Nayak, J. Automated classification of normal, cataract and post cataract optical eye images using SVM classifier. In Proceedings of the World Congress on Engineering and Computer Science, San Francisco, CA, USA, 23–25 October 2013.

24. Fuadah, Y.N.; Setiawan, A.W.; Mengko, T.L.R. Performing high accuracy of the system for cataract detection using statistical texture analysis and K-Nearest Neighbor. In Proceedings of the 2015 International Seminar on Intelligent Technology and Its Applications (ISITIA), Surabaya, Indonesia, 20–21 May 2015.

25. Khan, A.A.; Akram, M.U.; Tariq, A.; Tahir, F.; Wazir, K. Automated Computer Aided Detection of Cataract. In Proceedings of the International Afro-European Conference for Industrial Advancement, Marrakesh, Morocco, 21–23 November 2016.

26. Tawfik, H.R.; Birry, R.A.; Saad, A.A. Early Recognition and Grading of Cataract Using a Combined Log Gabor/Discrete Wavelet Transform with ANN and SVM. Int. J. Comput. Inf. Eng. 2018, 12, 1038–1043.

27. Agarwal, V.; Gupta, V.; Vashisht, V.M.; Sharma, K.; Sharma, N. Mobile application based cataract detection system. In Proceedings of the 2019 3rd International Conference on Trends in Electronics and Informatics (ICOEI), Tirunelveli, India, 23–25 April 2019.

28. Yusuf, M.; Theophilous, S.; Adejoke, J.; Hassan, A.B. Web-Based Cataract Detection System Using Deep Convolutional Neural Network. In Proceedings of the 2019 2nd International Conference of the IEEE Nigeria Computer Chapter (NigeriaComputConf), Zaria, Nigeria, 14–17 October 2019.

29. Zhang, X.; Fang, J.; Hu, Y.; Xu, Y.; Higashita, R.; Liu, J. Machine Learning for Cataract Classification and Grading on Ophthalmic Imaging Modalities: A Survey. arXiv 2020, arXiv:2012.04830.

30. Krishnabojha. Cataract_Detection-Using-CNN. Available online: https://github.com/krishnabojha/Cataract_Detection-using-CNN (accessed on 27 March 2021).

31. Piygot5. Cataract-Detection-and-Classification. Available online: https://github.com/piygot5/Cataract-Detection-and-Classification (accessed on 27 March 2021).

32. Keras. Image Data Generator. Available online: https://keras.io/zh/preprocessing/image/ (accessed on 27 March 2021).

33. Jahanbakhshi, A.; Abbaspour-Gilandeh, Y.; Heidarbeigi, K.; Momeny, M. A novel method based on machine vision system and deep learning to detect fraud in turmeric powder. Comput. Biol. Med. 2021, 136, 104728.

34. Patwari, M.A.U.; Arif, M.D.; Chowdhury, M.N.; Arefin, A.; Imam, M.I. Detection, categorization, and assessment of eye cataracts using digital image processing. In Proceedings of the First International Conference on Interdisciplinary Research and Development, Thailand, China, 31 May–1 June 2011.

35. Khaldi, Y.; Benzaoui, A.; Ouahabi, A.; Jacques, S.; Taleb-Ahmed, A. Ear recognition based on deep unsupervised active learning. IEEE Sens. J. 2021, 21, 20704–20713.

36. Khaldi, Y.; Benzaoui, A. A new framework for grayscale ear images recognition using generative adversarial networks under unconstrained conditions. Evol. Syst. 2021, 12, 923–934.

37. Khan, A.; Jin, W.; Haider, A.; Rahman, M.; Wang, D. Adversarial Gaussian Denoiser for Multiple-Level Image Denoising. Sensors 2021, 21, 2998.

| Partitions | Cataract Cases | Normal Cases |
|---|---|---|
| Partition 1 | 902 | 1030 |
| Partition 2 | 903 | 1031 |
| Partition 3 | 903 | 1031 |
| Partition 4 | 903 | 1031 |
| Partition 5 | 903 | 1031 |

| CV | Training Data | Testing Data |
|---|---|---|
| CV 1 | Partition 1, Partition 2, Partition 3, Partition 4 | Partition 5 |
| CV 2 | Partition 1, Partition 2, Partition 3, Partition 5 | Partition 4 |
| CV 3 | Partition 1, Partition 2, Partition 4, Partition 5 | Partition 3 |
| CV 4 | Partition 1, Partition 3, Partition 4, Partition 5 | Partition 2 |
| CV 5 | Partition 2, Partition 3, Partition 4, Partition 5 | Partition 1 |
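
The partition and cross-validation tables above can be reproduced with a short sketch. It assumes that each class is shuffled and split separately, so that the per-partition counts match the first table; the class totals of 4514 and 5154 are simply the column sums of that table. The helper names (`split_sizes`, `make_partitions`, `cv_folds`), the seeds, and the placeholder sample tuples are illustrative assumptions and are not taken from the paper.

```python
import random


def split_sizes(n, k=5):
    """Sizes of k near-equal partitions; any remainder goes to the last partitions."""
    base, extra = divmod(n, k)
    return [base + (1 if i >= k - extra else 0) for i in range(k)]


def make_partitions(items, k=5, seed=0):
    """Shuffle items (seeded for repeatability) and cut them into k partitions."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    partitions, start = [], 0
    for size in split_sizes(len(shuffled), k):
        partitions.append(shuffled[start:start + size])
        start += size
    return partitions


def cv_folds(k=5):
    """Yield (cv_number, training_partition_ids, testing_partition_id), all 1-based.

    CV 1 tests on the last partition, CV 2 on the one before it, and so on,
    which is the ordering used in the cross-validation table.
    """
    for cv in range(1, k + 1):
        test_id = k - cv + 1
        train_ids = [p for p in range(1, k + 1) if p != test_id]
        yield cv, train_ids, test_id


# Placeholder samples standing in for image paths (hypothetical data).
cataract = [("cataract", i) for i in range(4514)]
normal = [("normal", i) for i in range(5154)]

# Split each class on its own, then merge partition by partition.
partitions = [c + n for c, n in zip(make_partitions(cataract, seed=0),
                                    make_partitions(normal, seed=1))]

for cv, train_ids, test_id in cv_folds():
    train = [x for p in train_ids for x in partitions[p - 1]]
    test = partitions[test_id - 1]
    print(f"CV {cv}: train on partitions {train_ids} ({len(train)} images), "
          f"test on partition {test_id} ({len(test)} images)")
```

Splitting per class keeps the cataract-to-normal ratio nearly identical in every partition, which is what the near-constant counts in the first table suggest.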

| Layers | Components | Outputs | Kernel Size | Stride |
|---|---|---|---|---|
| 0 | Image input (width, height, channels) | (64, 64, 3) | | |
| 1 | Convolutional (width, height, channels) | (62, 62, 32) | 3 × 3 | 1 |
| 2 | Max-pooling (width, height, channels) | (31, 31, 32) | 2 × 2 | |
| 3 | Convolutional (width, height, channels) | (29, 29, 32) | 3 × 3 | 1 |
| 4 | Max-pooling (width, height, channels) | (14, 14, 32) | 2 × 2 | |
| 5 | Flatten (nodes) | (6272) | | |
| 6 | Dense (nodes) | (128) | | |
| 7 | Dense (nodes) | (1) | | |
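
The output sizes in the layer table follow from simple arithmetic, which the sketch below traces layer by layer. It assumes unpadded convolutions and non-overlapping pooling (pooling stride equal to the pool size); the table does not state either, but both are consistent with the listed outputs. The function names are illustrative only.

```python
def conv_out(size, kernel, stride=1):
    """Output length of an unpadded convolution along one axis."""
    return (size - kernel) // stride + 1


def pool_out(size, pool):
    """Output length of non-overlapping max-pooling along one axis."""
    return size // pool


def trace_shapes(width=64, height=64, channels=3, filters=32):
    """Return (layer_name, output_shape) pairs for the layers in the table."""
    shapes = [("input", (width, height, channels))]
    w, h = width, height
    for block in (1, 2):
        # 3 x 3 convolution with stride 1, then 2 x 2 max-pooling.
        w, h = conv_out(w, 3), conv_out(h, 3)
        shapes.append((f"conv{block}", (w, h, filters)))
        w, h = pool_out(w, 2), pool_out(h, 2)
        shapes.append((f"pool{block}", (w, h, filters)))
    shapes.append(("flatten", (w * h * filters,)))
    shapes.append(("dense1", (128,)))
    shapes.append(("dense2", (1,)))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:8s} {shape}")
```

The flatten layer has 14 × 14 × 32 = 6272 nodes because the second pooling step rounds 29 / 2 down to 14.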

| Confusion Matrix | Actual: True | Actual: False |
|---|---|---|
| Predicted: True | True positive | False positive |
| Predicted: False | False negative | True negative |
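
As a minimal sketch, the accuracy, recall, and precision reported in the results can be computed from the four confusion-matrix cells as follows. This assumes the standard definitions of the three metrics; the function name and the example counts are hypothetical and do not come from the paper.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, recall and precision from confusion-matrix counts."""

    def ratio(numerator, denominator):
        # Return NaN instead of raising when a denominator is zero.
        return numerator / denominator if denominator else float("nan")

    return {
        # Share of all predictions that are correct.
        "accuracy": ratio(tp + tn, tp + fp + fn + tn),
        # Share of actual positives that are detected.
        "recall": ratio(tp, tp + fn),
        # Share of predicted positives that are actually positive.
        "precision": ratio(tp, tp + fp),
    }


# Hypothetical counts for one testing partition (not from the paper).
metrics = classification_metrics(tp=890, fp=10, fn=13, tn=1021)
for name, value in metrics.items():
    print(f"{name}: {value:.1%}")
```

In a screening setting recall is usually the metric to watch, since a false negative corresponds to a missed cataract case.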

| Dataset | Metrics | CV1 | CV2 | CV3 | CV4 | CV5 | Average |
|---|---|---|---|---|---|---|---|
| Dataset I | Training accuracy | 99.1% | 98.2% | 98.6% | 99.5% | 99.3% | 98.9% |
| Dataset I | Testing accuracy | 99.1% | 98.2% | 97.7% | 99.1% | 98.8% | 98.5% |
| Dataset I | Recall | 98.8% | 97.4% | 97.1% | 98.5% | 98.0% | 97.9% |
| Dataset I | Precision | 99.2% | 98.7% | 97.9% | 99.4% | 99.4% | 98.9% |
| Dataset II | Testing accuracy | 94.3% | 89.8% | 92.1% | 92.1% | 92.1% | 92% |
| Dataset II | Recall | 95.3% | 90.6% | 88.3% | 90.6% | 90.6% | 91% |
| Dataset II | Precision | 93.1% | 88.6% | 95.0% | 92.8% | 92.8% | 92.4% |

| References | Method | Accuracy | Training Data | Testing Data | Cross-Validation |
|---|---|---|---|---|---|
| [5] | K-means Clustering | 98% | Not available | 200 | No |
| [8] | Single Layer Perceptron | 85% | 30 | 20 | No |
| [23] | Support Vector Machine | 88.39% | 125 | 49 | No |
| [24] | K-nearest Neighbor | 94.5% | 80 | 80 | No |
| [25] | Support Vector Machine | 69.4% | 58 | 36 | No |
| [26] | Support Vector Machine | 96.8% | 78 | 42 | No |
| [26] | Artificial Neural Network | 92.3% | 78 | 42 | No |
| [27] | K-nearest Neighbor | 83% | Not available | 1152 | No |
| [27] | Support Vector Machine | 75.2% | Not available | 1152 | No |
| [27] | Naïve Bayes | 76.6% | Not available | 1152 | No |
| [28] | Convolutional Neural Network | 78% | 100 | 30 | No |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lai, C.-J.; Pai, P.-F.; Marvin, M.; Hung, H.-H.; Wang, S.-H.; Chen, D.-N. The Use of Convolutional Neural Networks and Digital Camera Images in Cataract Detection. Electronics 2022, 11, 887. https://doi.org/10.3390/electronics11060887
