Green Demand Aware Fog Computing: A Prediction-Based Dynamic Resource Provisioning Approach

Abstract

1. Introduction

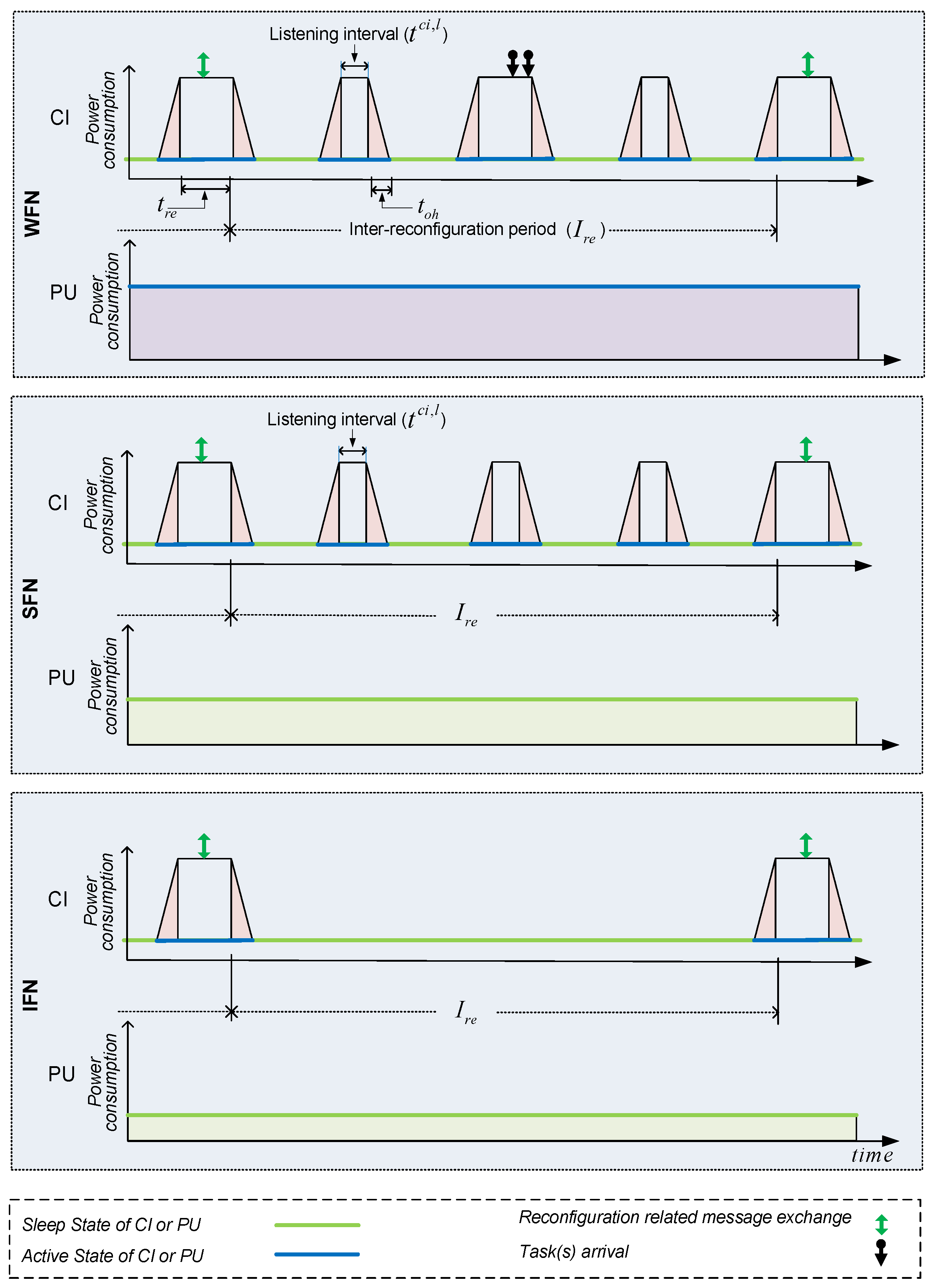

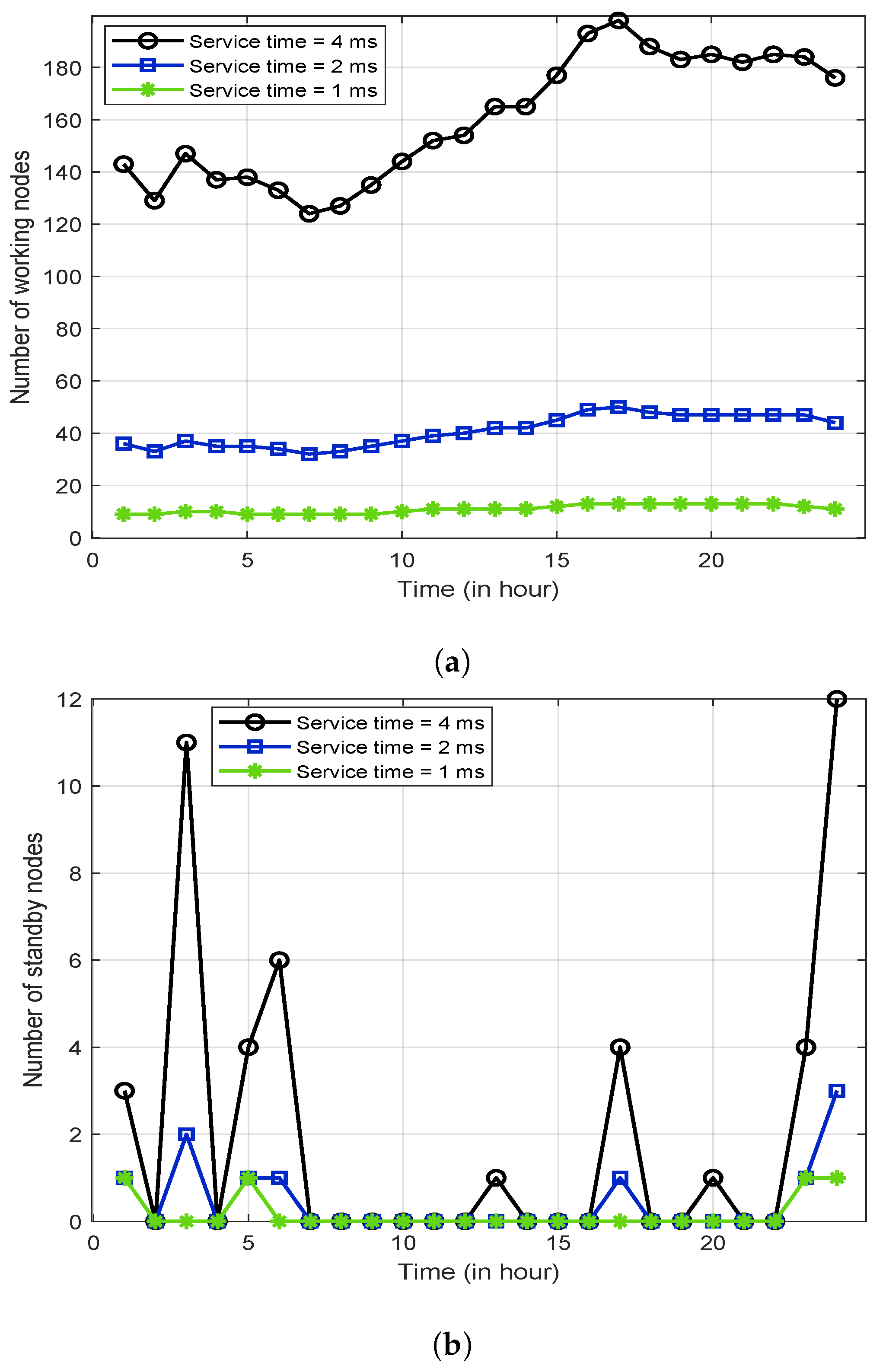

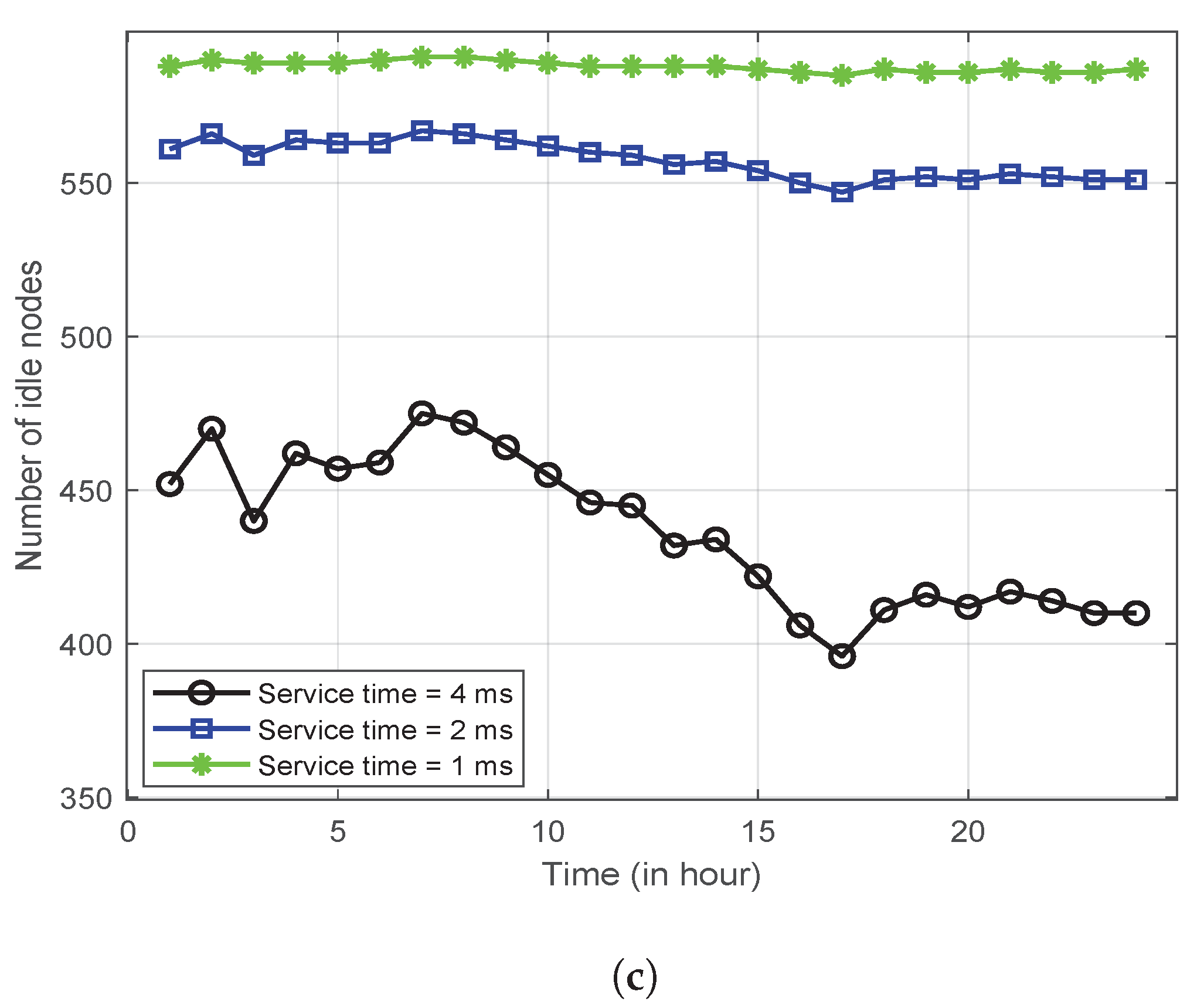

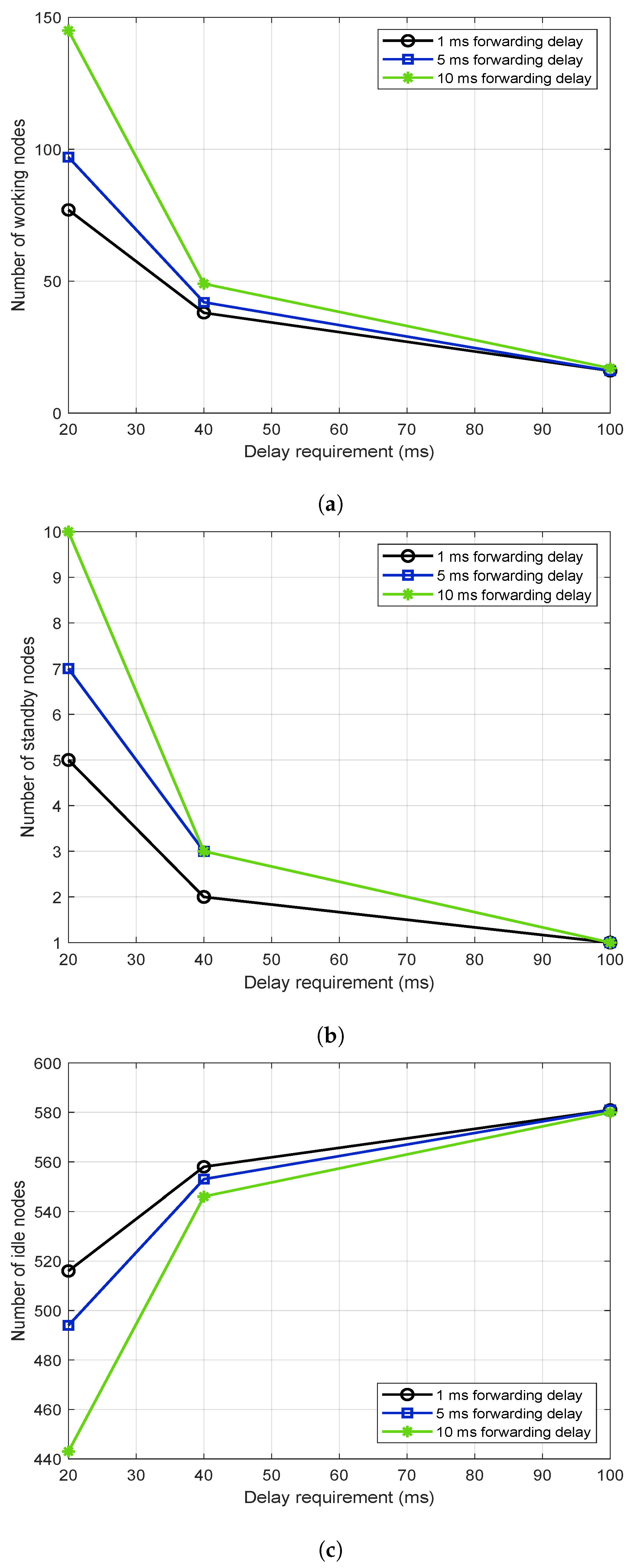

- We propose dynamically allocating fog nodes into three separate groups: working fog nodes, standby fog nodes, and idle fog nodes. Based on the predicted load, only the fog nodes needed to meet the delay requirement of the tasks are deployed during a given period, while the remaining fog nodes are put into a low-power (sleep) state to conserve energy.

- Unlike existing works (e.g., [9]), in which the number of fog nodes deployed for backup is static, our solution deploys backup fog nodes (standby fog nodes) dynamically.

- Furthermore, to maximize energy savings, our solution manages sleep modes separately for the communication interface and the processing unit of a fog node; the sleep interval of each depends on the fog node's assigned role (working, standby, or idle fog node) and on the imposed delay requirement.

2. Literature Review

2.1. Energy-Efficient Fog Computing

2.2. Forecasting Network Traffic with Machine Learning

3. Proposed Green-Demand-Aware Fog Computing (GDAFC) Solution

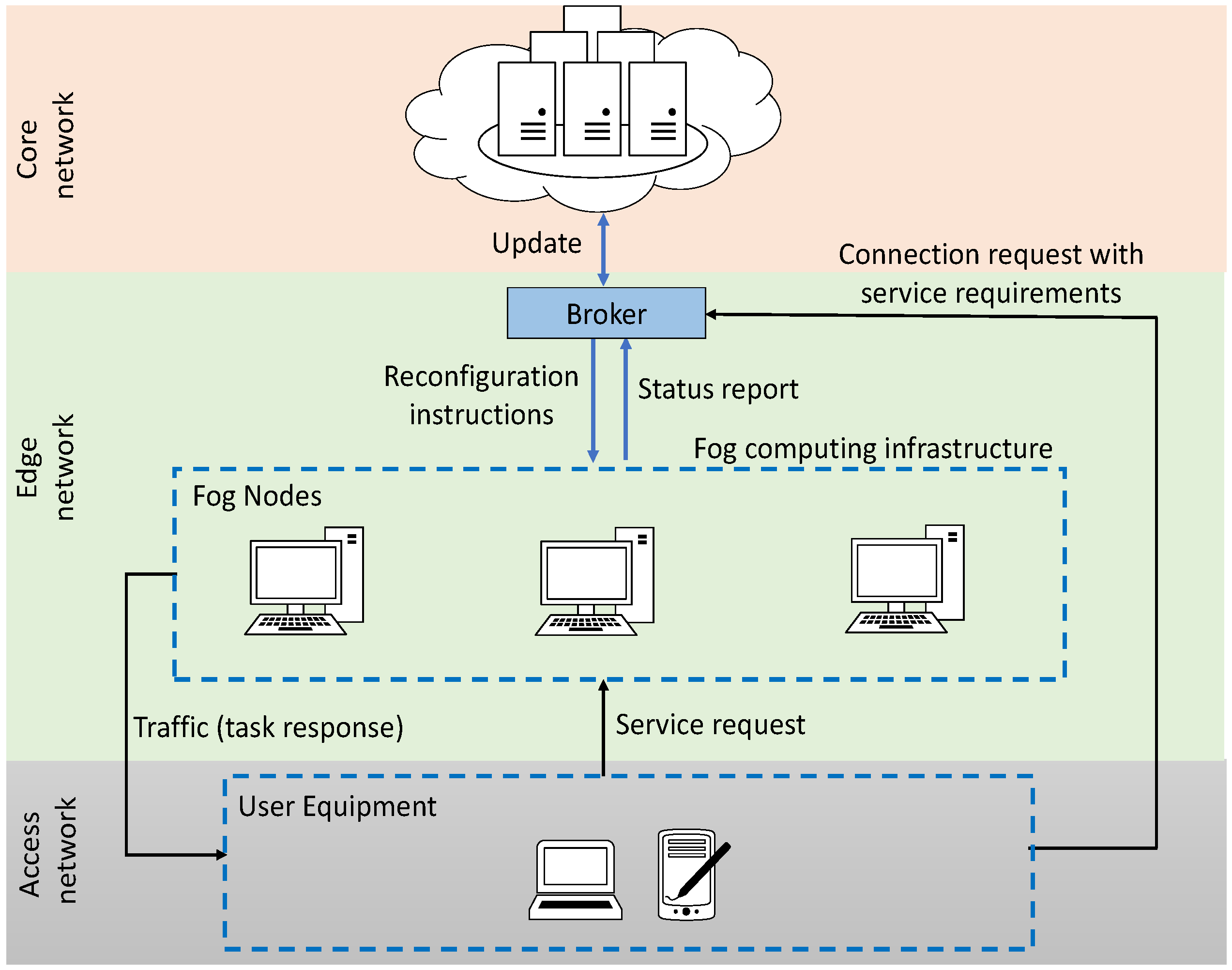

3.1. System Model

- Broker: In this study, a broker is used as an intermediary entity to facilitate collaboration between the core network and the fog nodes in the edge network. The broker acts as the coordinator for fog computing and is responsible for providing fog services to interested customers (IoT devices) by renting resources from eligible fog nodes. In particular, the rented resources are used to execute latency-sensitive cloud services that the cloud itself cannot deliver without violating QoS requirements.

- Fog nodes: We assume that the computing resources of fog nodes are purchased by the broker to deliver fog services to UEs and IoT devices. A fog node must meet a set of requirements to become part of an FCI. We consider the prime criteria to be sufficient storage and computing resources, the ability to communicate through one or more communication interfaces, and the ability to enter a low-power state to conserve energy. The authors in [29] implemented Cloudy software (http://cloudy.community/, accessed on 31 December 2021) in home gateways to facilitate an FCI. Similarly to [29], to model end-users’ computing resources (e.g., personal computers (PCs)) as part of an FCI, we assume that Cloudy is installed on all fog nodes, enabling cloud computing to be conducted at the edge; the PCs are thereby transformed into fog nodes. Because fog nodes must be able to sleep to reduce energy consumption, the ability to switch to a low-power mode is mandatory. An idle system still draws substantial power despite doing no useful work; therefore, in our fog scenario, we use the C-States low-power mode, whereby the processor can be put to sleep when idle [30]. C-States are the standardized power-saving states of the ACPI specification (http://www.acpi.info/spec50a.htm, accessed on 31 December 2021) for computer systems. We choose C-States because their wake-up latency (transition overhead) is well below the delay requirement of low-latency applications and the power reduction is significant. We assume that a fog node goes to sleep only when doing so will not cause any incoming request to exceed its delay requirement. While deployed to provide fog service, each fog node monitors and records its own request traffic, counting how many requests it receives from the customer premises before the next re-configuration period (RECON).
When no request arrives, the fog node transitions to sleep mode for a specific amount of time before waking up and listening for new requests. Once a new request arrives, it remains in the active state, processes the request, and listens for further requests.

- End-users: IoT devices and UEs are collectively referred to as end-users in this paper; they run a variety of applications, including some with very stringent latency requirements. To connect to a fog service, these devices must first send a connection request to the broker that specifies their expected level of service. Once an SLA has been established, the devices may send tasks to, and receive responses from, nearby fog nodes of an FCI.
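The per-node behaviour described for fog nodes (sleep when no request arrives, wake up after a fixed interval, stay active while requests keep arriving, and count requests until the next RECON) can be illustrated with a small discrete-time simulation. This is a minimal sketch under assumptions of our own: time advances in fixed slots, the arrival trace and the sleep interval are hypothetical inputs, requests that arrive while the node sleeps are buffered and served on wake-up, and C-State transition overhead is not modelled. The names `simulate_node` and `NodeStats` are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class NodeStats:
    requests_seen: int = 0   # requests counted during this RECON
    slots_asleep: int = 0    # slots spent in the low-power state
    max_extra_wait: int = 0  # worst extra wait (in slots) caused by sleeping


def simulate_node(arrivals: list[int], sleep_slots: int) -> NodeStats:
    """Simulate the listen/sleep cycle of one fog node over a RECON period.

    arrivals[t] is the (hypothetical) number of requests arriving in slot t.
    If no request arrives in a listening slot, the node sleeps for
    `sleep_slots` slots; requests arriving meanwhile are buffered and
    served when it wakes up.
    """
    stats = NodeStats()
    n = len(arrivals)
    t = 0
    while t < n:
        if arrivals[t] > 0:
            # Active: count/process the request and keep listening.
            stats.requests_seen += arrivals[t]
            t += 1
            continue
        # Idle slot: enter sleep, then wake up and listen again.
        sleep_start = t + 1
        wake = min(sleep_start + sleep_slots, n)
        for s in range(sleep_start, wake):
            if arrivals[s] > 0:
                stats.requests_seen += arrivals[s]
                stats.max_extra_wait = max(stats.max_extra_wait, wake - s)
        stats.slots_asleep += wake - sleep_start
        t = wake
    return stats


stats = simulate_node([1, 0, 0, 0, 2, 0, 0, 0, 0, 0], sleep_slots=3)
print(stats)  # NodeStats(requests_seen=3, slots_asleep=6, max_extra_wait=1)
```

In this sketch the extra wait a request can suffer is bounded by the sleep interval, which is why the sleep interval has to be chosen against the delay requirement: a longer sleep saves more energy but eats into the latency budget.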

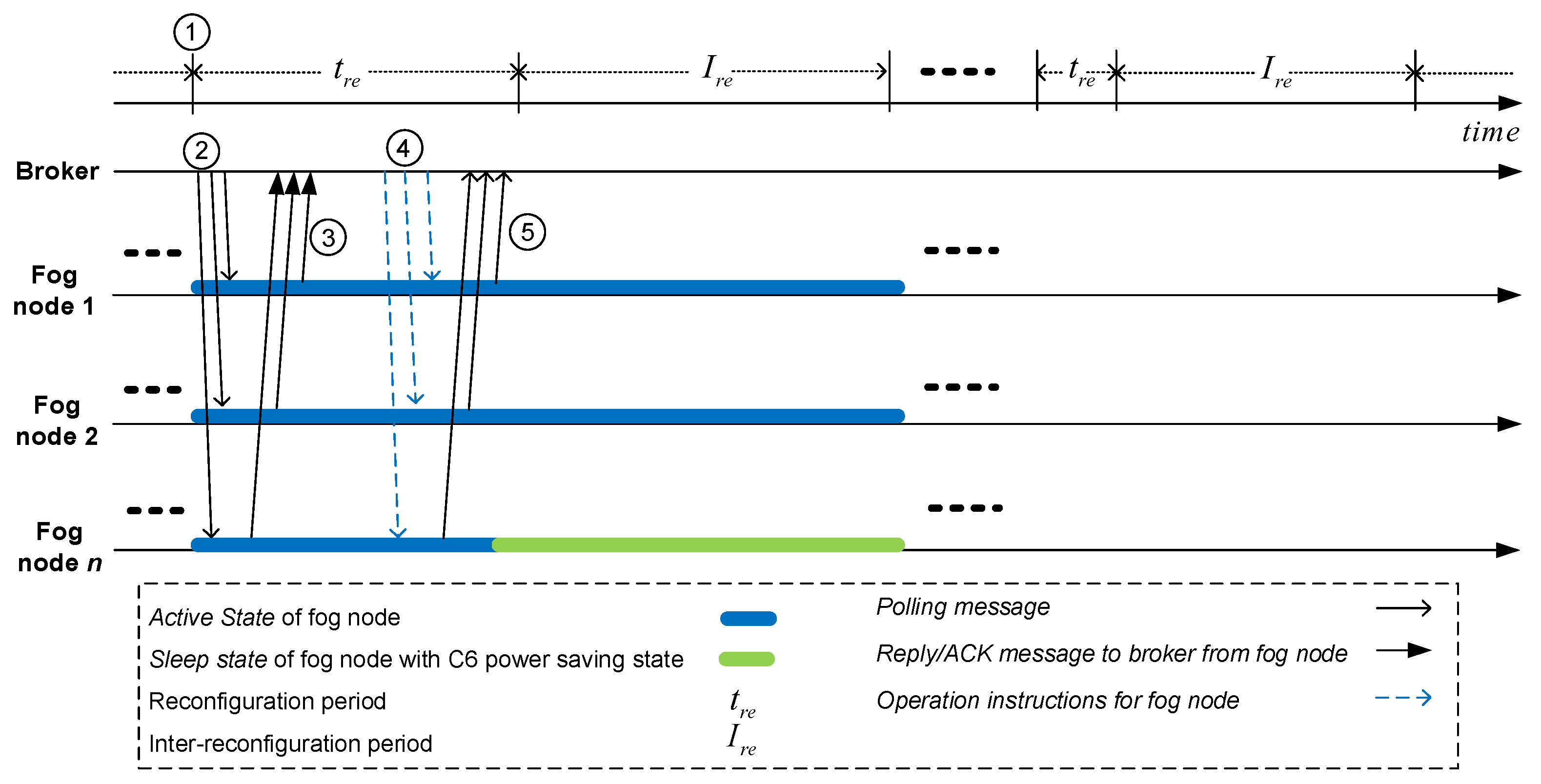

3.2. Proposed Workflow in GDAFC

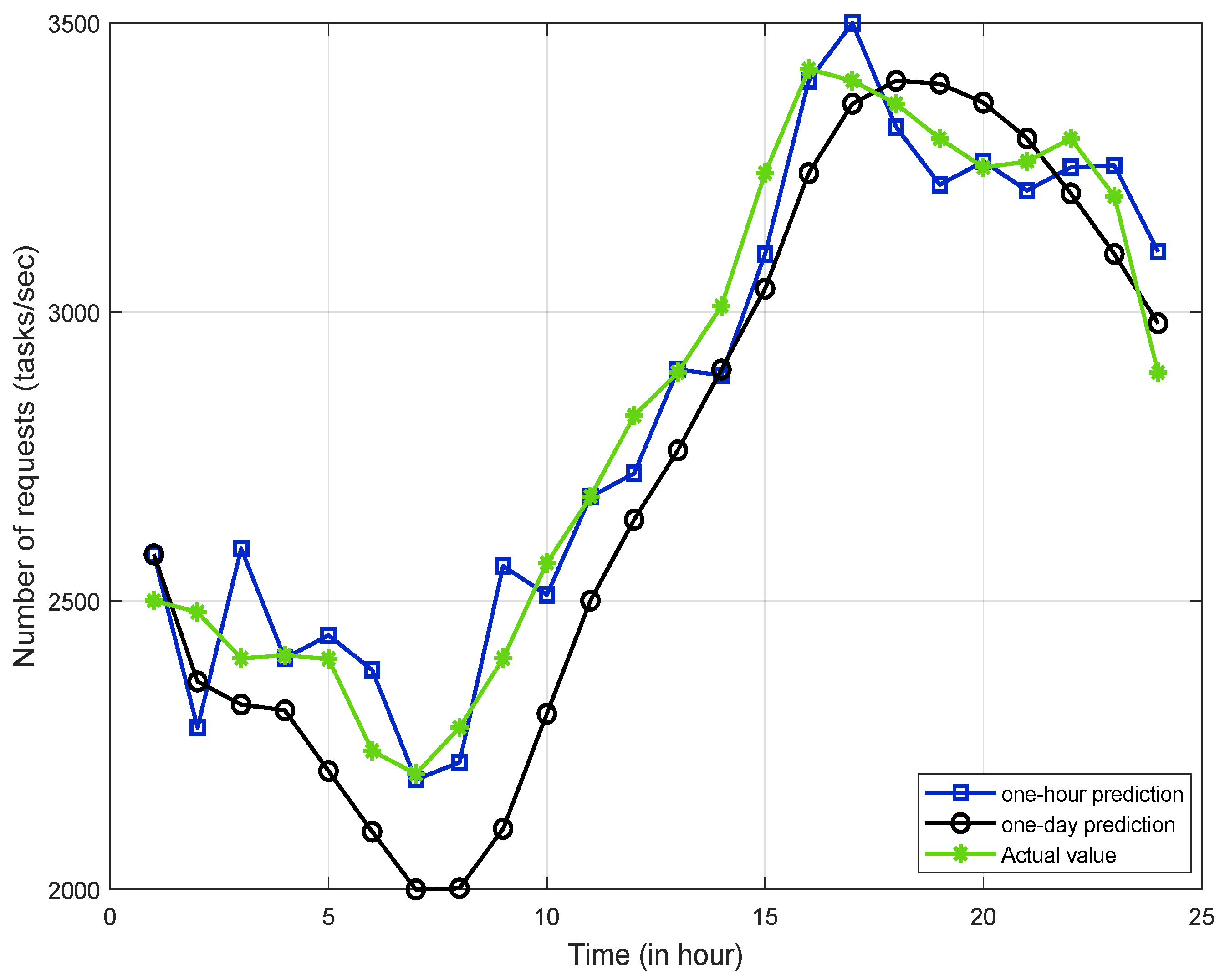

- Network load prediction: Historical network traffic traces are analyzed and processed using time-series analysis to train our prediction model. Using this model, the broker predicts the total number of incoming requests for the next reconfiguration period (RECON). This is elaborated further in Section 3.2.1.

- Dynamic allocation of fog nodes: Based on the predicted number of request arrivals in the next RECON, the broker allocates the fog nodes available in the system to three role groups: WFNs, SFNs, and IFNs. This is elaborated further in Section 3.2.2.

- Determining appropriate power-saving techniques: We assume that fog nodes can selectively turn their CI and PU on or off whenever required. Once fog nodes are allocated to their respective groups, the broker determines the appropriate sleep mode for the CI and PU of the WFNs, SFNs, and IFNs. It then notifies each fog node of its role and power-saving parameters (e.g., sleep interval length and sleep state of the processing unit) ➃; see the example illustration in Figure 3. In reply, the fog nodes send an acknowledgment (ACK) message to the broker ➄. The energy conservation operation of the fog nodes is elaborated further in Section 3.2.3.
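The first workflow step forecasts the number of requests for the next RECON with an ARIMA-based model, and the WFN procedure later compares the current and predicted workload trends. The model details of Section 3.2.1 are not part of this extract, so the sketch below is a simplified stand-in, not the authors' fitted model: it differences the per-RECON request counts once, fits an AR(1) model with an intercept to the differences by ordinary least squares (an ARIMA(1,1,0)-style estimator), and forecasts one step ahead. The trend rule in `classify_trend` is a hypothetical labelling of our own. A production system would more likely fit a full ARIMA(p, d, q) model, for example with the `ARIMA` class in statsmodels.

```python
def forecast_next(history: list[float]) -> float:
    """One-step-ahead forecast from an ARIMA(1,1,0)-style model.

    The series is differenced once (the "I" part), an AR(1) model with an
    intercept is fitted to the differences by ordinary least squares, and
    the forecast difference is added back to the last observation.
    """
    if len(history) < 4:
        raise ValueError("need at least 4 observations")
    diffs = [b - a for a, b in zip(history, history[1:])]
    x, y = diffs[:-1], diffs[1:]  # lagged and current differences
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    phi = sxy / sxx if sxx > 0 else 0.0  # AR(1) coefficient
    c = my - phi * mx                     # intercept (drift)
    # Request counts cannot be negative.
    return max(0.0, history[-1] + c + phi * diffs[-1])


def classify_trend(values: list[float]) -> str:
    """Hypothetical trend rule: strictly rising, strictly falling, or otherwise."""
    diffs = [b - a for a, b in zip(values, values[1:])]
    if diffs and all(d > 0 for d in diffs):
        return "increasing"
    if diffs and all(d < 0 for d in diffs):
        return "decreasing"
    return "fluctuating"


requests_per_recon = [100.0, 110.0, 120.0, 130.0, 140.0]
predicted = forecast_next(requests_per_recon)
print(predicted)  # 150.0
print(classify_trend(requests_per_recon[-3:] + [predicted]))  # increasing
```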

3.2.1. Network Load Prediction

3.2.2. Dynamic Allocation of Fog Nodes

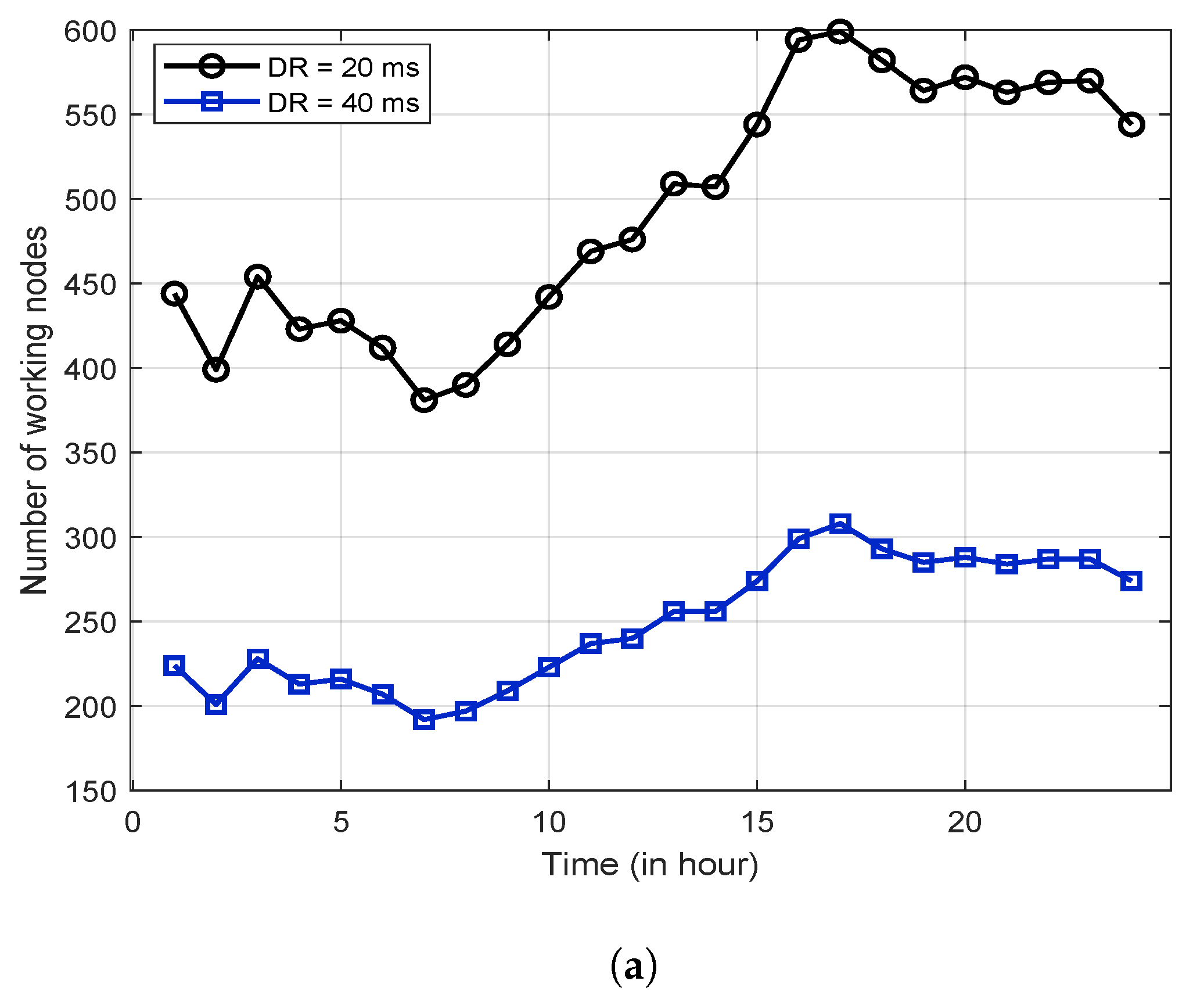

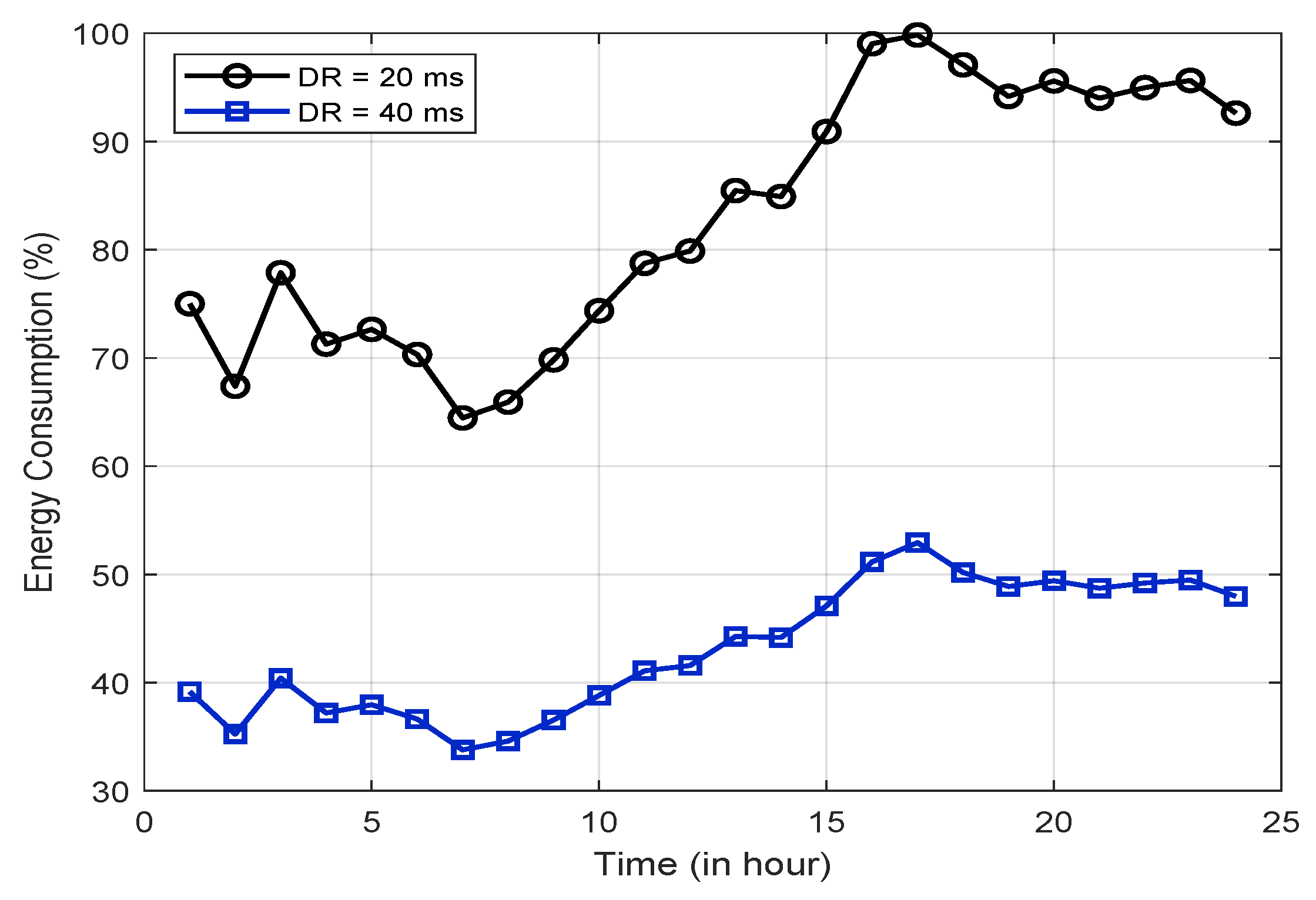

- Working fog nodes: Working fog nodes (WFNs) are the fog nodes that are expected to serve the requests arriving at the fog network. Our objective is to find the minimum number of fog nodes, out of those available in the system, that is sufficient to meet the demand for task processing forecast by the ARIMA-based prediction model. To this end, we first compute, using (1), the total delay that a request may experience for a given task arrival rate; this delay comprises the service delay per fog node, obtained from (2), and the task-forwarding delay to the user. Equation (2) is expressed in terms of the request arrival rate and the task-processing service time at a fog node. To find the optimal number of WFNs, we propose Algorithm 1, which returns the minimum number of WFNs that is sufficient to meet the imposed delay requirement for a given task request arrival rate. In Algorithm 1, the expected delay under the current configuration is first computed using (1), taking into account the request arrival rate and the service time. Next, the number of WFNs is either incremented or decremented depending on the current workload trend and the predicted workload trend. If both trends are increasing or fluctuating and the expected delay exceeds the delay requirement, the number of WFNs is incremented until the requirement is met. Otherwise, if decreasing trends are observed from the current period T to the next, the algorithm attempts to reduce the number of WFNs, and thus conserve energy, without the expected delay exceeding the delay requirement. Since our objective is to deploy enough working nodes for the incoming request rate, we assume that request arrivals at each fog node during a time period are independent and follow a Poisson distribution [36]. We apply the queueing system in (2) to calculate the waiting time in the queue.

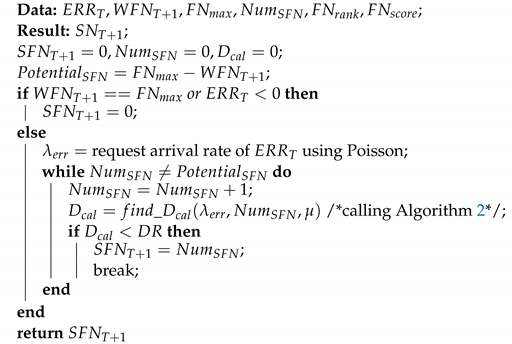

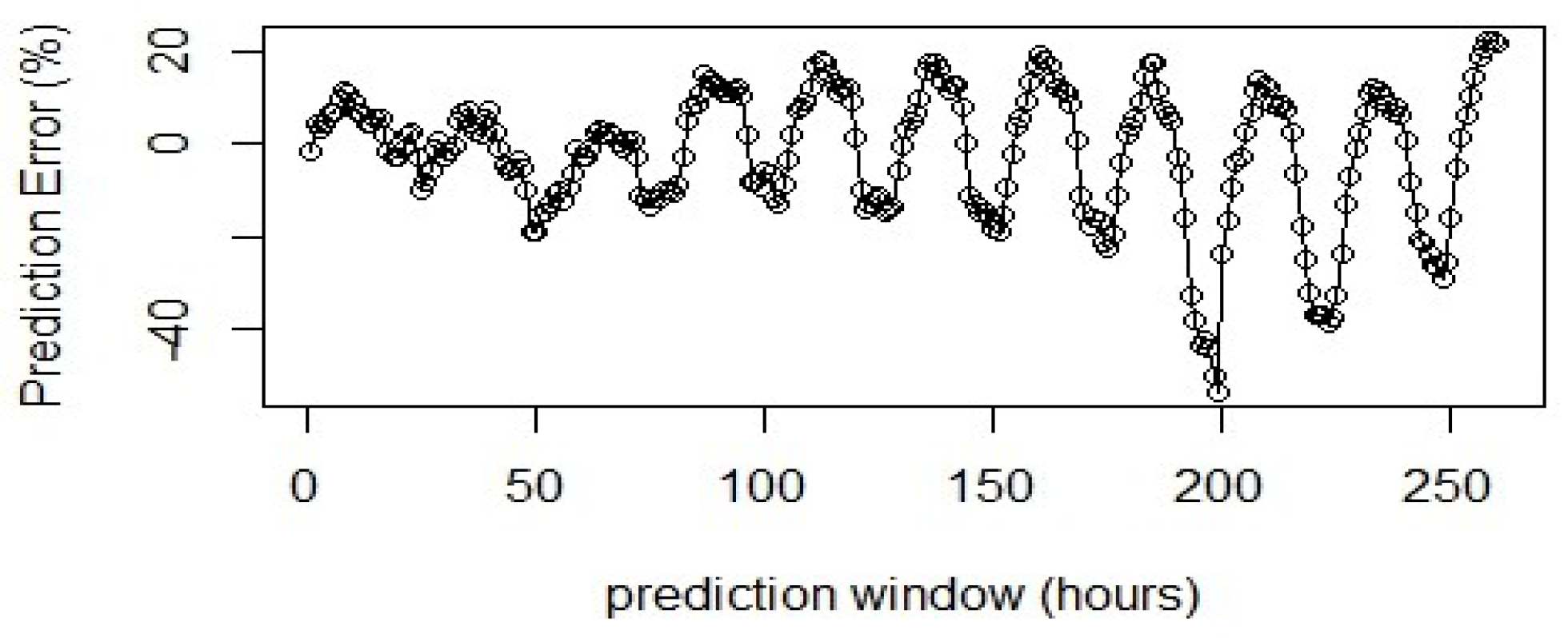

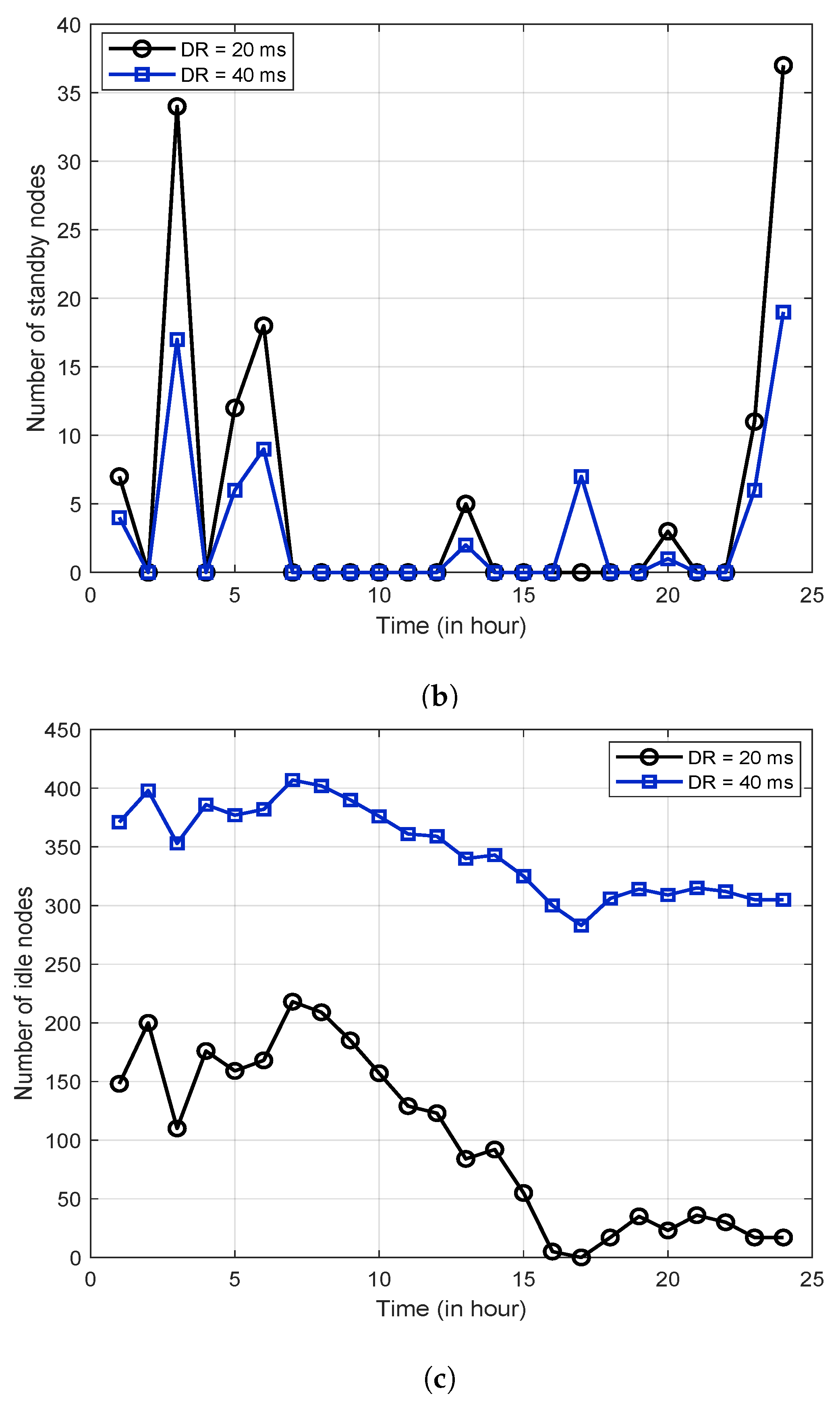

- Standby fog nodes: Because the provisioning of fog nodes depends heavily on the output of the prediction model, only a perfectly accurate prediction would guarantee that the right number of fog nodes is deployed to meet the SLA-specified QoS requirements. However, our prediction model is based on ARIMA, a statistical model, and statistical models generally deliver average-level performance; they cannot be expected to perform optimally at all times [37]. The prediction model may therefore over-estimate or under-estimate the actual workload. Under-estimation is our main concern, because a shortage of resources when demand is higher than expected can cause delay requirements to be violated. Deploying extra fog nodes (SFNs) alongside the WFNs addresses this risk. We use Algorithm 3 to determine the number of SFNs required.

- Idle fog nodes: After the working and standby nodes have been determined, the remaining fog nodes, if any, are set as idle nodes; their number is computed by Algorithm 4.
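The trend-driven WFN adjustment of Algorithm 1 can be sketched as follows. Equations (1) and (2) are not recoverable from this extract, so the delay model here is an assumption of ours, chosen to match the stated Poisson-arrival assumption: the arrival rate is split evenly over the WFNs, each WFN is treated as an M/M/1 queue with service rate 1/service_time, and the forwarding delay is simply added to the M/M/1 sojourn time 1/(μ − λ_i). The function names and the illustrative numbers are hypothetical; mixed trend combinations leave the number of WFNs unchanged, since the text does not specify them.

```python
import math


def expected_delay(n: int, lam: float, service_time: float, fwd_delay: float) -> float:
    """Expected delay (s) per request with n working fog nodes.

    Assumptions (ours, not the paper's equations): arrivals at rate `lam`
    (requests/s) are split evenly over n nodes, each node is an M/M/1 queue
    with service rate 1/service_time, and the forwarding delay is added to
    the M/M/1 sojourn time 1/(mu - lambda_i).
    """
    mu = 1.0 / service_time
    per_node = lam / n
    if per_node >= mu:
        return math.inf  # overloaded node: the queue grows without bound
    return 1.0 / (mu - per_node) + fwd_delay


def adjust_wfns(n: int, trend_now: str, trend_pred: str, lam: float,
                service_time: float, fwd_delay: float,
                d_req: float, n_max: int) -> int:
    """Trend-driven WFN adjustment in the spirit of Algorithm 1 (sketch)."""
    def delay(k: int) -> float:
        return expected_delay(k, lam, service_time, fwd_delay)

    growing = {"increasing", "fluctuating"}
    if trend_now in growing and trend_pred in growing:
        # Add WFNs until the delay requirement is met (or nodes run out).
        while delay(n) > d_req and n < n_max:
            n += 1
    elif trend_now == "decreasing" and trend_pred == "decreasing":
        # Remove WFNs while the remaining ones still meet the requirement.
        while n > 1 and delay(n - 1) <= d_req:
            n -= 1
    # Mixed trends: keep n unchanged (not specified in the text).
    return n


# Illustrative values: 900 req/s, 1 ms service, 0.5 ms forwarding, 2 ms bound.
print(adjust_wfns(1, "increasing", "increasing", 900, 1e-3, 5e-4, 2e-3, 600))   # 3
print(adjust_wfns(10, "decreasing", "decreasing", 900, 1e-3, 5e-4, 2e-3, 600))  # 3
```

With these numbers, two WFNs give about 2.3 ms and three give about 1.93 ms, so both the growing and the shrinking branch settle on three nodes.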

Algorithm 1: Determining the number of WFNs.

Algorithm 2: Calculating the expected delay in the system with the current number of fog nodes.

Algorithm 3: Determining the number of SFNs.

Algorithm 4: Determining the number of IFNs.
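Only the titles of Algorithms 3 and 4 survive in this extract. The text does state the IFN rule (idle nodes are whatever remains after WFNs and SFNs are chosen), so the sketch below pairs that rule with a hypothetical SFN rule of our own: standby capacity is sized in proportion to the worst relative under-prediction observed in past RECONs, capped by the number of nodes still available. The functions `underestimation_margin` and `partition_nodes` are illustrative names, not the authors' algorithms.

```python
import math


def underestimation_margin(predicted: list[float], actual: list[float]) -> float:
    """Largest relative under-prediction observed in past RECONs (0 if none)."""
    worst = 0.0
    for p, a in zip(predicted, actual):
        if p > 0 and a > p:
            worst = max(worst, (a - p) / p)
    return worst


def partition_nodes(total: int, wfn: int, margin: float) -> tuple[int, int, int]:
    """Split `total` fog nodes into (WFNs, SFNs, IFNs).

    Hypothetical SFN rule: provision standby capacity proportional to the
    worst observed under-prediction. IFNs are whatever remains, as in the text.
    """
    if not 0 <= wfn <= total:
        raise ValueError("wfn must be between 0 and total")
    sfn = min(math.ceil(wfn * margin), total - wfn)
    ifn = total - wfn - sfn
    return wfn, sfn, ifn


margin = underestimation_margin([100, 200, 150], [110, 190, 180])
print(round(margin, 3))                # 0.2
print(partition_nodes(600, 40, 0.25))  # (40, 10, 550)
```

Scaling SFNs linearly with the WFN count is deliberately crude: queueing delay grows non-linearly with load, so a delay-aware rule (re-running the WFN sizing on an inflated arrival rate) would be a natural refinement.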

3.2.3. Energy Conservation Operation in Fog Nodes

3.3. Energy Consumption Measurements in GDAFC

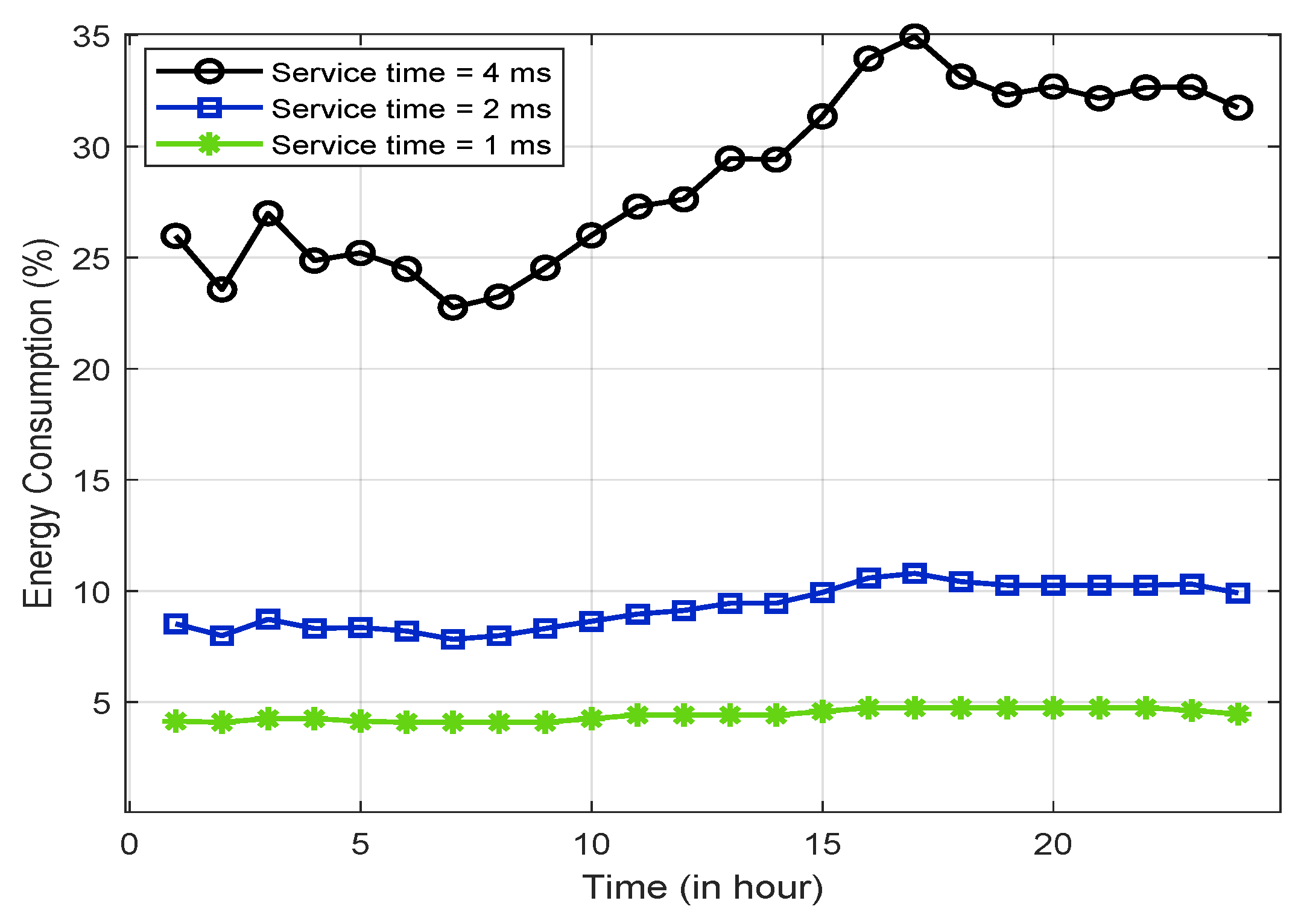

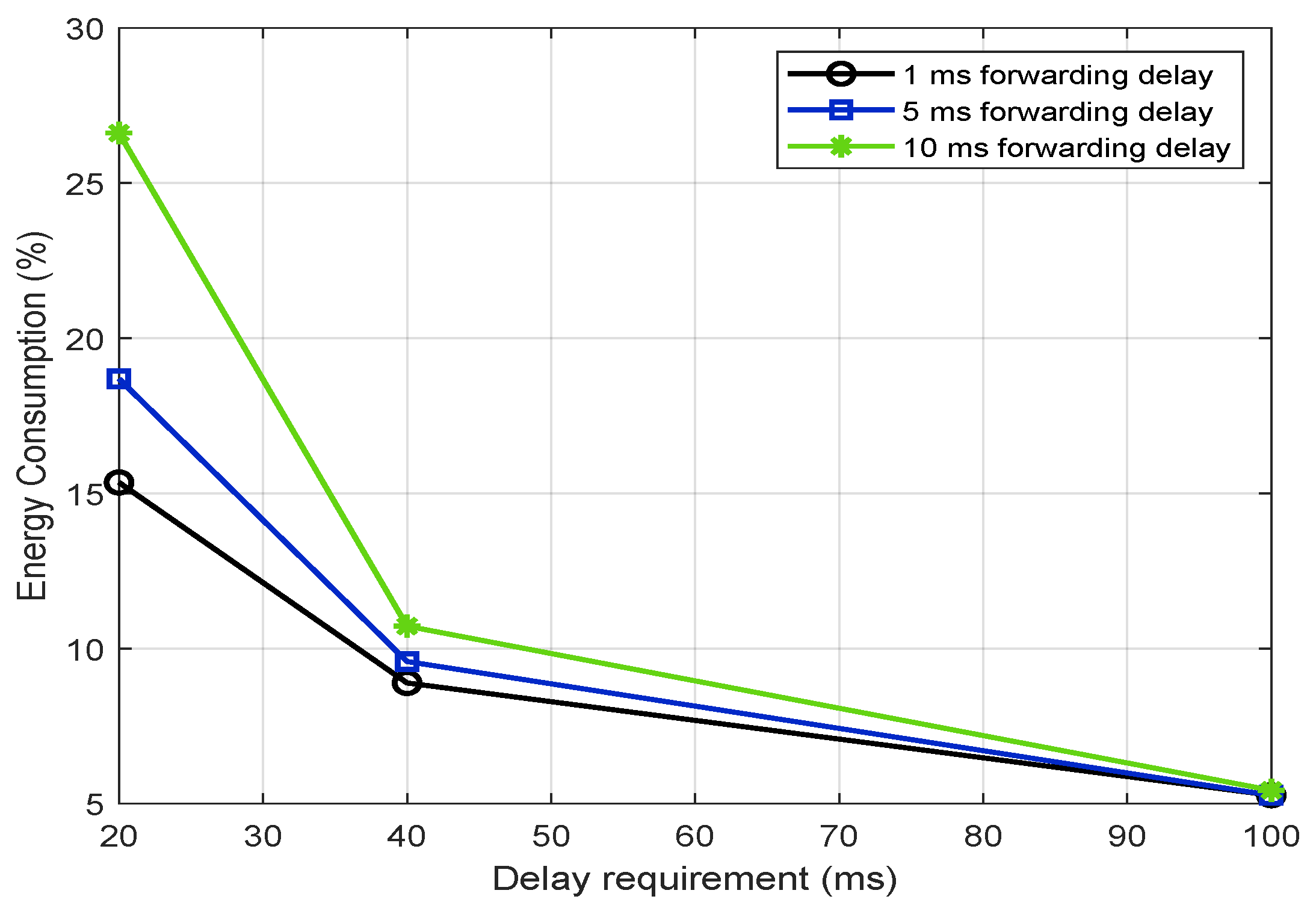

4. Performance Evaluation

5. Discussion and Open Research Issues

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| WFN | Working Fog Node |
| SFN | Standby Fog Node |
| IFN | Idle Fog Node |
| QoS | Quality-of-Service |
| RECON | Reconfiguration period |
| SLA | Service Level Agreement |
| UE | User Equipment |
| FCI | Fog Computing Infrastructure |
| CI | Communication Interface |
| PU | Processing Unit |
| ARIMA | Autoregressive Integrated Moving Average |

References

- Beloglazov, A.; Buyya, R.; Lee, Y.C.; Zomaya, A. A taxonomy and survey of energy-efficient data centers and cloud computing systems. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2011; Volume 82, pp. 47–111.

- Boru, D.; Kliazovich, D.; Granelli, F.; Bouvry, P.; Zomaya, A.Y. Energy-efficient data replication in cloud computing datacenters. Clust. Comput. 2015, 18, 385–402.

- Consumerlab, E. Hot consumer trends 2016. Retrieved Sept. 2016, 1, 10.

- Weiner, M.; Jorgovanovic, M.; Sahai, A.; Nikolić, B. Design of a low-latency, high-reliability wireless communication system for control applications. In Proceedings of the 2014 IEEE International Conference on Communications (ICC), Sydney, NSW, Australia, 10–14 June 2014; pp. 3829–3835.

- Byers, C.C. Architectural imperatives for fog computing: Use cases, requirements, and architectural techniques for FOG-enabled IoT networks. IEEE Commun. Mag. 2017, 55, 14–20.

- Leverich, J.; Kozyrakis, C. On the energy (in) efficiency of hadoop clusters. ACM SIGOPS Oper. Syst. Rev. 2010, 44, 61–65.

- Oma, R.; Nakamura, S.; Duolikun, D.; Enokido, T.; Takizawa, M. An energy-efficient model for fog computing in the internet of things (IoT). Internet Things 2018, 1, 14–26.

- Hou, S.; Ni, W.; Zhao, S.; Cheng, B.; Chen, S.; Chen, J. Frequency-reconfigurable cloud versus fog computing: An energy-efficiency aspect. IEEE Trans. Green Commun. Netw. 2019, 4, 221–235.

- Rahman, F.H.; Newaz, S.S.; Au, T.W.; Suhaili, W.S.; Lee, G.M. Off-street vehicular fog for catering applications in 5G/B5G: A trust-based task mapping solution and open research issues. IEEE Access 2020, 8, 117218–117235.

- Amudha, S.; Murali, M. DESD-CAT inspired algorithm for establishing trusted connection in energy efficient FoG-BAN networks. Mater. Today Proc. 2021, in press.

- Fang, W.; Zhang, W.; Chen, W.; Liu, Y.; Tang, C. TME 2 R: Trust Management-Based Energy Efficient Routing Scheme in Fog-Assisted Industrial Wireless Sensor Network. In International Conference on 5G for Future Wireless Networks; Springer: Berlin/Heidelberg, Germany, 2019; pp. 155–173.

- Wang, T.; Qiu, L.; Sangaiah, A.K.; Xu, G.; Liu, A. Energy-efficient and trustworthy data collection protocol based on mobile fog computing in Internet of Things. IEEE Trans. Ind. Inform. 2019, 16, 3531–3539.

- Malik, U.M.; Javed, M.A.; Zeadally, S.; ul Islam, S. Energy efficient fog computing for 6G enabled massive IoT: Recent trends and future opportunities. IEEE Internet Things J. 2021.

- Wan, J.; Chen, B.; Wang, S.; Xia, M.; Li, D.; Liu, C. Fog computing for energy-aware load balancing and scheduling in smart factory. IEEE Trans. Ind. Inform. 2018, 14, 4548–4556.

- Shahid, M.H.; Hameed, A.R.; ul Islam, S.; Khattak, H.A.; Din, I.U.; Rodrigues, J.J. Energy and delay efficient fog computing using caching mechanism. Comput. Commun. 2020, 154, 534–541.

- Jiang, Y.L.; Chen, Y.S.; Yang, S.W.; Wu, C.H. Energy-efficient task offloading for time-sensitive applications in fog computing. IEEE Syst. J. 2018, 13, 2930–2941.

- Xiao, Y.; Krunz, M. Distributed optimization for energy-efficient fog computing in the tactile Internet. IEEE J. Sel. Areas Commun. 2018, 36, 2390–2400.

- Rahman, F.H.; Newaz, S.S.; Au, T.W.; Suhaili, W.S.; Mahmud, M.P.; Lee, G.M. EnTruVe: ENergy and TRUst-aware Virtual Machine allocation in VEhicle fog computing for catering applications in 5G. Future Gener. Comput. Syst. 2022, 126, 196–210.

- Reddy, K.H.K.; Luhach, A.K.; Pradhan, B.; Dash, J.K.; Roy, D.S. A genetic algorithm for energy efficient fog layer resource management in context-aware smart cities. Sustain. Cities Soc. 2020, 63, 102428.

- Meisner, D.; Gold, B.T.; Wenisch, T.F. PowerNap: Eliminating server idle power. In ACM Sigplan Notices; ACM: New York, NY, USA, 2009; Volume 44, pp. 205–216.

- Mathew, V.; Sitaraman, R.K.; Shenoy, P. Energy-aware load balancing in content delivery networks. In Proceedings of the INFOCOM, 2012 Proceedings IEEE, Orlando, FL, USA, 25–30 March 2012; pp. 954–962.

- Kamitsos, I.; Andrew, L.; Kim, H.; Chiang, M. Optimal sleep patterns for serving delay-tolerant jobs. In Proceedings of the 1st International Conference on Energy-Efficient Computing and Networking, Passau, Germany, 13–15 April 2010; ACM: New York, NY, USA, 2010; pp. 31–40.

- Sarji, I.; Ghali, C.; Chehab, A.; Kayssi, A. Cloudese: Energy efficiency model for cloud computing environments. In Proceedings of the 2011 International Conference on Energy Aware Computing, Istanbul, Turkey, 30 November–2 December 2011; pp. 1–6.

- Duan, L.; Zhan, D.; Hohnerlein, J. Optimizing cloud data center energy efficiency via dynamic prediction of cpu idle intervals. In Proceedings of the Cloud Computing (CLOUD), 2015 IEEE 8th International Conference on Cloud Computing IEEE, New York, NY, USA, 27 June–2 July 2015; pp. 985–988.

- Dias, M.P.I.; Karunaratne, B.S.; Wong, E. Bayesian estimation and prediction-based dynamic bandwidth allocation algorithm for sleep/doze-mode passive optical networks. J. Light. Technol. 2014, 32, 2560–2568.

- Farahnakian, F.; Pahikkala, T.; Liljeberg, P.; Plosila, J.; Tenhunen, H. Utilization prediction aware VM consolidation approach for green cloud computing. In Proceedings of the Cloud Computing (CLOUD), 2015 IEEE 8th International Conference on Cloud Computing, New York, NY, USA, 27 June–2 July 2015; pp. 381–388.

- Zhang, S.; Zhao, S.; Yuan, M.; Zeng, J.; Yao, J.; Lyu, M.R.; King, I. Traffic Prediction Based Power Saving in Cellular Networks: A Machine Learning Method. In Proceedings of the 25th ACM SIGSPATIAL International Conference on Advances in Geographic Information Systems, Redondo Beach, CA, USA, 7–10 November 2017; ACM: New York, NY, USA, 2017; p. 29.

- Saker, L.; Elayoubi, S.E.; Chahed, T. Minimizing energy consumption via sleep mode in green base station. In Proceedings of the Wireless Communications and Networking Conference (WCNC), Sydney, NSW, Australia, 18–21 April 2010; pp. 1–6.

- Khan, A.M.; Freitag, F. On Participatory Service Provision at the Network Edge with Community Home Gateways. Procedia Comput. Sci. 2017, 109, 311–318.

- Chou, C.H.; Wong, D.; Bhuyan, L.N. Dynsleep: Fine-grained power management for a latency-critical data center application. In Proceedings of the 2016 International Symposium on Low Power Electronics and Design, San Francisco, CA, USA, 8–10 August 2016; pp. 212–217.

- Rahman, F.H.; Au, T.W.; Newaz, S.S.; Suhaili, W.S.; Lee, G.M. Find my trustworthy fogs: A fuzzy-based trust evaluation framework. Future Gener. Comput. Syst. 2020, 109, 562–572.

- Ge, C.; Sun, Z.; Wang, N. A survey of power-saving techniques on data centers and content delivery networks. IEEE Commun. Surv. Tutor. 2013, 15, 1334–1354.

- Luan, T.H.; Gao, L.; Li, Z.; Xiang, Y.; Wei, G.; Sun, L. Fog computing: Focusing on mobile users at the edge. arXiv 2015, arXiv:1502.01815.

- Ross, T.J. Fuzzy Logic with Engineering Applications; John Wiley & Sons: Hoboken, NJ, USA, 2009.

- Izquierdo, L.R.; Olaru, D.; Izquierdo, S.S.; Purchase, S.; Soutar, G.N. Fuzzy logic for social simulation using NetLogo. J. Artif. Soc. Soc. Simul. 2015, 18, 1.

- Li, S.; Da Xu, L.; Wang, X. Compressed sensing signal and data acquisition in wireless sensor networks and internet of things. IEEE Trans. Ind. Inform. 2013, 9, 2177–2186.

- Kayacan, E.; Ulutas, B.; Kaynak, O. Grey system theory-based models in time series prediction. Expert Syst. Appl. 2010, 37, 1784–1789.

- Dabbagh, M.; Hamdaoui, B.; Guizani, M.; Rayes, A. Toward energy-efficient cloud computing: Prediction, consolidation, and overcommitment. IEEE Netw. 2015, 29, 56–61.

- Yan, S.; Gu, Z.; Nguang, S.K. Memory-event-triggered H∞ output control of neural networks with mixed delays. IEEE Trans. Neural Netw. Learn. Syst. 2021.

- Kliazovich, D.; Arzo, S.T.; Granelli, F.; Bouvry, P.; Khan, S.U. Accounting for load variation in energy-efficient data centers. In Proceedings of the Communications (ICC), 2013 IEEE International Conference on Communications (ICC), Budapest, Hungary, 9–13 June 2013; pp. 2561–2566.

| Parameters | Values |
|---|---|
|  | 1.5 ms |
|  | 600 fog nodes |
|  | 53 W |
|  | 15 W |
|  | 2.22 W |
|  | 1.28 W |
|  | 2 ms |
|  | 1 ms |
|  | 600 s |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pg. Ali Kumar, D.S.N.K.; Newaz, S.H.S.; Rahman, F.H.; Lee, G.M.; Karmakar, G.; Au, T.-W. Green Demand Aware Fog Computing: A Prediction-Based Dynamic Resource Provisioning Approach. Electronics 2022, 11, 608. https://doi.org/10.3390/electronics11040608
